基于深度神经网络的人脸表情识别

Preprocessing Fer2013

数据集

链接:fer2013.csv

提取码:mzvb

复制这段内容后打开百度网盘手机App,操作更方便哦

参考 下载fer2013数据集和数据的处理

基于深度卷积神经网络的人脸表情识别(附GitHub地址)

------------------------1.数据的预处理---------------------------

# create data and label for FER2013

# labels: 0=Angry, 1=Disgust, 2=Fear, 3=Happy, 4=Sad, 5=Surprise, 6=Neutral

import csv

import os

import numpy as np

import h5py

file = 'data/fer2013.csv'

# Creat the list to store the data and label information

Training_x = []

Training_y = []

PublicTest_x = []

PublicTest_y = []

PrivateTest_x = []

PrivateTest_y = []

datapath = os.path.join('data','data.h5')

if not os.path.exists(os.path.dirname(datapath)): #如果不存在目录名,则新建一个目录名

os.makedirs(os.path.dirname(datapath))

with open(file,'r') as csvin:

data=csv.reader(csvin)

for row in data:

if row[-1] == 'Training':

temp_list = []

for pixel in row[1].split( ): #以空格为分隔符

temp_list.append(int(pixel))

I = np.asarray(temp_list)

Training_y.append(int(row[0]))

Training_x.append(I.tolist())

if row[-1] == "PublicTest" :

temp_list = []

for pixel in row[1].split( ):

temp_list.append(int(pixel))

I = np.asarray(temp_list)

PublicTest_y.append(int(row[0]))

PublicTest_x.append(I.tolist())

if row[-1] == 'PrivateTest':

temp_list = []

for pixel in row[1].split( ):

temp_list.append(int(pixel))

I = np.asarray(temp_list)

PrivateTest_y.append(int(row[0]))

PrivateTest_x.append(I.tolist())

print(np.shape(Training_x))

print(np.shape(PublicTest_x))

print(np.shape(PrivateTest_x))

datafile = h5py.File(datapath, 'w') #创建文件并写入数据

datafile.create_dataset("Training_pixel", dtype = 'uint8', data=Training_x)

datafile.create_dataset("Training_label", dtype = 'int64', data=Training_y)

datafile.create_dataset("PublicTest_pixel", dtype = 'uint8', data=PublicTest_x)

datafile.create_dataset("PublicTest_label", dtype = 'int64', data=PublicTest_y)

datafile.create_dataset("PrivateTest_pixel", dtype = 'uint8', data=PrivateTest_x)

datafile.create_dataset("PrivateTest_label", dtype = 'int64', data=PrivateTest_y)

datafile.close()

print("Save data finish!!!")

方案二

# create data and label for FER2013

# labels: 0=Angry, 1=Disgust, 2=Fear, 3=Happy, 4=Sad, 5=Surprise, 6=Neutral

import csv

import os

import numpy as np

import h5py

class Fer2013(object):

def __init__(self):

"""

构造函数

"""

self.file='data/fer2013.csv'

def get_Training(self):

Training_y=[]

Training_x=[]

data = csv.reader(open('data/fer2013.csv', 'r'))

for row in data:

if row[-1] == 'Training':

temp_list = []

for pixel in row[1].split( ): #以空格为分隔符

temp_list.append(int(pixel))

I = np.asarray(temp_list)

Training_y.append(int(row[0]))

Training_x.append(I.tolist())

return Training_y,Training_x

def get_Public(self):

PublicTest_y=[]

PublicTest_x=[]

data = csv.reader(open('data/fer2013.csv', 'r'))

for row in data:

if row[-1] == "PublicTest" :

temp_list = []

for pixel in row[1].split( ):

temp_list.append(int(pixel))

I = np.asarray(temp_list)

PublicTest_y.append(int(row[0]))

PublicTest_x.append(I.tolist())

return PublicTest_y,PublicTest_x

def get_Private(self):

PrivateTest_y=[]

PrivateTest_x=[]

data = csv.reader(open('data/fer2013.csv', 'r'))

for row in data:

if row[-1] == 'PrivateTest':

temp_list = []

for pixel in row[1].split( ):

temp_list.append(int(pixel))

I = np.asarray(temp_list)

PrivateTest_y.append(int(row[0]))

PrivateTest_x.append(I.tolist())

return PrivateTest_y,PrivateTest_x

if __name__=='__main__':

fer=Fer2013()

train_y,train_x=fer.get_Training()

print('Training: ',np.shape(train_y),' ',np.shape(train_x))

public_y, public_x = fer.get_Public()

print('public: ',np.shape(public_y),' ',np.shape(public_x))

private_y, private_x = fer.get_Private()

print('private: ',np.shape(private_y),' ',np.shape(private_x))

# Training: (28709,) (28709, 2304)

# public: (3589,) (3589, 2304)

# private: (3589,) (3589, 2304)

用pickle保存文件train_x,train_y

if __name__=='__main__':

import pickle

fer=Fer2013()

train_y,train_x=fer.get_Training()

file_image=open('image.txt','wb')

pickle.dump(train_x,file_image,-1)

pickle.dump(train_y,file_image,-1) #dump使用list用-1

file_image.close()

fr=open('image.txt','rb')

train_x=pickle.load(fr)

train_y=pickle.load(fr)

fr.close()

把这些数据集打包成Dataloader中的dataset

import torch.utils.data as Data

fr = open('image.txt', 'rb')

train_data = pickle.load(fr)

train_labels = pickle.load(fr)

fr.close()

train_labels = np.asarray(train_labels)

train_data = np.asarray(train_data)

train_data = train_data.reshape((28709, 48, 48))

train_labels=torch.from_numpy(train_labels)

train_data=torch.from_numpy(train_data)

torch_dataset=Data.TensorDataset(train_data, train_labels)

loader = Data.DataLoader(

dataset=torch_dataset,

batch_size=128,

shuffle=True,

num_workers=2

)

for i,(batch_X,batch_Y) in enumerate(loader):

print(i)

break

而 exit(0) 则表示程序是正常退出的,退出代码是告诉解释器的(或操作系统,可做测试用。

-----------------------------tips------------------------------

图像的transforms的组合变换

import matplotlib.pyplot as plt

import torchvision.transforms as tf

from PIL import Image

import PIL

import numpy as np

import torch

import imageio

img = imageio.imread('http://ww1.sinaimg.cn/large/7ec23716gy1g6rx3xt8x1j203d02j3ye.jpg')

img = Image.fromarray(img)

transforms=transforms.Compose([

transforms.RandomHorizontalFlip(),

transforms.CenterCrop(48),

])

for i in range(9):

plt.subplot(331+i)

plt.axis('off')

t=transforms(img)

print(type(t))

plt.imshow(t)

plt.show()

参考:DataLoader和DataSet

--------------------2,读取数据-------------------------------------------

DataLoader中的dataset

''' Fer2013 Dataset class'''

from __future__ import print_function

from PIL import Image

import numpy as np

import h5py

import torch.utils.data as data

import matplotlib.pyplot as plt

class FER2013(data.Dataset):

"""`FER2013 Dataset.

Args:

train (bool, optional): If True, creates dataset from training set, otherwise

creates from test set.

transform (callable, optional): A function/transform that takes in an PIL image

and returns a transformed version. E.g, ``transforms.RandomCrop``

"""

def __init__(self, split='Training', transform=None):

self.transform = transform

self.split = split # training set or test set

self.data = h5py.File('./data/data.h5', 'r', driver='core')

# now load the picked numpy arrays

if self.split == 'Training':

self.train_data = self.data['Training_pixel']

self.train_labels = self.data['Training_label']

self.train_data = np.asarray(self.train_data)

self.train_data = self.train_data.reshape((28709, 48, 48))

elif self.split == 'PublicTest':

self.PublicTest_data = self.data['PublicTest_pixel']

self.PublicTest_labels = self.data['PublicTest_label']

self.PublicTest_data = np.asarray(self.PublicTest_data)

self.PublicTest_data = self.PublicTest_data.reshape((3589, 48, 48))

else:

self.PrivateTest_data = self.data['PrivateTest_pixel']

self.PrivateTest_labels = self.data['PrivateTest_label']

self.PrivateTest_data = np.asarray(self.PrivateTest_data)

self.PrivateTest_data = self.PrivateTest_data.reshape((3589, 48, 48))

def __getitem__(self, index):

"""

Args:

index (int): Index

Returns:

tuple: (image, target) where target is index of the target class.

"""

if self.split == 'Training':

img, target = self.train_data[index], self.train_labels[index]

elif self.split == 'PublicTest':

img, target = self.PublicTest_data[index], self.PublicTest_labels[index]

else:

img, target = self.PrivateTest_data[index], self.PrivateTest_labels[index]

# doing this so that it is consistent with all other datasets

# to return a PIL Image

img = img[:, :, np.newaxis] #(48,48,1)

img = np.concatenate((img, img, img), axis=2) #(48,48,3)

img = Image.fromarray(img)

print(len(img))

if self.transform is not None:

img = self.transform(img)

return img, target

def __len__(self):

if self.split == 'Training':

return len(self.train_data)

elif self.split == 'PublicTest':

return len(self.PublicTest_data)

else:

return len(self.PrivateTest_data)

------------------------3.建立模型-------------------------------

'''VGG11/13/16/19 in Pytorch.'''

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

cfg = {

'VGG11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],

'VGG13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],

'VGG16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],

'VGG19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],

}

class VGG(nn.Module):

def __init__(self, vgg_name):

super(VGG, self).__init__()

self.features = self._make_layers(cfg[vgg_name])

self.classifier = nn.Linear(512, 7)

def forward(self, x):

out = self.features(x)

out = out.view(out.size(0), -1)

out = F.dropout(out, p=0.5, training=self.training)

out = self.classifier(out)

return out

def _make_layers(self, cfg):

layers = []

in_channels = 3

for x in cfg:

if x == 'M':

layers += [nn.MaxPool2d(kernel_size=2, stride=2)]

else:

layers += [nn.Conv2d(in_channels, x, kernel_size=3, padding=1),

nn.BatchNorm2d(x),

nn.ReLU(inplace=True)]

in_channels = x

layers += [nn.AvgPool2d(kernel_size=1, stride=1)]

# print(*layers)

return nn.Sequential(*layers)

---------------------------------4训练模型----------------------------------

TypeError: h5py objects cannot be pickled?

还未找到解决方案

应该不是代码错误的问题而是接口本身存在问题,故采用第二种方法,用pickle保存相关数据

重新进行数据的预处理

**相关数据的保存**

# create data and label for FER2013

# labels: 0=Angry, 1=Disgust, 2=Fear, 3=Happy, 4=Sad, 5=Surprise, 6=Neutral

import csv

import os

import numpy as np

import h5py

file = 'data/fer2013.csv'

# Creat the list to store the data and label information

Training_x = []

Training_y = []

PublicTest_x = []

PublicTest_y = []

PrivateTest_x = []

PrivateTest_y = []

datapath = os.path.join('data','data.h5')

if not os.path.exists(os.path.dirname(datapath)):

os.makedirs(os.path.dirname(datapath))

with open(file,'r') as csvin:

data=csv.reader(csvin)

for row in data:

if row[-1] == 'Training':

temp_list = []

for pixel in row[1].split( ):

temp_list.append(int(pixel))

I = np.asarray(temp_list)

Training_y.append(int(row[0]))

Training_x.append(I.tolist())

if row[-1] == "PublicTest" :

temp_list = []

for pixel in row[1].split( ):

temp_list.append(int(pixel))

I = np.asarray(temp_list)

PublicTest_y.append(int(row[0]))

PublicTest_x.append(I.tolist())

if row[-1] == 'PrivateTest':

temp_list = []

for pixel in row[1].split( ):

temp_list.append(int(pixel))

I = np.asarray(temp_list)

PrivateTest_y.append(int(row[0]))

PrivateTest_x.append(I.tolist())

print(np.shape(Training_x))

print(np.shape(PublicTest_x))

print(np.shape(PrivateTest_x))

import pickle

train_file=open('data/train.txt','wb')

pickle.dump(Training_x,train_file)

pickle.dump(Training_y,train_file)

train_file.close()

public_test_file=open('data/public_test.txt','wb')

pickle.dump(PublicTest_x,public_test_file)

pickle.dump(PublicTest_y,public_test_file)

public_test_file.close()

private_test_file=open('data/private.txt','wb')

pickle.dump(PrivateTest_x,private_test_file)

pickle.dump(PrivateTest_y,private_test_file)

private_test_file.close()

# datafile = h5py.File(datapath, 'w')

# datafile.create_dataset("Training_pixel", dtype = 'uint8', data=Training_x)

# datafile.create_dataset("Training_label", dtype = 'int64', data=Training_y)

# datafile.create_dataset("PublicTest_pixel", dtype = 'uint8', data=PublicTest_x)

# datafile.create_dataset("PublicTest_label", dtype = 'int64', data=PublicTest_y)

# datafile.create_dataset("PrivateTest_pixel", dtype = 'uint8', data=PrivateTest_x)

# datafile.create_dataset("PrivateTest_label", dtype = 'int64', data=PrivateTest_y)

# datafile.close()

print("Save data finish!!!")

数据集包装成dataset的过程(重点)

''' Fer2013 Dataset class'''

from __future__ import print_function

from PIL import Image

import numpy as np

import h5py

import torch.utils.data as data

import pickle

class FER2013(data.Dataset):

"""`FER2013 Dataset.

Args:

train (bool, optional): If True, creates dataset from training set, otherwise

creates from test set.

transform (callable, optional): A function/transform that takes in an PIL image

and returns a transformed version. E.g, ``transforms.RandomCrop``

"""

def __init__(self, split='Training', transform=None):

self.transform = transform

self.split = split # training set or test set

# self.data = h5py.File('./data/data.h5', 'r', driver='core')

train_fr=open('data/train.txt','rb')

public_fr=open('data/public_test.txt','rb')

private_fr=open('data/private.txt','rb')

# now load the picked numpy arrays

if self.split == 'Training':

self.train_data = pickle.load(train_fr)

self.train_labels = pickle.load(train_fr)

self.train_data = np.asarray(self.train_data)

self.train_data = self.train_data.reshape((28709, 48, 48))

train_fr.close()

elif self.split == 'PublicTest':

self.PublicTest_data = pickle.load(public_fr)

self.PublicTest_labels = pickle.load(public_fr)

self.PublicTest_data = np.asarray(self.PublicTest_data)

self.PublicTest_data = self.PublicTest_data.reshape((3589, 48, 48))

public_fr.close()

else:

self.PrivateTest_data = pickle.load(private_fr)

self.PrivateTest_labels = pickle.load(private_fr)

self.PrivateTest_data = np.asarray(self.PrivateTest_data)

self.PrivateTest_data = self.PrivateTest_data.reshape((3589, 48, 48))

private_fr.close()

def __getitem__(self, index):

"""

Args:

index (int): Index

Returns:

tuple: (image, target) where target is index of the target class.

"""

if self.split == 'Training':

img, target = self.train_data[index], self.train_labels[index]

elif self.split == 'PublicTest':

img, target = self.PublicTest_data[index], self.PublicTest_labels[index]

else:

img, target = self.PrivateTest_data[index], self.PrivateTest_labels[index]

# doing this so that it is consistent with all other datasets

# to return a PIL Image

img = img[:, :, np.newaxis]

img = np.concatenate((img, img, img), axis=2) #(48,48,3)

img = Image.fromarray(np.uint8(img)) #转化成PIL文件了 np.uint8(0-255)

if self.transform is not None:

img = self.transform(img)

return img, target

def __len__(self):

if self.split == 'Training':

return len(self.train_data)

elif self.split == 'PublicTest':

return len(self.PublicTest_data)

else:

return len(self.PrivateTest_data)

import torchvision.transforms as transforms

# # 定义对数据的预处理

transform_train = transforms.Compose([

transforms.RandomCrop(44), #依据给定的size随机裁剪

transforms.RandomHorizontalFlip(), #依据概率p对PIL图片进行水平翻转,默认是0.5

transforms.ToTensor(), #array -> Tensor

])

import cv2

import torch

if __name__ == '__main__':

trainset = FER2013(split='Training', transform=transform_train)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=128, shuffle=True, num_workers=1)

for i,(batch_x,batch_y) in enumerate(trainloader):

print(batch_x.numpy().shape) #(128,3,44,44)

print(batch_y.numpy().shape) #(128,)

break

训练模型

# Training

def train(epoch):

print('\nEpoch: %d' % epoch)

global Train_acc

net.train()

train_loss = 0

correct = 0

total = 0

if epoch > learning_rate_decay_start and learning_rate_decay_start >= 0:

frac = (epoch - learning_rate_decay_start) // learning_rate_decay_every

decay_factor = learning_rate_decay_rate ** frac

current_lr = opt.lr * decay_factor ##指数衰减。例如:随着迭代轮数的增加学习率自动发生衰减

utils.set_lr(optimizer, current_lr) # set the decayed rate

else:

current_lr = opt.lr

print('learning_rate: %s' % str(current_lr))

for batch_idx, (inputs, targets) in enumerate(trainloader):

if use_cuda:

inputs, targets = inputs.cuda(), targets.cuda()

optimizer.zero_grad()

inputs, targets = Variable(inputs), Variable(targets)

outputs = net(inputs)

loss = criterion(outputs, targets)

loss.backward() #反向传播

utils.clip_gradient(optimizer, 0.1) #梯度截断

optimizer.step() #用SGD更新参数

train_loss += np.array(loss.data)

_, predicted = torch.max(outputs.data, 1) #返回每一行最大值的元素,并返回索引

total += targets.size(0)

correct += predicted.eq(targets.data).cpu().sum()

utils.progress_bar(batch_idx, len(trainloader), 'Loss: %.3f | Acc: %.3f%% (%d/%d)'

% (train_loss/(batch_idx+1), 100.*correct/total, correct, total))

Train_acc = 100.*correct/total

保存模型

# Save checkpoint.

PrivateTest_acc = 100.*correct/total

if PrivateTest_acc > best_PrivateTest_acc:

print('Saving..')

print("best_PrivateTest_acc: %0.3f" % PrivateTest_acc)

state = {

'net': net.state_dict() if use_cuda else net,

'best_PublicTest_acc': best_PublicTest_acc,

'best_PrivateTest_acc': PrivateTest_acc,

'best_PublicTest_acc_epoch': best_PublicTest_acc_epoch,

'best_PrivateTest_acc_epoch': epoch,

}

if not os.path.isdir(path):

os.mkdir(path)

torch.save(state, os.path.join(path,'PrivateTest_model.t7'))

best_PrivateTest_acc = PrivateTest_acc

best_PrivateTest_acc_epoch = epoch

加载模型

parser.add_argument('--resume', '-r', action='store_true', help='resume from checkpoint')

#直接运行python a.py,输出结果False

#运行python a.py --resume,输出结果True

opt = parser.parse_args() #建立解析对象

if opt.resume:

# Load checkpoint.

print('==> Resuming from checkpoint..')

assert os.path.isdir(path), 'Error: no checkpoint directory found!'

checkpoint = torch.load(os.path.join(path,'PrivateTest_model.t7'))

net.load_state_dict(checkpoint['net'])

best_PublicTest_acc = checkpoint['best_PublicTest_acc']

best_PrivateTest_acc = checkpoint['best_PrivateTest_acc']

best_PrivateTest_acc_epoch = checkpoint['best_PublicTest_acc_epoch']

best_PrivateTest_acc_epoch = checkpoint['best_PrivateTest_acc_epoch']

start_epoch = checkpoint['best_PrivateTest_acc_epoch'] + 1

else:

print('==> Building model..')

图像增广后,取结果的平均值

transform_test = transforms.Compose([

transforms.TenCrop(cut_size),

transforms.Lambda(lambda crops: torch.stack([transforms.ToTensor()(crop) for crop in crops])),

])

应用到测试图像后,增广图像,结果取平均

bs, ncrops, c, h, w = np.shape(inputs)

outputs = net(inputs)

outputs_avg = outputs.view(bs, ncrops, -1).mean(1) # avg over crops

打印结果

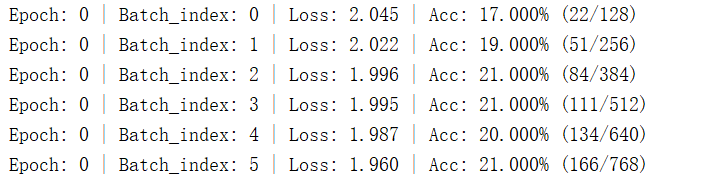

print('Epoch: %d | Batch_index: %d | Loss: %.3f | Acc: %.3f%% (%d/%d) '

%(epoch,batch_idx,train_loss/(batch_idx+1), 100.*correct/total, correct, total))

在terminal鼠标右键close session清除对话,可以重新使用命令符

可以在Python文件直接使用命令符语句

cmd = 'python mainpro_FER.py --resume'

os.system(cmd)

fer2013 PrivateTest集上的结果

def PublicTest(epoch):

global PublicTest_acc

global best_PublicTest_acc

global best_PublicTest_acc_epoch

net.eval()

PublicTest_loss = 0

correct = 0

total = 0

for batch_idx, (inputs, targets) in enumerate(PublicTestloader):

bs, ncrops, c, h, w = np.shape(inputs)

inputs = inputs.view(-1, c, h, w)

if use_cuda:

inputs, targets = inputs.cuda(), targets.cuda()

inputs, targets = Variable(inputs, volatile=True), Variable(targets)

outputs = net(inputs)

outputs_avg = outputs.view(bs, ncrops, -1).mean(1) # avg over crops

loss = criterion(outputs_avg, targets)

PublicTest_loss += loss.data[0]

_, predicted = torch.max(outputs_avg.data, 1)

total += targets.size(0)

correct += predicted.eq(targets.data).cpu().sum()

utils.progress_bar(batch_idx, len(PublicTestloader), 'Loss: %.3f | Acc: %.3f%% (%d/%d)'

% (PublicTest_loss / (batch_idx + 1), 100. * correct / total, correct, total))

# Save checkpoint.

PublicTest_acc = 100.*correct/total

if PublicTest_acc > best_PublicTest_acc:

print('Saving..')

print("best_PublicTest_acc: %0.3f" % PublicTest_acc)

state = {

'net': net.state_dict() if use_cuda else net, #保存网络

'acc': PublicTest_acc,

'epoch': epoch,

}

if not os.path.isdir(path):

os.mkdir(path)

torch.save(state, os.path.join(path,'PublicTest_model.t7'))

best_PublicTest_acc = PublicTest_acc #最好的精确值

best_PublicTest_acc_epoch = epoch #最佳迭代次数

图像可视化

"""

visualize results for test image

"""

import numpy as np

import matplotlib.pyplot as plt

from PIL import Image

import torch

import torch.nn as nn

import torch.nn.functional as F

import os

from torch.autograd import Variable

import transforms as transforms

from skimage import io

from skimage.transform import resize

from models import *

cut_size = 44

transform_test = transforms.Compose([

transforms.TenCrop(cut_size),

transforms.Lambda(lambda crops: torch.stack([transforms.ToTensor()(crop) for crop in crops])),

])

def rgb2gray(rgb):

return np.dot(rgb[...,:3], [0.299, 0.587, 0.114])

raw_img = io.imread('images/1.jpg')

gray = rgb2gray(raw_img)

gray = resize(gray, (48,48), mode='symmetric').astype(np.uint8)

img = gray[:, :, np.newaxis]

img = np.concatenate((img, img, img), axis=2)#(48,48,3)

img = Image.fromarray(img) #转化成PIL文件

inputs = transform_test(img) #transform

class_names = ['Angry', 'Disgust', 'Fear', 'Happy', 'Sad', 'Surprise', 'Neutral']

net = VGG('VGG19')#加载网络模型

checkpoint = torch.load(os.path.join('FER2013_VGG19', 'PrivateTest_model.t7'),map_location='cpu')

net.load_state_dict(checkpoint['net'])

# net.cuda()

net.eval()

ncrops, c, h, w = np.shape(inputs)

inputs = inputs.view(-1, c, h, w)

# inputs = inputs.cuda()

inputs = Variable(inputs, volatile=True)

outputs = net(inputs)

outputs_avg = outputs.view(ncrops, -1).mean(0) # avg over crops

#使用数据增强后的结果测试效果会更强

score = F.softmax(outputs_avg)

# print(type(score)) #tensor

_, predicted = torch.max(score.data, 0)

plt.rcParams['figure.figsize'] = (13.5,5.5)

axes=plt.subplot(1, 3, 1)

plt.imshow(raw_img)

plt.xlabel('Input Image', fontsize=16)

axes.set_xticks([])

axes.set_yticks([])

plt.tight_layout()

plt.subplots_adjust(left=0.05, bottom=0.2, right=0.95, top=0.9, hspace=0.02, wspace=0.3)

plt.subplot(1, 3, 2)

ind = 0.1+0.6*np.arange(len(class_names)) # the x locations for the groups

width = 0.4 # the width of the bars: can also be len(x) sequence

color_list = ['red','orangered','darkorange','limegreen','darkgreen','royalblue','navy']

for i in range(len(class_names)):

plt.bar(ind[i], score.data.cpu().numpy()[i], width, color=color_list[i])

plt.title("Classification results ",fontsize=20)

plt.xlabel(" Expression Category ",fontsize=16)

plt.ylabel(" Classification Score ",fontsize=16)

plt.xticks(ind, class_names, rotation=45, fontsize=14)

axes=plt.subplot(1, 3, 3)

emojis_img = io.imread('images/emojis/%s.png' % str(class_names[int(predicted.cpu().numpy())]))

plt.imshow(emojis_img)

plt.xlabel('Emoji Expression', fontsize=16)

axes.set_xticks([])

axes.set_yticks([])

plt.tight_layout()

# show emojis

plt.show()

plt.savefig(os.path.join('images/results/l.png'))

plt.close()

print("The Expression is %s" %str(class_names[int(predicted.cpu().numpy())]))