机器学习之决策树算法(2)

上面一篇博文我们使用决策树建立模型预测泰坦尼克号幸存者的数量。但出现了过拟合现象,现在我们尝试着优化模型参数,提高预测精度。

首先我们把上面一篇数据处理的代码复制过来:

import pandas as pd;

def DataAnalyse():

data=pd.read_csv("./titanic/train.csv");

"""数据中有些对我们完全没有用的信息,我们要去掉,比如:名字,票号,船舱号,样本的ID号"""

data.drop(["PassengerId","Cabin","Ticket","Name","Embarked"],axis=1,inplace=True);#删除了四个,我们还有7个特征。其中一个是标签

"""对性别进行编码"""

data["Sex"]=(data['Sex']=='male').astype("int")

"""处理登船港口"""

#labels=data["Embarked"].unique().tolist()

#data["Embarked"]=data["Embarked"].apply(lambda n:labels.index(n))

"""数据中有一些没有值得,我们全部补0"""

data=data.fillna(0)

Y_train=data["Survived"]

data.drop(["Survived"],axis=1,inplace=True)#在本身上操作。

X_train=data;

return X_train,Y_train;

from sklearn.model_selection import train_test_split

def datasplit(X,Y):

x_train,x_test,y_train,y_test=train_test_split(X,Y,test_size=0.2);

return x_train,x_test,y_train,y_test;

from sklearn.tree import DecisionTreeClassifier接下来我们选用限制决策树深度的方式来进行优化,首先我们知道,决策树至少有两层,但好像不太好限制最高有几层。我们先设置最高有6*2=12层吧。

import numpy as np;

def max_depth(x_train, x_test, y_train, y_test):

depth=range(2,15);

trscore=[];

tsscore=[];

for i in depth:

clf=DecisionTreeClassifier(max_depth=i);

clf.fit(x_train,y_train);

train_score = clf.score(x_train, y_train)

trscore.append(train_score)

test_score = clf.score(x_test, y_test)

tsscore.append(test_score)

best_index=np.argmax(tsscore);

print(tsscore)

best_score=tsscore[best_index]

x=depth[best_index]

print("最优深度:",x,"得分:",best_score)

plt.figure();

plt.plot(depth,tsscore,".g--",label="测试")

plt.plot(depth,trscore,".r--",label="训练")

plt.show()

if __name__ == '__main__':

X_train,Y_train=DataAnalyse();

x_train, x_test, y_train, y_test=datasplit(X_train,Y_train)

max_depth(x_train, x_test, y_train, y_test)运行结果:

[0.776536312849162, 0.8212290502793296, 0.8212290502793296, 0.8547486033519553, 0.8379888268156425, 0.8044692737430168, 0.8044692737430168, 0.7877094972067039, 0.7932960893854749, 0.776536312849162, 0.7877094972067039, 0.7430167597765364, 0.7653631284916201]

最优深度: 5 得分: 0.8547486033519553比上一篇的结果好一些了。

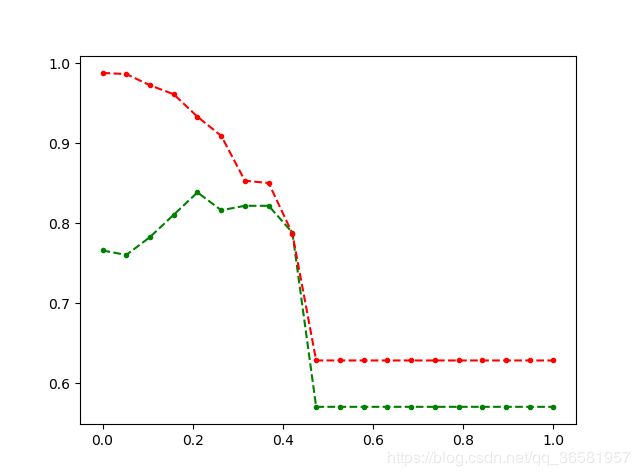

为了更直观的展示一下,我们尝试着把它图形化。

图中红线是训练集评分,绿色是测试集评分。可以看出测试集在决策树深度为5的时候评分开始下降了。所以可以确定是5。另外需要指明的一点是我们的训练集和测试集是随机分的,所有每次运行的结果是不一样的。

下面我们尝试修改阈值来优化模型,尝试提高预测精度。

from sklearn.model_selection import train_test_split

def datasplit(X,Y):

x_train,x_test,y_train,y_test=train_test_split(X,Y,test_size=0.2);

return x_train,x_test,y_train,y_test;

from sklearn.tree import DecisionTreeClassifier

import numpy as np;

import matplotlib.pyplot as plt;

def Value(x_train, x_test, y_train, y_test):

value=np.linspace(0,1,20)

trscore=[];

tsscore=[];

for i in value:

clf=DecisionTreeClassifier(criterion="gini",min_impurity_split=i);

clf.fit(x_train,y_train);

train_score = clf.score(x_train, y_train)

trscore.append(train_score)

test_score = clf.score(x_test, y_test)

tsscore.append(test_score)

best_index=np.argmax(tsscore);

print(tsscore)

best_score=tsscore[best_index]

x=value[best_index]

print("最优阈值:",x,"得分:",best_score)

plt.figure();

plt.plot(value,tsscore,".g--",label="测试")

plt.plot(value,trscore,".r--",label="训练")

plt.show()

if __name__ == '__main__':

X_train,Y_train=DataAnalyse();

x_train, x_test, y_train, y_test=datasplit(X_train,Y_train)

Value(x_train, x_test, y_train, y_test)运行结果:

[0.7653631284916201, 0.7597765363128491, 0.7821229050279329, 0.8100558659217877, 0.8379888268156425, 0.8156424581005587, 0.8212290502793296, 0.8212290502793296, 0.7877094972067039, 0.5698324022346368, 0.5698324022346368, 0.5698324022346368, 0.5698324022346368, 0.5698324022346368, 0.5698324022346368, 0.5698324022346368, 0.5698324022346368, 0.5698324022346368, 0.5698324022346368, 0.5698324022346368]

最优阈值: 0.21052631578947367 得分: 0.8379888268156425