Compiling Hadoop from Source on CentOS 7.5

Why compile Hadoop?

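The prebuilt Hadoop package that Apache distributes does not come with full native-library support. The native libraries are used for compression, for access from C programs, and so on, so problems appear when you try to use them. The fix is to recompile the Hadoop source package.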

1. Prepare the Linux environment

1.1 Disable the firewall (for learning environments only)

[root@localhost ~]# systemctl status firewalld

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# systemctl disable firewalld

1.2 Disable SELinux (for learning environments only)

# Edit the SELinux configuration file

vim /etc/selinux/config

Change the setting to:

SELINUX=disabled
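You can make the same change without opening an editor. The sketch below assumes the stock file layout, where the mode sits on a line starting with SELINUX=. disable_selinux_config is a hypothetical helper name. The file change only takes effect after a reboot. Until then, setenforce 0 switches the running system to permissive mode, and getenforce shows the current mode.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the SELINUX= line of an SELinux config file.
# Defaults to /etc/selinux/config; pass another path to try it on a copy first.
disable_selinux_config() {
  local config="${1:-/etc/selinux/config}"
  # Fail early with a clear message if the file is missing.
  [ -f "$config" ] || { echo "no such file: $config" >&2; return 1; }
  # Only lines beginning with exactly "SELINUX=" match, so SELINUXTYPE= is left alone.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config"
}
```

Try it on a copy first: cp /etc/selinux/config /tmp/config, then run disable_selinux_config /tmp/config. Once that looks right, run it as root against the real file.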

- 什么是SELinux

- SELinux是Linux的一种安全子系统

- Linux中的权限管理是针对于文件的, 而不是针对进程的, 也就是说, 如果root启动了某个进程, 则这个进程可以操作任何一个文件

- SELinux在Linux的文件权限之外, 增加了对进程的限制, 进程只能在进程允许的范围内操作资源

- 为什么要关闭SELinux

- 如果开启了SELinux, 需要做非常复杂的配置, 才能正常使用系统, 在学习阶段, 在非生产环境, 一般不使用SELinux

- SELinux的工作模式

- enforcing 强制模式

- permissive 宽容模式

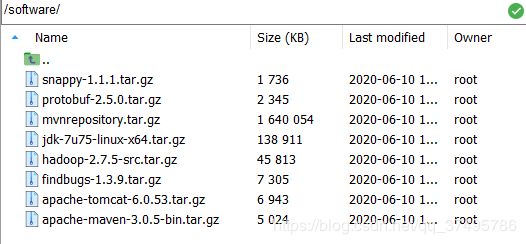

- disable 关闭1.3 创建目录,并将所需文件上传

[root@localhost /]# mkdir software

[root@localhost /]# cd software/

2. Install the JDK

[root@localhost software]# rpm -qa | grep java

[root@localhost software]# tar -zxvf jdk-7u75-linux-x64.tar.gz

[root@localhost software]# vim /etc/profile

Append the following:

export JAVA_HOME="/software/jdk1.7.0_75"

export CLASSPATH="$JAVA_HOME/lib"

export PATH="$JAVA_HOME/bin:$PATH"

Apply the configuration in /etc/profile and check the Java version:

[root@localhost jdk1.7.0_75]# source /etc/profile

[root@localhost jdk1.7.0_75]# java -version

java version "1.7.0_75"

Java(TM) SE Runtime Environment (build 1.7.0_75-b13)

Java HotSpot(TM) 64-Bit Server VM (build 24.75-b04, mixed mode)
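Sections 3 and 5 below extend /etc/profile again, and redoing a step by hand can easily leave duplicate export lines. If you would rather script these edits, here is a minimal sketch. append_once and add_jdk_env are hypothetical helpers, and the paths are the ones used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE unless FILE already contains that exact line.
append_once() {
  local line="$1" file="$2"
  # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string.
  # If the file does not exist yet, grep fails and the line is appended (creating it).
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# The JDK settings from this section, written through append_once.
# Single quotes keep $JAVA_HOME and $PATH literal in the profile file.
add_jdk_env() {
  local file="${1:-/etc/profile}"
  append_once 'export JAVA_HOME="/software/jdk1.7.0_75"' "$file"
  append_once 'export CLASSPATH="$JAVA_HOME/lib"' "$file"
  append_once 'export PATH="$JAVA_HOME/bin:$PATH"' "$file"
}
```

Run add_jdk_env as root, then run source /etc/profile again. Running it a second time adds nothing.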

3. Configure Maven

[root@localhost software]# tar -zxvf apache-maven-3.0.5-bin.tar.gz

[root@localhost software]# vim /etc/profile

[root@localhost software]# source /etc/profile

The edit made to /etc/profile in vim (before running source) adds the following:

export JAVA_HOME="/software/jdk1.7.0_75"

export CLASSPATH="$JAVA_HOME/lib"

export PATH="$JAVA_HOME/bin:$PATH"

(new lines below)

export MAVEN_HOME=/software/apache-maven-3.0.5

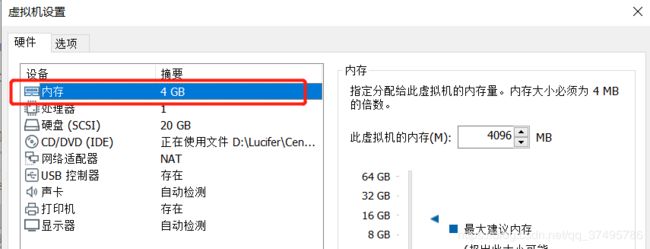

export MAVEN_OPTS="-Xms4096m -Xmx4096m"

export PATH=$MAVEN_HOME/bin:$PATH
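The MAVEN_OPTS line above sets both the initial and the maximum heap of Maven's JVM to 4 GB (-Xms4096m -Xmx4096m). If the virtual machine has less memory than that, the JVM may fail to start or run very slowly. In that case, one option is to lower both values. Below is a rough check you can run first. It assumes a Linux /proc/meminfo, and has_enough_memory is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if MemTotal in a meminfo-style file is at least NEEDED_MB.
# Reads /proc/meminfo by default; MemTotal there is reported in kB.
has_enough_memory() {
  local needed_mb="$1" meminfo="${2:-/proc/meminfo}"
  local total_kb
  total_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
  [ -n "$total_kb" ] || return 2   # no MemTotal line found
  [ $(( total_kb / 1024 )) -ge "$needed_mb" ]
}
```

Usage: has_enough_memory 4096 || echo "consider smaller -Xms/-Xmx values". MemTotal is always slightly below the installed RAM, so a VM with exactly 4 GB will fail a 4096 MB check. Treat the result as a hint, not a hard limit.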

4. Extract the local repository, then configure the local repository and the central mirror

[root@localhost software]# tar -zxvf mvnrepository.tar.gz

[root@localhost software]# cd mvnrepository

[root@localhost mvnrepository]# pwd

/software/mvnrepository

[root@localhost mvnrepository]# cd ..

[root@localhost software]# cd apache-maven-3.0.5

[root@localhost apache-maven-3.0.5]# ls

bin boot conf lib LICENSE.txt NOTICE.txt README.txt

[root@localhost apache-maven-3.0.5]# cd conf/

[root@localhost conf]# ls

settings.xml

[root@localhost conf]# vim settings.xml

Add the following to settings.xml:

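<localRepository>/software/mvnrepository/</localRepository>

(omitted......)

<!-- inside the existing <mirrors> element -->
<mirror>
    <id>aliyun</id>
    <name>aliyun Maven</name>
    <mirrorOf>*</mirrorOf>
    <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
</mirror>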
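Maven also reads a per-user settings file, ~/.m2/settings.xml, and its values take precedence over the global conf/settings.xml. Instead of editing the file under apache-maven-3.0.5/conf, you could write a standalone settings file with the same local repository and mirror. Here is a minimal sketch. The function name and its argument order are illustrative, not part of Maven.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal Maven settings file containing a local
# repository path and a single mirror that covers every repository ("*").
write_maven_settings() {
  local out="$1" repo="$2" mirror_url="$3"
  mkdir -p "$(dirname "$out")"
  cat > "$out" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">
  <localRepository>${repo}</localRepository>
  <mirrors>
    <mirror>
      <id>aliyun</id>
      <name>aliyun Maven</name>
      <mirrorOf>*</mirrorOf>
      <url>${mirror_url}</url>
    </mirror>
  </mirrors>
</settings>
EOF
}
```

Usage: write_maven_settings ~/.m2/settings.xml /software/mvnrepository/ http://maven.aliyun.com/nexus/content/groups/public/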

5. Configure FindBugs

[root@localhost software]# tar -xvf findbugs-1.3.9.tar.gz

[root@localhost software]# vim /etc/profile

[root@localhost software]# source /etc/profile

The edit made to /etc/profile in vim (before running source) adds the following:

export JAVA_HOME="/software/jdk1.7.0_75"

export CLASSPATH="$JAVA_HOME/lib"

export PATH="$JAVA_HOME/bin:$PATH"

export MAVEN_HOME=/software/apache-maven-3.0.5

export MAVEN_OPTS="-Xms4096m -Xmx4096m"

export PATH=$MAVEN_HOME/bin:$PATH

(new lines)

export FINDBUGS_HOME=/software/findbugs-1.3.9

export PATH=$FINDBUGS_HOME/bin:$PATH
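Once /etc/profile holds all three tools, a quick check after source /etc/profile can catch a typo in a path before the long build does. Here is a minimal sketch with two hypothetical helpers. check_home_dirs verifies that each named variable points to an existing directory. missing_commands lists commands not found on PATH.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight checks; run them after "source /etc/profile".

# Report every named variable that is unset or does not name an existing directory.
check_home_dirs() {
  local name value status=0
  for name in "$@"; do
    value="${!name:-}"   # indirect expansion: the value of the variable whose name is $name
    if [ -z "$value" ]; then
      echo "$name is not set"
      status=1
    elif [ ! -d "$value" ]; then
      echo "$name=$value is not a directory"
      status=1
    fi
  done
  return "$status"
}

# Print each command that cannot be found on PATH; non-zero status if any is missing.
missing_commands() {
  local cmd status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      status=1
    fi
  done
  return "$status"
}
```

With the profile above in effect, both check_home_dirs JAVA_HOME MAVEN_HOME FINDBUGS_HOME and missing_commands java mvn findbugs should print nothing.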

6. Install dependencies

Install the following dependencies online with yum:

yum install autoconf automake libtool cmake

yum install ncurses-devel

yum install openssl-devel

yum install lzo-devel zlib-devel gcc gcc-c++

The dependency needed for bzip2 compression:

yum install -y bzip2-devel

7. Install protobuf

[root@localhost software]# tar -zxvf protobuf-2.5.0.tar.gz

[root@localhost software]# cd protobuf-2.5.0

[root@localhost protobuf-2.5.0]# ls

aclocal.m4 config.sub depcomp install-sh Makefile.am python

autogen.sh configure editors INSTALL.txt Makefile.in README.txt

CHANGES.txt configure.ac examples java missing src

config.guess CONTRIBUTORS.txt generate_descriptor_proto.sh ltmain.sh protobuf-lite.pc.in vsprojects

config.h.in COPYING.txt gtest m4 protobuf.pc.in

[root@localhost protobuf-2.5.0]# ./configure

(output omitted.....)

[root@localhost protobuf-2.5.0]# make && make install

(output omitted..... this takes a while....)
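Hadoop 2.7's BUILDING.txt lists ProtocolBuffer 2.5.0 as required. If a different protoc version is found first on PATH, expect the build to stop at the protoc step. Here is a small sketch to confirm which protoc the build will pick up. The function names are hypothetical, and it assumes protoc --version prints a line such as libprotoc 2.5.0.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given "protoc --version" output reports 2.5.0.
is_protoc_250() {
  [ "$1" = "libprotoc 2.5.0" ]
}

# Run the protoc found on PATH and check its version.
check_protoc() {
  local out
  out=$(protoc --version 2>&1) || { echo "protoc not runnable: $out" >&2; return 1; }
  is_protoc_250 "$out" || { echo "unexpected protoc version: $out" >&2; return 1; }
}
```

After make install, check_protoc should return silently. Any message it prints points at the protoc installation.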

8. Install snappy

[root@localhost software]# tar -zxvf snappy-1.1.1.tar.gz

(output omitted.....)

[root@localhost snappy-1.1.1]# ./configure

(output omitted.....)

[root@localhost snappy-1.1.1]# make && make install

(output omitted.....)
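The build command in the next step passes -Drequire.snappy, which makes the build fail when the snappy library cannot be found. Before starting the long build, it is worth confirming where the library ended up. snappy's configure script installs under /usr/local by default, so make install normally places it in /usr/local/lib. The helper below is hypothetical, and its default directories are assumptions about this setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first libsnappy shared object found in the given
# directories (defaults: /usr/local/lib, where "make install" puts it, and /usr/lib64).
find_snappy() {
  local dir f
  [ "$#" -gt 0 ] || set -- /usr/local/lib /usr/lib64
  for dir in "$@"; do
    for f in "$dir"/libsnappy.so*; do
      # If the glob matched nothing, f is the literal pattern and -e is false.
      [ -e "$f" ] && { echo "$f"; return 0; }
    done
  done
  return 1
}
```

Usage: find_snappy || echo "libsnappy not found". The non-zero status makes it easy to use in a script that stops before running Maven.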

9. Compile Hadoop

[root@localhost software]# tar -zxvf hadoop-2.7.5-src.tar.gz

(output omitted.....)

[root@localhost hadoop-2.7.5-src]# ls

BUILDING.txt hadoop-client-modules hadoop-mapreduce-project hadoop-tools README.txt

dev-support hadoop-cloud-storage-project hadoop-maven-plugins hadoop-yarn-project

hadoop-assemblies hadoop-common-project hadoop-minicluster LICENSE.txt

hadoop-build-tools hadoop-dist hadoop-project NOTICE.txt

hadoop-client hadoop-hdfs-project hadoop-project-dist pom.xml

[root@localhost hadoop-2.7.5-src]# mvn package -DskipTests -Pdist,native -Dtar -Drequire.snappy -e -X

(This takes a long time...... be patient........)

Note:

Problem: if mvn package -DskipTests -Pdist,native -Dtar -Drequire.snappy -e -X is not run from the hadoop-2.7.5-src directory, it fails with the following error:

The goal you specified requires a project to execute but there is no POM in this directory (/software/apache-maven-3.0.5/conf). Please verify you invoked Maven from the correct directory. -> [Help 1]

org.apache.maven.lifecycle.MissingProjectException: The goal you specified requires a project to execute but there is no POM in this directory (/software/apache-maven-3.0.5/conf). Please verify you invoked Maven from the correct directory.

Cause: there is no pom.xml in the current directory. Run the command from the correct directory, the source root that contains the top-level pom.xml.
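You can avoid this error by always starting Maven from the directory that holds the top-level pom.xml. Here is a minimal sketch, assuming the source tree was extracted to /software/hadoop-2.7.5-src as in this article. require_pom and build_hadoop are illustrative names, and the Maven command is the same one used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail with a clear message unless DIR contains a pom.xml.
require_pom() {
  local dir="${1:-.}"
  if [ ! -f "$dir/pom.xml" ]; then
    echo "no pom.xml in $dir; cd into the source root (e.g. hadoop-2.7.5-src) first" >&2
    return 1
  fi
}

# Run the build from the source root inside a subshell,
# so the caller's working directory is left unchanged.
build_hadoop() {
  local src="$1"
  require_pom "$src" || return 1
  ( cd "$src" && mvn package -DskipTests -Pdist,native -Dtar -Drequire.snappy -e -X )
}
```

Usage: build_hadoop /software/hadoop-2.7.5-src works from any directory, including apache-maven-3.0.5/conf.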

After a long wait, the build succeeds:

[INFO] Apache Hadoop Main ................................ SUCCESS [1:08.058s]

[INFO] Apache Hadoop Build Tools ......................... SUCCESS [1:34.298s]

[INFO] Apache Hadoop Project POM ......................... SUCCESS [33.924s]

[INFO] Apache Hadoop Annotations ......................... SUCCESS [18.272s]

[INFO] Apache Hadoop Assemblies .......................... SUCCESS [0.242s]

[INFO] Apache Hadoop Project Dist POM .................... SUCCESS [10.577s]

[INFO] Apache Hadoop Maven Plugins ....................... SUCCESS [21.456s]

[INFO] Apache Hadoop MiniKDC ............................. SUCCESS [21.365s]

[INFO] Apache Hadoop Auth ................................ SUCCESS [16.569s]

[INFO] Apache Hadoop Auth Examples ....................... SUCCESS [9.642s]

[INFO] Apache Hadoop Common .............................. SUCCESS [2:14.069s]

[INFO] Apache Hadoop NFS ................................. SUCCESS [7.531s]

[INFO] Apache Hadoop KMS ................................. SUCCESS [16.149s]

[INFO] Apache Hadoop Common Project ...................... SUCCESS [0.077s]

[INFO] Apache Hadoop HDFS ................................ SUCCESS [2:18.683s]

[INFO] Apache Hadoop HttpFS .............................. SUCCESS [19.533s]

[INFO] Apache Hadoop HDFS BookKeeper Journal ............. SUCCESS [12.978s]

[INFO] Apache Hadoop HDFS-NFS ............................ SUCCESS [4.069s]

[INFO] Apache Hadoop HDFS Project ........................ SUCCESS [0.046s]

[INFO] hadoop-yarn ....................................... SUCCESS [0.043s]

[INFO] hadoop-yarn-api ................................... SUCCESS [1:35.579s]

[INFO] hadoop-yarn-common ................................ SUCCESS [38.506s]

[INFO] hadoop-yarn-server ................................ SUCCESS [0.063s]

[INFO] hadoop-yarn-server-common ......................... SUCCESS [14.256s]

[INFO] hadoop-yarn-server-nodemanager .................... SUCCESS [21.133s]

[INFO] hadoop-yarn-server-web-proxy ...................... SUCCESS [3.585s]

[INFO] hadoop-yarn-server-applicationhistoryservice ...... SUCCESS [10.922s]

[INFO] hadoop-yarn-server-resourcemanager ................ SUCCESS [22.381s]

[INFO] hadoop-yarn-server-tests .......................... SUCCESS [10.018s]

[INFO] hadoop-yarn-client ................................ SUCCESS [12.430s]

[INFO] hadoop-yarn-server-sharedcachemanager ............. SUCCESS [4.094s]

[INFO] hadoop-yarn-applications .......................... SUCCESS [0.049s]

[INFO] hadoop-yarn-applications-distributedshell ......... SUCCESS [2.936s]

[INFO] hadoop-yarn-applications-unmanaged-am-launcher .... SUCCESS [2.242s]

[INFO] hadoop-yarn-site .................................. SUCCESS [0.038s]

[INFO] hadoop-yarn-registry .............................. SUCCESS [6.001s]

[INFO] hadoop-yarn-project ............................... SUCCESS [3.378s]

[INFO] hadoop-mapreduce-client ........................... SUCCESS [0.192s]

[INFO] hadoop-mapreduce-client-core ...................... SUCCESS [23.094s]

[INFO] hadoop-mapreduce-client-common .................... SUCCESS [18.725s]

[INFO] hadoop-mapreduce-client-shuffle ................... SUCCESS [4.673s]

[INFO] hadoop-mapreduce-client-app ....................... SUCCESS [11.250s]

[INFO] hadoop-mapreduce-client-hs ........................ SUCCESS [6.795s]

[INFO] hadoop-mapreduce-client-jobclient ................. SUCCESS [6.088s]

[INFO] hadoop-mapreduce-client-hs-plugins ................ SUCCESS [2.123s]

[INFO] Apache Hadoop MapReduce Examples .................. SUCCESS [6.972s]

[INFO] hadoop-mapreduce .................................. SUCCESS [2.558s]

[INFO] Apache Hadoop MapReduce Streaming ................. SUCCESS [5.398s]

[INFO] Apache Hadoop Distributed Copy .................... SUCCESS [14.310s]

[INFO] Apache Hadoop Archives ............................ SUCCESS [2.460s]

[INFO] Apache Hadoop Rumen ............................... SUCCESS [8.569s]

[INFO] Apache Hadoop Gridmix ............................. SUCCESS [4.836s]

[INFO] Apache Hadoop Data Join ........................... SUCCESS [3.423s]

[INFO] Apache Hadoop Ant Tasks ........................... SUCCESS [2.513s]

[INFO] Apache Hadoop Extras .............................. SUCCESS [4.264s]

[INFO] Apache Hadoop Pipes ............................... SUCCESS [5.905s]

[INFO] Apache Hadoop OpenStack support ................... SUCCESS [5.088s]

[INFO] Apache Hadoop Amazon Web Services support ......... SUCCESS [25.001s]

[INFO] Apache Hadoop Azure support ....................... SUCCESS [6.645s]

[INFO] Apache Hadoop Client .............................. SUCCESS [6.854s]

[INFO] Apache Hadoop Mini-Cluster ........................ SUCCESS [0.517s]

[INFO] Apache Hadoop Scheduler Load Simulator ............ SUCCESS [9.235s]

[INFO] Apache Hadoop Tools Dist .......................... SUCCESS [7.879s]

[INFO] Apache Hadoop Tools ............................... SUCCESS [0.067s]

[INFO] Apache Hadoop Distribution ........................ SUCCESS [29.673s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 18:58.886s

[INFO] Finished at: Wed Jun 10 12:39:38 CST 2020

[INFO] Final Memory: 254M/3959M
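With this much output, it is easier to save the log and search it than to scroll back. This sketch assumes you captured the log by piping the mvn package command above through 2>&1 | tee build.log. Add set -o pipefail so the pipeline still reports mvn's exit status. check_build_log is a hypothetical helper. It looks for the BUILD SUCCESS line and, if that line is missing, prints the reactor-summary lines marked FAILURE or SKIPPED.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a saved Maven log reports "BUILD SUCCESS";
# otherwise print the module lines from the reactor summary that did not succeed.
check_build_log() {
  local log="$1"
  if grep -q '^\[INFO\] BUILD SUCCESS' "$log"; then
    return 0
  fi
  # Summary lines look like "[INFO] <module> ..... FAILURE [time]".
  grep -E '\.\.\.+ (FAILURE|SKIPPED)' "$log"
  return 1
}
```

Usage: check_build_log build.log && echo "build ok".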

Find where the compiled Hadoop package is stored:

[root@localhost hadoop-2.7.5-src]# ls

BUILDING.txt hadoop-client-modules hadoop-mapreduce-project hadoop-tools README.txt

dev-support hadoop-cloud-storage-project hadoop-maven-plugins hadoop-yarn-project

hadoop-assemblies hadoop-common-project hadoop-minicluster LICENSE.txt

hadoop-build-tools hadoop-dist hadoop-project NOTICE.txt

hadoop-client hadoop-hdfs-project hadoop-project-dist pom.xml

[root@localhost hadoop-2.7.5-src]# cd hadoop-dist/

[root@localhost hadoop-dist]# ls

pom.xml target

[root@localhost hadoop-dist]# cd target/

[root@localhost target]# ls

antrun hadoop-2.7.5.tar.gz javadoc-bundle-options

classes hadoop-dist-2.7.5.jar maven-archiver

dist-layout-stitching.sh hadoop-dist-2.7.5-javadoc.jar maven-shared-archive-resources

dist-tar-stitching.sh hadoop-dist-2.7.5-sources.jar test-classes

hadoop-2.7.5 hadoop-dist-2.7.5-test-sources.jar test-dir

10. Compare the original package with the compiled one

10.1 Upload the hadoop-2.7.5.tar.gz package

10.2 The package provided by Apache

[root@localhost software]# tar -zxvf hadoop-2.7.5.tar.gz

[root@localhost software]# ls

apache-maven-3.0.5 hadoop-2.7.5 jdk-7u75-linux-x64.tar.gz snappy-1.1.1

apache-maven-3.0.5-bin.tar.gz hadoop-2.7.5-src mvnrepository snappy-1.1.1.tar.gz

apache-tomcat-6.0.53.tar.gz hadoop-2.7.5-src.tar.gz mvnrepository.tar.gz

findbugs-1.3.9 hadoop-2.7.5.tar.gz protobuf-2.5.0

findbugs-1.3.9.tar.gz jdk1.7.0_75 protobuf-2.5.0.tar.gz

[root@localhost software]# cd hadoop-2.7.5

[root@localhost hadoop-2.7.5]# ls

bin etc include lib libexec LICENSE.txt NOTICE.txt README.txt sbin share

[root@localhost hadoop-2.7.5]# cd lib

[root@localhost lib]# ls

native

[root@localhost lib]# cd native/

[root@localhost native]# ls

libhadoop.a libhadoop.so libhadooputils.a libhdfs.so

libhadooppipes.a libhadoop.so.1.0.0 libhdfs.a libhdfs.so.0.0.0

[root@localhost native]# ../../bin/hadoop checknative

20/06/10 13:02:29 WARN bzip2.Bzip2Factory: Failed to load/initialize native-bzip2 library system-native, will use pure-Java version

20/06/10 13:02:29 INFO zlib.ZlibFactory: Successfully loaded & initialized native-zlib library

Native library checking:

hadoop: true /software/hadoop-2.7.5/lib/native/libhadoop.so.1.0.0

zlib: true /lib64/libz.so.1

snappy: false

lz4: true revision:99

bzip2: false

openssl: true /lib64/libcrypto.so

10.3 The package built from source

[root@localhost software]# cd hadoop-2.7.5-src

[root@localhost hadoop-2.7.5-src]# ll

total 148

-rw-r--r--. 1 root root 13050 Dec 16 2017 BUILDING.txt

drwx------. 3 root root 181 Jun 10 12:04 dev-support

drwx------. 5 root root 63 Jun 10 12:24 hadoop-assemblies

drwx------. 4 root root 89 Jun 10 12:22 hadoop-build-tools

drwx------. 4 root root 52 Jun 10 12:38 hadoop-client

drwxr-xr-x. 9 root root 234 Dec 16 2017 hadoop-client-modules

drwxr-xr-x. 3 root root 34 Dec 16 2017 hadoop-cloud-storage-project

drwx------. 11 root root 206 Jun 10 12:28 hadoop-common-project

drwx------. 4 root root 52 Jun 10 12:39 hadoop-dist

drwx------. 9 root root 181 Jun 10 12:31 hadoop-hdfs-project

drwx------. 10 root root 197 Jun 10 12:37 hadoop-mapreduce-project

drwx------. 5 root root 63 Jun 10 12:25 hadoop-maven-plugins

drwx------. 4 root root 52 Jun 10 12:38 hadoop-minicluster

drwx------. 5 root root 63 Jun 10 12:24 hadoop-project

drwx------. 4 root root 70 Jun 10 12:24 hadoop-project-dist

drwx------. 22 root root 4096 Jun 10 12:39 hadoop-tools

drwx------. 4 root root 73 Jun 10 12:35 hadoop-yarn-project

-rw-r--r--. 1 root root 86424 Dec 16 2017 LICENSE.txt

-rw-r--r--. 1 root root 14978 Dec 16 2017 NOTICE.txt

-rw-r--r--. 1 root root 18993 Dec 16 2017 pom.xml

-rw-r--r--. 1 root root 1366 Jul 25 2017 README.txt

[root@localhost hadoop-2.7.5-src]# cd hadoop-dist/

[root@localhost hadoop-dist]# ll

total 12

-rw-r--r--. 1 root root 6930 Dec 16 2017 pom.xml

drwxr-xr-x. 10 root root 4096 Jun 10 12:39 target

[root@localhost hadoop-dist]# cd target/

[root@localhost target]# ll

total 592312

drwxr-xr-x. 2 root root 28 Jun 10 12:39 antrun

drwxr-xr-x. 3 root root 22 Jun 10 12:39 classes

-rw-r--r--. 1 root root 1866 Jun 10 12:39 dist-layout-stitching.sh

-rw-r--r--. 1 root root 639 Jun 10 12:39 dist-tar-stitching.sh

drwxr-xr-x. 9 root root 149 Jun 10 12:39 hadoop-2.7.5

-rw-r--r--. 1 root root 201695024 Jun 10 12:39 hadoop-2.7.5.tar.gz

-rw-r--r--. 1 root root 26518 Jun 10 12:39 hadoop-dist-2.7.5.jar

-rw-r--r--. 1 root root 404743016 Jun 10 12:39 hadoop-dist-2.7.5-javadoc.jar

-rw-r--r--. 1 root root 24048 Jun 10 12:39 hadoop-dist-2.7.5-sources.jar

-rw-r--r--. 1 root root 24048 Jun 10 12:39 hadoop-dist-2.7.5-test-sources.jar

drwxr-xr-x. 2 root root 51 Jun 10 12:39 javadoc-bundle-options

drwxr-xr-x. 2 root root 28 Jun 10 12:39 maven-archiver

drwxr-xr-x. 3 root root 22 Jun 10 12:39 maven-shared-archive-resources

drwxr-xr-x. 3 root root 22 Jun 10 12:39 test-classes

drwxr-xr-x. 2 root root 6 Jun 10 12:39 test-dir

[root@localhost target]# cd hadoop-2.7.5

[root@localhost hadoop-2.7.5]# ls

bin etc include lib libexec LICENSE.txt NOTICE.txt README.txt sbin share

[root@localhost hadoop-2.7.5]# cd lib

[root@localhost lib]# ls

native

[root@localhost lib]# cd native/

[root@localhost native]# ../../bin/hadoop checknative

20/06/10 13:06:43 INFO bzip2.Bzip2Factory: Successfully loaded & initialized native-bzip2 library system-native

20/06/10 13:06:43 INFO zlib.ZlibFactory: Successfully loaded & initialized native-zlib library

Native library checking:

hadoop: true /software/hadoop-2.7.5-src/hadoop-dist/target/hadoop-2.7.5/lib/native/libhadoop.so.1.0.0

zlib: true /lib64/libz.so.1

snappy: true /lib64/libsnappy.so.1

lz4: true revision:99

bzip2: true /lib64/libbz2.so.1

openssl: true /lib64/libcrypto.so

By comparison, the native library of the compiled package additionally supports the snappy and bzip2 compression codecs.
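You can also make this comparison without reading the two outputs side by side: save both checknative outputs and let a script compare them. This minimal sketch assumes each output was saved to a file. newly_available is a hypothetical helper, and the parsing relies on the "name: true ..." / "name: false" line format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given two files holding saved "hadoop checknative" output,
# print the libraries reported as true in NEW but not in OLD (in NEW's order).
newly_available() {
  local old="$1" new="$2"
  # Split on ": " so "snappy: true /lib64/libsnappy.so.1" gives $1=snappy, $2="true ...".
  # Log lines such as "20/06/10 13:02:29 ..." split on the time's colons and never match.
  awk -F': *' '
    FILENAME == ARGV[1] { if ($2 ~ /^true/) seen[$1] = 1; next }
    $2 ~ /^true/ && !($1 in seen) { print $1 }
  ' "$old" "$new"
}
```

For example, run bin/hadoop checknative > before.txt 2>&1 in the original package, and the same command with after.txt in the compiled one. With the outputs shown above, newly_available before.txt after.txt prints snappy and bzip2.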