SaltStack Automated Operations

Lab environment

| Hostname | Role | IP |
|---|---|---|
| server1 | master | 172.25.31.1 |
| server2 | minion | 172.25.31.2 |
| server3 | minion | 172.25.31.3 |

Installing SaltStack

Configuring the third-party Salt package repository

ls /var/www/html/pub/rhel6

libyaml-0.1.3-4.el6.x86_64.rpm

python-babel-0.9.4-5.1.el6.noarch.rpm

python-backports-1.0-5.el6.x86_64.rpm

python-backports-ssl_match_hostname-3.4.0.2-2.el6.noarch.rpm

python-chardet-2.2.1-1.el6.noarch.rpm

python-cherrypy-3.2.2-4.el6.noarch.rpm

python-crypto-2.6.1-3.el6.x86_64.rpm

python-crypto-debuginfo-2.6.1-3.el6.x86_64.rpm

python-enum34-1.0-4.el6.noarch.rpm

python-futures-3.0.3-1.el6.noarch.rpm

python-impacket-0.9.14-1.el6.noarch.rpm

python-jinja2-2.8.1-1.el6.noarch.rpm

python-msgpack-0.4.6-1.el6.x86_64.rpm

python-ordereddict-1.1-2.el6.noarch.rpm

python-requests-2.6.0-3.el6.noarch.rpm

python-setproctitle-1.1.7-2.el6.x86_64.rpm

python-six-1.9.0-2.el6.noarch.rpm

python-tornado-4.2.1-1.el6.x86_64.rpm

python-urllib3-1.10.2-1.el6.noarch.rpm

python-zmq-14.5.0-2.el6.x86_64.rpm

PyYAML-3.11-1.el6.x86_64.rpm

salt-2016.11.3-1.el6.noarch.rpm

salt-api-2016.11.3-1.el6.noarch.rpm

salt-cloud-2016.11.3-1.el6.noarch.rpm

salt-master-2016.11.3-1.el6.noarch.rpm

salt-minion-2016.11.3-1.el6.noarch.rpm

salt-ssh-2016.11.3-1.el6.noarch.rpm

salt-syndic-2016.11.3-1.el6.noarch.rpm

zeromq-4.0.5-4.el6.x86_64.rpm

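Note: the rhel6 directory must contain only RPM packages.

createrepo -v /var/www/html/pub/rhel6/ # generates the repodata directory under /var/www/html/pub/rhel6/

Configure the yum repository:

vim /etc/yum.repos.d/rhel-source.repo

Add the following lines:

[salt]

name=saltstack

baseurl=http://172.25.120.250/pub/rhel6

gpgcheck=0

List the yum repositories: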

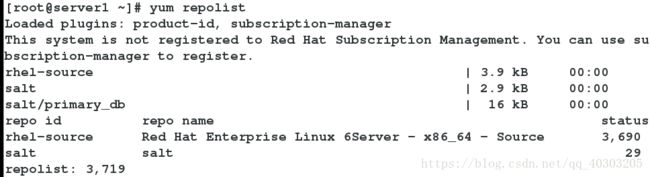

yum repolist

Install salt-master on server1 and salt-minion on server2.

server1

yum install -y salt-master

/etc/init.d/salt-master start

server2

yum install -y salt-minion

/etc/init.d/salt-minion start

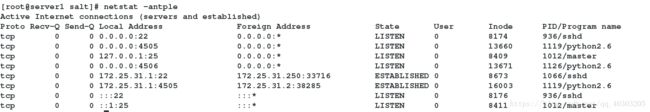

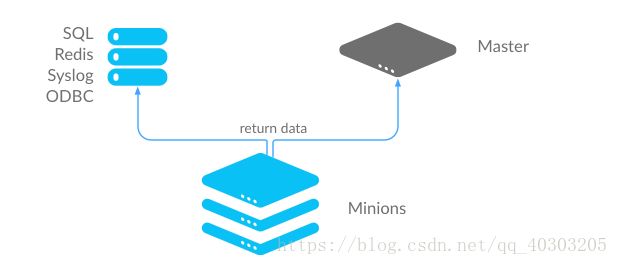

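- Port 4505: the publish port; minions keep a connection open to it (publish/subscribe).

- Port 4506: request/reply. The transport is zmq (ZeroMQ, a message queue).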
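To confirm that both master ports are reachable from a minion, a small check like the following can help. It is a minimal sketch using only the Python standard library; the function name `port_open` is made up for this example and is not part of Salt.

```python
import socket


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, or timed out.
        return False
```

For this lab, calling `port_open("172.25.31.1", 4505)` and `port_open("172.25.31.1", 4506)` from server2 should both return True once salt-master is running.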

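On the minion

Edit the configuration file:

cd /etc/salt/

vim minion

    # resolved, then the minion will fail to start.
    master: 172.25.31.1

/etc/init.d/salt-minion restart # restart so that the new master setting takes effect

After a successful start, the file /etc/salt/minion_id is generated.

Note: if the minion's IP address or hostname changes, this file must be deleted.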

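On the master

List the minion keys:

salt-key -L

-A accepts all pending keys; -a accepts the key of a single named host.

salt-key -A

salt-key: in essence, accepting a key exchanges the public keys of the master and the minion. The matching checksums below show that the minion holds a copy of the master's public key.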

[root@server1 ~]# md5sum /etc/salt/pki/master/master.pub

c6ad4a9147697bcd891e0ac7acfb8e15 /etc/salt/pki/master/master.pub

[root@server2 salt]# md5sum /etc/salt/pki/minion/minion_master.pub

c6ad4a9147697bcd891e0ac7acfb8e15 /etc/salt/pki/minion/minion_master.pub
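The same comparison can be scripted. The sketch below hashes two files with MD5 and reports whether they match; the helper names `md5_hex` and `same_key` are invented for this example, and both files are assumed to be readable on the machine where it runs (for instance after copying one key over).

```python
import hashlib


def md5_hex(path):
    """Return the hex MD5 digest of a file, read in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(8192), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_key(path_a, path_b):
    """True when both files have identical content (same MD5 digest)."""
    return md5_hex(path_a) == md5_hex(path_b)
```

MD5 is used here only to mirror the md5sum command above; it is a quick equality check, not a security measure.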

Check the connections:

[root@server1 ~]# lsof -i :4505

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

salt-mast 1119 root 16u IPv4 13660 0t0 TCP *:4505 (LISTEN)

salt-mast 1119 root 18u IPv4 16003 0t0 TCP server1:4505->server2:38285 (ESTABLISHED)

[root@server1 ~]# lsof -i :4506

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

salt-mast 1126 root 24u IPv4 13671 0t0 TCP *:4506 (LISTEN)

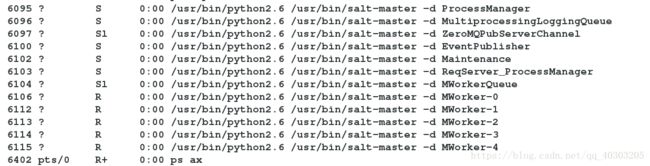

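View the Python processes behind the ports. With python-setproctitle installed, each salt-master process shows its role in the ps output: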

yum install -y python-setproctitle.x86_64

/etc/init.d/salt-master restart

ps ax

6095 ? S 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d ProcessManager

6096 ? S 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d MultiprocessingLoggingQueue

6097 ? Sl 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d ZeroMQPubServerChannel

6100 ? S 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d EventPublisher

6102 ? S 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d Maintenance

6103 ? S 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d ReqServer_ProcessManager

6104 ? Sl 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d MWorkerQueue

6106 ? R 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d MWorker-0

6112 ? R 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d MWorker-1

6113 ? R 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d MWorker-2

6114 ? R 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d MWorker-3

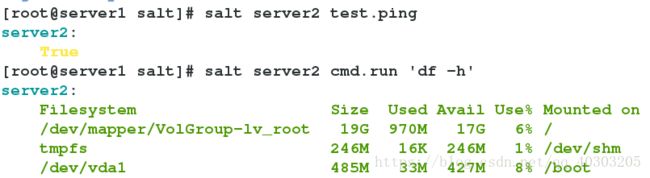

6115 ? R 0:00 /usr/bin/python2.6 /usr/bin/salt-master -d MWorker-4

[root@server1 salt]# salt server2 test.ping

server2:

True

[root@server1 salt]# salt server2 cmd.run 'df -h'

server2:

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 19G 970M 17G 6% /

tmpfs 246M 16K 246M 1% /dev/shm

/dev/vda1 485M 33M 427M 8% /boot

Configuring automated deployment

On the master:

Edit the configuration file:

cd /etc/salt/

vim master

    file_roots:
      base:
        - /srv/salt

mkdir /srv/salt

/etc/init.d/salt-master restart

Installing and configuring httpd

YAML syntax rules

Install httpd and php:

cd /srv/salt

mkdir httpd

cd httpd/

vim install.sls

    apache-install:
      pkg.installed:
        - pkgs:
          - httpd
          - php

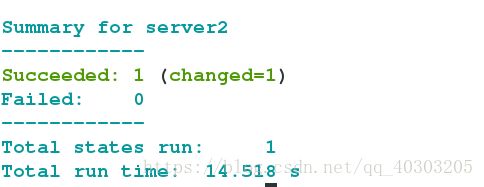

Apply the state:

salt server2 state.sls httpd.install

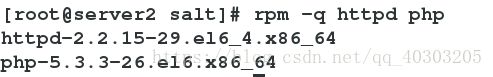

rpm -q httpd php

Add the following lines to /srv/salt/httpd/install.sls, under the apache-install ID:

      service.running:
        - name: httpd
        - enable: True

Check on server2:

netstat -antple | grep http

tcp 0 0 :::80 :::* LISTEN 0 16211 2030/httpd

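Changing the httpd port

On server2, copy the configuration file to /srv/salt/httpd/files on server1 (the directory must already exist there):

scp /etc/httpd/conf/httpd.conf server1:/srv/salt/httpd/files

On server1, edit httpd.conf under /srv/salt/httpd/files and change the port to 8080.

Edit install.sls:

vim /srv/salt/httpd/install.sls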

    apache-install:
      pkg.installed:
        - pkgs:
          - httpd
          - php
      service.running:
        - name: httpd
        - enable: True
        - reload: True
        - watch:
          - file: apache-install
      file.managed:
        - name: /etc/httpd/conf/httpd.conf
        - source: salt://httpd/files/httpd.conf
        - mode: 644
        - user: root
        - group: root

Apply the state:

salt server2 state.sls httpd.install

Check on server2:

netstat -antple | grep http

tcp 0 0 :::8080 :::* LISTEN 0 17021 2030/httpd

The port change succeeded.

nginx

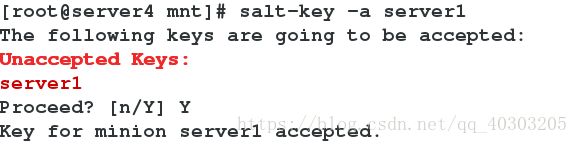

Add another minion, server3

Edit the configuration file on server3 and start the service:

cd /etc/salt/

vim minion

    # resolved, then the minion will fail to start.
    master: 172.25.31.1

/etc/init.d/salt-minion start

On the master, accept server3's key:

salt-key -a server3

Installation state:

vim /srv/salt/nginx/install.sls

    include:
      - pkgs.make
      - users.nginx

    nginx-install:
      file.managed:
        - name: /mnt/nginx-1.10.1.tar.gz
        - source: salt://nginx/files/nginx-1.10.1.tar.gz
      cmd.run:
        - name: cd /mnt && tar zxf nginx-1.10.1.tar.gz && cd nginx-1.10.1 && sed -i.bak 's/CFLAGS="$CFLAGS -g"/#CFLAGS="$CFLAGS -g"/g' auto/cc/gcc && sed -i.bak 's/#define NGINX_VER "nginx\/" NGINX_VERSION/#define NGINX_VER "nginx"/g' src/core/nginx.h && ./configure --prefix=/usr/local/nginx --with-http_ssl_module --with-http_stub_status_module --with-threads --with-file-aio &> /dev/null && make > /dev/null && make install > /dev/null
        - creates: /usr/local/nginx

Service state:

vim /srv/salt/nginx/service.sls

    include:
      - nginx.install
      - users.nginx

    /usr/local/nginx/conf/nginx.conf:
      file.managed:
        - source: salt://nginx/files/nginx.conf

    nginx-service:
      file.managed:
        - name: /etc/init.d/nginx
        - source: salt://nginx/files/nginx
        - mode: 755
      service.running:
        - name: nginx
        - reload: True
        - watch:
          - file: /usr/local/nginx/conf/nginx.conf

User state:

vim /srv/salt/users/nginx.sls

    nginx-group:
      group.present:
        - name: nginx
        - gid: 800

    nginx-user:
      user.present:
        - name: nginx
        - shell: /sbin/nologin
        - home: /usr/local/nginx
        - createhome: false
        - uid: 800
        - gid: 800

pkgs state (resolves the build dependencies):

vim /srv/salt/pkgs/make.sls

    make:
      pkg.installed:
        - pkgs:
          - gcc
          - pcre-devel
          - openssl-devel

Place three files in /srv/salt/nginx/files: the nginx configuration file nginx.conf, the nginx init script nginx, and the source tarball nginx-1.10.1.tar.gz.

[root@server1 salt]# ls nginx/files/

nginx nginx-1.10.1.tar.gz nginx.conf

Apply the state:

salt server3 state.sls nginx.service

Installing haproxy and configuring load balancing

Make sure the yum repository is configured first:

[root@server1 salt]# yum list haproxy

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Installed Packages

haproxy.x86_64 1.4.24-2.el6 @LoadBalancer

Install haproxy:

vim /srv/salt/haproxy/install.sls

    haproxy-install:
      pkg.installed:
        - pkgs:
          - haproxy

Apply the state to install it:

salt server1 state.sls haproxy.install

Copy the configuration file into /srv/salt/haproxy/files:

cp /etc/haproxy/haproxy.cfg /srv/salt/haproxy/files/

Edit the haproxy configuration file:

vim /srv/salt/haproxy/files/haproxy.cfg

    frontend main *:80
        default_backend app

    #---------------------------------------------------------------------
    # static backend for serving up images, stylesheets and such
    #---------------------------------------------------------------------
    backend app
        balance roundrobin
        server app1 172.25.31.2:80 check
        server app2 172.25.31.3:80 check

Modify /srv/salt/haproxy/install.sls:

vim /srv/salt/haproxy/install.sls

    haproxy-install:
      pkg.installed:
        - pkgs:
          - haproxy
      file.managed:
        - name: /etc/haproxy/haproxy.cfg
        - source: salt://haproxy/files/haproxy.cfg
      service.running:
        - name: haproxy
        - reload: True
        - watch:
          - file: haproxy-install

Apply the state again:

salt server1 state.sls haproxy.install

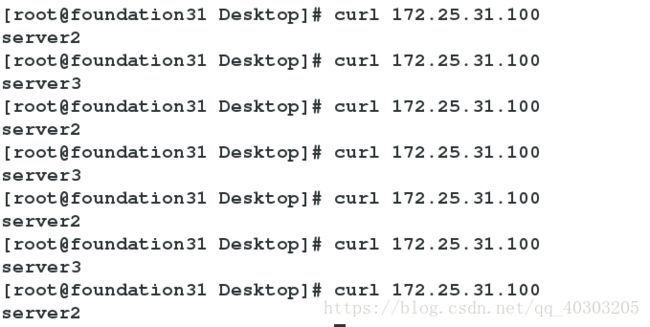

One-step deployment of haproxy load balancing

vim /srv/salt/top.sls

    base:
      'server1':
        - haproxy.install
      'server2':
        - httpd.install
      'server3':
        - nginx.service

Apply the highstate:

salt '*' state.highstate
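When the highstate runs, each minion receives the states listed under the top-file targets that match its ID. Top-file targets are glob patterns by default, so the lookup can be approximated as follows. This is a simplified sketch, not Salt's implementation: the `TOP` dictionary copies the top.sls above, and `states_for` is a name invented for this example.

```python
from fnmatch import fnmatch

# The 'base' environment of the top.sls shown above, as a plain dict.
TOP = {
    "server1": ["haproxy.install"],
    "server2": ["httpd.install"],
    "server3": ["nginx.service"],
}


def states_for(minion_id, top=TOP):
    """Collect the states of every target pattern that matches minion_id."""
    states = []
    for pattern, sls_list in top.items():
        # Glob matching, so a target such as '*' or 'server?' also works.
        if fnmatch(minion_id, pattern):
            states.extend(sls_list)
    return states
```

With this mapping, `states_for("server2")` returns `["httpd.install"]`, and a minion with no matching target receives an empty list.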

Test from the physical host:

curl 172.25.31.1
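Repeating the curl request should alternate between the two backends, because the haproxy backend uses balance roundrobin. The sketch below shows only the selection order; it ignores health checks and server weights, and `round_robin` is a name invented for this example.

```python
from itertools import cycle

# The two backend servers from haproxy.cfg.
BACKENDS = ["172.25.31.2:80", "172.25.31.3:80"]


def round_robin(backends, requests):
    """Return the backend chosen for each of `requests` consecutive requests."""
    picker = cycle(backends)
    return [next(picker) for _ in range(requests)]
```

Four consecutive requests would be served by 172.25.31.2, 172.25.31.3, 172.25.31.2, and 172.25.31.3, in that order.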

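Grains: static data on the minion

Grains are static data that the minion collects at startup; they can be used in Salt modules and other components. Grains are gathered every time the minion starts or restarts, and are reported to the master at that point.

Official reference: the Grains reference documentation.

Query all grains:

salt server2 grains.items

Query a single grain:

salt server2 grains.item os

Ways to define custom grains

Method 1: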

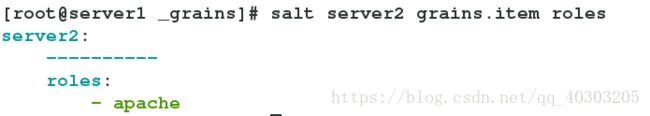

Edit the configuration file on server2:

vim /etc/salt/minion

    grains:
      roles:
        - apache

/etc/init.d/salt-minion restart

Check from the master:

salt server2 grains.item roles

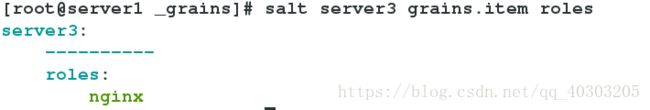

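Method 2:

On the minion:

vim /etc/salt/grains

roles: nginx

On the master:

salt server3 saltutil.sync_grains # refresh the grains

Check:

salt server3 grains.item roles

Method 3: a custom grains module distributed from the master

mkdir /srv/salt/_grains

vim /srv/salt/_grains/my_grains.py

    #!/usr/bin/env python
    def my_grains():
        # Every key in the returned dict becomes a grain on the minion.
        grains = {}
        grains['hello'] = 'world'
        grains['linux'] = 'redhat'
        return grains

Sync it to server2 and check: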

[root@server1 _grains]# salt server2 saltutil.sync_grains

server2:

- grains.my_grains

[root@server1 _grains]# salt server2 grains.item hello

server2:

----------

hello:

world

[root@server1 _grains]# salt server2 grains.item linux

server2:

----------

linux:

redhat
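A custom grains function does not have to return constants; it can compute values on the minion. The sketch below derives a roles grain from the short hostname. The mapping `ROLE_BY_HOST` and the function name `role_grains` are hypothetical, chosen to match the roles used in this lab.

```python
import socket

# Hypothetical mapping from short hostname to role, for illustration only.
ROLE_BY_HOST = {"server2": "apache", "server3": "nginx"}


def role_grains(hostname=None):
    """Return a 'roles' grain for known hosts, or an empty dict otherwise."""
    # Fall back to the local hostname and drop any domain suffix.
    host = (hostname or socket.gethostname()).split(".")[0]
    role = ROLE_BY_HOST.get(host)
    return {"roles": [role]} if role else {}
```

The optional `hostname` argument exists only so the function can be tried outside Salt; a file like this would go under /srv/salt/_grains and be synced with saltutil.sync_grains, as above.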

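Pillar: defining dynamic data

Pillar is the Salt interface for distributing global variables to minions. Unlike grains, pillar data is defined on the master and can be targeted at specific minions.

Official reference: the Pillar reference documentation.

On the master

Edit the configuration file and restart the service:

cd /etc/salt/

vim master

    pillar_roots:
      base:
        - /srv/pillar

mkdir /srv/pillar

/etc/init.d/salt-master restart

Create the pillar data: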

cd /srv/pillar

mkdir web

vim web/install.sls

    {% if grains['fqdn'] == 'server2' %}
    webserver: http
    {% elif grains['fqdn'] == 'server3' %}
    webserver: nginx
    {% endif %}

Make it take effect:

vim top.sls

    base:
      '*':
        - web.install

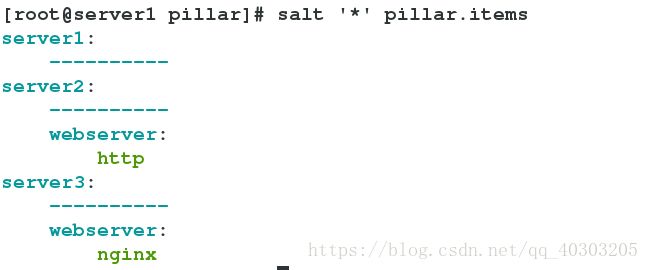

Retrieve the pillar data:

salt '*' saltutil.refresh_pillar # refresh the pillar data on the minions

salt '*' pillar.items # show the pillar data

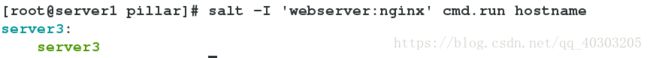

Targeting by pillar value (-I matches on pillar, the dynamic data):

salt -I 'webserver:nginx' cmd.run hostname

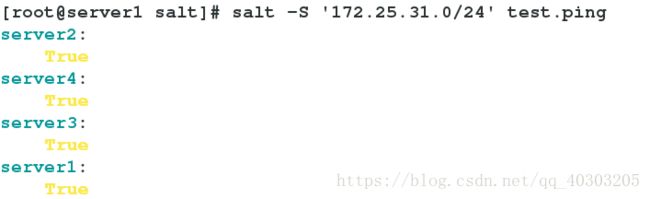

salt -S 172.25.31.0/24 test.ping
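The -S option targets minions by IP address or CIDR subnet. Which of the lab hosts fall inside a given subnet can be worked out with the standard ipaddress module. This sketch only illustrates the matching rule; the `HOSTS` table copies the lab environment and `in_subnet` is a name invented for this example.

```python
import ipaddress

# Hostname to IP mapping from the lab environment table.
HOSTS = {
    "server1": "172.25.31.1",
    "server2": "172.25.31.2",
    "server3": "172.25.31.3",
}


def in_subnet(hosts, cidr):
    """Return the sorted names of the hosts whose address lies inside cidr."""
    network = ipaddress.ip_network(cidr)
    return sorted(
        name for name, ip in hosts.items()
        if ipaddress.ip_address(ip) in network
    )
```

With the table above, `in_subnet(HOSTS, "172.25.31.0/24")` lists all three hosts, while a /32 such as `172.25.31.2/32` selects only server2.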

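Setting different parameters per host (Jinja templates)

- {% %}: statements (definitions and control flow)

- {{ }}: expressions (output a value)

Configuring the httpd port

Define a fixed port in the state file, under file.managed:

vim /srv/salt/httpd/install.sls

        - template: jinja
        - context:
            port: 8080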

Turn the port in the httpd configuration file into a variable:

vim /srv/salt/httpd/files/httpd.conf

    Listen {{ port }}

salt server2 state.sls httpd.install

Check on server2:

[root@server2 salt]# netstat -antlup | grep httpd

tcp 0 0 :::8080 :::* LISTEN 2030/httpd


Define the port as pillar data (no refresh needed)

vim /srv/pillar/web/install.sls

    {% if grains['fqdn'] == 'server2' %}
    webserver: http
    port: 80 # different hosts can be given different values
    {% elif grains['fqdn'] == 'server3' %}
    webserver: nginx
    {% endif %}

vim /srv/salt/httpd/install.sls

        - template: jinja
        - context: # take the value from pillar
            port: {{ pillar['port'] }}

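Importing into a template

vim /srv/salt/lib.sls

    {% set bind = '172.25.120.4' %}

vim /srv/salt/httpd/files/httpd.conf (the import goes on the first line of the file)

    {% from 'lib.sls' import bind with context %}
    Listen {{ bind }}:{{ port }}

A value imported from lib.sls overrides one of the same name passed in from install.sls.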

Using grains

vim /srv/salt/httpd/files/httpd.conf

    Listen {{ bind }}:{{ port }}

vim /srv/salt/httpd/install.sls

        - template: jinja
        - context:
            port: {{ pillar['port'] }}
            bind: {{ grains['ipv4'][-1] }} # ipv4 is a list

Reading pillar and grains directly in the template

vim /srv/salt/httpd/install.sls

    #    - context:
    #        port: {{ pillar['port'] }}
    #        bind: {{ grains['ipv4'][-1] }} # commented out

vim /srv/pillar/web/install.sls

    {% if grains['fqdn'] == 'server2' %}
    webserver: http
    port: 80

vim /srv/salt/httpd/files/httpd.conf

    Listen {{ grains['fqdn_ip4'][0] }}:{{ pillar['port'] }}

One-step keepalived deployment with SaltStack

Set up server4 (172.25.31.4) as a minion as well:

salt -S '172.25.31.0/24' test.ping

vim /srv/salt/keepalived/install.sls

    include:
      - pkgs.make

    keepalived-install:
      file.managed:
        - name: /mnt/keepalived-2.0.6.tar.gz
        - source: salt://keepalived/files/keepalived-2.0.6.tar.gz
      cmd.run:
        - name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6 && ./configure --prefix=/usr/local/keepalived --with-init=SYSV &> /dev/null && make > /dev/null && make install > /dev/null
        - creates: /usr/local/keepalived

Place the keepalived source tarball in /srv/salt/keepalived/files/.

Install keepalived on server1:

salt server1 state.sls keepalived.install

Copy the keepalived init script and configuration file into /srv/salt/keepalived/files/:

cp /usr/local/keepalived/etc/keepalived/keepalived.conf /srv/salt/keepalived/files/

cp /usr/local/keepalived/etc/rc.d/init.d/keepalived keepalived/files/

Edit the keepalived configuration file:

vim keepalived/files/keepalived.conf

Only the relevant lines of the file are shown:

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1

        #vrrp_strict    # commented out; otherwise the firewall causes problems

    state {{ STATE }}    # value comes from the template context

    virtual_router_id {{ VRID }}
    priority {{ PRIORITY }}

    virtual_ipaddress {    # configure the VIP
        172.25.31.100
    }

Set the pillar variables:

vim /srv/pillar/keepalived/install.sls

    {% if grains['fqdn'] == 'server1' %}
    state: MASTER
    vrid: 31
    priority: 100
    {% elif grains['fqdn'] == 'server4' %}
    state: BACKUP
    vrid: 31
    priority: 50
    {% endif %}
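The priorities decide which node holds the virtual IP: among the nodes that are still running keepalived, the one with the highest priority wins. The sketch below models only that rule. It is a deliberate simplification of VRRP (no advertisements, timers, or preemption settings), and `vip_holder` is a name invented for this example.

```python
# Pillar values from the file above, keyed by host.
NODES = {
    "server1": {"state": "MASTER", "vrid": 31, "priority": 100},
    "server4": {"state": "BACKUP", "vrid": 31, "priority": 50},
}


def vip_holder(nodes, alive):
    """Return the live node with the highest priority, or None if none is up."""
    candidates = [name for name in nodes if name in alive]
    if not candidates:
        return None
    return max(candidates, key=lambda name: nodes[name]["priority"])
```

With both nodes up, server1 holds the VIP; once keepalived is stopped on server1, the rule selects server4.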

vim /srv/pillar/top.sls

    base:
      'server2':
        - web.install
      'server3':
        - web.install
      'server1':
        - keepalived.install
      'server4':
        - keepalived.install

Edit the install and service states:

vim /srv/salt/keepalived/install.sls

    include:
      - pkgs.make

    keepalived-install:
      file.managed:
        - name: /mnt/keepalived-2.0.6.tar.gz
        - source: salt://keepalived/files/keepalived-2.0.6.tar.gz
      cmd.run:
        - name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6 && ./configure --prefix=/usr/local/keepalived --with-init=SYSV &> /dev/null && make > /dev/null && make install > /dev/null
        - creates: /usr/local/keepalived

    /etc/sysconfig/keepalived:
      file.symlink:
        - target: /usr/local/keepalived/etc/sysconfig/keepalived

    /sbin/keepalived:
      file.symlink:
        - target: /usr/local/keepalived/sbin/keepalived

    /etc/keepalived:
      file.directory:
        - mode: 755

vim /srv/salt/keepalived/service.sls

    include:
      - keepalived.install

    /etc/keepalived/keepalived.conf:
      file.managed:
        - source: salt://keepalived/files/keepalived.conf
        - template: jinja
        - context:
            STATE: {{ pillar['state'] }}
            VRID: {{ pillar['vrid'] }}
            PRIORITY: {{ pillar['priority'] }}

    keepalived-service:
      file.managed:
        - name: /etc/init.d/keepalived
        - source: salt://keepalived/files/keepalived
        - mode: 755
      service.running:
        - name: keepalived
        - reload: True
        - watch:
          - file: /etc/keepalived/keepalived.conf

Edit the top.sls file:

vim /srv/salt/top.sls

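    base:
      'server1':
        - haproxy.install
        - keepalived.service
      'server4':
        - haproxy.install
        - keepalived.service
      'server2':
        - httpd.install
      'server3':
        - nginx.service

Apply the highstate:

salt '*' state.highstate

After the highstate succeeds, test it.

Check the IP addresses on server1; the VIP 172.25.31.100 should be present:

ip addr

curl 172.25.31.100

Stop keepalived on server1 to test failover to server4:

/etc/init.d/keepalived stop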

Storing Salt return data in a database

Method 1: external job cache, with the returner running on the minion

Install MySQL-python:

yum install -y MySQL-python

Edit the configuration file:

vim /etc/salt/minion

    mysql.host: '172.25.31.1'
    mysql.user: 'salt'
    mysql.pass: 'westos'
    mysql.db: 'salt'
    mysql.port: 3306

/etc/init.d/salt-minion restart

On the master (server1):

Install the database.

Log in to the database and grant privileges to the salt user:

mysql> grant all on salt.* to salt@'172.25.31.%' identified by 'westos';

Import the salt schema into the database:

vim test.sql

CREATE DATABASE `salt`

DEFAULT CHARACTER SET utf8

DEFAULT COLLATE utf8_general_ci;

USE `salt`;

--

-- Table structure for table `jids`

--

DROP TABLE IF EXISTS `jids`;

CREATE TABLE `jids` (

`jid` varchar(255) NOT NULL,

`load` mediumtext NOT NULL,

UNIQUE KEY `jid` (`jid`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- CREATE INDEX jid ON jids(jid) USING BTREE;

--

-- Table structure for table `salt_returns`

--

DROP TABLE IF EXISTS `salt_returns`;

CREATE TABLE `salt_returns` (

`fun` varchar(50) NOT NULL,

`jid` varchar(255) NOT NULL,

`return` mediumtext NOT NULL,

`id` varchar(255) NOT NULL,

`success` varchar(10) NOT NULL,

`full_ret` mediumtext NOT NULL,

`alter_time` TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

KEY `id` (`id`),

KEY `jid` (`jid`),

KEY `fun` (`fun`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

--

-- Table structure for table `salt_events`

--

DROP TABLE IF EXISTS `salt_events`;

CREATE TABLE `salt_events` (

`id` BIGINT NOT NULL AUTO_INCREMENT,

`tag` varchar(255) NOT NULL,

`data` mediumtext NOT NULL,

`alter_time` TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

`master_id` varchar(255) NOT NULL,

PRIMARY KEY (`id`),

KEY `tag` (`tag`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

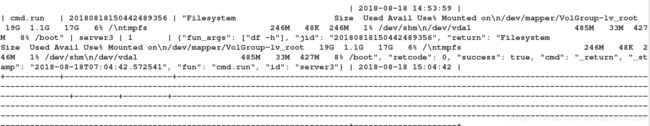

mysql < test.sql

Test:

[root@server1 salt]# salt server2 test.ping --return mysql

Check in the database:

mysql> use salt

mysql> select * from salt_returns;
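The return and full_ret columns hold JSON text, so rows read back from salt_returns need decoding before use. The sketch below shows the decoding step against an in-memory SQLite table that stands in for the MySQL database. The table is cut down to three columns, the sample row is made up for illustration, and `decode_returns` is a name invented for this example.

```python
import json
import sqlite3


def decode_returns(rows):
    """Turn (fun, id, return) rows into dicts with the JSON return decoded."""
    return [
        {"fun": fun, "id": minion_id, "return": json.loads(ret)}
        for fun, minion_id, ret in rows
    ]


# SQLite stand-in for the MySQL salt_returns table (assumption: only the
# three columns used here, and a made-up sample row).
conn = sqlite3.connect(":memory:")
conn.execute('CREATE TABLE salt_returns (fun TEXT, id TEXT, "return" TEXT)')
conn.execute(
    "INSERT INTO salt_returns VALUES (?, ?, ?)",
    ("test.ping", "server2", json.dumps(True)),
)
rows = conn.execute('SELECT fun, id, "return" FROM salt_returns').fetchall()
decoded = decode_returns(rows)
```

Against the real database, the same `decode_returns` function could be fed rows fetched through MySQL-python instead of SQLite.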

Method 2: external job cache, with the returner running on the master

Install MySQL-python:

yum install -y MySQL-python

Edit the configuration file:

vim /etc/salt/master

    master_job_cache: mysql
    mysql.host: localhost
    mysql.user: 'salt'
    mysql.pass: 'westos'
    mysql.db: 'salt'
    mysql.port: 3306

Log in to the database and grant privileges to the local user:

grant all on salt.* to salt@localhost identified by 'westos';

/etc/init.d/salt-master restart

Test:

salt server3 cmd.run 'df -h'

Check in the database:

mysql> use salt

mysql> select * from salt_returns;

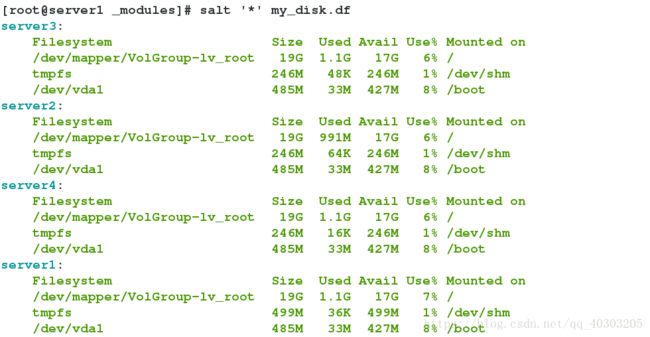

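Writing a custom module

Write the custom function:

cd /srv/salt/

mkdir _modules

vim _modules/my_disk.py

    #!/usr/bin/env python
    def df():
        # __salt__ is injected by the Salt loader and exposes execution modules.
        return __salt__['cmd.run']('df -h')

Sync the module to the minions: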

salt '*' saltutil.sync_modules

salt '*' my_disk.df
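A custom module can also return structured data instead of raw text. As a sketch, the function below parses df -h style output into a list of dictionaries; in a Salt module it could be given the text returned by `__salt__['cmd.run']('df -h')`. The name `parse_df` is invented for this example, and lines with fewer than six fields (such as wrapped long device names) are simply skipped.

```python
def parse_df(text):
    """Parse `df -h` output into a list of dicts, one per filesystem."""
    lines = [line for line in text.splitlines() if line.strip()]
    rows = []
    for line in lines[1:]:  # skip the header row
        parts = line.split()
        if len(parts) < 6:
            continue  # wrapped or malformed line
        filesystem, size, used, avail, percent = parts[:5]
        rows.append({
            "filesystem": filesystem,
            "size": size,
            "used": used,
            "avail": avail,
            "use_percent": int(percent.rstrip("%")),
            "mount": " ".join(parts[5:]),
        })
    return rows
```

Returning a list of dicts lets the master-side output be filtered or formatted (for example as JSON) without re-parsing text.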

syndic

Make server4 the top-level master

On server1, delete server4's key:

salt-key -d server4

On server4, stop the services used in the earlier experiments:

/etc/init.d/salt-minion stop

chkconfig salt-minion off

/etc/init.d/haproxy stop

/etc/init.d/keepalived stop

Install salt-master:

yum install salt-master

Edit the configuration file to make server4 the top-level master:

vim /etc/salt/master

    # masters' syndic interfaces.
    order_masters: True

/etc/init.d/salt-master start

On server1

Install the syndic:

yum install -y salt-syndic

Edit the configuration file:

vim /etc/salt/master

    # this master where to receive commands from.
    syndic_master: 172.25.31.4

/etc/init.d/salt-master restart

Start the syndic:

/etc/init.d/salt-syndic start

On server4, accept server1's key:

salt-key -a server1

salt '*' test.ping

salt '*' test.ping

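salt-ssh

Stop salt-minion on server3:

/etc/init.d/salt-minion stop

Install salt-ssh on server1:

yum install salt-ssh -y

Comment out the master_job_cache settings added earlier to the master configuration file.

Add the minion's login credentials to the roster:

vim /etc/salt/roster

    server3:
      host: 172.25.31.3
      user: root
      passwd: redhat

Test: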

salt-ssh 'server3' test.ping

API

Set up HTTPS:

cd /etc/pki/tls/private/

openssl genrsa 1024 > localhost.key

cd /etc/pki/tls/certs/

make testcert

yum install -y salt-api

Edit the API configuration files:

cd /etc/salt/master.d/

vim api.conf

    rest_cherrypy:
      port: 8000
      ssl_crt: /etc/pki/tls/certs/localhost.crt
      ssl_key: /etc/pki/tls/private/localhost.key

vim auth.conf

    external_auth:
      pam:
        saltapi:
          - '.*'
          - '@wheel'
          - '@runner'
          - '@jobs'

Create the user:

useradd saltapi

passwd saltapi

Start the services:

/etc/init.d/salt-master restart

/etc/init.d/salt-api start

Check that port 8000 is listening:

netstat -antlp | grep :8000

Call the API:

[root@server1 master.d]# curl -sSk https://localhost:8000/login \

> -H 'Accept: application/x-yaml' \

> -d username=saltapi \

> -d password=westos \

> -d eauth=pam

    return:
    - eauth: pam
      expire: 1534627626.672622
      perms:
      - .*
      - '@wheel'
      - '@runner'
      - '@jobs'
      start: 1534584426.672621
      token: 8a0575903143e052386c1b6ff86d036dc8f34422
      user: saltapi

[root@server1 master.d]# curl -sSk https://localhost:8000 \

> -H 'Accept: application/x-yaml' \

> -H 'X-Auth-Token: 8a0575903143e052386c1b6ff86d036dc8f34422' \

> -d client=local \

> -d tgt='*' \

> -d fun=test.ping

    return:
    - server1: true
      server2: true
      server3: true
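The two curl calls can be reproduced from Python with the standard library alone. The sketch below builds the same form-encoded requests and, like curl -k, skips certificate verification because the lab certificate is self-signed. The helper names are invented for this example, and it asks for JSON rather than YAML so the reply can be parsed without extra packages.

```python
import json
import ssl
import urllib.parse
import urllib.request


def build_login_request(base_url, username, password, eauth="pam"):
    """Build the POST /login request that returns an auth token."""
    data = urllib.parse.urlencode(
        {"username": username, "password": password, "eauth": eauth}
    ).encode()
    return urllib.request.Request(
        base_url.rstrip("/") + "/login",
        data=data,
        headers={"Accept": "application/json"},
    )


def build_run_request(base_url, token, tgt, fun):
    """Build the POST / request that runs `fun` on the minions matching `tgt`."""
    data = urllib.parse.urlencode(
        {"client": "local", "tgt": tgt, "fun": fun}
    ).encode()
    return urllib.request.Request(
        base_url.rstrip("/") + "/",
        data=data,
        headers={"Accept": "application/json", "X-Auth-Token": token},
    )


def send(request):
    """Send a request without verifying the certificate (lab use only)."""
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context) as response:
        return json.loads(response.read().decode())
```

A typical flow would send the login request first, read the token from the first entry of the reply's return list, and pass it to `build_run_request` with tgt `'*'` and fun `test.ping`.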