MFS distributed file system: high availability (pacemaker + corosync), shared disk (iSCSI), and fencing (to resolve split-brain)

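Preface:

The MFS file system:

MooseFS is a fault-tolerant, networked distributed file system. It spreads data across multiple physical servers while presenting users with a single unified resource.

MooseFS is a distributed storage framework with the following features: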

- (1)通用文件系统,不需要修改上层应用就可以使用(那些需要专门api的dfs很麻烦!)。

- (2)可以在线扩容,体系架构可伸缩性极强。(官方的case可以扩到70台了!)

- (3)部署简单。

- (4)高可用,可设置任意的文件冗余程度(提供比raid1+0更高的冗余级别,而绝对不会影响读或者写的性能,只会加速!)

- (5)可回收在指定时间内删除的文件(“回收站”提供的是系统级别的服务,不怕误操作了,提供类似oralce 的闪回等高级dbms的即时回滚特性!)

- (6)提供netapp,emc,ibm等商业存储的snapshot特性。(可以对整个文件甚至在正在写入的文件创建文件的快照)

- (7)google filesystem的一个c实现。

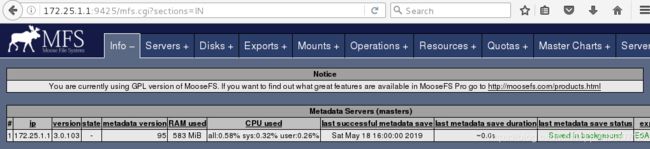

- (8)提供web gui监控接口。

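Architecture

- 1. Management server (master server)

A single dedicated host that manages the whole file system and stores the metadata of every file (size, attributes, and location, plus all information about non-regular files such as directories, sockets, pipes, and device files).

- 2. Data servers (chunk servers)

Any number of commodity servers that store the file data and synchronize it among themselves (when a file has more than one copy).

- 3. Metadata backup server (metalogger server)

Any number of servers that store the metadata change logs and periodically download the main metadata file, so that one of them can take over if the master stops unexpectedly.

- 4. Clients that access MFS

Any number of hosts that use the mfsmount process to talk to the master (to receive and change metadata) and to the chunk servers (to exchange the actual file data).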

I. How the MFS distributed file system works

Read and write flow

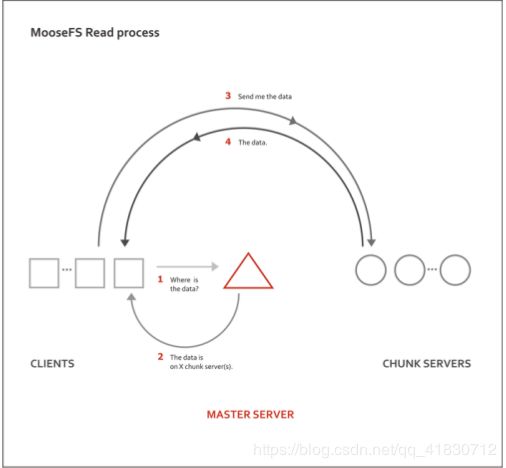

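1. How MFS reads data

(1) When the client needs data, it first sends a lookup request to the master server;

(2) the master searches its metadata and finds the location of the available chunk servers that hold the data (ip|port|chunkid);

(3) the master sends the chunk server addresses to the client;

(4) the client sends a data request to the specific chunk server;

(5) the chunk server sends the data to the client.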

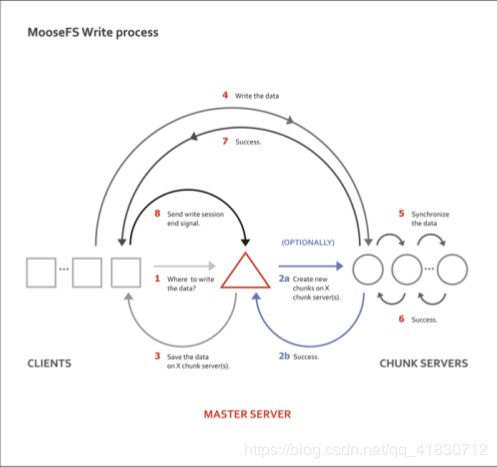

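2. How MFS writes data

(1) When the client needs to write data, it first sends the file's metadata to the master and asks for a storage location (metadata such as: file name|size|number of copies);

(2) based on that metadata, the master asks the chunk servers to create new chunks;

(3) the chunk servers report that the chunks were created;

(4) the master returns the chunk server addresses to the client (chunkIP|port|chunkid);

(5) the client writes the data to the chunk servers;

(6) the chunk servers report a successful write to the client;

(7) the client sends an end-of-write signal and some additional information to the master, which updates the file's length and last-modified time.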

Environment:

| Host | IP address | Role |
|---|---|---|
| server1 | 172.25.1.1 | mfsmaster node |
| server2 | 172.25.1.2 | chunkserver node |
| server3 | 172.25.1.3 | chunkserver node |
| server4 | 172.25.1.4 | high availability (backup master) |
| foundation1 | 172.25.1.250 | client |

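MFS metadata log server (moosefs-metalogger-3.0.97-1.rhsysv.x86_64.rpm)

This package records the metadata change log and periodically synchronizes it from the master, as a safeguard in case the master goes down.

The metalogger daemon is typically installed together with the master server, and its minimum requirements are no greater than those of the master itself. It can run on any machine (for example, on one of the chunkservers), but it is best placed on the MooseFS master's backup machine, where it backs up the master's changelog files, named changelog_ml.*.mfs.

The reason is that if the primary master server fails, this metalogger machine may be promoted to take its place as master server.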

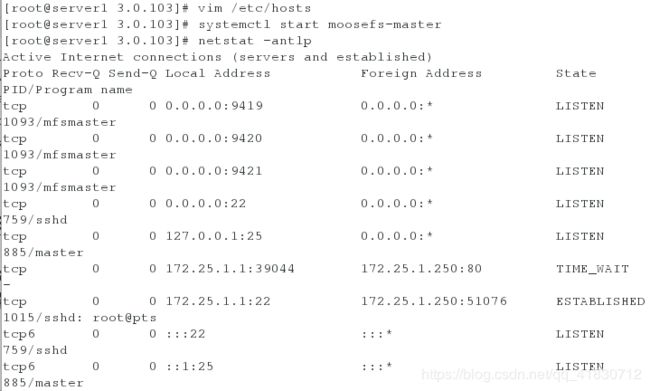

9421 # port for client connections

9420 # port that the chunkservers connect to

9419 # port that the metaloggers connect to
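To confirm that the master is really listening on these three ports, the `netstat` output can be filtered. The following is a minimal sketch: the function name `mfs_listening_ports` is made up for illustration, and it assumes the usual `netstat -antl` or `ss -ltn` layout in which listening sockets carry the word `LISTEN`.

```shell
#!/usr/bin/env bash
# Report which MooseFS master ports show up as LISTEN in
# netstat/ss-style output read from stdin.
mfs_listening_ports() {
    awk '
        /LISTEN/ {
            # Look at every field; the port is whatever follows the last colon.
            for (i = 1; i <= NF; i++) {
                n = split($i, parts, ":")
                if (n > 1) seen[parts[n]] = 1
            }
        }
        END {
            if ("9419" in seen) print "9419 metalogger"
            if ("9420" in seen) print "9420 chunkserver"
            if ("9421" in seen) print "9421 client"
        }'
}
```

On the master it could be used as `netstat -antl | mfs_listening_ports`; a port missing from the result suggests that the service did not start cleanly.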

II. Installing, deploying, and configuring MFS

1. Install and deploy MFS

On the mfsmaster node

[root@server1 3.0.103]# ls

moosefs-cgi-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-cgiserv-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-cli-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-client-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-metalogger-3.0.103-1.rhsystemd.x86_64.rpm

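[root@server1 3.0.103]# yum install -y moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cgi-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cgiserv-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cli-3.0.103-1.rhsystemd.x86_64.rpm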

[root@server1 3.0.103]# vim /etc/hosts

172.25.1.1 server1 mfsmaster

[root@server1 3.0.103]# systemctl start moosefs-master

[root@server1 3.0.103]# netstat -antlp

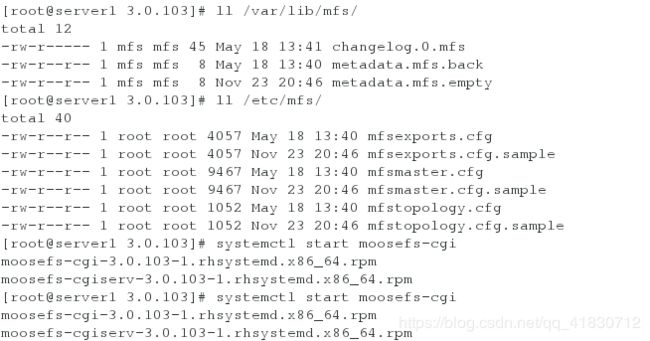

[root@server1 3.0.103]# ll /var/lib/mfs/ # data directory

total 12

-rw-r----- 1 mfs mfs 45 May 18 13:41 changelog.0.mfs

-rw-r--r-- 1 mfs mfs 8 May 18 13:40 metadata.mfs.back

-rw-r--r-- 1 mfs mfs 8 Nov 23 20:46 metadata.mfs.empty

[root@server1 3.0.103]# ll /etc/mfs/ # main configuration directory

total 40

-rw-r--r-- 1 root root 4057 May 18 13:40 mfsexports.cfg

-rw-r--r-- 1 root root 4057 Nov 23 20:46 mfsexports.cfg.sample

-rw-r--r-- 1 root root 9467 May 18 13:40 mfsmaster.cfg

-rw-r--r-- 1 root root 9467 Nov 23 20:46 mfsmaster.cfg.sample

-rw-r--r-- 1 root root 1052 May 18 13:40 mfstopology.cfg

-rw-r--r-- 1 root root 1052 Nov 23 20:46 mfstopology.cfg.sample

systemctl start moosefs-cgiserv

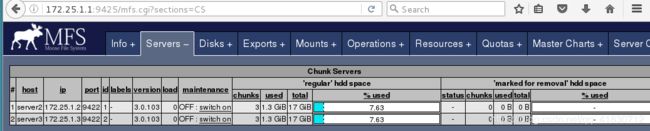

2. Configure the chunkservers

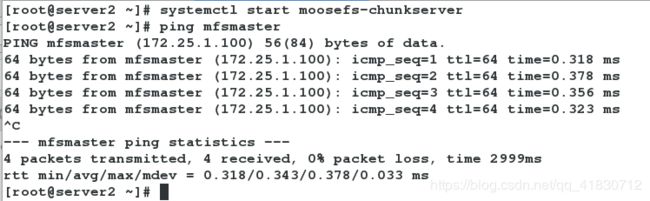

server2:

[root@server2 ~]# yum install -y moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

[root@server2 ~]# vim /etc/hosts

172.25.1.1 server1 mfsmaster

[root@server2 ~]# cd /etc/mfs/

[root@server2 mfs]# vim mfshdd.cfg

/mnt/chunk1

[root@server2 mfs]# mkdir /mnt/chunk1

[root@server2 mfs]# chown mfs.mfs /mnt/chunk1

[root@server2 mfs]# systemctl start moosefs-chunkserver

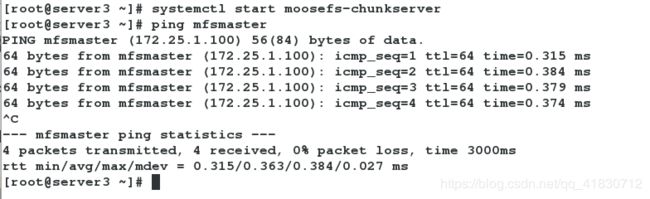

server3:

[root@server3 ~]# yum install -y moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

[root@server3 ~]# vim /etc/hosts

172.25.1.1 server1 mfsmaster

[root@server3 ~]# cd /etc/mfs/

[root@server3 mfs]# vim mfshdd.cfg

/mnt/chunk2

[root@server3 mfs]# mkdir /mnt/chunk2

[root@server3 mfs]# chown mfs.mfs /mnt/chunk2

[root@server3 mfs]# systemctl start moosefs-chunkserver
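The per-chunkserver steps (create the storage directory, hand it to the `mfs` user, list it in `mfshdd.cfg`) are easy to get out of sync when typed by hand. Below is a minimal sketch that performs them together; `add_chunk_dir` is a hypothetical helper name, and the config path and directory are passed in as arguments rather than assumed.

```shell
#!/usr/bin/env bash
# Create a chunk storage directory and register it in an mfshdd.cfg file.
# $1: path to mfshdd.cfg   $2: storage directory
add_chunk_dir() {
    local cfg=$1 dir=$2
    mkdir -p "$dir" || return 1
    # Ownership can only be set where the mfs user exists and we have the rights.
    if id mfs >/dev/null 2>&1; then
        chown mfs:mfs "$dir" 2>/dev/null || echo "warning: could not chown $dir" >&2
    fi
    # Append the directory only if it is not listed yet (exact line match).
    grep -qxF "$dir" "$cfg" 2>/dev/null || printf '%s\n' "$dir" >> "$cfg"
}
```

For example, `add_chunk_dir /etc/mfs/mfshdd.cfg /mnt/chunk2` on server3, followed by `systemctl start moosefs-chunkserver`. Because the path is written and created from the same argument, the directory in the config file and the directory on disk cannot differ.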

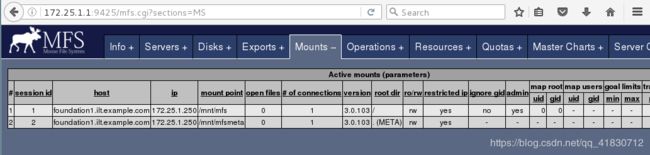

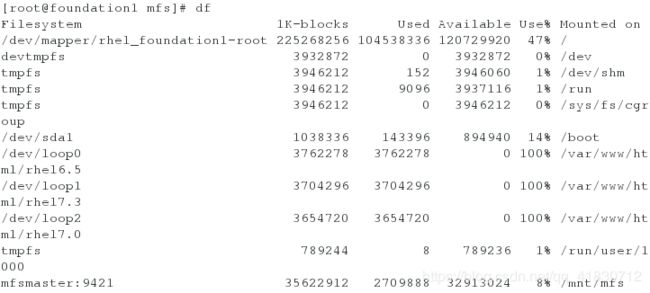

2. Test distributed storage from the client

Configure the client

[root@foundation1 ~]# yum install moosefs-client-3.0.103-1.rhsystemd.x86_64.rpm -y

[root@foundation1 ~]# vim /etc/mfs/mfsmount.cfg # set the directory where the data will be mounted

/mnt/mfs

[root@foundation1 mnt]# mkdir /mnt/mfs

[root@foundation1 mnt]# cd /mnt/mfs

[root@foundation1 mfs]# vim /etc/hosts # edit the name-resolution file

172.25.1.1 server1 mfsmaster

[root@foundation1 mfs]# mfsmount # mounts automatically using the settings in mfsmount.cfg

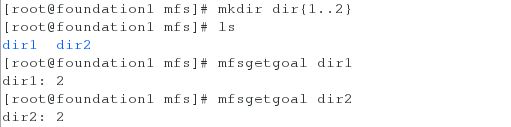

[root@foundation1 mfs]# mkdir dir1

[root@foundation1 mfs]# mkdir dir2

[root@foundation1 mfs]# ls

dir1 dir2

[root@foundation1 mfs]# mfsgetgoal dir1

dir1: 2

[root@foundation1 mfs]# mfsgetgoal dir2

dir2: 2

[root@foundation1 mfs]# mfssetgoal -r 1 dir1

dir1:

inodes with goal changed: 1

inodes with goal not changed: 0

inodes with permission denied:

[root@foundation1 mfs]# cd dir1/

[root@foundation1 dir1]# cp /etc/passwd .

[root@foundation1 dir1]# mfsfileinfo passwd # show the file's details; the data is stored on a single chunkserver (chunk1), because we set the goal so that only one copy is kept

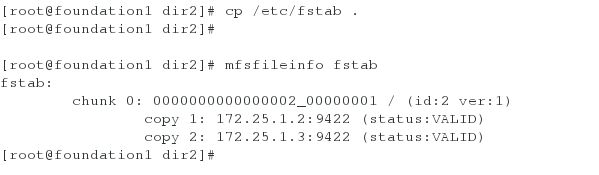

[root@foundation1 dir1]# cd ../dir2/

[root@foundation1 dir2]# cp /etc/fstab .

[root@foundation1 dir2]# mfsfileinfo fstab

fstab:

chunk 0: 0000000000000002_00000001 / (id:2 ver:1)

copy 1: 172.25.1.2:9422 (status:VALID)

copy 2: 172.25.1.3:9422 (status:VALID)
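To check quickly that every chunk of a file has as many valid copies as its goal, the `mfsfileinfo` output can be summarized. This is a sketch only: `valid_copies_per_chunk` is a hypothetical helper, and it assumes the output layout shown above (a `chunk N:` line followed by `copy` lines that carry `status:VALID`).

```shell
#!/usr/bin/env bash
# Count VALID copies per chunk from `mfsfileinfo` output read on stdin.
valid_copies_per_chunk() {
    awk '
        $1 == "chunk" {
            # Second field is the chunk index followed by a colon, e.g. 0:
            id = $2; sub(/:$/, "", id)
            order[++n] = id; count[id] = 0
        }
        /status:VALID/ && n > 0 { count[order[n]]++ }
        END { for (i = 1; i <= n; i++) print "chunk " order[i] ": " count[order[i]] }
    '
}
```

Used as `mfsfileinfo fstab | valid_copies_per_chunk`, each printed count can be compared with the value reported by `mfsgetgoal`.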

2. Large files are split into chunks that are stored across servers

[root@foundation1 dir1]# dd if=/dev/zero of=file1 bs=1M count=100

100+0 records in

100+0 records out

104857600 bytes (105 MB) copied, 0.50116 s, 209 MB/s

[root@foundation1 dir1]# mfsfileinfo file1

file1:

chunk 0: 0000000000000003_00000001 / (id:3 ver:1)

copy 1: 172.25.1.2:9422 (status:VALID)

chunk 1: 0000000000000004_00000001 / (id:4 ver:1)

copy 1: 172.25.1.3:9422 (status:VALID)
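MooseFS splits file data into chunks of at most 64 MiB, which is why the 100 MiB file above occupies two chunks. The arithmetic can be sketched as follows; `chunk_count` is an illustrative helper, not an MFS tool.

```shell
#!/usr/bin/env bash
# Number of 64 MiB chunks needed for a file of the given size in bytes
# (integer ceiling division).
chunk_count() {
    local bytes=$1
    local chunk=$((64 * 1024 * 1024))
    echo $(( (bytes + chunk - 1) / chunk ))
}
```

`chunk_count 104857600` gives the chunk count for the `dd` test file, so the number of `chunk` lines printed by `mfsfileinfo` can be predicted before the file is written.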

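3. What if we accidentally delete data on the client? How do we recover it?

[root@foundation1 dir1]# mfsgettrashtime . # show the trash time of the current directory; files deleted within the last 86400 seconds can be recovered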

.: 86400

[root@foundation1 mfs]# mkdir /mnt/mfsmeta

[root@foundation1 mfs]# mfsmount -m /mnt/mfsmeta # mount the meta file system

[root@foundation1 mfs]# mount # check the mount list

[root@foundation1 dir1]# cp /etc/group .

[root@foundation1 dir1]# ls

file1 group passwd

[root@foundation1 dir1]# rm -rf group

[root@foundation1 dir1]# cd /mnt/mfsmeta/

[root@foundation1 mfsmeta]# ls

sustained trash

[root@foundation1 mfsmeta]# cd trash/

[root@foundation1 trash]# find . -name '*group*' # locate the deleted file

./007/00000007|dir1|group

[root@foundation1 trash]# mv 007/00000007\|dir1\|group undel/ # restore the file; note that the special characters must be escaped

[root@foundation1 trash]# cd /mnt/mfs/dir1/

[root@foundation1 dir1]# ls # the file has been restored

file1 group passwd
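The trash entry name encodes where the file lived before deletion. Judging only from the listing above, the name appears to be the inode number followed by the path with `|` in place of `/`. Under that assumption, a small sketch (the helper name `trash_entry_path` is made up) can turn an entry back into a readable path before anything is moved into `undel/`:

```shell
#!/usr/bin/env bash
# Convert a trash entry such as ./007/00000007|dir1|group into dir1/group.
# Assumes the "<inode>|<path with | separators>" naming seen in the listing.
trash_entry_path() {
    local entry=${1##*/}         # drop the leading directory (./007/)
    local rest=${entry#*'|'}     # drop the inode prefix up to the first |
    printf '%s\n' "${rest//'|'//}"   # remaining | characters become /
}
```

For example, `trash_entry_path './007/00000007|dir1|group'` shows the path the file will return to once it has been moved to `undel/`.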

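4. The master sometimes ends up in an abnormal state, so clients cannot fetch data

(1) Shutting down the master normally

When the master is shut down normally, client operations stall.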

[root@server1 ~]# systemctl stop moosefs-master

[root@server1 ~]# systemctl start moosefs-master

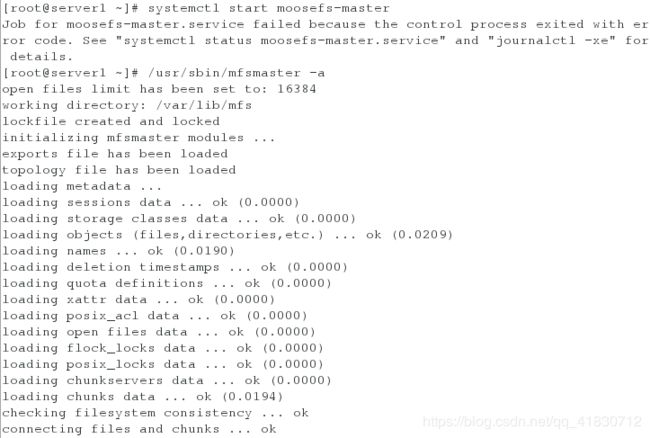

(2) Shutting down the master abnormally

[root@server1 ~]# ps ax

1203 ? S< 0:00 /usr/sbin/mfsmaster start

1205 pts/0 R+ 0:00 ps ax

[root@server1 ~]# kill -9 1203 # simulate an abnormal shutdown

[root@server1 ~]# systemctl start moosefs-master

Job for moosefs-master.service failed because the control process exited with error code. See "systemctl status moosefs-master.service" and "journalctl -xe" for details.

[root@server1 ~]# /usr/sbin/mfsmaster -a

[root@server1 ~]# ps ax # check the processes; the master started successfully

1220 ? S< 0:00 /usr/sbin/mfsmaster -a

1221 pts/0 R+ 0:00 ps ax

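Running this command by hand after every abnormal shutdown is tedious, so we put it directly into the service unit file. That way the service starts successfully whether or not the previous shutdown was clean.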

[root@server1 ~]# vim /usr/lib/systemd/system/moosefs-master.service

[Unit]

Description=MooseFS Master server

Wants=network-online.target

After=network.target network-online.target

[Service]

Type=forking

ExecStart=/usr/sbin/mfsmaster -a

ExecStop=/usr/sbin/mfsmaster stop

ExecReload=/usr/sbin/mfsmaster reload

PIDFile=/var/lib/mfs/.mfsmaster.lock

TimeoutStopSec=1800

TimeoutStartSec=1800

Restart=no

[Install]

WantedBy=multi-user.target

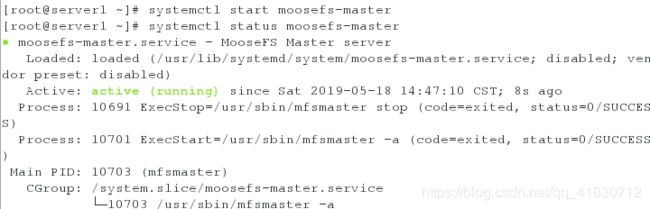

[root@server1 ~]# systemctl daemon-reload

[root@server1 ~]# /usr/sbin/mfsmaster -a

[root@server1 ~]# ps ax

10689 ? S< 0:00 /usr/sbin/mfsmaster -a

10690 pts/0 R+ 0:00 ps ax

[root@server1 ~]# kill -9 10689

[root@server1 ~]# systemctl start moosefs-master

[root@server1 ~]# systemctl status moosefs-master
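Files under /usr/lib/systemd/system belong to the package and can be replaced on upgrade. systemd also accepts a drop-in file that overrides only `ExecStart`, which achieves the same `mfsmaster -a` startup without editing the packaged unit. The sketch below writes such a drop-in; `write_mfsmaster_dropin` is a hypothetical helper, and the directory is an argument so that it can be tried outside /etc first.

```shell
#!/usr/bin/env bash
# Write a systemd drop-in that makes moosefs-master start with "mfsmaster -a".
# $1: drop-in directory
#     (on a real host: /etc/systemd/system/moosefs-master.service.d)
write_mfsmaster_dropin() {
    local dir=$1
    mkdir -p "$dir" || return 1
    cat > "$dir/override.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the packaged command before the new one is set.
ExecStart=
ExecStart=/usr/sbin/mfsmaster -a
EOF
}
```

After writing the file on server1 (and on the backup master), run `systemctl daemon-reload` so that systemd picks up the override.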

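III. High availability for mfsmaster

1. Configure the master: first set up the high-availability yum repositories, then install the required packages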

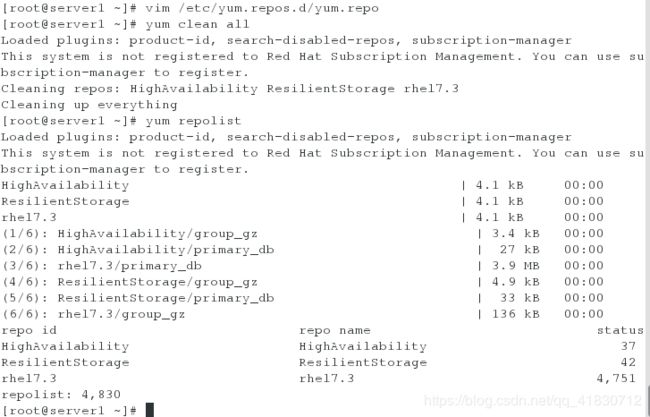

[root@server1 ~]# vim /etc/yum.repos.d/yum.repo

[rhel7.3]

name=rhel7.3

baseurl=http://172.25.1.250/rhel7.3

gpgcheck=0

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.1.250/rhel7.3/addons/HighAvailability

gpgcheck=0

[ResilientStorage]

name=ResilientStorage

baseurl=http://172.25.1.250/rhel7.3/addons/ResilientStorage

gpgcheck=0

[root@server1 ~]# yum clean all

[root@server1 ~]# yum repolist

[root@server1 ~]# yum install pacemaker corosync pcs -y

[root@server1 ~]# rpm -q pacemaker

pacemaker-1.1.15-11.el7.x86_64

2. Configure passwordless SSH login

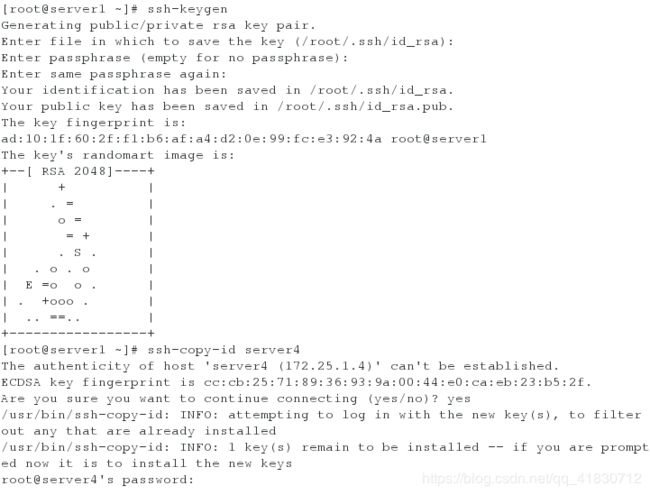

[root@server1 ~]# ssh-keygen

[root@server1 ~]# ssh-copy-id server4

3. Start the pcsd service and set the hacluster user's password

[root@server1 ~]# systemctl start pcsd

[root@server1 ~]# systemctl enable pcsd

Created symlink from /etc/systemd/system/multi-user.target.wants/pcsd.service to /usr/lib/systemd/system/pcsd.service.

[root@server1 ~]# passwd hacluster

Changing password for user hacluster.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

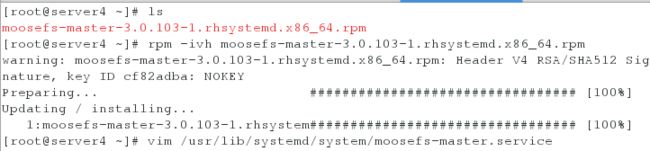

4. Configure the backup mfsmaster

[root@server4 ~]# ls

moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm

[root@server4 ~]# rpm -ivh moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm

warning: moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID cf82adba: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:moosefs-master-3.0.103-1.rhsystem################################# [100%]

[root@server4 ~]# vim /usr/lib/systemd/system/moosefs-master.service

[Unit]

Description=MooseFS Master server

Wants=network-online.target

After=network.target network-online.target

[Service]

Type=forking

ExecStart=/usr/sbin/mfsmaster -a

ExecStop=/usr/sbin/mfsmaster stop

ExecReload=/usr/sbin/mfsmaster reload

PIDFile=/var/lib/mfs/.mfsmaster.lock

TimeoutStopSec=1800

TimeoutStartSec=1800

Restart=no

[Install]

WantedBy=multi-user.target

[root@server4 ~]# systemctl daemon-reload

[root@server4 ~]# vim /etc/yum.repos.d/yum.repo

[rhel7.3]

name=rhel7.3

baseurl=http://172.25.1.250/rhel7.3

gpgcheck=0

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.1.250/rhel7.3/addons/HighAvailability

gpgcheck=0

[ResilientStorage]

name=ResilientStorage

baseurl=http://172.25.1.250/rhel7.3/addons/ResilientStorage

gpgcheck=0

[root@server4 ~]# yum repolist

[root@server4 ~]# yum install -y pacemaker corosync pcs

Start the service and set the hacluster user's password (the password must be the same on the primary and the backup)

[root@server4 ~]# systemctl start pcsd

[root@server4 ~]# systemctl enable pcsd

Created symlink from /etc/systemd/system/multi-user.target.wants/pcsd.service to /usr/lib/systemd/system/pcsd.service.

[root@server4 ~]# passwd hacluster

Changing password for user hacluster.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

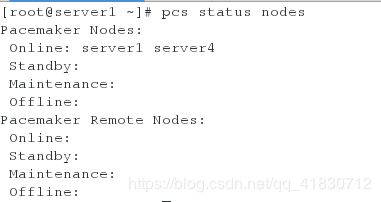

5. Create the cluster

[root@server1 ~]# pcs cluster auth server1 server4

[root@server1 ~]# pcs cluster setup --name mycluster server1 server4

[root@server1 ~]# pcs status nodes

Error: error running crm_mon, is pacemaker running?

[root@server1 ~]# pcs cluster start --all

[root@server1 ~]# pcs status nodes

Pacemaker Nodes:

Online: server1 server4

Standby:

Maintenance:

Offline:

Pacemaker Remote Nodes:

Online:

Standby:

Maintenance:

Offline:

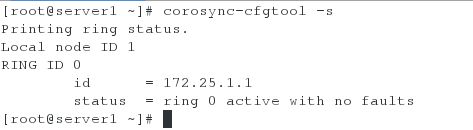

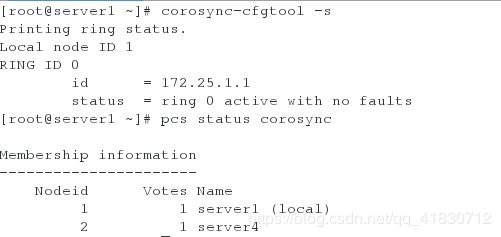

[root@server1 ~]# corosync-cfgtool -s

[root@server1 ~]# pcs status corosync

6. Create the active/standby cluster

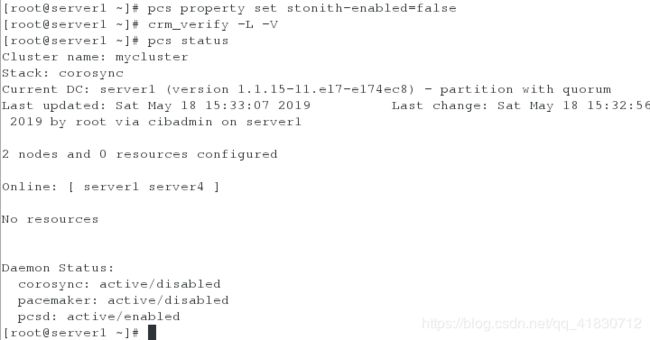

[root@server1 ~]# pcs property set stonith-enabled=false

[root@server1 ~]# crm_verify -L -V

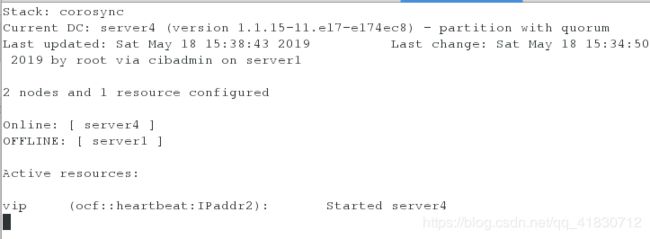

[root@server1 ~]# pcs status

Cluster name: mycluster

Stack: corosync

Current DC: server1 (version 1.1.15-11.el7-e174ec8) - partition with quorum

Last updated: Sat May 18 15:33:07 2019 Last change: Sat May 18 15:32:56 2019 by root via cibadmin on server1

2 nodes and 0 resources configured

Online: [ server1 server4 ]

No resources

Daemon Status:

corosync: active/disabled

pacemaker: active/disabled

pcsd: active/enabled

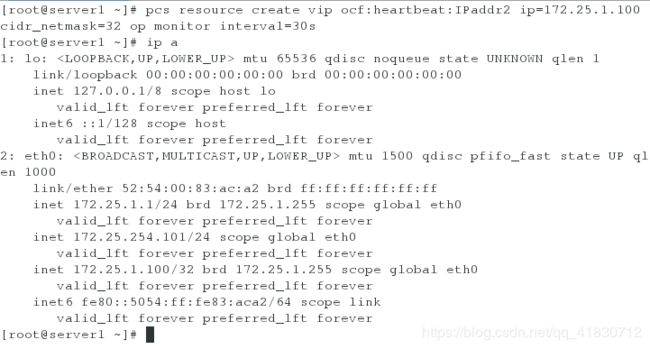

[root@server1 ~]# pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.1.100 cidr_netmask=32 op monitor interval=30s

[root@server1 ~]# ip a

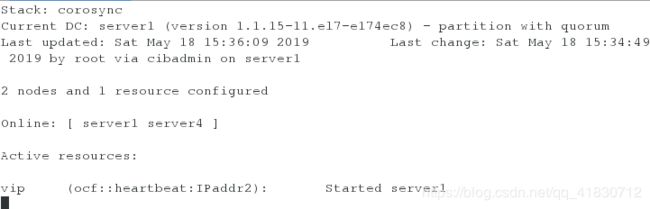

crm_mon

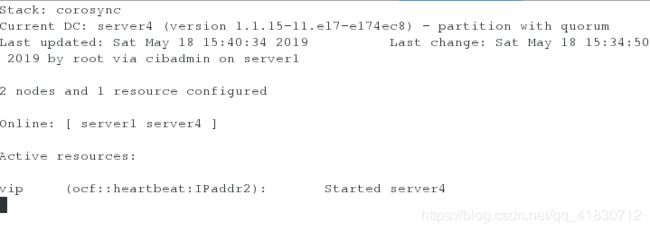

7. Perform a failover

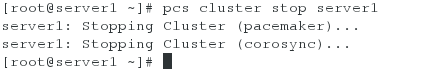

[root@server1 ~]# pcs cluster stop server1

server1: Stopping Cluster (pacemaker)...

server1: Stopping Cluster (corosync)...

crm_mon

[root@server1 ~]# pcs cluster start server1

server1: Starting Cluster...

Stop the backup master, and the VIP floats back to server1:

[root@server4 ~]# pcs cluster stop server4

server4: Stopping Cluster (pacemaker)...

server4: Stopping Cluster (corosync)...

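This experiment demonstrates high availability for mfs: when the master fails, the resources move automatically to the backup master, and when the master recovers they do not fail back, which keeps the service stable for clients.

List the available resource standards: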

[root@server1 ~]# pcs resource standards

ocf

lsb

service

systemd

List the resource providers:

[root@server1 ~]# pcs resource providers

heartbeat

openstack

pacemaker

List the resource agents available from a specific provider:

[root@server1 ~]# pcs resource agents ocf:heartbeat

IV. Shared storage (accessed through the VIP)

1. Stop the services and unmount the directories.

Client:

[root@foundation1 ~]# umount /mnt/mfs

[root@foundation1 ~]# umount /mnt/mfsmeta/

[root@foundation1 ~]# vim /etc/hosts

172.25.1.100 mfsmaster

server1:

[root@server1 ~]# systemctl stop moosefs-master

[root@server1 ~]# vim /etc/hosts

172.25.1.100 mfsmaster

server2:

[root@server2 ~]# systemctl stop moosefs-chunkserver

[root@server2 ~]# vim /etc/hosts

172.25.1.100 mfsmaster

server3:

[root@server3 ~]# systemctl stop moosefs-chunkserver

[root@server3 ~]# vim /etc/hosts

172.25.1.100 mfsmaster

server4:

[root@server4 ~]# systemctl stop moosefs-master

[root@server4 ~]# vim /etc/hosts

172.25.1.100 mfsmaster
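The same /etc/hosts change is made by hand on five machines, and a leftover `mfsmaster` alias on the old `172.25.1.1` line would still resolve to server1 instead of the VIP. The sketch below (with the hypothetical helper name `set_host_alias`) removes the alias from wherever it currently appears and adds one fresh entry; the file path is an argument so that it can be tested on a copy first.

```shell
#!/usr/bin/env bash
# Point a host alias at a new IP in a hosts-format file.
# $1: hosts file   $2: new IP   $3: alias name
set_host_alias() {
    local file=$1 ip=$2 name=$3 tmp
    tmp=$(mktemp) || return 1
    awk -v name="$name" '
        NF == 0 || /^[[:space:]]*#/ { print; next }   # keep blanks and comments
        {
            out = $1; kept = 0
            for (i = 2; i <= NF; i++)
                if ($i != name) { out = out " " $i; kept++ }
            if (kept > 0) print out    # drop lines that only carried the alias
        }' "$file" > "$tmp" || return 1
    printf '%s %s\n' "$ip" "$name" >> "$tmp"
    cat "$tmp" > "$file" && rm -f "$tmp"
}
```

For example, `set_host_alias /etc/hosts 172.25.1.100 mfsmaster` keeps the `172.25.1.1 server1` mapping but moves `mfsmaster` to the VIP.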

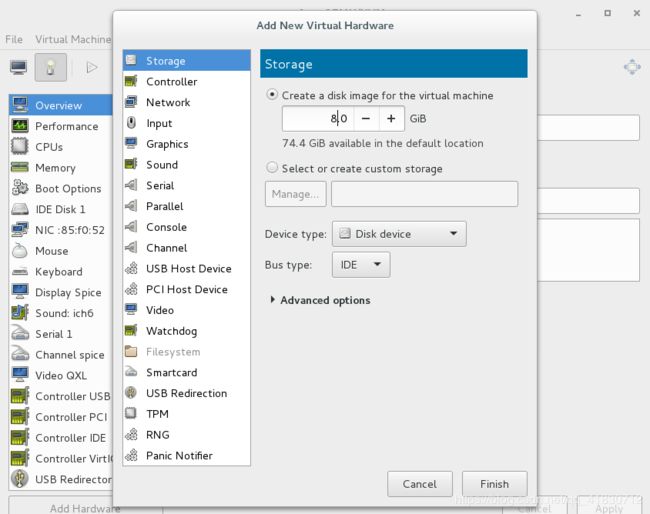

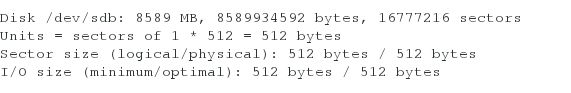

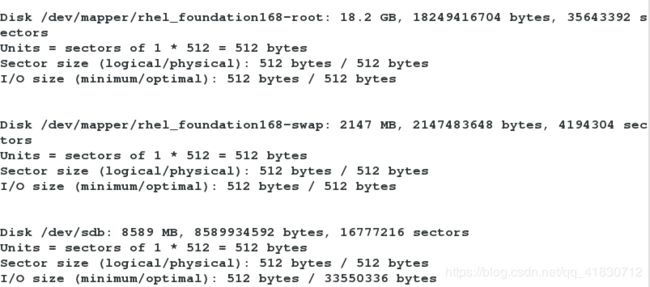

2. Use server2 as the iSCSI server (target) and add a disk to it.

Check that the disk was added successfully:

[root@server2 ~]# fdisk -l

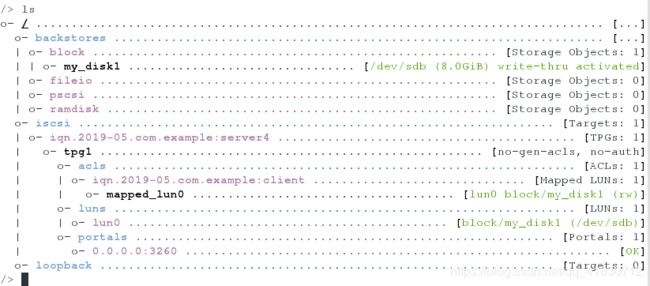

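3. On server2, install the iSCSI target software and configure the shared disk

[root@server2 ~]# yum install -y targetcli # install the administration tool for the iSCSI target (remote block storage)

[root@server2 ~]# systemctl start target # start the service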

[root@server2 ~]# targetcli

Warning: Could not load preferences file /root/.targetcli/prefs.bin.

targetcli shell version 2.1.fb41

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> cd backstores/block

/backstores/block> create my_disk1 /dev/sdb

Created block storage object my_disk1 using /dev/sdb.

/backstores/block> cd ..

/backstores> cd ..

/> cd iscsi

/iscsi> create iqn.2019-05.com.example:server2

Created target iqn.2019-05.com.example:server2.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

/iscsi> cd iqn.2019-05.com.example:server2

/iscsi/iqn.20...ample:server2> cd tpg1/luns

/iscsi/iqn.20...er2/tpg1/luns> create /backstores/block/my_disk1

Created LUN 0.

/iscsi/iqn.20...er2/tpg1/luns> cd ..

/iscsi/iqn.20...:server2/tpg1> cd acls

/iscsi/iqn.20...er2/tpg1/acls> create iqn.2019-05.com.example:client

Created Node ACL for iqn.2019-05.com.example:client

Created mapped LUN 0.

/iscsi/iqn.20...er2/tpg1/acls> cd ..

/iscsi/iqn.20...:server2/tpg1> cd ..

/iscsi/iqn.20...ample:server2> cd ..

/iscsi> cd ..

/> exit

Global pref auto_save_on_exit=true

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

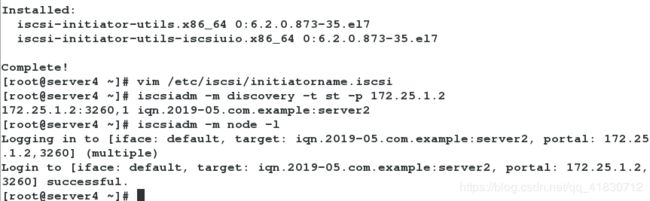

4. On the iSCSI client (initiator) server1, install the iSCSI client software, then partition the shared disk

[root@server1 ~]# yum install -y iscsi-*

[root@server1 ~]# vim /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2019-05.com.example:client

[root@server1 ~]# iscsiadm -m discovery -t st -p 172.25.1.2

172.25.1.2:3260,1 iqn.2019-05.com.example:server2

[root@server1 ~]# iscsiadm -m node -l

Logging in to [iface: default, target: iqn.2019-05.com.example:server2, portal: 172.25.1.2,3260] (multiple)

Login to [iface: default, target: iqn.2019-05.com.example:server2, portal: 172.25.1.2,3260] successful.

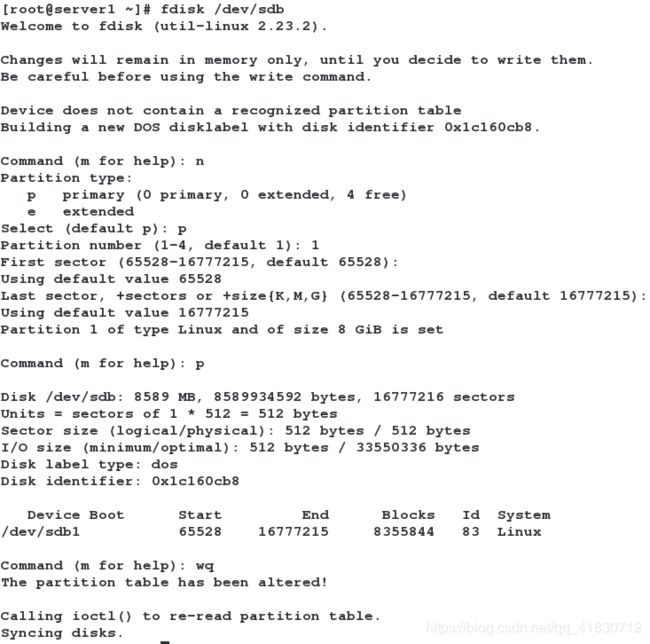

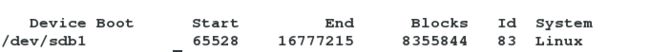

[root@server1 ~]# fdisk -l # list the disk shared by the remote target

[root@server1 ~]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x1c160cb8.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1): 1

First sector (65528-16777215, default 65528):

Using default value 65528

Last sector, +sectors or +size{K,M,G} (65528-16777215, default 16777215):

Using default value 16777215

Partition 1 of type Linux and of size 8 GiB is set

Command (m for help): p

Disk /dev/sdb: 8589 MB, 8589934592 bytes, 16777216 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 33550336 bytes

Disk label type: dos

Disk identifier: 0x1c160cb8

Device Boot Start End Blocks Id System

/dev/sdb1 65528 16777215 8355844 83 Linux

Command (m for help): wq

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

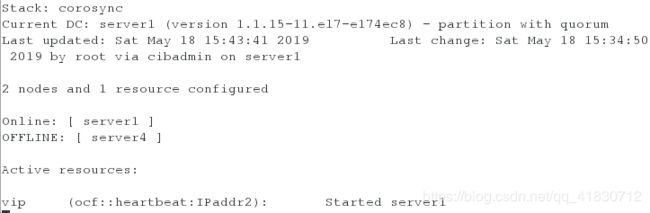

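5. Format the partition and use it

[root@server1 ~]# mkfs.xfs /dev/sdb1 # format the partition

[root@server1 ~]# dd if=/dev/zero of=/dev/sdb bs=512 count=1 # wipe the partition table

[root@server1 ~]# fdisk -l /dev/sdb # the partition is no longer listed

Zeroing the first 512-byte sector overwrites the MBR, which holds the partition table, so the partition disappears. There is no need to worry: all of the space on /dev/sdb was allocated to /dev/sdb1, so recreating the partition with the same boundaries is enough to get it back.

[root@server1 ~]# mount /dev/sdb1 /mnt # confirms that the partition no longer exists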

mount: special device /dev/sdb1 does not exist

[root@server1 ~]# fdisk /dev/sdb

Command (m for help): n

Select (default p): p

Partition number (1-4, default 1):

First sector (65528-16777215, default 65528):

Using default value 65528

Last sector, +sectors or +size{K,M,G} (65528-16777215, default 16777215):

Device Boot Start End Blocks Id System

/dev/sdb1 65528 16777215 8355844 83 Linux

Command (m for help): wq

[root@server1 ~]# mount /dev/sdb1 /mnt

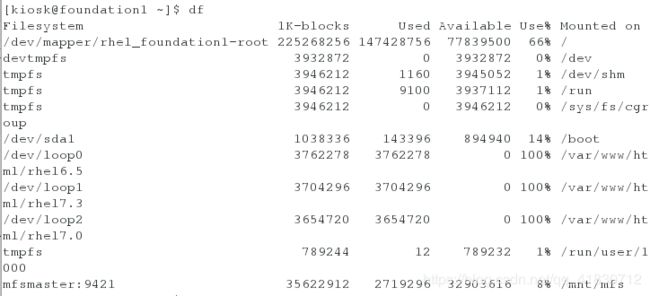

[root@server1 ~]# df

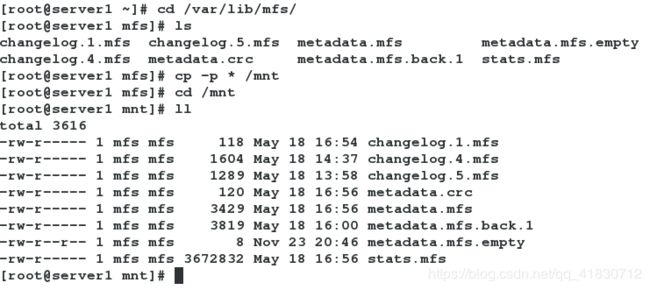

[root@server1 ~]# cd /var/lib/mfs/ # this is the mfs data directory

[root@server1 mfs]# ls

changelog.1.mfs changelog.5.mfs metadata.mfs metadata.mfs.empty

changelog.4.mfs metadata.crc metadata.mfs.back.1 stats.mfs

[root@server1 mfs]# cp -p * /mnt # copy all data files from /var/lib/mfs to /dev/sdb1, preserving permissions

[root@server1 mfs]# cd /mnt

[root@server1 mnt]# ll

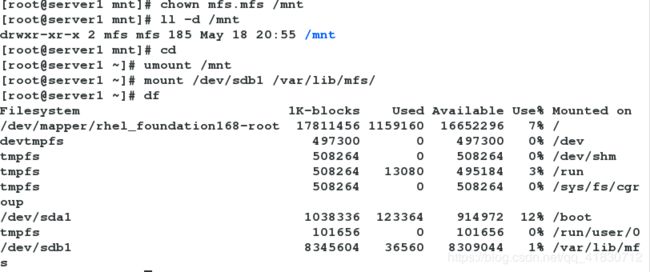

[root@server1 mnt]# chown mfs.mfs /mnt # the directory must be owned by the mfs user and group to work properly

[root@server1 mnt]# ll -d /mnt

drwxr-xr-x 2 mfs mfs 185 May 18 20:55 /mnt

[root@server1 mnt]# cd

[root@server1 ~]# umount /mnt

[root@server1 ~]# mount /dev/sdb1 /var/lib/mfs/ # mount the partition on the data directory to test the shared disk

[root@server1 ~]# df

[root@server1 ~]# systemctl start moosefs-master # if the service starts, the data files were copied correctly and the shared disk is usable

[root@server1 ~]# ps ax

[root@server1 ~]# systemctl stop moosefs-master

6. Configure the backup master so that it can use the shared disk too

[root@server4 ~]# vim /etc/hosts

172.25.1.1 server1

172.25.1.100 mfsmaster

[root@server4 ~]# yum install -y iscsi-*

[root@server4 ~]# vim /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2019-05.com.example:client

[root@server4 ~]# iscsiadm -m discovery -t st -p 172.25.1.2

172.25.1.2:3260,1 iqn.2019-05.com.example:server2

[root@server4 ~]# iscsiadm -m node -l

Logging in to [iface: default, target: iqn.2019-05.com.example:server2, portal: 172.25.1.2,3260] (multiple)

Login to [iface: default, target: iqn.2019-05.com.example:server2, portal: 172.25.1.2,3260] successful.

[root@server4 ~]# fdisk -l

[root@server4 ~]# mount /dev/sdb1 /var/lib/mfs/

[root@server4 ~]# systemctl start moosefs-master

[root@server4 ~]# systemctl stop moosefs-master

[root@server4 ~]# pcs cluster start server4

server4: Starting Cluster...

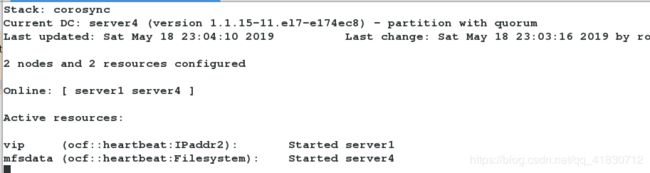

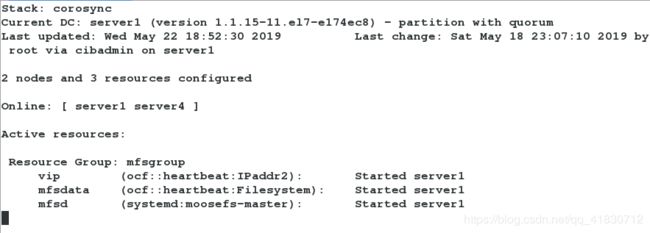

7. On the master, create the file system resource for mfs

[root@server1 ~]# pcs resource create mfsdata ocf:heartbeat:Filesystem device=/dev/sdb1 directory=/var/lib/mfs fstype=xfs op monitor interval=30s

[root@server1 ~]# pcs resource show

vip (ocf::heartbeat:IPaddr2): Started server1

mfsdata (ocf::heartbeat:Filesystem): Started server4

crm_mon

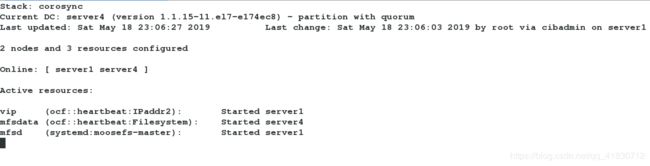

[root@server1 ~]# pcs resource create mfsd systemd:moosefs-master op monitor interval=1min

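# create the mfsd resource, which manages the moosefs-master service

[root@server1 ~]# pcs resource group add mfsgroup vip mfsdata mfsd # put vip, mfsdata, and mfsd into one group

[root@server1 ~]# pcs cluster stop server1 # once the cluster services on the master stop, its resources migrate to the backup master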

server1: Stopping Cluster (pacemaker)...

server1: Stopping Cluster (corosync)...

Check on server4:

crm_mon
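The VIP, the shared file system, and the master service only work together when they run on the same node, which is what the resource group enforces. A quick way to verify this from a script is to extract the node from the `pcs resource show` listing. The sketch below assumes the line layout shown earlier in this section (`name (agent): Started node`); `resource_node` is an illustrative helper name.

```shell
#!/usr/bin/env bash
# Print the node on which a resource is started, reading
# `pcs resource show`-style output from stdin.
# $1: resource name
resource_node() {
    awk -v r="$1" '$1 == r && $(NF - 1) == "Started" { print $NF }'
}
```

For example, `pcs resource show | resource_node vip` and `pcs resource show | resource_node mfsdata` should print the same host name once the group has been created.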

V. Fencing to resolve split-brain

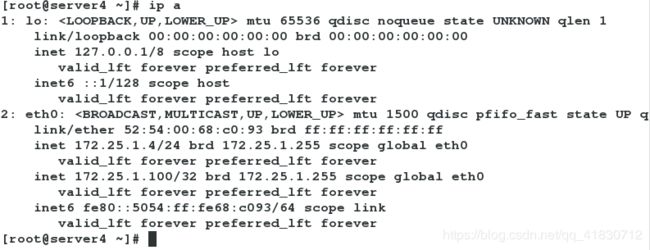

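(1) First, test high availability from the client

- Start the chunkserver and check that mfsmaster resolves

[root@server2 ~]# systemctl start moosefs-chunkserver

[root@server2 ~]# ping mfsmaster # make sure the name resolves and the host is reachable

- Check where the VIP is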

[root@server4 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:68:c0:93 brd ff:ff:ff:ff:ff:ff

inet 172.25.1.4/24 brd 172.25.1.255 scope global eth0

valid_lft forever preferred_lft forever

inet 172.25.1.100/32 brd 172.25.1.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe68:c093/64 scope link

valid_lft forever preferred_lft forever

- Start the master

[root@server1 ~]# pcs cluster start server1

server1: Starting Cluster...

- Test distributed storage from the client

[root@foundation1 ~]# cd /mnt/mfs/

[root@foundation1 mfs]# ls

[root@foundation1 mfs]# mfsmount # the mount may fail if this directory is not empty

mfsmaster accepted connection with parameters: read-write,restricted_ip,admin ; root mapped to root:root

[root@foundation1 mfs]# mkdir dir{1..2}

[root@foundation1 mfs]# ls

dir1 dir2

[root@foundation1 mfs]# cd /mnt/mfs/dir1/

[root@foundation1 dir1]# dd if=/dev/zero of=file2 bs=1M count=2000 # write a large file

2000+0 records in

2000+0 records out

2097152000 bytes (2.1 GB) copied, 10.9616 s, 191 MB/s

[root@server4 ~]# pcs cluster stop server4 # while the client is writing the large file, stop the node that is currently providing the service

server4: Stopping Cluster (pacemaker)...

server4: Stopping Cluster (corosync)...

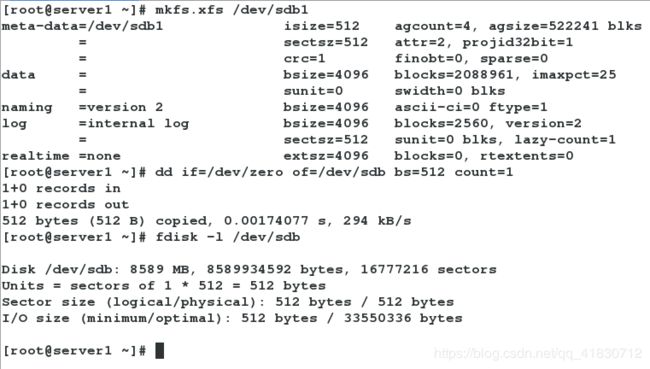

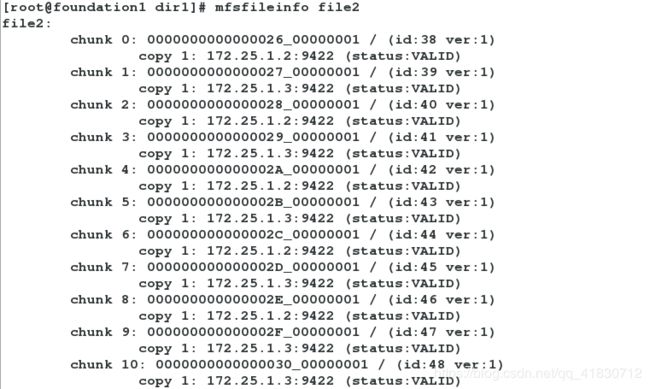

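[root@foundation1 dir1]# mfsfileinfo file2 # the file was written successfully and was not affected

This experiment shows that when the master goes down, the backup master takes over immediately so that clients keep working. However, when the master comes back, it may try to reclaim its role, so the master and the backup master could modify the same data files at the same time, which is split-brain. This is where fencing comes in.

(2) Install the fence service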

[root@server1 ~]# yum install fence-virt -y

[root@server1 ~]# mkdir /etc/cluster

[root@server4 ~]# yum install fence-virt -y

[root@server4 ~]# mkdir /etc/cluster

(3) Generate a fence key file and send it to the cluster nodes

[root@foundation1 ~]# yum install -y fence-virtd

[root@foundation1 ~]# yum install fence-virtd-libvirt -y

[root@foundation1 ~]# yum install fence-virtd-multicast -y

[root@foundation1 ~]# fence_virtd -c

Listener module [multicast]:

Multicast IP Address [225.0.0.12]:

Multicast IP Port [1229]:

Interface [br0]: br0

Key File [/etc/cluster/fence_xvm.key]:

Backend module [libvirt]:

[root@foundation1 ~]# mkdir /etc/cluster # the directory that holds the key; it has to be created by hand

[root@foundation1 ~]# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1

[root@foundation1 ~]# systemctl start fence_virtd

[root@foundation1 ~]# cd /etc/cluster/

[root@foundation1 cluster]# ls

fence_xvm.key

[root@foundation1 cluster]# scp fence_xvm.key [email protected]:/etc/cluster/

[root@foundation1 cluster]# scp fence_xvm.key [email protected]:/etc/cluster/

[root@foundation1 cluster]# virsh list # list the domain (virtual machine) names
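The key generation step can be wrapped so that the directory is created first, the key is not world-readable, and its size is checked. This is a sketch under assumptions: `make_fence_key` is a hypothetical helper, and the restrictive umask is an added precaution that the original steps do not include.

```shell
#!/usr/bin/env bash
# Generate a 128-byte random key file for fence_virtd / fence_xvm.
# $1: key path (on the host in this setup: /etc/cluster/fence_xvm.key)
make_fence_key() {
    local key=$1
    mkdir -p "$(dirname "$key")" || return 1
    # The subshell keeps the restrictive umask from leaking into the caller.
    ( umask 077 && dd if=/dev/urandom of="$key" bs=128 count=1 2>/dev/null ) || return 1
    # Succeed only if exactly 128 bytes were written.
    [ "$(wc -c < "$key" | tr -d ' ')" = "128" ]
}
```

After `make_fence_key /etc/cluster/fence_xvm.key`, the same file has to be copied unchanged to every cluster node, because fence_xvm authenticates with it.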

[root@server1 ~]# cd /etc/cluster/

[root@server1 cluster]# pcs stonith create vmfence fence_xvm pcmk_host_map="server1:server1;server4:server4" op monitor interval=1min

[root@server1 cluster]# pcs property set stonith-enabled=true

[root@server1 cluster]# crm_verify -L -V
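Each `pcmk_host_map` entry pairs a cluster node name with the name the fence device knows it by (here the libvirt domain name reported by `virsh list`), and entries are separated by semicolons. A malformed entry is easy to miss inside a long command line, so the value can be assembled and validated first. The helper name `build_host_map` below is made up for illustration.

```shell
#!/usr/bin/env bash
# Build a pcmk_host_map value from node:domain pairs.
# Rejects pairs where either side of the colon is empty.
build_host_map() {
    local pair
    for pair in "$@"; do
        case $pair in
            ?*:?*) ;;   # at least one character on each side of a colon
            *) echo "invalid pair: '$pair' (expected node:domain)" >&2; return 1 ;;
        esac
    done
    local IFS=';'
    printf '%s\n' "$*"   # "$*" joins the arguments with the first IFS character
}
```

The result can then be passed on, for example `pcs stonith create vmfence fence_xvm pcmk_host_map="$(build_host_map server1:server1 server4:server4)" op monitor interval=1min`.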

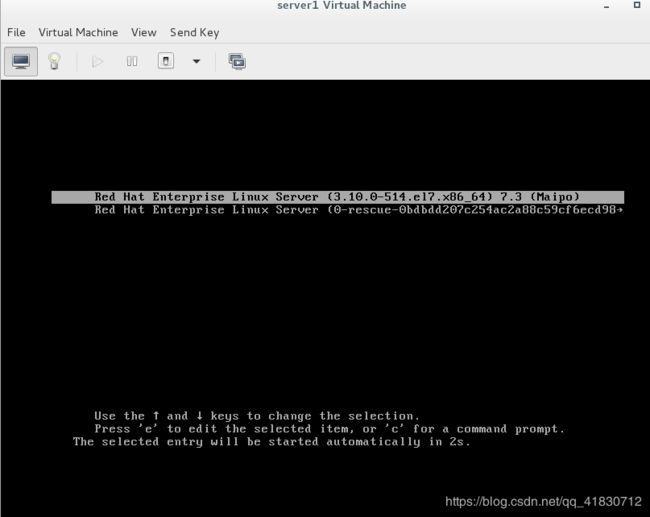

[root@server1 cluster]# fence_xvm -H server4 # power-cycle server4

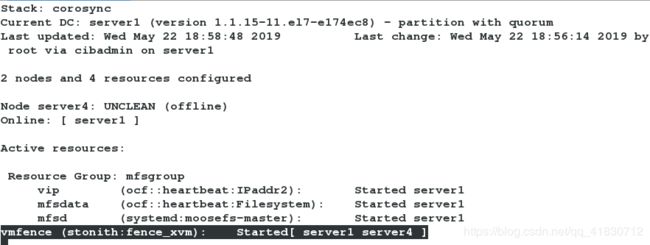

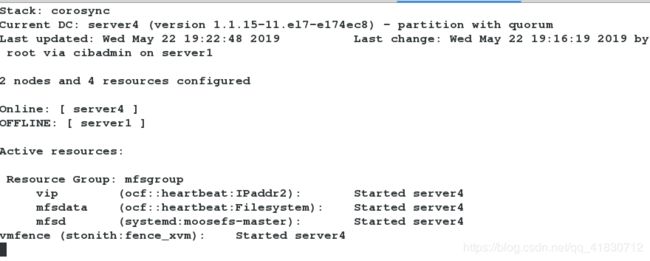

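[root@server1 cluster]# crm_mon # watch the monitor; the services on server4 migrate to server1, the master

[root@server1 cluster]# echo c > /proc/sysrq-trigger # simulate a kernel crash on the master

The monitor shows server4 taking over all of the master's services immediately.

After server1 finishes rebooting, it does not take the resources back, so no split-brain occurs: the services keep running on the backup master, which shows that fencing works.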