Setting up Hadoop, a distributed system infrastructure

Setting up Hadoop on Windows

Environment:

Windows 10

Installation packages:

jdk-8u221-windows-x64

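apache-hadoop-3.1.0-winutils-master.zip (Windows native binaries for Hadoop, such as winutils.exe and hadoop.dll; the files in its bin folder go into D:\hadoop-3.2.0\bin)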

hadoop-3.2.0.tar

【Java environment setup】

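Install the JDK with the default options.

Configure the environment variables:

JAVA_HOME : C:\PROGRA~1\Java\jdk1.8.0_221 (PROGRA~1 is the 8.3 short name of C:\Program Files; Hadoop's Windows scripts fail when JAVA_HOME contains a space)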

Path : %JAVA_HOME%\bin

C:\Users\dell>java -version //verify the version

java version "1.8.0_221"

Java(TM) SE Runtime Environment (build 1.8.0_221-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.221-b11, mixed mode)

【Hadoop setup】

解压:以管理员身份运行rar解压hadoop-3.2.0.tar到D:

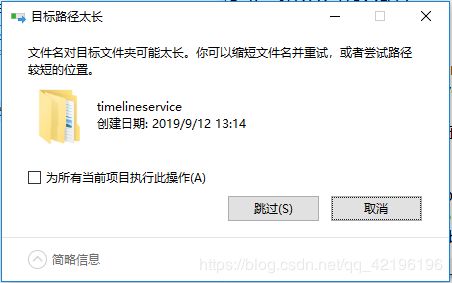

错误:err1目标路径太长(执行跳过)

配置环境变量

HADOOP_HOME : D:\hadoop-3.2.0

Path : %HADOOP_HOME%\bin

Path : %HADOOP_HOME%\sbin

C:\Users\dell>hadoop version //verify the version

Hadoop 3.2.0

Source code repository https://github.com/apache/hadoop.git -r e97acb3bd8f3befd27418996fa5d4b50bf2e17bf

Compiled by sunilg on 2019-01-08T06:08Z

Compiled with protoc 2.5.0

From source with checksum d3f0795ed0d9dc378e2c785d3668f39

This command was run using /D:/hadoop-3.2.0/share/hadoop/common/hadoop-common-3.2.0.jar

The hadoop-3.2.0\etc\hadoop directory contains four configuration files:

core-site.xml: global (core) settings

hdfs-site.xml: HDFS settings

mapred-site.xml: MapReduce settings

yarn-site.xml: YARN settings

Settings in core-site.xml:

fs.defaultFS : hdfs://localhost:9000 (the default file system URI, i.e. the NameNode address that clients connect to)
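On disk, each key/value pair in these files is a `<property>` element inside `<configuration>`. A minimal sketch that writes core-site.xml with a bash here-document, for example on the Linux setup below or from a bash shell such as Git Bash on Windows (the same XML can also be pasted into D:\hadoop-3.2.0\etc\hadoop\core-site.xml by hand). The `./etc/hadoop` fallback used when `HADOOP_CONF_DIR` is unset is an assumption, and the script overwrites any existing core-site.xml.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hadoop config directory; ./etc/hadoop is an assumed fallback when HADOOP_CONF_DIR is unset
conf_dir="${HADOOP_CONF_DIR:-./etc/hadoop}"
mkdir -p "$conf_dir"

# Overwrite core-site.xml with the single property used in this guide
cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Default file system: the NameNode RPC endpoint -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF

echo "wrote $conf_dir/core-site.xml"
```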

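Settings in hdfs-site.xml:

dfs.replication : 1 (one copy of each block, since there is only one DataNode; the default is 3)

dfs.http.address : 0.0.0.0:9870 (NameNode web UI; in Hadoop 3 this is the deprecated name of dfs.namenode.http-address)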

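Then create a data directory under the Hadoop installation directory (D:\hadoop-3.2.0\data), create two subdirectories in it, namenode and datanode, and add both paths to this configuration file: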

dfs.namenode.name.dir : /D:/hadoop-3.2.0/data/namenode

dfs.datanode.data.dir : /D:/hadoop-3.2.0/data/datanode
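The four hdfs-site.xml settings can be written the same way. In this sketch `DATA_ROOT` is a hypothetical variable whose default reproduces the Windows paths above, and the web UI key is spelled dfs.namenode.http-address, the Hadoop 3 name for dfs.http.address. As before, `./etc/hadoop` is an assumed fallback for `HADOOP_CONF_DIR`.

```bash
#!/usr/bin/env bash
set -euo pipefail

conf_dir="${HADOOP_CONF_DIR:-./etc/hadoop}"
# Hypothetical knob: parent of the namenode/datanode directories (Windows default from this guide)
data_root="${DATA_ROOT:-/D:/hadoop-3.2.0/data}"
mkdir -p "$conf_dir"

# Unquoted EOF so that ${data_root} is expanded while the file is written
cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Single node: keep one copy of each block -->
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <!-- NameNode web UI (dfs.http.address is the deprecated name of this key) -->
  <property>
    <name>dfs.namenode.http-address</name>
    <value>0.0.0.0:9870</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>${data_root}/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>${data_root}/datanode</value>
  </property>
</configuration>
EOF

echo "wrote $conf_dir/hdfs-site.xml"
```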

Settings in mapred-site.xml:

mapreduce.framework.name : yarn
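A matching sketch for mapred-site.xml. mapreduce.framework.name defaults to local, so setting it to yarn is what sends MapReduce jobs to the ResourceManager. The `./etc/hadoop` fallback is again an assumption.

```bash
#!/usr/bin/env bash
set -euo pipefail

conf_dir="${HADOOP_CONF_DIR:-./etc/hadoop}"
mkdir -p "$conf_dir"

# Run MapReduce jobs on YARN instead of the default local runner
cat > "$conf_dir/mapred-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF

echo "wrote $conf_dir/mapred-site.xml"
```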

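Settings in yarn-site.xml:

yarn.nodemanager.aux-services : mapreduce_shuffle

(shuffle service that needs to be set for Map Reduce to run; the handler class, configured by yarn.nodemanager.aux-services.mapreduce_shuffle.class, defaults to org.apache.hadoop.mapred.ShuffleHandler)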
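A sketch of the corresponding yarn-site.xml. It sets yarn.nodemanager.aux-services to mapreduce_shuffle and spells out the handler class as org.apache.hadoop.mapred.ShuffleHandler, which should match Hadoop's default but is written explicitly here for clarity; the `./etc/hadoop` fallback is an assumption.

```bash
#!/usr/bin/env bash
set -euo pipefail

conf_dir="${HADOOP_CONF_DIR:-./etc/hadoop}"
mkdir -p "$conf_dir"

cat > "$conf_dir/yarn-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Auxiliary service the NodeManager starts so MapReduce can shuffle map output -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
    <description>shuffle service that needs to be set for Map Reduce to run</description>
  </property>
  <!-- Class implementing that service (written out explicitly) -->
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
</configuration>
EOF

echo "wrote $conf_dir/yarn-site.xml"
```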

Copy all files from D:\hadoop-3.2.0\share\hadoop\yarn\timelineservice into D:\hadoop-3.2.0\share\hadoop\yarn; without this, the ResourceManager may fail to start.

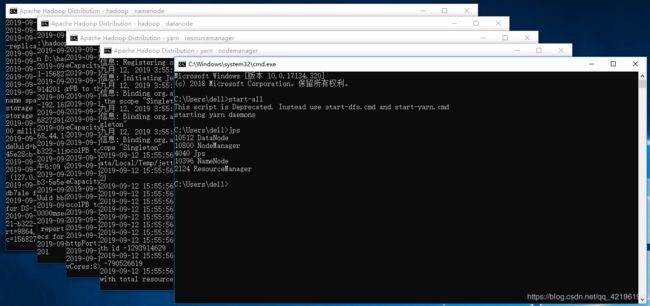

C:\Users\dell>hadoop namenode -format //format the NameNode (hdfs namenode -format is the non-deprecated form in Hadoop 3)

C:\Users\dell>start-all //start all services

This script is Deprecated. Instead use start-dfs.cmd and start-yarn.cmd

starting yarn daemons

C:\Users\dell>jps //check which services are running

8672 Jps

10196 DataNode

6436 ResourceManager

7556 NameNode

9400 NodeManager

C:\Users\dell>stop-all //stop all services

This script is Deprecated. Instead use stop-dfs.cmd and stop-yarn.cmd

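SUCCESS: Sent termination signal to the process with PID 8148.

SUCCESS: Sent termination signal to the process with PID 6828.

stopping yarn daemons

SUCCESS: Sent termination signal to the process with PID 8276.

SUCCESS: Sent termination signal to the process with PID 7544.

INFO: No tasks running with the specified criteria.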

http://127.0.0.1:8088/cluster view the cluster status (YARN ResourceManager web UI)

http://localhost:9870 view the HDFS status (NameNode web UI)【50070 in Hadoop 2.x, 9870 in 3.x】

Testing HDFS file operations

C:\Users\dell>hadoop fs -rmdir hdfs://localhost:9000/test/

C:\Users\dell>hadoop fs -mkdir hdfs://localhost:9000/test/

C:\Users\dell>hadoop fs -mkdir hdfs://localhost:9000/test/input1

C:\Users\dell>hadoop fs -mkdir hdfs://localhost:9000/test/input2

C:\Users\dell>hadoop fs -put D:\hadoop.txt hdfs://localhost:9000/test/input1

C:\Users\dell>hadoop fs -ls hdfs://localhost:9000/test/input1

Found 1 items

-rw-r--r-- 1 dell supergroup 6 2019-09-12 16:25 hdfs://localhost:9000/test/input1/hadoop.txt

C:\Users\dell>hadoop fs -ls hdfs://localhost:9000/test/

Found 2 items

drwxr-xr-x - dell supergroup 0 2019-09-12 16:25 hdfs://localhost:9000/test/input1

drwxr-xr-x - dell supergroup 0 2019-09-12 16:25 hdfs://localhost:9000/test/input2

C:\Users\dell>hadoop fs -rm -r -f hdfs://localhost:9000/test/input1

Deleted hdfs://localhost:9000/test/input1

C:\Users\dell>hadoop fs -ls hdfs://localhost:9000/test/

Found 1 items

drwxr-xr-x - dell supergroup 0 2019-09-12 16:25 hdfs://localhost:9000/test/input2

Setting up Hadoop on Linux

Environment:

CentOS 7

root privileges

Installation packages:

hadoop-3.2.0.tar.gz

jdk-8u221-linux-x64.tar.gz

【Java environment setup】

tar -zxvf jdk-8u221-linux-x64.tar.gz

vi /etc/profile

>{

export JAVA_HOME=/root/jdk1.8.0_221

export JRE_HOME=/root/jdk1.8.0_221/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

>}

source /etc/profile #reload the profile

【Installing Hadoop】

sudo tar -zxvf hadoop-3.2.0.tar.gz

cd hadoop-3.2.0

mkdir hdfs

mkdir hdfs/tmp

mkdir hdfs/name

mkdir hdfs/data

cd etc/hadoop/

sudo vi /etc/profile

>{

export HADOOP_HOME=/root/hadoop-3.2.0

export PATH="$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH"

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

>}

source /etc/profile

sudo vi hadoop-env.sh

>{

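export JAVA_HOME=/root/jdk1.8.0_221 #uncomment this line and use the absolute JDK path; $JAVA_HOME may be empty in the ssh sessions the start scripts use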

>}
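A `JAVA_HOME=$JAVA_HOME` line only works if JAVA_HOME is already set in the shell that launches each daemon; start-dfs.sh starts daemons over ssh, and such non-interactive sessions may not load /etc/profile. A minimal sketch that instead writes the already-expanded absolute path into hadoop-env.sh, after removing any earlier uncommented JAVA_HOME assignment. The fallback /root/jdk1.8.0_221 comes from the /etc/profile step above; the `./etc/hadoop` fallback for `HADOOP_CONF_DIR` is an assumption.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Config directory; ./etc/hadoop is an assumed fallback when HADOOP_CONF_DIR is unset
env_file="${HADOOP_CONF_DIR:-./etc/hadoop}/hadoop-env.sh"
# JDK path from the /etc/profile step; an already-exported JAVA_HOME takes precedence
jdk="${JAVA_HOME:-/root/jdk1.8.0_221}"

mkdir -p "$(dirname "$env_file")"
touch "$env_file"

# Drop earlier uncommented JAVA_HOME assignments; commented "# export JAVA_HOME=" lines stay
tmp="$(mktemp)"
grep -Ev '^[[:space:]]*(export[[:space:]]+)?JAVA_HOME=' "$env_file" > "$tmp" || true
cat "$tmp" > "$env_file"   # rewrite in place to keep the file's permissions
rm -f "$tmp"

# Append one line with the path already expanded, so daemons do not rely on the caller's env
printf 'export JAVA_HOME=%s\n' "$jdk" >> "$env_file"
echo "JAVA_HOME in $env_file set to $jdk"
```

Running it again replaces the line rather than adding a second one.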

The four configuration files are the same as in the Windows setup above; only the paths need to change.

Format the NameNode (initialize Hadoop):
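A sketch of the Linux variants of core-site.xml and hdfs-site.xml (mapred-site.xml and yarn-site.xml need no path changes). Mapping the hdfs/tmp, hdfs/name and hdfs/data directories created earlier onto hadoop.tmp.dir, dfs.namenode.name.dir and dfs.datanode.data.dir is an assumption based on the directory names; the install path comes from HADOOP_HOME above, and `./etc/hadoop` is an assumed fallback for `HADOOP_CONF_DIR`. The web UI address is left out because 0.0.0.0:9870 is already the Hadoop 3 default.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Install path from HADOOP_HOME above; config dir falls back to ./etc/hadoop (assumption)
hadoop_home="${HADOOP_HOME:-/root/hadoop-3.2.0}"
conf_dir="${HADOOP_CONF_DIR:-./etc/hadoop}"
mkdir -p "$conf_dir"

# core-site.xml: same fs.defaultFS as on Windows, plus hadoop.tmp.dir -> hdfs/tmp (assumed use)
cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>${hadoop_home}/hdfs/tmp</value>
  </property>
</configuration>
EOF

# hdfs-site.xml: the Windows D:/ paths replaced by hdfs/name and hdfs/data
cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>${hadoop_home}/hdfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>${hadoop_home}/hdfs/data</value>
  </property>
</configuration>
EOF

echo "wrote core-site.xml and hdfs-site.xml to $conf_dir"
```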

hadoop namenode -format

start-all.sh

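When start-all.sh is run as root, it refuses to start the daemons and reports that variables such as HDFS_NAMENODE_USER are not defined. To fix this, add the following lines at the top of these scripts, right after the #!/usr/bin/env bash line: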

>{

vi /root/hadoop-3.2.0/sbin/start-dfs.sh

vi /root/hadoop-3.2.0/sbin/stop-dfs.sh

#!/usr/bin/env bash

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

vi /root/hadoop-3.2.0/sbin/start-yarn.sh

vi /root/hadoop-3.2.0/sbin/stop-yarn.sh

#!/usr/bin/env bash

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

>}
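Instead of editing four scripts under sbin (changes that are lost when Hadoop is re-extracted), the same user variables can be exported from etc/hadoop/hadoop-env.sh, which the Hadoop 3 launcher scripts read at startup. A sketch under that assumption; it omits HADOOP_SECURE_DN_USER, on the further assumption that a non-Kerberos single-node setup only needs the *_USER variables to pass the root check. The `./etc/hadoop` fallback for `HADOOP_CONF_DIR` is also an assumption.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Config directory; ./etc/hadoop is an assumed fallback when HADOOP_CONF_DIR is unset
env_file="${HADOOP_CONF_DIR:-./etc/hadoop}/hadoop-env.sh"
mkdir -p "$(dirname "$env_file")"
touch "$env_file"

# Daemon users checked by the start/stop scripts; root matches the sbin edits above
for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER \
           YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
  # Append only when no export line exists yet, so reruns add no duplicates
  if ! grep -q "^export ${var}=" "$env_file"; then
    printf 'export %s=root\n' "$var" >> "$env_file"
  fi
done

# Show the resulting user settings
grep -E '^export (HDFS|YARN)_[A-Z]+_USER=' "$env_file"
```

Running daemons as root is only reasonable for a throwaway test machine like this one.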

[root@localhost hadoop]# jps

4128 DataNode

6996 NodeManager

6295 NameNode

6855 ResourceManager

7309 Jps

6606 SecondaryNameNode

systemctl stop firewalld.service #stop the firewall so the web UIs on ports 8088 and 9870 can be reached