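Kubernetes: Controllers

I. Controller Overview

- Controllers, also called workloads, come in the following types; the controller type determines the kind of resource that is created

1. Deployment

2. StatefulSet

3. DaemonSet

4. Job

5. CronJob

- Relationship between Pods and controllers

Controller: an object that manages and runs containers on the cluster; it is associated with its Pods through a label selector

Pod: operated through its controller, which handles application operations such as scaling and upgrades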

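II. Deployment

- Use a Deployment to deploy stateless applications. It manages Pods and ReplicaSets (the replica count) and supports online deployment, replica settings, rolling upgrades, and rollbacks. It provides declarative updates: for example, changing only the image triggers an update that replaces Pods gradually, much like a canary (gray) release.

- Typical use case: web service deployment

Stateless means:

The Deployment treats all Pods as identical

No ordering requirements need to be considered

It does not matter which node a Pod runs on

Pods can be scaled out and in freely

Creating an example

- Create the Pod resources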

[root@master demo]# cat nginx-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3    ## set the replica count to 3; rollouts and rollbacks work by scaling the old and new ReplicaSets up and down
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.15.4
        ports:
        - containerPort: 80

[root@master demo]# kubectl create -f nginx-deployment.yaml

deployment.apps/nginx-deployment created

[root@master demo]#

- View the Pods, the Deployment controller, and the ReplicaSet

## rs is short for replicaset

[root@master demo]# kubectl get pods,deploy,rs

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-d55b94fd-54cpg 1/1 Running 0 6m57s

pod/nginx-deployment-d55b94fd-fpq25 1/1 Running 0 6m57s

pod/nginx-deployment-d55b94fd-hnxdc 1/1 Running 0 6m57s

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.extensions/nginx-deployment 3 3 3 3 6m57s

NAME DESIRED CURRENT READY AGE

replicaset.extensions/nginx-deployment-d55b94fd 3 3 3 6m57s

[root@master demo]#

- View the controller and print it in YAML format

[root@master demo]# kubectl get deployment.extensions/nginx-deployment -o yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  creationTimestamp: 2020-05-19T14:06:14Z
  generation: 1
  labels:
    app: nginx
  name: nginx-deployment
  namespace: default
  resourceVersion: "416373"
  selfLink: /apis/extensions/v1beta1/namespaces/default/deployments/nginx-deployment
  uid: e6835a1d-99d9-11ea-bb1a-000c29ce5f24
spec:
  progressDeadlineSeconds: 600
  replicas: 3    ## the replica count sets the desired number of Pods
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nginx
  strategy:    ## rolling update strategy
    rollingUpdate:
      maxSurge: 25%    ## up to 25% extra Pods may be created during an update, so at most 125% of the desired count exists at once
      maxUnavailable: 25%    ## at most 25% of the desired Pods may be unavailable during an update
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:1.15.4
        imagePullPolicy: IfNotPresent    ## image pull policy (the default for a tagged image): skip the pull if the image is already on the node
        name: nginx
        ports:
        - containerPort: 80
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 3
  conditions:
  - lastTransitionTime: 2020-05-19T14:06:17Z
    lastUpdateTime: 2020-05-19T14:06:17Z
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: 2020-05-19T14:06:14Z
    lastUpdateTime: 2020-05-19T14:06:17Z
    message: ReplicaSet "nginx-deployment-d55b94fd" has successfully progressed.
    reason: NewReplicaSetAvailable
    status: "True"
    type: Progressing
  observedGeneration: 1
  readyReplicas: 3
  replicas: 3
  updatedReplicas: 3
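The percentage limits in the rolling update strategy are converted into whole Pod counts. The following is a minimal sketch of that arithmetic for this Deployment, assuming the documented rounding rules (maxSurge rounds up, maxUnavailable rounds down); the variable names are illustrative only.

```shell
#!/bin/sh
# Sketch: turn the percentage-based rolling update limits into Pod counts.
replicas=3
max_surge_pct=25
max_unavailable_pct=25

# maxSurge is rounded up; maxUnavailable is rounded down.
surge=$(( (replicas * max_surge_pct + 99) / 100 ))
unavailable=$(( replicas * max_unavailable_pct / 100 ))

max_total=$(( replicas + surge ))
min_available=$(( replicas - unavailable ))

echo "extra Pods allowed during an update: $surge"
echo "Pods that may be unavailable:        $unavailable"
echo "at most $max_total Pods exist, at least $min_available stay available"
```

With 3 replicas, 25% is less than one Pod, so the update can add one Pod at a time but may not take any existing Pod down before its replacement is ready.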

[root@master demo]#

- View the rollout history

## A single row means that no update or rollback has been performed yet

[root@master demo]# kubectl rollout history deploy/nginx-deployment

deployment.extensions/nginx-deployment

REVISION CHANGE-CAUSE

1 <none>
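To see more than one revision in the history, the Deployment has to be updated at least once. The sketch below shows one possible sequence: change the image, watch the rollout, list the history, and roll back to revision 1. The target tag nginx:1.16.1 and the `run` helper are assumptions added for illustration; the helper only prints the commands unless APPLY=1 is set, so the script can be read or run without cluster access.

```shell
#!/bin/sh
# Sketch: trigger a rolling update, then roll back to revision 1.
# Commands are only printed unless APPLY=1 is set in the environment.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "+ $*"
  fi
}

deploy=deployment/nginx-deployment

run kubectl set image "$deploy" nginx=nginx:1.16.1   # new image tag is an assumption
run kubectl rollout status "$deploy"                 # wait for the update to finish
run kubectl rollout history "$deploy"                # a second revision should now be listed
run kubectl rollout undo "$deploy" --to-revision=1   # return to the original image
```

Running it with `APPLY=1 sh rollout-demo.sh` (the file name is hypothetical) would execute the commands against the current cluster.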

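[root@master demo]#

III. StatefulSet

- Deploys stateful applications.

- Gives each Pod an independent lifecycle while preserving Pod startup order and uniqueness.

- Provides stable, unique network identifiers and persistent storage (for example, an etcd configuration file lists member addresses, so the cluster stops working if a node address changes).

- Provides ordered, graceful deployment and scaling, deletion and termination (for example, in MySQL primary/replica replication the primary starts first, then the replicas), as well as ordered rolling updates.

- Typical use case: databases

Stateful means:

Instances differ from one another: each has its own identity and its own metadata, as in etcd or ZooKeeper

Instances are not interchangeable peers, and the application depends on external storage

Differences between a regular Service and a headless Service

- Service: an access policy for a group of Pods. It provides a cluster IP for communication inside the cluster, plus load balancing and service discovery

- Headless Service: needs no cluster IP; it binds directly to the IPs of the individual Pods

StatefulSet controller example

- Create the Service resource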

[root@master demo]# cat nginx-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  labels:
    app: nginx
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
  selector:
    app: nginx

[root@master demo]# kubectl create -f nginx-service.yaml

service/nginx-service created

[root@master demo]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 22d

nginx-service NodePort 10.0.0.178 <none> 80:40715/TCP 8s

[root@master demo]#

- Create the headless Service resource

[root@master demo]# cat headless.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx

[root@master demo]# kubectl apply -f headless.yaml

service/nginx created

[root@master demo]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 22d

nginx ClusterIP None <none> 80/TCP 8s

nginx-service NodePort 10.0.0.178 <none> 80:40715/TCP 16m

[root@master demo]#

- Create the DNS resolution resources

[root@master demo]# cat coredns.yaml

# Warning: This is a file generated from the base underscore template file: coredns.yaml.base
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            upstream
            fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        proxy . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      serviceAccountName: coredns
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      containers:
      - name: coredns
        image: coredns/coredns:1.2.2
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.0.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP

[root@master demo]# kubectl create -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.extensions/coredns created

service/kube-dns created

[root@master demo]#

- Verify DNS resolution

## Delete the resources defined by the yaml files in the current directory

[root@master demo]# kubectl delete -f .

...

[root@master demo]# kubectl get pods

No resources found.

[root@master demo]# kubectl create -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.extensions/coredns created

service/kube-dns created

[root@master demo]# kubectl create -f sts.yaml

service/nginx created

statefulset.apps/nginx-statefulset created

[root@master demo]# cat pod3.yaml

apiVersion: v1
kind: Pod
metadata:
  name: dns-test
spec:
  containers:
  - name: busybox
    image: busybox:1.28.4
    args:
    - /bin/sh
    - -c
    - sleep 6000
  restartPolicy: Never

[root@master demo]# kubectl create -f pod3.yaml

pod/dns-test created

[root@master demo]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/dns-test 1/1 Running 0 74s

pod/nginx-statefulset-0 1/1 Running 0 97s

pod/nginx-statefulset-1 1/1 Running 0 75s

pod/nginx-statefulset-2 1/1 Running 0 54s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 22d

service/nginx ClusterIP None <none> 80/TCP 97s

[root@localhost demo]# kubectl exec -it dns-test sh

/ # nslookup nginx-statefulset-0.nginx

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: nginx-statefulset-0.nginx

Address 1: 172.17.42.2 nginx-statefulset-0.nginx.default.svc.cluster.local
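The session above applies sts.yaml, but the file itself is not shown. The following is a minimal sketch of what such a file could contain, not the author's actual manifest. The Service name nginx, the StatefulSet name nginx-statefulset, and the three replicas are taken from the command output; the image, labels, and port are assumptions borrowed from the earlier Deployment and headless Service.

```shell
#!/bin/sh
# Sketch: write a hypothetical sts.yaml with a headless Service and a StatefulSet.
cat > sts.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None          # headless: no cluster IP is allocated
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nginx-statefulset
spec:
  serviceName: nginx       # must name the headless Service above
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.15.4   # assumed image, same as the Deployment example
        ports:
        - containerPort: 80
EOF

# Apply it on a machine with cluster access:
# kubectl create -f sts.yaml
```

The `serviceName` field is what ties the Pods to the headless Service, which is why a name such as nginx-statefulset-0.nginx resolves in the nslookup test.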

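Note: the difference between a Deployment and a StatefulSet is that the Pods created by a StatefulSet have an identity

The three elements of that identity are:

Domain name: nginx-statefulset-0.nginx

Hostname: nginx-statefulset-0

Storage: PVC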
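The domain name follows a fixed pattern: Pod name, headless Service name, namespace, then the cluster domain. As a small sketch, the loop below prints the fully qualified names for the three replicas, assuming the default namespace and the cluster.local domain seen in the nslookup output; the variable names are illustrative.

```shell
#!/bin/sh
# Sketch: build the stable DNS names of the StatefulSet Pods.
sts=nginx-statefulset
svc=nginx
ns=default
replicas=3

i=0
names=""
while [ "$i" -lt "$replicas" ]; do
  # Pattern: <statefulset>-<ordinal>.<service>.<namespace>.svc.cluster.local
  fqdn="$sts-$i.$svc.$ns.svc.cluster.local"
  echo "$fqdn"
  names="$names $fqdn"
  i=$(( i + 1 ))
done
```

Because the ordinal is part of the name, a Pod that is rescheduled keeps the same DNS name even if its IP address changes.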

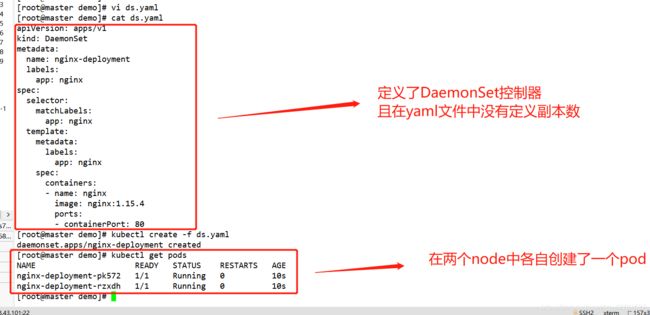

IV. DaemonSet

- Runs one Pod on every Node; a Node that joins the cluster later automatically gets a Pod as well

- Typical use case: agents

Example:
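The source does not include the DaemonSet manifest, so the following is only a sketch of what a minimal one might look like. The name nginx-daemonset and the file name daemonset.yaml are hypothetical, and the image is borrowed from the Deployment example. Note that a DaemonSet has no replicas field: the number of Pods follows the number of eligible Nodes.

```shell
#!/bin/sh
# Sketch: write a hypothetical daemonset.yaml that runs one nginx Pod per Node.
cat > daemonset.yaml <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-daemonset     # hypothetical name
  labels:
    app: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.15.4   # assumed image
        ports:
        - containerPort: 80
EOF

# Apply and inspect on a machine with cluster access:
# kubectl create -f daemonset.yaml
# kubectl get ds,pods -o wide
```

With `-o wide`, the Pod list shows the Node each Pod landed on, which makes it easy to confirm there is one Pod per Node.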

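V. Job and CronJob

- Job: a regular task that runs once to completion. Typical use cases: offline data processing, video decoding, and similar workloads

- CronJob: a periodic task that runs on a schedule. Typical use cases: notifications, backups, and the like

A Job example follows

- Create the example resource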

[root@master demo]# cat job.yaml

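apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  template:
    spec:
      containers:
      - name: pi
        image: perl
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
      restartPolicy: Never
  backoffLimit: 4    ## retry at most 4 times (the default is 6); with restartPolicy: Never a failed Pod is not restarted in place, the Job creates a new Pod instead, so cap the retries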

[root@master demo]# kubectl create -f job.yaml

job.batch/pi created

[root@master demo]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-pk572 1/1 Running 0 12m

nginx-deployment-rzxdh 1/1 Running 0 12m

pi-qpn8l 0/1 Completed 0 59s

[root@master demo]#

- Print the logs

[root@master demo]# kubectl logs pi-qpn8l

3.141592653589793238462643383279502884197169399375105820974944592307816406286208998628034825342117067982148086513282306647093844609550582231725359408128481117450284102701938521105559644622948954930381964428810975665933446128475648233786783165271201909145648566923460348610454326648213393607260249141273724587006606315588174881520920962829254091715364367892590360011330530548820466521384146951941511609433057270365759591953092186117381932611793105118548074462379962749567351885752724891227938183011949129833673362440656643086021394946395224737190702179860943702770539217176293176752384674818467669405132000568127145263560827785771342757789609173637178721468440901224953430146549585371050792279689258923542019956112129021960864034418159813629774771309960518707211349999998372978049951059731732816096318595024459455346908302642522308253344685035261931188171010003137838752886587533208381420617177669147303598253490428755468731159562863882353787593751957781857780532171226806613001927876611195909216420198938095257201065485863278865936153381827968230301952035301852968995773622599413891249721775283479131515574857242454150695950829533116861727855889075098381754637464939319255060400927701671139009848824012858361603563707660104710181942955596198946767837449448255379774726847104047534646208046684259069491293313677028989152104752162056966024058038150193511253382430035587640247496473263914199272604269922796782354781636009341721641219924586315030286182974555706749838505494588586926995690927210797509302955321165344987202755960236480665499119881834797753566369807426542527862551818417574672890977772793800081647060016145249192173217214772350141441973568548161361157352552133475741849468438523323907394143334547762416862518983569485562099219222184272550254256887671790494601653466804988627232791786085784383827967976681454100953883786360950680064225125205117392984896084128488626945604241965285022210661186306744278622039194945047123713786960956364371917287467764657573962413890865832645995813390478027590
1

[root@master demo]#

A CronJob example follows

## Print a hello message to the log every minute

[root@master demo]# cat cronjob.yaml

apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: hello
spec:
  schedule: "*/1 * * * *"
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            args:
            - /bin/sh
            - -c
            - date; echo Hello from the Kubernetes cluster
          restartPolicy: OnFailure

[root@master demo]# kubectl create -f cronjob.yaml

cronjob.batch/hello created

[root@master demo]# kubectl get cronjob

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

hello */1 * * * * False 0 <none> 12s

[root@master demo]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hello-1589904720-k4ssh 0/1 ContainerCreating 0 9s

[root@master demo]# kubectl logs hello-1589904720-k4ssh

Tue May 19 16:12:11 UTC 2020

Hello from the Kubernetes cluster

[root@master demo]# kubectl get cronjob

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

hello */1 * * * * False 0 42s 54s

[root@master demo]#

Note: a CronJob keeps creating Pod resources, so delete it once testing is done
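The schedule string in cronjob.yaml uses the standard five-field cron format. As a small sketch, the script below splits it into its fields so that each position is labeled; the variable names are illustrative. The cleanup command is left as a comment because it needs cluster access.

```shell
#!/bin/sh
# Sketch: label the five fields of the CronJob schedule.
schedule='*/1 * * * *'

set -f            # disable globbing so "*" is not expanded to file names
set -- $schedule  # split on whitespace into $1..$5
set +f

minute=$1
hour=$2
day_of_month=$3
month=$4
day_of_week=$5

echo "minute=$minute hour=$hour day-of-month=$day_of_month month=$month day-of-week=$day_of_week"

# Cleanup once testing is finished (requires cluster access):
# kubectl delete cronjob hello
```

Here `*/1` in the minute field means every minute, and `*` in the remaining fields means every hour, day, month, and weekday.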