Installing ELK on CentOS 7

Author: Cyrus

Date: 2020/4/21

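I. Introduction

ELK is an acronym for three open-source projects: Elasticsearch, Logstash, and Kibana. Together they form a practical, easy-to-use monitoring stack that can collect system logs as well as Apache, Nginx, MySQL, and many other kinds of logs. Many companies use it to build a centralized log analysis platform with visualization.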

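Elasticsearch: a distributed, RESTful search and analytics engine that also provides centralized storage. It indexes, searches, and analyzes the log data collected by Logstash.

Logstash: a log processing tool that collects, transforms, and parses logs, then pushes the parsed events to Elasticsearch for indexing.

Kibana: the web front end. It turns the data stored in Elasticsearch into charts and dashboards, giving users data visualization.

Filebeat: a lightweight log shipper that reads logs from files and pushes them to Logstash for processing.

Winlogbeat: a lightweight shipper for Windows event logs. It collects Windows event logs and pushes them to Logstash for processing.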

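II. Installation Environment

Because this is a test environment, I installed Elasticsearch, Logstash, and Kibana on a single machine. For production, deploy them on separate hosts and consider running Elasticsearch as a cluster.

III. Preparation

Disable SELinux and turn off the firewall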

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i 's/SELINUXTYPE=targeted/#&/' /etc/selinux/config

setenforce 0 # switches to permissive mode until the next reboot; the config file edits above make the change permanent

systemctl stop firewalld.service

systemctl disable firewalld.service

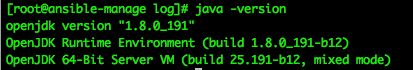
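To double-check the preparation step, the SELinux mode recorded in the config file can be read back. The snippet below is a minimal sketch: the function name `selinux_config_mode` is made up for illustration, and it only parses the file without changing anything.

```shell
# Print the value of the uncommented SELINUX= line from a config file.
# selinux_config_mode is a hypothetical helper name, not part of any tool.
selinux_config_mode() {
    local config_file="${1:-/etc/selinux/config}"
    # "^SELINUX=" does not match "SELINUXTYPE=" or commented-out lines.
    sed -n 's/^SELINUX=\(.*\)$/\1/p' "$config_file"
}

# Example (on the target host):
#   selinux_config_mode   # should print "disabled" after the sed edit
#   getenforce            # runtime mode: Permissive after setenforce 0,
#                         # Disabled once the host has been rebooted
```

The runtime mode and the configured mode differ until the machine is rebooted, which is why both checks are shown.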

IV. Install the JDK, because ELK depends on a Java runtime

yum -y install java-1.8.0-openjdk

Check the Java version

java -version
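If the version check needs to be scripted, the version string can be pulled out of the command output. This is a small sketch with a hypothetical helper name, `java_version_string`; it assumes the usual first-line format `openjdk version "..."`.

```shell
# Extract the quoted version string from the first line of `java -version` output.
# java_version_string is a hypothetical helper name.
java_version_string() {
    sed -n '1s/.*version "\([^"]*\)".*/\1/p'
}

# Example: `java -version` writes to stderr, so redirect it into the pipe.
#   java -version 2>&1 | java_version_string
```

A result that starts with `1.8.` indicates Java 8, which is what the `java-1.8.0-openjdk` package provides.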

V. Install Elasticsearch, Logstash, and Kibana

Download and install the public signing key: rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

Create the yum repo file: vi /etc/yum.repos.d/elasticsearch.repo

Add the following content:

[elasticsearch-6.x]

name=Elasticsearch repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md
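For unattended setups, the same repo file can be written without opening an editor. The following is a sketch only: `write_elastic_repo` is a hypothetical helper name, and the file content is copied from the listing above.

```shell
# Write the repo definition to a file without opening an editor.
# write_elastic_repo is a hypothetical helper; the target path is an optional argument.
write_elastic_repo() {
    local repo_file="${1:-/etc/yum.repos.d/elasticsearch.repo}"
    # Quoted heredoc delimiter: the content is written literally, with no expansion.
    cat > "$repo_file" <<'EOF'
[elasticsearch-6.x]
name=Elasticsearch repository for 6.x packages
baseurl=https://artifacts.elastic.co/packages/6.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF
}

# Example (as root): write_elastic_repo
```

Called without an argument, the function writes to `/etc/yum.repos.d/elasticsearch.repo`, which requires root privileges.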

1. Install Elasticsearch

yum -y install elasticsearch

Edit the configuration file

vi /etc/elasticsearch/elasticsearch.yml

path.data: /data/elasticsearch/data

path.logs: /data/elasticsearch/logs

network.host: localhost

http.port: 9200
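Because `path.data` and `path.logs` point to custom locations, those directories have to exist and be writable by the service account before the service is started. The sketch below assumes the RPM created the `elasticsearch` user and group; `prepare_es_dirs` is a hypothetical helper name.

```shell
# Create the data and log directories and hand them to the service account.
# prepare_es_dirs is a hypothetical helper; base directory and owner are arguments.
prepare_es_dirs() {
    local base_dir="$1" owner="$2"
    mkdir -p "$base_dir/data" "$base_dir/logs" &&
        chown -R "$owner" "$base_dir"
}

# Example (as root), matching the paths configured above:
#   prepare_es_dirs /data/elasticsearch elasticsearch:elasticsearch
```

If the directories are missing or owned by root, the service is likely to fail at startup, and `systemctl status elasticsearch` or `journalctl -u elasticsearch` should show the reason.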

systemctl start elasticsearch

systemctl enable elasticsearch

Verify the installation

systemctl status elasticsearch

curl -X GET "localhost:9200"

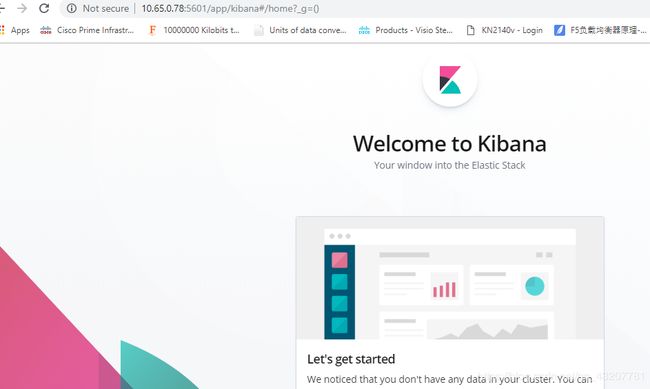
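Elasticsearch can take several seconds to come up after `systemctl start`, so a `curl` issued immediately may be refused. One way to handle that is to poll until the endpoint answers. This is a rough sketch; `wait_for_http` and its default attempt count and delay are illustrative choices, not part of Elasticsearch.

```shell
# Poll a URL until it answers successfully or the attempts run out.
# wait_for_http is a hypothetical helper; URL, attempts and delay are arguments.
wait_for_http() {
    local url="$1" attempts="${2:-30}" delay="${3:-2}" i=1
    while [ "$i" -le "$attempts" ]; do
        # -f: treat HTTP errors as failure, -s: quiet, --max-time: cap each try
        if curl -fs --max-time 5 -o /dev/null "$url"; then
            return 0
        fi
        i=$((i + 1))
        # Sleep only if another attempt will follow.
        [ "$i" -le "$attempts" ] && sleep "$delay"
    done
    return 1
}

# Example:
#   wait_for_http http://localhost:9200 && curl -X GET "localhost:9200"
```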

2. Install Kibana

yum -y install kibana

vi /etc/kibana/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: "http://localhost:9200"

systemctl start kibana

systemctl enable kibana

systemctl status kibana

netstat -nltp | grep 5601

Open http://192.168.47.131:5601 in a browser (substitute your own server's IP address).

3. Install Logstash

yum -y install logstash

Add a configuration file

vi /etc/logstash/conf.d/logstash.conf # add the following content

input {

beats {

port => 5044

codec => plain {

charset => "UTF-8"

}

}

}

output {

elasticsearch {

hosts => "127.0.0.1:9200"

manage_template => false

index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"

document_type => "%{[@metadata][type]}"

}

}

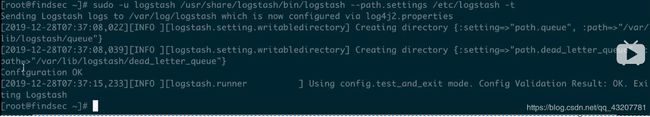
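The `index` setting in the output section determines which Elasticsearch index each event lands in: `%{[@metadata][beat]}` is replaced by the name of the sending beat, and `%{+YYYY.MM.dd}` by the date of the event's `@timestamp` (in UTC). The sketch below only mimics that expansion to show the resulting name; `daily_index_name` is a hypothetical helper, and Logstash performs the real expansion per event.

```shell
# Build the daily index name the output pattern expands to for a given beat and date.
# daily_index_name is a hypothetical helper; the date defaults to today in UTC.
daily_index_name() {
    local beat="$1" day="${2:-$(date -u +%Y.%m.%d)}"
    printf '%s-%s\n' "$beat" "$day"
}

# Example:
#   daily_index_name filebeat 2020.04.21   # prints filebeat-2020.04.21
```

This is why the verification query at the end of the guide searches `filebeat-*`: one index is created per day.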

Test that the syntax is correct

sudo -u logstash /usr/share/logstash/bin/logstash --path.settings /etc/logstash -t

The output should include: Configuration OK

systemctl start logstash

systemctl enable logstash

systemctl status logstash
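An active service does not necessarily mean the beats input is already accepting connections, since Logstash may need some time after startup before port 5044 opens. A quick check is to look for a listener on that port. The helper below is a sketch with a made-up name, `has_listener`; it reads `ss -nlt` (or `netstat -nlt`) output from stdin rather than calling either tool itself.

```shell
# Succeed if the `ss -nlt` or `netstat -nlt` output on stdin shows a listener on the port.
# has_listener is a hypothetical helper name.
has_listener() {
    local port="$1"
    # The local address column ends in ":<port>" followed by whitespace.
    grep -Eq ":${port}[[:space:]]"
}

# Example:
#   ss -nlt | has_listener 5044 && echo "beats input is listening"
```

`ss` comes with the `iproute` package, while `netstat` requires `net-tools`, which a minimal CentOS 7 installation may not include.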

4. Install Filebeat

yum install -y filebeat

vi /etc/filebeat/filebeat.yml

filebeat.inputs:

- type: log

  enabled: true

  paths:

    - /var/log/*.log

    - /var/log/messages

filebeat.config.modules:

  path: ${path.config}/modules.d/*.yml

  reload.enabled: false

setup.template.settings:

  index.number_of_shards: 3

setup.kibana:

  host: "localhost:5601"

#output.elasticsearch:   # commented out because output goes to Logstash

  #hosts: ["localhost:9200"]   # commented out as well

output.logstash:

  hosts: ["localhost:5044"]
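Since YAML is sensitive to indentation, it can help to validate the file before starting the service. Filebeat ships `test config` and `test output` subcommands for this. The wrapper below is a sketch; `check_filebeat` is a hypothetical name and the default config path is the one used in this guide.

```shell
# Run Filebeat's built-in checks if the binary is available.
# check_filebeat is a hypothetical wrapper name; the subcommands are Filebeat's own.
check_filebeat() {
    local config="${1:-/etc/filebeat/filebeat.yml}"
    if ! command -v filebeat >/dev/null 2>&1; then
        echo "filebeat not found in PATH" >&2
        return 127
    fi
    # First validate the configuration file, then try to reach the configured output.
    filebeat test config -c "$config" &&
        filebeat test output -c "$config"
}

# Example (as root): check_filebeat
```

The output test only succeeds once Logstash is listening on port 5044.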

filebeat modules enable system

filebeat modules list

cat /etc/filebeat/modules.d/system.yml

Load the index template into Elasticsearch

sudo filebeat setup --template -E output.logstash.enabled=false -E 'output.elasticsearch.hosts=["localhost:9200"]'

The output should show: Loaded index template

Then load the Kibana dashboards so that the data can be displayed:

filebeat setup -e -E output.logstash.enabled=false -E 'output.elasticsearch.hosts=["localhost:9200"]' -E setup.kibana.host=localhost:5601

systemctl start filebeat

systemctl enable filebeat

systemctl status filebeat

Verify that data exists

curl -X GET 'http://localhost:9200/filebeat-*/_search?pretty'
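The search response can be long. When only a yes/no answer is needed, the `_count` API returns just the number of matching documents. The helper below is a sketch that extracts that number without requiring `jq`; `extract_count` is a hypothetical name.

```shell
# Pull the numeric "count" field out of a _count API response read from stdin.
# extract_count is a hypothetical helper; it avoids a dependency on jq.
extract_count() {
    sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Example:
#   curl -s 'http://localhost:9200/filebeat-*/_count' | extract_count
```

A count greater than zero means events have travelled the whole path from Filebeat through Logstash into Elasticsearch.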