MooseFS (MFS) distributed file system: high availability with pacemaker + corosync + pcs, disk sharing over iSCSI, and fencing

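I. MFS principles and components

1. Distributed principle: a distributed file system gathers shared folders scattered across many computers into a single shared folder. To reach any of them, a user opens that one folder and sees every shared folder linked into it.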

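2. Components of an MFS file system

1) Master server

A single dedicated host that manages the whole file system and stores the metadata for every file: size, attributes and location, plus full information about non-regular files such as directories, sockets, pipes and device files.

2) Chunk servers

Any number of commodity servers that store the file data and synchronize it among themselves when a file is kept in more than one copy.

3) Metalogger server (metadata backup)

Any number of servers that store the metadata changelogs and periodically download the main metadata file, so that one of them can take over if the master server stops unexpectedly.

4) Clients that access MFS

Any number of hosts that use the mfsmount process to talk to the master server (to receive and modify metadata) and to the chunk servers (to exchange the actual file data).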

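3. How MFS handles a read

The client sends a read request to the master (metadata) server.

The master tells the client where the data is stored (the chunk server's IP address and the chunk number).

The client requests the data from that chunk server.

The chunk server sends the data to the client.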

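4. How MFS handles a write

The client sends a write request to the master server.

The master contacts the chunk servers and has new chunks created only on the servers it selects; once the chunks exist, those chunk servers report success to the master.

The master tells the client which chunks on which chunk server it may write to.

The client writes the data to that chunk server.

That chunk server synchronizes the data with the other chunk servers; once synchronization succeeds, it tells the client that the write is complete.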

II. MFS deployment

| Test environment | RHEL 7.3 |
|---|---|
| master server | 172.25.14.1 (server1) |
| chunk server1 | 172.25.14.2 (server2) |
| chunk server2 | 172.25.14.3 (server3) |
| client | 172.25.254.14 (physical host) |

1. Configure the master (server1)

1) Install the RPM packages

yum install -y \
moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm \
moosefs-cli-3.0.103-1.rhsystemd.x86_64.rpm \
moosefs-cgiserv-3.0.103-1.rhsystemd.x86_64.rpm \
moosefs-cgi-3.0.103-1.rhsystemd.x86_64.rpm

2) Edit the local name resolution (/etc/hosts)

Add the line 172.25.14.1 mfsmaster. It is needed on server1, server2, server3 and the physical host.
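Because the same hosts entry has to be added on four machines, it can help to script the edit so that running it twice does no harm. The following is a minimal sketch, not part of the original procedure: the function name add_host_entry is hypothetical, and the demonstration writes to a scratch file instead of the real /etc/hosts.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless some line already mentions NAME as a word.
# (Hypothetical helper for illustration; it does not update an existing entry.)
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    # -w matches NAME as a whole word, so "mfsmaster2" does not count
    if grep -qw -- "$name" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demonstration on a scratch file rather than the real /etc/hosts
hosts_file=$(mktemp)
add_host_entry "$hosts_file" 172.25.14.1 mfsmaster
add_host_entry "$hosts_file" 172.25.14.1 mfsmaster   # second call is a no-op
cat "$hosts_file"
```

On a real node the call would be add_host_entry /etc/hosts 172.25.14.1 mfsmaster, run as root.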

3) Start the services

systemctl start moosefs-master

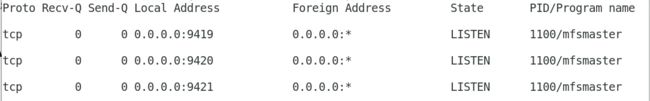

netstat -atlnp ## check the ports; the master listens on 9419, 9420 and 9421

systemctl start moosefs-cgiserv

scp moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm [email protected]:/root ## copy the package to both server2 and server3
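The port check in step 3 can be automated instead of read by eye. The sketch below assumes listener output in the usual netstat -tln or ss -tln layout, where the local address ends in :PORT followed by whitespace. The function name missing_ports and the sample text are illustrative and were not captured from a real host.

```shell
# missing_ports PORT...
# Reads listener output (for example from `netstat -tln` or `ss -tln`) on
# stdin and prints every expected port that does not appear as ":PORT"
# followed by whitespace.
missing_ports() {
    listing=$(cat)
    for port in "$@"; do
        if ! printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]]"; then
            echo "$port"
        fi
    done
}

# Sample input imitating netstat output (illustrative only)
sample='tcp 0 0 0.0.0.0:9419 0.0.0.0:* LISTEN
tcp 0 0 0.0.0.0:9420 0.0.0.0:* LISTEN
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN'

result=$(printf '%s\n' "$sample" | missing_ports 9419 9420 9421)
echo "missing: $result"
```

On the master itself the input would come from the live system, for example netstat -tln | missing_ports 9419 9420 9421. An empty result means all three ports are open.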

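2. Configure chunk server1 (server2)

1) Install the package and create the directory

yum install moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

vim /etc/hosts ## add the mfsmaster entry for local name resolution

mkdir /mnt/chunk1

2) Add a disk and mount it

Add a disk to the virtual machine and mount it; otherwise the chunk server stores its data on the root filesystem once the service starts.

fdisk -l

mkfs.xfs /dev/vda ## format the disk

mount /dev/vda /mnt/chunk1/

chown mfs.mfs /mnt/chunk1/

vim /etc/mfs/mfshdd.cfg ## append the following line at the end of the file

/mnt/chunk1

systemctl start moosefs-chunkserver ## start the service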
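Since a chunk directory that is not a separate mount silently consumes root space, a quick check before starting the service can catch a forgotten mount. This is a sketch under the assumption that df -P is available and that mount points contain no spaces. The function name on_own_filesystem is hypothetical.

```shell
# on_own_filesystem DIR
# Succeeds when DIR is itself a mount point, i.e. when df reports DIR in the
# "Mounted on" column for DIR.
on_own_filesystem() {
    dir=${1%/}                 # drop one trailing slash: /mnt/chunk1/ -> /mnt/chunk1
    [ -n "$dir" ] || dir=/     # "/" became empty above, so restore it
    mounted_on=$(df -P "$dir" | awk 'NR == 2 { print $NF }')
    [ "$mounted_on" = "$dir" ]
}

# Demonstration: "/" is a mount point, a fresh scratch directory is not
scratch=$(mktemp -d)
for dir in / "$scratch"; do
    if on_own_filesystem "$dir"; then
        echo "$dir: dedicated filesystem"
    else
        echo "$dir: shares the filesystem of a parent directory"
    fi
done
```

On server2 the check would be on_own_filesystem /mnt/chunk1, which should succeed after the mount command above.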

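3. Configure chunk server2 (server3)

No extra disk is added to chunk server2, so its chunk data uses the root filesystem once the service starts.

rpm -ivh moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

mkdir /mnt/chunk2

vim /etc/hosts ## add the mfsmaster entry for local name resolution

chown mfs.mfs /mnt/chunk2/

vim /etc/mfs/mfshdd.cfg

/mnt/chunk2

systemctl start moosefs-chunkserver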

4. Configure the client

yum install -y moosefs-client-3.0.103-1.rhsystemd.x86_64.rpm

vim /etc/mfs/mfsmount.cfg

/mnt/mfs ## uncomment this line to set the mount point

mkdir /mnt/mfs

vim /etc/hosts

mfsmount

[root@foundation14 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/rhel_foundation14-root 225399264 79664564 145734700 36% /

devtmpfs 3911020 0 3911020 0% /dev

tmpfs 3924360 488 3923872 1% /dev/shm

tmpfs 3924360 9084 3915276 1% /run

tmpfs 3924360 0 3924360 0% /sys/fs/cgroup

/dev/sda1 1038336 143400 894936 14% /boot

/dev/loop0 3704296 3704296 0 100% /var/www/html/7.3yumpak

tmpfs 784876 24 784852 1% /run/user/1000

mfsmaster:9421 38772736 1627072 37145664 5% /mnt/mfs

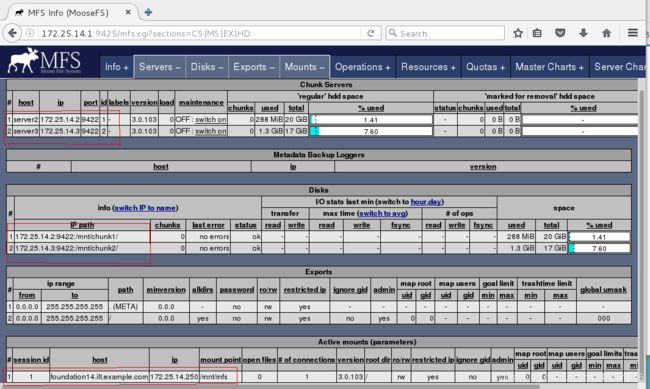

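The client has mounted the file system successfully. The result can also be checked in a browser through the moosefs-cgiserv web interface on the master (port 9425 by default).

The page shows the chunk server information, how much space is used on the mounted disks, and basic information about the client.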

III. MFS high availability

1. Configure the master

Until now, server1 has been the only master.

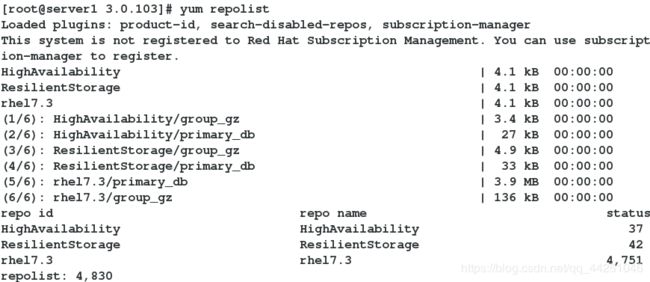

1) Configure the yum repositories

[rhel7.3]

name=rhel7.3

baseurl=http://172.25.14.250/7.3yumpak

gpgcheck=0

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.14.250/7.3yumpak/addons/HighAvailability

gpgcheck=0

[ResilientStorage]

name=ResilientStorage

baseurl=http://172.25.14.250/7.3yumpak/addons/ResilientStorage

gpgcheck=0

2) Install the software

yum install -y pacemaker corosync pcs

3) Set up passwordless SSH

ssh-keygen

ssh-copy-id server4

systemctl start pcsd

systemctl enable pcsd

passwd hacluster ## the password must be the same on the master and the backup master

2. Configure the backup master

[root@server4 ~]# ls

moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm

[root@server4 ~]# rpm -ivh moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm

1) Configure the same yum repositories as on server1 (master)

[rhel7.3]

name=rhel7.3

baseurl=http://172.25.14.250/7.3yumpak

gpgcheck=0

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.14.250/7.3yumpak/addons/HighAvailability

gpgcheck=0

[ResilientStorage]

name=ResilientStorage

baseurl=http://172.25.14.250/7.3yumpak/addons/ResilientStorage

gpgcheck=0

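2) vim /usr/lib/systemd/system/moosefs-master.service ## start mfsmaster with -a so that it restores its metadata from the changelogs automatically after an unclean stop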

[Unit]

Description=MooseFS Master server

Wants=network-online.target

After=network.target network-online.target

[Service]

Type=forking

ExecStart=/usr/sbin/mfsmaster -a

ExecStop=/usr/sbin/mfsmaster stop

ExecReload=/usr/sbin/mfsmaster reload

PIDFile=/var/lib/mfs/.mfsmaster.lock

TimeoutStopSec=1800

TimeoutStartSec=1800

Restart=no

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

3) Install the software

yum install -y pacemaker corosync pcs

systemctl start pcsd

systemctl enable pcsd

passwd hacluster ## set the password; it must match the one on the master

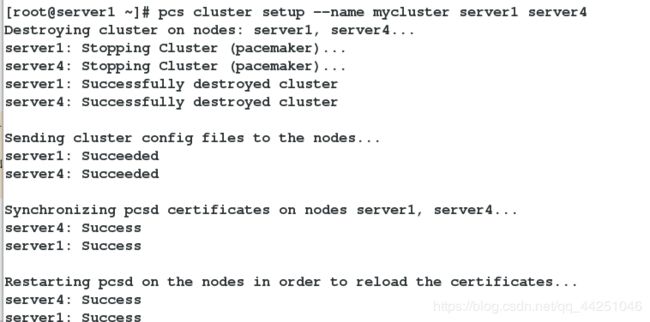

3. Create the active/standby cluster

pcs cluster auth server1 server4

pcs cluster setup --name mycluster server1 server4

pcs cluster start --all # start the cluster services on all nodes

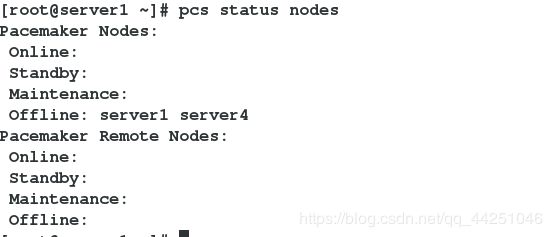

pcs status nodes # check the node status again

corosync-cfgtool -s # verify that corosync is working

pcs property set stonith-enabled=false

crm_verify -L -V

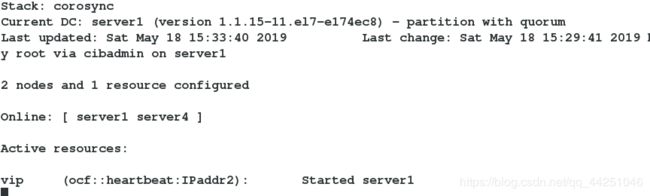

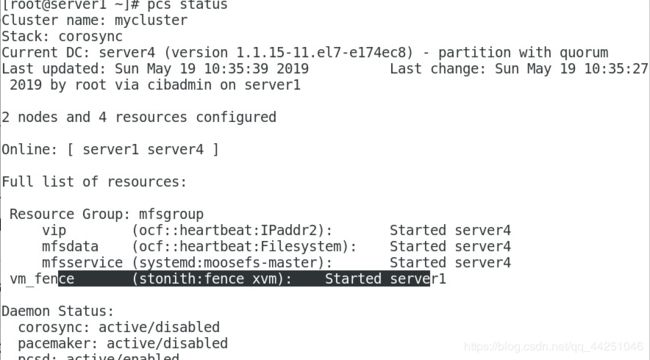

pcs status # check the cluster status

4. Add the VIP

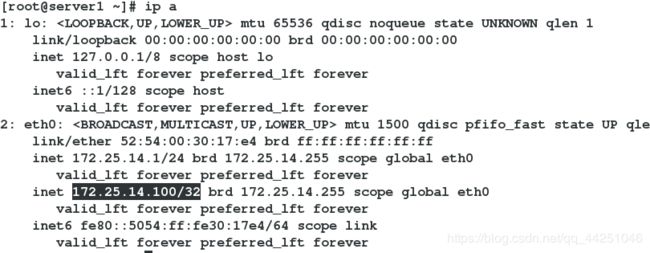

pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.14.100 \
cidr_netmask=32 op monitor interval=30s

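5. Open the monitor and test

crm_mon ## at first the resource runs on the master (server1)

pcs cluster stop server1 ## stop the cluster on server1; the VIP moves to server4

pcs cluster start server1 ## when the master comes back it does not take the resource back

pcs cluster stop server4 ## stop the backup master; the VIP moves back to the master automatically

pcs resource providers ## list the resource providers

When the master fails, the backup master takes over its work immediately, so clients keep being served.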
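During the failover test it is handy to ask "which node holds the VIP right now?" without reading the full monitor screen. The sketch below assumes resource lines of the form "vip (ocf::heartbeat:IPaddr2): Started server1", which is how pcs resource show prints them in this setup. The function name resource_node is hypothetical, and the sample text stands in for live output.

```shell
# resource_node NAME
# Reads `pcs resource show`-style lines on stdin and prints the node on which
# resource NAME is reported as Started (prints nothing if it is not started).
resource_node() {
    awk -v name="$1" '$1 == name && $(NF - 1) == "Started" { print $NF }'
}

# Sample input in the format pcs uses for resource lines
status='vip (ocf::heartbeat:IPaddr2): Started server1
mfsdata (ocf::heartbeat:Filesystem): Started server4'

vip_node=$(printf '%s\n' "$status" | resource_node vip)
echo "vip is on $vip_node"
```

Against the live cluster the call would be pcs resource show | resource_node vip, run before and after pcs cluster stop to confirm that the resource moved.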

IV. iSCSI shared storage

1. Restore the environment

Physical host:

umount /mnt/mfs/

vim /etc/hosts

172.25.14.100 mfsmaster ## mfsmaster now resolves to the VIP

Server1:

systemctl stop moosefs-master

vim /etc/hosts

172.25.14.100 mfsmaster

Server2 and server3:

systemctl stop moosefs-chunkserver

vim /etc/hosts

172.25.14.100 mfsmaster

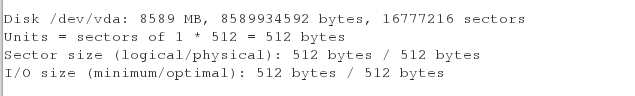

2. Add a disk to server3

[root@server3 ~]# fdisk -l

Disk /dev/vda: 8589 MB, 8589934592 bytes, 16777216 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

[root@server3 ~]# yum install -y targetcli

[root@server3 ~]# systemctl start target

3. Configure the iSCSI service

[root@server3 ~]# targetcli

/> cd backstores/block

/backstores/block> create my_disk1 /dev/vda

Created block storage object my_disk1 using /dev/vda.

/backstores/block> cd ..

/backstores> cd ..

/> cd iscsi

/iscsi> create iqn.2019-05.com.example:server3

Created target iqn.2019-05.com.example:server3.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

/iscsi> cd iqn.2019-05.com.example:server3

/iscsi/iqn.20...ample:server3> cd tpg1/luns

/iscsi/iqn.20...er3/tpg1/luns> create /backstores/block/my_disk1

Created LUN 0.

/iscsi/iqn.20...er3/tpg1/luns> cd ..

/iscsi/iqn.20...:server3/tpg1> cd acls

/iscsi/iqn.20...er3/tpg1/acls> create iqn.2019-05.com.example:client

Created Node ACL for iqn.2019-05.com.example:client

Created mapped LUN 0.

/iscsi/iqn.20...er3/tpg1/acls> cd ..

/iscsi/iqn.20...:server3/tpg1> cd ..

/iscsi/iqn.20...ample:server3> cd ..

/iscsi> cd ..

/> ls

o- / ............................................................................ [...]
  o- backstores ................................................................. [...]
  | o- block ..................................................... [Storage Objects: 1]
  | | o- my_disk1 ........................... [/dev/vda (20.0GiB) write-thru activated]
  | o- fileio .................................................... [Storage Objects: 0]
  | o- pscsi ..................................................... [Storage Objects: 0]
  | o- ramdisk ................................................... [Storage Objects: 0]
  o- iscsi ............................................................... [Targets: 1]
  | o- iqn.2019-05.com.example:server3 ...................................... [TPGs: 1]
  |   o- tpg1 .................................................. [no-gen-acls, no-auth]
  |     o- acls ............................................................. [ACLs: 1]
  |     | o- iqn.2019-05.com.example:client .......................... [Mapped LUNs: 1]
  |     |   o- mapped_lun0 ................................. [lun0 block/my_disk1 (rw)]
  |     o- luns ............................................................. [LUNs: 1]
  |     | o- lun0 ......................................... [block/my_disk1 (/dev/vda)]
  |     o- portals ....................................................... [Portals: 1]
  |       o- 0.0.0.0:3260 ........................................................ [OK]
  o- loopback ............................................................ [Targets: 0]

/> exit

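4. Discover the device

[root@server1 ~]# iscsiadm -m discovery -t st -p 172.25.14.3

172.25.14.3:3260,1 iqn.2019-05.com.example:server3

fdisk -l # once server1 has logged in to the target (initiator name and iscsiadm -m node -l, as shown for server4 in step 5), the shared disk is listed here

fdisk /dev/sdb # create a partition on the shared disk

mkfs.xfs /dev/sdb1 # format the partition

mount /dev/sdb1 /mnt # mount it temporarily so the metadata can be copied onto it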

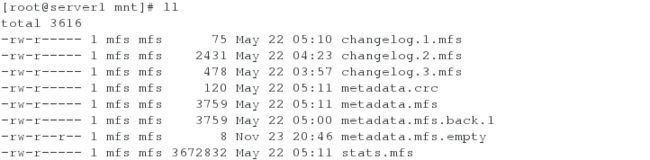

[root@server1 ~]# cd /var/lib/mfs/ # the MFS data directory

[root@server1 mfs]# ls

changelog.1.mfs changelog.2.mfs changelog.3.mfs metadata.crc metadata.mfs metadata.mfs.back.1 metadata.mfs.empty stats.mfs

[root@server1 mfs]# cp -p * /mnt

[root@server1 mfs]# cd /mnt/

[root@server1 mnt]# ll

[root@server1 mnt]# chown mfs.mfs /mnt # the directory must belong to the mfs user and group to be usable

[root@server1 mnt]# cd

[root@server1 ~]# umount /mnt

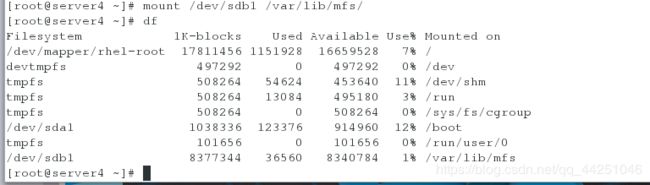

[root@server1 ~]# mount /dev/sdb1 /var/lib/mfs/ # mount the partition to check that the shared disk works

[root@server1 ~]# df

5. Configure the backup master

[root@server4 ~]# vim /etc/hosts

[root@server4 ~]# yum install -y iscsi-*

[root@server4 ~]# vim /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2019-05.com.example:client

[root@server4 ~]# iscsiadm -m discovery -t st -p 172.25.14.3 ## discover the target

172.25.14.3:3260,1 iqn.2019-05.com.example:server3

[root@server4 ~]# iscsiadm -m node -l ## log in

Logging in to [iface: default, target: iqn.2019-05.com.example:server3, portal: 172.25.14.3,3260] (multiple)

Login to [iface: default, target: iqn.2019-05.com.example:server3, portal: 172.25.14.3,3260] successful.

[root@server4 ~]# fdisk -l ## check that the shared disk is visible

[root@server4 ~]# mount /dev/sdb1 /var/lib/mfs/

## This is the same disk the master uses. The master has already partitioned and formatted it, so it only needs to be mounted here, not configured again.

[root@server4 ~]# df

[root@server4 ~]# systemctl start moosefs-master ## test that the service can use the shared disk

[root@server4 ~]# systemctl stop moosefs-master

6. Create the MFS filesystem resource on the master

[root@server1 ~]# pcs resource create mfsdata ocf:heartbeat:Filesystem device=/dev/sdb1 directory=/var/lib/mfs fstype=xfs op monitor interval=30s

[root@server1 ~]# pcs resource show

vip (ocf::heartbeat:IPaddr2): Started server1

mfsdata (ocf::heartbeat:Filesystem): Started server4

[root@server1 ~]# pcs resource create mfsd systemd:moosefs-master op monitor interval=1min

[root@server1 ~]# pcs resource group add mfsgroup vip mfsdata mfsd

## put vip, mfsdata and mfsd into one resource group

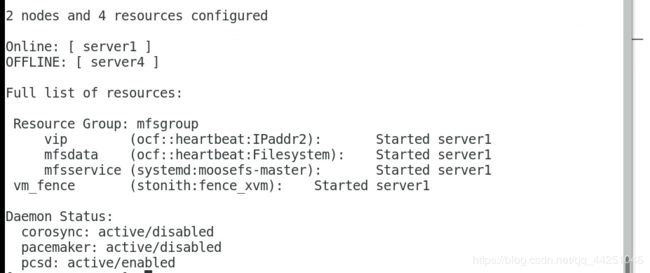

[root@server1 ~]# pcs cluster stop server1 ## after the master is stopped, its services migrate to the backup master

server1: Stopping Cluster (pacemaker)...

server1: Stopping Cluster (corosync)...

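V. Fencing

The experiments above show that when the master goes down, the backup master takes over immediately and clients can still access the file system. When the master comes back, however, there is no guarantee that it will not try to reclaim its role. The master and the backup master could then modify the same data files at the same time, which is a split-brain. This is where fencing comes in.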

1. Install the fence agent on both cluster nodes

[root@server1 ~]# yum install -y fence-virt

[root@server1 ~]# mkdir /etc/cluster

[root@server4 ~]# yum install -y fence-virt

[root@server4 ~]# mkdir /etc/cluster

2. Generate a fence key file on the physical host and copy it to the cluster nodes

[root@foundation61 ~]# yum install -y fence-virtd

[root@foundation61 ~]# yum install fence-virtd-libvirt -y

[root@foundation61 ~]# yum install fence-virtd-multicast -y

[root@foundation61 ~]# fence_virtd -c

Listener module [multicast]:

Multicast IP Address [225.0.0.12]:

Multicast IP Port [1229]:

Interface [virbr0]: br0 # change the interface here; it must match the bridge on this host

Key File [/etc/cluster/fence_xvm.key]:

Backend module [libvirt]:

[root@foundation61 ~]# mkdir /etc/cluster # directory that holds the key; it has to be created by hand

[root@foundation61 ~]# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1

[root@foundation61 ~]# systemctl start fence_virtd

[root@foundation61 ~]# cd /etc/cluster/

[root@foundation61 cluster]# ls

fence_xvm.key

[root@foundation61 cluster]# scp fence_xvm.key [email protected]:/etc/cluster/

[root@foundation61 cluster]# scp fence_xvm.key [email protected]:/etc/cluster/

[root@foundation61 cluster]# netstat -anulp | grep 1229

[root@foundation61 cluster]# virsh list # list the virtual machine (domain) names

Watch the monitor on the master with crm_mon.
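Fencing with fence_xvm only works when every node holds exactly the same key as the host, so it is worth confirming that the copies made with scp are byte-identical. The sketch below shows the comparison on scratch files. The helper name keys_match is hypothetical, and the key is generated the same way as above but in a temporary directory.

```shell
# keys_match FILE_A FILE_B
# Succeeds when the two key files are byte-for-byte identical.
keys_match() {
    cmp -s -- "$1" "$2"
}

# Demonstration in a scratch directory (not the real /etc/cluster)
workdir=$(mktemp -d)
dd if=/dev/urandom of="$workdir/fence_xvm.key" bs=128 count=1 2>/dev/null
cp "$workdir/fence_xvm.key" "$workdir/node_copy.key"

if keys_match "$workdir/fence_xvm.key" "$workdir/node_copy.key"; then
    echo "key copies match"
else
    echo "key copies differ"
fi
```

Across machines the same idea can be applied by comparing checksums, for example running sha256sum /etc/cluster/fence_xvm.key on the host and on each node and checking that the digests agree.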

[root@server1 ~]# cd /etc/cluster

[root@server1 cluster]# pcs stonith create vmfence fence_xvm pcmk_host_map="server1:server1;server4:server4" op monitor interval=1min

[root@server1 cluster]# pcs property set stonith-enabled=true

[root@server1 cluster]# crm_verify -L -V

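[root@server1 cluster]# crm_mon # watch the monitor; the services run on the master (server1)

[root@server4 cluster]# echo c > /proc/sysrq-trigger # simulate a kernel crash on the backup master; the cluster should then fence (reboot) server4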