- Background

- Host environment for the k8s cluster

- Deploy HA and Harbor

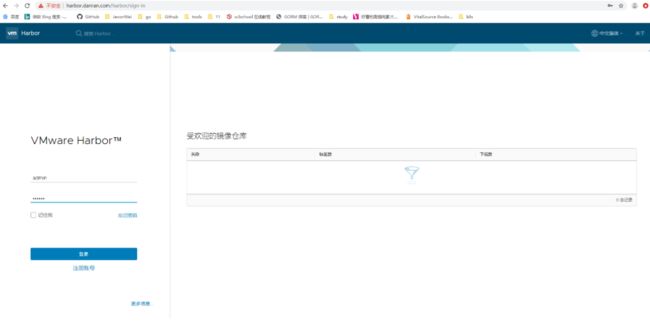

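- harbor

- HA

- keepalived

- haproxy

- Kubernetes deployment

- Install components on masters and nodes

- Install Docker

- Install kubeadm

- Pre-initialization preparation

- Using the kubeadm command

- Verify the current kubeadm version

- Prepare the k8s images

- Download images on the master node

- Master initialization

- Initialize a single master with kubeadm init

- Initialize highly available masters from a config file

- Edit the kubeadm-init.yml file

- Initialize the cluster from the yml file

- Configure the kubeconfig file

- Deploy the flannel network add-on

- Prepare the kube-flannel.yml file

- Prepare the flannel image

- Deploy flannel

- Check node status

- Add master nodes

- Upload certificates on master1 for joining new control-plane nodes

- Join the new masters

- Check node status on master1

- Add worker nodes

- Testing

- Verify master status

- Verify k8s cluster status

- Current CSR status

- Test pod-to-pod networking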


- Deploy the dashboard

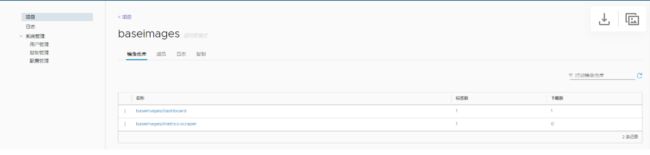

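- Push the dashboard image to the Harbor registry

- Pull the dashboard image

- Push the image to Harbor

- Pull the image from Harbor

- Deploy the dashboard

- Prepare the yml file

- Deploy

- Log in to the dashboard with a token

- Log in to the dashboard with a kubeconfig file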


- K8s cluster upgrade

- Upgrade the k8s master services

- Verify the current k8s version

- Install the target kubeadm version on each master

- kubeadm upgrade command help

- View the upgrade plan

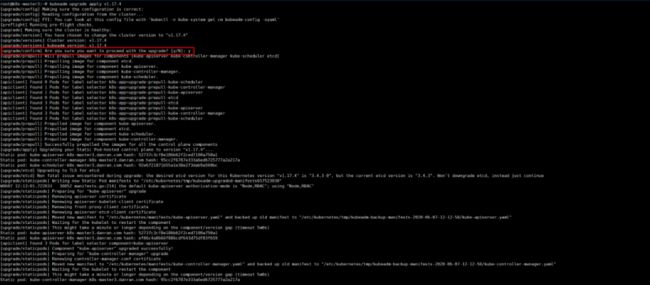

- Master upgrade

- Upgrade the non-primary masters

- Upgrade the primary master (master1)

- Verify the images

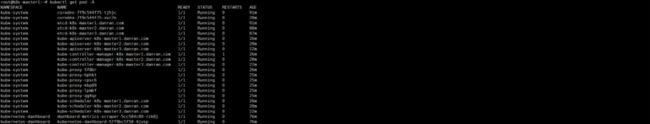

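- Get pod information on the host

- Upgrade kubelet and kubectl on the masters

- View node information

- Upgrade the k8s node services

- Verify the current node version

- Upgrade kubelet on each node

- Upgrade kubectl and kubelet on the nodes

- Verify the node versions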


- Test running Nginx + Tomcat

- Download the Nginx and Tomcat images and push them to Harbor

- Nginx

- Tomcat (rebuild the Tomcat image with a Dockerfile)

- Run Nginx

- Edit the haproxy configuration to add an Nginx reverse proxy

- Run Tomcat

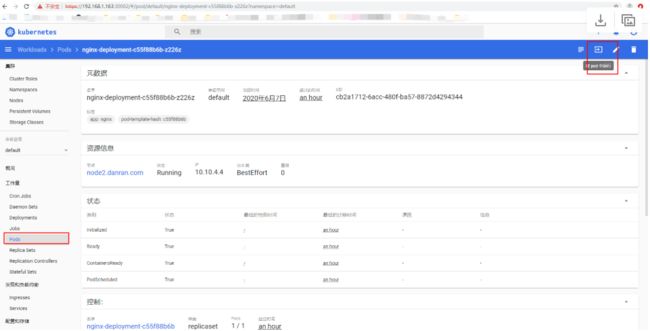

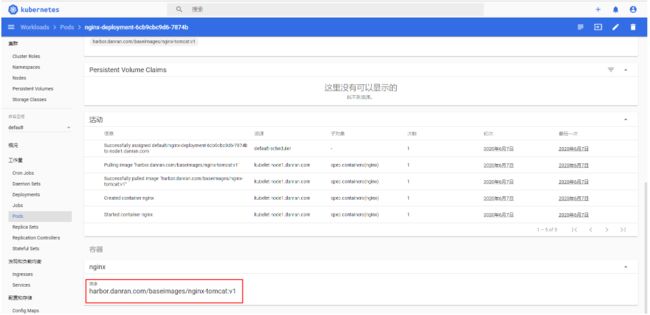

- Enter the Nginx pod from the dashboard

- Add the Tomcat reverse proxy configuration inside the pod

- Re-run Nginx

- Browse to the Nginx VIP, which forwards to the Tomcat page


- kubeadm commands

- Token management

- Issues

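Background

This environment uses kubeadm to build a Kubernetes 1.17.2 cluster and then upgrades it to 1.17.4 with kubeadm. Images are served from a self-hosted Harbor registry, and the web UI is provided by the Dashboard. This document is intended only for a learning and lab environment.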

https://github.com/kubernetes/kubernetes

https://kubernetes.io/zh/docs/concepts/workloads/controllers/deployment/#

Host environment for the k8s cluster

| IP | Hostname | Role |
|---|---|---|
| 10.203.124.230 | master1.linux.com | master |
| 10.203.124.231 | master2.linux.com | master |
| 10.203.124.232 | master3.linux.com | master |
| 10.203.124.233 | node1.linux.com | node |
| 10.203.124.234 | node2.linux.com | node |
| 10.203.124.235 | node3.linux.com | node |
| 10.203.124.236 | harbor.linux.com | harbor |
| 10.203.124.237 | ha1.linux.com | HA |
| 10.203.124.238 | keepalived-vip | VIP |

- Environment preparation

Synchronize the time on all hosts:

root@node3:~# timedatectl set-timezone Asia/Shanghai

root@node3:~# timedatectl set-ntp on

root@node3:~# timedatectl

Local time: Sat 2020-06-06 00:18:27 CST

Universal time: Fri 2020-06-05 16:18:27 UTC

RTC time: Fri 2020-06-05 16:18:27

Time zone: Asia/Shanghai (CST, +0800)

System clock synchronized: yes

systemd-timesyncd.service active: yes

RTC in local TZ: no

Stop AppArmor:

root@k8s-master1:~# /etc/init.d/apparmor stop

Purge AppArmor from the system:

root@k8s-master1:~# apt purge apparmor

Disable the firewall:

ufw disable

- Make sure net.ipv4.ip_forward = 1 on every machine

root@master1:~# sysctl -p

net.ipv4.ip_forward = 1

Tune the kernel parameters by appending the following to the end of /etc/sysctl.conf:

root@master1:~# cat /etc/sysctl.conf

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.tcp_max_tw_buckets = 20000

net.ipv4.tcp_synack_retries = 1

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_timestamps = 1

# keepalive conn

net.ipv4.tcp_keepalive_intvl = 30

net.ipv4.tcp_keepalive_time = 300

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.ip_local_port_range = 10001 65000

# swap

vm.overcommit_memory = 0

vm.swappiness = 10

#net.ipv4.conf.eth1.rp_filter = 0

#net.ipv4.conf.lo.arp_ignore = 1

#net.ipv4.conf.lo.arp_announce = 2

#net.ipv4.conf.all.arp_ignore = 1

#net.ipv4.conf.all.arp_announce = 2

- Install the pinned versions of kubeadm, kubelet, kubectl and Docker on all master nodes

- Install the pinned versions of kubeadm, kubelet and Docker on all worker nodes; kubectl is optional on worker nodes

- Run kubeadm init on one master node to initialize the cluster

- Verify the master node status

- On each worker node, use kubeadm to join the k8s master (this requires a token generated on the master)

- Verify the worker node status

- Create pods and test network connectivity

- Deploy the Dashboard web UI

- Upgrade the k8s cluster
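The kernel settings above only take effect once sysctl -p has run, and a mistyped key is easy to overlook. The following is a minimal sketch of a hypothetical check_sysctl helper. It assumes bash and a readable /proc/sys, and compares each "key = value" line in a file with the value the kernel is actually using. SYSCTL_ROOT is an override added only so the helper can be tested against a fake directory tree.

```shell
#!/usr/bin/env bash
# check_sysctl FILE...: compare every "key = value" line in FILE(s) with the
# live kernel value under /proc/sys. Prints one MISMATCH line per difference
# and returns non-zero if any were found.
# SYSCTL_ROOT (hypothetical, for testing only) can point at a fake /proc/sys tree.
check_sysctl() {
  local root="${SYSCTL_ROOT:-/proc/sys}" rc=0 line key want have path
  while IFS= read -r line; do
    line="${line%%#*}"                     # strip comments
    [[ "$line" == *=* ]] || continue       # skip blank and non key=value lines
    key="$(echo "${line%%=*}" | xargs)"    # trim surrounding whitespace
    want="$(echo "${line#*=}" | xargs)"    # xargs also squeezes inner blanks
    path="$root/${key//.//}"               # net.ipv4.ip_forward -> net/ipv4/ip_forward
    if [[ -r "$path" ]]; then
      have="$(xargs < "$path")"            # /proc separates multi-value keys with tabs
    else
      have="<missing>"
    fi
    if [[ "$have" != "$want" ]]; then
      echo "MISMATCH $key: want '$want', have '$have'"
      rc=1
    fi
  done < <(cat "$@")
  return "$rc"
}
```

On a configured host, check_sysctl /etc/sysctl.conf prints nothing and returns 0 when every listed value is active.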

Deploy HA and Harbor

harbor

Install docker and docker-compose

root@master1:~# cat docker-install.sh

#!/bin/bash

# step 1: install the required system tools

sudo apt-get update

sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

# step 2: install the GPG key

curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# Step 3: add the package repository

sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# Step 4: update the package index and install Docker CE

sudo apt-get -y update

sudo apt-get -y install docker-ce docker-ce-cli

root@harbor:~# sh docker-install.sh

root@harbor:~# apt install docker-compose

Start docker

root@harbor:/usr/local/src/harbor# systemctl start docker

Deploy Harbor

Download the Harbor offline installer to /usr/local/src/ on the host

root@harbor:~# cd /usr/local/src/

root@harbor:/usr/local/src# ls

harbor-offline-installer-v1.1.2.tgz

root@harbor:/usr/local/src# tar xvf harbor-offline-installer-v1.1.2.tgz

root@harbor:/usr/local/src# ls

harbor harbor-offline-installer-v1.1.2.tgz

root@harbor:/usr/local/src# cd harbor/

Edit harbor.cfg to set the hostname and the Harbor admin password

root@harbor:/usr/local/src/harbor# vim harbor.cfg

root@harbor:/usr/local/src/harbor# cat harbor.cfg | grep hostname

#The IP address or hostname to access admin UI and registry service.

hostname = harbor.linux.com

root@harbor:/usr/local/src/harbor# cat harbor.cfg | grep harbor_admin_password

harbor_admin_password = xxxxx
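The same two edits can be made without vim, which is convenient when Harbor is reinstalled. This is a sketch that assumes the "key = value" layout shown by the grep output above. The function name set_harbor_cfg is hypothetical. Passwords containing "|" or "&" would need escaping before they are passed to sed.

```shell
#!/usr/bin/env bash
# set_harbor_cfg CFG HOSTNAME PASSWORD: rewrite the hostname and
# harbor_admin_password lines of a Harbor 1.x harbor.cfg in place.
# Only lines that start with the key are changed; comment lines are untouched.
set_harbor_cfg() {
  local cfg="$1" host="$2" pass="$3"
  sed -i \
    -e "s|^hostname = .*|hostname = ${host}|" \
    -e "s|^harbor_admin_password = .*|harbor_admin_password = ${pass}|" \
    "$cfg"
}
```

Usage: set_harbor_cfg /usr/local/src/harbor/harbor.cfg harbor.linux.com 'your-password', then run ./install.sh as shown below.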

Modify the empty_subj parameter in the prepare file

root@harbor:/usr/local/src/harbor# cat prepare | grep empty

#empty_subj = "/C=/ST=/L=/O=/CN=/"

empty_subj = "/C=US/ST=California/L=Palo Alto/O=VMware, Inc./OU=Harbor/CN=notarysigner"

Install Harbor

root@harbor:/usr/local/src/harbor# ./install.sh

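From a host that can resolve the Harbor domain name, browse to the Harbor hostname to test Harbor, and log in with the admin password set in harbor.cfg

docker login to Harbor

If docker login fails with the following error, install the gnupg2 and pass packages (apt install gnupg2 pass)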

root@harbor:/images/kubeadm_images/quay.io/coreos# docker login harbor.linux.com

Username: admin

Password:

Error response from daemon: Get http://harbor.linux.com/v2/: dial tcp 10.203.124.236:80: connect: connection refused

root@harbor:/images# apt install gnupg2 pass

root@harbor:/images# docker login harbor.linux.com

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

HA

Perform the following installation steps on both HA hosts

keepalived

root@ha1:~# apt install haproxy keepalived

root@ha1:~# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

root@ha1:~# vim /etc/keepalived/keepalived.conf

root@ha1:~# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from [email protected]

smtp_server 10.203.124.230

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER

interface ens33

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.203.124.238 dev ens33 label ens33:1

}

}

root@ha1:~# systemctl restart keepalived.service

root@ha1:~# systemctl enable keepalived.service

Synchronizing state of keepalived.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable keepalived
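The second HA host (not listed in the host table) normally uses the same vrrp_instance with state BACKUP and a lower priority. Keeping virtual_router_id and auth_pass identical lets both hosts negotiate the VIP. The sketch below uses a hypothetical gen_vrrp_instance function to print the block for either role, reusing the values configured above. The BACKUP priority of 80 in the usage line is an assumed example value.

```shell
#!/usr/bin/env bash
# gen_vrrp_instance STATE PRIORITY [IFACE] [VIP]
# Prints a vrrp_instance block matching the MASTER configuration above.
gen_vrrp_instance() {
  local state="$1" priority="$2" iface="${3:-ens33}" vip="${4:-10.203.124.238}"
  case "$state" in
    MASTER|BACKUP) ;;
    *) echo "state must be MASTER or BACKUP" >&2; return 1 ;;
  esac
  cat <<EOF
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip} dev ${iface} label ${iface}:1
    }
}
EOF
}
```

On the second host, the output of gen_vrrp_instance BACKUP 80 would replace the vrrp_instance section of /etc/keepalived/keepalived.conf. The global_defs section stays as it is.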

haproxy

root@ha1:~# vim /etc/haproxy/haproxy.cfg

Append a listener on the keepalived VIP to the end of haproxy.cfg, load-balancing to the three master servers

listen k8s-api-6443

bind 10.203.124.238:6443

mode tcp

server master1 10.203.124.230:6443 check inter 3s fall 3 rise 5

server master2 10.203.124.231:6443 check inter 3s fall 3 rise 5

server master3 10.203.124.232:6443 check inter 3s fall 3 rise 5

root@ha1:~# systemctl restart haproxy.service

root@ha1:~# systemctl enable haproxy.service

Synchronizing state of haproxy.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable haproxy

root@ha1:~# ss -ntl | grep 6443

LISTEN 0 128 10.203.124.238:6443 0.0.0.0:*
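When masters are added or removed, the listen block can be regenerated from a list of IPs instead of being edited by hand. This is a sketch with a hypothetical gen_haproxy_listen function that reproduces the block above. Note that haproxy on the HA host that does not currently hold the VIP can only bind 10.203.124.238 if net.ipv4.ip_nonlocal_bind = 1 is set there.

```shell
#!/usr/bin/env bash
# gen_haproxy_listen VIP PORT MASTER_IP...
# Prints a TCP listen section that balances the API server port across masters.
gen_haproxy_listen() {
  local vip="$1" port="$2"; shift 2
  local i=1 ip
  printf 'listen k8s-api-%s\n' "$port"
  printf '    bind %s:%s\n' "$vip" "$port"
  printf '    mode tcp\n'
  for ip in "$@"; do
    printf '    server master%d %s:%s check inter 3s fall 3 rise 5\n' "$i" "$ip" "$port"
    i=$((i + 1))
  done
}
```

Usage: gen_haproxy_listen 10.203.124.238 6443 10.203.124.230 10.203.124.231 10.203.124.232 >> /etc/haproxy/haproxy.cfg. Running haproxy -c -f /etc/haproxy/haproxy.cfg before restarting checks the syntax.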

Kubernetes deployment

Install components on masters and nodes

Aliyun mirror configuration (https://developer.aliyun.com/mirror/docker-ce?spm=a2c6h.13651102.0.0.3e221b11wJY3XG)

Install Docker

Install Docker 19.03 on the master and worker nodes

root@master1:~# cat docker-install.sh

#!/bin/bash

# step 1: install the required system tools

sudo apt-get update

sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

# step 2: install the GPG key

curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# Step 3: add the package repository

sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# Step 4: update the package index and install Docker CE

sudo apt-get -y update

sudo apt-get -y install docker-ce docker-ce-cli

root@master1:~# sh docker-install.sh

root@node1:~# systemctl start docker

root@node1:~# systemctl enable docker

Synchronizing state of docker.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable docker

Configure a Docker registry mirror

Configure the Docker registry mirror on the masters and nodes

root@master1:~# mkdir -p /etc/docker

root@master1:~# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://9916w1ow.mirror.aliyuncs.com"]

}

root@master1:~# systemctl daemon-reload && sudo systemctl restart docker

Install kubeadm

root@master1:~# apt-get update && apt-get install -y apt-transport-https

root@master1:~# curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

root@master1:~# cat << EOF > /etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

root@master1:~# apt-get update

List the available kubeadm versions; the version installed here is 1.17.2

root@k8s-master1:~# apt-cache madison kubeadm

Install on master nodes

root@k8s-master1:~# apt-get install -y kubeadm=1.17.2-00 kubectl=1.17.2-00 kubelet=1.17.2-00

Install on worker nodes

root@k8s-node1:~# apt-get install -y kubeadm=1.17.2-00 kubelet=1.17.2-00
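The only difference between the master and node installs is kubectl. A routine apt upgrade could also move these packages away from the pinned version. This is a minimal sketch with hypothetical helpers pkgs_for_role and install_k8s_pkgs: it installs the role's packages at one version and then runs apt-mark hold on them. DRY_RUN=1 is an assumption of this sketch that makes it print the commands instead of running them.

```shell
#!/usr/bin/env bash
# pkgs_for_role ROLE: package names per role, as listed in the steps above.
pkgs_for_role() {
  case "$1" in
    master) echo "kubeadm kubectl kubelet" ;;
    node)   echo "kubeadm kubelet" ;;          # kubectl is optional on nodes
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# install_k8s_pkgs ROLE VERSION: install pinned versions, then hold them.
install_k8s_pkgs() {
  local role="$1" ver="$2" names p run="" specs=()
  names="$(pkgs_for_role "$role")" || return 1
  for p in $names; do specs+=("${p}=${ver}"); done
  [[ "${DRY_RUN:-0}" == 1 ]] && run="echo"
  $run apt-get install -y "${specs[@]}"
  # a held package is not moved by "apt upgrade"
  $run apt-mark hold $names
}
```

Usage: install_k8s_pkgs master 1.17.2-00 on the masters and install_k8s_pkgs node 1.17.2-00 on the nodes. For the later kubeadm upgrade, run apt-mark unhold on the packages first, because apt-get refuses to change held packages when -y is used without --allow-change-held-packages.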

Configure the kubelet on each node and start the kubelet service

Add Environment="KUBELET_EXTRA_ARGS=--fail-swap-on=false" to /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

root@k8s-master1:~# vim /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

root@k8s-master1:~# cat /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

Environment="KUBELET_EXTRA_ARGS=--fail-swap-on=false"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/default/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

Start the kubelet

root@k8s-master1:~# systemctl daemon-reload

root@k8s-master1:~# systemctl restart kubelet

root@k8s-master1:~# systemctl enable kubelet

Install the kubeadm bash completion script on the master nodes

root@k8s-master1:~# mkdir /data/scripts -p

root@k8s-master1:~# kubeadm completion bash > /data/scripts/kubeadm_completion.sh

root@k8s-master1:~# source /data/scripts/kubeadm_completion.sh

root@k8s-master1:~# cat /etc/profile

source /data/scripts/kubeadm_completion.sh

root@k8s-master1:~# chmod a+x /data/scripts/kubeadm_completion.sh

Pre-initialization preparation

Run the cluster initialization on any one of the three masters; the cluster only needs to be initialized once

Using the kubeadm command

Available Commands:

alpha #kubeadm commands that are still in the alpha (testing) stage

completion #bash command completion; requires bash-completion

#mkdir /data/scripts -p

#kubeadm completion bash > /data/scripts/kubeadm_completion.sh

#source /data/scripts/kubeadm_completion.sh

#vim /etc/profile

source /data/scripts/kubeadm_completion.sh

config #manage the kubeadm cluster configuration, which is kept in a ConfigMap in the cluster

#kubeadm config print init-defaults

help Help about any command

init #bootstrap a Kubernetes control-plane (master) node

join #join a node to an existing k8s master

reset #revert the changes made to the host by kubeadm init or kubeadm join

token #manage tokens

upgrade #upgrade the k8s version

version #print version information

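kubeadm init command overview

- Command usage:

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm/

- Cluster initialization:

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init/

root@docker-node1:~# kubeadm init --help

--apiserver-advertise-address string #local IP address the K8S API Server advertises and listens on

--apiserver-bind-port int32 #port the API Server binds to, default 6443

--apiserver-cert-extra-sans stringSlice #optional extra Subject Alternative Names for the API Server serving certificate; can be IP addresses or DNS names

--cert-dir string #path where certificates are stored, default /etc/kubernetes/pki

--certificate-key string #key used to encrypt the control-plane certificates in the kubeadm-certs Secret

--config string #path to a kubeadm configuration file

--control-plane-endpoint string #a stable IP address or DNS name for the control plane, i.e. a long-lived, highly available VIP or domain name; k8s multi-master high availability is built on this parameter

--cri-socket string #path to the CRI (Container Runtime Interface) socket to connect to; if empty, kubeadm tries to auto-detect it. "Use this option only if you have more than one CRI installed or if you have a non-standard CRI socket"

--dry-run #do not apply any changes; only print what would be done (a test run)

--experimental-kustomize string #path to the kustomize patches for the static pod manifests

--feature-gates string #a set of key=value pairs describing feature gates; options: IPv6DualStack=true|false (ALPHA - default=false)

--ignore-preflight-errors strings #preflight check errors to ignore, e.g. swap; "all" ignores all of them

--image-repository string #registry to pull the control-plane images from, default k8s.gcr.io

--kubernetes-version string #k8s version to install, default stable-1

--node-name string #name of the node

--pod-network-cidr #pod IP address range

--service-cidr #service network address range

--service-dns-domain string #internal k8s domain, default cluster.local; the DNS service (kube-dns/coredns) resolves the records generated under it

--skip-certificate-key-print #do not print the key used for encryption

--skip-phases strings #phases to skip

--skip-token-print #do not print the token

--token #token to use

--token-ttl #token lifetime, default 24 hours; 0 means it never expires

--upload-certs #upload the control-plane certificates to the kubeadm-certs Secret

#Global options:

--add-dir-header #if true, add the file directory to the log header

--log-file string #if non-empty, write logs to this file

--log-file-max-size uint #maximum log file size in MB, default 1800; 0 means no limit

--rootfs #path to the host's real root filesystem (an absolute path)

--skip-headers #if true, omit the header prefixes in log messages

--skip-log-headers #if true, omit headers when opening log files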

Verify the current kubeadm version

# kubeadm version #show the current kubeadm version

root@k8s-master1:~# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.2", GitCommit:"59603c6e503c87169aea6106f57b9f242f64df89", GitTreeState:"clean", BuildDate:"2020-01-18T23:27:49Z", GoVersion:"go1.13.5", Compiler:"gc", Platform:"linux/amd64"}

Prepare the k8s images

List the images required by the specified k8s version

# kubeadm config images list --kubernetes-version v1.17.2

k8s.gcr.io/kube-apiserver:v1.17.2

k8s.gcr.io/kube-controller-manager:v1.17.2

k8s.gcr.io/kube-scheduler:v1.17.2

k8s.gcr.io/kube-proxy:v1.17.2

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.5

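Download images on the master node

Pre-pulling the images on the master nodes is recommended to shorten the installation. The images come from Google's registry by default, which cannot be reached directly from mainland China, but they can be pulled in advance from Alibaba Cloud's mirror registry. This avoids k8s deployment failures caused by image download problems later.

Download the k8s images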

root@k8s-master1:~# cat k8s-1.17.3-images-download.sh

#!/bin/bash

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.3

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.3

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.3

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.3

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5

root@k8s-master1:~# bash k8s-1.17.3-images-download.sh

root@k8s-master1:~# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.17.3 ae853e93800d 3 months ago 116MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.17.3 b0f1517c1f4b 3 months ago 161MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.17.3 90d27391b780 3 months ago 171MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.17.3 d109c0821a2b 3 months ago 94.4MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.17.2 cba2a99699bd 4 months ago 116MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.17.2 da5fd66c4068 4 months ago 161MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.17.2 41ef50a5f06a 4 months ago 171MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.17.2 f52d4c527ef2 4 months ago 94.4MB

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns 1.6.5 70f311871ae1 7 months ago 41.6MB

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 7 months ago 288MB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.1 da86e6ba6ca1 2 years ago 742kB
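The image listing shows the script being run for both v1.17.2 and v1.17.3, which means copying it per version. This is a sketch of a parameterized version with hypothetical functions k8s_images and pull_k8s_images. One assumption: the pause, etcd and coredns tags are fixed at the values listed above for v1.17.x. Check them with kubeadm config images list --kubernetes-version for other versions. Alternatively, kubeadm config images pull --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version v1.17.2 pulls the same set directly.

```shell
#!/usr/bin/env bash
# Mirror used in this document; override MIRROR to use another registry.
MIRROR="${MIRROR:-registry.cn-hangzhou.aliyuncs.com/google_containers}"

# k8s_images VERSION: print the control-plane images for one release.
k8s_images() {
  local ver="$1" c
  for c in kube-apiserver kube-controller-manager kube-scheduler kube-proxy; do
    echo "${MIRROR}/${c}:${ver}"
  done
  # assumed fixed for v1.17.x (from the list above)
  echo "${MIRROR}/pause:3.1"
  echo "${MIRROR}/etcd:3.4.3-0"
  echo "${MIRROR}/coredns:1.6.5"
}

# pull_k8s_images VERSION: pull every image, stopping at the first failure.
pull_k8s_images() {
  local img
  for img in $(k8s_images "$1"); do
    docker pull "$img" || return 1
  done
}
```

Usage: pull_k8s_images v1.17.2 for the initial install and pull_k8s_images v1.17.3 ahead of an upgrade.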

Master initialization

Initialize a single master with the kubeadm init command

root@k8s-master1:~# kubeadm init --apiserver-advertise-address=10.203.124.230 --control-plane-endpoint=10.203.124.238 --apiserver-bind-port=6443 --ignore-preflight-errors=swap --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version=v1.17.2 --pod-network-cidr=10.10.0.0/16 --service-cidr=172.16.0.0/16 --service-dns-domain=linux.com

Initialization output

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 10.203.124.238:6443 --token ytnfvw.2m0wg4v22lq51mdw \

--discovery-token-ca-cert-hash sha256:0763db2d6454eeb4a25a8a91159a565f8bf81b9ffe69ba90fa3967652a00964f \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.203.124.238:6443 --token ytnfvw.2m0wg4v22lq51mdw \

--discovery-token-ca-cert-hash sha256:0763db2d6454eeb4a25a8a91159a565f8bf81b9ffe69ba90fa3967652a00964f
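If the init output is lost, the sha256 value for --discovery-token-ca-cert-hash can be recomputed from the cluster CA certificate. This sketch wraps the openssl pipeline from the kubeadm join reference documentation in a hypothetical ca_cert_hash function. It assumes an RSA CA key, which is what kubeadm generates by default, and the default CA path /etc/kubernetes/pki/ca.crt.

```shell
#!/usr/bin/env bash
# ca_cert_hash [CA_CRT]: print the SHA-256 of the CA's DER-encoded public key,
# i.e. the value that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'          # keep only the hex digest after the last space
}
```

On master1, echo "sha256:$(ca_cert_hash)" should print the same value as the one in the join commands above.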

Initialize highly available masters from a config file

Edit the kubeadm-init.yml file

Dump kubeadm's default configuration into a yml file

root@k8s-master1:~# kubeadm config print init-defaults > kubeadm-init.yml

W0606 16:11:52.170765 79373 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0606 16:11:52.170940 79373 validation.go:28] Cannot validate kubelet config - no validator is available

In the yml file, modify advertiseAddress, dnsDomain, serviceSubnet and imageRepository, and add controlPlaneEndpoint and podSubnet

root@k8s-master1:~# cat kubeadm-init.yml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 48h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.203.124.230
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master1.linux.com
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 10.203.124.238:6443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.17.2
networking:
  dnsDomain: linux.com
  podSubnet: 10.10.0.0/16
  serviceSubnet: 172.16.0.0/16
scheduler: {}

Initialize the cluster from the yml file

root@k8s-master1:~# kubeadm init --config kubeadm-init.yml --ignore-preflight-errors=swap

Output

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 10.203.124.238:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:c5ae436a1bd5d7c128f7db7060d51551d8ee3903c35dd16d780c38653f937a06 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.203.124.238:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:c5ae436a1bd5d7c128f7db7060d51551d8ee3903c35dd16d780c38653f937a06

Configure the kubeconfig file

The kubeconfig file contains the kube-apiserver address and the related credentials

Configure the kubeconfig file on master1

root@k8s-master1:~# mkdir -p $HOME/.kube

root@k8s-master1:~# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@k8s-master1:~# chown $(id -u):$(id -g) $HOME/.kube/config

Until the flannel network add-on is deployed, kubectl get nodes shows the master as NotReady

List the cluster nodes

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com NotReady master 22m v1.17.2

Deploy the flannel network add-on

https://github.com/coreos/flannel/

Prepare the kube-flannel.yml file

Download https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml to master1

[root@JevonWei ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

root@k8s-master1:~# ls kube-flannel.yml

kube-flannel.yml

In kube-flannel.yml, set the pod network to the value passed to kubeadm init as --pod-network-cidr=10.10.0.0/16

root@k8s-master1:~# cat kube-flannel.yml

  net-conf.json: |
    {
      "Network": "10.10.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
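The upstream kube-flannel.yml ships with "Network": "10.244.0.0/16". If that value is not changed to match podSubnet, pod routing breaks in ways that are hard to trace. This is a sketch of a hypothetical pre-apply check, flannel_matches_podsubnet, that compares the two files. It is a line-based grep/sed check, not a YAML parser. It assumes each value sits on its own line, as in the files above.

```shell
#!/usr/bin/env bash
# flannel_matches_podsubnet KUBEADM_YML FLANNEL_YML
# Prints both values and returns 0 only if podSubnet equals flannel's Network.
flannel_matches_podsubnet() {
  local pod flannel
  pod="$(sed -n 's/^[[:space:]]*podSubnet:[[:space:]]*\([^[:space:]]*\).*/\1/p' "$1" | head -1)"
  flannel="$(sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$2" | head -1)"
  echo "podSubnet=${pod:-<unset>} flannel=${flannel:-<unset>}"
  [[ -n "$pod" && "$pod" == "$flannel" ]]
}
```

Usage: flannel_matches_podsubnet kubeadm-init.yml kube-flannel.yml && kubectl apply -f kube-flannel.yml.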

Prepare the flannel image

Applying kube-flannel.yml pulls the quay.io/coreos/flannel:v0.12.0-amd64 image. If the image cannot be pulled directly from quay.io, obtain it another way and load it onto all the masters first

root@k8s-master1:~/images# docker load < flannel.tar

256a7af3acb1: Loading layer [==================================================>] 5.844MB/5.844MB

d572e5d9d39b: Loading layer [==================================================>] 10.37MB/10.37MB

57c10be5852f: Loading layer [==================================================>] 2.249MB/2.249MB

7412f8eefb77: Loading layer [==================================================>] 35.26MB/35.26MB

05116c9ff7bf: Loading layer [==================================================>] 5.12kB/5.12kB

Loaded image: quay.io/coreos/flannel:v0.12.0-amd64

root@k8s-master1:~/images# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/coreos/flannel v0.12.0-amd64 4e9f801d2217 2 months ago 52.8MB

Deploy flannel

Apply kube-flannel.yml on master1

root@k8s-master1:~# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

Check node status

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com Ready master 3h25m v1.17.2

k8s-master2.linux.com Ready master 164m v1.17.2

k8s-master3.linux.com Ready master 159m v1.17.2

Add master nodes

On master1, upload the certificates used to join new control-plane nodes

root@k8s-master1:~# kubeadm init phase upload-certs --upload-certs

I0606 16:38:29.449089 84654 version.go:251] remote version is much newer: v1.18.3; falling back to: stable-1.17

W0606 16:38:34.434978 84654 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0606 16:38:34.435026 84654 validation.go:28] Cannot validate kubelet config - no validator is available

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

de15087cf5e3e5986c5e1577f9d041ed02f95ceb911a20d41eba792b9afc507c

Join the new masters

On master2 and master3, which already have docker, kubeadm and kubelet installed, run the following:

root@k8s-master2:~# kubeadm join 10.203.124.238:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:c5ae436a1bd5d7c128f7db7060d51551d8ee3903c35dd16d780c38653f937a06 \

--control-plane --certificate-key de15087cf5e3e5986c5e1577f9d041ed02f95ceb911a20d41eba792b9afc507c --ignore-preflight-errors=swap

The key passed to --certificate-key is the "Using certificate key" value printed by kubeadm init phase upload-certs --upload-certs in the previous step
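The control-plane and worker join commands differ only in their extra flags, so they can be assembled from the token, CA hash and certificate key printed above. This is a minimal sketch with a hypothetical join_cmd helper that prints the command rather than running it. It keeps the --ignore-preflight-errors=swap flag used throughout this lab.

```shell
#!/usr/bin/env bash
# join_cmd ENDPOINT TOKEN CA_HASH [CERT_KEY]
# Prints a worker join command, or a control-plane join command when a
# certificate key is supplied. CA_HASH may be given with or without "sha256:".
join_cmd() {
  local endpoint="$1" token="$2" hash="$3" cert_key="${4:-}"
  local cmd="kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash sha256:${hash#sha256:}"
  if [[ -n "$cert_key" ]]; then
    cmd+=" --control-plane --certificate-key ${cert_key}"
  fi
  # swap stays enabled in this lab, so the swap preflight error is ignored
  echo "${cmd} --ignore-preflight-errors=swap"
}
```

The kubeadm-certs Secret and its certificate key expire after two hours. If a later master join cannot download the certificates, rerun kubeadm init phase upload-certs --upload-certs for a fresh key. For workers, kubeadm token create --print-join-command prints a complete join command with a new token.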

kubeadm join output

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

Check node status on master1

Run kubectl get nodes on master1

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com NotReady master 46m v1.17.2

k8s-master2.linux.com NotReady master 6m18s v1.17.2

k8s-master3.linux.com NotReady master 36s v1.17.2

Once the quay.io/coreos/flannel image is available on the master nodes, STATUS changes from NotReady to Ready

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com Ready master 3h25m v1.17.2

k8s-master2.linux.com Ready master 164m v1.17.2

k8s-master3.linux.com Ready master 159m v1.17.2

Add worker nodes

Run kubeadm join on every node to join the k8s cluster as a worker node

root@node1:~# kubeadm join 10.203.124.238:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:c5ae436a1bd5d7c128f7db7060d51551d8ee3903c35dd16d780c38653f937a06 --ignore-preflight-errors=swap

Output:

W0606 15:47:49.095318 5123 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Swap]: running with swap on is not supported. Please disable swap

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check the worker node status

After node1 joins, if kubectl get nodes on master1 shows the node STATUS as NotReady, the quay.io/coreos/flannel:v0.12.0-amd64 image also has to be loaded onto the node manually

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com Ready master 3h45m v1.17.2

k8s-master2.linux.com Ready master 3h5m v1.17.2

k8s-master3.linux.com Ready master 179m v1.17.2

node1.linux.com NotReady 3m58s v1.17.2

node2.linux.com NotReady 2m30s v1.17.2

node3.linux.com NotReady 2m24s v1.17.2

Copy the flannel image from a master to the worker nodes manually and load it on each node

root@node3:~# docker load < images/flannel.tar

256a7af3acb1: Loading layer [==================================================>] 5.844MB/5.844MB

d572e5d9d39b: Loading layer [==================================================>] 10.37MB/10.37MB

57c10be5852f: Loading layer [==================================================>] 2.249MB/2.249MB

7412f8eefb77: Loading layer [==================================================>] 35.26MB/35.26MB

05116c9ff7bf: Loading layer [==================================================>] 5.12kB/5.12kB

Loaded image: quay.io/coreos/flannel:v0.12.0-amd64

root@node3:~# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/coreos/flannel v0.12.0-amd64 4e9f801d2217 2 months ago 52.8MB
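Copying the tarball to three nodes by hand is repetitive. This sketch uses a hypothetical distribute_image function that exports the image once on master1 and loads it on each node over SSH. It assumes passwordless root SSH from master1 to the nodes and that the node hostnames from the host table resolve. The /root/images path is an assumption. DRY_RUN=1 prints the commands instead of running them.

```shell
#!/usr/bin/env bash
IMAGE="quay.io/coreos/flannel:v0.12.0-amd64"
TAR="/root/images/flannel.tar"      # assumed staging path on every host

# distribute_image HOST...: docker save once, then scp + docker load per host.
distribute_image() {
  local run="" host
  [[ "${DRY_RUN:-0}" == 1 ]] && run="echo"
  $run docker save -o "$TAR" "$IMAGE" || return 1
  for host in "$@"; do
    $run ssh "root@${host}" mkdir -p "$(dirname "$TAR")" || return 1
    $run scp "$TAR" "root@${host}:${TAR}" || return 1
    $run ssh "root@${host}" "docker load -i ${TAR}" || return 1
  done
}
```

Usage: distribute_image node1.linux.com node2.linux.com node3.linux.com. A design alternative that fits the Harbor registry used later in this document: push the image to Harbor once and point the image: fields in kube-flannel.yml at it, so every node pulls the image itself.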

On master1, confirm again that all nodes are Ready

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com Ready master 3h52m v1.17.2

k8s-master2.linux.com Ready master 3h11m v1.17.2

k8s-master3.linux.com Ready master 3h6m v1.17.2

node1.linux.com Ready 10m v1.17.2

node2.linux.com Ready 9m1s v1.17.2

node3.linux.com Ready 8m55s v1.17.2
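Rather than rerunning kubectl get nodes by eye, the NotReady nodes can be listed from the STATUS column, which is the second field. This is a sketch with hypothetical helpers: not_ready_nodes parses the table, and wait_nodes_ready polls until the list is empty or a timeout expires. The 300-second default and 10-second interval are assumed values. kubectl wait --for=condition=Ready nodes --all --timeout=300s is a built-in alternative for the waiting part.

```shell
#!/usr/bin/env bash
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the
# names whose STATUS column (2nd field) is anything other than Ready.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# wait_nodes_ready [TIMEOUT_SECONDS]: poll every 10s until all nodes are Ready.
wait_nodes_ready() {
  local deadline=$(( SECONDS + ${1:-300} )) pending
  while (( SECONDS < deadline )); do
    pending="$(kubectl get nodes | not_ready_nodes)"
    [[ -z "$pending" ]] && return 0
    echo "still NotReady: $(echo $pending)"
    sleep 10
  done
  return 1
}
```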

Testing

Verify master status

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com Ready master 5h29m v1.17.2

k8s-master2.linux.com Ready master 5h24m v1.17.2

k8s-master3.linux.com Ready master 5h23m v1.17.2

node1.linux.com Ready 5h23m v1.17.2

node2.linux.com Ready 5h22m v1.17.2

node3.linux.com Ready 5h22m v1.17.2

Verify k8s cluster status

root@k8s-master1:~# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

Current CSR status

root@k8s-master1:~# kubectl get csr

Test pod-to-pod networking

Create containers in the k8s cluster and test network connectivity

Create alpine pods

root@k8s-master1:~# kubectl run net-test1 --image=alpine --replicas=3 sleep 360000

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/net-test1 created

The pods have been created

root@k8s-master1:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

net-test1-5fcc69db59-7s5nc 1/1 Running 0 42s

net-test1-5fcc69db59-9v95p 1/1 Running 0 42s

net-test1-5fcc69db59-vq5wq 1/1 Running 0 42s

root@k8s-master1:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1-5fcc69db59-7s5nc 1/1 Running 0 77s 10.10.3.2 node1.linux.com

net-test1-5fcc69db59-9v95p 1/1 Running 0 77s 10.10.4.2 node2.linux.com

net-test1-5fcc69db59-vq5wq 1/1 Running 0 77s 10.10.5.2 node3.linux.com

Test communication between pods and the external network

The container can reach both the external network and the other pods

root@k8s-master1:~# kubectl exec -it net-test1-5fcc69db59-9v95p sh

/ # ifconfig

eth0 Link encap:Ethernet HWaddr FA:37:E5:7B:61:BA

inet addr:10.10.4.2 Bcast:0.0.0.0 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:26 errors:0 dropped:0 overruns:0 frame:0

TX packets:1 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1968 (1.9 KiB) TX bytes:42 (42.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # ping 10.10.3.2

PING 10.10.3.2 (10.10.3.2): 56 data bytes

64 bytes from 10.10.3.2: seq=0 ttl=62 time=1.934 ms

64 bytes from 10.10.3.2: seq=1 ttl=62 time=0.402 ms

/ # ping www.baidu.com

PING www.baidu.com (36.152.44.95): 56 data bytes

64 bytes from 36.152.44.95: seq=0 ttl=55 time=9.423 ms
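The manual test above pings one pod from one other pod. With one pod per node, pinging every pod from every pod covers all node-to-node flannel paths. This is a sketch with hypothetical helpers: pod_ips reads the IP column (6th field) of kubectl get pod -o wide, and ping_mesh runs one ping for each pod and IP pair through kubectl exec. It relies on the busybox ping in the alpine image accepting -c and -W.

```shell
#!/usr/bin/env bash
# pod_ips PREFIX: read `kubectl get pod -o wide` on stdin and print the IP
# (6th column) of each Running pod whose name starts with PREFIX.
pod_ips() {
  awk -v p="$1" 'NR > 1 && index($1, p) == 1 && $3 == "Running" { print $6 }'
}

# ping_mesh PREFIX: from every matching pod, ping every matching pod IP once.
ping_mesh() {
  local listing pods ips pod ip rc=0
  listing="$(kubectl get pod -o wide)"
  pods="$(awk -v p="$1" 'NR > 1 && index($1, p) == 1 && $3 == "Running" { print $1 }' <<<"$listing")"
  ips="$(pod_ips "$1" <<<"$listing")"
  for pod in $pods; do
    for ip in $ips; do
      kubectl exec "$pod" -- ping -c 1 -W 2 "$ip" >/dev/null \
        || { echo "FAIL $pod -> $ip"; rc=1; }
    done
  done
  return "$rc"
}
```

Usage: ping_mesh net-test1 prints nothing and returns 0 when every pod can reach every other pod.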

Deploy the dashboard

Push the dashboard image to the Harbor registry

Pull the dashboard image

root@k8s-master1:~# docker pull kubernetesui/dashboard:v2.0.0-rc6

v2.0.0-rc6: Pulling from kubernetesui/dashboard

1f45830e3050: Pull complete

Digest: sha256:61f9c378c427a3f8a9643f83baa9f96db1ae1357c67a93b533ae7b36d71c69dc

Status: Downloaded newer image for kubernetesui/dashboard:v2.0.0-rc6

docker.io/kubernetesui/dashboard:v2.0.0-rc6

root@k8s-master1:~# docker pull kubernetesui/metrics-scraper:v1.0.3

v1.0.3: Pulling from kubernetesui/metrics-scraper

75d12d4b9104: Pull complete

fcd66fda0b81: Pull complete

53ff3f804bbd: Pull complete

Digest: sha256:40f1d5785ea66609b1454b87ee92673671a11e64ba3bf1991644b45a818082ff

Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.3

docker.io/kubernetesui/metrics-scraper:v1.0.3

root@k8s-master1:~# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

harbor.linux.com/baseimages/dashboard v2.0.0-rc6 cdc71b5a8a0e 2 months ago 221MB

kubernetesui/dashboard v2.0.0-rc6 cdc71b5a8a0e 2 months ago 221MB

quay.io/coreos/flannel v0.12.0-amd64 4e9f801d2217 2 months ago 52.8MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.17.3 ae853e93800d 3 months ago 116MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.17.3 b0f1517c1f4b 3 months ago 161MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.17.3 90d27391b780 3 months ago 171MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.17.3 d109c0821a2b 3 months ago 94.4MB

kubernetesui/metrics-scraper v1.0.3 3327f0dbcb4a 4 months ago 40.1MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.17.2 cba2a99699bd 4 months ago 116MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.17.2 41ef50a5f06a 4 months ago 171MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.17.2 da5fd66c4068 4 months ago 161MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.17.2 f52d4c527ef2 4 months ago 94.4MB

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns 1.6.5 70f311871ae1 7 months ago 41.6MB

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 7 months ago 288MB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.1 da86e6ba6ca1 2 years ago 742kB

Push the images to Harbor

Edit the hosts file on every master and node so that the Harbor host resolves:

root@k8s-master1:~# cat /etc/hosts | grep harbor

10.203.124.236 harbor harbor.linux.com
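Because the hosts entry must be present on every master and node, an idempotent helper saves you from adding it twice. The following sketch uses the IP and names from this document. The function name ensure_hosts_entry is hypothetical.

```shell
#!/bin/sh
# Append "IP NAMES" to a hosts file unless the last name is already present.
# Defaults to /etc/hosts; pass a third argument to work on another file.
ensure_hosts_entry() {
  ip=$1
  names=$2
  file=${3:-/etc/hosts}
  last_name=${names##* }            # e.g. "harbor.linux.com"
  # -q quiet, -w whole word, -F fixed string (the dots are not regex wildcards)
  if ! grep -qwF -- "$last_name" "$file"; then
    printf '%s %s\n' "$ip" "$names" >> "$file"
  fi
}
```

Usage, as root on each host: `ensure_hosts_entry 10.203.124.236 "harbor harbor.linux.com"`. Running it a second time leaves the file unchanged.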

Allow docker to use HTTP for the registry

On every host, add --insecure-registry harbor.linux.com to the docker startup arguments so that docker talks to this registry over plain HTTP:

root@k8s-master1:~# dockerd --help | grep ins

--authorization-plugin list Authorization plugins to load

--dns-search list DNS search domains to use

--insecure-registry list Enable insecure registry communication

root@k8s-master1:~# cat /lib/systemd/system/docker.service | grep ExecStart

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --insecure-registry harbor.linux.com

Reload systemd and restart docker to apply the change:

root@k8s-master1:~# systemctl daemon-reload

root@k8s-master1:~# systemctl restart docker
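As an alternative to editing ExecStart, dockerd also reads an "insecure-registries" list from /etc/docker/daemon.json. Use only one of the two methods: dockerd refuses to start if the same option is set both as a flag and in daemon.json. The sketch below refuses to overwrite an existing daemon.json, because that file may already hold other settings. The function name write_daemon_json is hypothetical.

```shell
#!/bin/sh
# Write a minimal daemon.json that marks one registry as insecure (HTTP).
# Usage: write_daemon_json [PATH] [REGISTRY]
write_daemon_json() {
  target=${1:-/etc/docker/daemon.json}
  registry=${2:-harbor.linux.com}
  if [ -e "$target" ]; then
    echo "refusing to overwrite existing $target; merge insecure-registries by hand" >&2
    return 1
  fi
  mkdir -p "$(dirname "$target")"
  printf '{\n  "insecure-registries": ["%s"]\n}\n' "$registry" > "$target"
}
```

After writing the file, remove --insecure-registry from ExecStart and run `systemctl daemon-reload && systemctl restart docker`.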

Retag the dashboard images with their Harbor repository names:

root@k8s-master1:~# docker tag 3327f0dbcb4a harbor.linux.com/baseimages/metrics-scraper:v1.0.3

root@k8s-master1:~# docker tag cdc71b5a8a0e harbor.linux.com/baseimages/dashboard:v2.0.0-rc6

root@k8s-master1:~# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

kubernetesui/dashboard v2.0.0-rc6 cdc71b5a8a0e 2 months ago 221MB

harbor.linux.com/baseimages/dashboard v2.0.0-rc6 cdc71b5a8a0e 2 months ago 221MB

Log in to Harbor and push the images:

root@k8s-master1:~# docker login harbor.linux.com

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@k8s-master1:~# docker push harbor.linux.com/baseimages/dashboard:v2.0.0-rc6

The push refers to repository [harbor.linux.com/baseimages/dashboard]

f6419c845e04: Pushed

v2.0.0-rc6: digest: sha256:7d7273c38f37c62375bb8262609b746f646da822dc84ea11710eed7082482b12 size: 529

root@k8s-master1:~# docker push harbor.linux.com/baseimages/metrics-scraper:v1.0.3

The push refers to repository [harbor.linux.com/baseimages/metrics-scraper]

4e247d9378a1: Pushed

0aec45b843c5: Pushed

3ebaca24781b: Pushed

v1.0.3: digest: sha256:e24a74b3b1cdc84d6285d507a12eb06907fd8c457b3e8ae9baa9418eca43efc4 size: 946
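The same pull, tag, and push cycle is repeated for every image in this document. The following sketch wraps it in a loop. It assumes the Harbor project harbor.linux.com/baseimages and keeps only the last path component of the upstream name. Both function names are hypothetical, and the sketch tags by name rather than by image ID.

```shell
#!/bin/sh
# Harbor project used in this document; override via the environment if needed.
HARBOR_PREFIX=${HARBOR_PREFIX:-harbor.linux.com/baseimages}

# kubernetesui/dashboard:v2.0.0-rc6 -> harbor.linux.com/baseimages/dashboard:v2.0.0-rc6
to_harbor() {
  printf '%s/%s\n' "$HARBOR_PREFIX" "${1##*/}"
}

# Pull, retag and push each image given as an argument (run docker login first).
mirror_to_harbor() {
  for src in "$@"; do
    dst=$(to_harbor "$src")
    docker pull "$src" && docker tag "$src" "$dst" && docker push "$dst" || return 1
  done
}
```

Usage: `mirror_to_harbor kubernetesui/dashboard:v2.0.0-rc6 kubernetesui/metrics-scraper:v1.0.3`.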

Pull the image from Harbor

On a node, pull the image from Harbor to confirm that it works:

root@node1:~# docker pull harbor.linux.com/baseimages/dashboard:v2.0.0-rc6

v2.0.0-rc6: Pulling from baseimages/dashboard

1f45830e3050: Pull complete

Digest: sha256:7d7273c38f37c62375bb8262609b746f646da822dc84ea11710eed7082482b12

Status: Downloaded newer image for harbor.linux.com/baseimages/dashboard:v2.0.0-rc6

harbor.linux.com/baseimages/dashboard:v2.0.0-rc6

Deploy the dashboard

Prepare the YAML files

Prepare dashboard-2.0.0-rc6.yml with the images pointing at the Harbor registry and the dashboard Service exposed as NodePort 30002:

root@k8s-master1:~# cat dashboard-2.0.0-rc6.yml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30002
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: harbor.linux.com/baseimages/dashboard:v2.0.0-rc6
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: harbor.linux.com/baseimages/metrics-scraper:v1.0.3
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}
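The image lines are the only part of the upstream v2.0.0-rc6 manifest that need rewriting for Harbor. (The Service type and nodePort 30002 still have to be edited by hand.) The rewrite can be scripted with sed. This sketch assumes the upstream image lines have the form "image: kubernetesui/<name>:<tag>". The local file name recommended.yaml used in the usage line is an assumption.

```shell
#!/bin/sh
# Rewrite "image: kubernetesui/<name>:<tag>" to the Harbor project used here.
# Reads a manifest on stdin and writes the rewritten manifest to stdout.
point_images_at_harbor() {
  sed -E 's#(image:[[:space:]]*)kubernetesui/#\1harbor.linux.com/baseimages/#'
}
```

Usage: `point_images_at_harbor < recommended.yaml > dashboard-2.0.0-rc6.yml`, then add `type: NodePort` and `nodePort: 30002` to the kubernetes-dashboard Service.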

Write the admin-user.yml RBAC file, which creates an admin-user ServiceAccount bound to the cluster-admin ClusterRole:

root@k8s-master1:~# cat admin-user.yml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

Deploy

root@k8s-master1:~# kubectl apply -f dashboard-2.0.0-rc6.yml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

root@k8s-master1:~# kubectl apply -f admin-user.yml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

List the cluster services. The dashboard Service is exposed on NodePort 30002:

root@k8s-master1:~# kubectl get service -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 172.16.0.1 443/TCP 16m

kube-system kube-dns ClusterIP 172.16.0.10 53/UDP,53/TCP,9153/TCP 16m

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 172.16.213.211 8000/TCP 65s

kubernetes-dashboard kubernetes-dashboard NodePort 172.16.65.18 443:30002/TCP 66s

Every master and node listens on the dashboard port 30002, because kube-proxy opens the NodePort on every node:

root@node1:~# ss -ntl | grep 30002

LISTEN 0 128 *:30002 *:*

In a browser, open port 30002 on any node over HTTPS to log in (for example https://10.203.124.233:30002).
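To look up the NodePort in a script instead of by eye, parse the PORT(S) column (for example "443:30002/TCP") of `kubectl get service -A`. The sketch below assumes that column is the second-to-last field, which holds whether EXTERNAL-IP prints as `<none>` or is blank as in the listing above. kubectl can also return the value directly: `kubectl -n kubernetes-dashboard get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'`.

```shell
#!/bin/sh
# From `kubectl get service -A` output on stdin, print the NodePort of the service
# whose NAME (2nd column) equals $1.
node_port_of() {
  awk -v svc="$1" '$2 == svc {
    split($(NF - 1), p, "[:/]")   # "443:30002/TCP" -> p[1]=443 p[2]=30002 p[3]=TCP
    print p[2]
  }'
}
```

Usage: `port=$(kubectl get service -A | node_port_of kubernetes-dashboard)`, then check a node with `ss -ntl | grep ":$port"`.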

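Log in to the dashboard with a token

On a master node, find the admin-user token secret in the kubernetes-dashboard namespace and copy its token. Then paste the token into the dashboard login page in the browser: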

root@k8s-master1:~# kubectl get secret -A | grep admin

kubernetes-dashboard admin-user-token-t8fjg kubernetes.io/service-account-token 3 7m9s

root@k8s-master1:~# kubectl describe secret admin-user-token-t8fjg -n kubernetes-dashboard

Name: admin-user-token-t8fjg

Namespace: kubernetes-dashboard

Labels:

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: c1d4a1b1-5082-447c-b29b-f0730290ae9f

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkpiYks5aGpXUV84eHV2ZElGRVZ4emxuNEUyWl9PbUU0WkIzNk1tRjVNZHcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXQ4ZmpnIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJjMWQ0YTFiMS01MDgyLTQ0N2MtYjI5Yi1mMDczMDI5MGFlOWYiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.RN5AFQI_gZ5IxwHQ-yfkfyQDL4UXmyFSq8gfi_JAEUBNvKW-jEeIVri2ZF8O1PqJpkoiKouVxdgc3MwJDdBpszxFv1bub0GeMdKidiJ4hifo1QJOHV8V84B51PshFpMvDLYpX_JkK40tiYnjH5CnRrNgeM-SalOo-QlfpbK1mDyBostsuz8-9lOJGXqOZLv6TZPlBfA31rcfmo2G7eDreE4kR61EhW2q5pYmEgmATIBJwAZXSy0-W3oIvSwxekXzgGByzrObmczpW_wdcP81T83Uzhhz5cst1n3xUhP8a-WepeiNfHQbh46UK310KpqivLEEcDS6Aa1HdQTxpv1aKA

In the browser, log in to kubernetes-dashboard with this token.
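Copying the token from the describe output by hand is error-prone. The sketch below extracts the token field instead. It relies on the Kubernetes 1.17 behaviour shown above, where each ServiceAccount automatically gets a "<name>-token-xxxxx" secret. Both function names are hypothetical.

```shell
#!/bin/sh
# Print the value of the "token:" field from `kubectl describe secret` output on stdin.
token_from_describe() {
  awk '$1 == "token:" { print $2 }'
}

# On a live cluster: locate the admin-user token secret and print its token.
admin_user_token() {
  secret=$(kubectl -n kubernetes-dashboard get secret -o name | grep admin-user-token | head -n 1)
  kubectl -n kubernetes-dashboard describe "$secret" | token_from_describe
}
```

Usage: `admin_user_token`. The output is the single line to paste into the login page.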

Log in to the Dashboard with a kubeconfig file

Look up the admin-user token again, exactly as in the previous section.


Make a copy of the kube config file to modify:

root@k8s-master1:~# cp /root/.kube/config /opt/kubeconfig

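Add the token to the copied file as a line "token: <token>" in the kubernetes-admin user entry, after client-key-data. Then save the kubeconfig on your local computer and select it on the dashboard login page each time you log in.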
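The token edit can be scripted. The sketch below assumes the layout of the kubeadm-generated admin config, where the user's credentials end with a `client-key-data:` line indented by four spaces. It does not check whether a token line already exists, so run it only once per file. A kubectl-native alternative that should produce an equivalent result is `kubectl config set-credentials kubernetes-admin --token="$TOKEN" --kubeconfig=/opt/kubeconfig`.

```shell
#!/bin/sh
# Insert "token: <TOKEN>" right after the client-key-data line of the user entry.
# Usage: add_token_to_kubeconfig FILE TOKEN   (edits FILE in place via a temp file)
add_token_to_kubeconfig() {
  file=$1
  token=$2
  tmp=$(mktemp) || return 1
  awk -v tok="$token" '
    { print }
    /^    client-key-data:/ { print "    token: " tok }
  ' "$file" > "$tmp" && mv "$tmp" "$file"
}
```

Usage: `add_token_to_kubeconfig /opt/kubeconfig "$(admin_user_token)"`, or paste the token string directly.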

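K8s cluster upgrade

Upgrade k8s from 1.17.2 to 1.17.4.

Before the k8s cluster can be upgraded, kubeadm must first be upgraded to the target k8s version. In other words, kubeadm is the "upgrade permit" for k8s.

Upgrade the k8s master services

Upgrade every master. This upgrades the control-plane services kube-controller-manager, kube-apiserver, kube-scheduler, and kube-proxy.

Verify the current k8s version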

root@k8s-master1:~# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.2", GitCommit:"59603c6e503c87169aea6106f57b9f242f64df89", GitTreeState:"clean", BuildDate:"2020-01-18T23:27:49Z", GoVersion:"go1.13.5", Compiler:"gc", Platform:"linux/amd64"}

Install the target kubeadm version on each master

List the available kubeadm versions:

root@k8s-master1:~# apt-cache madison kubeadm

kubeadm | 1.18.3-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.18.2-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.18.1-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.18.0-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.17.6-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.17.5-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.17.4-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.17.3-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages
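Before installing a pinned package, you can confirm that the repository actually offers that exact version. The sketch below parses the "|"-separated madison output shown above. The function name madison_has_version is hypothetical.

```shell
#!/bin/sh
# Succeed if `apt-cache madison <pkg>` output on stdin lists version $1 (e.g. 1.17.4-00).
madison_has_version() {
  awk -F'|' -v want="$1" '
    { v = $2; gsub(/[ \t]/, "", v); if (v == want) found = 1 }
    END { exit (found ? 0 : 1) }'
}
```

Usage: `apt-cache madison kubeadm | madison_has_version 1.17.4-00 && apt-get install -y kubeadm=1.17.4-00`. The official kubeadm upgrade guide also runs `apt-mark unhold kubeadm` before the install and `apt-mark hold kubeadm` after it, so that routine `apt upgrade` runs do not move the version.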

Install the new kubeadm on each master:

root@k8s-master1:~# apt-get install kubeadm=1.17.4-00

Verify the kubeadm version:

root@k8s-master1:~# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.4", GitCommit:"8d8aa39598534325ad77120c120a22b3a990b5ea", GitTreeState:"clean", BuildDate:"2020-03-12T21:01:11Z", GoVersion:"go1.13.8", Compiler:"gc", Platform:"linux/amd64"}

kubeadm upgrade command help

root@k8s-master1:~# kubeadm upgrade --help

Upgrade your cluster smoothly to a newer version with this command

Usage:

kubeadm upgrade [flags]

kubeadm upgrade [command]

Available Commands:

apply Upgrade your Kubernetes cluster to the specified version

diff Show what differences would be applied to existing static pod manifests. See also: kubeadm upgrade apply --dry-run

node Upgrade commands for a node in the cluster

plan Check which versions are available to upgrade to and validate whether your current cluster is upgradeable. To skip the internet check, pass in the optional [version] parameter

Flags:

-h, --help help for upgrade

Global Flags:

--add-dir-header If true, adds the file directory to the header

--log-file string If non-empty, use this log file

--log-file-max-size uint Defines the maximum size a log file can grow to. Unit is megabytes. If the value is 0, the maximum file size is unlimited. (default 1800)

--rootfs string [EXPERIMENTAL] The path to the 'real' host root filesystem.

--skip-headers If true, avoid header prefixes in the log messages

--skip-log-headers If true, avoid headers when opening log files

-v, --v Level number for the log level verbosity

Use "kubeadm upgrade [command] --help" for more information about a command.

View the upgrade plan

root@k8s-master1:~# kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.17.2

[upgrade/versions] kubeadm version: v1.17.4

I0607 11:59:53.319376 33051 version.go:251] remote version is much newer: v1.18.3; falling back to: stable-1.17

[upgrade/versions] Latest stable version: v1.17.6

[upgrade/versions] Latest version in the v1.17 series: v1.17.6

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 6 x v1.17.2 v1.17.6

Upgrade to the latest version in the v1.17 series:

COMPONENT CURRENT AVAILABLE

API Server v1.17.2 v1.17.6

Controller Manager v1.17.2 v1.17.6

Scheduler v1.17.2 v1.17.6

Kube Proxy v1.17.2 v1.17.6

CoreDNS 1.6.5 1.6.5

Etcd 3.4.3 3.4.3-0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.17.6

Note: Before you can perform this upgrade, you have to update kubeadm to v1.17.6.

_____________________________________________________________________

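Master upgrade

Upgrading the non-primary masters

Before upgrading, edit the haproxy load-balancing configuration so that no API requests are sent to the masters being upgraded. Here master2 and master3 are commented out: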

root@ha1:~# cat /etc/haproxy/haproxy.cfg

listen k8s-api-6443

bind 10.203.124.238:6443

mode tcp

server master1 10.203.124.230:6443 check inter 3s fall 3 rise 5

#server master2 10.203.124.231:6443 check inter 3s fall 3 rise 5

#server master3 10.203.124.232:6443 check inter 3s fall 3 rise 5

root@ha1:~# systemctl restart haproxy.service
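The haproxy backend is edited twice during the upgrade: once before the other masters are upgraded and once before master1. The sketch below comments out or restores a single "server <name>" line with sed. It assumes GNU sed (for -i) and the server names used above. The function name haproxy_server is hypothetical.

```shell
#!/bin/sh
# Comment out (disable) or restore (enable) a "server <name> ..." line in haproxy.cfg.
# Usage: haproxy_server disable|enable NAME [FILE]
haproxy_server() {
  action=$1
  name=$2
  file=${3:-/etc/haproxy/haproxy.cfg}
  case $action in
    disable) sed -i -E "s/^([[:space:]]*)server[[:space:]]+$name[[:space:]]/\1#server $name /" "$file" ;;
    enable)  sed -i -E "s/^([[:space:]]*)#server[[:space:]]+$name[[:space:]]/\1server $name /" "$file" ;;
    *) echo "usage: haproxy_server disable|enable NAME [FILE]" >&2; return 2 ;;
  esac
}
```

Usage: `haproxy_server disable master2`, then `haproxy -c -f /etc/haproxy/haproxy.cfg` to validate the file, and restart haproxy as above. The trailing whitespace in the pattern keeps master1 from also matching a hypothetical master10.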

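Upgrade master3 and master2

Upgrade master3 first, then master2, and master1 last. kubeadm upgrade plan suggests v1.17.6, but this document targets v1.17.4, so the version is passed explicitly. (The official kubeadm upgrade guide runs kubeadm upgrade apply only on the first control-plane node and kubeadm upgrade node on the remaining control-plane nodes.)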

root@k8s-master3:~# kubeadm upgrade apply v1.17.4

Upgrade the primary master (master1)

Preparation before upgrading master1

On master1, pull the v1.17.4 images in advance to shorten the upgrade itself:

root@k8s-master1:~# cat k8s-1.17.4-images-download.sh

#!/bin/bash

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.4

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.4

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.4

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.4

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5

Download the images:

root@k8s-master1:~# sh k8s-1.17.4-images-download.sh
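The download script hard-codes v1.17.4. The sketch below generates the same seven image references for a given v1.17.x version. The pause, etcd, and coredns tags are copied from the script above and match the kubeadm upgrade plan output for the 1.17 series; check them again for any other minor version. kubeadm can also print the authoritative list: `kubeadm config images list --kubernetes-version v1.17.4 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers`.

```shell
#!/bin/sh
REPO=${REPO:-registry.cn-hangzhou.aliyuncs.com/google_containers}

# Print the images pulled by k8s-1.17.4-images-download.sh, for version $1 (e.g. v1.17.4).
images_for() {
  ver=$1
  for c in kube-controller-manager kube-apiserver kube-scheduler kube-proxy; do
    echo "$REPO/$c:$ver"
  done
  echo "$REPO/pause:3.1"
  echo "$REPO/etcd:3.4.3-0"
  echo "$REPO/coredns:1.6.5"
}

# Pull them all; the loop stops at the first failed pull and returns non-zero.
pull_images_for() {
  images_for "$1" | while read -r img; do
    docker pull "$img" || exit 1
  done
}
```

Usage: `pull_images_for v1.17.4`.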

Modify the haproxy configuration

Before upgrading master1, edit haproxy.cfg again. Remove master1 from the backend and add master2 and master3 back into the haproxy backend group:

root@ha1:~# cat /etc/haproxy/haproxy.cfg

listen k8s-api-6443

bind 10.203.124.238:6443

mode tcp

#server master1 10.203.124.230:6443 check inter 3s fall 3 rise 5

server master2 10.203.124.231:6443 check inter 3s fall 3 rise 5

server master3 10.203.124.232:6443 check inter 3s fall 3 rise 5

root@ha1:~# systemctl restart haproxy.service

Upgrade master1

root@k8s-master1:~# kubeadm upgrade apply v1.17.4

Verify the images

Get the pod information on the hosts:

root@k8s-master1:~# kubectl get pod -A

Upgrade kubelet and kubectl on the masters

root@k8s-master1:~# apt-cache madison kubelet

root@k8s-master1:~# apt-cache madison kubectl

root@k8s-master1:~# apt install kubelet=1.17.4-00 kubectl=1.17.4-00

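Check the node information

The VERSION column of kubectl get nodes reports each node's kubelet version. If a master still shows the old version 1.17.2 after kubelet and kubectl have been upgraded, check the kubelet status (systemctl status kubelet). In this case the etcd pod had not been recreated. After the pod was deleted manually and the kubelet service was restarted, VERSION showed the upgraded version: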

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com Ready master 113m v1.17.2

k8s-master2.linux.com Ready master 110m v1.17.4

k8s-master3.linux.com Ready master 109m v1.17.2

node1.linux.com Ready 108m v1.17.2

node2.linux.com Ready 108m v1.17.2

node3.linux.com Ready 108m v1.17.2

root@k8s-master2:~# systemctl status kubelet

root@k8s-master1:~# kubectl get pod -A

root@k8s-master1:~# kubectl delete pod etcd-k8s-master3.linux.com -n kube-system

pod "etcd-k8s-master3.linux.com" deleted

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com Ready master 115m v1.17.4

k8s-master2.linux.com Ready master 111m v1.17.4

k8s-master3.linux.com Ready master 111m v1.17.4

node1.linux.com Ready 110m v1.17.2

node2.linux.com Ready 110m v1.17.2

node3.linux.com Ready 110m v1.17.2
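With six nodes, it is easy to miss one that is still on the old version. The sketch below lists every node whose VERSION differs from the target. It reads the last column, because ROLES can be empty for worker nodes, as in the listing above. The function name nodes_not_at is hypothetical.

```shell
#!/bin/sh
# From `kubectl get nodes` output on stdin, print the nodes whose VERSION
# (last column) is not $1.
nodes_not_at() {
  awk -v want="$1" 'NR > 1 && $NF != want { print $1 }'
}
```

Usage: `kubectl get nodes | nodes_not_at v1.17.4`. Empty output means every node reports the target version.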

Upgrade the k8s node services

Verify the current node versions

The worker nodes are still on the old version 1.17.2:

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com Ready master 115m v1.17.4

k8s-master2.linux.com Ready master 111m v1.17.4

k8s-master3.linux.com Ready master 111m v1.17.4

node1.linux.com Ready 110m v1.17.2

node2.linux.com Ready 110m v1.17.2

node3.linux.com Ready 110m v1.17.2

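Upgrade kubelet on each node

Run on each node (the official kubeadm guide upgrades the kubeadm package on the node before this step):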

root@node1:~# kubeadm upgrade node --kubelet-version 1.17.4

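Upgrade kubectl and kubelet on the nodes

kubectl and kubelet on the master nodes must be upgraded too, as done above. The command below installs the new kubelet and kubeadm on a node. If kubectl is installed there, add kubectl=1.17.4-00. Afterwards, restart kubelet (systemctl daemon-reload && systemctl restart kubelet) so that the node reports the new version.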

root@node1:~# apt install kubelet=1.17.4-00 kubeadm=1.17.4-00
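The official kubeadm upgrade guide also drains each worker before upgrading it and uncordons it afterwards. The sketch below only prints that per-node sequence, adapted to this document's package version, so it can be reviewed before anything runs. The function name plan_worker_upgrade is hypothetical. In 1.17, kubectl drain also needs --delete-local-data when pods with emptyDir volumes (such as the dashboard) run on the node.

```shell
#!/bin/sh
# Print (do not run) the upgrade sequence for one worker node.
# Usage: plan_worker_upgrade NODE [PKG_VERSION]
plan_worker_upgrade() {
  node=$1
  pkg=${2:-1.17.4-00}
  cat <<EOF
# on a master:
kubectl drain $node --ignore-daemonsets
# on $node:
apt-get install -y kubeadm=$pkg
kubeadm upgrade node
apt-get install -y kubelet=$pkg kubectl=$pkg
systemctl daemon-reload && systemctl restart kubelet
# on a master:
kubectl uncordon $node
EOF
}
```

Usage: `plan_worker_upgrade node1.linux.com`. Run the printed commands on the hosts named in the comments, one node at a time.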

验证node节点的版本

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.linux.com Ready master 131m v1.17.4

k8s-master2.linux.com Ready master 128m v1.17.4

k8s-master3.linux.com Ready master 127m v1.17.4

node1.linux.com Ready 126m v1.17.4

node2.linux.com Ready 126m v1.17.4

node3.linux.com Ready 126m v1.17.4

Test running Nginx + Tomcat

https://kubernetes.io/zh/docs/concepts/workloads/controllers/deployment/

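Run a test Nginx and separate static and dynamic content: Nginx serves the static pages and forwards dynamic requests to Tomcat.

Download the Nginx and Tomcat images and push them to Harbor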

Nginx

root@k8s-master1:~# docker pull nginx:1.14.2

1.14.2: Pulling from library/nginx

27833a3ba0a5: Pull complete

0f23e58bd0b7: Pull complete

8ca774778e85: Pull complete

Digest: sha256:f7988fb6c02e0ce69257d9bd9cf37ae20a60f1df7563c3a2a6abe24160306b8d

Status: Downloaded newer image for nginx:1.14.2

docker.io/library/nginx:1.14.2

root@k8s-master1:~# docker tag nginx:1.14.2 harbor.linux.com/baseimages/nginx:1.14.2

root@k8s-master1:~# docker push harbor.linux.com/baseimages/nginx:1.14.2

The push refers to repository [harbor.linux.com/baseimages/nginx]

82ae01d5004e: Pushed

b8f18c3b860b: Pushed

5dacd731af1b: Pushed

1.14.2: digest: sha256:706446e9c6667c0880d5da3f39c09a6c7d2114f5a5d6b74a2fafd24ae30d2078 size: 948

Tomcat (rebuild the Tomcat image with a Dockerfile)

root@k8s-master1:~# docker pull tomcat

Using default tag: latest

latest: Pulling from library/tomcat

376057ac6fa1: Pull complete

5a63a0a859d8: Pull complete

496548a8c952: Pull complete

2adae3950d4d: Pull complete

0a297eafb9ac: Pull complete

09a4142c5c9d: Pull complete

9e78d9befa39: Pull complete

18f492f90b9c: Pull complete

7834493ec6cd: Pull complete

216b2be21722: Pull complete

Digest: sha256:ce753be7b61d86f877fe5065eb20c23491f783f283f25f6914ba769fee57886b

Status: Downloaded newer image for tomcat:latest

docker.io/library/tomcat:latest

Starting from the pulled image, write a Dockerfile that adds an application directory (app) to Tomcat's webapps. Then rebuild the image and push it to Harbor:

root@k8s-master1:/usr/local/src/kubeadm# mkdir tomcat-dockerfile

root@k8s-master1:/usr/local/src/kubeadm# cd tomcat-dockerfile/

root@k8s-master1:/usr/local/src/kubeadm/tomcat-dockerfile# vim Dockerfile

FROM tomcat

ADD ./app /usr/local/tomcat/webapps/app/
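The Dockerfile ADDs ./app, so an app directory must exist in the build context. The document does not show what that directory contains. The sketch below creates a minimal context with a hypothetical placeholder index.html. The function name make_tomcat_context is also hypothetical.

```shell
#!/bin/sh
# Create a build context: ./Dockerfile plus ./app/index.html (placeholder page).
# Usage: make_tomcat_context [DIR]
make_tomcat_context() {
  dir=${1:-.}
  mkdir -p "$dir/app" || return 1
  printf 'FROM tomcat\nADD ./app /usr/local/tomcat/webapps/app/\n' > "$dir/Dockerfile"
  printf '<h1>tomcat app page</h1>\n' > "$dir/app/index.html"   # hypothetical content
}
```

Usage: `make_tomcat_context tomcat-dockerfile && docker build -t harbor.linux.com/danran/tomcat:app tomcat-dockerfile`. Tomcat deploys the directory as the /app context, so the page should be served at /app/.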

Build the image from the Dockerfile in the current directory:

root@k8s-master1:/usr/local/src/kubeadm/tomcat-dockerfile# docker build -t harbor.linux.com/danran/tomcat:app .

Sending build context to Docker daemon 3.584kB

Step 1/2 : FROM tomcat

---> 1b6b1fe7261e

Step 2/2 : ADD ./app /usr/local/tomcat/webapps/app/

---> 1438161fa122

Successfully built 1438161fa122

Successfully tagged harbor.linux.com/danran/tomcat:app

root@k8s-master1:/usr/local/src/kubeadm/tomcat-dockerfile# docker push harbor.linux.com/danran/tomcat:app

The push refers to repository [harbor.linux.com/danran/tomcat]

2b7a658772fe: Pushed

b0ac242ce8d3: Mounted from baseimages/tomcat

5e71d8e4cd3d: Mounted from baseimages/tomcat

eb4497d7dab7: Mounted from baseimages/tomcat

bfbfe00b44fc: Mounted from baseimages/tomcat

d39111fb2602: Mounted from baseimages/tomcat

155d997ed77c: Mounted from baseimages/tomcat

88cfc2fcd059: Mounted from baseimages/tomcat

760e8d95cf58: Mounted from baseimages/tomcat

7cc1c2d7e744: Mounted from baseimages/tomcat

8c02234b8605: Mounted from baseimages/tomcat

app: digest: sha256:68a9021fa5ed55c75d12abef17d55333d80bd299d867722b9dbd86a3799f8210 size: 2628

Run Nginx

root@k8s-master1:~# cd /usr/local/src/

root@k8s-master1:/usr/local/src# mkdir kubeadm

root@k8s-master1:/usr/local/src# cd kubeadm/

root@k8s-master1:/usr/local/src/kubeadm# mkdir nginx-yml

root@k8s-master1:/usr/local/src/kubeadm# cd nginx-yml/

nginx.yml points the image at the Harbor registry:

root@k8s-master1:/usr/local/src/kubeadm/nginx-yml# cat nginx.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: harbor.linux.com/baseimages/nginx:1.14.2
        ports:
        - containerPort: 80
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: danran-nginx-service-label
  name: danran-nginx-service
  namespace: default
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30004
  selector:
    app: nginx

root@k8s-master1:/usr/local/src/kubeadm/nginx-yml# kubectl apply -f nginx.yml

deployment.apps/nginx-deployment created

service/danran-nginx-service created

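For this test, replicas was set to 3. The file above shows replicas: 1, but the output below has three pods. Write different content into each nginx pod's index.html to observe which pod serves each request: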

root@k8s-master1:/usr/local/src/kubeadm/nginx-yml# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-c55f88b6b-5wkhr 1/1 Running 0 52s

nginx-deployment-c55f88b6b-g2kjs 1/1 Running 0 52s

nginx-deployment-c55f88b6b-z226z 1/1 Running 0 52s

root@k8s-master1:/usr/local/src/kubeadm/nginx-yml# kubectl exec -it nginx-deployment-c55f88b6b-5wkhr bash

root@nginx-deployment-c55f88b6b-5wkhr:/# ls /usr/share/nginx/html/

50x.html index.html

root@nginx-deployment-c55f88b6b-5wkhr:/# echo pod1 > /usr/share/nginx/html/index.html

root@nginx-deployment-c55f88b6b-5wkhr:/# exit

exit

root@k8s-master1:/usr/local/src/kubeadm/nginx-yml# kubectl exec -it nginx-deployment-c55f88b6b-g2kjs bash

root@nginx-deployment-c55f88b6b-g2kjs:/# echo pod2 > /usr/share/nginx/html/index.html

root@nginx-deployment-c55f88b6b-g2kjs:/# exit

exit

root@k8s-master1:/usr/local/src/kubeadm/nginx-yml# kubectl exec -it nginx-deployment-c55f88b6b-z226z bash

root@nginx-deployment-c55f88b6b-z226z:/# echo pod3 > /usr/share/nginx/html/index.html

root@nginx-deployment-c55f88b6b-z226z:/# exit

exit

In a browser, open any node's address on the Nginx NodePort 30004 to reach the nginx pods (for example http://10.203.124.233:30004).
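A browser tends to reuse one connection, so it may keep showing the same pod. Repeated curl requests give a clearer picture of how requests spread across pod1, pod2, and pod3. The sketch below counts the distinct responses. The split is not expected to be an exact round robin: it depends on the kube-proxy mode (in iptables mode the backend is chosen at random). The function names are hypothetical, and fetch can be overridden for testing.

```shell
#!/bin/sh
# Fetch a URL once (2-second timeout).
fetch() {
  curl -s --max-time 2 "$1"
}

# Request URL N times and print "count response" for each distinct response.
count_responses() {
  url=$1
  n=${2:-30}
  i=0
  while [ "$i" -lt "$n" ]; do
    fetch "$url"
    echo              # ensure each response ends with a newline
    i=$((i + 1))
  done | grep -v '^$' | sort | uniq -c
}
```

Usage: `count_responses http://10.203.124.233:30004/ 30`. The output should show counts for pod1, pod2, and pod3.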


Modify the haproxy configuration to add an Nginx reverse proxy

Add 10.203.124.239 to keepalived as the VIP for Nginx:

root@ha1:~# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 10.203.124.230

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER

interface ens33

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.203.124.238 dev ens33 label ens33:1

10.203.124.239 dev ens33 label ens33:1

}

}

root@ha1:~# systemctl restart keepalived.service

In haproxy, add a load-balanced reverse proxy for Nginx whose backends are the nodes' NodePort 30004:

root@ha1:~# cat /etc/haproxy/haproxy.cfg

listen k8s-nginx

bind 10.203.124.239:80

mode tcp

server master1 10.203.124.233:30004 check inter 3s fall 3 rise 5

server master2 10.203.124.234:30004 check inter 3s fall 3 rise 5

server master3 10.203.124.235:30004 check inter 3s fall 3 rise 5

Restart haproxy and confirm that 10.203.124.239:80 is listening:

root@ha1:~# systemctl restart haproxy.service

root@ha1:~# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 127.0.0.53%lo:53 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 10.203.124.238:6443 0.0.0.0:*

LISTEN 0 128 10.203.124.239:80 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

Run tomcat

root@k8s-master1:/usr/local/src/kubeadm/tomcat-dockerfile# cd ..

root@k8s-master1:/usr/local/src/kubeadm# mkdir tomcat-yml


root@k8s-master1:/usr/local/src/kubeadm# cd tomcat-yml/

root@k8s-master1:/usr/local/src/kubeadm/tomcat-yml# cp ../nginx-yml/nginx.yml tomcat.yml

root@k8s-master1:/usr/local/src/kubeadm/tomcat-yml# vim tomcat.yml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: tomcat-deployment

  labels:

    app: tomcat

spec:

  replicas: 1

  selector:

    matchLabels:

      app: tomcat

  template:

    metadata:

      labels:

        app: tomcat

    spec:

      containers:

      - name: tomcat

        image: harbor.linux.com/danran/tomcat:app

        ports:

        - containerPort: 8080

---

kind: Service

apiVersion: v1

metadata:

  labels:

    app: danran-tomcat-service-label

  name: danran-tomcat-service

  namespace: default

spec:

  type: NodePort

  ports:

  - name: http

    port: 80

    protocol: TCP

    targetPort: 8080

  selector:

    app: tomcat

Create the Tomcat Deployment and Service

root@k8s-master1:/usr/local/src/kubeadm/tomcat-yml# kubectl apply -f tomcat.yml

deployment.apps/tomcat-deployment created

service/danran-tomcat-service created

root@k8s-master1:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-c55f88b6b-z226z 1/1 Running 0 57m

tomcat-deployment-678684f574-x6g4h 1/1 Running 0 7m1s

Open a shell in the Nginx Pod from the dashboard

Add the Tomcat reverse-proxy configuration to the Nginx Pod

Get the Tomcat Service name (danran-tomcat-service) and the Tomcat Pod IP address (10.10.5.4):

root@k8s-master1:~# kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

danran-nginx-service NodePort 172.16.190.128 <none> 80:30004/TCP 95m

danran-tomcat-service NodePort 172.16.105.29 <none> 80:30716/TCP 45m

kubernetes ClusterIP 172.16.0.1 <none> 443/TCP 5h31m
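You can extract the NodePort that Kubernetes assigned to danran-tomcat-service (30716) from this table instead of copying it by hand. The sketch below scans the Service's row for a `port:nodePort/protocol` field, so it works whether or not the EXTERNAL-IP column is present. It handles single-port Services only, and the helper name is hypothetical. For a structured query, `kubectl get service danran-tomcat-service -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get services` output on stdin and print
# the NodePort of the Service named in $1 (first port only).
nodeport_of() {
  awk -v svc="$1" '
    $1 == svc {
      for (f = 2; f <= NF; f++) {
        # A NodePort entry looks like 80:30716/TCP
        if ($f ~ /^[0-9]+:[0-9]+\//) {
          split($f, parts, "[:/]")
          print parts[2]
          exit
        }
      }
    }'
}
```

Example: `kubectl get services | nodeport_of danran-tomcat-service`.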

root@k8s-master1:~# kubectl describe service danran-tomcat-service

Name: danran-tomcat-service

Namespace: default

Labels: app=danran-tomcat-service-label

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"danran-tomcat-service-label"},"name":"danran-tomcat-serv...

Selector: app=tomcat

Type: NodePort

IP: 172.16.105.29

Port: http 80/TCP

TargetPort: 8080/TCP

NodePort: http 30716/TCP

Endpoints: 10.10.5.4:8080

Session Affinity: None

External Traffic Policy: Cluster

Events:
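The Endpoints line (10.10.5.4:8080) is the quickest sign that the Service selector app=tomcat actually matches the Tomcat Pod. The sketch below performs the same check from a script. `service_endpoints` and `require_endpoints` are hypothetical helper names. The jsonpath reads the ready addresses from the Endpoints object that shares the Service's name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the ready Pod IPs behind a Service (space-separated).
service_endpoints() {
  kubectl get endpoints "$1" -o jsonpath='{.subsets[*].addresses[*].ip}'
}

# Hypothetical check: fail if the Service has no ready endpoints, which
# usually means its selector matches no running Pod.
require_endpoints() {
  local ips
  ips="$(service_endpoints "$1")" || return 1
  if [ -z "$ips" ]; then
    echo "Service $1 has no ready endpoints; check its selector" >&2
    return 1
  fi
  echo "$ips"
}
```

Example: `require_endpoints danran-tomcat-service`.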

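Copy /etc/nginx/conf.d/default.conf out of the Pod started from the Nginx image (the Pod behind danran-nginx-service), edit it, and use a Dockerfile to build a new image that includes the edited file. Alternatively, edit the configuration file in place inside the Pod, turn the result into an image, and restart the Pod.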
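For the copy-out route, `kubectl cp` can fetch the file from the running Pod (it needs `tar` inside the container). The sketch below assumes the Pods carry the app=nginx label from nginx.yml and run in the default namespace. `fetch_nginx_conf` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy /etc/nginx/conf.d/default.conf out of the first Pod
# that matches SELECTOR (default app=nginx) in the default namespace.
# Usage: fetch_nginx_conf [SELECTOR] [DEST]
fetch_nginx_conf() {
  local selector="${1:-app=nginx}" dest="${2:-./default.conf}" pod
  pod="$(kubectl get pods -l "$selector" -o jsonpath='{.items[0].metadata.name}')" || return 1
  if [ -z "$pod" ]; then
    echo "no Pod matches ${selector}" >&2
    return 1
  fi
  kubectl cp "default/${pod}:/etc/nginx/conf.d/default.conf" "$dest"
}
```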

Edit the /etc/nginx/conf.d/default.conf that will be copied into the image, adding the Tomcat Service name to Nginx's proxy configuration:

root@k8s-master1:/usr/local/src/kubeadm/Nginx-dockerfile# pwd

/usr/local/src/kubeadm/Nginx-dockerfile

root@k8s-master1:/usr/local/src/kubeadm/Nginx-dockerfile# ls

default.conf

In default.conf, add a location that proxies to the Tomcat Service with proxy_pass (inside the existing server block):

root@k8s-master1:/usr/local/src/kubeadm/Nginx-dockerfile# cat default.conf

location /app {

    proxy_pass http://danran-tomcat-service;

}
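The excerpt shows only the new location, which must sit inside the server block of default.conf. The sketch below writes a complete file. It assumes the layout of the stock nginx 1.14 default.conf (trimmed here) and relies on the Service danran-tomcat-service listening on port 80, as shown earlier. Because proxy_pass uses a hostname, nginx resolves it through the Pod's DNS settings at startup. If the Tomcat Service does not exist yet, nginx is likely to fail with a "host not found in upstream" error. `write_default_conf` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write an nginx default.conf that keeps the stock
# static-file location and adds a reverse proxy for /app to the Tomcat Service.
write_default_conf() {
  local dest="${1:-./default.conf}"
  cat > "$dest" <<'EOF'
server {
    listen       80;
    server_name  localhost;

    location / {
        root   /usr/share/nginx/html;
        index  index.html index.htm;
    }

    # No URI part after the host, so /app/... is passed to Tomcat unchanged.
    location /app {
        proxy_pass http://danran-tomcat-service;
    }
}
EOF
}
```

Because the URI is passed through unchanged, the Tomcat image has to serve the application under /app.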

Write a Dockerfile and build a new Nginx image:

root@k8s-master1:/usr/local/src/kubeadm/Nginx-dockerfile# ls

default.conf Dockerfile

root@k8s-master1:/usr/local/src/kubeadm/Nginx-dockerfile# vim Dockerfile

FROM harbor.linux.com/baseimages/nginx:1.14.2

ADD default.conf /etc/nginx/conf.d/

root@k8s-master1:/usr/local/src/kubeadm/Nginx-dockerfile# docker build -t harbor.linux.com/baseimages/nginx-tomcat:v1 .

Sending build context to Docker daemon 4.096kB

Step 1/2 : FROM harbor.linux.com/baseimages/nginx:1.14.2

---> 295c7be07902

Step 2/2 : ADD default.conf /etc/nginx/conf.d/

---> 356359fcd741

Successfully built 356359fcd741

Successfully tagged harbor.linux.com/baseimages/nginx-tomcat:v1

root@k8s-master1:~# docker push harbor.linux.com/baseimages/nginx-tomcat:v1

The push refers to repository [harbor.linux.com/baseimages/nginx-tomcat]

1bd551b21d90: Pushed

82ae01d5004e: Mounted from baseimages/nginx

b8f18c3b860b: Mounted from baseimages/nginx

5dacd731af1b: Mounted from baseimages/nginx

v1: digest: sha256:eb7d1cce19182025765d49734c1936f273355fdc71a0d02b65cf67aa1734d1f5 size: 1155
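Above, the build and the push run as two separate commands. Chaining them ensures a failed build is never followed by a push of a stale tag. The sketch below assumes docker is already logged in to harbor.linux.com. `build_and_push` is a hypothetical function name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build IMAGE from CONTEXT (default: current directory)
# and push it only if the build succeeded.
build_and_push() {
  local image="$1" context="${2:-.}"
  docker build -t "$image" "$context" && docker push "$image"
}
```

Example: `build_and_push harbor.linux.com/baseimages/nginx-tomcat:v1` run from the Nginx-dockerfile directory.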

Re-run Nginx

Change the image in nginx.yml to harbor.linux.com/baseimages/nginx-tomcat:v1:

root@k8s-master1:/usr/local/src/kubeadm/nginx-yml# cat nginx.yml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: nginx-deployment

  labels:

    app: nginx

spec:

  replicas: 1

  selector:

    matchLabels:

      app: nginx

  template:

    metadata:

      labels:

        app: nginx

    spec:

      containers:

      - name: nginx

        image: harbor.linux.com/baseimages/nginx-tomcat:v1

        ports:

        - containerPort: 80

---

kind: Service

apiVersion: v1

metadata:

  labels:

    app: danran-nginx-service-label

  name: danran-nginx-service

  namespace: default

spec:

  type: NodePort

  ports:

  - name: http

    port: 80

    protocol: TCP

    targetPort: 80

    nodePort: 30004

  selector:

    app: nginx

root@k8s-master1:/usr/local/src/kubeadm/nginx-yml# kubectl apply -f nginx.yml

deployment.apps/nginx-deployment configured

service/danran-nginx-service unchanged

In the dashboard, confirm that the Nginx Pod is using the nginx-tomcat:v1 image.
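You can do the same check without the dashboard by reading the image from the Deployment spec. The spec shows the desired state, so new Pods may still be rolling out when it already reports v1. `current_image` and `expect_image` are hypothetical names. The jsonpath assumes a single container in the Pod template, as in nginx.yml.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the image of the first container in a
# Deployment's Pod template (nginx.yml defines exactly one container).
current_image() {
  kubectl get deployment "$1" -o jsonpath='{.spec.template.spec.containers[0].image}'
}

# Hypothetical check: succeed only if the Deployment uses the expected image.
expect_image() {
  local deploy="$1" want="$2" got
  got="$(current_image "$deploy")" || return 1
  if [ "$got" != "$want" ]; then
    echo "${deploy} uses ${got}, expected ${want}" >&2
    return 1
  fi
}
```

Example: `expect_image nginx-deployment harbor.linux.com/baseimages/nginx-tomcat:v1`.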

Access the Nginx VIP in a browser; the /app path leads to the Tomcat page
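A command-line version of the browser check requests /app through the Nginx VIP and reports the HTTP status. The sketch below assumes curl is available. The VIP 10.203.124.239 comes from the keepalived/haproxy steps, and the /app path follows the location added to default.conf. `check_proxy` is a hypothetical helper. A 2xx, or a 3xx redirect such as the one Tomcat commonly issues for a context path without a trailing slash, suggests the proxy chain works. A 502 usually means Nginx could not reach the Tomcat Service.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the HTTP status code for a URL and succeed
# only for 2xx/3xx responses. -o /dev/null drops the body; -w prints the code.
# curl reports 000 when it cannot connect at all.
check_proxy() {
  local url="${1:-http://10.203.124.239/app/}" code
  code="$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$url")"
  echo "$code"
  case "$code" in
    2??|3??) return 0 ;;
    *) return 1 ;;
  esac
}
```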

kubeadm commands

Token management

# kubeadm token --help

create #create a token (valid for 24 hours by default)

delete #delete a token

generate #generate and print a token without creating it on the server, for use in other operations

list #list all tokens on the server

List existing tokens

root@k8s-master1:~# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

abcdef.0123456789abcdef 1d 2020-06-09T11:03:47+08:00 authentication,signing system:bootstrappers:kubeadm:default-node-token

Create a token

root@k8s-master1:~# kubeadm token create

W0607 17:12:33.444298 5914 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0607 17:12:33.444396 5914 validation.go:28] Cannot validate kubelet config - no validator is available

rolz9i.aeoaj86ga4p8g03k

root@k8s-master1:~# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

abcdef.0123456789abcdef 1d 2020-06-09T11:03:47+08:00 authentication,signing system:bootstrappers:kubeadm:default-node-token

rolz9i.aeoaj86ga4p8g03k 23h 2020-06-08T17:12:33+08:00 authentication,signing system:bootstrappers:kubeadm:default-node-token
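To script against this list, for example to pick the token that stays valid longest for a node join, you can sort by the EXPIRES column. The sketch below skips the header row and rows whose EXPIRES is not a timestamp (such as tokens that never expire). It assumes all timestamps share the same UTC offset so they sort correctly as text. `newest_token` is a hypothetical name. For the join itself, `kubeadm token create --print-join-command` prints a complete `kubeadm join` command with a fresh token, and `kubeadm token delete <token>` removes one that is no longer needed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubeadm token list` output on stdin and print the
# token with the latest EXPIRES timestamp. ISO-8601 strings with the same
# UTC offset sort chronologically as plain text, which is assumed here.
newest_token() {
  awk 'NR > 1 && $3 ~ /^[0-9]/ { print $3, $1 }' | sort | tail -n 1 | cut -d ' ' -f 2
}
```

Example: `kubeadm token list | newest_token`.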

Problems

- Deleting flannel with kubectl delete and re-applying it

root@k8s-master1:~# kubectl delete -f kube-flannel.yml

podsecuritypolicy.policy "psp.flannel.unprivileged" deleted

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.apps "kube-flannel-ds-amd64" deleted

daemonset.apps "kube-flannel-ds-arm64" deleted

daemonset.apps "kube-flannel-ds-arm" deleted

daemonset.apps "kube-flannel-ds-ppc64le" deleted

daemonset.apps "kube-flannel-ds-s390x" deleted

root@k8s-master1:~# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created
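After re-applying, the flannel DaemonSet Pods need time to start on every node before Pod networking is back. The sketch below waits for the amd64 DaemonSet. It assumes the DaemonSet lives in kube-system, as in the kube-flannel.yml of this period; if your manifest uses another namespace, `kubectl get ds -A` shows where it lives. The 180s timeout is an arbitrary choice, and `wait_for_flannel` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: wait until the flannel DaemonSet has an updated, ready
# Pod on every node it targets, or give up after TIMEOUT.
# Usage: wait_for_flannel [NAMESPACE] [TIMEOUT]
wait_for_flannel() {
  local ns="${1:-kube-system}" timeout="${2:-180s}"
  kubectl -n "$ns" rollout status daemonset/kube-flannel-ds-amd64 --timeout="$timeout"
}
```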