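I. Managing k8s resources

1. Three basic ways to manage core k8s resources

- Imperative management: relies mainly on the command-line (CLI) tool
- Declarative management: relies mainly on unified resource manifests
- GUI management: relies mainly on a graphical interface (web pages)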

2. Imperative management

2.1 Managing namespace resources

```
## List namespaces
[root@kjdow7-21 ~]# kubectl get namespaces    # or: kubectl get ns
NAME              STATUS   AGE
default           Active   4d18h
kube-node-lease   Active   4d18h
kube-public       Active   4d18h
kube-system       Active   4d18h

## List the resources in a namespace
[root@kjdow7-21 ~]# kubectl get all -n default
NAME                 READY   STATUS    RESTARTS   AGE
pod/nginx-ds-ssdtm   1/1     Running   1          2d18h
pod/nginx-ds-xfsk4   1/1     Running   1          2d18h

NAME                 TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   192.168.0.1   <none>        443/TCP   4d19h

NAME                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/nginx-ds   2         2         2       2            2           <none>          2d18h

## Create a namespace
[root@kjdow7-21 ~]# kubectl create namespace app
namespace/app created

## Delete a namespace
[root@kjdow7-21 ~]# kubectl delete namespace app
namespace "app" deleted
```

Note: `namespace` can be abbreviated as `ns`.

2.2 Managing Deployment resources

```
### Create a deployment
[root@kjdow7-21 ~]# kubectl create deployment nginx-dp --image=harbor.phc-dow.com/public/nginx:v1.7.9 -n kube-public
deployment.apps/nginx-dp created
[root@kjdow7-21 ~]# kubectl get all -n kube-public
NAME                            READY   STATUS    RESTARTS   AGE
pod/nginx-dp-67f6684bb9-zptmd   1/1     Running   0          26s

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-dp   1/1     1            1           27s

NAME                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-dp-67f6684bb9   1         1         1       26s

### View deployment resources
[root@kjdow7-21 ~]# kubectl get deployment -n kube-public
NAME       READY   UP-TO-DATE   AVAILABLE   AGE
nginx-dp   1/1     1            1           42m
```

Note: `deployment` can be abbreviated as `deploy`.

```
### Show deployment info in wide format
[root@kjdow7-21 ~]# kubectl get deploy -n kube-public -o wide
NAME       READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES                                   SELECTOR
nginx-dp   1/1     1            1           53m   nginx        harbor.phc-dow.com/public/nginx:v1.7.9   app=nginx-dp
```

```
### Show detailed deployment info
[root@kjdow7-21 ~]# kubectl describe deployment nginx-dp -n kube-public
Name:                   nginx-dp
Namespace:              kube-public
CreationTimestamp:      Mon, 13 Jan 2020 23:33:45 +0800
Labels:                 app=nginx-dp
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=nginx-dp
Replicas:               1 desired | 1 updated | 1 total | 1 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=nginx-dp
  Containers:
   nginx:
    Image:        harbor.phc-dow.com/public/nginx:v1.7.9
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   nginx-dp-67f6684bb9 (1/1 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  55m   deployment-controller  Scaled up replica set nginx-dp-67f6684bb9 to 1
```
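The line `RollingUpdateStrategy: 25% max unavailable, 25% max surge` expresses both limits as percentages of the desired replica count. The sketch below converts those percentages into pod counts. It assumes the Deployment controller's rounding rules: maxSurge is rounded up, maxUnavailable is rounded down, and if both come out as 0, maxUnavailable is set to 1. The function name is hypothetical and is not part of any Kubernetes API.

```python
def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update.

    Simplified model: percentages apply to the desired replica count,
    maxSurge is rounded up and maxUnavailable is rounded down.
    """
    surge = -(-replicas * max_surge_pct // 100)          # ceiling division
    unavailable = replicas * max_unavailable_pct // 100  # floor division
    if surge == 0 and unavailable == 0:
        # Both bounds at zero would block the rollout, so allow one unavailable pod.
        unavailable = 1
    return replicas + surge, max(replicas - unavailable, 0)


if __name__ == "__main__":
    for n in (1, 4, 10):
        print(n, rolling_update_bounds(n))
```

Take the single-replica `nginx-dp` above: `rolling_update_bounds(1)` gives `(2, 1)`. During an update the controller may briefly run two pods, and it must keep at least one available.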

2.3 Managing pod resources

```
### View pod resources
[root@kjdow7-21 ~]# kubectl get pod -n kube-public
NAME                        READY   STATUS    RESTARTS   AGE
nginx-dp-67f6684bb9-zptmd   1/1     Running   0          104m

### Open a shell inside the pod
[root@kjdow7-21 ~]# kubectl exec -it nginx-dp-67f6684bb9-zptmd -n kube-public -- /bin/bash
root@nginx-dp-67f6684bb9-zptmd:/# ip add
1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
9: eth0@if10: mtu 1500 qdisc noqueue state UP
    link/ether 02:42:ac:07:16:03 brd ff:ff:ff:ff:ff:ff
    inet 172.7.22.3/24 brd 172.7.22.255 scope global eth0
       valid_lft forever preferred_lft forever
```

Note: you can also enter the container with `docker exec` on the host where the pod is running.

2.4 Deleting resources

2.4.1 Deleting a pod

```
### Delete a pod
[root@kjdow7-21 ~]# kubectl get pod -n kube-public
NAME                        READY   STATUS    RESTARTS   AGE
nginx-dp-67f6684bb9-zptmd   1/1     Running   0          146m
[root@kjdow7-21 ~]# kubectl delete pod nginx-dp-67f6684bb9-zptmd -n kube-public
pod "nginx-dp-67f6684bb9-zptmd" deleted
[root@kjdow7-21 ~]# kubectl get pod -n kube-public
NAME                        READY   STATUS    RESTARTS   AGE
nginx-dp-67f6684bb9-kn8m9   1/1     Running   0          11s
```

Note: deleting the pod effectively replaces it with a new one. The pod's controller expects exactly one pod to exist. When that pod is deleted, the controller starts another so the actual state matches the desired state again.
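The behaviour described in the note above comes from a reconcile loop: compare the observed pod count with the desired count, then create or delete pods to close the gap. Below is a toy model of that idea, not the real controller code. The class name and the sequential pod-name suffixes are hypothetical; real pods get random suffixes.

```python
import itertools


class ReplicaSetSketch:
    """Toy reconcile loop: keep the number of pods equal to the desired replica count."""

    def __init__(self, name: str, replicas: int) -> None:
        self.name = name
        self.replicas = replicas
        self.pods: set[str] = set()
        self._suffix = itertools.count(1)  # hypothetical naming scheme

    def reconcile(self) -> None:
        # Too few pods: create new ones until observed == desired.
        while len(self.pods) < self.replicas:
            self.pods.add(f"{self.name}-{next(self._suffix)}")
        # Too many pods: delete the surplus.
        while len(self.pods) > self.replicas:
            self.pods.remove(max(self.pods))


if __name__ == "__main__":
    rs = ReplicaSetSketch("nginx-dp-67f6684bb9", replicas=1)
    rs.reconcile()
    deleted = next(iter(rs.pods))
    rs.pods.discard(deleted)   # simulate `kubectl delete pod ...`
    rs.reconcile()             # the controller sees 0 < 1 and creates a new pod
    print(deleted, "->", sorted(rs.pods))
```

The same loop also explains `kubectl scale` later in this article. Changing `replicas` and reconciling again adds or removes pods until the count matches.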

```
### Force-delete a pod
[root@kjdow7-21 ~]# kubectl get pod -n kube-public
NAME                        READY   STATUS    RESTARTS   AGE
nginx-dp-67f6684bb9-kn8m9   1/1     Running   0          22h
[root@kjdow7-21 ~]# kubectl delete pod nginx-dp-67f6684bb9-kn8m9 -n kube-public --force --grace-period=0
warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.
pod "nginx-dp-67f6684bb9-kn8m9" force deleted
[root@kjdow7-21 ~]# kubectl get pod -n kube-public
NAME                        READY   STATUS    RESTARTS   AGE
nginx-dp-67f6684bb9-898p6   1/1     Running   0          17s
```

`--force --grace-period=0` forces an immediate deletion without waiting for the graceful termination period.

2.4.2 Deleting a deployment

```
[root@kjdow7-21 ~]# kubectl delete deployment nginx-dp -n kube-public
deployment.extensions "nginx-dp" deleted
[root@kjdow7-21 ~]# kubectl get deployment -n kube-public
No resources found.
[root@kjdow7-21 ~]# kubectl get pod -n kube-public
No resources found.
```

Deleting the deployment also deletes the ReplicaSet and pods it manages, so this time no replacement pod is started.

2.5 Service resources

2.5.1 Creating a service

```
### Create a service resource
[root@kjdow7-21 ~]# kubectl expose deployment nginx-dp --port=80 -n kube-public
service/nginx-dp exposed

### View the resources
[root@kjdow7-21 ~]# kubectl get all -n kube-public
NAME                            READY   STATUS    RESTARTS   AGE
pod/nginx-dp-67f6684bb9-l9jqh   1/1     Running   0          13m

NAME               TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)   AGE
service/nginx-dp   ClusterIP   192.168.208.157   <none>        80/TCP    7m53s

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-dp   1/1     1            1           13m

NAME                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-dp-67f6684bb9   1         1         1       13m
```

The service's cluster IP is the fixed access point for its pods: no matter how the pod IPs change, the cluster IP stays the same.

```
~]# kubectl get pod -n kube-public -o wide
NAME                        READY   STATUS    RESTARTS   AGE   IP           NODE                 NOMINATED NODE   READINESS GATES
nginx-dp-67f6684bb9-l9jqh   1/1     Running   0          26m   172.7.22.3   kjdow7-22.host.com   <none>           <none>
[root@kjdow7-22 ~]# curl 192.168.208.157
Welcome to nginx!
Welcome to nginx!
If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.
For online documentation and support please refer to
nginx.org.
Commercial support is available at
nginx.com.
Thank you for using nginx.
```

The service is reachable through its cluster IP.

```
[root@kjdow7-22 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.0.1:443 nq
  -> 10.4.7.21:6443               Masq    1      0          0
  -> 10.4.7.22:6443               Masq    1      0          0
TCP  192.168.208.157:80 nq
  -> 172.7.22.3:80                Masq    1      0          0
[root@kjdow7-21 ~]# kubectl scale deployment nginx-dp --replicas=2 -n kube-public
deployment.extensions/nginx-dp scaled
[root@kjdow7-22 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.0.1:443 nq
  -> 10.4.7.21:6443               Masq    1      0          0
  -> 10.4.7.22:6443               Masq    1      0          0
TCP  192.168.208.157:80 nq
  -> 172.7.21.3:80                Masq    1      0          0
  -> 172.7.22.3:80                Masq    1      0          0
```

###尝试删除一个pod,查看下状况

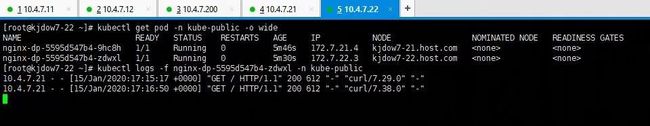

[root@kjdow7-21 ~]# kubectl get pod -n kube-public -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dp-67f6684bb9-l9jqh 1/1 Running 0 39m 172.7.22.3 kjdow7-22.host.com

nginx-dp-67f6684bb9-nn9pq 1/1 Running 0 3m41s 172.7.21.3 kjdow7-21.host.com

[root@kjdow7-21 ~]# kubectl delete pod nginx-dp-67f6684bb9-l9jqh -n kube-public

pod "nginx-dp-67f6684bb9-l9jqh" deleted

[root@kjdow7-21 ~]# kubectl get pod -n kube-public -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dp-67f6684bb9-lmfvl 1/1 Running 0 9s 172.7.22.4 kjdow7-22.host.com

nginx-dp-67f6684bb9-nn9pq 1/1 Running 0 3m57s 172.7.21.3 kjdow7-21.host.com

[root@kjdow7-21 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.1:443 nq

-> 10.4.7.21:6443 Masq 1 0 0

-> 10.4.7.22:6443 Masq 1 0 0

TCP 192.168.208.157:80 nq

-> 172.7.21.3:80 Masq 1 0 0

-> 172.7.22.4:80 Masq 1 0 0

无论后端的pod的ip如何变化,前面的service的cluster-ip不会变。cluster-ip代理后端两台ip

service就是抽象一个相对稳定的点,可以让服务有一个稳定的接入点可以接入进来
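The `ipvsadm` output above shows the key property of a service. The virtual server `192.168.208.157:80` never changes, while its list of real servers is rebuilt from whichever pods currently exist. Below is a toy model of that relationship. The class and method names are hypothetical, and the model deliberately ignores the ipvs scheduler (`nq` here).

```python
from dataclasses import dataclass, field


@dataclass
class ServiceSketch:
    """Toy ClusterIP service: a fixed virtual IP in front of a changing set of pod IPs."""

    cluster_ip: str
    port: int
    endpoints: list[str] = field(default_factory=list)

    def sync(self, pod_ips: list[str]) -> None:
        # Rebuild the real-server list from the pods that exist right now;
        # the virtual IP itself is never modified.
        self.endpoints = sorted(f"{ip}:{self.port}" for ip in pod_ips)

    def ipvs_view(self) -> str:
        # Render something shaped like one virtual server in `ipvsadm -Ln`.
        lines = [f"TCP  {self.cluster_ip}:{self.port}"]
        lines += [f"  -> {ep}" for ep in self.endpoints]
        return "\n".join(lines)


if __name__ == "__main__":
    svc = ServiceSketch("192.168.208.157", 80)
    svc.sync(["172.7.22.3", "172.7.21.3"])   # before the pod was deleted
    svc.sync(["172.7.21.3", "172.7.22.4"])   # after its replacement got a new IP
    print(svc.ipvs_view())
```

After the second `sync`, the virtual IP is unchanged and the real servers are `172.7.21.3:80` and `172.7.22.4:80`, matching the last `ipvsadm -Ln` output.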

2.5.2 Viewing a service

```
[root@kjdow7-21 ~]# kubectl describe svc nginx-dp -n kube-public
Name:              nginx-dp
Namespace:         kube-public
Labels:            app=nginx-dp
Annotations:       <none>
Selector:          app=nginx-dp
Type:              ClusterIP
IP:                192.168.208.157
Port:              <unset>  80/TCP
TargetPort:        80/TCP
Endpoints:         172.7.21.3:80,172.7.22.4:80
Session Affinity:  None
Events:            <none>
```

The service's selector is the same as the pods' label: a service finds its pods by label matching.

Note: `service` can be abbreviated as `svc`.
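The selector `app=nginx-dp` is an equality-based match: a pod belongs to the service if its labels contain every key/value pair in the selector. Extra labels on the pod are allowed, such as the `pod-template-hash` label that Deployments add. Below is a minimal sketch of that rule. The function names and the `other-pod` entry are hypothetical.

```python
def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based match: every key=value in the selector must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: dict[str, str], pods: dict[str, dict[str, str]]) -> list[str]:
    """Names of the pods a service with this selector would send traffic to."""
    if not selector:
        return []  # a Service without a selector gets no automatically managed endpoints
    return sorted(name for name, labels in pods.items() if selector_matches(selector, labels))


if __name__ == "__main__":
    pods = {
        "nginx-dp-67f6684bb9-lmfvl": {"app": "nginx-dp", "pod-template-hash": "67f6684bb9"},
        "nginx-dp-67f6684bb9-nn9pq": {"app": "nginx-dp", "pod-template-hash": "67f6684bb9"},
        "other-pod": {"app": "something-else"},  # hypothetical pod that must not match
    }
    print(select_pods({"app": "nginx-dp"}, pods))
```

Adding a second key to the selector narrows the match. Removing a label from a running pod takes it out of the service's endpoints without deleting the pod.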

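2.6 Summary

- The only entry point for managing resources in a kubernetes cluster is calling the apiserver's API, by whichever method.
- kubectl is the official CLI tool for talking to the apiserver. It takes the commands the user types and turns them into requests the apiserver understands, which makes it an effective way to manage all kinds of k8s resources.
- Complete kubectl command reference:
  - kubectl --help
  - the Kubernetes Chinese community site
- Imperative management covers more than 90% of resource-management needs, but its drawbacks are also obvious:
  - commands are long, complex, and hard to remember
  - some management needs cannot be met in certain scenarios
  - creating, deleting, and querying resources is easy, but modifying them is painful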

3. Declarative management

Declarative resource management relies on resource manifests (yaml/json).

3.1 Viewing a resource's manifest

```
[root@kjdow7-21 ~]# kubectl get svc nginx-dp -o yaml -n kube-public
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2020-01-14T17:15:32Z"
  labels:
    app: nginx-dp
  name: nginx-dp
  namespace: kube-public
  resourceVersion: "649706"
  selfLink: /api/v1/namespaces/kube-public/services/nginx-dp
  uid: 5159828f-6d5d-4e43-83aa-51fd16b652d0
spec:
  clusterIP: 192.168.208.157
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-dp
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}
```

3.2 Explaining manifest fields

```
[root@kjdow7-21 ~]# kubectl explain service
```

This works like `--help`: it explains what the given resource is and how its fields are used.

3.3 Creating a resource manifest

```
[root@kjdow7-21 nginx-ds]# vim nginx-ds-svc.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-ds
  name: nginx-ds
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-ds
  sessionAffinity: None
  type: ClusterIP
```

```
[root@kjdow7-21 nginx-ds]# ls
nginx-ds-svc.yaml
[root@kjdow7-21 nginx-ds]# kubectl create -f nginx-ds-svc.yaml
service/nginx-ds created
```

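3.4 Modifying resources through manifests

3.4.1 Offline modification

```
[root@kjdow7-21 nginx-ds]# kubectl apply -f nginx-ds-svc.yaml
service/nginx-ds created
[root@kjdow7-21 nginx-ds]# kubectl get svc
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   192.168.0.1      <none>        443/TCP    6d
nginx-ds     ClusterIP   192.168.52.127   <none>        8080/TCP   7s
[root@kjdow7-21 nginx-ds]# vim nginx-ds-svc.yaml
### Change the port in the file to 8081, then apply the new manifest
[root@kjdow7-21 nginx-ds]# kubectl apply -f nginx-ds-svc.yaml
service/nginx-ds configured
[root@kjdow7-21 nginx-ds]# kubectl get svc
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   192.168.0.1      <none>        443/TCP    6d
nginx-ds     ClusterIP   192.168.52.127   <none>        8081/TCP   2m46s
```

Note: `kubectl apply` is meant for resources that were also created with `kubectl apply` (or with `kubectl create --save-config`). It records the applied manifest in the `kubectl.kubernetes.io/last-applied-configuration` annotation and uses that record to work out what changed. If a resource was created with plain `kubectl create`, `kubectl apply` prints a warning that the annotation is missing and patches it in. Avoid mixing the two styles on the same resource.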
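The annotation matters because `kubectl apply` performs a three-way merge between three inputs: the last applied manifest, the live object, and the new manifest. The sketch below uses flat dicts, which is a simplification. The real implementation works on nested objects with list merge keys (a strategic merge patch for built-in types). The field `note` is hypothetical.

```python
def three_way_merge(last_applied: dict, live: dict, new: dict) -> dict:
    """Flat-dict model of how `kubectl apply` decides what to change.

    last_applied: manifest recorded at the previous apply (the annotation)
    live:         the object as it currently exists in the cluster
    new:          the manifest being applied now
    """
    result = dict(live)
    # Fields set in the new manifest overwrite the live values.
    result.update(new)
    # Fields applied last time but dropped from the new manifest are removed.
    for key in last_applied:
        if key not in new:
            result.pop(key, None)
    # Fields never managed by apply (e.g. clusterIP filled in by the cluster) are kept.
    return result


if __name__ == "__main__":
    live = {"port": 8080, "targetPort": 80, "note": "v1", "clusterIP": "192.168.52.127"}
    new = {"port": 8081, "targetPort": 80}
    print(three_way_merge({"port": 8080, "targetPort": 80, "note": "v1"}, live, new))
    print(three_way_merge({}, live, new))  # no recorded manifest: "note" cannot be detected as removed
```

The second call shows the practical effect of a missing annotation. Without a record of what was applied before, apply cannot tell which fields you deliberately deleted from the file.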

3.4.2 Online modification

```
[root@kjdow7-21 nginx-ds]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   192.168.0.1     <none>        443/TCP    6d
nginx-ds     ClusterIP   192.168.8.104   <none>        8080/TCP   4m18s
[root@kjdow7-21 nginx-ds]# kubectl edit svc nginx-ds
service/nginx-ds edited
### kubectl edit opens the live manifest in an editor (like vim). The change takes effect as soon
### as you save and quit, but the yaml file originally used to create the resource is NOT modified.
[root@kjdow7-21 nginx-ds]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   192.168.0.1     <none>        443/TCP    6d
nginx-ds     ClusterIP   192.168.8.104   <none>        8082/TCP   4m42s
```

3.5 Deleting resources

3.5.1 Imperative deletion

```
[root@kjdow7-21 nginx-ds]# kubectl delete svc nginx-ds
service "nginx-ds" deleted
```

3.5.2 Declarative deletion

```
[root@kjdow7-21 nginx-ds]# kubectl delete -f nginx-ds-svc.yaml
service "nginx-ds" deleted
```

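3.6 Summary

- Declarative management handles resources through unified resource manifest files.
- Resources are first defined in a manifest and then applied to the k8s cluster with an imperative command.
- Syntax: kubectl create/apply/delete -f /path/to/yaml
- How to learn to write manifests:
  - read plenty of manifests written by others (especially the official ones) until you can understand them
  - be able to adapt existing files to your own needs
  - when you hit something you don't understand, look it up with kubectl explain ...
  - as a beginner, avoid writing manifests entirely from scratch on your own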

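II. Core k8s add-ons

1. Installing and deploying flanneld

Kubernetes defines a network model but leaves its implementation to network plugins. The main job of a CNI network plugin is to let pods communicate across hosts.

Common CNI network plugins:

- Flannel
- Calico
- Canal
- Contiv
- OpenContrail
- NSX-T
- Kube-router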

1.1 Downloading and installing flannel

Deploy on kjdow7-21 and kjdow7-22.

flannel official download page

```
### Download and extract flannel
[root@kjdow7-21 ~]# cd /opt/src
[root@kjdow7-22 src]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz
[root@kjdow7-21 src]# mkdir /opt/flannel-v0.11.0
[root@kjdow7-21 src]# tar xf flannel-v0.11.0-linux-amd64.tar.gz -C /opt/flannel-v0.11.0/
[root@kjdow7-21 src]# ln -s /opt/flannel-v0.11.0 /opt/flannel
```

1.2 Copying the certificates

```
[root@kjdow7-21 src]# mkdir /opt/flannel/certs
[root@kjdow7-21 src]# cd /opt/flannel/certs
[root@kjdow7-21 certs]# scp kjdow7-200:/opt/certs/ca.pem .
[root@kjdow7-21 certs]# scp kjdow7-200:/opt/certs/client.pem .
[root@kjdow7-21 certs]# scp kjdow7-200:/opt/certs/client-key.pem .
```

By default, flannel keeps its configuration in etcd, so it needs the client certificate to connect to etcd.

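1.3 Configuring flannel

```
[root@kjdow7-21 flannel]# vim /opt/flannel/subnet.env
```

```
FLANNEL_NETWORK=172.7.0.0/16
FLANNEL_SUBNET=172.7.21.1/24
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false
```

The first line is the network managed by flannel (the whole pod network). The second line is this host's own pod subnet, which must be changed on every other node (for example, 172.7.22.1/24 on kjdow7-22).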
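Each node's `subnet.env` must carve out a distinct /24 inside `FLANNEL_NETWORK`. A quick way to catch a copy-paste mistake before starting flanneld is sketched below. This is a hypothetical helper, not part of flannel. It assumes the file uses the simple `KEY=value` format shown above.

```python
import ipaddress


def parse_subnet_env(text: str) -> dict[str, str]:
    """Parse KEY=value lines, ignoring blank lines and # comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            key, _, value = line.partition("=")
            env[key.strip()] = value.strip()
    return env


def check_subnet_env(env: dict[str, str]) -> ipaddress.IPv4Network:
    """Return the node's pod subnet, or raise if it is outside FLANNEL_NETWORK."""
    network = ipaddress.ip_network(env["FLANNEL_NETWORK"])
    # FLANNEL_SUBNET is written as gateway-address/prefix (172.7.21.1/24), so parse it
    # as an interface and take its network part.
    node_subnet = ipaddress.ip_interface(env["FLANNEL_SUBNET"]).network
    if not node_subnet.subnet_of(network):
        raise ValueError(f"{node_subnet} is not inside {network}")
    return node_subnet


if __name__ == "__main__":
    sample = "FLANNEL_NETWORK=172.7.0.0/16\nFLANNEL_SUBNET=172.7.21.1/24\nFLANNEL_MTU=1500\nFLANNEL_IPMASQ=false\n"
    print(check_subnet_env(parse_subnet_env(sample)))
```

For the kjdow7-21 file this prints `172.7.21.0/24`. A typo such as `172.8.21.1/24` raises a `ValueError` instead of producing a node that silently cannot reach the others.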

```
[root@kjdow7-21 flannel]# vim /opt/flannel/flanneld.sh
```

```sh
#!/bin/sh
./flanneld \
  --public-ip=10.4.7.21 \
  --etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \
  --etcd-keyfile=./certs/client-key.pem \
  --etcd-certfile=./certs/client.pem \
  --etcd-cafile=./certs/ca.pem \
  --iface=eth1 \
  --subnet-file=./subnet.env \
  --healthz-port=2401
```

```
[root@kjdow7-21 flannel]# chmod +x flanneld.sh
```

Note: set `--public-ip` to this host's IP and `--iface` to the name of the interface used for inter-node traffic. Adjust both to your environment, since the configuration differs slightly from node to node.

1.4 Configuring etcd

flannel stores its configuration in etcd, so flannel's network configuration must be created in etcd first.

```
[root@kjdow7-21 flannel]# cd /opt/etcd
### Check the etcd cluster members
[root@kjdow7-21 etcd]# ./etcdctl member list
988139385f78284: name=etcd-server-7-22 peerURLs=https://10.4.7.22:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.22:2379 isLeader=false
5a0ef2a004fc4349: name=etcd-server-7-21 peerURLs=https://10.4.7.21:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.21:2379 isLeader=false
f4a0cb0a765574a8: name=etcd-server-7-12 peerURLs=https://10.4.7.12:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.12:2379 isLeader=true
```

Write the flannel network configuration into etcd, using the host-gw backend:

```
[root@kjdow7-21 etcd]# ./etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'
{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}
### View the stored configuration
[root@kjdow7-21 etcd]# ./etcdctl get /coreos.com/network/config
{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}
```

Note: this step only needs to be done once. Do not repeat it on the other nodes.

1.5 Creating the supervisor configuration

```
[root@kjdow7-21 etcd]# vi /etc/supervisord.d/flannel.ini
```

```ini
[program:flanneld-7-21]
command=/opt/flannel/flanneld.sh                             ; the program (relative uses PATH, can take args)
numprocs=1                                                   ; number of processes copies to start (def 1)
directory=/opt/flannel                                       ; directory to cwd to before exec (def no cwd)
autostart=true                                               ; start at supervisord start (default: true)
autorestart=true                                             ; restart at unexpected quit (default: true)
startsecs=30                                                 ; number of secs prog must stay running (def. 1)
startretries=3                                               ; max # of serial start failures (default 3)
exitcodes=0,2                                                ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT                                              ; signal used to kill process (default TERM)
stopwaitsecs=10                                              ; max num secs to wait b4 SIGKILL (default 10)
user=root                                                    ; setuid to this UNIX account to run the program
redirect_stderr=true                                         ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/flanneld/flanneld.stdout.log       ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB                                 ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4                                     ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB                                  ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false                                  ; emit events on stdout writes (default false)
```

```
### Create the log directory
[root@kjdow7-21 flannel]# mkdir -p /data/logs/flanneld
### Start flanneld
[root@kjdow7-21 flannel]# supervisorctl update
flanneld-7-21: added process group
[root@kjdow7-21 flannel]# supervisorctl status
etcd-server-7-21                 RUNNING   pid 5885, uptime 4 days, 13:33:06
flanneld-7-21                    RUNNING   pid 10430, uptime 0:00:40
kube-apiserver-7-21              RUNNING   pid 5886, uptime 4 days, 13:33:06
kube-controller-manager-7-21     RUNNING   pid 5887, uptime 4 days, 13:33:06
kube-kubelet-7-21                RUNNING   pid 5881, uptime 4 days, 13:33:06
kube-proxy-7-21                  RUNNING   pid 5890, uptime 4 days, 13:33:06
kube-scheduler-7-21              RUNNING   pid 5894, uptime 4 days, 13:33:06
```

Enable IP forwarding

flannel depends on the kernel's IP forwarding, so every server running flannel must have it enabled.

```
~]# vim /etc/sysctl.conf
net.ipv4.ip_forward = 1
~]# sysctl -p
```

1.6 Verifying flannel

```
[root@kjdow7-21 ~]# kubectl get pod -n kube-public -o wide
NAME                        READY   STATUS    RESTARTS   AGE   IP           NODE                 NOMINATED NODE   READINESS GATES
nginx-dp-67f6684bb9-lmfvl   1/1     Running   0          18h   172.7.22.4   kjdow7-22.host.com   <none>           <none>
nginx-dp-67f6684bb9-nn9pq   1/1     Running   0          18h   172.7.21.3   kjdow7-21.host.com   <none>           <none>
### Ping from one compute node: both pods now respond
[root@kjdow7-21 ~]# ping 172.7.22.4
PING 172.7.22.4 (172.7.22.4) 56(84) bytes of data.
64 bytes from 172.7.22.4: icmp_seq=1 ttl=63 time=0.528 ms
64 bytes from 172.7.22.4: icmp_seq=2 ttl=63 time=0.249 ms
^C
--- 172.7.22.4 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1000ms
rtt min/avg/max/mdev = 0.249/0.388/0.528/0.140 ms
[root@kjdow7-21 ~]# ping 172.7.21.3
PING 172.7.21.3 (172.7.21.3) 56(84) bytes of data.
64 bytes from 172.7.21.3: icmp_seq=1 ttl=64 time=0.151 ms
64 bytes from 172.7.21.3: icmp_seq=2 ttl=64 time=0.101 ms
^C
--- 172.7.21.3 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1000ms
rtt min/avg/max/mdev = 0.101/0.126/0.151/0.025 ms
```

Note: the IPs show that the two pods run on two different hosts. Before flannel was installed, pods on the other host could not be reached (for example with curl); now they can.

1.7 flannel原理以及三种网络模型

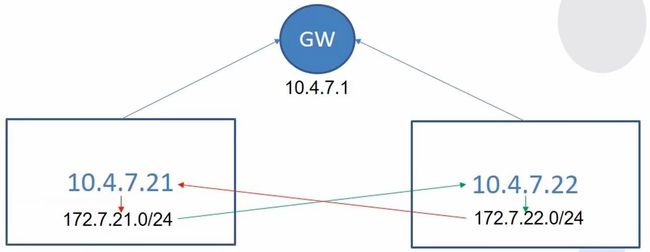

1.7.1 Flannel的host-gw模型

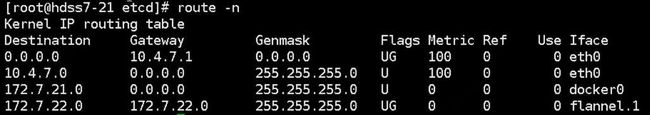

###在21上看路由表

[root@kjdow7-21 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.4.7.11 0.0.0.0 UG 100 0 0 eth1

10.4.7.0 0.0.0.0 255.255.255.0 U 100 0 0 eth1

172.7.21.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

172.7.22.0 10.4.7.22 255.255.255.0 UG 0 0 0 eth1

###在22上看路由表

[root@kjdow7-22 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.4.7.11 0.0.0.0 UG 100 0 0 eth1

10.4.7.0 0.0.0.0 255.255.255.0 U 100 0 0 eth1

172.7.21.0 10.4.7.21 255.255.255.0 UG 0 0 0 eth1

172.7.22.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

可以看到flannel在host-gw模式下其实是维护了一个静态路由表,通过静态路由进行通信。

注意:此种模型仅限于所以的运算节点都在一个网段,且指向同一个网关

'{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'

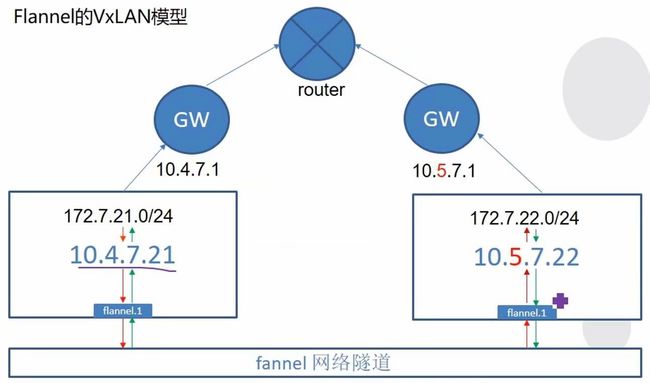
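The two `route -n` tables above follow directly from a per-node lease table. Flannel keeps one lease per node in etcd under `/coreos.com/network/subnets/`. Each node routes its own pod subnet to `docker0` and routes every other node's subnet via that node's IP. The sketch below derives those rows. The `LEASES` dict and the function are hypothetical stand-ins. The same-subnet check makes the host-gw restriction from the note above explicit.

```python
import ipaddress

# Hypothetical lease table: node IP -> pod subnet (one lease per node).
LEASES = {
    "10.4.7.21": "172.7.21.0/24",
    "10.4.7.22": "172.7.22.0/24",
}


def host_gw_routes(local_ip: str, leases: dict[str, str], host_net: str = "10.4.7.0/24",
                   iface: str = "eth1", bridge: str = "docker0") -> list[tuple[str, str, str]]:
    """Build (destination, gateway, iface) rows like the pod routes in `route -n`."""
    net = ipaddress.ip_network(host_net)
    routes = []
    for node_ip, subnet in sorted(leases.items()):
        if node_ip == local_ip:
            routes.append((subnet, "0.0.0.0", bridge))  # local pods sit on the bridge
            continue
        # host-gw uses the other node itself as next hop, so it must share our L2 network.
        if ipaddress.ip_address(node_ip) not in net:
            raise ValueError(f"{node_ip} is not on {host_net}; host-gw cannot route to it")
        routes.append((subnet, node_ip, iface))
    return routes


if __name__ == "__main__":
    for row in host_gw_routes("10.4.7.21", LEASES):
        print(*row)
```

Running it for `10.4.7.21` gives the same two pod routes as the table on 21. Adding a node on another network raises an error. That case is exactly when VxLAN is needed.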

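1.7.2 Flannel's VxLAN model

Note: if the compute nodes are not in the same subnet, use the VxLAN model. Flannel wraps the original pod packet in a flannel (VXLAN) header. It then sends the packet out from the host's own interface IP to the remote host.

`'{"Network": "172.7.0.0/16", "Backend": {"Type": "VxLAN"}}'`

When started in VxLAN mode, flannel adds a virtual network device named flannel.1 on the host (for example on 7-21).

1.7.3 Direct routing model

`'{"Network": "172.7.0.0/16", "Backend": {"Type": "VxLAN", "DirectRouting": true}}'`

The backend is still VxLAN. However, when flannel finds that two compute nodes are in the same subnet, traffic between them uses host-gw-style direct routes.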
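With `DirectRouting`, flannel decides per remote node whether encapsulation is needed. Roughly, it checks whether the kernel can reach that node's IP without going through a gateway. The sketch below approximates that check by testing whether both node IPs fall in the same /24. The fixed prefix and the function name are assumptions for illustration.

```python
import ipaddress


def choose_backend(local_ip: str, remote_ip: str, host_prefix: int = 24) -> str:
    """Simplified DirectRouting decision for traffic to another node's pod subnet."""
    local_net = ipaddress.ip_interface(f"{local_ip}/{host_prefix}").network
    if ipaddress.ip_address(remote_ip) in local_net:
        return "host-gw"  # same subnet: plain route via the remote node, no encapsulation
    return "vxlan"        # different subnet: encapsulate and send through flannel.1


if __name__ == "__main__":
    print(choose_backend("10.4.7.21", "10.4.7.22"))
    print(choose_backend("10.4.7.21", "10.4.8.22"))
```

In this lab both nodes are on 10.4.7.0/24, so a DirectRouting VxLAN setup would behave like host-gw. Only a node added on another network would use the flannel.1 tunnel.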

1.8 Optimizing flannel's SNAT rules

Problem: a deployment runs two web-server pods, one on each node. Log into one pod and use curl to fetch the other pod's web page, while watching the other pod's access log in real time. The log shows the host's IP as the source address, not the calling pod's IP.

How do we fix this?

Run the following on kjdow7-21 and kjdow7-22 (on kjdow7-22, use 172.7.22.0/24 as the source subnet):

```
[root@kjdow7-21 ~]# yum install iptables-services -y
[root@kjdow7-21 ~]# systemctl start iptables
[root@kjdow7-21 ~]# systemctl enable iptables

[root@kjdow7-21 ~]# iptables -t nat -D POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE
[root@kjdow7-21 ~]# iptables -t nat -I POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
[root@kjdow7-21 ~]# service iptables save
iptables: Saving firewall rules to /etc/sysconfig/iptables:[  OK  ]
```

The new rule means that on host 10.4.7.21, SNAT is applied only to packets that meet all three conditions:

- the source is a docker IP in 172.7.21.0/24
- the destination is NOT in 172.7.0.0/16
- the packet does not leave through the docker0 bridge

Pod-to-pod traffic across nodes is therefore no longer masqueraded, and the real pod IP is preserved.
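To see exactly which packets change behaviour, the sketch below writes the old and new POSTROUTING rules as Python predicates over (source, destination, outgoing interface). It models only the match conditions of these two rules on kjdow7-21, not iptables as a whole. The sample destination `172.7.21.5` is a hypothetical local pod.

```python
import ipaddress

LOCAL_POD_NET = ipaddress.ip_network("172.7.21.0/24")   # docker0 subnet on kjdow7-21
CLUSTER_POD_NET = ipaddress.ip_network("172.7.0.0/16")  # flannel's whole pod network


def old_rule(src: str, dst: str, out_iface: str) -> bool:
    """-s 172.7.21.0/24 ! -o docker0 -j MASQUERADE"""
    return ipaddress.ip_address(src) in LOCAL_POD_NET and out_iface != "docker0"


def new_rule(src: str, dst: str, out_iface: str) -> bool:
    """-s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE"""
    return old_rule(src, dst, out_iface) and ipaddress.ip_address(dst) not in CLUSTER_POD_NET


if __name__ == "__main__":
    cases = [
        ("172.7.21.3", "172.7.22.4", "eth1"),     # pod -> pod on the other node
        ("172.7.21.3", "180.101.49.12", "eth1"),  # pod -> address outside the cluster
        ("172.7.21.3", "172.7.21.5", "docker0"),  # pod -> pod on the same node
    ]
    for src, dst, iface in cases:
        print(f"{src} -> {dst} via {iface}: old={old_rule(src, dst, iface)} new={new_rule(src, dst, iface)}")
```

Only the first case changes: cross-node pod traffic was masqueraded by the old rule and is not by the new one. Traffic leaving the cluster is still SNATed to the host IP.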

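The default rules installed by iptables-services end the INPUT and FORWARD chains with REJECT rules. These would block forwarded pod traffic, so remove them:

```
[root@kjdow7-21 ~]# iptables-save | grep -i reject
-A INPUT -j REJECT --reject-with icmp-host-prohibited
-A FORWARD -j REJECT --reject-with icmp-host-prohibited
[root@kjdow7-21 ~]# iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited
[root@kjdow7-21 ~]# iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited
[root@kjdow7-21 ~]# service iptables save
iptables: Saving firewall rules to /etc/sysconfig/iptables:[  OK  ]
```

Now access one pod's address from the other pod and watch the target pod's access log: the source address is the real pod IP.

In a real production environment, tune these rules to your actual situation.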

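2. Installing and deploying CoreDNS

- Simply put, service discovery is the process by which services (applications) locate one another.
- Service discovery is not unique to the cloud era; traditional monolithic architectures use it too. It is especially needed when:
  - services (applications) are highly dynamic
  - services (applications) are updated and released frequently
  - services (applications) scale automatically
- In a k8s cluster, pod IPs keep changing. How do we stay constant amid all this change?
  - The service resource was introduced: it associates a group of pods through a label selector.
  - The cluster network was introduced: a relatively fixed "cluster IP" gives the service a fixed access point.
- So how do we automatically associate a service's "name" with its "cluster IP", so that the cluster discovers services automatically?
  - Consider the traditional DNS model: kjdow7-21.host.com -----> 10.4.7.21
  - Can we build the same model in k8s: nginx-ds -----> 192.168.0.5
- In k8s, service discovery is done through DNS.
- Plugins (software) that implement DNS in k8s:
  - kube-dns: kubernetes v1.2 to v1.10
  - coredns: kubernetes v1.11 to the present
- Note:
  - DNS in k8s is not a cure-all. It should only be responsible for automatically maintaining the mapping "service name" -----> "cluster IP".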
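The mapping the note describes has a fixed naming scheme: a service named `<name>` in namespace `<ns>` is published as `<name>.<ns>.svc.<cluster domain>`, and the cluster domain here is `cluster.local`. Below is a toy registry that keeps such records up to date as services come and go. The class and method names are hypothetical. The real DNS add-on watches the apiserver instead of receiving explicit calls.

```python
def service_fqdn(name: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Build the DNS name under which a service is published."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


class ServiceRegistrySketch:
    """Toy model of what the DNS add-on maintains: service FQDN -> cluster IP."""

    def __init__(self) -> None:
        self.records: dict[str, str] = {}

    def on_service_added(self, name: str, namespace: str, cluster_ip: str) -> None:
        self.records[service_fqdn(name, namespace)] = cluster_ip

    def on_service_deleted(self, name: str, namespace: str) -> None:
        self.records.pop(service_fqdn(name, namespace), None)

    def resolve(self, fqdn: str):
        # Accept both "name." and "name" forms of the query.
        return self.records.get(fqdn.rstrip("."))


if __name__ == "__main__":
    dns = ServiceRegistrySketch()
    dns.on_service_added("nginx-dp", "kube-public", "192.168.208.157")
    print(dns.resolve("nginx-dp.kube-public.svc.cluster.local."))
```

This is the same lookup performed later in this article with `dig -t A nginx-dp.kube-public.svc.cluster.local. @192.168.0.2`.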

2.1 Serving the internal k8s resource manifests over HTTP

On kjdow7-200, configure an nginx virtual host that serves as the single access point for all k8s resource manifests.

- Configure nginx

```
[root@kjdow7-200 conf.d]# vim k8s-yaml.phc-dow.com.conf
```

```nginx
server {
    listen       80;
    server_name  k8s-yaml.phc-dow.com;

    location / {
        autoindex on;
        default_type text/plain;
        root /data/k8s-yaml;
    }
}
```

```
[root@kjdow7-200 conf.d]# nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful
[root@kjdow7-200 conf.d]# systemctl reload nginx
[root@kjdow7-200 conf.d]# mkdir /data/k8s-yaml -p
```

- Configure internal DNS resolution

On kjdow7-11, increment the zone's serial by one and add a record for k8s-yaml:

```
[root@kjdow7-11 ~]# cd /var/named/
[root@kjdow7-11 named]# vim phc-dow.com.zone
```

```
                2020010203 ; serial (incremented by 1)
k8s-yaml           A    10.4.7.200   ; add this record
```

```
[root@kjdow7-11 named]# systemctl restart named
```

2.2 Downloading the CoreDNS image

On kjdow7-200:

CoreDNS official GitHub download page

CoreDNS official Docker Hub page

```
### Pull the coredns image and push it to harbor
[root@kjdow7-200 ~]# docker pull coredns/coredns:1.6.1
1.6.1: Pulling from coredns/coredns
c6568d217a00: Pull complete
d7ef34146932: Pull complete
Digest: sha256:9ae3b6fcac4ee821362277de6bd8fd2236fa7d3e19af2ef0406d80b595620a7a
Status: Downloaded newer image for coredns/coredns:1.6.1
docker.io/coredns/coredns:1.6.1
[root@kjdow7-200 ~]# docker tag c0f6e815079e harbor.phc-dow.com/public/coredns:v1.6.1
[root@kjdow7-200 ~]# docker push harbor.phc-dow.com/public/coredns:v1.6.1
```

2.3 Preparing the resource manifests

On kjdow7-200:

Reference: the official k8s yaml

```
[root@kjdow7-200 k8s-yaml]# mkdir coredns && cd /data/k8s-yaml/coredns
[root@kjdow7-200 coredns]# vim /data/k8s-yaml/coredns/rbac.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
```

```
[root@kjdow7-200 coredns]# vim /data/k8s-yaml/coredns/cm.yaml
```

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        ready
        kubernetes cluster.local 192.168.0.0/16
        forward . 10.4.7.11
        cache 30
        loop
        reload
        loadbalance
    }
```
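The Corefile above determines which names CoreDNS answers itself and which it forwards. The `kubernetes cluster.local 192.168.0.0/16` line makes the kubernetes plugin authoritative for two zones: `cluster.local` and the reverse-lookup zone of the service network. Everything else goes to `forward . 10.4.7.11`. The sketch below models only that routing decision. It ignores `cache`, `loop` and the other plugins, and it handles only full four-octet reverse names. The function names are hypothetical.

```python
import ipaddress

CLUSTER_ZONE = "cluster.local"                         # from: kubernetes cluster.local ...
SERVICE_CIDR = ipaddress.ip_network("192.168.0.0/16")  # from: kubernetes ... 192.168.0.0/16
UPSTREAM = "10.4.7.11"                                 # from: forward . 10.4.7.11


def _reverse_to_ip(name: str):
    """Turn 'd.c.b.a.in-addr.arpa' into IPv4Address('a.b.c.d'), or None."""
    suffix = ".in-addr.arpa"
    if not name.endswith(suffix):
        return None
    octets = name[: -len(suffix)].split(".")
    if len(octets) != 4:
        return None
    try:
        return ipaddress.ip_address(".".join(reversed(octets)))
    except ValueError:
        return None


def route_query(qname: str) -> str:
    """Which part of the Corefile would answer this name (simplified)."""
    name = qname.rstrip(".").lower()
    if name == CLUSTER_ZONE or name.endswith("." + CLUSTER_ZONE):
        return "kubernetes"
    ip = _reverse_to_ip(name)
    if ip is not None and ip in SERVICE_CIDR:
        return "kubernetes"
    return f"forward {UPSTREAM}"


if __name__ == "__main__":
    for q in ("nginx-dp.kube-public.svc.cluster.local.", "www.baidu.com.", "157.208.168.192.in-addr.arpa."):
        print(q, "->", route_query(q))
```

This matches the `dig` results shown in the next step. `www.baidu.com` and `kjdow7-21.host.com` are answered through the upstream bind server on 10.4.7.11. Service names under `cluster.local` are answered by CoreDNS itself.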

```
[root@kjdow7-200 coredns]# vim /data/k8s-yaml/coredns/dp.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      containers:
      - name: coredns
        image: harbor.phc-dow.com/public/coredns:v1.6.1
        args:
        - -conf
        - /etc/coredns/Corefile
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
```

```
[root@kjdow7-200 coredns]# vim /data/k8s-yaml/coredns/svc.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 192.168.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
  - name: metrics
    port: 9153
    protocol: TCP
```

```
[root@kjdow7-200 coredns]# ll
total 16
-rw-r--r-- 1 root root  319 Jan 16 20:53 cm.yaml
-rw-r--r-- 1 root root 1299 Jan 16 20:57 dp.yaml
-rw-r--r-- 1 root root  954 Jan 16 20:51 rbac.yaml
-rw-r--r-- 1 root root  387 Jan 16 20:58 svc.yaml
```

2.4 声明式创建资源

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/coredns/rbac.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/coredns/cm.yaml

configmap/coredns created

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/coredns/dp.yaml

deployment.apps/coredns created

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/coredns/svc.yaml

service/coredns created

[root@kjdow7-21 ~]# kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-7dd986bcdc-2w8nw 1/1 Running 0 119s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/coredns ClusterIP 192.168.0.2 53/UDP,53/TCP,9153/TCP 112s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/coredns 1/1 1 1 119s

NAME DESIRED CURRENT READY AGE

replicaset.apps/coredns-7dd986bcdc 1 1 1 119s

[root@kjdow7-21 ~]# dig -t A www.baidu.com @192.168.0.2 +short

www.a.shifen.com.

180.101.49.12

180.101.49.11

[root@kjdow7-21 ~]# dig -t A kjdow7-21.host.com @192.168.0.2 +short

10.4.7.21

注意:在前面kubelet的启动脚本中已经制定了dns的ip是192.168.0.2,因此这里可以解析

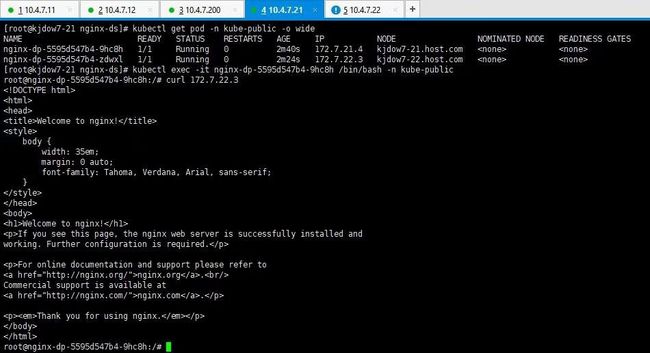

2.5 Verification

```
[root@kjdow7-21 ~]# kubectl get all -n kube-public
NAME                            READY   STATUS    RESTARTS   AGE
pod/nginx-dp-5595d547b4-9hc8h   1/1     Running   0          40h
pod/nginx-dp-5595d547b4-zdwxl   1/1     Running   0          40h

NAME               TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)   AGE
service/nginx-dp   ClusterIP   192.168.208.157   <none>        80/TCP    2d16h

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-dp   2/2     2            2           2d16h

NAME                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-dp-5595d547b4   2         2         2       40h
replicaset.apps/nginx-dp-67f6684bb9   0         0         0       2d16h
[root@kjdow7-21 ~]# dig -t A nginx-dp.kube-public.svc.cluster.local. @192.168.0.2 +short
192.168.208.157
[root@kjdow7-21 ~]# kubectl exec -it nginx-dp-5595d547b4-9hc8h -n kube-public -- /bin/bash
root@nginx-dp-5595d547b4-9hc8h:/# cat /etc/resolv.conf
nameserver 192.168.0.2
search kube-public.svc.cluster.local svc.cluster.local cluster.local host.com
options ndots:5
```

Inside the pod, the container's DNS server has been set to 192.168.0.2 automatically.
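The `search` and `options ndots:5` lines explain why a bare name such as `nginx-dp` resolves inside the pod. A name with fewer dots than `ndots` is first tried with each search domain appended, and only then as-is. The sketch below models that ordering for a glibc-style resolver. It is a simplification: other resolver libraries (for example musl in Alpine images) behave differently.

```python
def candidate_names(name: str, search: list[str], ndots: int = 5) -> list[str]:
    """Order in which a glibc-style resolver tries names for one lookup (simplified)."""
    if name.endswith("."):
        return [name]                          # absolute name: search list not used
    expanded = [f"{name}.{domain}." for domain in search]
    if name.count(".") >= ndots:
        return [f"{name}."] + expanded         # enough dots: try the name as-is first
    return expanded + [f"{name}."]             # too few dots: search list first


if __name__ == "__main__":
    search = ["kube-public.svc.cluster.local", "svc.cluster.local", "cluster.local", "host.com"]
    for n in ("nginx-dp", "www.baidu.com"):
        print(n, "->", candidate_names(n, search)[:2])
```

The first candidate for `nginx-dp` is `nginx-dp.kube-public.svc.cluster.local.`, which CoreDNS answers. The flip side of `ndots:5` is that an external name like `www.baidu.com` triggers several failed cluster lookups before the real query.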

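3. Exposing k8s services with Ingress

- k8s DNS lets services be discovered automatically inside the cluster. But how can services be used and accessed from outside the cluster?
  - Use a NodePort-type service
    - Note: in this setup, this requires kube-proxy's iptables mode rather than its ipvs mode
  - Use an Ingress resource
    - Ingress can only schedule and expose layer-7 applications, specifically the HTTP and HTTPS protocols
    - Ingress is one of the standard resource types of the K8S API and a core resource. It is simply a set of rules, based on domain names and URL paths, that forward user requests to specified service resources
    - It forwards request traffic from outside the cluster into the cluster, thereby exposing services
    - An Ingress controller is the component that listens on a socket on behalf of Ingress resources and routes traffic according to the matching Ingress rules
    - Put plainly, there is nothing mysterious about Ingress: it is essentially a simplified nginx plus a bit of Go code
- Common Ingress controller implementations:
  - Ingress-nginx
  - HAProxy
  - Traefik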
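An Ingress rule is essentially a lookup from (host, path) to a backend service and port. The sketch below shows that lookup in its simplest form: exact host match, then the longest matching path prefix. The first rule mirrors the `traefik-web-ui` Ingress defined later. The `demo.phc-dow.com` rules are hypothetical. The matching is deliberately naive (plain string prefixes), and real controllers such as traefik have their own precedence rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    host: str
    path: str
    service: str
    port: int


def route(rules: list[Rule], host: str, path: str) -> Optional[tuple[str, int]]:
    """Pick the backend for a request: exact host match, then the longest path prefix."""
    host = host.split(":")[0].lower()  # ignore any :port in the Host header
    matches = [r for r in rules if r.host == host and path.startswith(r.path)]
    if not matches:
        return None  # a real controller would answer with its default backend / 404
    best = max(matches, key=lambda r: len(r.path))
    return best.service, best.port


if __name__ == "__main__":
    rules = [
        Rule("traefik.phc-dow.com", "/", "traefik-ingress-service", 8080),
        Rule("demo.phc-dow.com", "/", "demo-web", 80),        # hypothetical
        Rule("demo.phc-dow.com", "/api", "demo-api", 8080),   # hypothetical
    ]
    print(route(rules, "traefik.phc-dow.com", "/dashboard/"))
    print(route(rules, "demo.phc-dow.com:81", "/api/users"))
```

The Host header is what selects the rule. This is why the nginx reverse proxy configured later passes `Host $http_host` through to traefik.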

3.1 Deploying traefik (the ingress controller): preparing the traefik image

Traefik official GitHub download page

```
[root@kjdow7-200 ~]# docker pull traefik:v1.7.2-alpine
v1.7.2-alpine: Pulling from library/traefik
4fe2ade4980c: Pull complete
8d9593d002f4: Pull complete
5d09ab10efbd: Pull complete
37b796c58adc: Pull complete
Digest: sha256:cf30141936f73599e1a46355592d08c88d74bd291f05104fe11a8bcce447c044
Status: Downloaded newer image for traefik:v1.7.2-alpine
docker.io/library/traefik:v1.7.2-alpine
[root@kjdow7-200 ~]# docker images | grep traefik
traefik   v1.7.2-alpine   add5fac61ae5   15 months ago   72.4MB
[root@kjdow7-200 ~]# docker tag traefik:v1.7.2-alpine harbor.phc-dow.com/public/traefik:v1.7.2-alpine
[root@kjdow7-200 ~]# docker push harbor.phc-dow.com/public/traefik:v1.7.2-alpine
The push refers to repository [harbor.phc-dow.com/public/traefik]
a02beb48577f: Pushed
ca22117205f4: Pushed
3563c211d861: Pushed
df64d3292fd6: Pushed
v1.7.2-alpine: digest: sha256:6115155b261707b642341b065cd3fac2b546559ba035d0262650b3b3bbdd10ea size: 1157
```

3.2 Preparing the resource manifests

```
[root@kjdow7-200 ~]# mkdir /data/k8s-yaml/traefik
[root@kjdow7-200 ~]# cat /data/k8s-yaml/traefik/rbac.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: kube-system
```

```
[root@kjdow7-200 ~]# cat /data/k8s-yaml/traefik/ds.yaml
```

```yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: traefik-ingress
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      containers:
      - image: harbor.phc-dow.com/public/traefik:v1.7.2-alpine
        name: traefik-ingress
        ports:
        - name: controller
          containerPort: 80
          hostPort: 81
        - name: admin-web
          containerPort: 8080
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO
        - --insecureskipverify=true
        - --kubernetes.endpoint=https://10.4.7.10:7443
        - --accesslog
        - --accesslog.filepath=/var/log/traefik_access.log
        - --traefiklog
        - --traefiklog.filepath=/var/log/traefik.log
        - --metrics.prometheus
```

```
[root@kjdow7-200 ~]# cat /data/k8s-yaml/traefik/svc.yaml
```

```yaml
kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress
  ports:
    - protocol: TCP
      port: 80
      name: controller
    - protocol: TCP
      port: 8080
      name: admin-web
```

```
[root@kjdow7-200 ~]# cat /data/k8s-yaml/traefik/ingress.yaml
```

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-web-ui
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: traefik.phc-dow.com
    http:
      paths:
      - path: /
        backend:
          serviceName: traefik-ingress-service
          servicePort: 8080
```

3.3 创建资源

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/traefik/rbac.yaml

serviceaccount/traefik-ingress-controller created

clusterrole.rbac.authorization.k8s.io/traefik-ingress-controller created

clusterrolebinding.rbac.authorization.k8s.io/traefik-ingress-controller created

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/traefik/ds.yaml

daemonset.extensions/traefik-ingress created

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/traefik/svc.yaml

service/traefik-ingress-service created

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/traefik/ingress.yaml

ingress.extensions/traefik-web-ui created

注意:使用kubectl get pod -n kube-system,traefik这个pod可能会停留在containercreating的状态,并报错

Warning FailedCreatePodSandBox 15m kubelet, kjdow7-21.host.com Failed create pod sandbox: rpc error: code = Unknown desc = failed to start sandbox container for pod "traefik-ingress-7fsp8": Error response from daemon: driver failed programming external connectivity on endpoint k8s_POD_traefik-ingress-7fsp8_kube-system_3c7fbecb-801c-4f3a-aa30-e3717245d9f5_0 (7603ab3cbc43915876ab0db527195a963f8c8f4a59f8a1a84f00332f3f387227): (iptables failed: iptables --wait -t filter -A DOCKER ! -i docker0 -o docker0 -p tcp -d 172.7.21.5 --dport 80 -j ACCEPT: iptables: No chain/target/match by that name. (exit status 1))这时可以重启kubelet服务

As configured, each host now listens on port 81 (through docker-proxy):

[root@kjdow7-21 ~]# netstat -lntup | grep 81

tcp6 0 0 :::81 :::* LISTEN 25625/docker-proxy

[root@kjdow7-22 ~]# netstat -lntup | grep 81

tcp6 0 0 :::81 :::* LISTEN 22080/docker-proxy

3.5 Configure the reverse proxy

[root@kjdow7-11 ~]# cat /etc/nginx/conf.d/phc-dow.com.conf

upstream default_backend_traefik {
    server 10.4.7.21:81    max_fails=3 fail_timeout=10s;
    server 10.4.7.22:81    max_fails=3 fail_timeout=10s;
}
server {
    server_name *.phc-dow.com;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

[root@kjdow7-11 ~]# systemctl reload nginx
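The upstream block spreads requests over the traefik host ports on both nodes and temporarily takes a node out of rotation when it keeps failing. The Python sketch below is a simplified model of that passive health check, assuming plain round-robin with equal weights: after max_fails failed attempts within fail_timeout seconds, a server is skipped for fail_timeout seconds. `Backend`, `Upstream`, `pick` and `report_failure` are hypothetical names, and nginx's real implementation differs in detail (for example, which responses count as failures is governed by proxy_next_upstream).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Backend:
    addr: str
    max_fails: int = 3          # max_fails=3
    fail_timeout: float = 10.0  # fail_timeout=10s
    fails: int = 0
    first_fail_at: float = 0.0
    down_until: float = 0.0


class Upstream:
    """Simplified round-robin with passive health checks (not nginx's code)."""

    def __init__(self, backends: List[Backend]) -> None:
        self.backends = backends
        self.next_index = 0

    def pick(self, now: float) -> Optional[Backend]:
        # Try each backend at most once, continuing after the last one used.
        for _ in range(len(self.backends)):
            b = self.backends[self.next_index]
            self.next_index = (self.next_index + 1) % len(self.backends)
            if now >= b.down_until:
                return b
        return None  # every backend is currently marked down

    def report_failure(self, b: Backend, now: float) -> None:
        # Start a new counting window if the previous one has expired.
        if b.fails == 0 or now - b.first_fail_at > b.fail_timeout:
            b.fails = 0
            b.first_fail_at = now
        b.fails += 1
        if b.fails >= b.max_fails:
            # Too many failures in the window: skip this server for a while.
            b.down_until = now + b.fail_timeout
            b.fails = 0
```

With the two servers above, three failures of 10.4.7.21:81 within ten seconds send all traffic to 10.4.7.22:81 until the ten-second penalty expires.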

3.6 Add the DNS record

[root@kjdow7-11 conf.d]# vim /var/named/phc-dow.com.zone

$ORIGIN phc-dow.com.
$TTL 600        ; 10 minutes
@       IN SOA  dns.phc-dow.com. dnsadmin.phc-dow.com. (
                2020010204 ; serial (increment by 1 on every change)
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
                NS   dns.phc-dow.com.
$TTL 60 ; 1 minute
dns             A    10.4.7.11
harbor          A    10.4.7.200
k8s-yaml        A    10.4.7.200
traefik         A    10.4.7.10   ; add this line

[root@kjdow7-11 conf.d]# systemctl restart named

[root@kjdow7-11 conf.d]# dig -t A traefik,phc-dow.com @10.4.7.11 +short    # typo: "," instead of ".", so no answer is returned

[root@kjdow7-11 conf.d]# dig -t A traefik.phc-dow.com @10.4.7.11 +short

10.4.7.10
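The SOA serial must increase every time the zone changes, because secondary servers use it to decide whether to transfer the zone again. This zone appears to follow the common YYYYMMDDnn convention (date plus a two-digit counter). A minimal sketch of computing the next serial under that assumed convention; `next_serial` is a hypothetical helper:

```python
import datetime


def next_serial(current: int, today: datetime.date) -> int:
    """Next SOA serial under the YYYYMMDDnn convention (an assumption).

    The result is always strictly greater than `current`, which is the
    property DNS actually relies on. After nn reaches 99 the value spills
    into the next day's range, but it still increases.
    """
    first_of_today = int(today.strftime("%Y%m%d")) * 100  # YYYYMMDD00
    return max(first_of_today, current + 1)
```

For example, next_serial(2020010204, datetime.date(2020, 1, 2)) gives 2020010205, the serial used when the dashboard record is added in section 4.4; on a later day the counter restarts at 00.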

Open traefik.phc-dow.com in a browser:

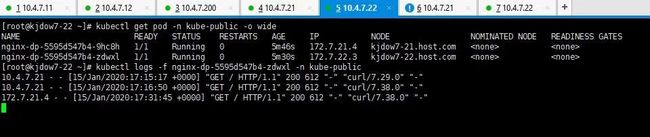

4. Install and deploy the dashboard add-on

Official dashboard download page

4.1 Prepare the dashboard image

[root@kjdow7-200 ~]# docker pull k8scn/kubernetes-dashboard-amd64:v1.8.3

[root@kjdow7-200 ~]# docker images | grep dashboard

k8scn/kubernetes-dashboard-amd64 v1.8.3 fcac9aa03fd6 19 months ago 102MB

[root@kjdow7-200 ~]# docker tag fcac9aa03fd6 harbor.phc-dow.com/public/dashboard:v1.8.3

[root@kjdow7-200 ~]# docker push harbor.phc-dow.com/public/dashboard:v1.8.3

4.2 Prepare the resource manifests

Official YAML templates are available in the kubernetes repository on GitHub, under cluster/addons/dashboard/.

[root@kjdow7-200 ~]# mkdir -p /data/k8s-yaml/dashboard

[root@kjdow7-200 ~]# cat /data/k8s-yaml/dashboard/rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard-admin
    namespace: kube-system

[root@kjdow7-200 ~]# cat /data/k8s-yaml/dashboard/dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        image: harbor.phc-dow.com/public/dashboard:v1.8.3
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts:
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard-admin
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

[root@kjdow7-200 dashboard]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443

[root@kjdow7-200 ~]# cat /data/k8s-yaml/dashboard/ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: dashboard.phc-dow.com
    http:
      paths:
      - backend:
          serviceName: kubernetes-dashboard
          servicePort: 443

4.3 Create the resources

[root@kjdow7-22 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/dashboard/rbac.yaml

serviceaccount/kubernetes-dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created

[root@kjdow7-22 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/dashboard/dp.yaml

deployment.apps/kubernetes-dashboard created

[root@kjdow7-22 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/dashboard/svc.yaml

service/kubernetes-dashboard created

[root@kjdow7-22 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/dashboard/ingress.yaml

ingress.extensions/kubernetes-dashboard created

[root@kjdow7-22 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7dd986bcdc-2w8nw 1/1 Running 0 3d22h

kubernetes-dashboard-857c754c78-fv5k6 1/1 Running 0 72s

traefik-ingress-7fsp8 1/1 Running 0 2d20h

traefik-ingress-rlwrj 1/1 Running 0 2d20h

[root@kjdow7-22 ~]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

coredns ClusterIP 192.168.0.2 53/UDP,53/TCP,9153/TCP 3d22h

kubernetes-dashboard ClusterIP 192.168.75.142 443/TCP 2m32s

traefik-ingress-service ClusterIP 192.168.58.5 80/TCP,8080/TCP 2d20h

[root@kjdow7-22 ~]# kubectl get ingress -n kube-system

NAME HOSTS ADDRESS PORTS AGE

kubernetes-dashboard dashboard.phc-dow.com 80 2m30s

traefik-web-ui traefik.phc-dow.com 80 2d20h

4.4 Add the DNS record

[root@kjdow7-11 ~]# cat /var/named/phc-dow.com.zone

$ORIGIN phc-dow.com.
$TTL 600        ; 10 minutes
@       IN SOA  dns.phc-dow.com. dnsadmin.phc-dow.com. (
                2020010205 ; serial (incremented by 1)
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
                NS   dns.phc-dow.com.
$TTL 60 ; 1 minute
dns             A    10.4.7.11
harbor          A    10.4.7.200
k8s-yaml        A    10.4.7.200
traefik         A    10.4.7.10
dashboard       A    10.4.7.10   ; add this A record

[root@kjdow7-11 ~]# systemctl restart named

[root@kjdow7-11 ~]# dig -t A dashboard.phc-dow.com @10.4.7.11 +short

10.4.7.10

[root@kjdow7-21 ~]# dig -t A dashboard.phc-dow.com @192.168.0.2 +short

10.4.7.10

4.5 Configure HTTPS access to the dashboard and log in

[root@kjdow7-200 ~]# cd /opt/certs/

[root@kjdow7-200 certs]# (umask 077; openssl genrsa -out dashboard.phc-dow.com.key 2048)

Generating RSA private key, 2048 bit long modulus

...............................................+++

...........................+++

e is 65537 (0x10001)

[root@kjdow7-200 certs]# openssl req -new -key dashboard.phc-dow.com.key -out dashboard.phc-dow.com.csr -subj "/CN=dashboard.phc-dow.com/C=CN/ST=SH/L=Shanghai/O=kjdow/OU=kj"

[root@kjdow7-200 certs]# openssl x509 -req -in dashboard.phc-dow.com.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out dashboard.phc-dow.com.crt -days 3650

Signature ok

subject=/CN=dashboard.phc-dow.com/C=CN/ST=SH/L=Shanghai/O=kjdow/OU=kj

Getting CA Private Key

[root@kjdow7-200 certs]# ls -l| grep dashboard

-rw-r--r-- 1 root root 1212 Jan 20 23:59 dashboard.phc-dow.com.crt

-rw-r--r-- 1 root root 1009 Jan 20 23:53 dashboard.phc-dow.com.csr

-rw------- 1 root root 1679 Jan 20 23:48 dashboard.phc-dow.com.key

On kjdow7-11, configure nginx so that dashboard.phc-dow.com is served over HTTPS:

[root@kjdow7-11 ~]# mkdir /etc/nginx/certs

[root@kjdow7-11 nginx]# cd /etc/nginx/certs

[root@kjdow7-11 certs]# scp 10.4.7.200:/opt/certs/dashboard.phc-dow.com.crt .

[root@kjdow7-11 certs]# scp 10.4.7.200:/opt/certs/dashboard.phc-dow.com.key .

[root@kjdow7-11 conf.d]# cat dashboard.phc-dow.conf

server {
    listen       80;
    server_name  dashboard.phc-dow.com;

    rewrite ^(.*)$ https://${server_name}$1 permanent;
}
server {
    listen       443 ssl;
    server_name  dashboard.phc-dow.com;

    ssl_certificate "certs/dashboard.phc-dow.com.crt";
    ssl_certificate_key "certs/dashboard.phc-dow.com.key";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout  10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

[root@kjdow7-11 conf.d]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@kjdow7-11 conf.d]# systemctl reload nginx

4.6 Log in to the dashboard with a token

[root@kjdow7-21 ~]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

coredns-token-xr65q kubernetes.io/service-account-token 3 4d2h

default-token-4gfv2 kubernetes.io/service-account-token 3 11d

kubernetes-dashboard-admin-token-c55cw kubernetes.io/service-account-token 3 4h27m

kubernetes-dashboard-key-holder Opaque 2 4h26m

traefik-ingress-controller-token-p9jp6 kubernetes.io/service-account-token 3 3d1h

[root@kjdow7-21 ~]# kubectl describe secret kubernetes-dashboard-admin-token-c55cw -n kube-system

Name: kubernetes-dashboard-admin-token-c55cw

Namespace: kube-system

Labels:

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard-admin

kubernetes.io/service-account.uid: de6430a5-5d41-4916-917d-23f39a47c9a0

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1379 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi1jNTVjdyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImRlNjQzMGE1LTVkNDEtNDkxNi05MTdkLTIzZjM5YTQ3YzlhMCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.3FdxCC2-u7635hsifG57G0fR4kqnJPD5ARRGQXBfu47cEgCNbJMAceeW6f8Lmq_Acz_nQxaH92dVFuuouxJvBY1hrswQJMYb2qeH5icH-zZ3ivuzaZ9WFsiix-40w8itMvkv4EhQv8dGaId0DdkPxE0lo-OVUhfk3dndRZnLVPhmkBC-ciJEoaFUgjejaoLoEHx1lMXurVfd5BICPP_hGfeg5sA0HSUaUwp14oAOcbR6syHlCH3O5FN6q7Mxie9g0zqHvGc5RvyLWEKyYwbJwLPA2MeJxRmJ4wH6573w9yaOVEFvENMO-_yjJhIi1BL3MGDvtqWv35yk-jsLo5emVg

Copy the value of the token field at the end of the output and paste it into the Token box on the dashboard login page.
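A service-account token like the one above is a JWT (header.payload.signature, each part base64url-encoded), so you can check which account it belongs to before pasting it: for these legacy service-account tokens the sub claim has the form system:serviceaccount:<namespace>:<name>. A minimal sketch, assuming only the standard library; `jwt_claims` is a hypothetical helper, and it deliberately does not verify the signature:

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Decode the payload (middle part) of a JWT WITHOUT verifying it.

    Useful only for inspecting a token, e.g. to see which service account
    it belongs to; never use it to make authorization decisions.
    """
    parts = token.strip().split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    payload = parts[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))
```

Calling jwt_claims on the token printed above and reading its "sub" key should show kubernetes-dashboard-admin in the kube-system namespace.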

4.7 Upgrade the dashboard to v1.10.1

### Pull the new image

[root@kjdow7-200 ~]# docker pull hexun/kubernetes-dashboard-amd64:v1.10.1

v1.10.1: Pulling from hexun/kubernetes-dashboard-amd64

9518d8afb433: Pull complete

Digest: sha256:0ae6b69432e78069c5ce2bcde0fe409c5c4d6f0f4d9cd50a17974fea38898747

Status: Downloaded newer image for hexun/kubernetes-dashboard-amd64:v1.10.1

docker.io/hexun/kubernetes-dashboard-amd64:v1.10.1

[root@kjdow7-200 ~]# docker images | grep dashboard

hexun/kubernetes-dashboard-amd64 v1.10.1 f9aed6605b81 13 months ago 122MB

[root@kjdow7-200 ~]# docker tag f9aed6605b81 harbor.phc-dow.com/public/dashboard:v1.10.1

[root@kjdow7-200 ~]# docker push harbor.phc-dow.com/public/dashboard:v1.10.1

### Edit the dashboard dp.yaml

[root@kjdow7-200 ~]# cd /data/k8s-yaml/dashboard/

[root@kjdow7-200 ~]# sed -i s#dashboard:v1.8.3#dashboard:v1.10.1#g dp.yaml

# change the image used by the Deployment

### Apply the updated manifest

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/dashboard/dp.yaml

deployment.apps/kubernetes-dashboard configured

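Wait a few seconds and refresh the page. The v1.10.1 login page has no Skip option, so you cannot get in without logging in.

4.8 Create a least-privilege ServiceAccount

### Create the manifest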

[root@kjdow7-200 dashboard]# cat /data/k8s-yaml/dashboard/rbac-minimal.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

[root@kjdow7-200 dashboard]# sed -i s#"serviceAccountName: kubernetes-dashboard-admin"#"serviceAccountName: kubernetes-dashboard"#g dp.yaml

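# switch the Deployment to the new service account

### Apply the configuration: create the account and Role with rbac-minimal.yaml, then re-apply dp.yaml (kubectl apply -f http://k8s-yaml.phc-dow.com/dashboard/dp.yaml) so the Deployment picks up the new serviceAccountName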

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/dashboard/rbac-minimal.yaml

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

[root@kjdow7-21 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7dd986bcdc-2w8nw 1/1 Running 0 4d5h

kubernetes-dashboard-7f5f8dd677-knlrs 1/1 Running 0 6s

kubernetes-dashboard-d9d98bb89-2jmmx 0/1 Terminating 0 38m

traefik-ingress-7fsp8 1/1 Running 0 3d3h

traefik-ingress-rlwrj 1/1 Running 0 3d3h

# the new pod is running and the old one is terminating
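The order in the output (new pod Running while the old one is still Terminating) follows from the Deployment's rolling-update limits. dp.yaml sets no replicas, so the Deployment runs 1 replica, and the default RollingUpdate strategy uses maxSurge 25% (rounded up) and maxUnavailable 25% (rounded down). The sketch below reproduces that arithmetic; `resolve` and `rolling_update_bounds` are hypothetical helpers, and the fallback to one unavailable pod when both values resolve to 0 mirrors what the Kubernetes deployment controller does.

```python
import math
from typing import Tuple, Union


def resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Turn an absolute count or a percentage such as '25%' into a pod count."""
    if isinstance(value, int):
        return value
    fraction = int(value.rstrip("%")) * replicas / 100
    return math.ceil(fraction) if round_up else math.floor(fraction)


def rolling_update_bounds(replicas: int,
                          max_surge: Union[int, str] = "25%",
                          max_unavailable: Union[int, str] = "25%") -> Tuple[int, int]:
    """Return (maximum total pods, minimum available pods) during an update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        unavailable = 1  # both zero would stall the rollout
    return replicas + surge, replicas - unavailable
```

With the defaults, rolling_update_bounds(1) is (2, 1): up to two pods may exist, but one must stay available, so the new pod has to start before the old one is removed.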

Look up the token of the new service account and log in with it:

[root@kjdow7-21 ~]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

coredns-token-xr65q kubernetes.io/service-account-token 3 4d5h

default-token-4gfv2 kubernetes.io/service-account-token 3 11d

kubernetes-dashboard-admin-token-c55cw kubernetes.io/service-account-token 3 6h58m

kubernetes-dashboard-key-holder Opaque 2 6h58m

kubernetes-dashboard-token-xnvct kubernetes.io/service-account-token 3 11m

traefik-ingress-controller-token-p9jp6 kubernetes.io/service-account-token 3 3d3h

[root@kjdow7-21 ~]# kubectl describe secret kubernetes-dashboard-token-xnvct -n kube-system

Name: kubernetes-dashboard-token-xnvct

Namespace: kube-system

Labels:

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: 5d14b8fb-9b64-4e5b-ad49-13ac04cf44be

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1379 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi14bnZjdCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjVkMTRiOGZiLTliNjQtNGU1Yi1hZDQ5LTEzYWMwNGNmNDRiZSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.qs1oTLOPUuufo5rJC6QkS09tGUBVHd21xqR4BfOU7QLv5Ua_thlFDVps5V1pznFTyk7hV_9pN9BmZ6GPecuF2eWiwUm-sLv5gf0lg1kvO3ObO9R1RJ8AuJ6slNXJwlpQC8H0jRK2QYgLEWvnF1_tHH2F0ZTmyqBnf_O-rMrwQvLr4FGEmiZ3yf_yI6V7gNwZ_TdWTrcxpaVZk8urpmucda-o9IToy98I0MrDe1EfLJrMl_YIBppmMJFFfTgArQ7IFVQ0STlpqKY6OKV8pTXXnuUnTuD0BzLxfXQfUMo-otQdSxvhQEr8vM5vC5zrvlnBkGqJ15ctQgT0qshAcENBJg

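Copy the value of the token field at the end, paste it into the dashboard login page, and log in.

The login succeeds, but because this account has only the minimal permissions from the official manifest, the dashboard reports permission (forbidden) errors.

Notes:

1. In v1.8.3, if you click Skip on the login page, you get the permissions of the service account set by serviceAccountName in dp.yaml.

2. You can still log in with the token of the account configured earlier (kubernetes-dashboard-admin), because its binding to the cluster-admin role still exists.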
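The forbidden errors come from how RBAC evaluates a request: it is denied unless at least one rule allows the API group, resource and verb, and a rule that lists resourceNames only covers those named objects. A minimal Python model of that check, using the rules of the kubernetes-dashboard-minimal Role above; `PolicyRule`, `rule_allows` and `allowed` are hypothetical names, and the real authorizer also handles subresource wildcards, non-resource URLs and namespaces, which are left out here.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PolicyRule:
    api_groups: List[str]
    resources: List[str]
    verbs: List[str]
    resource_names: List[str] = field(default_factory=list)


def _covers(allowed_values: List[str], value: str) -> bool:
    return "*" in allowed_values or value in allowed_values


def rule_allows(rule: PolicyRule, api_group: str, resource: str,
                verb: str, name: str = "") -> bool:
    if not (_covers(rule.api_groups, api_group)
            and _covers(rule.resources, resource)
            and _covers(rule.verbs, verb)):
        return False
    # An empty resourceNames list means "any object of this resource".
    return not rule.resource_names or name in rule.resource_names


def allowed(rules: List[PolicyRule], api_group: str, resource: str,
            verb: str, name: str = "") -> bool:
    # RBAC is purely additive: deny unless some rule allows the request.
    return any(rule_allows(r, api_group, resource, verb, name) for r in rules)


# Rules of the kubernetes-dashboard-minimal Role (core API group is "").
MINIMAL = [
    PolicyRule([""], ["secrets"], ["get", "update", "delete"],
               ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]),
    PolicyRule([""], ["configmaps"], ["get", "update"],
               ["kubernetes-dashboard-settings"]),
    PolicyRule([""], ["services"], ["proxy"], ["heapster"]),
    PolicyRule([""], ["services/proxy"], ["get"],
               ["heapster", "http:heapster:", "https:heapster:"]),
]
```

For example, allowed(MINIMAL, "", "pods", "list") is False, which is why the dashboard cannot list pods with this account, while a ClusterRole such as cluster-admin grants everything through "*" rules.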

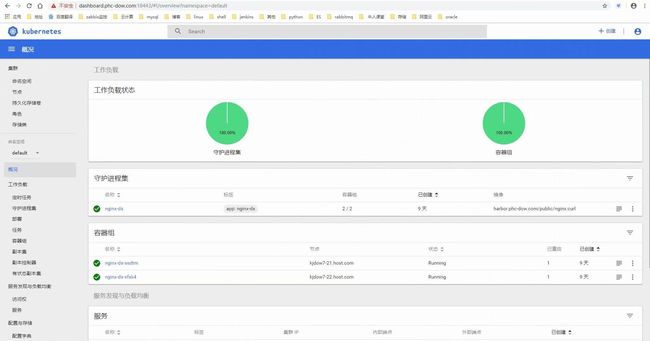

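4.9 How RBAC works in Kubernetes

- Kubernetes has supported role-based access control (RBAC) since 1.6 (GA since 1.8)

- It covers permissions on every kind of resource in the cluster

- Permissions can be changed at runtime without restarting the apiserver

Using the dashboard as an example of managing permissions:

- Three kinds of objects are involved: accounts, roles, and permissions (rules).

- Permissions are granted to a role when the role is defined; the role is then bound to an account, which thereby gains those permissions. An account can be bound to several roles, so permissions can be combined flexibly.

- In this version of Kubernetes, each service account automatically gets a token Secret (the Secret belongs to the account, not to a role). Describe the Secret, copy the value of its token field and log in with it; you then have the permissions of every role bound to that account.

  - kubectl get secret -n kube-system   # list the Secrets

  - kubectl describe secret kubernetes-dashboard-token-xnvct -n kube-system   # show a Secret in detail and copy its token to log in

- Accounts are either user accounts or service accounts.

- Roles are either Role (namespaced) or ClusterRole (cluster-wide).

- Correspondingly, there are two kinds of bindings: RoleBinding and ClusterRoleBinding.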
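The difference between the two binding kinds is scope: a ClusterRoleBinding applies its role everywhere, while a RoleBinding applies its role only inside the binding's own namespace. A minimal sketch of that lookup, using the two bindings created in this article; `Binding` and `roles_in_effect` are hypothetical names, and subjects are simplified to the service-account user name system:serviceaccount:<namespace>:<name>.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Binding:
    kind: str                        # "RoleBinding" or "ClusterRoleBinding"
    role: str                        # name of the referenced Role/ClusterRole
    subject: str                     # service-account user name
    namespace: Optional[str] = None  # only meaningful for RoleBinding


def roles_in_effect(bindings: List[Binding], subject: str,
                    namespace: Optional[str]) -> List[str]:
    """Roles whose rules apply to `subject` for a request in `namespace`
    (None means a cluster-scoped request, such as listing nodes)."""
    result = []
    for b in bindings:
        if b.subject != subject:
            continue
        if b.kind == "ClusterRoleBinding":
            result.append(b.role)  # applies in every namespace
        elif b.kind == "RoleBinding" and namespace is not None and b.namespace == namespace:
            result.append(b.role)  # applies only in the binding's namespace
    return result


SA_ADMIN = "system:serviceaccount:kube-system:kubernetes-dashboard-admin"
SA_MIN = "system:serviceaccount:kube-system:kubernetes-dashboard"
BINDINGS = [
    Binding("ClusterRoleBinding", "cluster-admin", SA_ADMIN),
    Binding("RoleBinding", "kubernetes-dashboard-minimal", SA_MIN, "kube-system"),
]
```

So the admin token works in every namespace, while the minimal account has no role at all outside kube-system.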

5. Dashboard add-on: heapster

5.1 Prepare the heapster image

Run on kjdow7-200:

[root@kjdow7-200 ~]# docker pull quay.io/bitnami/heapster:1.5.4

[root@kjdow7-200 ~]# docker images | grep heapster

quay.io/bitnami/heapster 1.5.4 c359b95ad38b 11 months ago 136MB

[root@kjdow7-200 ~]# docker tag c359b95ad38b harbor.phc-dow.com/public/heapster:v1.5.4

[root@kjdow7-200 ~]# docker push harbor.phc-dow.com/public/heapster:v1.5.4

5.2 Prepare the resource manifests

Run on kjdow7-200:

[root@kjdow7-200 ~]# mkdir /data/k8s-yaml/dashboard/heapster

[root@kjdow7-200 ~]# vi /data/k8s-yaml/dashboard/heapster/rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: heapster
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:heapster
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system

[root@kjdow7-200 ~]# vi /data/k8s-yaml/dashboard/heapster/deployment.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: harbor.phc-dow.com/public/heapster:v1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /opt/bitnami/heapster/bin/heapster
        - --source=kubernetes:https://kubernetes.default
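This heapster Deployment uses extensions/v1beta1, where spec.selector may be omitted and defaults to the pod template's labels. That API version for Deployments was removed in Kubernetes 1.16; under apps/v1 (used by the dashboard dp.yaml) spec.selector is required and must match the template labels. A minimal pre-flight check, assuming the manifest has been loaded into a dict; `selector_problems` is a hypothetical helper and only looks at matchLabels, not matchExpressions.

```python
from typing import Any, Dict, List


def selector_problems(deployment: Dict[str, Any]) -> List[str]:
    """List reasons this Deployment would be rejected under apps/v1
    (simplified: only spec.selector.matchLabels is checked)."""
    spec = deployment.get("spec", {})
    labels = spec.get("template", {}).get("metadata", {}).get("labels", {})
    match = spec.get("selector", {}).get("matchLabels")
    if not match:
        return ["spec.selector.matchLabels is required in apps/v1"]
    return [f"selector {k}={v} does not match template labels"
            for k, v in match.items() if labels.get(k) != v]


# The heapster manifest above, as a dict (no selector).
HEAPSTER = {
    "apiVersion": "extensions/v1beta1",
    "kind": "Deployment",
    "spec": {
        "replicas": 1,
        "template": {"metadata": {"labels": {"task": "monitoring",
                                             "k8s-app": "heapster"}}},
    },
}
```

selector_problems(HEAPSTER) reports the missing selector; adding selector: {matchLabels: {k8s-app: heapster}} under spec makes it pass.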

[root@kjdow7-200 ~]# vi /data/k8s-yaml/dashboard/heapster/svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster

5.3 Apply the manifests

Run on any compute node:

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/dashboard/heapster/rbac.yaml

serviceaccount/heapster created

clusterrolebinding.rbac.authorization.k8s.io/heapster created

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/dashboard/heapster/deployment.yaml

deployment.extensions/heapster created

[root@kjdow7-21 ~]# kubectl apply -f http://k8s-yaml.phc-dow.com/dashboard/heapster/svc.yaml

service/heapster created

[root@kjdow7-21 ~]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-7dd986bcdc-rq9dz 1/1 Running 0 26h 172.7.21.3 kjdow7-21.host.com

heapster-96d7f656f-4xw5k 1/1 Running 0 88s 172.7.21.4 kjdow7-21.host.com

kubernetes-dashboard-7f5f8dd677-knlrs 1/1 Running 0 8d 172.7.22.5 kjdow7-22.host.com

traefik-ingress-7fsp8 1/1 Running 0 11d 172.7.21.5 kjdow7-21.host.com

traefik-ingress-rlwrj 1/1 Running 0 11d 172.7.22.4 kjdow7-22.host.com