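Integrating Azkaban 2.5.0 with Hadoop 2.5.1

This article covers installing and deploying Azkaban together with Hadoop 2.5.1 and Hive 0.13.1. I had been using Oozie with Hadoop 1 until now, and this is my first contact with Azkaban and Hadoop 2, so my understanding of both is still shallow; corrections are welcome wherever the article gets something wrong. The main goal is to get the ball rolling and spare others from falling into the same pits.

Component versions

- CentOS 6.5, 64-bit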

- Java 1.7

- Hadoop 2.5.1

- Hive 0.13.1

- MySQL 5.5

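Prerequisites

- Hadoop cluster - must be up and running, with the relevant environment variables configured: JAVA_HOME, HADOOP_HOME, HADOOP_MAPRED_HOME, HADOOP_COMMON_HOME, HADOOP_HDFS_HOME, HADOOP_YARN_HOME and HADOOP_CONF_DIR.

The Hadoop cluster consists of three servers: the namenode at 192.168.20.221 and datanodes at 192.168.20.222 and 192.168.20.224. No Hadoop security features are enabled.

This article assumes HADOOP_HOME=/usr/local/hadoop.

- Hive - already deployed on 192.168.20.222, with the HIVE_HOME environment variable set and both $HIVE_HOME/bin and $HADOOP_HOME/bin added to PATH.

This article assumes HIVE_HOME=/opt/hive.

- MySQL Server - available.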
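Before going further, the environment variables listed above can be checked with a few lines of shell. This is only a sketch: the function name missing_env is made up for illustration, and the variable list is simply the one from this section plus HIVE_HOME.

```shell
# Print the name of every expected variable that is unset or empty.
# missing_env is a hypothetical helper, not part of Azkaban or Hadoop.
missing_env() {
    for name in JAVA_HOME HADOOP_HOME HADOOP_MAPRED_HOME HADOOP_COMMON_HOME \
                HADOOP_HDFS_HOME HADOOP_YARN_HOME HADOOP_CONF_DIR HIVE_HOME; do
        # indirect lookup of the variable whose name is stored in $name
        eval "value=\${$name:-}"
        [ -n "$value" ] || echo "$name"
    done
}

missing=$(missing_env)
if [ -n "$missing" ]; then
    echo "unset variables:" $missing >&2
fi
```

Any name it reports still has to be exported for the user that will run Azkaban.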

Preparation

Downloading the packages

Download the following packages from http://azkaban.github.io/downloads.html:

- azkaban-web-server-2.5.0.tar.gz

- azkaban-executor-server-2.5.0.tar.gz

- azkaban-sql-script-2.5.0.tar.gz

- azkaban-jobtype-2.5.0.tar.gz

- azkaban-hdfs-viewer-2.5.0.tar.gz

- azkaban-jobsummary-2.5.0.tar.gz

- azkaban-reportal-2.5.0.tar.gz

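Downloading the azkaban plugins source

A few bugs have to be fixed during installation, which means rebuilding azkaban plugins. Get the source from https://github.com/azkaban/azkaban-plugins and switch to the release-2.5 branch. For convenience, the full path of the extracted source is referred to as ${AZKABAN_PLUGINS_SOURCE}.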
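Fetching the source could look roughly like the following. It is a sketch under a few assumptions: git is installed, the machine has network access, and fetch_plugins_source is a hypothetical helper name; the URL and branch name are the ones given above.

```shell
# fetch_plugins_source DEST
# Clone azkaban-plugins into DEST and check out the release-2.5 branch.
# Hypothetical helper; it is only defined here, not executed.
fetch_plugins_source() {
    dest=$1
    git clone https://github.com/azkaban/azkaban-plugins "$dest" || return 1
    (cd "$dest" && git checkout release-2.5)
}

# Example (needs network access):
#   fetch_plugins_source "$HOME/azkaban-plugins"
#   AZKABAN_PLUGINS_SOURCE=$HOME/azkaban-plugins
```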

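The build machine needs JDK 1.6+ and ant, so set those up in advance. To save time, build each plugin from its own directory, for example ${AZKABAN_PLUGINS_SOURCE}/plugins/reportal/.

It is also best to run sudo ant once from the root directory so that part of the tree gets built, for example to generate dist/package.version and the basic dependencies. Errors may show up along the way, such as a missing dustc executable, but they have no direct effect on the builds described in this article.

Deployment topology

This article deploys the azkaban web server and the azkaban executor server separately: the web server on 192.168.20.221 and the executor server on 192.168.20.222. All settings shown here are the ones that meet my current needs; for anything else, consult the online documentation or the source code.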

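Deploying the Azkaban Web Server

Database initialization

This part is essentially the same as in the azkaban documentation.

- Create the database

Run the following in your database client:

CREATE DATABASE `azkaban` /*!40100 DEFAULT CHARACTER SET utf8 */;

- Create the tables

Extract azkaban-sql-script-2.5.0.tar.gz and run the create-all-sql-2.5.0.sql file from the extracted package against the azkaban database created in the previous step.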
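The two database steps can also be driven from the command line. The sketch below assumes the mysql command-line client is installed and that the root account is used; create_db_sql and init_azkaban_db are hypothetical helper names, and the path to create-all-sql-2.5.0.sql is passed in as an argument because the layout of the extracted package is not described here.

```shell
# Print the CREATE DATABASE statement from the step above for a schema name.
create_db_sql() {
    printf 'CREATE DATABASE `%s` /*!40100 DEFAULT CHARACTER SET utf8 */;\n' "$1"
}

# init_azkaban_db SQL_FILE [DB_NAME] [MYSQL_USER]
# SQL_FILE is the path to create-all-sql-2.5.0.sql from the extracted package.
# Hypothetical helper; it is only defined here, not executed.
init_azkaban_db() {
    sql_file=$1
    db=${2:-azkaban}
    user=${3:-root}
    if [ ! -r "$sql_file" ]; then
        echo "cannot read $sql_file" >&2
        return 1
    fi
    # -p makes each mysql call prompt for the password
    create_db_sql "$db" | mysql -u "$user" -p || return 1
    mysql -u "$user" -p "$db" < "$sql_file"
}

# Example:
#   init_azkaban_db /path/to/create-all-sql-2.5.0.sql
```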

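Extraction and deployment

Extract azkaban-web-server-2.5.0.tar.gz. For convenience, ${AZKABAN_WEB_SERVER} is used below as the installation directory. This version of azkaban ships mysql-connector-java-5.1.28.jar in lib; if that version does not match your environment, replace it yourself.

Generating the keystore

Run the following command in ${AZKABAN_WEB_SERVER}/conf:

keytool -keystore keystore -alias azkaban -genkey -keyalg RSA

Enter keystore password: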

Re-enter new password:

What is your first and last name?

[Unknown]: azkaban.test.com

What is the name of your organizational unit?

[Unknown]: azkaban

What is the name of your organization?

[Unknown]: test

What is the name of your City or Locality?

[Unknown]: beijing

What is the name of your State or Province?

[Unknown]: beijing

What is the two-letter country code for this unit?

[Unknown]: CN

Is CN=azkaban.test.com, OU=azkaban, O=test, L=beijing, ST=beijing, C=CN correct?

[no]: yes

Enter key password for <azkaban>

(RETURN if same as keystore password):

Configuration

All settings here are the minimum needed to get things running; look up anything else you need yourself. Edit ${AZKABAN_WEB_SERVER}/conf/azkaban.properties:

- Time zone

default.timezone.id=Asia/Shanghai

- Database

Set the mysql.xxx properties to match your actual MySQL setup.

- Keystore

jetty.keystore=conf/keystore

jetty.password=password

jetty.keypassword=password

jetty.truststore=conf/keystore

jetty.trustpassword=password

- Jetty host

jetty.hostname=192.168.20.221

- Azkaban Executor Server

executor.host=192.168.20.222

executor.port=12321

- Mail

mail.sender=[email protected]

mail.host=mail.xxxx.com

mail.user=mailuser

mail.password=password
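Since a missing property is easy to overlook, a small check over azkaban.properties can help before the first start. The following is a sketch only: check_props is a hypothetical helper, and the keys in the usage example are the ones set in this section.

```shell
# check_props FILE KEY...
# Reports (on stderr) every KEY that has no uncommented, non-empty
# "KEY=value" line in FILE; returns 1 if any key is missing.
# Hypothetical helper, not part of Azkaban.
check_props() {
    file=$1
    shift
    rc=0
    for key in "$@"; do
        # escape the dots so that they match literally in the regular expression
        pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
        if ! grep -Eq "^[[:space:]]*${pattern}[[:space:]]*=[[:space:]]*[^[:space:]]" "$file"; then
            echo "missing or empty: $key" >&2
            rc=1
        fi
    done
    return "$rc"
}

# Example, using the keys configured in this section:
#   check_props "$AZKABAN_WEB_SERVER/conf/azkaban.properties" \
#       default.timezone.id jetty.keystore jetty.password jetty.keypassword \
#       jetty.truststore jetty.trustpassword jetty.hostname \
#       executor.host executor.port
```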

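Starting and stopping the service

- Start - before starting, create a logs directory under ${AZKABAN_WEB_SERVER}; then, from ${AZKABAN_WEB_SERVER}, run:

bin/start-web.sh

The service is then reachable at https://{localhost}:8443; the default account is azkaban/azkaban.

- Stop - from ${AZKABAN_WEB_SERVER}, run the following to stop the service:

bin/azkaban-web-shutdown.sh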

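Installing plugins

To prepare for plugin installation, create a viewer directory under ${AZKABAN_WEB_SERVER}/plugins/; all viewer plugins are installed there. After installing a plugin, restart the service for it to take effect.

Installing the HDFS Viewer plugin

- Extract the plugin

Extract azkaban-hdfs-viewer-2.5.0.tar.gz inside the viewer directory, which yields an azkaban-hdfs-viewer-2.5.0 directory, and rename it to hdfs. The plugin then lives at ${AZKABAN_WEB_SERVER}/plugins/viewer/hdfs.

- Configure the HDFS Viewer

Edit ${AZKABAN_WEB_SERVER}/plugins/viewer/hdfs/conf/plugin.properties and set proxy.user to suit your scenario. Note that setting viewer.external.classpaths had no effect for me; I cannot tell whether that is a bug or a mistake on my side, so the next step is needed instead.

- Add the dependency jars

This version of Azkaban cannot find the relevant Hadoop 2 dependency jars, and the HDFS Viewer needs the following ones, which I placed in the extlib directory:

[root@mn extlib]# ll
total 10448
-rw-r--r-- 1 root root   41123 Oct 29 18:09 commons-cli-1.2.jar
-rw-r--r-- 1 root root   52418 Oct 29 18:09 hadoop-auth-2.5.1.jar
-rw-r--r-- 1 root root 2962475 Oct 29 18:09 hadoop-common-2.5.1.jar
-rw-r--r-- 1 root root 7095356 Oct 29 18:09 hadoop-hdfs-2.5.1.jar
-rw-r--r-- 1 root root  533455 Oct 29 18:09 protobuf-java-2.5.0.jar

The downside of this approach is that whenever Hadoop is upgraded, you must remember to update these jars as well!

- Result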

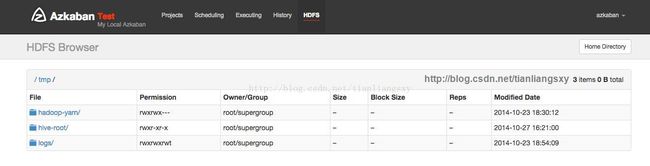

The navigation bar now shows an additional HDFS tab.
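The jar-copy step for the HDFS Viewer can be scripted so that it is easy to repeat after a Hadoop upgrade. The sketch below assumes the five jars from the listing sit somewhere under $HADOOP_HOME/share/hadoop, which is where a stock Hadoop 2.5.1 tarball keeps them as far as I can tell; copy_hdfs_viewer_jars is a hypothetical helper and the extlib path is passed in explicitly.

```shell
# copy_hdfs_viewer_jars HADOOP_HOME EXTLIB
# Copy the five jars from the listing into EXTLIB, failing on the first
# jar that cannot be found. Hypothetical helper, not part of Azkaban.
copy_hdfs_viewer_jars() {
    hadoop_home=$1
    extlib=$2
    mkdir -p "$extlib" || return 1
    for jar in commons-cli-1.2.jar hadoop-auth-2.5.1.jar hadoop-common-2.5.1.jar \
               hadoop-hdfs-2.5.1.jar protobuf-java-2.5.0.jar; do
        # search the whole share/hadoop tree and take the first match
        src=$(find "$hadoop_home/share/hadoop" -name "$jar" -type f | head -n 1)
        if [ -z "$src" ]; then
            echo "not found: $jar" >&2
            return 1
        fi
        cp "$src" "$extlib/" || return 1
    done
}

# Example (the extlib location is an assumption about your layout):
#   copy_hdfs_viewer_jars "$HADOOP_HOME" "$AZKABAN_WEB_SERVER/extlib"
```

The version numbers are hard-coded on purpose, so the script fails loudly when the Hadoop version changes instead of silently leaving stale jars behind.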

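Installing the Job Summary plugin

- Extract the plugin

Extract azkaban-jobsummary-2.5.0.tar.gz inside the viewer directory, which yields an azkaban-jobsummary-2.5.0 directory, and rename it to jobsummary. The plugin then lives at ${AZKABAN_WEB_SERVER}/plugins/viewer/jobsummary.

- Configure Job Summary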

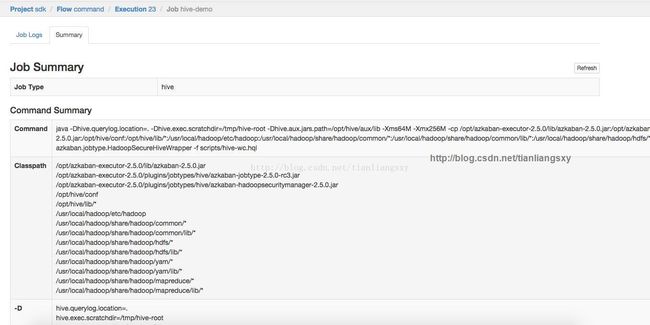

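No changes are needed by default; if you do need to adjust something, edit ${AZKABAN_WEB_SERVER}/plugins/viewer/jobsummary/conf/plugin.properties.

- Result

Installing the Reportal plugin

The Reportal plugin has to be installed not only on the Azkaban Web Server (as a Viewer plugin) but also on the Azkaban Executor Server (as a Jobtype plugin). This subsection covers the former; for the latter, see the Azkaban Executor Server deployment below.

- Extract the plugin

Because azkaban-reportal-2.5.0.tar.gz contains both a Viewer plugin and a Jobtype plugin, first extract the package into a temporary directory, then copy the resulting viewer/reportal/ directory into the viewer directory created earlier. The plugin then lives at ${AZKABAN_WEB_SERVER}/plugins/viewer/reportal.

- Configure the Reportal Viewer

Edit ${AZKABAN_WEB_SERVER}/plugins/viewer/reportal/conf/plugin.properties. Because the Web Server and the Executor Server are deployed separately, report results cannot be kept on the local file system and are stored in HDFS instead:

reportal.output.filesystem=hdfs

- Result

Once configured, a Reportal link appears in the navigation bar on the home page, but report jobs cannot run properly yet.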

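Deploying the Azkaban Executor Server

Extraction and deployment

Extract azkaban-executor-server-2.5.0.tar.gz into the installation directory, referred to below as ${AZKABAN_EXECUTOR_SERVER}. This version of azkaban ships mysql-connector-java-5.1.28.jar in lib; if that version does not match your environment, replace it yourself.

Configuration

Edit ${AZKABAN_EXECUTOR_SERVER}/conf/azkaban.properties:

- Time zone

default.timezone.id=Asia/Shanghai

- Database - use the same database settings as on the Web Server.

Starting and stopping the service

- Start - as with the Web Server, first create a logs directory under ${AZKABAN_EXECUTOR_SERVER}; then, from ${AZKABAN_EXECUTOR_SERVER}, run:

bin/start-exec.sh

- Stop - from ${AZKABAN_EXECUTOR_SERVER}, run the following to stop the service:

bin/azkaban-executor-shutdown.sh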

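Preparing for plugin installation

Go to ${AZKABAN_EXECUTOR_SERVER}/plugins and extract azkaban-jobtype-2.5.0.tar.gz there, which yields an azkaban-jobtype-2.5.0 directory; rename it to jobtypes. This directory holds every plugin installed from here on, much like the viewer directory on the Web Server. The Executor Server has to be restarted each time a plugin is installed or reconfigured.

Edit ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/common.properties and set hadoop.home and hive.home to match your HADOOP_HOME and HIVE_HOME environment variables respectively. For example:

hadoop.home=/usr/local/hadoop

hive.home=/opt/hive

Set the same two values in ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/commonprivate.properties, together with jobtype.global.classpath:

hadoop.home=/usr/local/hadoop

hive.home=/opt/hive

jobtype.global.classpath=${hadoop.home}/etc/hadoop,${hadoop.home}/share/hadoop/common/*,${hadoop.home}/share/hadoop/common/lib/*,${hadoop.home}/share/hadoop/hdfs/*,${hadoop.home}/share/hadoop/hdfs/lib/*,${hadoop.home}/share/hadoop/yarn/*,${hadoop.home}/share/hadoop/yarn/lib/*,${hadoop.home}/share/hadoop/mapreduce/*,${hadoop.home}/share/hadoop/mapreduce/lib/*

Installing the Hive plugin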

The hive plugin was already installed by the previous step; this section is mainly about configuring it.

- Configuration

Before configuring, note that azkaban expects the hive aux lib directory at $HIVE_HOME/aux/lib by default. Either create that directory under $HIVE_HOME, or change hive.aux.jars.path and hive.aux.jar.path in the two configuration files mentioned below to the path you want. I also prefixed both property values with file:// to state explicitly that local files are used.

1.1. Edit ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/hive/private.properties as follows:

jobtype.classpath=${hive.home}/conf,${hive.home}/lib/*

hive.aux.jar.path=file://${hive.home}/aux/lib

jobtype.classpath is combined with jobtype.global.classpath from ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/commonprivate.properties to form the classpath of hive jobs. How the values are split between the two properties is up to you, as long as the resulting classpath is the one you want.

1.2. Edit ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/hive/plugin.properties as follows:

hive.aux.jars.path=file://${hive.home}/aux/lib

- Modifying and rebuilding the source

In this version of Azkaban, ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/hive/azkaban-jobtype-2.5.0.jar has a bug: on Hadoop 2.5.1 (other 2.x versions untested), running a hive job throws the following exception:

Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.hive.shims.HadoopShims.usesJobShell()Z
    at azkaban.jobtype.HadoopSecureHiveWrapper.runHive(HadoopSecureHiveWrapper.java:148)
    at azkaban.jobtype.HadoopSecureHiveWrapper.main(HadoopSecureHiveWrapper.java:115)

The fix is to edit ${AZKABAN_PLUGINS_SOURCE}/plugins/jobtype/src/azkaban/jobtype/HadoopSecureHiveWrapper.java and find the following snippet:

if (!ShimLoader.getHadoopShims().usesJobShell()) {
... ...
}

Remove the if condition, that is, delete two lines. Then go to ${AZKABAN_PLUGINS_SOURCE}/plugins/hadoopsecuritymanager/ and run:

sudo ant

Then go to ${AZKABAN_PLUGINS_SOURCE}/plugins/jobtype and run the same command:

sudo ant

On success this produces ${AZKABAN_PLUGINS_SOURCE}/dist/jobtype/jars/azkaban-jobtype-2.5.0-rc3.jar; use this jar to replace ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/hive/azkaban-jobtype-2.5.0.jar.
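When swapping in the rebuilt jar, it may be worth keeping the original around in case the new build misbehaves. A minimal sketch follows, assuming a backup directory outside the plugin tree; replace_jar is a hypothetical helper, not something shipped with Azkaban.

```shell
# replace_jar NEW_JAR OLD_JAR BACKUP_DIR
# Save a copy of OLD_JAR in BACKUP_DIR (if it exists), then overwrite it
# with NEW_JAR. Hypothetical helper, not part of Azkaban.
replace_jar() {
    new_jar=$1
    old_jar=$2
    backup_dir=$3
    if [ ! -f "$new_jar" ]; then
        echo "missing build output: $new_jar" >&2
        return 1
    fi
    mkdir -p "$backup_dir" || return 1
    if [ -f "$old_jar" ]; then
        cp -p "$old_jar" "$backup_dir/" || return 1
    fi
    cp "$new_jar" "$old_jar"
}

# Example with the paths from this section (the backup location is arbitrary):
#   replace_jar "$AZKABAN_PLUGINS_SOURCE/dist/jobtype/jars/azkaban-jobtype-2.5.0-rc3.jar" \
#               "$AZKABAN_EXECUTOR_SERVER/plugins/jobtypes/hive/azkaban-jobtype-2.5.0.jar" \
#               "$HOME/azkaban-jar-backup"
```

The backup is kept outside the plugin directory so that no second copy of the jar ends up next to the replaced one.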

Installing the Reportalhive plugin

- Extraction and deployment

Extract azkaban-reportal-2.5.0.tar.gz and copy jobtypes/reportalhive/ into ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes. The full path is ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/reportalhive.

- Update the dependency jars

The azkaban-hadoopsecuritymanager-2.2.jar and azkaban-jobtype-2.1.jar in the Reportalhive plugin's root directory do not match the current version. Replace both with the corresponding jars from the hive plugin (${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/hive); otherwise report jobs fail with the following error:

Exception in thread "main" java.lang.ClassNotFoundException: azkaban.jobtype.ReportalHiveRunner
    at java.net.URLClassLoader$1.run(URLClassLoader.java:366)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:355)
    at java.security.AccessController.doPrivileged(Native Method)
    at java.net.URLClassLoader.findClass(URLClassLoader.java:354)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:425)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:358)
    at azkaban.jobtype.HadoopJavaJobRunnerMain.getObject(HadoopJavaJobRunnerMain.java:299)
    at azkaban.jobtype.HadoopJavaJobRunnerMain.<init>(HadoopJavaJobRunnerMain.java:146)
    at azkaban.jobtype.HadoopJavaJobRunnerMain.main(HadoopJavaJobRunnerMain.java:76)

${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/reportalhive/lib/azkaban-reportal-2.5.jar has a bug as well. Edit ${AZKABAN_PLUGINS_SOURCE}/plugins/reportal/src/azkaban/jobtype/ReportalHiveRunner.java and find the following snippet:

if (!ShimLoader.getHadoopShims().usesJobShell()) {
... ...
}

Remove the if condition, then go to ${AZKABAN_PLUGINS_SOURCE}/plugins/reportal and run sudo ant to produce ${AZKABAN_PLUGINS_SOURCE}/dist/reportal/jars/azkaban-reportal-2.5.jar. Use this jar to replace ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/reportalhive/lib/azkaban-reportal-2.5.jar; otherwise report jobs fail with the following error:

Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.hive.shims.HadoopShims.usesJobShell()Z
    at azkaban.jobtype.HadoopSecureHiveWrapper.runHive(HadoopSecureHiveWrapper.java:148)
    at azkaban.jobtype.HadoopSecureHiveWrapper.main(HadoopSecureHiveWrapper.java:115)

- Configure Reportalhive

3.1. Configure plugin.properties

hive.home can be commented out, since it is already set in ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/common.properties and ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/commonprivate.properties. The other properties changed:

hive.aux.jars.path=file://${hive.home}/aux/lib

hadoop.dir.conf=${hadoop.home}/etc/hadoop

hive.aux.jars.path - uses the local hive aux lib; to use HDFS instead, change file to hdfs.

hadoop.dir.conf - the configuration directory of Hadoop 2 differs from that of Hadoop 1, so remember to change it.

3.2. Configure private.properties

As above, hive.home can be commented out. The other properties changed:

jobtype.classpath=${hadoop.home}/conf,${hadoop.home}/lib/*,${hive.home}/lib/*,./lib/*

hive.aux.jars.path=file://${hive.home}/aux/lib

hadoop.dir.conf=${hadoop.home}/etc/hadoop

#jobtype.global.classpath=

#hive.classpath.items=

jobtype.classpath - unlike in the hive plugin configuration, the plugin's own lib directory has to be on the classpath so that azkaban-reportal-2.5.jar is picked up; otherwise an error is raised.

jobtype.global.classpath - already defined in ${AZKABAN_EXECUTOR_SERVER}/plugins/jobtypes/commonprivate.properties, so it can be commented out.

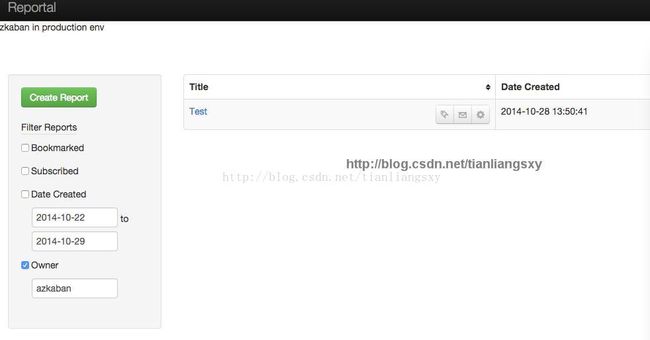

hive.classpath.items - unused, so it can be commented out as well.

- Result

That completes the deployment; a variety of job types can now be run.