MapReduce Study Notes (7): Finding Common Friends

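1 Data

In each line, the user comes before the colon and all of that user's friends come after it (the friend relationships in the data are one-way). Find every pair of users that has common friends, and list who those common friends are.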

A:B,C,D,F,E,O

B:A,C,E,K

C:F,A,D,I

D:A,E,F,L

E:B,C,D,M,L

F:A,B,C,D,E,O,M

G:A,C,D,E,F

H:A,C,D,E,O

I:A,O

J:B,O

K:A,C,D

L:D,E,F

M:E,F,G

O:A,H,I,J

1.1 Approach

1.1.1 map

Read one line: A:B,C,D,F,E,O

Output: <B,A><C,A><D,A><F,A><E,A><O,A>

Read the next line: B:A,C,E,K

Output: <A,B><C,B><E,B><K,B>
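The inversion performed by this map step can be tried without a cluster. The following is a minimal plain-Java sketch, not Hadoop code: the class name `InvertSketch` and the method `invert` are made up for illustration, and an in-memory list stands in for `context.write`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-alone sketch of the first map step (no Hadoop involved).
class InvertSketch {

    // Turns "A:B,C" into [friend, person] records: [B, A], [C, A].
    static List<String[]> invert(String line) {
        String[] personFriends = line.split(":");
        String person = personFriends[0];
        List<String[]> out = new ArrayList<>();
        for (String friend : personFriends[1].split(",")) {
            // The friend becomes the key, the person becomes the value.
            out.add(new String[] {friend, person});
        }
        return out;
    }

    public static void main(String[] args) {
        for (String[] kv : invert("A:B,C,D,F,E,O")) {
            System.out.print("<" + kv[0] + "," + kv[1] + ">");
        }
        System.out.println(); // prints <B,A><C,A><D,A><F,A><E,A><O,A>
    }
}
```

Because the friend is the key, the shuffle phase brings together everyone who lists the same friend, which is exactly the group the reduce step needs.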

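1.1.2 reduce

The data received looks like <C,A><C,B><C,E><C,F><C,G>......

Output:

<A-B,C>

<A-E,C>

<A-F,C>

<A-G,C>

<B-E,C>

<B-F,C>.....

In the source code of sections 2 and 3 the pairing is deferred: the first reducer writes only the friend with its comma-separated list of persons, and the second mapper generates the pairs.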
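The pair generation in this reduce step can be sketched in plain Java as follows. This is only an illustration under assumptions: `PairSketch` and `pairKeys` are hypothetical names, and the input list stands for the persons that arrive for one friend key.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch: build the "X-Y" keys for everyone who shares one friend.
class PairSketch {

    static List<String> pairKeys(List<String> persons) {
        List<String> sorted = new ArrayList<>(persons);
        Collections.sort(sorted); // sorting guarantees A-B is never also written as B-A
        List<String> keys = new ArrayList<>();
        for (int i = 0; i < sorted.size() - 1; i++) {
            for (int j = i + 1; j < sorted.size(); j++) {
                keys.add(sorted.get(i) + "-" + sorted.get(j));
            }
        }
        return keys;
    }

    public static void main(String[] args) {
        // Persons received for the friend C in the example above.
        for (String key : pairKeys(Arrays.asList("A", "B", "E", "F", "G"))) {
            System.out.println("<" + key + ",C>");
        }
    }
}
```

Five persons yield ten pairs, from `<A-B,C>` to `<F-G,C>`. Sorting before pairing matters: without it the same two users could end up under two different keys and never meet in the second reduce.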

1.1.3 Second map

Read a line: <A-B,C>

Write it out unchanged: <A-B,C>

1.1.4 Second reduce

Input data, for example: <A-B,C><A-B,F><A-B,G>.......

Output: A-B C,F,G,.....
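The grouping done by the second reduce can be imitated in memory. The sketch below is an assumption-laden illustration rather than the job itself: `GroupSketch` and `group` are invented names, and a `TreeMap` plays the role of the shuffle that collects all values of one key.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of the second reduce: collect all friends per pair key.
class GroupSketch {

    // Each record is {pairKey, friend}; the result maps pairKey to "f1,f2,...".
    static Map<String, String> group(List<String[]> records) {
        Map<String, List<String>> grouped = new TreeMap<>();
        for (String[] r : records) {
            grouped.computeIfAbsent(r[0], k -> new ArrayList<>()).add(r[1]);
        }
        Map<String, String> out = new TreeMap<>();
        for (Map.Entry<String, List<String>> e : grouped.entrySet()) {
            out.put(e.getKey(), String.join(",", e.getValue()));
        }
        return out;
    }

    public static void main(String[] args) {
        List<String[]> records = new ArrayList<>();
        records.add(new String[] {"A-B", "C"});
        records.add(new String[] {"A-C", "D"});
        records.add(new String[] {"A-B", "E"});
        records.add(new String[] {"A-C", "F"});
        for (Map.Entry<String, String> e : group(records).entrySet()) {
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
        // prints:
        // A-B	C,E
        // A-C	D,F
    }
}
```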

2 First pass

2.1 Source code

package friends;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class SharedFriendsStepOne {

    static class SharedFriendsStepOneMapper extends Mapper<LongWritable, Text, Text, Text> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Input line format: person:friend,friend,...
            String line = value.toString();
            String[] person_friends = line.split(":");
            String person = person_friends[0];
            String friends = person_friends[1];

            for (String friend : friends.split(",")) {
                // Emit <friend, person>
                context.write(new Text(friend), new Text(person));
            }
        }
    }

    static class SharedFriendsStepOneReducer extends Reducer<Text, Text, Text, Text> {

        @Override
        protected void reduce(Text friend, Iterable<Text> persons, Context context) throws IOException, InterruptedException {
            // Join everyone who lists this friend; the result ends with a trailing comma
            StringBuilder sb = new StringBuilder();
            for (Text person : persons) {
                sb.append(person).append(",");
            }
            context.write(friend, new Text(sb.toString()));
        }
    }

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        job.setJarByClass(SharedFriendsStepOne.class);

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);

        job.setMapperClass(SharedFriendsStepOneMapper.class);
        job.setReducerClass(SharedFriendsStepOneReducer.class);

        FileInputFormat.setInputPaths(job, new Path("h:/friends/input"));
        FileOutputFormat.setOutputPath(job, new Path("h:/friends/output_step_one"));

        job.waitForCompletion(true);
    }
}

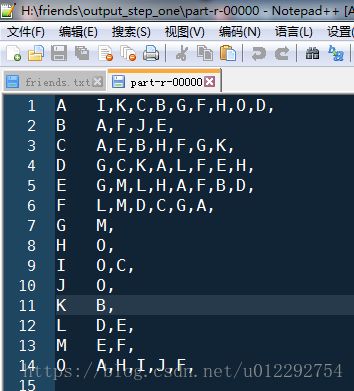

2.2 本地运行结果

3 Second pass

3.1 Source code

package friends;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;
import java.util.Arrays;

public class SharedFriendsStepTwo {

    /*
     * The input is the output of the previous step, for example:
     * A       I,K,C,B,G,F,H,O,D,
     * friend  person,person,person,...
     */
    static class SharedFriendsStepTwoMapper extends Mapper<LongWritable, Text, Text, Text> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String line = value.toString();
            String[] friend_persons = line.split("\t");
            String friend = friend_persons[0];
            // split drops the empty string after the trailing comma
            String[] persons = friend_persons[1].split(",");

            // Sort so that a pair is always written as A-B, never as B-A
            Arrays.sort(persons);

            // Visit every unordered pair, including the last element of the array
            for (int i = 0; i < persons.length - 1; i++) {
                for (int j = i + 1; j < persons.length; j++) {
                    // <person-person, friend>: identical pairs go to the same reduce call
                    context.write(new Text(persons[i] + "-" + persons[j]), new Text(friend));
                }
            }
        }
    }

    static class SharedFriendsStepTwoReducer extends Reducer<Text, Text, Text, Text> {

        @Override
        protected void reduce(Text person_person, Iterable<Text> friends, Context context) throws IOException, InterruptedException {
            // Join all common friends of this pair, separated by spaces
            StringBuilder sb = new StringBuilder();
            for (Text friend : friends) {
                sb.append(friend).append(" ");
            }
            context.write(person_person, new Text(sb.toString()));
        }
    }

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        job.setJarByClass(SharedFriendsStepTwo.class);

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);

        job.setMapperClass(SharedFriendsStepTwoMapper.class);
        job.setReducerClass(SharedFriendsStepTwoReducer.class);

        FileInputFormat.setInputPaths(job, new Path("h:/friends/output_step_one"));
        FileOutputFormat.setOutputPath(job, new Path("h:/friends/output_step_two"));

        job.waitForCompletion(true);
    }
}
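The second mapper depends on two details of plain Java: `String.split` discards the empty string after the trailing comma that step one writes, and a group of n persons contains n(n-1)/2 unordered pairs. The sketch below checks both on one line of step-one output; `StepTwoInputSketch`, `sortedPersons`, and `pairCount` are hypothetical helper names, not part of the job.

```java
import java.util.Arrays;

// Hypothetical sketch: parse one line of step-one output outside Hadoop.
class StepTwoInputSketch {

    // "A\tI,K,C," -> sorted persons [C, I, K]; the trailing comma adds no element.
    static String[] sortedPersons(String line) {
        String[] friendPersons = line.split("\t");
        String[] persons = friendPersons[1].split(",");
        Arrays.sort(persons);
        return persons;
    }

    // Number of unordered pairs among n persons.
    static int pairCount(int n) {
        return n * (n - 1) / 2;
    }

    public static void main(String[] args) {
        String[] persons = sortedPersons("A\tI,K,C,B,G,F,H,O,D,");
        System.out.println(Arrays.toString(persons)); // prints [B, C, D, F, G, H, I, K, O]
        System.out.println(pairCount(persons.length)); // prints 36
    }
}
```

Counting the records a mapper emits for one line against `pairCount` is a quick way to catch loop bounds that stop too early.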

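3.2 Local run result

The listing below matches what the job produces when the pair loops stop one element before the end of the sorted array (`i < persons.length - 2`, `j < persons.length - 1`): the alphabetically last person of each group is never paired, so no pair involving J, M, or O appears, and some rows lack a friend (A-K omits C, A-L omits D). With the bounds `i < persons.length - 1` and `j < persons.length` these entries are included.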

A-B C E

A-C F D

A-D E F

A-E B C D

A-F C D B E O

A-G D E F C

A-H E O C D

A-I O

A-K D

A-L F E

B-C A

B-D E A

B-E C

B-F E A C

B-G C E A

B-H E C A

B-I A

B-K A

B-L E

C-D F A

C-E D

C-F D A

C-G F A D

C-H A D

C-I A

C-K D A

C-L F

D-F E A

D-G A E F

D-H A E

D-I A

D-K A

D-L F E

E-F C D B

E-G D C

E-H D C

E-K D

F-G C E D A

F-H C A D E O

F-I A O

F-K D A

F-L E

G-H D E C A

G-I A

G-K A D

G-L F E

H-I A O

H-K A D

H-L E

I-K A
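Individual rows of such a result can be cross-checked without MapReduce by intersecting the two friend sets directly. The following brute-force sketch does that for the sample data from section 1; the class `CommonFriendsCheck` and its methods are illustrative names only, and this approach is meant for small inputs, not as a replacement for the two jobs.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical brute-force check: intersect the friend sets of two users.
class CommonFriendsCheck {

    // The sample data from section 1.
    static final String[] DATA = {
        "A:B,C,D,F,E,O", "B:A,C,E,K", "C:F,A,D,I", "D:A,E,F,L", "E:B,C,D,M,L",
        "F:A,B,C,D,E,O,M", "G:A,C,D,E,F", "H:A,C,D,E,O", "I:A,O", "J:B,O",
        "K:A,C,D", "L:D,E,F", "M:E,F,G", "O:A,H,I,J"
    };

    // Parses "person:friend,friend,..." lines into person -> sorted friend set.
    static Map<String, Set<String>> parse(String[] lines) {
        Map<String, Set<String>> friendsOf = new TreeMap<>();
        for (String line : lines) {
            String[] parts = line.split(":");
            friendsOf.put(parts[0], new TreeSet<>(Arrays.asList(parts[1].split(","))));
        }
        return friendsOf;
    }

    // Friends listed by both a and b.
    static Set<String> common(Map<String, Set<String>> friendsOf, String a, String b) {
        Set<String> result = new TreeSet<>(friendsOf.get(a));
        result.retainAll(friendsOf.get(b));
        return result;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> friendsOf = parse(DATA);
        System.out.println("A-B " + common(friendsOf, "A", "B")); // prints A-B [C, E]
        System.out.println("A-F " + common(friendsOf, "A", "F")); // prints A-F [B, C, D, E, O]
    }
}
```

Both rows agree with the listing above, apart from the order in which the friends are printed.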