PaddleClas: Evaluating TensorRT Inference for Image Classification Models

Introduction

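- When classification models are introduced, they are usually compared not only on accuracy but also on FLOPs and params. FLOPs measure the amount of computation and indirectly reflect inference speed; params measure the number of parameters and reflect storage size. The catch is that two networks with the same FLOPs do not necessarily run at the same speed, because they can differ in memory copies, channel-split operations, and so on. The most reliable approach is therefore to run inference on the target machine and measure the latency directly.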

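- The T4 is a GPU aimed at model inference and supports TensorRT as well as FP32, FP16, and other inference precisions. The PaddleClas documentation lists the inference latency of different models on a T4 GPU at different batch sizes (bs); see the per-series documents in the PaddleClas model zoo: https://github.com/PaddlePaddle/PaddleClas/tree/master/docs/zh_CN/models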
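To make the first point concrete, here is a minimal sketch of why equal FLOPs need not mean equal latency. The helper `conv2d_cost` and the two layer shapes are illustrative assumptions, not taken from any PaddleClas model. It counts parameters, multiply-accumulates (MACs), and output activations for a bias-free convolution.

```python
def conv2d_cost(c_in, c_out, kernel, out_h, out_w, groups=1):
    """Return (params, macs, out_elems) for a bias-free 2D convolution."""
    # Each output channel only sees c_in / groups input channels.
    params = (c_in // groups) * kernel * kernel * c_out
    # One multiply-accumulate per weight per output position.
    macs = params * out_h * out_w
    # Number of activations the layer has to write back to memory.
    out_elems = c_out * out_h * out_w
    return params, macs, out_elems


# Hypothetical layers: a dense 3x3 conv and a wider grouped 3x3 conv.
dense = conv2d_cost(c_in=256, c_out=256, kernel=3, out_h=56, out_w=56)
grouped = conv2d_cost(c_in=512, c_out=512, kernel=3, out_h=56, out_w=56, groups=4)

print("dense   params=%d macs=%d out_elems=%d" % dense)
print("grouped params=%d macs=%d out_elems=%d" % grouped)
```

Both layers have the same parameter count and the same MACs, but the grouped one writes twice as many output activations. How much that costs on a given GPU cannot be read off the FLOPs figure, which is why the timings later in this post are measured rather than estimated.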

Note: every classification model mentioned in this post has an implementation in the official PaddleClas repo: https://github.com/PaddlePaddle/PaddleClas . To reproduce or evaluate the models, download the code from there.

Environment setup

- To avoid conflicts with other users' environments, create your own Docker container. For preparing Docker and installing Paddle inside it, see: https://www.paddlepaddle.org.cn/documentation/docs/zh/develop/install/install_Docker.html

Building and installing Paddle with TRT

- TensorRT (TRT) can accelerate inference on a T4 GPU, so we build a Paddle package with TRT support.

- After downloading the TRT package, if it is TRT 5, first edit NvInfer.h inside the package and add a virtual destructor to each of class IPluginFactory and class IGpuAllocator:

virtual ~IPluginFactory() {};

virtual ~IGpuAllocator() {};

After downloading the Paddle source, configure the build with the following command.

cmake .. \
-DWITH_CONTRIB=OFF \
-DWITH_MKL=ON \
-DWITH_MKLDNN=OFF \
-DWITH_TESTING=OFF \
-DCMAKE_BUILD_TYPE=Release \
-DWITH_INFERENCE_API_TEST=OFF \
-DON_INFER=ON \
-DWITH_PYTHON=ON \
-DCUDA_TOOLKIT_ROOT_DIR=/usr/local/cuda \
-DCUDNN_ROOT=/paddle/libs/cudnn_v7.6_cuda10.1 \
-DTENSORRT_ROOT=/paddle/libs/TensorRT-5.1.2.2

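Here CUDNN_ROOT is the cuDNN directory and TENSORRT_ROOT is the TRT directory (the leading -D is simply how cmake sets an option); both are needed to build the inference library.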

For more build options and problems you may run into, see: https://www.paddlepaddle.org.cn/documentation/docs/zh/develop/advanced_guide/inference_deployment/inference/build_and_install_lib_cn.html

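- The build produces a whl package. Installing it replaces the Paddle version that ships with the Docker image, and the new package can run inference with TRT. Note that before running inference you must add the TRT lib directory to the LD_LIBRARY_PATH environment variable.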

export LD_LIBRARY_PATH=/paddle/libs/TensorRT-5.1.2.2/lib:$LD_LIBRARY_PATH
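A forgotten export is easy to miss, so a small pre-flight check can help. The sketch below uses only the Python standard library; the helper name `on_library_path` is made up for this post, and the path is the one from the export command above.

```python
import os


def on_library_path(lib_dir, ld_library_path=None):
    """Return True if lib_dir is one of the entries in LD_LIBRARY_PATH."""
    if ld_library_path is None:
        ld_library_path = os.environ.get("LD_LIBRARY_PATH", "")
    wanted = os.path.normpath(lib_dir)
    # Skip empty entries produced by leading, trailing or doubled separators.
    entries = [p for p in ld_library_path.split(os.pathsep) if p]
    return any(os.path.normpath(p) == wanted for p in entries)


# Path taken from the export command above; adjust it to your own layout.
TRT_LIB = "/paddle/libs/TensorRT-5.1.2.2/lib"

if on_library_path(TRT_LIB):
    print("TRT lib directory found on LD_LIBRARY_PATH")
else:
    print("warning: %s is not on LD_LIBRARY_PATH" % TRT_LIB)
```

This only checks the environment variable. It does not verify that the directory exists or that the libraries inside it actually load.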

Preparing the T4

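- A T4 GPU may lower its clocks when idle. Because the T4 has no active cooling, it also lowers its clocks under sustained load (otherwise the GPU could overheat and go down). Clocks therefore need to be locked for benchmarking, which the following two commands do.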

nvidia-smi -i 0 -pm ENABLED

nvidia-smi --lock-gpu-clocks=1590 -i 0

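With the clocks locked and no external cooling, the GPU can still run for long periods if you sleep for about 30 ms after each inference. With that in place, GPU crashes essentially stop occurring.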
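The sleep-after-each-inference idea can be sketched as a small timing loop. This is an illustration under assumptions, not the code used to produce the numbers in this post: `benchmark` and `fake_inference` are hypothetical names, and the workload is a CPU stand-in so the snippet runs anywhere.

```python
import time


def benchmark(run_once, warmup=10, repeats=100, cooldown_s=0.03):
    """Time run_once() `repeats` times and return the latencies in ms."""
    for _ in range(warmup):
        run_once()  # warm-up runs are not timed
    latencies_ms = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
        # Cool-down pause of about 30 ms, kept outside the timed region.
        time.sleep(cooldown_s)
    return latencies_ms


def fake_inference():
    # Stand-in workload. Replace it with a real predictor call that
    # blocks until the result is available on the host.
    sum(i * i for i in range(10000))


lat = benchmark(fake_inference, warmup=2, repeats=5, cooldown_s=0.03)
print("mean latency: %.3f ms over %d runs" % (sum(lat) / len(lat), len(lat)))
```

The important detail is that the sleep sits outside the timed region, so the cool-down does not inflate the reported latency. With a real GPU predictor, make sure the timed call waits for the result; otherwise the loop would only measure the time to launch the work.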

Results and analysis

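- Inference latency can be measured directly with the predict.py script in PaddleClas.

The charts and tables below give the accuracy and measured inference speed of the different models.

To go straight to the takeaways, jump to the conclusions at the end.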

Overview

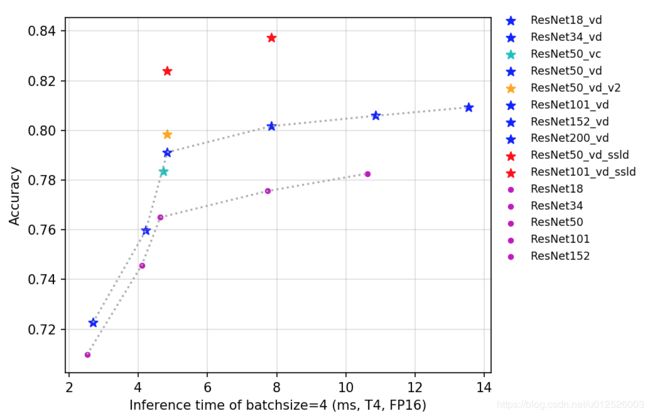

ResNet and its vd variants

The FP32 and FP16 inference latencies on the T4 are listed below.

| Models | Crop Size | Resize Short Size | FP16 Batch Size=1 (ms) | FP16 Batch Size=4 (ms) | FP16 Batch Size=8 (ms) | FP32 Batch Size=1 (ms) | FP32 Batch Size=4 (ms) | FP32 Batch Size=8 (ms) |
|---|---|---|---|---|---|---|---|---|
| ResNet18 | 224 | 256 | 1.3568 | 2.5225 | 3.61904 | 1.45606 | 3.56305 | 6.28798 |
| ResNet18_vd | 224 | 256 | 1.39593 | 2.69063 | 3.88267 | 1.54557 | 3.85363 | 6.88121 |
| ResNet34 | 224 | 256 | 2.23092 | 4.10205 | 5.54904 | 2.34957 | 5.89821 | 10.73451 |
| ResNet34_vd | 224 | 256 | 2.23992 | 4.22246 | 5.79534 | 2.43427 | 6.22257 | 11.44906 |
| ResNet50 | 224 | 256 | 2.63824 | 4.63802 | 7.02444 | 3.47712 | 7.84421 | 13.90633 |
| ResNet50_vc | 224 | 256 | 2.67064 | 4.72372 | 7.17204 | 3.52346 | 8.10725 | 14.45577 |
| ResNet50_vd | 224 | 256 | 2.65164 | 4.84109 | 7.46225 | 3.53131 | 8.09057 | 14.45965 |
| ResNet50_vd_v2 | 224 | 256 | 2.65164 | 4.84109 | 7.46225 | 3.53131 | 8.09057 | 14.45965 |
| ResNet101 | 224 | 256 | 5.04037 | 7.73673 | 10.8936 | 6.07125 | 13.40573 | 24.3597 |
| ResNet101_vd | 224 | 256 | 5.05972 | 7.83685 | 11.34235 | 6.11704 | 13.76222 | 25.11071 |
| ResNet152 | 224 | 256 | 7.28665 | 10.62001 | 14.90317 | 8.50198 | 19.17073 | 35.78384 |
| ResNet152_vd | 224 | 256 | 7.29127 | 10.86137 | 15.32444 | 8.54376 | 19.52157 | 36.64445 |
| ResNet200_vd | 224 | 256 | 9.36026 | 13.5474 | 19.0725 | 10.80619 | 25.01731 | 48.81399 |
| ResNet50_vd_ssld | 224 | 256 | 2.65164 | 4.84109 | 7.46225 | 3.53131 | 8.09057 | 14.45965 |
| ResNet101_vd_ssld | 224 | 256 | 5.05972 | 7.83685 | 11.34235 | 6.11704 | 13.76222 | 25.11071 |

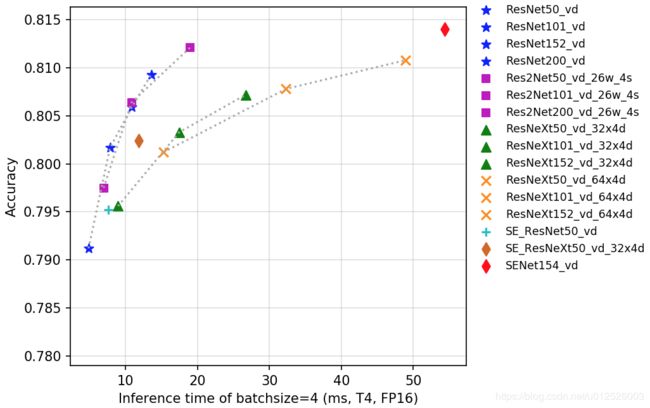

SEResNeXt and Res2Net series

The FP32 and FP16 inference latencies on the T4 are listed below.

| Models | Crop Size | Resize Short Size | FP16 Batch Size=1 (ms) | FP16 Batch Size=4 (ms) | FP16 Batch Size=8 (ms) | FP32 Batch Size=1 (ms) | FP32 Batch Size=4 (ms) | FP32 Batch Size=8 (ms) |
|---|---|---|---|---|---|---|---|---|
| Res2Net50_26w_4s | 224 | 256 | 3.56067 | 6.61827 | 11.41566 | 4.47188 | 9.65722 | 17.54535 |
| Res2Net50_vd_26w_4s | 224 | 256 | 3.69221 | 6.94419 | 11.92441 | 4.52712 | 9.93247 | 18.16928 |
| Res2Net50_14w_8s | 224 | 256 | 4.45745 | 7.69847 | 12.30935 | 5.4026 | 10.60273 | 18.01234 |
| Res2Net101_vd_26w_4s | 224 | 256 | 6.53122 | 10.81895 | 18.94395 | 8.08729 | 17.31208 | 31.95762 |
| Res2Net200_vd_26w_4s | 224 | 256 | 11.66671 | 18.93953 | 33.19188 | 14.67806 | 32.35032 | 63.65899 |
| ResNeXt50_32x4d | 224 | 256 | 7.61087 | 8.88918 | 12.99674 | 7.56327 | 10.6134 | 18.46915 |
| ResNeXt50_vd_32x4d | 224 | 256 | 7.69065 | 8.94014 | 13.4088 | 7.62044 | 11.03385 | 19.15339 |
| ResNeXt50_64x4d | 224 | 256 | 13.78688 | 15.84655 | 21.79537 | 13.80962 | 18.4712 | 33.49843 |
| ResNeXt50_vd_64x4d | 224 | 256 | 13.79538 | 15.22201 | 22.27045 | 13.94449 | 18.88759 | 34.28889 |
| ResNeXt101_32x4d | 224 | 256 | 16.59777 | 17.93153 | 21.36541 | 16.21503 | 19.96568 | 33.76831 |
| ResNeXt101_vd_32x4d | 224 | 256 | 16.36909 | 17.45681 | 22.10216 | 16.28103 | 20.25611 | 34.37152 |
| ResNeXt101_64x4d | 224 | 256 | 30.12355 | 32.46823 | 38.41901 | 30.4788 | 36.29801 | 68.85559 |
| ResNeXt101_vd_64x4d | 224 | 256 | 30.34022 | 32.27869 | 38.72523 | 30.40456 | 36.77324 | 69.66021 |
| ResNeXt152_32x4d | 224 | 256 | 25.26417 | 26.57001 | 30.67834 | 24.86299 | 29.36764 | 52.09426 |
| ResNeXt152_vd_32x4d | 224 | 256 | 25.11196 | 26.70515 | 31.72636 | 25.03258 | 30.08987 | 52.64429 |
| ResNeXt152_64x4d | 224 | 256 | 46.58293 | 48.34563 | 56.97961 | 46.7564 | 56.34108 | 106.11736 |
| ResNeXt152_vd_64x4d | 224 | 256 | 47.68447 | 48.91406 | 57.29329 | 47.18638 | 57.16257 | 107.26288 |
| SE_ResNet18_vd | 224 | 256 | 1.61823 | 3.1391 | 4.60282 | 1.7691 | 4.19877 | 7.5331 |
| SE_ResNet34_vd | 224 | 256 | 2.67518 | 5.04694 | 7.18946 | 2.88559 | 7.03291 | 12.73502 |
| SE_ResNet50_vd | 224 | 256 | 3.65394 | 7.568 | 12.52793 | 4.28393 | 10.38846 | 18.33154 |
| SE_ResNeXt50_32x4d | 224 | 256 | 9.06957 | 11.37898 | 18.86282 | 8.74121 | 13.563 | 23.01954 |
| SE_ResNeXt50_vd_32x4d | 224 | 256 | 9.25016 | 11.85045 | 25.57004 | 9.17134 | 14.76192 | 19.914 |
| SE_ResNeXt101_32x4d | 224 | 256 | 19.34455 | 20.6104 | 32.20432 | 18.82604 | 25.31814 | 41.97758 |
| SENet154_vd | 224 | 256 | 49.85733 | 54.37267 | 74.70447 | 53.79794 | 66.31684 | 121.59885 |

DPN and DenseNet series

The FP32 and FP16 inference latencies on the T4 are listed below.

| Models | Crop Size | Resize Short Size | FP16 Batch Size=1 (ms) | FP16 Batch Size=4 (ms) | FP16 Batch Size=8 (ms) | FP32 Batch Size=1 (ms) | FP32 Batch Size=4 (ms) | FP32 Batch Size=8 (ms) |
|---|---|---|---|---|---|---|---|---|
| DenseNet121 | 224 | 256 | 4.16436 | 7.2126 | 10.50221 | 4.40447 | 9.32623 | 15.25175 |
| DenseNet161 | 224 | 256 | 9.27249 | 14.25326 | 20.19849 | 10.39152 | 22.15555 | 35.78443 |
| DenseNet169 | 224 | 256 | 6.11395 | 10.28747 | 13.68717 | 6.43598 | 12.98832 | 20.41964 |
| DenseNet201 | 224 | 256 | 7.9617 | 13.4171 | 17.41949 | 8.20652 | 17.45838 | 27.06309 |
| DenseNet264 | 224 | 256 | 11.70074 | 19.69375 | 24.79545 | 12.14722 | 26.27707 | 40.01905 |
| DPN68 | 224 | 256 | 11.7827 | 13.12652 | 16.19213 | 11.64915 | 12.82807 | 18.57113 |
| DPN92 | 224 | 256 | 18.56026 | 20.35983 | 29.89544 | 18.15746 | 23.87545 | 38.68821 |
| DPN98 | 224 | 256 | 21.70508 | 24.7755 | 40.93595 | 21.18196 | 33.23925 | 62.77751 |
| DPN107 | 224 | 256 | 27.84462 | 34.83217 | 60.67903 | 27.62046 | 52.65353 | 100.11721 |
| DPN131 | 224 | 256 | 28.58941 | 33.01078 | 55.65146 | 28.33119 | 46.19439 | 89.24904 |

Inception series

The FP32 and FP16 inference latencies on the T4 are listed below.

| Models | Crop Size | Resize Short Size | FP16 Batch Size=1 (ms) | FP16 Batch Size=4 (ms) | FP16 Batch Size=8 (ms) | FP32 Batch Size=1 (ms) | FP32 Batch Size=4 (ms) | FP32 Batch Size=8 (ms) |
|---|---|---|---|---|---|---|---|---|
| GoogLeNet | 299 | 320 | 1.75451 | 3.39931 | 4.71909 | 1.88038 | 4.48882 | 6.94035 |
| Xception41 | 299 | 320 | 2.91192 | 7.86878 | 15.53685 | 4.96939 | 17.01361 | 32.67831 |
| Xception41_deeplab | 299 | 320 | 2.85934 | 7.2075 | 14.01406 | 5.33541 | 17.55938 | 33.76232 |
| Xception65 | 299 | 320 | 4.30126 | 11.58371 | 23.22213 | 7.26158 | 25.88778 | 53.45426 |
| Xception65_deeplab | 299 | 320 | 4.06803 | 9.72694 | 19.477 | 7.60208 | 26.03699 | 54.74724 |
| Xception71 | 299 | 320 | 4.80889 | 13.5624 | 27.18822 | 8.72457 | 31.55549 | 69.31018 |
| InceptionV4 | 299 | 320 | 9.50821 | 13.72104 | 20.27447 | 12.99342 | 25.23416 | 43.56121 |

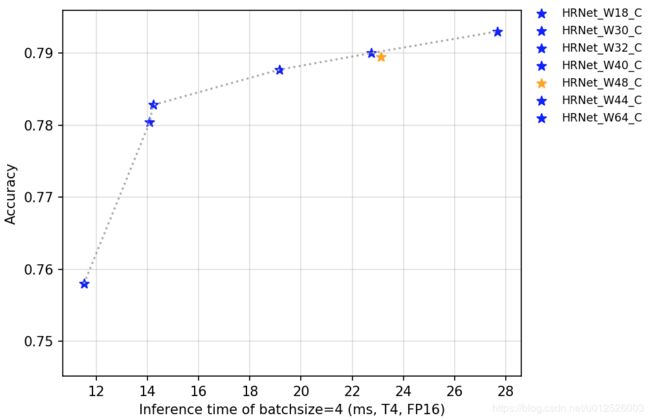

HRNet series

The FP32 and FP16 inference latencies on the T4 are listed below.

| Models | Crop Size | Resize Short Size | FP16 Batch Size=1 (ms) | FP16 Batch Size=4 (ms) | FP16 Batch Size=8 (ms) | FP32 Batch Size=1 (ms) | FP32 Batch Size=4 (ms) | FP32 Batch Size=8 (ms) |
|---|---|---|---|---|---|---|---|---|
| HRNet_W18_C | 224 | 256 | 6.79093 | 11.50986 | 17.67244 | 7.40636 | 13.29752 | 23.33445 |
| HRNet_W30_C | 224 | 256 | 8.98077 | 14.08082 | 21.23527 | 9.57594 | 17.35485 | 32.6933 |
| HRNet_W32_C | 224 | 256 | 8.82415 | 14.21462 | 21.19804 | 9.49807 | 17.72921 | 32.96305 |
| HRNet_W40_C | 224 | 256 | 11.4229 | 19.1595 | 30.47984 | 12.12202 | 25.68184 | 48.90623 |
| HRNet_W44_C | 224 | 256 | 12.25778 | 22.75456 | 32.61275 | 13.19858 | 32.25202 | 59.09871 |
| HRNet_W48_C | 224 | 256 | 12.65015 | 23.12886 | 33.37859 | 13.70761 | 34.43572 | 63.01219 |
| HRNet_W64_C | 224 | 256 | 15.10428 | 27.68901 | 40.4198 | 17.57527 | 47.9533 | 97.11228 |

EfficientNet and ResNeXt101_wsl series

The FP32 and FP16 inference latencies on the T4 are listed below.

| Models | Crop Size | Resize Short Size | FP16 Batch Size=1 (ms) | FP16 Batch Size=4 (ms) | FP16 Batch Size=8 (ms) | FP32 Batch Size=1 (ms) | FP32 Batch Size=4 (ms) | FP32 Batch Size=8 (ms) |
|---|---|---|---|---|---|---|---|---|
| ResNeXt101_32x8d_wsl | 224 | 256 | 18.19374 | 21.93529 | 34.67802 | 18.52528 | 34.25319 | 67.2283 |
| ResNeXt101_32x16d_wsl | 224 | 256 | 18.52609 | 36.8288 | 62.79947 | 25.60395 | 71.88384 | 137.62327 |
| ResNeXt101_32x32d_wsl | 224 | 256 | 33.51391 | 70.09682 | 125.81884 | 54.87396 | 160.04337 | 316.17718 |
| ResNeXt101_32x48d_wsl | 224 | 256 | 50.97681 | 137.60926 | 190.82628 | 99.01698256 | 315.91261 | 551.83695 |
| Fix_ResNeXt101_32x48d_wsl | 320 | 320 | 78.62869 | 191.76039 | 317.15436 | 160.0838242 | 595.99296 | 1151.47384 |
| EfficientNetB0 | 224 | 256 | 3.40122 | 5.95851 | 9.10801 | 3.442 | 6.11476 | 9.3304 |
| EfficientNetB1 | 240 | 272 | 5.25172 | 9.10233 | 14.11319 | 5.3322 | 9.41795 | 14.60388 |
| EfficientNetB2 | 260 | 292 | 5.91052 | 10.5898 | 17.38106 | 6.29351 | 10.95702 | 17.75308 |
| EfficientNetB3 | 300 | 332 | 7.69582 | 16.02548 | 27.4447 | 7.67749 | 16.53288 | 28.5939 |
| EfficientNetB4 | 380 | 412 | 11.55585 | 29.44261 | 53.97363 | 12.15894 | 30.94567 | 57.38511 |
| EfficientNetB5 | 456 | 488 | 19.63083 | 56.52299 | - | 20.48571 | 61.60252 | - |
| EfficientNetB6 | 528 | 560 | 30.05911 | - | - | 32.62402 | - | - |
| EfficientNetB7 | 600 | 632 | 47.86087 | - | - | 53.93823 | - | - |
| EfficientNetB0_small | 224 | 256 | 2.39166 | 4.36748 | 6.96002 | 2.3076 | 4.71886 | 7.21888 |

Conclusions

A few observations are worth noting:

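- The overview chart shows that EfficientNet still has the edge in the accuracy versus inference speed trade-off. However, it uses a lot of GPU memory (larger variants run at larger input scales, which is the Resize Short Size column in the tables), so the batch size cannot be pushed very high. That is a real headache in deployment, since requesting additional GPU resources substantially raises the cost of serving the model.

- Compared with FP32, FP16 inference only shows a clear advantage at larger batch sizes. Across the model series, FP32 and FP16 latencies are not far apart at bs=1. At bs=4 the gap widens, approaching 2x for the deeper ResNets. The Xception series goes further, with FP16 roughly 2.1 to 2.8x as fast as FP32 at bs=4 and bs=8 in the table above (this series uses a larger input scale of 299, which also increases the amount of computation).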

- For heavily branched networks such as HRNet and DPN, the FP16 advantage is less pronounced than for structurally simpler models.

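- FP16 inference leaves the final evaluation metrics essentially unchanged (the top-1 score may differ at the 4th to 6th decimal place), so in practice it is recommended to run FP16 with a larger bs, where the speedup is substantial.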

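- The knowledge-distillation pretrained models provided in PaddleClas are clearly strong: they sit in the upper-left corner of the plot. For the distillation scheme itself, see: https://blog.csdn.net/u012526003/article/details/106160464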

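- At bs=1, evaluating at scale 288 is not much slower than at scale 224 (for ResNet101, 224 is about 0.4 ms faster than 288), yet the metric improves by 0.7%. So when latency requirements are not too strict, the inference scale can be increased slightly at deployment time; it is an accuracy gain that needs no retraining.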
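The FP16 versus FP32 observations can be checked against the tables with a few lines of arithmetic. The sketch below copies a handful of rows from the tables in this post; the helper names `fp16_speedup` and `images_per_second` are made up for illustration, and throughput is derived from latency alone, ignoring any cool-down sleep between runs.

```python
# (model, batch size) -> (FP16 ms, FP32 ms), copied from the tables in this post.
LATENCY_MS = {
    ("ResNet50", 1): (2.63824, 3.47712),
    ("ResNet50", 4): (4.63802, 7.84421),
    ("ResNet50", 8): (7.02444, 13.90633),
    ("Xception65", 1): (4.30126, 7.26158),
    ("Xception65", 4): (11.58371, 25.88778),
    ("Xception65", 8): (23.22213, 53.45426),
    ("HRNet_W18_C", 1): (6.79093, 7.40636),
    ("HRNet_W18_C", 4): (11.50986, 13.29752),
    ("HRNet_W18_C", 8): (17.67244, 23.33445),
}


def fp16_speedup(model, batch_size):
    """How many times faster FP16 is than FP32 for one table entry."""
    fp16_ms, fp32_ms = LATENCY_MS[(model, batch_size)]
    return fp32_ms / fp16_ms


def images_per_second(latency_ms, batch_size):
    """Throughput implied by the latency of one batch."""
    return batch_size * 1000.0 / latency_ms


for (model, bs), (fp16_ms, fp32_ms) in sorted(LATENCY_MS.items()):
    print("%-12s bs=%d  FP16 speedup %.2fx  FP16 throughput %.0f img/s"
          % (model, bs, fp16_speedup(model, bs), images_per_second(fp16_ms, bs)))
```

Running it over these rows shows the pattern described above: the speedup grows with batch size, is largest for Xception65, and stays small for the branched HRNet_W18_C.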