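Hi3516A: Encoding and Saving Data Captured over HDMI

Preface

Starting from the basic Hi3516A sample programs, this article analyzes the encoding sample application provided by HiSilicon.

Debugging background: the Hi3516A development board receives BT1120 data through the HDMI interface, encodes it, and saves the result locally.

Hardware platform: Hi3516A

Software platform: Hi3516A_SDK_V1.0.5.0

Sharing freely, starting with me!

Source code analysis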

/******************************************************************************

* function : H.264@1080p@30fps+H.265@1080p@30fps+H.264@D1@30fps

******************************************************************************/

HI_S32 SAMPLE_VENC_1080P_CLASSIC(HI_VOID)

{

PAYLOAD_TYPE_E enPayLoad[3] = {PT_H264, PT_H265, PT_H264}; // payload (codec) type of each stream

#ifdef CVBSMODE

PIC_SIZE_E enSize[3] = {PIC_D1, PIC_D1, PIC_CIF};

printf("CVBS MODE\n");

#else

PIC_SIZE_E enSize[3] = {PIC_HD1080, PIC_HD720, PIC_D1}; // resolution of each stream

printf("HDMI/SDI 1080P MODE\n");

#endif

HI_U32 u32Profile = 0;

VB_CONF_S stVbConf; // video buffer (VB) pool configuration

SAMPLE_VI_CONFIG_S stViConfig = {0}; // video input (VI) configuration, zero-initialized

VPSS_GRP VpssGrp; // VPSS (Video Process Sub-System) group number

VPSS_CHN VpssChn; // VPSS channel number

VPSS_GRP_ATTR_S stVpssGrpAttr; // VPSS group attributes

VPSS_CHN_ATTR_S stVpssChnAttr; // VPSS channel attributes

VPSS_CHN_MODE_S stVpssChnMode; // VPSS channel work mode

VENC_CHN VencChn; // video encoder (VENC) channel number

SAMPLE_RC_E enRcMode = SAMPLE_RC_CBR; // rate-control mode: constant bit rate

HI_S32 s32ChnNum;

HI_S32 s32Ret = HI_SUCCESS;

HI_U32 u32BlkSize; // block size; each pool contains many blocks

SIZE_S stSize; // picture width and height

char c;

if ((SONY_IMX178_LVDS_5M_30FPS == SENSOR_TYPE)

|| (APTINA_AR0330_MIPI_1536P_25FPS == SENSOR_TYPE)

|| (APTINA_AR0330_MIPI_1296P_25FPS == SENSOR_TYPE))

{

s32ChnNum = 2;

}

else

{

s32ChnNum = 3;

}

/******************************************

step 1: init sys variable

******************************************/

memset(&stVbConf, 0, sizeof(VB_CONF_S)); // zero the VB pool configuration

SAMPLE_COMM_VI_GetSizeBySensor(&enSize[0]); // derive the main-stream resolution from the sensor (input) type

switch (SENSOR_TYPE)

{

case SONY_IMX178_LVDS_5M_30FPS:

case APTINA_AR0330_MIPI_1536P_25FPS:

case APTINA_AR0330_MIPI_1296P_25FPS:

enSize[1] = PIC_VGA;

break;

default:

break;

}

stVbConf.u32MaxPoolCnt = 128; // maximum number of pools

/*video buffer*/

u32BlkSize = SAMPLE_COMM_SYS_CalcPicVbBlkSize(gs_enNorm, \

enSize[0], SAMPLE_PIXEL_FORMAT, SAMPLE_SYS_ALIGN_WIDTH);

// The block size is computed from four inputs: the video norm gs_enNorm, the resolution enSize[0],

// the pixel format SAMPLE_PIXEL_FORMAT, and the alignment width SAMPLE_SYS_ALIGN_WIDTH.

printf("u32BlkSize=%u\r\n", u32BlkSize);

stVbConf.astCommPool[0].u32BlkSize = u32BlkSize;

stVbConf.astCommPool[0].u32BlkCnt = 20;

u32BlkSize = SAMPLE_COMM_SYS_CalcPicVbBlkSize(gs_enNorm, \

enSize[1], SAMPLE_PIXEL_FORMAT, SAMPLE_SYS_ALIGN_WIDTH);

stVbConf.astCommPool[1].u32BlkSize = u32BlkSize;

stVbConf.astCommPool[1].u32BlkCnt = 20;

u32BlkSize = SAMPLE_COMM_SYS_CalcPicVbBlkSize(gs_enNorm, \

enSize[2], SAMPLE_PIXEL_FORMAT, SAMPLE_SYS_ALIGN_WIDTH);

stVbConf.astCommPool[2].u32BlkSize = u32BlkSize;

stVbConf.astCommPool[2].u32BlkCnt = 20;

/******************************************

step 2: mpp system init.

******************************************/

s32Ret = SAMPLE_COMM_SYS_Init(&stVbConf);

/* SAMPLE_COMM_SYS_Init() is equivalent to:

HI_MPI_SYS_Exit();

HI_MPI_VB_Exit();

HI_MPI_VB_SetConf(pstVbConf);

HI_MPI_VB_Init();

HI_MPI_SYS_SetConf(&stSysConf);

HI_MPI_SYS_Init();

*/

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("system init failed with %d!\n", s32Ret);

goto END_VENC_1080P_CLASSIC_0;

}

/******************************************

step 3: start vi dev & chn to capture

******************************************/

stViConfig.enViMode = SENSOR_TYPE; //SAMPLE_VI_MODE_BT1120_1080P

stViConfig.enRotate = ROTATE_NONE; // no rotation

stViConfig.enNorm = VIDEO_ENCODING_MODE_AUTO; // detect the video norm automatically

stViConfig.enViChnSet = VI_CHN_SET_NORMAL; // normal mode: no mirror, no flip

stViConfig.enWDRMode = WDR_MODE_NONE; // wide dynamic range (WDR) disabled

s32Ret = SAMPLE_COMM_VI_StartVi(&stViConfig); // start video input (VI) with the settings in stViConfig

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("start vi failed!\n");

goto END_VENC_1080P_CLASSIC_1;

}

/******************************************

step 4: start vpss and vi bind vpss

******************************************/

s32Ret = SAMPLE_COMM_SYS_GetPicSize(gs_enNorm, enSize[0], &stSize);

// Get the picture width and height for video norm gs_enNorm and resolution enSize[0] into stSize

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("SAMPLE_COMM_SYS_GetPicSize failed!\n");

goto END_VENC_1080P_CLASSIC_1;

}

VpssGrp = 0;

stVpssGrpAttr.u32MaxW = stSize.u32Width;

stVpssGrpAttr.u32MaxH = stSize.u32Height;

stVpssGrpAttr.bIeEn = HI_FALSE;

stVpssGrpAttr.bNrEn = HI_TRUE;

stVpssGrpAttr.bHistEn = HI_FALSE;

stVpssGrpAttr.bDciEn = HI_FALSE;

stVpssGrpAttr.enDieMode = VPSS_DIE_MODE_NODIE; // Hi3516A/Hi3518EV200/Hi3519V100 do not support DIE, so VPSS_DIE_MODE_NODIE turns de-interlacing off

stVpssGrpAttr.enPixFmt = SAMPLE_PIXEL_FORMAT; //PIXEL_FORMAT_YUV_SEMIPLANAR_420

s32Ret = SAMPLE_COMM_VPSS_StartGroup(VpssGrp, &stVpssGrpAttr); // create and start the VPSS group from its number and attributes

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Start Vpss failed!\n");

goto END_VENC_1080P_CLASSIC_2;

}

s32Ret = SAMPLE_COMM_VI_BindVpss(stViConfig.enViMode); // bind VI to VPSS according to the VI mode

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Vi bind Vpss failed!\n");

goto END_VENC_1080P_CLASSIC_3;

}

VpssChn = 0;

stVpssChnMode.enChnMode = VPSS_CHN_MODE_USER;

stVpssChnMode.bDouble = HI_FALSE;

stVpssChnMode.enPixelFormat = SAMPLE_PIXEL_FORMAT;

stVpssChnMode.u32Width = stSize.u32Width;

stVpssChnMode.u32Height = stSize.u32Height;

stVpssChnMode.enCompressMode = COMPRESS_MODE_SEG;

memset(&stVpssChnAttr, 0, sizeof(stVpssChnAttr)); // zero the VPSS channel attributes

stVpssChnAttr.s32SrcFrameRate = -1;

stVpssChnAttr.s32DstFrameRate = -1;

s32Ret = SAMPLE_COMM_VPSS_EnableChn(VpssGrp, VpssChn, &stVpssChnAttr, &stVpssChnMode, HI_NULL); // enable channel 0

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Enable vpss chn failed!\n");

goto END_VENC_1080P_CLASSIC_4;

}

VpssChn = 1;

stVpssChnMode.enChnMode = VPSS_CHN_MODE_USER;

stVpssChnMode.bDouble = HI_FALSE;

stVpssChnMode.enPixelFormat = SAMPLE_PIXEL_FORMAT;

stVpssChnMode.u32Width = stSize.u32Width;

stVpssChnMode.u32Height = stSize.u32Height;

stVpssChnMode.enCompressMode = COMPRESS_MODE_SEG;

stVpssChnAttr.s32SrcFrameRate = -1;

stVpssChnAttr.s32DstFrameRate = -1;

s32Ret = SAMPLE_COMM_VPSS_EnableChn(VpssGrp, VpssChn, &stVpssChnAttr, &stVpssChnMode, HI_NULL); // enable channel 1

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Enable vpss chn failed!\n");

goto END_VENC_1080P_CLASSIC_4;

}

if ((SONY_IMX178_LVDS_5M_30FPS != SENSOR_TYPE)

&& (APTINA_AR0330_MIPI_1536P_25FPS != SENSOR_TYPE)

&& (APTINA_AR0330_MIPI_1296P_25FPS != SENSOR_TYPE))

{

VpssChn = 2;

stVpssChnMode.enChnMode = VPSS_CHN_MODE_USER;

stVpssChnMode.bDouble = HI_FALSE;

stVpssChnMode.enPixelFormat = SAMPLE_PIXEL_FORMAT;

stVpssChnMode.u32Width = 720;

stVpssChnMode.u32Height = (VIDEO_ENCODING_MODE_PAL == gs_enNorm) ? 576 : 480;

stVpssChnMode.enCompressMode = COMPRESS_MODE_NONE;

stVpssChnAttr.s32SrcFrameRate = -1;

stVpssChnAttr.s32DstFrameRate = -1;

s32Ret = SAMPLE_COMM_VPSS_EnableChn(VpssGrp, VpssChn, &stVpssChnAttr, &stVpssChnMode, HI_NULL); // enable channel 2

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Enable vpss chn failed!\n");

goto END_VENC_1080P_CLASSIC_4;

}

}

/******************************************

step 5: start stream venc

******************************************/

enRcMode = SAMPLE_RC_CBR; // rate control: CBR

VpssGrp = 0;

VpssChn = 0;

VencChn = 0;

s32Ret = SAMPLE_COMM_VENC_Start(VencChn, enPayLoad[0], \

gs_enNorm, enSize[0], enRcMode, u32Profile); // start VENC channel 0

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Start Venc failed!\n");

goto END_VENC_1080P_CLASSIC_5;

}

s32Ret = SAMPLE_COMM_VENC_BindVpss(VencChn, VpssGrp, VpssChn); // bind VPSS channel 0 to VENC channel 0

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Start Venc failed!\n");

goto END_VENC_1080P_CLASSIC_5;

}

/*** stream 1: enSize[1] (PIC_HD720 in this configuration) **/

VpssChn = 1;

VencChn = 1;

s32Ret = SAMPLE_COMM_VENC_Start(VencChn, enPayLoad[1], \

gs_enNorm, enSize[1], enRcMode, u32Profile); // start VENC channel 1

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Start Venc failed!\n");

goto END_VENC_1080P_CLASSIC_5;

}

s32Ret = SAMPLE_COMM_VENC_BindVpss(VencChn, VpssGrp, VpssChn); // bind VPSS channel 1 to VENC channel 1

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Start Venc failed!\n");

goto END_VENC_1080P_CLASSIC_5;

}

/*** D1 **/

if (SONY_IMX178_LVDS_5M_30FPS != SENSOR_TYPE)

{

VpssChn = 2;

VencChn = 2;

s32Ret = SAMPLE_COMM_VENC_Start(VencChn, enPayLoad[2], \

gs_enNorm, enSize[2], enRcMode, u32Profile); // start VENC channel 2

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Start Venc failed!\n");

goto END_VENC_1080P_CLASSIC_5;

}

s32Ret = SAMPLE_COMM_VENC_BindVpss(VencChn, VpssGrp, VpssChn); // bind VPSS channel 2 to VENC channel 2

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Start Venc failed!\n");

goto END_VENC_1080P_CLASSIC_5;

}

}

/******************************************

step 6: stream venc process -- get stream, then save it to file.

******************************************/

printf("6");

s32Ret = SAMPLE_COMM_VENC_StartGetStream(s32ChnNum);

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Start Venc failed!\n");

goto END_VENC_1080P_CLASSIC_5;

}

printf("7");

printf("please press twice ENTER to exit this sample\n");

getchar();

getchar();

/******************************************

step 7: exit process

******************************************/

SAMPLE_COMM_VENC_StopGetStream();

END_VENC_1080P_CLASSIC_5:

VpssGrp = 0;

VpssChn = 0;

VencChn = 0;

SAMPLE_COMM_VENC_UnBindVpss(VencChn, VpssGrp, VpssChn);

SAMPLE_COMM_VENC_Stop(VencChn);

VpssChn = 1;

VencChn = 1;

SAMPLE_COMM_VENC_UnBindVpss(VencChn, VpssGrp, VpssChn);

SAMPLE_COMM_VENC_Stop(VencChn);

if (SONY_IMX178_LVDS_5M_30FPS != SENSOR_TYPE)

{

VpssChn = 2;

VencChn = 2;

SAMPLE_COMM_VENC_UnBindVpss(VencChn, VpssGrp, VpssChn);

SAMPLE_COMM_VENC_Stop(VencChn);

}

SAMPLE_COMM_VI_UnBindVpss(stViConfig.enViMode);

END_VENC_1080P_CLASSIC_4: //vpss stop

VpssGrp = 0;

VpssChn = 0;

SAMPLE_COMM_VPSS_DisableChn(VpssGrp, VpssChn);

VpssChn = 1;

SAMPLE_COMM_VPSS_DisableChn(VpssGrp, VpssChn);

if (SONY_IMX178_LVDS_5M_30FPS != SENSOR_TYPE)

{

VpssChn = 2;

SAMPLE_COMM_VPSS_DisableChn(VpssGrp, VpssChn);

}

END_VENC_1080P_CLASSIC_3: //vpss stop

SAMPLE_COMM_VI_UnBindVpss(stViConfig.enViMode);

END_VENC_1080P_CLASSIC_2: //vpss stop

SAMPLE_COMM_VPSS_StopGroup(VpssGrp);

END_VENC_1080P_CLASSIC_1: //vi stop

SAMPLE_COMM_VI_StopVi(&stViConfig);

END_VENC_1080P_CLASSIC_0: //system exit

SAMPLE_COMM_SYS_Exit();

return s32Ret;

}
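The step 7 cleanup above relies on fall-through goto labels: a failure jumps to the label that undoes everything set up so far, and the labels run in the reverse order of setup. The following is a simplified, self-contained sketch of that pattern; `run_pipeline`, `fail_at`, and the step names are hypothetical stand-ins for the real MPI calls, and the log only records the teardown order.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical log of teardown steps, so the unwind order can be inspected. */
static char g_log[128];

static void log_step(const char *name)
{
    strcat(g_log, name);
    strcat(g_log, " ");
}

/* Simulates the setup chain SYS -> VI -> VPSS -> VENC.
 * fail_at selects which setup step "fails" (1..4), or 0 for none.
 * Returns 0 on success and -1 on failure, like HI_SUCCESS / HI_FAILURE. */
static int run_pipeline(int fail_at)
{
    int ret = -1;
    g_log[0] = '\0';

    if (fail_at == 1) goto END_0; /* SYS init failed: nothing to undo */
    if (fail_at == 2) goto END_1; /* VI failed: undo SYS */
    if (fail_at == 3) goto END_2; /* VPSS failed: undo VI, SYS */
    if (fail_at == 4) goto END_3; /* VENC failed: undo VPSS, VI, SYS */

    ret = 0;                      /* everything is up; normal exit path */
    log_step("venc_stop");

END_3:
    log_step("vpss_stop");
END_2:
    log_step("vi_stop");
END_1:
    log_step("sys_exit");
END_0:
    return ret;
}
```

For example, `run_pipeline(3)` returns -1 and leaves `"vi_stop sys_exit "` in the log, while `run_pipeline(0)` tears down all four modules in reverse order. Keeping the labels strictly in reverse setup order is what makes each `goto` undo exactly the steps that succeeded.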

First, let's look at the structures used by SAMPLE_VENC_1080P_CLASSIC.

VB_CONF_S structure

typedef struct hiVB_CONF_S

{

HI_U32 u32MaxPoolCnt; /* max count of pools, (0,VB_MAX_POOLS] */

struct hiVB_CPOOL_S

{

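HI_U32 u32BlkSize; // size of each block in bytes

HI_U32 u32BlkCnt; // number of blocks in the pool

HI_CHAR acMmzName[MAX_MMZ_NAME_LEN]; // name of the MMZ (media memory zone) the pool is allocated from

}astCommPool[VB_MAX_COMM_POOLS]; // common pools (16 in this SDK), shared by modules rather than assigned one per channel

} VB_CONF_S; // an array of common pools, each holding several blocks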
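Each common pool reserves u32BlkCnt blocks of u32BlkSize bytes, so the memory a VB_CONF_S claims is the sum of size times count over its pools. The sketch below mirrors only those two fields in a hypothetical `pool_cfg` struct (not the SDK type) and totals them with 64-bit arithmetic, since 20 full-HD blocks alone already exceed 60 MB.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical mirror of the two VB_CONF_S pool fields discussed above. */
struct pool_cfg {
    uint32_t blk_size; /* bytes per block (u32BlkSize) */
    uint32_t blk_cnt;  /* number of blocks (u32BlkCnt) */
};

/* Total bytes reserved by the first n pools. */
static uint64_t vb_total_bytes(const struct pool_cfg *pools, size_t n)
{
    uint64_t total = 0;
    for (size_t i = 0; i < n; i++) {
        total += (uint64_t)pools[i].blk_size * pools[i].blk_cnt;
    }
    return total;
}
```

Assuming 64-byte alignment, YUV420, and a PAL D1 size of 720x576, the three pools in this sample would use block sizes of 3159360, 1491840, and 677376 bytes; with 20 blocks each, the total is 106571520 bytes, about 101.6 MiB of MMZ memory.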

SAMPLE_VI_CONFIG_S structure

typedef struct sample_vi_config_s

{

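SAMPLE_VI_MODE_E enViMode; // VI mode

VIDEO_NORM_E enNorm; /*DC: VIDEO_ENCODING_MODE_AUTO */ // video norm

ROTATE_E enRotate; // rotation

SAMPLE_VI_CHN_SET_E enViChnSet; // channel setting (normal, mirror, flip)

WDR_MODE_E enWDRMode; // wide dynamic range (WDR) mode; requires sensor hardware support.

// Dynamic range: the ratio between the brightest and the darkest parts visible in one image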

} SAMPLE_VI_CONFIG_S;

VPSS_GRP_ATTR_S structure

/*Define attributes of vpss GROUP*/

typedef struct hiVPSS_GRP_ATTR_S

{

/*statistic attributes*/

HI_U32 u32MaxW; /*MAX width of the group*/ // maximum picture width

HI_U32 u32MaxH; /*MAX height of the group*/ // maximum picture height

PIXEL_FORMAT_E enPixFmt; /*Pixel format*/ // pixel format (RGB, YUV, ...); Hi3516A supports only semi-planar 422 and semi-planar 420.

HI_BOOL bIeEn; /*Image enhance enable*/ // image enhancement; must be HI_FALSE

HI_BOOL bDciEn; /*Dynamic contrast Improve enable*/ // dynamic contrast improvement; must be HI_FALSE

HI_BOOL bNrEn; /*Noise reduce enable*/ // noise reduction

HI_BOOL bHistEn; /*Hist enable*/ // histogram; must be HI_FALSE

VPSS_DIE_MODE_E enDieMode; /*De-interlace enable*/ // de-interlacing; must be VPSS_DIE_MODE_NODIE

}VPSS_GRP_ATTR_S;

VPSS_CHN_ATTR_S structure

/*Define attributes of vpss channel*/

typedef struct hiVPSS_CHN_ATTR_S

{

HI_BOOL bSpEn; /*Sharpen enable*/ // sharpening

HI_BOOL bBorderEn; /*Frame enable*/ // border

HI_BOOL bMirror; /*mirror enable*/ // mirror

HI_BOOL bFlip; /*flip enable*/ // flip

HI_S32 s32SrcFrameRate; /* source frame rate */

HI_S32 s32DstFrameRate; /* dest frame rate */

BORDER_S stBorder; // border attributes

}VPSS_CHN_ATTR_S;
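s32SrcFrameRate and s32DstFrameRate control frame-rate conversion; the sample sets both to -1, which the HiSilicon documentation describes as no frame-rate control. When a conversion such as 30 fps to 15 fps is configured, frames have to be dropped evenly. The sketch below shows one common accumulator approach; it illustrates the idea and is not HiSilicon's internal algorithm, and `fr_ctrl` / `fr_keep_frame` are hypothetical names.

```c
#include <stdbool.h>

/* Hypothetical frame-rate controller state. */
struct fr_ctrl {
    int src; /* source frame rate, e.g. 30; -1 disables control */
    int dst; /* target frame rate, e.g. 15; -1 disables control */
    int acc; /* running sum of dst per incoming frame, kept below src */
};

/* Returns true if the next incoming frame should be passed on. */
static bool fr_keep_frame(struct fr_ctrl *c)
{
    if (c->src <= 0 || c->dst <= 0 || c->dst >= c->src) {
        return true; /* no control (-1) or nothing to drop */
    }
    c->acc += c->dst;
    if (c->acc >= c->src) {
        c->acc -= c->src;
        return true;
    }
    return false;
}
```

With `src = 30` and `dst = 15` every second frame is kept; with `dst = 25`, 25 of every 30 frames pass, spread as evenly as integer steps allow.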

VPSS_CHN_MODE_S structure

/*Define attributes of vpss channel's work mode*/

typedef struct hiVPSS_CHN_MODE_S

{

VPSS_CHN_MODE_E enChnMode; /*Vpss channel's work mode*/

HI_U32 u32Width; /*Width of target image*/

HI_U32 u32Height; /*Height of target image*/

HI_BOOL bDouble; /*Field-frame transfer, only valid for VPSS_PRE0_CHN*/ // reserved

PIXEL_FORMAT_E enPixelFormat;/*Pixel format of target image*/ // pixel format

COMPRESS_MODE_E enCompressMode; /*Compression mode of the output*/ // compression mode

}VPSS_CHN_MODE_S;

SAMPLE_RC_E enumeration

typedef enum sample_rc_e

{

SAMPLE_RC_CBR = 0, // Constant Bit Rate: encode at a constant bit rate

SAMPLE_RC_VBR, // Variable Bit Rate: the bit rate varies with picture complexity, so coding efficiency is higher

SAMPLE_RC_FIXQP // fixed quantization parameter (QP)

} SAMPLE_RC_E;
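Under CBR the encoder targets a fixed average rate over u32StatTime seconds, so the configured bit rate directly determines the average per-frame budget and the size of the saved stream. The sketch below does that arithmetic for the 1080p H.264 CBR value chosen in SAMPLE_COMM_VENC_Start (u32BitRate = 1024 * 4 at 30 fps). It assumes u32BitRate is in kbit/s with 1 kbit = 1000 bits; the helper names are hypothetical.

```c
#include <stdint.h>

/* Average bits available per frame at a constant bit rate.
 * Assumes bitrate_kbps is in units of 1000 bit/s. */
static uint32_t cbr_bits_per_frame(uint32_t bitrate_kbps, uint32_t fps)
{
    return (uint32_t)((uint64_t)bitrate_kbps * 1000u / fps);
}

/* Approximate stream size in bytes for a recording of `seconds`,
 * ignoring any file or container overhead. */
static uint64_t cbr_bytes_for(uint32_t bitrate_kbps, uint32_t seconds)
{
    return (uint64_t)bitrate_kbps * 1000u / 8u * seconds;
}
```

Under these assumptions, 4096 kbit/s at 30 fps leaves about 136533 bits per frame on average, and one hour of that stream is roughly 1.84 GB. VBR only caps the rate (u32MaxBitRate), so the same formula gives an upper bound there rather than an average.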

The first step is variable initialization (init sys variable).

Let's start with SAMPLE_COMM_SYS_CalcPicVbBlkSize.

/******************************************************************************

* function : calculate VB Block size of picture.

******************************************************************************/

HI_U32 SAMPLE_COMM_SYS_CalcPicVbBlkSize(VIDEO_NORM_E enNorm, PIC_SIZE_E enPicSize, PIXEL_FORMAT_E enPixFmt, HI_U32 u32AlignWidth)

{

HI_S32 s32Ret = HI_FAILURE;

SIZE_S stSize;

HI_U32 u32VbSize;

HI_U32 u32HeaderSize;

s32Ret = SAMPLE_COMM_SYS_GetPicSize(enNorm, enPicSize, &stSize); // get the picture size stSize from enNorm and enPicSize

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("get picture size[%d] failed!\n", enPicSize);

return HI_FAILURE;

}

if (PIXEL_FORMAT_YUV_SEMIPLANAR_422 != enPixFmt && PIXEL_FORMAT_YUV_SEMIPLANAR_420 != enPixFmt) // Hi3516A supports only these two formats

{

SAMPLE_PRT("pixel format[%d] input failed!\n", enPixFmt);

return HI_FAILURE;

}

if (16 != u32AlignWidth && 32 != u32AlignWidth && 64 != u32AlignWidth)

{

SAMPLE_PRT("system align width[%d] input failed!\n", \

u32AlignWidth);

return HI_FAILURE;

}

//SAMPLE_PRT("w:%d, u32AlignWidth:%d\n", CEILING_2_POWER(stSize.u32Width,u32AlignWidth), u32AlignWidth);

// CEILING_2_POWER(stSize.u32Width, u32AlignWidth) rounds stSize.u32Width up to a multiple of u32AlignWidth.

// Width: with u32AlignWidth = 64 and stSize.u32Width = 1920, round up to the first multiple of 64 (leaving a little headroom).

// 1920 / 64 = 30 exactly, so the width stays 1920.

// Height: with u32AlignWidth = 64 and stSize.u32Height = 1080, the first multiple of 64 at or above it is 1088.

printf("CEILING_2_POWER(stSize.u32Width, u32AlignWidth)=%u\r\n", CEILING_2_POWER(stSize.u32Width, u32AlignWidth));

printf("CEILING_2_POWER(stSize.u32Height, u32AlignWidth)=%u\r\n", CEILING_2_POWER(stSize.u32Height, u32AlignWidth));

u32VbSize = (HI_U32)(CEILING_2_POWER(stSize.u32Width, u32AlignWidth) * // width: 1920 -> 1920

CEILING_2_POWER(stSize.u32Height, u32AlignWidth) * // height: 1080 -> 1088

((PIXEL_FORMAT_YUV_SEMIPLANAR_422 == enPixFmt) ? 2 : 1.5)); // 1.5 for PIXEL_FORMAT_YUV_SEMIPLANAR_420

// 1920 x 1088 x 1.5 (this example uses YUV420, so the factor is 1.5)

printf("u32VbSize=%u\r\n", u32VbSize);

VB_PIC_HEADER_SIZE(stSize.u32Width, stSize.u32Height, enPixFmt, u32HeaderSize); // u32HeaderSize = 16 x 1080 x 3 >> 1

printf("u32HeaderSize=%u\r\n", u32HeaderSize);

u32VbSize += u32HeaderSize; // 1920 x 1088 x 1.5 + 16 x 1080 x 1.5

printf("u32VbSize=%u\r\n", u32VbSize);

return u32VbSize;

}

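Running the program and printing the actual values confirms that they match the calculation above exactly (for 1080p YUV420: 1920 x 1088 x 1.5 + 25920 = 3159360 bytes).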
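The same arithmetic can be checked off-board. The sketch below is a hypothetical, SDK-free re-implementation for YUV420 only: `align_up` uses the same rounding as CEILING_2_POWER, `(x + a - 1) & ~(a - 1)`, and the header term assumes the 16 x H x 3 >> 1 formula annotated in the listing above.

```c
#include <assert.h>
#include <stdint.h>

/* Round x up to a multiple of align; align must be a power of two,
 * as the 16/32/64 values accepted by SAMPLE_COMM_SYS_CalcPicVbBlkSize are. */
static uint32_t align_up(uint32_t x, uint32_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return (x + align - 1) & ~(align - 1);
}

/* Hypothetical YUV420 VB block size: aligned picture (1.5 bytes per pixel)
 * plus a header of 16 x height x 3 / 2 bytes computed on the unaligned height. */
static uint32_t vb_blk_size_420(uint32_t w, uint32_t h, uint32_t align)
{
    uint32_t pic = align_up(w, align) * align_up(h, align) * 3u / 2u;
    uint32_t header = (16u * h * 3u) >> 1;
    return pic + header;
}
```

For 1080p with 64-byte alignment this gives 3133440 + 25920 = 3159360 bytes, and for 720p (1280 x 768 aligned) 1474560 + 17280 = 1491840 bytes.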

Next comes SAMPLE_COMM_SYS_Init, which implements step 2 (mpp system init).

/******************************************************************************

* function : vb init & MPI system init

******************************************************************************/

HI_S32 SAMPLE_COMM_SYS_Init(VB_CONF_S* pstVbConf)

{

MPP_SYS_CONF_S stSysConf = {0};

HI_S32 s32Ret = HI_FAILURE;

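// Deinitialize the MPP system. Audio input/output, video input/output, video encoding, video overlay regions,

// video detection and analysis channels, and so on are all destroyed or disabled.

// Deinitializing the system does not destroy audio encoding/decoding channels; the user must destroy

// them explicitly. If the process that created those channels exits, they are destroyed with it.

HI_MPI_SYS_Exit(); // MPI stands for MPP interface; MPP is the Media Process Platform

// HI_MPI_SYS_Exit must deinitialize the MPP system before the buffer pools are deinitialized;

// otherwise this call fails.

// Deinitialization can be repeated without returning an error.

// Deinitialization does not clear the previous buffer pool configuration.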

HI_MPI_VB_Exit();

if (NULL == pstVbConf)

{

SAMPLE_PRT("input parameter is null, it is invaild!\n");

return HI_FAILURE;

}

// Buffer pool attributes can be set only while the system is uninitialized; otherwise this call fails

s32Ret = HI_MPI_VB_SetConf(pstVbConf);

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("HI_MPI_VB_SetConf failed!\n");

return HI_FAILURE;

}

// HI_MPI_VB_SetConf must configure the buffer pools before they are initialized; otherwise initialization fails

s32Ret = HI_MPI_VB_Init();

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("HI_MPI_VB_Init failed!\n");

return HI_FAILURE;

}

// The MPP system can be configured only while the whole MPP system is uninitialized; otherwise configuration fails

stSysConf.u32AlignWidth = SAMPLE_SYS_ALIGN_WIDTH; //64

s32Ret = HI_MPI_SYS_SetConf(&stSysConf);

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("HI_MPI_SYS_SetConf failed\n");

return HI_FAILURE;

}

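// Initialize the MPP system: audio input/output, video input/output, video encoding, video overlay regions,

// video detection and analysis, and so on are all initialized.

// HI_MPI_SYS_SetConf must configure the MPP system first; otherwise initialization fails.

// Because the MPP system depends on the buffer pools, HI_MPI_VB_Init must initialize the

// buffer pools before the MPP system is initialized.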

s32Ret = HI_MPI_SYS_Init();

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("HI_MPI_SYS_Init failed!\n");

return HI_FAILURE;

}

return HI_SUCCESS;

}

In effect, the function above calls the HiSilicon interface library in the following order:

SAMPLE_COMM_SYS_Init() is equivalent to:

HI_MPI_SYS_Exit();

HI_MPI_VB_Exit();

HI_MPI_VB_SetConf(pstVbConf);

HI_MPI_VB_Init();

HI_MPI_SYS_SetConf(&stSysConf);

HI_MPI_SYS_Init();
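The ordering rules stated in the comments of SAMPLE_COMM_SYS_Init (VB torn down only after SYS, VB configured only while uninitialized, VB initialized before SYS, SYS configured before SYS init) can be captured in a small state model. The sketch below is a hypothetical mock, not the MPI: each `mock_*` function checks only those documented preconditions and returns -1 where the real call is documented to fail.

```c
#include <stdbool.h>

/* Hypothetical model of the MPP/VB initialization state. */
struct mpp_state {
    bool vb_conf, vb_init, sys_conf, sys_init;
};

static int mock_sys_exit(struct mpp_state *s)
{
    s->sys_init = false;          /* may be called repeatedly */
    return 0;
}

static int mock_vb_exit(struct mpp_state *s)
{
    if (s->sys_init) return -1;   /* SYS must be deinitialized first */
    s->vb_init = false;           /* the VB configuration is kept */
    return 0;
}

static int mock_vb_set_conf(struct mpp_state *s)
{
    if (s->vb_init) return -1;    /* only while VB is uninitialized */
    s->vb_conf = true;
    return 0;
}

static int mock_vb_init(struct mpp_state *s)
{
    if (!s->vb_conf) return -1;   /* needs VB_SetConf first */
    s->vb_init = true;
    return 0;
}

static int mock_sys_set_conf(struct mpp_state *s)
{
    if (s->sys_init) return -1;   /* only while MPP is uninitialized */
    s->sys_conf = true;
    return 0;
}

static int mock_sys_init(struct mpp_state *s)
{
    if (!s->sys_conf || !s->vb_init) return -1; /* needs SYS_SetConf and VB_Init */
    s->sys_init = true;
    return 0;
}
```

Calling the six mocks in the order listed above succeeds at every step, while calling `mock_sys_init` first, or `mock_vb_exit` while SYS is still up, returns -1. This is why the sample always starts with the two exit calls: they put both layers into a known uninitialized state before reconfiguring.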

Next comes SAMPLE_COMM_VI_StartVi, step 3 (start vi dev & chn to capture), which starts the VI device and channel to capture data.

HI_S32 SAMPLE_COMM_VI_StartVi(SAMPLE_VI_CONFIG_S* pstViConfig)

{

HI_S32 s32Ret = HI_SUCCESS;

SAMPLE_VI_MODE_E enViMode;

if (!pstViConfig)

{

SAMPLE_PRT("%s: null ptr\n", __FUNCTION__);

return HI_FAILURE;

}

enViMode = pstViConfig->enViMode;

if (!IsSensorInput(enViMode)) // not a camera-sensor input, e.g. the BT1120 input used here

{

s32Ret = SAMPLE_COMM_VI_StartBT656(pstViConfig);

}

else // camera-sensor input

{

// s32Ret = SAMPLE_COMM_VI_StartIspAndVi(pstViConfig);

}

return s32Ret;

}

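Next is step 4 (start vpss and vi bind vpss): start VPSS (the video process sub-system) and bind VI to it, so that the VI output is passed to VPSS for processing.

The VPSS start function SAMPLE_COMM_VPSS_StartGroup and the VI-to-VPSS binding function SAMPLE_COMM_VI_BindVpss are shown below: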

HI_S32 SAMPLE_COMM_VPSS_StartGroup(VPSS_GRP VpssGrp, VPSS_GRP_ATTR_S* pstVpssGrpAttr)

{

HI_S32 s32Ret;

VPSS_GRP_PARAM_S stVpssParam;

if (VpssGrp < 0 || VpssGrp >= VPSS_MAX_GRP_NUM) // VPSS_MAX_GRP_NUM is 128, so valid groups are 0..127

{

printf("VpssGrp%d is out of range. \n", VpssGrp);

return HI_FAILURE;

}

if (HI_NULL == pstVpssGrpAttr)

{

printf("null ptr,line%d. \n", __LINE__);

return HI_FAILURE;

}

s32Ret = HI_MPI_VPSS_CreateGrp(VpssGrp, pstVpssGrpAttr); // create group VpssGrp with the attributes in pstVpssGrpAttr

if (s32Ret != HI_SUCCESS)

{

SAMPLE_PRT("HI_MPI_VPSS_CreateGrp failed with %#x!\n", s32Ret);

return HI_FAILURE;

}

/*** set vpss param ***/

s32Ret = HI_MPI_VPSS_GetGrpParam(VpssGrp, &stVpssParam); // read the current parameters of group VpssGrp into stVpssParam

if (s32Ret != HI_SUCCESS)

{

SAMPLE_PRT("failed with %#x!\n", s32Ret);

return HI_FAILURE;

}

s32Ret = HI_MPI_VPSS_SetGrpParam(VpssGrp, &stVpssParam); // write the group parameters back

if (s32Ret != HI_SUCCESS)

{

SAMPLE_PRT("failed with %#x!\n", s32Ret);

return HI_FAILURE;

}

s32Ret = HI_MPI_VPSS_StartGrp(VpssGrp); // start the group

if (s32Ret != HI_SUCCESS)

{

SAMPLE_PRT("HI_MPI_VPSS_StartGrp failed with %#x\n", s32Ret);

return HI_FAILURE;

}

return HI_SUCCESS;

}

/*****************************************************************************

* function : Vi chn bind vpss group

*****************************************************************************/

HI_S32 SAMPLE_COMM_VI_BindVpss(SAMPLE_VI_MODE_E enViMode)

{

HI_S32 j, s32Ret;

VPSS_GRP VpssGrp;

MPP_CHN_S stSrcChn;

MPP_CHN_S stDestChn;

SAMPLE_VI_PARAM_S stViParam;

VI_CHN ViChn;

s32Ret = SAMPLE_COMM_VI_Mode2Param(enViMode, &stViParam); // convert VI mode enViMode into VI parameters stViParam

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("SAMPLE_COMM_VI_Mode2Param failed!\n");

return HI_FAILURE;

}

VpssGrp = 0;

for (j = 0; j < stViParam.s32ViChnCnt; j++)

{

ViChn = j * stViParam.s32ViChnInterval;

stSrcChn.enModId = HI_ID_VIU; // source module: VI

stSrcChn.s32DevId = 0;

stSrcChn.s32ChnId = ViChn;

stDestChn.enModId = HI_ID_VPSS; // destination module: VPSS

stDestChn.s32DevId = VpssGrp;

stDestChn.s32ChnId = 0;

s32Ret = HI_MPI_SYS_Bind(&stSrcChn, &stDestChn); // bind the source channel to the destination channel

if (s32Ret != HI_SUCCESS)

{

SAMPLE_PRT("failed with %#x!\n", s32Ret);

return HI_FAILURE;

}

VpssGrp ++;

}

return HI_SUCCESS;

}
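SAMPLE_COMM_VI_BindVpss walks the VI channels in steps of s32ViChnInterval and binds each one to channel 0 of the next VPSS group. The sketch below reproduces only that index arithmetic with a hypothetical `bind_pair` record; the channel counts and intervals used with it are illustrative values, not taken from the SDK's mode table.

```c
#include <stddef.h>

/* Hypothetical record of one VI -> VPSS binding. */
struct bind_pair {
    int vi_chn;   /* stSrcChn.s32ChnId */
    int vpss_grp; /* stDestChn.s32DevId */
    int vpss_chn; /* stDestChn.s32ChnId, always 0 here */
};

/* Plans bindings the same way the loop above fills stSrcChn/stDestChn.
 * Returns the number of entries written to out (at most max_out). */
static size_t plan_vi_vpss_binds(int chn_cnt, int chn_interval,
                                 struct bind_pair *out, size_t max_out)
{
    size_t n = 0;
    int vpss_grp = 0;
    for (int j = 0; j < chn_cnt && n < max_out; j++) {
        out[n].vi_chn = j * chn_interval;
        out[n].vpss_grp = vpss_grp++;
        out[n].vpss_chn = 0;
        n++;
    }
    return n;
}
```

With two VI channels and an interval of 4, VI channel 0 goes to VPSS group 0 and VI channel 4 to group 1. If the BT1120 mode maps to a single VI channel (likely, but check SAMPLE_COMM_VI_Mode2Param), the loop reduces to one binding, VI channel 0 to VPSS group 0, channel 0, which matches VpssGrp = 0 in the main function.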

With VI bound to VPSS, the VPSS channels are then enabled by SAMPLE_COMM_VPSS_EnableChn.

Next, the encoder is started and VENC is bound to VPSS, so frames processed by VPSS flow into the VENC encoder.

The VENC start function SAMPLE_COMM_VENC_Start is shown below:

/******************************************************************************

* function : Start venc stream mode (h264, mjpeg)

* note : rate control parameter need adjust, according your case.

******************************************************************************/

HI_S32 SAMPLE_COMM_VENC_Start(VENC_CHN VencChn, PAYLOAD_TYPE_E enType, VIDEO_NORM_E enNorm, PIC_SIZE_E enSize, SAMPLE_RC_E enRcMode, HI_U32 u32Profile)

{

HI_S32 s32Ret;

VENC_CHN_ATTR_S stVencChnAttr;

VENC_ATTR_H264_S stH264Attr;

VENC_ATTR_H264_CBR_S stH264Cbr;

VENC_ATTR_H264_VBR_S stH264Vbr;

VENC_ATTR_H264_FIXQP_S stH264FixQp;

VENC_ATTR_H265_S stH265Attr;

VENC_ATTR_H265_CBR_S stH265Cbr;

VENC_ATTR_H265_VBR_S stH265Vbr;

VENC_ATTR_H265_FIXQP_S stH265FixQp;

VENC_ATTR_MJPEG_S stMjpegAttr;

VENC_ATTR_MJPEG_FIXQP_S stMjpegeFixQp;

VENC_ATTR_JPEG_S stJpegAttr;

SIZE_S stPicSize;

s32Ret = SAMPLE_COMM_SYS_GetPicSize(enNorm, enSize, &stPicSize); // get the picture size for video norm enNorm and size enSize

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Get picture size failed!\n");

return HI_FAILURE;

}

/******************************************

step 1: Create Venc Channel

******************************************/

stVencChnAttr.stVeAttr.enType = enType;

switch (enType)

{

case PT_H264:

{

stH264Attr.u32MaxPicWidth = stPicSize.u32Width;

stH264Attr.u32MaxPicHeight = stPicSize.u32Height;

stH264Attr.u32PicWidth = stPicSize.u32Width;/*the picture width*/

stH264Attr.u32PicHeight = stPicSize.u32Height;/*the picture height*/

stH264Attr.u32BufSize = stPicSize.u32Width * stPicSize.u32Height * 2;/*stream buffer size*/

stH264Attr.u32Profile = u32Profile;/*0: baseline; 1:MP; 2:HP; 3:svc_t */

stH264Attr.bByFrame = HI_TRUE;/*get stream mode is slice mode or frame mode?*/

stH264Attr.u32BFrameNum = 0;/* 0: not support B frame; >=1: number of B frames */ // number of B (bidirectionally predicted) frames; 0 means none

stH264Attr.u32RefNum = 1;/* 0: default; number of reference frame*/ // number of reference frames

memcpy(&stVencChnAttr.stVeAttr.stAttrH264e, &stH264Attr, sizeof(VENC_ATTR_H264_S));

if (SAMPLE_RC_CBR == enRcMode) // CBR (Constant Bit Rate): keeps the encoding bit rate steady within the statistics window

{

stVencChnAttr.stRcAttr.enRcMode = VENC_RC_MODE_H264CBR;

stH264Cbr.u32Gop = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH264Cbr.u32StatTime = 1; /* stream rate statics time(s) */

stH264Cbr.u32SrcFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30; /* input (vi) frame rate */

stH264Cbr.fr32DstFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30; /* target frame rate */

switch (enSize)

{

case PIC_QCIF:

stH264Cbr.u32BitRate = 256; /* average bit rate */

break;

case PIC_QVGA: /* 320 * 240 */

case PIC_CIF:

stH264Cbr.u32BitRate = 512;

break;

case PIC_D1:

case PIC_VGA: /* 640 * 480 */

stH264Cbr.u32BitRate = 1024 * 2;

break;

case PIC_HD720: /* 1280 * 720 */

stH264Cbr.u32BitRate = 1024 * 2;

break;

case PIC_HD1080: /* 1920 * 1080 */

stH264Cbr.u32BitRate = 1024 * 4;

break;

case PIC_5M: /* 2592 * 1944 */

stH264Cbr.u32BitRate = 1024 * 8;

break;

default :

stH264Cbr.u32BitRate = 1024 * 4;

break;

}

stH264Cbr.u32FluctuateLevel = 0; /* bit rate fluctuation level */

memcpy(&stVencChnAttr.stRcAttr.stAttrH264Cbr, &stH264Cbr, sizeof(VENC_ATTR_H264_CBR_S));

}

else if (SAMPLE_RC_FIXQP == enRcMode) // Fixed QP: within the statistics window, all macroblocks of a picture use the same QP,

// namely the user-set picture QP; the I-frame and P-frame QPs can be set separately

{

stVencChnAttr.stRcAttr.enRcMode = VENC_RC_MODE_H264FIXQP;

stH264FixQp.u32Gop = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH264FixQp.u32SrcFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH264FixQp.fr32DstFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH264FixQp.u32IQp = 20;

stH264FixQp.u32PQp = 23;

memcpy(&stVencChnAttr.stRcAttr.stAttrH264FixQp, &stH264FixQp, sizeof(VENC_ATTR_H264_FIXQP_S));

}

else if (SAMPLE_RC_VBR == enRcMode) // VBR (Variable Bit Rate): the bit rate may fluctuate within the statistics window, keeping picture quality steady

{

stVencChnAttr.stRcAttr.enRcMode = VENC_RC_MODE_H264VBR;

stH264Vbr.u32Gop = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH264Vbr.u32StatTime = 1;

stH264Vbr.u32SrcFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH264Vbr.fr32DstFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH264Vbr.u32MinQp = 10;

stH264Vbr.u32MaxQp = 40;

switch (enSize)

{

case PIC_QCIF:

stH264Vbr.u32MaxBitRate = 256 * 3; /* maximum bit rate */

break;

case PIC_QVGA: /* 320 * 240 */

case PIC_CIF:

stH264Vbr.u32MaxBitRate = 512 * 3;

break;

case PIC_D1:

case PIC_VGA: /* 640 * 480 */

stH264Vbr.u32MaxBitRate = 1024 * 2;

break;

case PIC_HD720: /* 1280 * 720 */

stH264Vbr.u32MaxBitRate = 1024 * 3;

break;

case PIC_HD1080: /* 1920 * 1080 */

stH264Vbr.u32MaxBitRate = 1024 * 6;

break;

case PIC_5M: /* 2592 * 1944 */

stH264Vbr.u32MaxBitRate = 1024 * 8;

break;

default :

stH264Vbr.u32MaxBitRate = 1024 * 4;

break;

}

memcpy(&stVencChnAttr.stRcAttr.stAttrH264Vbr, &stH264Vbr, sizeof(VENC_ATTR_H264_VBR_S));

}

else

{

return HI_FAILURE;

}

}

break;

case PT_MJPEG:

{

stMjpegAttr.u32MaxPicWidth = stPicSize.u32Width;

stMjpegAttr.u32MaxPicHeight = stPicSize.u32Height;

stMjpegAttr.u32PicWidth = stPicSize.u32Width;

stMjpegAttr.u32PicHeight = stPicSize.u32Height;

stMjpegAttr.u32BufSize = stPicSize.u32Width * stPicSize.u32Height * 2;

stMjpegAttr.bByFrame = HI_TRUE; /*get stream mode is field mode or frame mode*/

memcpy(&stVencChnAttr.stVeAttr.stAttrMjpeg, &stMjpegAttr, sizeof(VENC_ATTR_MJPEG_S));

if (SAMPLE_RC_FIXQP == enRcMode)

{

stVencChnAttr.stRcAttr.enRcMode = VENC_RC_MODE_MJPEGFIXQP;

stMjpegeFixQp.u32Qfactor = 90;

stMjpegeFixQp.u32SrcFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stMjpegeFixQp.fr32DstFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

memcpy(&stVencChnAttr.stRcAttr.stAttrMjpegeFixQp, &stMjpegeFixQp,

sizeof(VENC_ATTR_MJPEG_FIXQP_S));

}

else if (SAMPLE_RC_CBR == enRcMode)

{

stVencChnAttr.stRcAttr.enRcMode = VENC_RC_MODE_MJPEGCBR;

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.u32StatTime = 1;

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.u32SrcFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.fr32DstFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.u32FluctuateLevel = 0;

switch (enSize)

{

case PIC_QCIF:

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.u32BitRate = 384 * 3; /* average bit rate */

break;

case PIC_QVGA: /* 320 * 240 */

case PIC_CIF:

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.u32BitRate = 768 * 3;

break;

case PIC_D1:

case PIC_VGA: /* 640 * 480 */

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.u32BitRate = 1024 * 3 * 3;

break;

case PIC_HD720: /* 1280 * 720 */

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.u32BitRate = 1024 * 5 * 3;

break;

case PIC_HD1080: /* 1920 * 1080 */

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.u32BitRate = 1024 * 10 * 3;

break;

case PIC_5M: /* 2592 * 1944 */

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.u32BitRate = 1024 * 10 * 3;

break;

default :

stVencChnAttr.stRcAttr.stAttrMjpegeCbr.u32BitRate = 1024 * 10 * 3;

break;

}

}

else if (SAMPLE_RC_VBR == enRcMode)

{

stVencChnAttr.stRcAttr.enRcMode = VENC_RC_MODE_MJPEGVBR;

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32StatTime = 1;

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32SrcFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.fr32DstFrmRate = 5;

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32MinQfactor = 50;

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32MaxQfactor = 95;

switch (enSize)

{

case PIC_QCIF:

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32MaxBitRate = 256 * 3; /* maximum bit rate */

break;

case PIC_QVGA: /* 320 * 240 */

case PIC_CIF:

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32MaxBitRate = 512 * 3;

break;

case PIC_D1:

case PIC_VGA: /* 640 * 480 */

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32MaxBitRate = 1024 * 2 * 3;

break;

case PIC_HD720: /* 1280 * 720 */

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32MaxBitRate = 1024 * 3 * 3;

break;

case PIC_HD1080: /* 1920 * 1080 */

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32MaxBitRate = 1024 * 6 * 3;

break;

case PIC_5M: /* 2592 * 1944 */

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32MaxBitRate = 1024 * 12 * 3;

break;

default :

stVencChnAttr.stRcAttr.stAttrMjpegeVbr.u32MaxBitRate = 1024 * 4 * 3;

break;

}

}

else

{

SAMPLE_PRT("can't support other mode in this version!\n");

return HI_FAILURE;

}

}

break;

case PT_JPEG:

stJpegAttr.u32PicWidth = stPicSize.u32Width;

stJpegAttr.u32PicHeight = stPicSize.u32Height;

stJpegAttr.u32MaxPicWidth = stPicSize.u32Width;

stJpegAttr.u32MaxPicHeight = stPicSize.u32Height;

stJpegAttr.u32BufSize = stPicSize.u32Width * stPicSize.u32Height * 2;

stJpegAttr.bByFrame = HI_TRUE;/*get stream mode is field mode or frame mode*/

stJpegAttr.bSupportDCF = HI_FALSE;

memcpy(&stVencChnAttr.stVeAttr.stAttrJpeg, &stJpegAttr, sizeof(VENC_ATTR_JPEG_S));

break;

case PT_H265:

{

stH265Attr.u32MaxPicWidth = stPicSize.u32Width;

stH265Attr.u32MaxPicHeight = stPicSize.u32Height;

stH265Attr.u32PicWidth = stPicSize.u32Width;/*the picture width*/

stH265Attr.u32PicHeight = stPicSize.u32Height;/*the picture height*/

stH265Attr.u32BufSize = stPicSize.u32Width * stPicSize.u32Height * 2;/*stream buffer size*/

if (u32Profile >= 1)

{ stH265Attr.u32Profile = 0; }/*0:MP; */

else

{ stH265Attr.u32Profile = u32Profile; }/*0:MP*/

stH265Attr.bByFrame = HI_TRUE;/*get stream mode is slice mode or frame mode?*/

stH265Attr.u32BFrameNum = 0;/* 0: not support B frame; >=1: number of B frames */

stH265Attr.u32RefNum = 1;/* 0: default; number of reference frame*/

memcpy(&stVencChnAttr.stVeAttr.stAttrH265e, &stH265Attr, sizeof(VENC_ATTR_H265_S));

if (SAMPLE_RC_CBR == enRcMode)

{

stVencChnAttr.stRcAttr.enRcMode = VENC_RC_MODE_H265CBR;

stH265Cbr.u32Gop = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH265Cbr.u32StatTime = 1; /* stream rate statics time(s) */

stH265Cbr.u32SrcFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30; /* input (vi) frame rate */

stH265Cbr.fr32DstFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30; /* target frame rate */

switch (enSize)

{

case PIC_QCIF:

stH265Cbr.u32BitRate = 256; /* average bit rate */

break;

case PIC_QVGA: /* 320 * 240 */

case PIC_CIF:

stH265Cbr.u32BitRate = 512;

break;

case PIC_D1:

case PIC_VGA: /* 640 * 480 */

stH265Cbr.u32BitRate = 1024 * 2;

break;

case PIC_HD720: /* 1280 * 720 */

stH265Cbr.u32BitRate = 1024 * 3;

break;

case PIC_HD1080: /* 1920 * 1080 */

stH265Cbr.u32BitRate = 1024 * 4;

break;

case PIC_5M: /* 2592 * 1944 */

stH265Cbr.u32BitRate = 1024 * 8;

break;

default :

stH265Cbr.u32BitRate = 1024 * 4;

break;

}

stH265Cbr.u32FluctuateLevel = 0; /* bit rate fluctuation level */

memcpy(&stVencChnAttr.stRcAttr.stAttrH265Cbr, &stH265Cbr, sizeof(VENC_ATTR_H265_CBR_S));

}

else if (SAMPLE_RC_FIXQP == enRcMode)

{

stVencChnAttr.stRcAttr.enRcMode = VENC_RC_MODE_H265FIXQP;

stH265FixQp.u32Gop = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH265FixQp.u32SrcFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH265FixQp.fr32DstFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH265FixQp.u32IQp = 20;

stH265FixQp.u32PQp = 23;

memcpy(&stVencChnAttr.stRcAttr.stAttrH265FixQp, &stH265FixQp, sizeof(VENC_ATTR_H265_FIXQP_S));

}

else if (SAMPLE_RC_VBR == enRcMode)

{

stVencChnAttr.stRcAttr.enRcMode = VENC_RC_MODE_H265VBR;

stH265Vbr.u32Gop = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH265Vbr.u32StatTime = 1;

stH265Vbr.u32SrcFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH265Vbr.fr32DstFrmRate = (VIDEO_ENCODING_MODE_PAL == enNorm) ? 25 : 30;

stH265Vbr.u32MinQp = 10;

stH265Vbr.u32MaxQp = 40;

switch (enSize)

{

case PIC_QCIF:

stH265Vbr.u32MaxBitRate = 256 * 3; /* maximum bit rate */

break;

case PIC_QVGA: /* 320 * 240 */

case PIC_CIF:

stH265Vbr.u32MaxBitRate = 512 * 3;

break;

case PIC_D1:

case PIC_VGA: /* 640 * 480 */

stH265Vbr.u32MaxBitRate = 1024 * 2;

break;

case PIC_HD720: /* 1280 * 720 */

stH265Vbr.u32MaxBitRate = 1024 * 3;

break;

case PIC_HD1080: /* 1920 * 1080 */

stH265Vbr.u32MaxBitRate = 1024 * 6;

break;

case PIC_5M: /* 2592 * 1944 */

stH265Vbr.u32MaxBitRate = 1024 * 8;

break;

default :

stH265Vbr.u32MaxBitRate = 1024 * 4;

break;

}

memcpy(&stVencChnAttr.stRcAttr.stAttrH265Vbr, &stH265Vbr, sizeof(VENC_ATTR_H265_VBR_S));

}

else

{

return HI_FAILURE;

}

}

break;

default:

return HI_ERR_VENC_NOT_SUPPORT;

}

s32Ret = HI_MPI_VENC_CreateChn(VencChn, &stVencChnAttr); //create the encoding channel with the attributes in stVencChnAttr

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("HI_MPI_VENC_CreateChn [%d] failed with %#x!\n", \

VencChn, s32Ret);

return s32Ret;

}

/******************************************

step 2: Start Recv Venc Pictures

******************************************/

s32Ret = HI_MPI_VENC_StartRecvPic(VencChn); //start the encoding channel receiving input pictures

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("HI_MPI_VENC_StartRecvPic failed with %#x!\n", s32Ret);

return HI_FAILURE;

}

return HI_SUCCESS;

}
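The rate-control setup above boils down to a few lookups: frame rate and GOP from the video norm, a target (CBR) or maximum (VBR) bit rate from the picture size, and a stream buffer of width * height * 2 bytes. The sketch below restates those values as plain C so they can be checked outside the SDK. The enum `pic_size_t` and all function names are hypothetical stand-ins, not SDK definitions; the kbps numbers are copied from the H.265 switch statements above.

```c
#include <stdint.h>

/* Hypothetical enum mirroring the PIC_* sizes used in the sample;
 * the real PIC_SIZE_E is defined in the HiSilicon SDK headers. */
typedef enum {
    SZ_QCIF, SZ_QVGA, SZ_CIF, SZ_D1, SZ_VGA, SZ_HD720, SZ_HD1080, SZ_5M, SZ_OTHER
} pic_size_t;

/* Target average bit rate (kbps) for H.265 CBR, as in the first switch above. */
static uint32_t h265_cbr_bitrate_kbps(pic_size_t size)
{
    switch (size) {
    case SZ_QCIF:   return 256;
    case SZ_QVGA:
    case SZ_CIF:    return 512;
    case SZ_D1:
    case SZ_VGA:    return 1024 * 2;
    case SZ_HD720:  return 1024 * 3;
    case SZ_HD1080: return 1024 * 4;
    case SZ_5M:     return 1024 * 8;
    default:        return 1024 * 4;
    }
}

/* Maximum bit rate (kbps) for H.265 VBR, as in the second switch above. */
static uint32_t h265_vbr_max_bitrate_kbps(pic_size_t size)
{
    switch (size) {
    case SZ_QCIF:   return 256 * 3;
    case SZ_QVGA:
    case SZ_CIF:    return 512 * 3;
    case SZ_D1:
    case SZ_VGA:    return 1024 * 2;
    case SZ_HD720:  return 1024 * 3;
    case SZ_HD1080: return 1024 * 6;
    case SZ_5M:     return 1024 * 8;
    default:        return 1024 * 4;
    }
}

/* GOP length, source and target frame rate all follow the norm: 25 for PAL, else 30. */
static uint32_t frame_rate_for_norm(int is_pal)
{
    return is_pal ? 25u : 30u;
}

/* Stream buffer size used by the sample: width * height * 2 bytes. */
static uint32_t stream_buf_size(uint32_t width, uint32_t height)
{
    return width * height * 2u;
}
```

For example, `h265_cbr_bitrate_kbps(SZ_HD1080)` gives 4096 and `stream_buf_size(1920, 1080)` gives 4147200, the values the sample uses for a 1080p H.265 channel.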

Next, fetch the encoded stream and save it to a file.

/******************************************

step 6: stream venc process -- get stream, then save it to file.

******************************************/

s32Ret = SAMPLE_COMM_VENC_StartGetStream(s32ChnNum);

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("Start Venc failed!\n");

goto END_VENC_1080P_CLASSIC_5;

}

/******************************************************************************

* function : start the thread that gets the venc stream

******************************************************************************/

HI_S32 SAMPLE_COMM_VENC_StartGetStream(HI_S32 s32Cnt)

{

gs_stPara.bThreadStart = HI_TRUE;

gs_stPara.s32Cnt = s32Cnt;

return pthread_create(&gs_VencPid, 0, SAMPLE_COMM_VENC_GetVencStreamProc, (HI_VOID*)&gs_stPara);

}

/******************************************************************************

* function : get the stream from each channel and save it

******************************************************************************/

HI_VOID* SAMPLE_COMM_VENC_GetVencStreamProc(HI_VOID* p)

{

HI_S32 i;

HI_S32 s32ChnTotal; //total number of channels

VENC_CHN_ATTR_S stVencChnAttr; //encoder attributes and rate-control attributes

SAMPLE_VENC_GETSTREAM_PARA_S* pstPara;

HI_S32 maxfd = 0;

struct timeval TimeoutVal; //select() timeout

fd_set read_fds; //set of fds that select() watches for readability

HI_S32 VencFd[VENC_MAX_CHN_NUM]; //one encoder fd per channel (VENC_MAX_CHN_NUM = 16)

HI_CHAR aszFileName[VENC_MAX_CHN_NUM][FILE_NAME_LEN]; //one file name per channel, up to FILE_NAME_LEN (128) characters

FILE* pFile[VENC_MAX_CHN_NUM]; //one output file per channel

char szFilePostfix[10]; //file extension string

VENC_CHN_STAT_S stStat; //channel status

VENC_STREAM_S stStream; //stream structure that receives the stream data

HI_S32 s32Ret;

VENC_CHN VencChn;

PAYLOAD_TYPE_E enPayLoadType[VENC_MAX_CHN_NUM]; //payload type of each channel

pstPara = (SAMPLE_VENC_GETSTREAM_PARA_S*)p;

s32ChnTotal = pstPara->s32Cnt; //total number of channels

/******************************************

step 1: check & prepare save-file & venc-fd

******************************************/

if (s32ChnTotal >= VENC_MAX_CHN_NUM) //channel count out of range (maximum 16)

{

SAMPLE_PRT("input count invalid\n");

return NULL;

}

for (i = 0; i < s32ChnTotal; i++)

{

/* decide the stream file name, and open file to save stream */

VencChn = i;

s32Ret = HI_MPI_VENC_GetChnAttr(VencChn, &stVencChnAttr);//read the attributes of channel VencChn (encoder and rate-control attributes) into stVencChnAttr

if (s32Ret != HI_SUCCESS)

{

SAMPLE_PRT("HI_MPI_VENC_GetChnAttr chn[%d] failed with %#x!\n", \

VencChn, s32Ret);

return NULL;

}

enPayLoadType[i] = stVencChnAttr.stVeAttr.enType; //payload type of this channel, e.g. PT_H265 = 265

s32Ret = SAMPLE_COMM_VENC_GetFilePostfix(enPayLoadType[i], szFilePostfix);//derive the file extension for enPayLoadType[i] and store it in szFilePostfix

if (s32Ret != HI_SUCCESS)

{

SAMPLE_PRT("SAMPLE_COMM_VENC_GetFilePostfix [%d] failed with %#x!\n", \

stVencChnAttr.stVeAttr.enType, s32Ret);

return NULL;

}

snprintf(aszFileName[i], FILE_NAME_LEN, "stream_chn%d%s", i, szFilePostfix); //FILE_NAME_LEN = 128; builds a name such as stream_chn0.h265 in aszFileName[i]

pFile[i] = fopen(aszFileName[i], "wb");//"wb": create (or truncate) the binary file aszFileName[i] for writing only

if (!pFile[i])

{

SAMPLE_PRT("open file[%s] failed!\n",

aszFileName[i]);

return NULL;

}

/* Set Venc Fd. */

VencFd[i] = HI_MPI_VENC_GetFd(i); //get the channel's file descriptor

if (VencFd[i] < 0)

{

SAMPLE_PRT("HI_MPI_VENC_GetFd failed with %#x!\n",

VencFd[i]);

return NULL;

}

if (maxfd <= VencFd[i]) //track the largest fd, needed by select()

{

maxfd = VencFd[i];

}

}

/******************************************

step 2: Start to get streams of each channel.

******************************************/

while (HI_TRUE == pstPara->bThreadStart) //the thread keeps looping until bThreadStart becomes HI_FALSE

{

FD_ZERO(&read_fds); //clear every bit in read_fds

for (i = 0; i < s32ChnTotal; i++)

{

FD_SET(VencFd[i], &read_fds); //add VencFd[i] to read_fds

}

TimeoutVal.tv_sec = 2; //2-second timeout

TimeoutVal.tv_usec = 0;

s32Ret = select(maxfd + 1, &read_fds, NULL, NULL, &TimeoutVal);

//select() blocks until one of the fds becomes readable; if the timeout expires first, it returns 0 and execution continues.

if (s32Ret < 0)

{

SAMPLE_PRT("select failed!\n"); // error

break;

}

else if (s32Ret == 0)

{

SAMPLE_PRT("get venc stream time out, exit thread\n"); //2 s timeout; despite the message, the thread does not exit but loops and waits again

continue;

}

else

{

for (i = 0; i < s32ChnTotal; i++)

{

if (FD_ISSET(VencFd[i], &read_fds)) //check whether VencFd[i] is readable

{

/*******************************************************

step 2.1 : query how many packs in one-frame stream.

*******************************************************/

memset(&stStream, 0, sizeof(stStream)); //clear stStream

s32Ret = HI_MPI_VENC_Query(i, &stStat); //query the channel status

if (HI_SUCCESS != s32Ret)

{

SAMPLE_PRT("HI_MPI_VENC_Query chn[%d] failed with %#x!\n", i, s32Ret);

break;

}

/*******************************************************

step 2.2 :suggest to check both u32CurPacks and u32LeftStreamFrames at the same time,for example:

if(0 == stStat.u32CurPacks || 0 == stStat.u32LeftStreamFrames)

{

SAMPLE_PRT("NOTE: Current frame is NULL!\n");

continue;

}

*******************************************************/

if(0 == stStat.u32CurPacks)

{

SAMPLE_PRT("NOTE: Current frame is NULL!\n");

continue; //the current frame has no packs, so skip the steps below

}

/*******************************************************

step 2.3 : malloc corresponding number of pack nodes.

*******************************************************/

stStream.pstPack = (VENC_PACK_S*)malloc(sizeof(VENC_PACK_S) * stStat.u32CurPacks);

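//allocate one VENC_PACK_S descriptor per pack on the heap: sizeof(VENC_PACK_S) * stStat.u32CurPacks bytes. The descriptors point to the stream data; they do not hold it.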

if (NULL == stStream.pstPack)

{

SAMPLE_PRT("malloc stream pack failed!\n");

break;

}

/*******************************************************

step 2.4 : call mpi to get one-frame stream

*******************************************************/

stStream.u32PackCount = stStat.u32CurPacks;

s32Ret = HI_MPI_VENC_GetStream(i, &stStream, HI_TRUE); //get one frame of stream into stStream

if (HI_SUCCESS != s32Ret)

{

free(stStream.pstPack);

stStream.pstPack = NULL;

SAMPLE_PRT("HI_MPI_VENC_GetStream failed with %#x!\n", \

s32Ret);

break;

}

/*******************************************************

step 2.5 : save frame to file

*******************************************************/

s32Ret = SAMPLE_COMM_VENC_SaveStream(enPayLoadType[i], pFile[i], &stStream);

//save the data in stStream to pFile[i] in the format given by enPayLoadType[i]

if (HI_SUCCESS != s32Ret)

{

free(stStream.pstPack);

stStream.pstPack = NULL;

SAMPLE_PRT("save stream failed!\n");

break;

}

/*******************************************************

step 2.6 : release stream

*******************************************************/

s32Ret = HI_MPI_VENC_ReleaseStream(i, &stStream);

//the data has been saved, so return channel i's stream buffer referenced by stStream to the encoder

if (HI_SUCCESS != s32Ret)

{

free(stStream.pstPack);

stStream.pstPack = NULL;

break;

}

/*******************************************************

step 2.7 : free pack nodes

*******************************************************/

free(stStream.pstPack); //free the pack array allocated with malloc

stStream.pstPack = NULL; //reset the pointer so it does not dangle

}

}

}

}

/*******************************************************

* step 3 : close save-file

*******************************************************/

for (i = 0; i < s32ChnTotal; i++)

{

fclose(pFile[i]); //close the file

}

return NULL;

}

/******************************************************************************

* function : save stream

******************************************************************************/

HI_S32 SAMPLE_COMM_VENC_SaveStream(PAYLOAD_TYPE_E enType, FILE* pFd, VENC_STREAM_S* pstStream)

{

HI_S32 s32Ret;

if (PT_H264 == enType)

{

s32Ret = SAMPLE_COMM_VENC_SaveH264(pFd, pstStream);

}

else if (PT_MJPEG == enType)

{

s32Ret = SAMPLE_COMM_VENC_SaveMJpeg(pFd, pstStream);

}

else if (PT_H265 == enType)

{

s32Ret = SAMPLE_COMM_VENC_SaveH265(pFd, pstStream);

}

else

{

return HI_FAILURE;

}

return s32Ret;

}
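The core of SAMPLE_COMM_VENC_GetVencStreamProc is a standard POSIX select() loop: rebuild the fd set, set the timeout, wait, then test each fd with FD_ISSET. The sketch below isolates that pattern under the assumption that any readable POSIX fd behaves the same way; in a test, ordinary pipe fds stand in for the descriptors returned by HI_MPI_VENC_GetFd, and `wait_readable` is a hypothetical helper name, not part of the SDK.

```c
#include <stddef.h>
#include <sys/select.h>
#include <sys/time.h>
#include <unistd.h>

/* Wait up to timeout_sec seconds for any fd in fds[] to become readable.
 * On return, ready[i] is 1 if fds[i] is readable and 0 otherwise.
 * Returns the select() result: >0 ready count, 0 on timeout, -1 on error. */
static int wait_readable(const int *fds, int n, int timeout_sec, int *ready)
{
    fd_set read_fds;
    struct timeval tv;
    int maxfd = -1;
    int i, ret;

    FD_ZERO(&read_fds);              /* select() overwrites the set, so rebuild it every call */
    for (i = 0; i < n; i++) {
        FD_SET(fds[i], &read_fds);
        if (fds[i] > maxfd)
            maxfd = fds[i];          /* select() needs the highest fd + 1 */
    }
    tv.tv_sec = timeout_sec;         /* the timeout may also be modified, so reset it too */
    tv.tv_usec = 0;

    ret = select(maxfd + 1, &read_fds, NULL, NULL, &tv);
    for (i = 0; i < n; i++)          /* only trust FD_ISSET when select() reported ready fds */
        ready[i] = (ret > 0 && FD_ISSET(fds[i], &read_fds)) ? 1 : 0;
    return ret;
}
```

Both the set and the timeout are rebuilt on every call because select() replaces the set with only the ready descriptors, and on Linux it also writes the remaining time back into the timeval. That is why the sample resets read_fds and TimeoutVal at the top of each loop iteration.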

H.264 raw stream analysis

Below, print statements are added to the stream-saving code to analyze the stream data.

/******************************************************************************

* function : save H264 stream

******************************************************************************/

HI_S32 SAMPLE_COMM_VENC_SaveH264(FILE* fpH264File, VENC_STREAM_S* pstStream)

{

HI_S32 i;

printf("-----------------H264 start----------------------------\r\n");

for (i = 0; i < pstStream->u32PackCount; i++)

{

fwrite(pstStream->pstPack[i].pu8Addr + pstStream->pstPack[i].u32Offset,

pstStream->pstPack[i].u32Len - pstStream->pstPack[i].u32Offset, 1, fpH264File);

printf("i=%d\r\n",i);

printf("pstStream->pstPack[i].pu8Addr=%p\r\n", (void*)pstStream->pstPack[i].pu8Addr);

printf("pstStream->pstPack[i].u32Offset=%#x\r\n",pstStream->pstPack[i].u32Offset);

printf("pstStream->pstPack[i].u32Len=%#x\r\n",pstStream->pstPack[i].u32Len);

HI_U32 k; //dump at most the first 30 bytes of this pack without reading past u32Len

printf("data:0x");

for(k=0;k<30 && k<pstStream->pstPack[i].u32Len;k++)

printf("%x ",pstStream->pstPack[i].pu8Addr[k]);

printf("......\r\n");

fflush(fpH264File);

}

printf("-----------------H264 end----------------------------\r\n");

return HI_SUCCESS;

}

/******************************************************************************

* function : save H265 stream

******************************************************************************/

HI_S32 SAMPLE_COMM_VENC_SaveH265(FILE* fpH265File, VENC_STREAM_S* pstStream)

{

HI_S32 i;

printf("-----------------H265 start----------------------------\r\n");

for (i = 0; i < pstStream->u32PackCount; i++)

{

fwrite(pstStream->pstPack[i].pu8Addr + pstStream->pstPack[i].u32Offset,

pstStream->pstPack[i].u32Len - pstStream->pstPack[i].u32Offset, 1, fpH265File);

printf("i=%d\r\n",i);

printf("pstStream->pstPack[i].pu8Addr=%p\r\n", (void*)pstStream->pstPack[i].pu8Addr);

printf("pstStream->pstPack[i].u32Offset=%#x\r\n",pstStream->pstPack[i].u32Offset);

printf("pstStream->pstPack[i].u32Len=%#x\r\n",pstStream->pstPack[i].u32Len);

HI_U32 k; //dump at most the first 30 bytes of this pack without reading past u32Len

printf("data:0x");

for(k=0;k<30 && k<pstStream->pstPack[i].u32Len;k++)

printf("%x ",pstStream->pstPack[i].pu8Addr[k]);

printf("......\r\n");

fflush(fpH265File);

}

printf("-----------------H265 end----------------------------\r\n");

return HI_SUCCESS;

}
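Both save functions write each pack starting at pu8Addr + u32Offset for u32Len - u32Offset bytes. The sketch below restates that rule, plus a bounded hex dump, on a hypothetical `pack_t` struct that keeps only the three VENC_PACK_S fields used here; it illustrates the logic under that assumption and is not the SDK type. Unlike the debug loops above, its dump starts at the payload (after the offset) and never reads more bytes than the pack holds.

```c
#include <stdint.h>
#include <stdio.h>

/* Hypothetical stand-in for VENC_PACK_S: only the fields the save code uses. */
typedef struct {
    const uint8_t *addr;   /* like pu8Addr */
    uint32_t offset;       /* like u32Offset: bytes to skip before the payload */
    uint32_t len;          /* like u32Len: total length, including the offset bytes */
} pack_t;

/* Write every pack's payload (addr + offset, len - offset bytes) to fp.
 * Returns the total number of payload bytes written, or -1 on a short write. */
static long save_packs(FILE *fp, const pack_t *packs, uint32_t count)
{
    long total = 0;
    uint32_t i;
    for (i = 0; i < count; i++) {
        uint32_t n = packs[i].len - packs[i].offset;
        if (n > 0 && fwrite(packs[i].addr + packs[i].offset, 1, n, fp) != n)
            return -1;
        total += (long)n;
    }
    fflush(fp);
    return total;
}

/* Format up to max_bytes payload bytes of one pack as "xx " hex into out
 * (out_size must be at least 1). Never reads past the end of the pack.
 * Returns the number of bytes actually dumped. */
static uint32_t dump_pack_head(const pack_t *p, uint32_t max_bytes, char *out, size_t out_size)
{
    uint32_t avail = p->len - p->offset;
    uint32_t n = avail < max_bytes ? avail : max_bytes;
    size_t pos = 0;
    uint32_t k;
    out[0] = '\0';
    /* each entry needs 3 characters plus the terminating NUL */
    for (k = 0; k < n && pos + 4 <= out_size; k++)
        pos += (size_t)snprintf(out + pos, out_size - pos, "%02x ", p->addr[p->offset + k]);
    return k;
}
```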

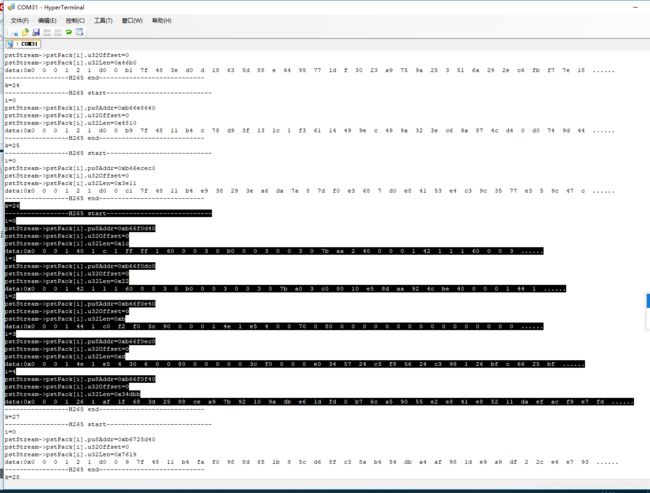

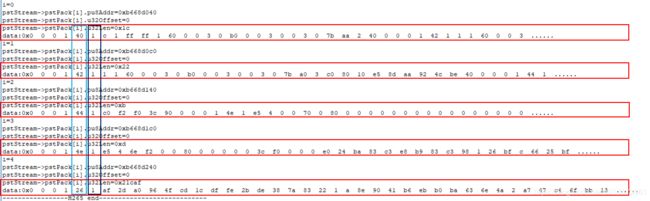

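Next, disable the second and third streams and analyze only the raw data of the first one, H.264@1080p@30fps. Running the program prints the raw H.264 stream as follows:

The first frame contains 4 packs; its data is analyzed in detail below.

For the meaning of the header bytes 67, 68, 06, and 65 in the packs, see the linked article.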

00 00 00 01 67: 0x67 & 0x1f = 0x07: SPS

00 00 00 01 68: 0x68 & 0x1f = 0x08: PPS

00 00 00 01 06: 0x06 & 0x1f = 0x06: SEI

00 00 00 01 65: 0x65 & 0x1f = 0x05: IDR slice

00 00 00 01 61: 0x61 & 0x1f = 0x01: non-IDR slice (P frame)
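The `& 0x1f` in the list above extracts nal_unit_type, the low 5 bits of the one-byte H.264 NAL unit header; the upper 3 bits hold forbidden_zero_bit and nal_ref_idc. A minimal parser, with hypothetical type and function names:

```c
#include <stdint.h>

/* Fields of the one-byte H.264 NAL unit header. */
typedef struct {
    unsigned forbidden_zero_bit; /* 1 bit, must be 0 */
    unsigned nal_ref_idc;        /* 2 bits, 0 = not used for reference */
    unsigned nal_unit_type;      /* 5 bits */
} h264_nal_hdr;

static h264_nal_hdr parse_h264_nal_hdr(uint8_t b)
{
    h264_nal_hdr h;
    h.forbidden_zero_bit = (b >> 7) & 0x01;
    h.nal_ref_idc        = (b >> 5) & 0x03;
    h.nal_unit_type      = b & 0x1f;   /* the same mask as in the list above */
    return h;
}

/* Names for the NAL unit types that appear in this stream. */
static const char *h264_nal_name(unsigned type)
{
    switch (type) {
    case 1:  return "non-IDR slice";
    case 5:  return "IDR slice";
    case 6:  return "SEI";
    case 7:  return "SPS";
    case 8:  return "PPS";
    default: return "other";
    }
}
```

Type 1 covers any non-IDR slice; it can be read as a P frame here because the stream contains no B frames.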

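As shown above, the first frame contains 4 packs: the 0x67 SPS pack, the 0x68 PPS pack, the 0x06 SEI pack, and the 0x65 IDR pack.

Every subsequent frame is a single 0x61 P-frame pack. The IDR frame is intra-coded and serves as the starting reference, so it is large (0x2607c in the figure above). P frames are predicted from previously decoded frames, a chain that leads back to the IDR frame, and store only the differences, so they are much smaller (0x2887, 0x1219, 0x14e2, and so on in the figure). This is how the video is compressed.

Because every P frame ultimately depends on the IDR frame, an error in the IDR frame corrupts decoding of all the following P frames. The encoder therefore inserts a new IDR frame after a fixed number of P frames to resynchronize the video and keep errors from propagating indefinitely.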

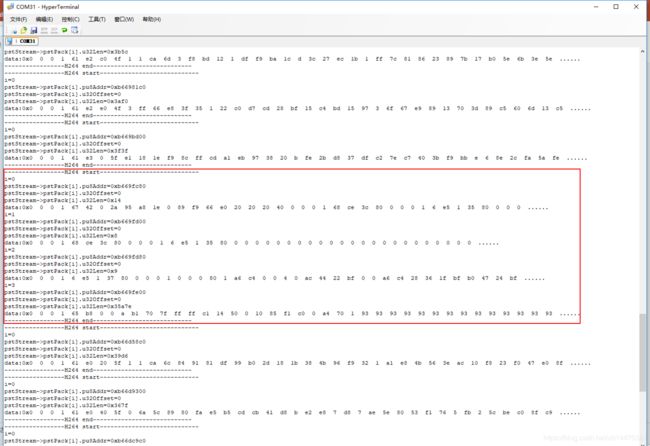

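To see where IDR frames recur, a print of each frame's index was added to the code. The output is shown below:

As the figure shows, frame 1 contains an IDR frame and frame 26 contains the next one. The two IDR frames are therefore 25 frames apart, with 24 P frames in between. This interval is the GOP length (u32Gop, which the sample sets to 25 for PAL and 30 otherwise) and is configurable.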
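With an IDR frame at fixed intervals and no B frames, the frame type follows directly from the frame index and the GOP length. The small sketch below assumes 1-based frame numbering, as in the printed counter, and that each GOP starts with an IDR frame; the function names are hypothetical.

```c
/* 1-based frame index n (n >= 1) is an IDR frame when (n - 1) is a multiple of gop. */
static int is_idr_frame(unsigned n, unsigned gop)
{
    return gop != 0 && (n - 1) % gop == 0;
}

/* P frames between two consecutive IDR frames when B frames are disabled. */
static unsigned p_frames_between_idr(unsigned gop)
{
    return gop > 0 ? gop - 1 : 0;
}
```

With gop = 25, frames 1 and 26 are IDR frames and `p_frames_between_idr(25)` gives 24.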

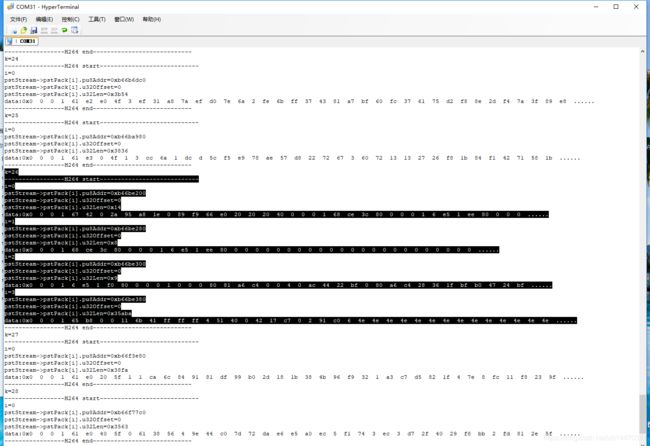

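The overall data structure is shown below:

H.265 raw stream analysis

Add the corresponding print statements to analyze the structure of the raw H.265 stream. The code is as follows: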

/******************************************************************************

* function : save H265 stream

******************************************************************************/

HI_S32 SAMPLE_COMM_VENC_SaveH265(FILE* fpH265File, VENC_STREAM_S* pstStream)

{

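HI_S32 i;

static HI_U32 u32FrameCnt = 0; //number of frames saved so far (the original used an undeclared k, which the inner HI_U8 k also shadowed)

u32FrameCnt++;

printf("k=%u\r\n", u32FrameCnt);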

printf("-----------------H265 start----------------------------\r\n");

for (i = 0; i < pstStream->u32PackCount; i++)

{

fwrite(pstStream->pstPack[i].pu8Addr + pstStream->pstPack[i].u32Offset,

pstStream->pstPack[i].u32Len - pstStream->pstPack[i].u32Offset, 1, fpH265File);

printf("i=%d\r\n",i);

printf("pstStream->pstPack[i].pu8Addr=%p\r\n", (void*)pstStream->pstPack[i].pu8Addr);

printf("pstStream->pstPack[i].u32Offset=%#x\r\n",pstStream->pstPack[i].u32Offset);

printf("pstStream->pstPack[i].u32Len=%#x\r\n",pstStream->pstPack[i].u32Len);

HI_U32 k; //dump at most the first 40 bytes of this pack without reading past u32Len

printf("data:0x");

for(k=0;k<40 && k<pstStream->pstPack[i].u32Len;k++)

printf("%x ",pstStream->pstPack[i].pu8Addr[k]);

printf("......\r\n");

fflush(fpH265File);

}

printf("-----------------H265 end----------------------------\r\n");

return HI_SUCCESS;

}

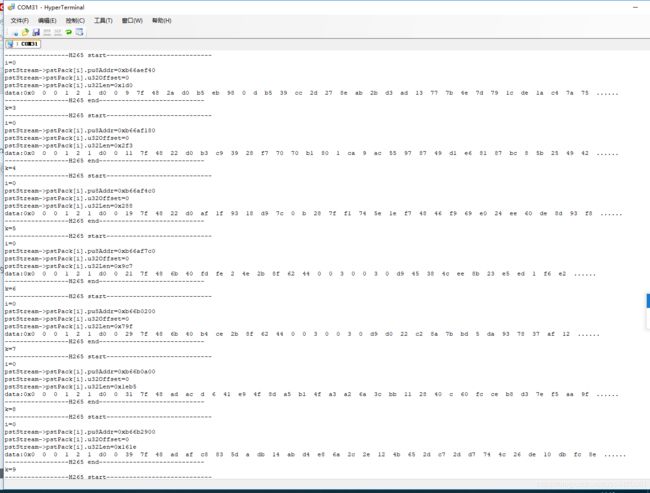

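The output of the run is shown below:

The first frame contains 5 NAL units:

The NAL unit header format is shown below; for a detailed explanation, see the linked article.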

00 00 00 01 40 01: nal_unit_type = 32, video parameter set (VPS)

00 00 00 01 42 01: nal_unit_type = 33, sequence parameter set (SPS)

00 00 00 01 44 01: nal_unit_type = 34, picture parameter set (PPS)

00 00 00 01 4E 01: nal_unit_type = 39, supplemental enhancement information (prefix SEI)

00 00 00 01 26 01: nal_unit_type = 19, coded slice segment of an IDR picture that may have associated RADL pictures (IDR_W_RADL)

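In the bytes outlined in black in the figure, the second header byte is 01 in every case: nuh_layer_id = 0 and nuh_temporal_id_plus1 = 1, so TemporalId (TID) = 0.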
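Unlike H.264, the H.265 NAL unit header is two bytes: forbidden_zero_bit (1 bit), nal_unit_type (6 bits), nuh_layer_id (6 bits), and nuh_temporal_id_plus1 (3 bits). The values listed above can be reproduced with the shifts below; the struct and function names are hypothetical.

```c
#include <stdint.h>

/* Fields of the two-byte H.265 NAL unit header. */
typedef struct {
    unsigned forbidden_zero_bit;    /* 1 bit, must be 0 */
    unsigned nal_unit_type;         /* 6 bits */
    unsigned nuh_layer_id;          /* 6 bits, split across both bytes */
    unsigned nuh_temporal_id_plus1; /* 3 bits; TemporalId = value - 1 */
} h265_nal_hdr;

static h265_nal_hdr parse_h265_nal_hdr(uint8_t b0, uint8_t b1)
{
    h265_nal_hdr h;
    h.forbidden_zero_bit    = (b0 >> 7) & 0x01;
    h.nal_unit_type         = (b0 >> 1) & 0x3f;
    h.nuh_layer_id          = ((unsigned)(b0 & 0x01) << 5) | (unsigned)(b1 >> 3);
    h.nuh_temporal_id_plus1 = b1 & 0x07;
    return h;
}
```

For example, the header 26 01 gives nal_unit_type 19, nuh_layer_id 0, and nuh_temporal_id_plus1 1.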

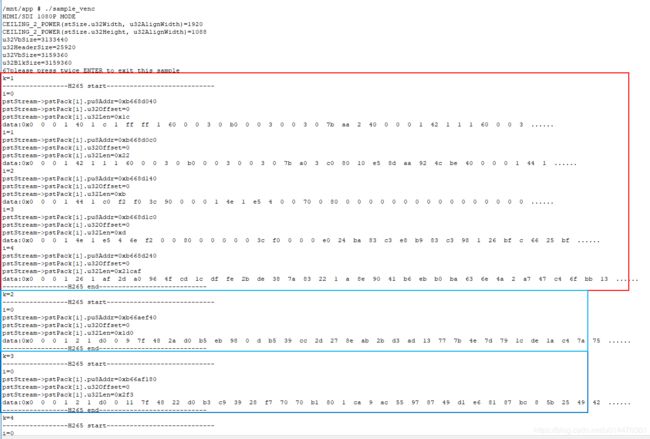

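The subsequent packs are shown below:

00 00 00 01 02 01: nal_unit_type = 1, coded slice segment of a trailing picture that is used for reference and is neither TSA nor STSA (TRAIL_R).

The TID field is also the same: nuh_temporal_id_plus1 = 1.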
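To repeat this analysis offline on a saved file such as stream_chn0.h264 or stream_chn0.h265, the NAL units can be located by scanning for Annex B start codes. Searching for 00 00 01 is enough, because it also matches the last three bytes of 00 00 00 01. The sketch below assumes the file has already been read into memory; `find_nal_starts` is a hypothetical helper. The byte at each returned offset is the first NAL header byte, which can be decoded with `& 0x1f` for H.264 or `(b >> 1) & 0x3f` for H.265.

```c
#include <stddef.h>
#include <stdint.h>

/* Scan an Annex B byte stream for start codes (00 00 01, or 00 00 00 01).
 * Stores the offset of the first byte after each start code in starts[]
 * (at most max_starts entries) and returns the total number found,
 * which may exceed max_starts. */
static size_t find_nal_starts(const uint8_t *buf, size_t len, size_t *starts, size_t max_starts)
{
    size_t count = 0;
    size_t i = 0;
    while (i + 3 <= len) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) {
            if (count < max_starts)
                starts[count] = i + 3;  /* first byte of the NAL unit header */
            count++;
            i += 3;                     /* skip past the start code */
        } else {
            i++;
        }
    }
    return count;
}
```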