import pandas as pd

import numpy as np

import matplotlib. pyplot as plt

s = pd. Series( [ 1 , 3 , 5 , np. nan, 6 , 8 ] )

s

0 1.0

1 3.0

2 5.0

3 NaN

4 6.0

5 8.0

dtype: float64

len ( s)

6

s. describe( )

count 5.000000

mean 4.600000

std 2.701851

min 1.000000

25% 3.000000

50% 5.000000

75% 6.000000

max 8.000000

dtype: float64

dates = pd. date_range( '20190101' , periods= 6 )

dates

DatetimeIndex(['2019-01-01', '2019-01-02', '2019-01-03', '2019-01-04',

'2019-01-05', '2019-01-06'],

dtype='datetime64[ns]', freq='D')

df = pd. DataFrame( np. random. randn( 6 , 4 ) , index= dates, columns= list ( 'ABCD' ) )

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-01

0.317575

0.330549

0.055375

-1.284453

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

2019-01-03

0.842686

-0.973354

0.596166

0.215889

2019-01-04

0.252868

-0.778050

-0.324255

-1.378140

2019-01-05

0.281876

1.143140

-0.781625

-1.245069

2019-01-06

-0.468258

-1.682376

1.494058

1.262588

df. describe( )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

count

6.000000

6.000000

6.000000

6.000000

mean

0.159091

-0.455118

0.209563

-0.179960

std

0.468160

1.014914

0.786212

1.293573

min

-0.468258

-1.682376

-0.781625

-1.378140

25%

-0.140934

-0.924528

-0.229347

-1.274607

50%

0.267372

-0.774334

0.136517

-0.514590

75%

0.308650

0.055257

0.501539

1.000913

max

0.842686

1.143140

1.494058

1.349425

df. shape

(6, 4)

df2 = pd. DataFrame( { 'A' : 1 . ,

'B' : pd. Timestamp( '20190101' ) ,

'C' : pd. Series( 1 , index= list ( range ( 4 ) ) , dtype= 'float32' ) ,

'D' : np. array( [ 3 ] * 4 , dtype= 'int32' ) ,

'E' : pd. Categorical( [ "test" , "train" , "test" , "train" ] ) ,

'F' : 'foo' } )

df2

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

E

F

0

1.0

2019-01-01

1.0

3

test

foo

1

1.0

2019-01-01

1.0

3

train

foo

2

1.0

2019-01-01

1.0

3

test

foo

3

1.0

2019-01-01

1.0

3

train

foo

df2. dtypes

A float64

B datetime64[ns]

C float32

D int32

E category

F object

dtype: object

df. head( )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-01

0.317575

0.330549

0.055375

-1.284453

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

2019-01-03

0.842686

-0.973354

0.596166

0.215889

2019-01-04

0.252868

-0.778050

-0.324255

-1.378140

2019-01-05

0.281876

1.143140

-0.781625

-1.245069

df. tail( )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

2019-01-03

0.842686

-0.973354

0.596166

0.215889

2019-01-04

0.252868

-0.778050

-0.324255

-1.378140

2019-01-05

0.281876

1.143140

-0.781625

-1.245069

2019-01-06

-0.468258

-1.682376

1.494058

1.262588

df. index

DatetimeIndex(['2019-01-01', '2019-01-02', '2019-01-03', '2019-01-04',

'2019-01-05', '2019-01-06'],

dtype='datetime64[ns]', freq='D')

df. columns

Index(['A', 'B', 'C', 'D'], dtype='object')

df. values

array([[ 0.31757454, 0.33054893, 0.05537508, -1.28445319],

[-0.27220143, -0.77061807, 0.21765843, 1.34942538],

[ 0.84268621, -0.97335385, 0.59616646, 0.21588867],

[ 0.25286828, -0.77804969, -0.32425479, -1.37813964],

[ 0.28187609, 1.14314031, -0.78162546, -1.24506887],

[-0.4682577 , -1.68237556, 1.49405812, 1.26258772]])

df. describe( )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

count

6.000000

6.000000

6.000000

6.000000

mean

0.159091

-0.455118

0.209563

-0.179960

std

0.468160

1.014914

0.786212

1.293573

min

-0.468258

-1.682376

-0.781625

-1.378140

25%

-0.140934

-0.924528

-0.229347

-1.274607

50%

0.267372

-0.774334

0.136517

-0.514590

75%

0.308650

0.055257

0.501539

1.000913

max

0.842686

1.143140

1.494058

1.349425

df2. describe( include= 'all' )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

E

F

count

4.0

4

4.0

4.0

4

4

unique

NaN

1

NaN

NaN

2

1

top

NaN

2019-01-01 00:00:00

NaN

NaN

train

foo

freq

NaN

4

NaN

NaN

2

4

first

NaN

2019-01-01 00:00:00

NaN

NaN

NaN

NaN

last

NaN

2019-01-01 00:00:00

NaN

NaN

NaN

NaN

mean

1.0

NaN

1.0

3.0

NaN

NaN

std

0.0

NaN

0.0

0.0

NaN

NaN

min

1.0

NaN

1.0

3.0

NaN

NaN

25%

1.0

NaN

1.0

3.0

NaN

NaN

50%

1.0

NaN

1.0

3.0

NaN

NaN

75%

1.0

NaN

1.0

3.0

NaN

NaN

max

1.0

NaN

1.0

3.0

NaN

NaN

df. T

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

2019-01-01 00:00:00

2019-01-02 00:00:00

2019-01-03 00:00:00

2019-01-04 00:00:00

2019-01-05 00:00:00

2019-01-06 00:00:00

A

0.317575

-0.272201

0.842686

0.252868

0.281876

-0.468258

B

0.330549

-0.770618

-0.973354

-0.778050

1.143140

-1.682376

C

0.055375

0.217658

0.596166

-0.324255

-0.781625

1.494058

D

-1.284453

1.349425

0.215889

-1.378140

-1.245069

1.262588

df. sort_index( axis= 1 , ascending= True )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-01

0.317575

0.330549

0.055375

-1.284453

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

2019-01-03

0.842686

-0.973354

0.596166

0.215889

2019-01-04

0.252868

-0.778050

-0.324255

-1.378140

2019-01-05

0.281876

1.143140

-0.781625

-1.245069

2019-01-06

-0.468258

-1.682376

1.494058

1.262588

df. sort_values( by= 'B' )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-06

-0.468258

-1.682376

1.494058

1.262588

2019-01-03

0.842686

-0.973354

0.596166

0.215889

2019-01-04

0.252868

-0.778050

-0.324255

-1.378140

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

2019-01-01

0.317575

0.330549

0.055375

-1.284453

2019-01-05

0.281876

1.143140

-0.781625

-1.245069

df[ 'A' ]

2019-01-01 0.317575

2019-01-02 -0.272201

2019-01-03 0.842686

2019-01-04 0.252868

2019-01-05 0.281876

2019-01-06 -0.468258

Freq: D, Name: A, dtype: float64

df[ 0 : 3 ]

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-01

0.317575

0.330549

0.055375

-1.284453

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

2019-01-03

0.842686

-0.973354

0.596166

0.215889

df[ '20190101' : '20190103' ]

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-01

0.317575

0.330549

0.055375

-1.284453

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

2019-01-03

0.842686

-0.973354

0.596166

0.215889

df. loc[ dates[ 0 ] ]

A 0.317575

B 0.330549

C 0.055375

D -1.284453

Name: 2019-01-01 00:00:00, dtype: float64

dates[ 0 ]

Timestamp('2019-01-01 00:00:00', freq='D')

df. loc[ : , [ 'A' , 'B' ] ]

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

2019-01-01

0.317575

0.330549

2019-01-02

-0.272201

-0.770618

2019-01-03

0.842686

-0.973354

2019-01-04

0.252868

-0.778050

2019-01-05

0.281876

1.143140

2019-01-06

-0.468258

-1.682376

df. loc[ '20190104' , [ 'A' , 'B' ] ] . shape

(2,)

df. loc[ dates[ 0 ] , 'A' ]

0.3175745430028141

df. at[ dates[ 0 ] , 'A' ]

0.3175745430028141

df. at[ dates[ 0 ] , 'A' ]

df. iat[ 1 , 2 ]

0.21765843113729494

df. iloc[ 3 ]

A 0.252868

B -0.778050

C -0.324255

D -1.378140

Name: 2019-01-04 00:00:00, dtype: float64

df. iloc[ 3 , 1 ]

-0.7780496857268889

df. iloc[ [ 1 , 2 , 4 ] , [ 0 , 2 ] ]

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

C

2019-01-02

-0.272201

0.217658

2019-01-03

0.842686

0.596166

2019-01-05

0.281876

-0.781625

df. iloc[ 1 : 3 , : ]

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

2019-01-03

0.842686

-0.973354

0.596166

0.215889

df. iloc[ 1 : 3 ]

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

2019-01-03

0.842686

-0.973354

0.596166

0.215889

df[ df. A> 0 ]

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-01

0.317575

0.330549

0.055375

-1.284453

2019-01-03

0.842686

-0.973354

0.596166

0.215889

2019-01-04

0.252868

-0.778050

-0.324255

-1.378140

2019-01-05

0.281876

1.143140

-0.781625

-1.245069

df[ df> 0 ]

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2019-01-01

0.317575

0.330549

0.055375

NaN

2019-01-02

NaN

NaN

0.217658

1.349425

2019-01-03

0.842686

NaN

0.596166

0.215889

2019-01-04

0.252868

NaN

NaN

NaN

2019-01-05

0.281876

1.143140

NaN

NaN

2019-01-06

NaN

NaN

1.494058

1.262588

df3 = df. copy( )

df3[ 'E' ] = [ 'one' , 'two' , 'three' , 'four' , 'three' , 'one' ]

df3

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

E

2019-01-01

0.317575

0.330549

0.055375

-1.284453

one

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

two

2019-01-03

0.842686

-0.973354

0.596166

0.215889

three

2019-01-04

0.252868

-0.778050

-0.324255

-1.378140

four

2019-01-05

0.281876

1.143140

-0.781625

-1.245069

three

2019-01-06

-0.468258

-1.682376

1.494058

1.262588

one

df3[ df3[ 'E' ] . isin( [ 'one' , 'two' ] ) ]

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

E

2019-01-01

0.317575

0.330549

0.055375

-1.284453

one

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

two

2019-01-06

-0.468258

-1.682376

1.494058

1.262588

one

s1= pd. Series( [ 1 , 2 , 3 , 4 , 5 , 6 ] , index= pd. date_range( '20190102' , periods= 6 ) )

s1

2019-01-02 1

2019-01-03 2

2019-01-04 3

2019-01-05 4

2019-01-06 5

2019-01-07 6

Freq: D, dtype: int64

df[ 'F' ] = s1

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

F

2019-01-01

0.317575

0.330549

0.055375

-1.284453

NaN

2019-01-02

-0.272201

-0.770618

0.217658

1.349425

1.0

2019-01-03

0.842686

-0.973354

0.596166

0.215889

2.0

2019-01-04

0.252868

-0.778050

-0.324255

-1.378140

3.0

2019-01-05

0.281876

1.143140

-0.781625

-1.245069

4.0

2019-01-06

-0.468258

-1.682376

1.494058

1.262588

5.0

df. at[ dates[ 0 ] , 'A' ] = 0

df. iat[ 0 , 1 ] = 0

df. loc[ : , 'D' ] = np. array( [ 5 ] * len ( df) )

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

F

2019-01-01

0.000000

0.000000

0.055375

5

NaN

2019-01-02

-0.272201

-0.770618

0.217658

5

1.0

2019-01-03

0.842686

-0.973354

0.596166

5

2.0

2019-01-04

0.252868

-0.778050

-0.324255

5

3.0

2019-01-05

0.281876

1.143140

-0.781625

5

4.0

2019-01-06

-0.468258

-1.682376

1.494058

5

5.0

df4 = df. copy( )

df4[ df4> 0 ] = - df4

df4

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

F

2019-01-01

0.000000

0.000000

-0.055375

-5

NaN

2019-01-02

-0.272201

-0.770618

-0.217658

-5

-1.0

2019-01-03

-0.842686

-0.973354

-0.596166

-5

-2.0

2019-01-04

-0.252868

-0.778050

-0.324255

-5

-3.0

2019-01-05

-0.281876

-1.143140

-0.781625

-5

-4.0

2019-01-06

-0.468258

-1.682376

-1.494058

-5

-5.0

df1 = df. reindex( index= dates[ 0 : 4 ] , columns= list ( df. columns) + [ 'E' ] )

df1. loc[ dates[ 0 ] : dates[ 1 ] , 'E' ] = 1

df1

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

F

E

2019-01-01

0.000000

0.000000

0.055375

5

NaN

1.0

2019-01-02

-0.272201

-0.770618

0.217658

5

1.0

1.0

2019-01-03

0.842686

-0.973354

0.596166

5

2.0

NaN

2019-01-04

0.252868

-0.778050

-0.324255

5

3.0

NaN

df1. dropna( how= 'any' )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

F

E

2019-01-02

-0.272201

-0.770618

0.217658

5

1.0

1.0

df1. fillna( value= 5 )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

F

E

2019-01-01

0.000000

0.000000

0.055375

5

5.0

1.0

2019-01-02

-0.272201

-0.770618

0.217658

5

1.0

1.0

2019-01-03

0.842686

-0.973354

0.596166

5

2.0

5.0

2019-01-04

0.252868

-0.778050

-0.324255

5

3.0

5.0

pd. isna( df1)

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

F

E

2019-01-01

False

False

False

False

True

False

2019-01-02

False

False

False

False

False

False

2019-01-03

False

False

False

False

False

True

2019-01-04

False

False

False

False

False

True

df. mean( )

A 0.106162

B -0.510209

C 0.209563

D 5.000000

F 3.000000

dtype: float64

df. mean( 1 )

2019-01-01 1.263844

2019-01-02 1.034968

2019-01-03 1.493100

2019-01-04 1.430113

2019-01-05 1.928678

2019-01-06 1.868685

Freq: D, dtype: float64

s = pd. Series( [ 1 , 3 , 5 , np. nan, 6 , 8 ] , index= dates) . shift( 2 )

s

2019-01-01 NaN

2019-01-02 NaN

2019-01-03 1.0

2019-01-04 3.0

2019-01-05 5.0

2019-01-06 NaN

Freq: D, dtype: float64

df. sub( s, axis= 'index' )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

F

2019-01-01

NaN

NaN

NaN

NaN

NaN

2019-01-02

NaN

NaN

NaN

NaN

NaN

2019-01-03

-0.157314

-1.973354

-0.403834

4.0

1.0

2019-01-04

-2.747132

-3.778050

-3.324255

2.0

0.0

2019-01-05

-4.718124

-3.856860

-5.781625

0.0

-1.0

2019-01-06

NaN

NaN

NaN

NaN

NaN

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

F

2019-01-01

0.000000

0.000000

0.055375

5

NaN

2019-01-02

-0.272201

-0.770618

0.217658

5

1.0

2019-01-03

0.842686

-0.973354

0.596166

5

2.0

2019-01-04

0.252868

-0.778050

-0.324255

5

3.0

2019-01-05

0.281876

1.143140

-0.781625

5

4.0

2019-01-06

-0.468258

-1.682376

1.494058

5

5.0

df. apply ( np. cumsum)

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

F

2019-01-01

0.000000

0.000000

0.055375

5

NaN

2019-01-02

-0.272201

-0.770618

0.273034

10

1.0

2019-01-03

0.570485

-1.743972

0.869200

15

3.0

2019-01-04

0.823353

-2.522022

0.544945

20

6.0

2019-01-05

1.105229

-1.378881

-0.236680

25

10.0

2019-01-06

0.636971

-3.061257

1.257378

30

15.0

df. apply ( lambda x: x. max ( ) - x. min ( ) )

A 1.310944

B 2.825516

C 2.275684

D 0.000000

F 4.000000

dtype: float64

df[ 'F' ] . value_counts( )

5.0 1

4.0 1

3.0 1

2.0 1

1.0 1

Name: F, dtype: int64

s = pd. Series( np. random. randint( 0 , 7 , size= 10 ) )

s

0 1

1 1

2 1

3 2

4 3

5 2

6 6

7 0

8 4

9 6

dtype: int32

s. dtype

dtype('int32')

s. shape

(10,)

s. value_counts( )

1 3

6 2

2 2

4 1

3 1

0 1

dtype: int64

s = pd. Series( [ 'A' , 'B' , 'C' , 'Aaba' , 'Baca' , np. nan, 'CABA' , 'dog' , 'cat' ] )

s. dtype

dtype('O')

s. str

s. str . lower( )

0 a

1 b

2 c

3 aaba

4 baca

5 NaN

6 caba

7 dog

8 cat

dtype: object

df = pd. DataFrame( np. random. randn( 10 , 4 ) )

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

0

1

2

3

0

-0.936960

-0.210650

1.887069

0.128005

1

0.657660

0.254801

-0.092190

-1.957160

2

-0.920141

-2.259964

0.083965

0.371865

3

-0.755403

0.633426

0.090949

-0.626964

4

0.170052

1.164537

-1.193706

1.391785

5

0.084560

-1.513814

0.069032

0.099851

6

-0.683663

0.031873

-0.062998

0.523253

7

-0.926594

0.125286

-1.894089

-0.449402

8

0.610722

0.329156

0.025149

-2.673445

9

0.336673

1.205792

-1.346179

0.214389

pieces = [ df[ : 3 ] , df[ 3 : 7 ] , df[ 7 : ] ]

pieces

[ 0 1 2 3

0 -0.936960 -0.210650 1.887069 0.128005

1 0.657660 0.254801 -0.092190 -1.957160

2 -0.920141 -2.259964 0.083965 0.371865,

0 1 2 3

3 -0.755403 0.633426 0.090949 -0.626964

4 0.170052 1.164537 -1.193706 1.391785

5 0.084560 -1.513814 0.069032 0.099851

6 -0.683663 0.031873 -0.062998 0.523253,

0 1 2 3

7 -0.926594 0.125286 -1.894089 -0.449402

8 0.610722 0.329156 0.025149 -2.673445

9 0.336673 1.205792 -1.346179 0.214389]

pd. concat( pieces)

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

0

1

2

3

0

-0.936960

-0.210650

1.887069

0.128005

1

0.657660

0.254801

-0.092190

-1.957160

2

-0.920141

-2.259964

0.083965

0.371865

3

-0.755403

0.633426

0.090949

-0.626964

4

0.170052

1.164537

-1.193706

1.391785

5

0.084560

-1.513814

0.069032

0.099851

6

-0.683663

0.031873

-0.062998

0.523253

7

-0.926594

0.125286

-1.894089

-0.449402

8

0.610722

0.329156

0.025149

-2.673445

9

0.336673

1.205792

-1.346179

0.214389

concat

axis=1列合并;axis=0行合并(default)

join=‘inner’只返回合并的共同对象;join=‘outer’返回合并后的所有列

ignore_index=True会自动连续生成索引值;ignore_index=False则不改变原有的索引值

verify_integrity会检查当前合并是否重复索引

keys会导致生成多维数组

name会重新命名列名。

pd. concat( pieces, axis= 1 )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

0

1

2

3

0

1

2

3

0

1

2

3

0

-0.936960

-0.210650

1.887069

0.128005

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

1

0.657660

0.254801

-0.092190

-1.957160

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

2

-0.920141

-2.259964

0.083965

0.371865

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

3

NaN

NaN

NaN

NaN

-0.755403

0.633426

0.090949

-0.626964

NaN

NaN

NaN

NaN

4

NaN

NaN

NaN

NaN

0.170052

1.164537

-1.193706

1.391785

NaN

NaN

NaN

NaN

5

NaN

NaN

NaN

NaN

0.084560

-1.513814

0.069032

0.099851

NaN

NaN

NaN

NaN

6

NaN

NaN

NaN

NaN

-0.683663

0.031873

-0.062998

0.523253

NaN

NaN

NaN

NaN

7

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

-0.926594

0.125286

-1.894089

-0.449402

8

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

0.610722

0.329156

0.025149

-2.673445

9

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

0.336673

1.205792

-1.346179

0.214389

pd. concat( pieces, join= 'outer' , axis= 1 )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

0

1

2

3

0

1

2

3

0

1

2

3

0

-0.936960

-0.210650

1.887069

0.128005

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

1

0.657660

0.254801

-0.092190

-1.957160

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

2

-0.920141

-2.259964

0.083965

0.371865

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

3

NaN

NaN

NaN

NaN

-0.755403

0.633426

0.090949

-0.626964

NaN

NaN

NaN

NaN

4

NaN

NaN

NaN

NaN

0.170052

1.164537

-1.193706

1.391785

NaN

NaN

NaN

NaN

5

NaN

NaN

NaN

NaN

0.084560

-1.513814

0.069032

0.099851

NaN

NaN

NaN

NaN

6

NaN

NaN

NaN

NaN

-0.683663

0.031873

-0.062998

0.523253

NaN

NaN

NaN

NaN

7

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

-0.926594

0.125286

-1.894089

-0.449402

8

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

0.610722

0.329156

0.025149

-2.673445

9

NaN

NaN

NaN

NaN

NaN

NaN

NaN

NaN

0.336673

1.205792

-1.346179

0.214389

left = pd. DataFrame( { 'key' : [ 'foo' , 'bar' ] , 'lval' : [ 1 , 2 ] } )

right = pd. DataFrame( { 'key' : [ 'foo' , 'bar' ] , 'rval' : [ 4 , 5 ] } )

left

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

right

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

pd. merge( left, right, on= 'key' )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

key

lval

rval

0

foo

1

4

1

bar

2

5

left = pd. DataFrame( { 'key' : [ 'foo' , 'foo' ] , 'lval' : [ 1 , 2 ] } )

right = pd. DataFrame( { 'key' : [ 'foo' , 'foo' ] , 'rval' : [ 4 , 5 ] } )

left

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

right

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

pd. merge( left, right, on= 'key' )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

key

lval

rval

0

foo

1

4

1

foo

1

5

2

foo

2

4

3

foo

2

5

df = pd. DataFrame( np. random. randn( 8 , 4 ) , columns= list ( 'ABCD' ) )

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

0

0.862756

-1.436692

0.367396

1.033803

1

-0.732357

-0.350199

-0.116083

-2.435210

2

0.316582

0.468616

0.433001

-0.443120

3

-0.189939

-2.437137

0.126893

-2.273711

4

0.913514

-0.752727

-1.651140

1.156839

5

-0.314581

1.296585

0.579130

-0.871556

6

0.361473

0.687854

-1.044602

0.233138

7

0.045199

2.176608

-0.258569

-1.018576

s = df. iloc[ 3 ]

df. append( s, ignore_index= False )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

0

0.862756

-1.436692

0.367396

1.033803

1

-0.732357

-0.350199

-0.116083

-2.435210

2

0.316582

0.468616

0.433001

-0.443120

3

-0.189939

-2.437137

0.126893

-2.273711

4

0.913514

-0.752727

-1.651140

1.156839

5

-0.314581

1.296585

0.579130

-0.871556

6

0.361473

0.687854

-1.044602

0.233138

7

0.045199

2.176608

-0.258569

-1.018576

3

-0.189939

-2.437137

0.126893

-2.273711

“分组依据”是指涉及以下一个或多个步骤的过程:

df = pd. DataFrame( { 'A' : [ 'foo' , 'bar' , 'foo' , 'bar' ,

. . . . : 'foo' , 'bar' , 'foo' , 'foo' ] ,

. . . . : 'B' : [ 'one' , 'one' , 'two' , 'three' ,

. . . . : 'two' , 'two' , 'one' , 'three' ] ,

. . . . : 'C' : np. random. randn( 8 ) ,

. . . . : 'D' : np. random. randn( 8 ) } )

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

0

foo

one

0.982988

-0.386029

1

bar

one

1.632482

-0.327520

2

foo

two

0.149619

-0.138297

3

bar

three

-1.480397

1.105690

4

foo

two

0.647044

-1.097276

5

bar

two

-0.675596

0.250176

6

foo

one

0.437309

1.031742

7

foo

three

0.434659

1.197695

df. groupby( 'A' ) . sum ( )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

C

D

A

bar

-0.523512

1.028346

foo

2.651618

0.607834

df. groupby( 'A' ) . max ( )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

B

C

D

A

bar

two

1.632482

1.105690

foo

two

0.982988

1.197695

df. groupby( [ 'A' , 'B' ] ) . sum ( )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

C

D

A

B

bar

one

1.632482

-0.327520

three

-1.480397

1.105690

two

-0.675596

0.250176

foo

one

1.420297

0.645713

three

0.434659

1.197695

two

0.796663

-1.235574

tuples = list ( zip ( * [ [ 'bar' , 'bar' , 'baz' , 'baz' ,

. . . . : 'foo' , 'foo' , 'qux' , 'qux' ] ,

. . . . : [ 'one' , 'two' , 'one' , 'two' ,

. . . . : 'one' , 'two' , 'one' , 'two' ] ] ) )

tuples

[('bar', 'one'),

('bar', 'two'),

('baz', 'one'),

('baz', 'two'),

('foo', 'one'),

('foo', 'two'),

('qux', 'one'),

('qux', 'two')]

zip() 函数用于将可迭代的对象作为参数,将对象中对应的元素打包成一个个元组,然后返回由这些元组组成的列表,在python3中用于减少内存

index = pd. MultiIndex. from_tuples( tuples, names= [ 'first' , 'second' ] )

index

MultiIndex(levels=[['bar', 'baz', 'foo', 'qux'], ['one', 'two']],

labels=[[0, 0, 1, 1, 2, 2, 3, 3], [0, 1, 0, 1, 0, 1, 0, 1]],

names=['first', 'second'])

df = pd. DataFrame( np. random. randn( 8 , 2 ) , index= index, columns= [ 'A' , 'B' ] )

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

first

second

bar

one

-0.752364

0.982241

two

-0.626690

1.358099

baz

one

0.342360

-0.618870

two

-0.399448

-0.500175

foo

one

-0.746328

-0.244752

two

1.556458

-1.340098

qux

one

-0.569393

-0.340625

two

0.513714

0.151477

stacked = df. stack( )

stacked

first second

bar one A -0.752364

B 0.982241

two A -0.626690

B 1.358099

baz one A 0.342360

B -0.618870

two A -0.399448

B -0.500175

foo one A -0.746328

B -0.244752

two A 1.556458

B -1.340098

qux one A -0.569393

B -0.340625

two A 0.513714

B 0.151477

dtype: float64

stacked. unstack( )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

first

second

bar

one

-0.752364

0.982241

two

-0.626690

1.358099

baz

one

0.342360

-0.618870

two

-0.399448

-0.500175

foo

one

-0.746328

-0.244752

two

1.556458

-1.340098

qux

one

-0.569393

-0.340625

two

0.513714

0.151477

stacked. unstack( 1 )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

second

one

two

first

bar

A

-0.752364

-0.626690

B

0.982241

1.358099

baz

A

0.342360

-0.399448

B

-0.618870

-0.500175

foo

A

-0.746328

1.556458

B

-0.244752

-1.340098

qux

A

-0.569393

0.513714

B

-0.340625

0.151477

stacked. unstack( 0 )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

first

bar

baz

foo

qux

second

one

A

-0.752364

0.342360

-0.746328

-0.569393

B

0.982241

-0.618870

-0.244752

-0.340625

two

A

-0.626690

-0.399448

1.556458

0.513714

B

1.358099

-0.500175

-1.340098

0.151477

df = pd. DataFrame( { 'A' : [ 'one' , 'one' , 'two' , 'three' ] * 3 ,

. . . . . : 'B' : [ 'A' , 'B' , 'C' ] * 4 ,

. . . . . : 'C' : [ 'foo' , 'foo' , 'foo' , 'bar' , 'bar' , 'bar' ] * 2 ,

. . . . . : 'D' : np. random. randn( 12 ) ,

. . . . . : 'E' : np. random. randn( 12 ) } )

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

E

0

one

A

foo

0.346892

-0.615216

1

one

B

foo

0.808681

0.161598

2

two

C

foo

-1.324783

-0.088082

3

three

A

bar

-0.227795

-0.180022

4

one

B

bar

1.130028

-0.361439

5

one

C

bar

0.510629

-1.466063

6

two

A

foo

0.379503

0.008279

7

three

B

foo

0.921087

-0.148614

8

one

C

foo

0.332222

-0.127428

9

one

A

bar

-0.784876

-0.736117

10

two

B

bar

-0.793957

1.705022

11

three

C

bar

-0.898485

1.038166

pd. pivot_table( df, values= 'D' , index= [ 'A' , 'B' ] , columns= [ 'C' ] )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

C

bar

foo

A

B

one

A

-0.784876

0.346892

B

1.130028

0.808681

C

0.510629

0.332222

three

A

-0.227795

NaN

B

NaN

0.921087

C

-0.898485

NaN

two

A

NaN

0.379503

B

-0.793957

NaN

C

NaN

-1.324783

rng = pd. date_range( '1/1/2012' , periods= 100 , freq= 'S' )

rng

DatetimeIndex(['2012-01-01 00:00:00', '2012-01-01 00:00:01',

'2012-01-01 00:00:02', '2012-01-01 00:00:03',

'2012-01-01 00:00:04', '2012-01-01 00:00:05',

'2012-01-01 00:00:06', '2012-01-01 00:00:07',

'2012-01-01 00:00:08', '2012-01-01 00:00:09',

'2012-01-01 00:00:10', '2012-01-01 00:00:11',

'2012-01-01 00:00:12', '2012-01-01 00:00:13',

'2012-01-01 00:00:14', '2012-01-01 00:00:15',

'2012-01-01 00:00:16', '2012-01-01 00:00:17',

'2012-01-01 00:00:18', '2012-01-01 00:00:19',

'2012-01-01 00:00:20', '2012-01-01 00:00:21',

'2012-01-01 00:00:22', '2012-01-01 00:00:23',

'2012-01-01 00:00:24', '2012-01-01 00:00:25',

'2012-01-01 00:00:26', '2012-01-01 00:00:27',

'2012-01-01 00:00:28', '2012-01-01 00:00:29',

'2012-01-01 00:00:30', '2012-01-01 00:00:31',

'2012-01-01 00:00:32', '2012-01-01 00:00:33',

'2012-01-01 00:00:34', '2012-01-01 00:00:35',

'2012-01-01 00:00:36', '2012-01-01 00:00:37',

'2012-01-01 00:00:38', '2012-01-01 00:00:39',

'2012-01-01 00:00:40', '2012-01-01 00:00:41',

'2012-01-01 00:00:42', '2012-01-01 00:00:43',

'2012-01-01 00:00:44', '2012-01-01 00:00:45',

'2012-01-01 00:00:46', '2012-01-01 00:00:47',

'2012-01-01 00:00:48', '2012-01-01 00:00:49',

'2012-01-01 00:00:50', '2012-01-01 00:00:51',

'2012-01-01 00:00:52', '2012-01-01 00:00:53',

'2012-01-01 00:00:54', '2012-01-01 00:00:55',

'2012-01-01 00:00:56', '2012-01-01 00:00:57',

'2012-01-01 00:00:58', '2012-01-01 00:00:59',

'2012-01-01 00:01:00', '2012-01-01 00:01:01',

'2012-01-01 00:01:02', '2012-01-01 00:01:03',

'2012-01-01 00:01:04', '2012-01-01 00:01:05',

'2012-01-01 00:01:06', '2012-01-01 00:01:07',

'2012-01-01 00:01:08', '2012-01-01 00:01:09',

'2012-01-01 00:01:10', '2012-01-01 00:01:11',

'2012-01-01 00:01:12', '2012-01-01 00:01:13',

'2012-01-01 00:01:14', '2012-01-01 00:01:15',

'2012-01-01 00:01:16', '2012-01-01 00:01:17',

'2012-01-01 00:01:18', '2012-01-01 00:01:19',

'2012-01-01 00:01:20', '2012-01-01 00:01:21',

'2012-01-01 00:01:22', '2012-01-01 00:01:23',

'2012-01-01 00:01:24', '2012-01-01 00:01:25',

'2012-01-01 00:01:26', '2012-01-01 00:01:27',

'2012-01-01 00:01:28', '2012-01-01 00:01:29',

'2012-01-01 00:01:30', '2012-01-01 00:01:31',

'2012-01-01 00:01:32', '2012-01-01 00:01:33',

'2012-01-01 00:01:34', '2012-01-01 00:01:35',

'2012-01-01 00:01:36', '2012-01-01 00:01:37',

'2012-01-01 00:01:38', '2012-01-01 00:01:39'],

dtype='datetime64[ns]', freq='S')

ts = pd. Series( np. random. randint( 0 , 500 , len ( rng) ) , index= rng)

ts

2012-01-01 00:00:00 225

2012-01-01 00:00:01 354

2012-01-01 00:00:02 438

2012-01-01 00:00:03 440

2012-01-01 00:00:04 9

2012-01-01 00:00:05 179

2012-01-01 00:00:06 396

2012-01-01 00:00:07 200

2012-01-01 00:00:08 413

2012-01-01 00:00:09 490

2012-01-01 00:00:10 37

2012-01-01 00:00:11 57

2012-01-01 00:00:12 33

2012-01-01 00:00:13 388

2012-01-01 00:00:14 44

2012-01-01 00:00:15 95

2012-01-01 00:00:16 8

2012-01-01 00:00:17 1

2012-01-01 00:00:18 307

2012-01-01 00:00:19 332

2012-01-01 00:00:20 20

2012-01-01 00:00:21 84

2012-01-01 00:00:22 309

2012-01-01 00:00:23 308

2012-01-01 00:00:24 67

2012-01-01 00:00:25 245

2012-01-01 00:00:26 180

2012-01-01 00:00:27 9

2012-01-01 00:00:28 126

2012-01-01 00:00:29 232

...

2012-01-01 00:01:10 409

2012-01-01 00:01:11 355

2012-01-01 00:01:12 70

2012-01-01 00:01:13 266

2012-01-01 00:01:14 118

2012-01-01 00:01:15 325

2012-01-01 00:01:16 214

2012-01-01 00:01:17 3

2012-01-01 00:01:18 143

2012-01-01 00:01:19 28

2012-01-01 00:01:20 56

2012-01-01 00:01:21 120

2012-01-01 00:01:22 99

2012-01-01 00:01:23 102

2012-01-01 00:01:24 71

2012-01-01 00:01:25 464

2012-01-01 00:01:26 489

2012-01-01 00:01:27 404

2012-01-01 00:01:28 356

2012-01-01 00:01:29 197

2012-01-01 00:01:30 390

2012-01-01 00:01:31 345

2012-01-01 00:01:32 115

2012-01-01 00:01:33 377

2012-01-01 00:01:34 388

2012-01-01 00:01:35 39

2012-01-01 00:01:36 406

2012-01-01 00:01:37 408

2012-01-01 00:01:38 410

2012-01-01 00:01:39 256

Freq: S, Length: 100, dtype: int32

rng = pd. date_range( '3/6/2012 00:00' , periods= 5 , freq= 'D' )

rng

DatetimeIndex(['2012-03-06', '2012-03-07', '2012-03-08', '2012-03-09',

'2012-03-10'],

dtype='datetime64[ns]', freq='D')

ts_utc = ts. tz_localize( 'UTC' )

ts_utc

2012-01-01 00:00:00+00:00 225

2012-01-01 00:00:01+00:00 354

2012-01-01 00:00:02+00:00 438

2012-01-01 00:00:03+00:00 440

2012-01-01 00:00:04+00:00 9

2012-01-01 00:00:05+00:00 179

2012-01-01 00:00:06+00:00 396

2012-01-01 00:00:07+00:00 200

2012-01-01 00:00:08+00:00 413

2012-01-01 00:00:09+00:00 490

2012-01-01 00:00:10+00:00 37

2012-01-01 00:00:11+00:00 57

2012-01-01 00:00:12+00:00 33

2012-01-01 00:00:13+00:00 388

2012-01-01 00:00:14+00:00 44

2012-01-01 00:00:15+00:00 95

2012-01-01 00:00:16+00:00 8

2012-01-01 00:00:17+00:00 1

2012-01-01 00:00:18+00:00 307

2012-01-01 00:00:19+00:00 332

2012-01-01 00:00:20+00:00 20

2012-01-01 00:00:21+00:00 84

2012-01-01 00:00:22+00:00 309

2012-01-01 00:00:23+00:00 308

2012-01-01 00:00:24+00:00 67

2012-01-01 00:00:25+00:00 245

2012-01-01 00:00:26+00:00 180

2012-01-01 00:00:27+00:00 9

2012-01-01 00:00:28+00:00 126

2012-01-01 00:00:29+00:00 232

...

2012-01-01 00:01:10+00:00 409

2012-01-01 00:01:11+00:00 355

2012-01-01 00:01:12+00:00 70

2012-01-01 00:01:13+00:00 266

2012-01-01 00:01:14+00:00 118

2012-01-01 00:01:15+00:00 325

2012-01-01 00:01:16+00:00 214

2012-01-01 00:01:17+00:00 3

2012-01-01 00:01:18+00:00 143

2012-01-01 00:01:19+00:00 28

2012-01-01 00:01:20+00:00 56

2012-01-01 00:01:21+00:00 120

2012-01-01 00:01:22+00:00 99

2012-01-01 00:01:23+00:00 102

2012-01-01 00:01:24+00:00 71

2012-01-01 00:01:25+00:00 464

2012-01-01 00:01:26+00:00 489

2012-01-01 00:01:27+00:00 404

2012-01-01 00:01:28+00:00 356

2012-01-01 00:01:29+00:00 197

2012-01-01 00:01:30+00:00 390

2012-01-01 00:01:31+00:00 345

2012-01-01 00:01:32+00:00 115

2012-01-01 00:01:33+00:00 377

2012-01-01 00:01:34+00:00 388

2012-01-01 00:01:35+00:00 39

2012-01-01 00:01:36+00:00 406

2012-01-01 00:01:37+00:00 408

2012-01-01 00:01:38+00:00 410

2012-01-01 00:01:39+00:00 256

Freq: S, Length: 100, dtype: int32

ts_utc. tz_convert( 'US/Eastern' )

2011-12-31 19:00:00-05:00 225

2011-12-31 19:00:01-05:00 354

2011-12-31 19:00:02-05:00 438

2011-12-31 19:00:03-05:00 440

2011-12-31 19:00:04-05:00 9

2011-12-31 19:00:05-05:00 179

2011-12-31 19:00:06-05:00 396

2011-12-31 19:00:07-05:00 200

2011-12-31 19:00:08-05:00 413

2011-12-31 19:00:09-05:00 490

2011-12-31 19:00:10-05:00 37

2011-12-31 19:00:11-05:00 57

2011-12-31 19:00:12-05:00 33

2011-12-31 19:00:13-05:00 388

2011-12-31 19:00:14-05:00 44

2011-12-31 19:00:15-05:00 95

2011-12-31 19:00:16-05:00 8

2011-12-31 19:00:17-05:00 1

2011-12-31 19:00:18-05:00 307

2011-12-31 19:00:19-05:00 332

2011-12-31 19:00:20-05:00 20

2011-12-31 19:00:21-05:00 84

2011-12-31 19:00:22-05:00 309

2011-12-31 19:00:23-05:00 308

2011-12-31 19:00:24-05:00 67

2011-12-31 19:00:25-05:00 245

2011-12-31 19:00:26-05:00 180

2011-12-31 19:00:27-05:00 9

2011-12-31 19:00:28-05:00 126

2011-12-31 19:00:29-05:00 232

...

2011-12-31 19:01:10-05:00 409

2011-12-31 19:01:11-05:00 355

2011-12-31 19:01:12-05:00 70

2011-12-31 19:01:13-05:00 266

2011-12-31 19:01:14-05:00 118

2011-12-31 19:01:15-05:00 325

2011-12-31 19:01:16-05:00 214

2011-12-31 19:01:17-05:00 3

2011-12-31 19:01:18-05:00 143

2011-12-31 19:01:19-05:00 28

2011-12-31 19:01:20-05:00 56

2011-12-31 19:01:21-05:00 120

2011-12-31 19:01:22-05:00 99

2011-12-31 19:01:23-05:00 102

2011-12-31 19:01:24-05:00 71

2011-12-31 19:01:25-05:00 464

2011-12-31 19:01:26-05:00 489

2011-12-31 19:01:27-05:00 404

2011-12-31 19:01:28-05:00 356

2011-12-31 19:01:29-05:00 197

2011-12-31 19:01:30-05:00 390

2011-12-31 19:01:31-05:00 345

2011-12-31 19:01:32-05:00 115

2011-12-31 19:01:33-05:00 377

2011-12-31 19:01:34-05:00 388

2011-12-31 19:01:35-05:00 39

2011-12-31 19:01:36-05:00 406

2011-12-31 19:01:37-05:00 408

2011-12-31 19:01:38-05:00 410

2011-12-31 19:01:39-05:00 256

Freq: S, Length: 100, dtype: int32

prng = pd. period_range( '1990Q1' , '2000Q4' , freq= 'Q-NOV' )

prng

PeriodIndex(['1990Q1', '1990Q2', '1990Q3', '1990Q4', '1991Q1', '1991Q2',

'1991Q3', '1991Q4', '1992Q1', '1992Q2', '1992Q3', '1992Q4',

'1993Q1', '1993Q2', '1993Q3', '1993Q4', '1994Q1', '1994Q2',

'1994Q3', '1994Q4', '1995Q1', '1995Q2', '1995Q3', '1995Q4',

'1996Q1', '1996Q2', '1996Q3', '1996Q4', '1997Q1', '1997Q2',

'1997Q3', '1997Q4', '1998Q1', '1998Q2', '1998Q3', '1998Q4',

'1999Q1', '1999Q2', '1999Q3', '1999Q4', '2000Q1', '2000Q2',

'2000Q3', '2000Q4'],

dtype='period[Q-NOV]', freq='Q-NOV')

ts = pd. Series( np. random. randn( len ( prng) ) , prng)

ts

1990Q1 1.796304

1990Q2 0.659808

1990Q3 -0.647755

1990Q4 1.846486

1991Q1 0.488348

1991Q2 1.830351

1991Q3 -1.658804

1991Q4 0.585780

1992Q1 -0.596026

1992Q2 -1.900346

1992Q3 -0.066638

1992Q4 0.419037

1993Q1 0.055711

1993Q2 -2.103900

1993Q3 0.229944

1993Q4 0.317348

1994Q1 -0.776638

1994Q2 -0.241438

1994Q3 -0.587104

1994Q4 0.825772

1995Q1 2.444721

1995Q2 0.803142

1995Q3 0.494378

1995Q4 -0.984900

1996Q1 -0.431641

1996Q2 0.766768

1996Q3 -1.176313

1996Q4 0.339700

1997Q1 -1.523029

1997Q2 0.512173

1997Q3 1.359914

1997Q4 0.564407

1998Q1 0.354859

1998Q2 -0.493561

1998Q3 0.514986

1998Q4 -0.156142

1999Q1 1.047135

1999Q2 0.648944

1999Q3 -1.581937

1999Q4 0.261181

2000Q1 -0.809498

2000Q2 1.102175

2000Q3 0.424905

2000Q4 -0.775245

Freq: Q-NOV, dtype: float64

ts. index = ( prng. asfreq( 'M' , 'e' ) + 1 ) . asfreq( 'H' , 's' ) + 9

ts. head( )

1990-03-01 09:00 1.796304

1990-06-01 09:00 0.659808

1990-09-01 09:00 -0.647755

1990-12-01 09:00 1.846486

1991-03-01 09:00 0.488348

Freq: H, dtype: float64

df = pd. DataFrame( { "id" : [ 1 , 2 , 3 , 4 , 5 , 6 ] , "raw_grade" : [ 'a' , 'b' , 'b' , 'a' , 'a' , 'e' ] } )

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

id

raw_grade

0

1

a

1

2

b

2

3

b

3

4

a

4

5

a

5

6

e

df[ "grade" ] = df[ "raw_grade" ] . astype( "category" )

df[ "grade" ]

0 a

1 b

2 b

3 a

4 a

5 e

Name: grade, dtype: category

Categories (3, object): [a, b, e]

df[ "grade" ] . cat. categories = [ "very good" , "good" , "very bad" ]

df[ "grade" ]

0 very good

1 good

2 good

3 very good

4 very good

5 very bad

Name: grade, dtype: category

Categories (3, object): [very good, good, very bad]

df[ "grade" ] = df[ "grade" ] . cat. set_categories( [ "very bad" , "bad" , "medium" , "good" , "very good" ] )

df[ "grade" ]

0 very good

1 good

2 good

3 very good

4 very good

5 very bad

Name: grade, dtype: category

Categories (5, object): [very bad, bad, medium, good, very good]

df. sort_values( by= "grade" )

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

id

raw_grade

grade

5

6

e

very bad

1

2

b

good

2

3

b

good

0

1

a

very good

3

4

a

very good

4

5

a

very good

df. groupby( "grade" ) . size( )

grade

very bad 1

bad 0

medium 0

good 2

very good 3

dtype: int64

ts = pd. Series( np. random. randn( 1000 ) , index= pd. date_range( '1/1/2000' , periods= 1000 ) )

ts

2000-01-01 0.585074

2000-01-02 0.605786

2000-01-03 0.697632

2000-01-04 -0.783338

2000-01-05 1.150780

2000-01-06 -0.718491

2000-01-07 0.696745

2000-01-08 0.270574

2000-01-09 0.657496

2000-01-10 -2.613661

2000-01-11 -1.978929

2000-01-12 0.325563

2000-01-13 0.286470

2000-01-14 -0.315502

2000-01-15 0.487703

2000-01-16 -1.830420

2000-01-17 0.847074

2000-01-18 -2.363392

2000-01-19 0.139429

2000-01-20 -0.512045

2000-01-21 0.209301

2000-01-22 -0.202987

2000-01-23 -0.605512

2000-01-24 0.113967

2000-01-25 -0.546799

2000-01-26 1.758145

2000-01-27 0.299658

2000-01-28 -0.614838

2000-01-29 0.262877

2000-01-30 0.021676

...

2002-08-28 0.222110

2002-08-29 -1.846013

2002-08-30 -0.094660

2002-08-31 1.281895

2002-09-01 -1.072053

2002-09-02 0.503427

2002-09-03 -0.499512

2002-09-04 -1.080912

2002-09-05 -0.780288

2002-09-06 -0.537608

2002-09-07 -0.991904

2002-09-08 0.159327

2002-09-09 0.224638

2002-09-10 2.063388

2002-09-11 1.217366

2002-09-12 0.603689

2002-09-13 0.832689

2002-09-14 -1.788089

2002-09-15 -2.183370

2002-09-16 -0.759798

2002-09-17 -0.836241

2002-09-18 0.298536

2002-09-19 1.969939

2002-09-20 -0.688728

2002-09-21 -0.964116

2002-09-22 -1.279596

2002-09-23 0.357739

2002-09-24 1.253534

2002-09-25 -0.798673

2002-09-26 -1.023241

Freq: D, Length: 1000, dtype: float64

ts. cumsum( )

2000-01-01 0.585074

2000-01-02 1.190860

2000-01-03 1.888493

2000-01-04 1.105155

2000-01-05 2.255935

2000-01-06 1.537445

2000-01-07 2.234190

2000-01-08 2.504764

2000-01-09 3.162260

2000-01-10 0.548599

2000-01-11 -1.430329

2000-01-12 -1.104767

2000-01-13 -0.818296

2000-01-14 -1.133798

2000-01-15 -0.646095

2000-01-16 -2.476516

2000-01-17 -1.629442

2000-01-18 -3.992834

2000-01-19 -3.853405

2000-01-20 -4.365450

2000-01-21 -4.156149

2000-01-22 -4.359136

2000-01-23 -4.964649

2000-01-24 -4.850682

2000-01-25 -5.397481

2000-01-26 -3.639336

2000-01-27 -3.339678

2000-01-28 -3.954516

2000-01-29 -3.691639

2000-01-30 -3.669963

...

2002-08-28 -12.290664

2002-08-29 -14.136676

2002-08-30 -14.231337

2002-08-31 -12.949442

2002-09-01 -14.021495

2002-09-02 -13.518068

2002-09-03 -14.017579

2002-09-04 -15.098492

2002-09-05 -15.878779

2002-09-06 -16.416387

2002-09-07 -17.408292

2002-09-08 -17.248965

2002-09-09 -17.024327

2002-09-10 -14.960940

2002-09-11 -13.743574

2002-09-12 -13.139885

2002-09-13 -12.307196

2002-09-14 -14.095285

2002-09-15 -16.278655

2002-09-16 -17.038453

2002-09-17 -17.874694

2002-09-18 -17.576157

2002-09-19 -15.606219

2002-09-20 -16.294946

2002-09-21 -17.259062

2002-09-22 -18.538658

2002-09-23 -18.180919

2002-09-24 -16.927385

2002-09-25 -17.726058

2002-09-26 -18.749299

Freq: D, Length: 1000, dtype: float64

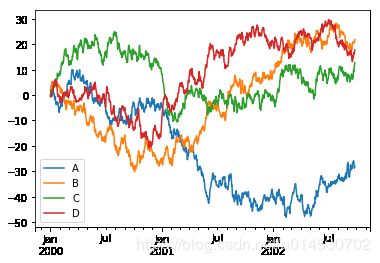

ts. plot( )

df = pd. DataFrame( np. random. randn( 1000 , 4 ) , index= ts. index,

. . . . . : columns= [ 'A' , 'B' , 'C' , 'D' ] )

df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

A

B

C

D

2000-01-01

-0.434770

1.797170

0.354820

-0.166193

2000-01-02

0.420233

0.823251

0.468701

-0.582949

2000-01-03

0.674668

1.032230

1.134346

0.696656

2000-01-04

0.948684

0.188295

-0.604249

-0.062779

2000-01-05

-1.381247

-0.360335

-0.346491

1.072618

2000-01-06

0.492510

1.924341

0.522605

0.293788

2000-01-07

-1.129093

0.063874

0.099183

0.557496

2000-01-08

1.142263

-0.309192

1.140049

1.007656

2000-01-09

1.751273

-0.747153

0.795127

-0.480155

2000-01-10

-1.519661

-1.187734

0.417908

-0.675147

2000-01-11

-0.096192

1.095308

0.094648

1.485943

2000-01-12

0.109115

-0.213535

-0.927250

1.189941

2000-01-13

-0.787367

-0.919787

1.286709

0.894471

2000-01-14

-0.584850

0.794088

0.533716

-0.159539

2000-01-15

-1.352332

-0.880446

0.041934

0.002573

2000-01-16

0.317933

0.957925

0.813780

0.952499

2000-01-17

0.950317

0.162642

-0.018575

-0.940598

2000-01-18

-2.021125

1.592108

0.219355

-1.300103

2000-01-19

-0.673145

-1.852674

-0.492845

0.070786

2000-01-20

-0.562802

-0.504083

0.980132

-0.079636

2000-01-21

0.693927

0.276601

-0.502267

1.824789

2000-01-22

0.240543

-0.049004

0.051460

-1.093965

2000-01-23

0.159181

0.559377

0.353952

-1.750909

2000-01-24

-1.009695

-0.169914

2.214441

-1.301680

2000-01-25

0.741394

-0.206067

-1.250305

-2.021061

2000-01-26

-1.050527

-0.448726

0.744841

0.559876

2000-01-27

-0.268987

0.755171

-0.865320

-0.077159

2000-01-28

-1.445525

-0.443887

0.048399

0.295317

2000-01-29

-0.348641

-0.570866

0.446533

-0.745215

2000-01-30

-0.803883

0.719817

0.035095

-0.057671

...

...

...

...

...

2002-08-28

-1.400857

-1.993967

-0.563839

-0.553431

2002-08-29

-0.860120

-0.252746

-0.585336

0.083630

2002-08-30

0.677218

0.113083

-0.507485

-1.247440

2002-08-31

1.901913

0.124469

-0.482948

0.093981

2002-09-01

1.728861

1.909778

-1.206848

-1.324399

2002-09-02

1.419153

-1.000495

-0.117854

-0.630926

2002-09-03

0.716920

-0.831795

2.443522

-0.247801

2002-09-04

-0.886588

-0.487240

0.476527

1.273604

2002-09-05

-2.361533

-0.074533

-1.095040

0.087406

2002-09-06

-1.225924

-0.444836

0.378192

-0.785585

2002-09-07

-1.064395

0.046003

0.148525

0.393557

2002-09-08

-0.294659

0.912430

-0.795767

0.064672

2002-09-09

0.276846

0.993007

-0.493192

0.673319

2002-09-10

1.676072

0.102106

-1.286082

-1.454404

2002-09-11

2.124521

0.069451

0.495054

0.148496

2002-09-12

0.821348

-0.880714

0.933978

1.869043

2002-09-13

-0.890738

-1.263920

0.128660

-0.282550

2002-09-14

-1.097484

0.652124

0.702043

-0.552927

2002-09-15

0.161343

0.157393

0.851718

-1.265120

2002-09-16

0.865516

-1.196734

-0.985248

-1.472387

2002-09-17

-0.539248

1.388908

-0.870515

-0.671165

2002-09-18

1.154511

0.879535

-0.249820

-0.393302

2002-09-19

1.237163

0.668046

0.917817

0.300664

2002-09-20

-0.187801

0.173142

-0.225307

2.142230

2002-09-21

0.517452

-0.547158

1.587477

-0.922776

2002-09-22

0.424784

0.696831

1.340258

1.252117

2002-09-23

-0.687751

-0.006990

-0.607220

0.709964

2002-09-24

-1.811347

0.200485

2.117700

-0.468944

2002-09-25

-0.431668

-0.385997

0.303936

0.817534

2002-09-26

0.678959

1.061957

1.252870

0.735550

1000 rows × 4 columns

df = df. cumsum( )

plt. figure( ) ; df. plot( ) ; plt. legend( loc= 'best' )