Fundamentals of Multimedia Communication

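- Fundamentals of Multimedia Communication

- A Single Media Stream

- Intra-Stream Synchronization with RTP

- Jitter

- Audio Stream + Video Stream

- Inter-Stream Synchronization with RTP/RTCP

- Inter-Media Synchronization

- reference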

A Single Media Stream

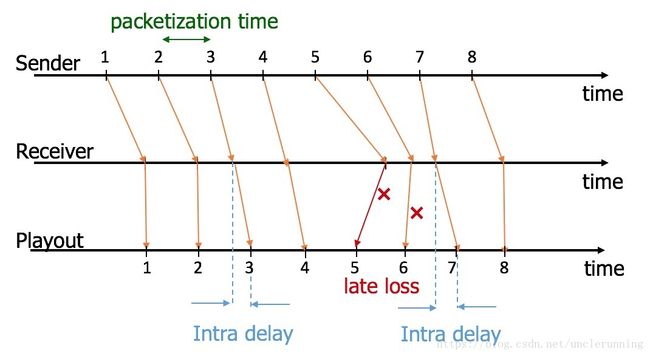

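case 1:

No packet loss, no network jitter

No synchronization and no buffering are needed; each frame is decoded and played as soon as it arrives.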

case 2:

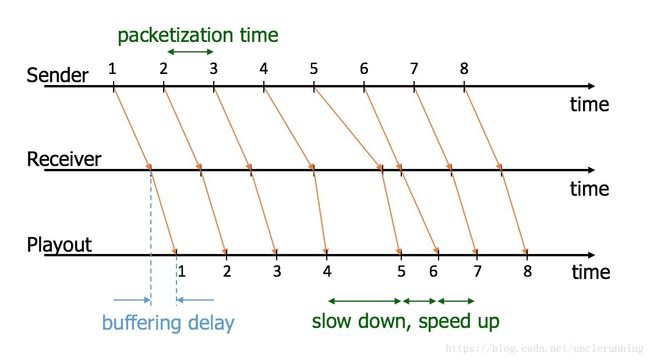

存在网络抖动

如果这时候收到帧就立马解码播放,由于网络的抖动,在某些时刻,大量的帧到来,会出现明显的快进的感觉,在某些时刻,没有数据帧到来,会有明显慢放甚至卡顿的感觉。

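The sensible approach is:

Intra-stream synchronization

Intra-stream synchronization means that after a decodable frame has been received, it is not decoded and played immediately. Instead, the receiver waits until the inter-frame interval has elapsed and only then plays the frame.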

Intra-Stream Synchronization with RTP

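a) Sender:

Every frame leaving the sender carries a timestamp that marks the instant at which the first sample of that frame was captured. When a frame is packetized into RTP packets, the sender writes this timestamp into the RTP packet header:

$$\mathit{timeStamp}_i = \mathit{timeStamp}_{i-1} + \Delta \mathit{timestamp}$$

where $\Delta \mathit{timestamp}$ is the timestamp increment between frame $i$ and frame $i-1$, and $\Delta \mathit{timestamp} = \mathit{frequency} / \mathit{fps}$, i.e. the number of samples per frame. The timeStamp of the first frame is usually a random value.

So the timestamp difference between two adjacent frames is simply the sample increment. Dividing the sample increment by the sampling rate (frequency) gives the interval between the two frames [in seconds], and this interval is what drives intra-stream synchronization.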
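The sender-side rule can be illustrated with a minimal Python sketch. The function names are hypothetical, the sketch assumes that the clock rate is an integer multiple of the frame rate (for example a 90 kHz video clock at 30 fps), and it wraps timestamps at 32 bits because that is the width of the RTP timestamp field.

```python
import random

RTP_TS_MODULUS = 2 ** 32  # the RTP timestamp field is 32 bits wide


def rtp_timestamps(clock_rate_hz, fps, frame_count, first_timestamp=None):
    """Return the RTP timestamps of `frame_count` consecutive frames."""
    if first_timestamp is None:
        # The first timestamp is normally a random value.
        first_timestamp = random.randrange(RTP_TS_MODULUS)
    delta = clock_rate_hz // fps  # samples (clock ticks) per frame
    return [(first_timestamp + i * delta) % RTP_TS_MODULUS
            for i in range(frame_count)]


def frame_interval_seconds(prev_ts, cur_ts, clock_rate_hz):
    """Interval between two frames, tolerating 32-bit wraparound."""
    return ((cur_ts - prev_ts) % RTP_TS_MODULUS) / clock_rate_hz
```

With a 90 kHz clock and 30 fps, each frame advances the timestamp by 3000 ticks, which corresponds to an interval of 1/30 s.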

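b) Receiver:

After receiving a frame, the receiver computes the presentation time of the current frame from the presentation time of the previous frame:

$$\mathit{presentTime}_i = \mathit{presentTime}_{i-1} + \Delta \mathit{timestamp} / \mathit{frequency}$$

This way the player no longer speeds up and slows down. However, when the network jitters, this scheme inevitably produces stalls and dropped frames [a frame that misses its display time is discarded].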
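A small sketch of the receiver-side rule, under the assumption that the first frame is played the moment it arrives (no buffering yet). The function name and the tuple layout are illustrative only.

```python
def intra_stream_schedule(frames, clock_rate_hz):
    """Schedule frames using only intra-stream synchronization.

    frames: list of (rtp_timestamp, arrival_time_s) in sending order.
    Returns a list of (rtp_timestamp, present_time_s, played) tuples.
    """
    schedule = []
    prev_ts = None
    prev_present = None
    for ts, arrival in frames:
        if prev_ts is None:
            present = arrival  # assumption: first frame plays on arrival
        else:
            # presentTime_i = presentTime_{i-1} + delta_timestamp / frequency
            present = prev_present + (ts - prev_ts) / clock_rate_hz
        played = arrival <= present  # a frame that arrives too late is dropped
        schedule.append((ts, present, played))
        prev_ts, prev_present = ts, present
    return schedule
```

For frames arriving at 0.00 s, 0.02 s and 0.10 s on a 30 fps stream, the third frame is due at about 0.067 s, so it has already missed its slot when it arrives and is dropped. This is the weakness that a buffer addresses.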

So what should we do?

Set up a buffer to absorb the network jitter.

Buffering reduces a system’s sensitivity to short-term fluctuations in the data arrival rate by absorbing variations in end-to-end delay and allowing margin for retransmission attempts when packets are lost.

Jitter

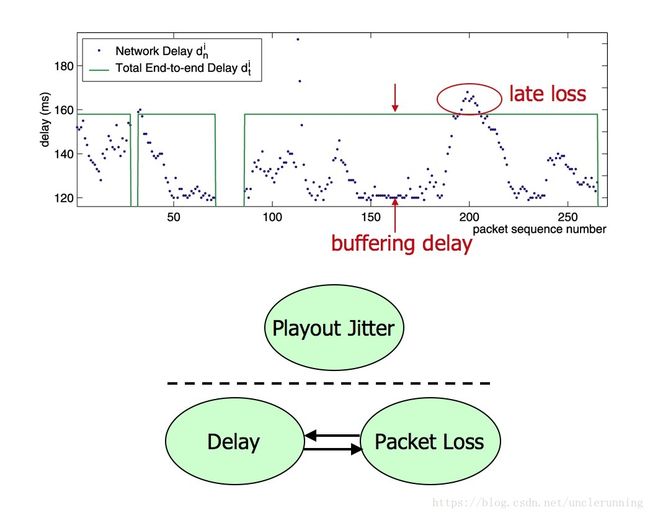

The network delivers RTP packets asynchronously, with variable delays. To be able to play the audio stream with reasonable quality, the receiving endpoint needs to turn the variable delays into constant delays. This can be done by using a jitter buffer.

The jitter buffer is used to compensate for transmission impairments that arise from the time-varying delays of received data packets.

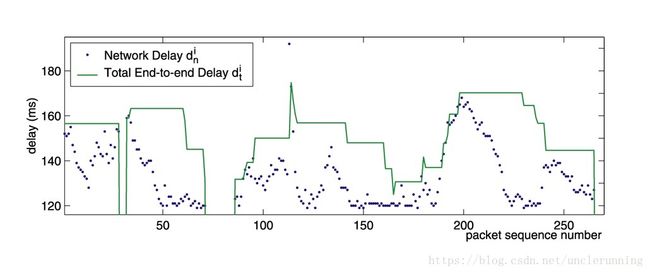

Generally the larger the jitter buffer is, the bigger the added delay and the more packets that are successfully played out. Unfortunately this additional delay lowers the perceived QoS. On the other hand, if the playout delay is set too low, the network-induced delay will cause some packets to arrive too late for playout and thus be lost, which also lowers the perceived QoS. The main objective of jitter buffering is to keep the packet loss rate under 5% and to keep the end-to-end delay as small as possible.
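To size a jitter buffer, the receiver first needs a number for how much the delay varies. One common choice is the interarrival jitter estimate defined in the RTP specification (RFC 3550), a running average with gain 1/16 of the change in transit time between consecutive packets. The sketch below follows that definition; the function name and input layout are assumptions made for illustration.

```python
def interarrival_jitter(packets, clock_rate_hz):
    """Running interarrival jitter estimate, in RTP timestamp units.

    packets: list of (rtp_timestamp, arrival_time_s) in arrival order.
    """
    jitter = 0.0
    prev_transit = None
    for rtp_ts, arrival_s in packets:
        # Transit time in timestamp units. Only differences are used,
        # so the unknown sender/receiver clock offset cancels out.
        transit = arrival_s * clock_rate_hz - rtp_ts
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16.0  # gain of 1/16
        prev_transit = transit
    return jitter
```

Perfectly paced packets yield a jitter of zero. A single packet that is 10 ms late on an 8 kHz stream changes the transit time by 80 ticks and moves the estimate to 5 ticks.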

Fixed Buffer vs. Dynamic Buffer

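Placing a buffer between the network and the player hides network jitter from the player.

When the network is congested, packets in transit queue up and linger in routers, so the network delay between neighboring packets fluctuates. This fluctuation is jitter. To hide it from the receiver's playback module, a buffer delay has to be inserted between the network and the player. To remove the jitter as fully as possible, this buffer delay must be large enough to cover the frame with the largest network delay.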

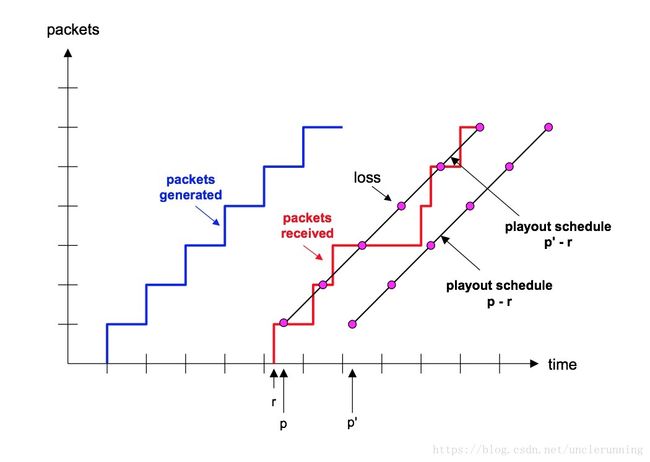

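Fixed buffer:

The advantage of a fixed buffer is that the algorithm is simple: a fixed buffer delay is added to the arriveTime of the first received frame, and every later frame is played according to intra-stream synchronization.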

p = t * r + offset + jitter-delay.

The offset is computed when the first frame is received, which is also when the jitter buffer is set up. Here:

- p = playout time
- t = timestamp of DU
- r = period of DU clock
- offset = clock offset
- jitter-delay = fixed-size jitter delay allowance added to p

Example:

- Variance allowance = 60 ms
- Media timestamp period = 30 ms
- First DU:
  - Media Timestamp = 10
  - System Clock = 1000
- Solve for offset:
  - 1000 = 10 * 30 + offset => offset = 700
- Add variance allowance:
  - p = t * 30 + 700 + 60

| Packet | TS | ArrivalTime (ms) | PlayoutTime (ms) |
|---|---|---|---|
| a | 10 | 1000 | 1060 |
| b | 11 | 1025 | 1090 |
| c | 12 | 1050 | 1120 |
| d | 13 | 1095 | 1150 |
| e | 14 | 1185 | 1180 [late, lost] |
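The worked example can be reproduced with a few lines of Python. This is only a sketch of the formula p = t * r + offset + jitter-delay; the function name and the tuple layout are made up for illustration, and all times are taken to be in milliseconds.

```python
def fixed_buffer_schedule(packets, period_ms, jitter_delay_ms):
    """Compute playout times with a fixed jitter delay.

    packets: list of (name, media_timestamp, arrival_ms) in sending order.
    Returns a list of (name, playout_ms, on_time) tuples.
    """
    _, first_ts, first_arrival = packets[0]
    # Solve arrival = t * r + offset for the first packet.
    offset = first_arrival - first_ts * period_ms
    rows = []
    for name, ts, arrival in packets:
        playout = ts * period_ms + offset + jitter_delay_ms
        # A packet that arrives after its playout time is lost.
        rows.append((name, playout, arrival <= playout))
    return rows


packets = [("a", 10, 1000), ("b", 11, 1025), ("c", 12, 1050),
           ("d", 13, 1095), ("e", 14, 1185)]
for name, playout, on_time in fixed_buffer_schedule(packets, 30, 60):
    print(name, playout, "ok" if on_time else "late, lost")
```

Packet e arrives at 1185 ms, 5 ms after its playout time of 1180 ms, so the 60 ms allowance is not enough for it.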

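As shown in the figure above, $r$ is the arrival time of the first packet (not the clock period used in the formula), and $p$ and $p'$ are the playout times of the first packet under fixed buffers of two different sizes. Note that the playout schedule arrows in the figure have $p - r$ and $p' - r$ swapped.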

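The drawback of a fixed buffer is just as obvious: if the buffer is too small, it cannot absorb the jitter effectively, and if it is too large, it adds excessive delay. It is hard to strike a balance between delay and packet loss.

A sensible approach:

Introduce a dynamic buffer

A well-designed dynamic buffer uses prior knowledge to predict the state of the network and adjusts its size dynamically, hiding network jitter from the player while keeping the playout delay to a minimum.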
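One simple way to adapt the buffer, in the spirit of the exponentially weighted estimators studied in the adaptive playout literature, is to track a running mean of the network delay and a running mean of its deviation, then set the playout delay to the mean plus a multiple of the deviation. The sketch below is an illustration under assumptions, not a definitive algorithm: the class name, the smoothing factor `alpha` and the `safety_factor` of 4 are all choices made for this example.

```python
class AdaptivePlayoutDelay:
    """Exponentially weighted estimate of a suitable playout delay."""

    def __init__(self, alpha=0.998, safety_factor=4.0):
        self.alpha = alpha                  # assumed smoothing factor
        self.safety_factor = safety_factor  # assumed deviation multiplier
        self.mean_delay = None
        self.variation = 0.0

    def update(self, send_time_s, arrival_time_s):
        """Feed one packet and return the current playout delay.

        The two clocks need not be synchronized: the constant offset
        ends up inside the delay and cancels when the result is added
        back to a send time to obtain a local playout time.
        """
        delay = arrival_time_s - send_time_s
        if self.mean_delay is None:
            self.mean_delay = delay
        else:
            a = self.alpha
            self.mean_delay = a * self.mean_delay + (1 - a) * delay
            self.variation = (a * self.variation
                              + (1 - a) * abs(delay - self.mean_delay))
        return self.playout_delay()

    def playout_delay(self):
        return self.mean_delay + self.safety_factor * self.variation
```

A packet with send time `s` would then be scheduled at `s + playout_delay()`. A smaller `alpha` reacts faster to changing conditions but makes the buffer size less stable.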

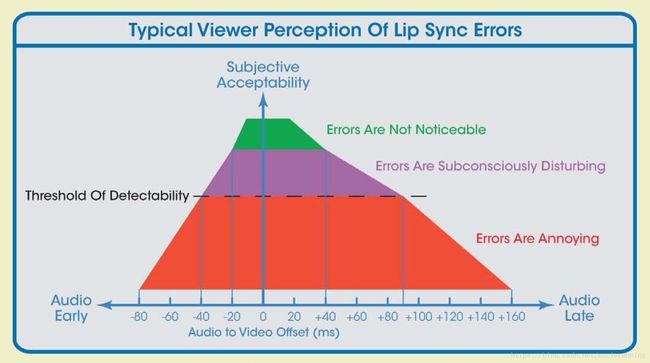

Audio Stream + Video Stream

The idea behind the audio-video synchronization process is that the adaptive playout algorithms are performed first, and the video frames are played out dependent on the playout times of their corresponding audio packets. This is done by storing the video frames in a video playout buffer and by delaying each frame until the corresponding audio packets are played out. The correspondence between audio and video frames is given by their timestamps.

If the video quality is not acceptable using this scheme which gives priority to audio, additional measures may be needed to improve the video playout, e.g. adaptive adjusting of the video frame playout process.

Inter-Stream Synchronization with RTP/RTCP

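According to the RTP specification, every RTP media stream being sent is identified by a synchronization source identifier (SSRC) and is accompanied by an RTCP stream, sent periodically, that collects statistics and feedback about the state of that media stream.

Each RTP media stream has its own clock frequency [sampling rate] and its own starting point [startTimestamp]:

$$t_i = \mathit{startTimestamp} + \Delta \mathit{samples}$$

The RTP standard recommends a random value for startTimestamp. When sending, RTP writes the corresponding timeStamp into the RTP header.

As a result, with only the timestamps that RTP provides, the receiver has no way of knowing the wall clock time at which each frame of the stream was actually captured.

The wall clock serves as the reference clock for the different media streams and is used to express the time alignment between them.

If only we had a reference clock!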

$$\mathit{Clock}_i \Leftrightarrow \mathit{Clock}_{wall}$$

RTCP defines a packet type called SR, the sender report. An SR packet carries two timestamps: one records the wall clock time (the NTP timestamp) and the other records the media clock time (the RTP timestamp) of the same instant. With these two values, the receiver can map media clock time onto wall clock time.

Let $t$ be a media clock time and $T$ the corresponding wall clock time [in seconds]. Then:

$$t / \mathit{frequency} = T + \mathit{offset} \Rightarrow \mathit{offset} = t / \mathit{frequency} - T$$

Let $t_i$ be the media clock time of the $i$-th frame of media data. Its wall clock time $T_i$ is:

$$T_i = t_i / \mathit{frequency} - \mathit{offset}$$

With RTCP SR packets we can align the media clock to the reference clock, which is exactly what is needed before synchronization can begin.
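The mapping from media clock to wall clock can be sketched in Python as follows. The `SenderReport` class here is a simplified stand-in invented for this example, with the wall clock time already converted to seconds; it is not a parser for real RTCP packets, and the sketch ignores RTP timestamp wraparound.

```python
from dataclasses import dataclass


@dataclass
class SenderReport:
    """Simplified SR: the two timestamps describe the same instant."""
    wallclock_s: float   # wall clock time carried in the SR, in seconds
    rtp_timestamp: int   # media clock time for that instant


def media_to_wallclock(rtp_ts, sr, clock_rate_hz):
    """Map an RTP timestamp to wall clock seconds using one SR."""
    # offset = t / frequency - T, taken from the SR pair
    offset = sr.rtp_timestamp / clock_rate_hz - sr.wallclock_s
    # T_i = t_i / frequency - offset
    return rtp_ts / clock_rate_hz - offset
```

For example, if an SR pairs RTP timestamp 90000 with wall clock time 1000.0 s on a 90 kHz clock, a frame stamped 180000 was captured one second later, at 1001.0 s.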

Inter-Media Synchronization

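Once a reference clock is available, synchronization is really just a matter of policy. For example:

For each media stream, compute the wall clock time of the most recently played frame, compare the streams to obtain their relative delay, and then adjust the playback speed of the media accordingly.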
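As one possible policy, the sketch below compares the wall clock times of the most recently played audio and video frames and decides which stream should slow down. The function names, the returned action strings and the 40 ms tolerance are assumptions chosen for illustration; a real player would tune the tolerance and apply the correction gradually.

```python
def av_skew_seconds(audio_wallclock_s, video_wallclock_s):
    """Relative delay between streams on the shared wall clock.

    A positive result means the video is ahead of the audio.
    """
    return video_wallclock_s - audio_wallclock_s


def sync_action(skew_s, tolerance_s=0.04):
    """Pick a correction for the measured skew (tolerance is assumed)."""
    if abs(skew_s) <= tolerance_s:
        return "in_sync"
    # The stream that is ahead is the one to slow down.
    return "slow_down_video" if skew_s > 0 else "slow_down_audio"
```

For instance, if the last played video frame maps to 10.1 s and the last played audio frame to 10.0 s, the video is 100 ms ahead and the policy asks the video to slow down.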

reference

RTP: A Transport Protocol for Real-Time Applications

VoIP Basics: About Jitter

Indepth: Jitter

Adaptive Playout Mechanisms for Packetized Audio Applications in Wide-Area Networks

Packet audio playout delay adjustment: performance bounds and algorithms