C# FFmpeg Example (API Approach): Pulling and Playing an RTMP Stream with FFmpeg

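Recently my company took on a video project that needed to call the FFmpeg API for part of its functionality. Of all the languages I've used, C# is still the most comfortable, so I wanted to write it in C#. However, C# cannot call FFmpeg's static libraries directly, and the dynamic libraries can only be called through P/Invoke. FFmpeg has a huge number of APIs and plenty of type conversions, so wrapping all of it by hand is a lot of work. The open-source SharpFFmpeg project hasn't been updated in years and targets a very old FFmpeg version. Just as I was about to write my own bindings, I found the FFmpeg.AutoGen project, which covers almost the entire FFmpeg API and is actively maintained. I adopted it, and it has worked well. Enough preamble; here is a simple example.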

Pulling and playing an RTMP stream with FFmpeg

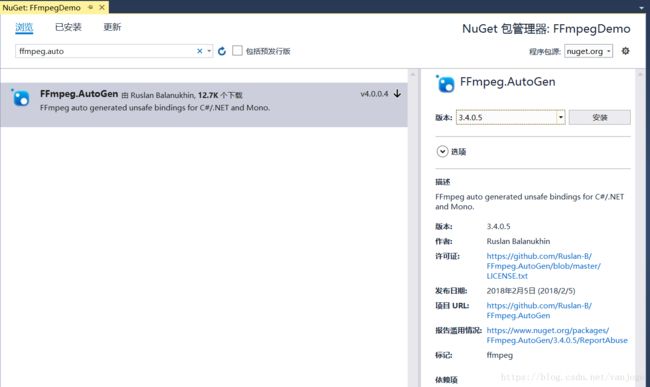

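- 1. Add a reference to FFmpeg.AutoGen to the project; it can be installed directly from NuGet. I'm using FFmpeg 3.4, so I also used FFmpeg.AutoGen 3.4 (the binding version should match the FFmpeg build), as shown in the figure.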
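FFmpeg.AutoGen contains only the C# bindings; the native FFmpeg 3.4 DLLs (avcodec, avformat, avutil, swscale and the other FFmpeg libraries) still have to be found at run time. The decoding class in step 3 calls `FFmpegBinariesHelper.RegisterFFmpegBinaries()` for this, which appears to come from the FFmpeg.AutoGen sample project rather than the NuGet package itself. Below is a minimal sketch of the directory probing such a helper can do, assuming the DLLs sit in an `FFmpeg/bin/x64` or `FFmpeg/bin/x86` folder somewhere above the working directory. `FFmpegBinaryLocator` and `FindBinDirectory` are hypothetical names.

```csharp
using System.IO;

public static class FFmpegBinaryLocator
{
    // Walks up from startDirectory looking for FFmpeg/bin/<arch>.
    // Returns the full path of the first match, or null if none is found.
    public static string FindBinDirectory(string startDirectory, bool is64Bit)
    {
        var probe = Path.Combine("FFmpeg", "bin", is64Bit ? "x64" : "x86");
        var current = startDirectory;
        while (current != null)
        {
            var candidate = Path.Combine(current, probe);
            if (Directory.Exists(candidate))
                return candidate;

            // Directory.GetParent returns null at the filesystem root, which ends the loop.
            current = Directory.GetParent(current)?.FullName;
        }
        return null;
    }
}
```

A typical call would be `FFmpegBinaryLocator.FindBinDirectory(Environment.CurrentDirectory, Environment.Is64BitProcess)`. How the found path is then registered depends on the FFmpeg.AutoGen version: older sample helpers call the Win32 `SetDllDirectory` function, while later releases expose an `ffmpeg.RootPath` property, so check what your version supports.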

- 2. Create a form named frmPlayer to display the video. For simplicity, a PictureBox is used here to show the frames.

FFmpeg decodes frames into YUV images. These must be converted to RGB with sws_scale and then wrapped in a System.Drawing.Bitmap that the PictureBox can display.
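sws_scale performs this conversion in optimized native code. To show what it amounts to, here is a plain C# sketch of YUV420P to BGR24 conversion. It assumes BT.601 limited-range coefficients in the common 8-bit fixed-point form and nearest-neighbour chroma sampling (each U/V sample covers a 2×2 block of pixels). The matrix and filtering swscale actually uses depend on the stream and on the flags passed to sws_getContext, so this is an illustration only; `YuvToBgr` is a hypothetical name.

```csharp
public static class YuvToBgr
{
    // Converts one YUV420P frame (separate Y, U, V planes) into a packed BGR24 buffer.
    // Assumes BT.601 limited-range coefficients scaled by 256 (fixed point).
    public static byte[] Convert(byte[] y, byte[] u, byte[] v, int width, int height,
                                 int yStride, int uvStride, int dstStride)
    {
        var dst = new byte[dstStride * height];
        for (int row = 0; row < height; row++)
        {
            for (int col = 0; col < width; col++)
            {
                // Chroma planes are half width and half height in YUV420P.
                int c = y[row * yStride + col] - 16;
                int d = u[(row / 2) * uvStride + col / 2] - 128;
                int e = v[(row / 2) * uvStride + col / 2] - 128;

                int r = (298 * c + 409 * e + 128) >> 8;
                int g = (298 * c - 100 * d - 208 * e + 128) >> 8;
                int b = (298 * c + 516 * d + 128) >> 8;

                // Packed BGR24: blue first, then green, then red.
                int o = row * dstStride + col * 3;
                dst[o] = Clamp(b);
                dst[o + 1] = Clamp(g);
                dst[o + 2] = Clamp(r);
            }
        }
        return dst;
    }

    private static byte Clamp(int x) => (byte)(x < 0 ? 0 : x > 255 ? 255 : x);
}
```

BGR byte order is used because GDI+'s `Format24bppRgb` stores each pixel in memory as B, G, R, which is why the decoding class asks sws_scale for `AV_PIX_FMT_BGR24`.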

- 3. The FFmpeg stream-pulling and decoding class (with detailed comments):

```csharp
using FFmpeg.AutoGen;
using System;
using System.Drawing;
using System.Drawing.Imaging;
using System.Runtime.InteropServices;

namespace FFmpegDemo
{
    public unsafe class tstRtmp
    {
        /// <summary>
        /// Delegate used to display a decoded frame.
        /// </summary>
        /// <param name="bitmap">The decoded frame; null when playback ends.</param>
        public delegate void ShowBitmap(Bitmap bitmap);

        /// <summary>
        /// Run-control flag; Stop() clears it from the UI thread.
        /// </summary>
        volatile bool CanRun;

        // Keep the log callback in a field so the GC cannot collect the delegate
        // while native FFmpeg code still holds a pointer to it.
        static av_log_set_callback_callback logCallback;

        /// <summary>
        /// Reads H.264 packets from the stream, decodes them, and converts each frame.
        /// </summary>
        /// <param name="show">Callback invoked for each decoded frame.</param>
        /// <param name="url">Stream URL to play; a local file path also works.</param>
        public unsafe void Start(ShowBitmap show, string url)
        {
            CanRun = true;

            Console.WriteLine(@"Current directory: " + Environment.CurrentDirectory);
            Console.WriteLine(@"Running in {0}-bit mode.", Environment.Is64BitProcess ? @"64" : @"32");

            // Locate and register the FFmpeg DLL directory (helper class, not part of FFmpeg.AutoGen itself)
            FFmpegBinariesHelper.RegisterFFmpegBinaries();

            #region FFmpeg initialization
            // Register FFmpeg's codecs, formats, and network protocols
            ffmpeg.av_register_all();
            ffmpeg.avcodec_register_all();
            ffmpeg.avformat_network_init();

            Console.WriteLine($"FFmpeg version info: {ffmpeg.av_version_info()}");
            #endregion

            #region FFmpeg logging
            // Set the FFmpeg log level
            ffmpeg.av_log_set_level(ffmpeg.AV_LOG_VERBOSE);

            logCallback = (p0, level, format, vl) =>
            {
                if (level > ffmpeg.av_log_get_level()) return;

                var lineSize = 1024;
                var lineBuffer = stackalloc byte[lineSize];
                var printPrefix = 1;
                ffmpeg.av_log_format_line(p0, level, format, vl, lineBuffer, lineSize, &printPrefix);
                var line = Marshal.PtrToStringAnsi((IntPtr)lineBuffer);
                Console.Write(line);
            };
            ffmpeg.av_log_set_callback(logCallback);
            #endregion

            #region FFmpeg demuxing and conversion setup
            // Allocate the format (demuxer) context
            var pFormatContext = ffmpeg.avformat_alloc_context();

            int error;

            // Open the stream
            error = ffmpeg.avformat_open_input(&pFormatContext, url, null, null);
            if (error != 0) throw new ApplicationException(GetErrorMessage(error));

            // Read stream information; returns >= 0 on success
            error = ffmpeg.avformat_find_stream_info(pFormatContext, null);
            if (error < 0) throw new ApplicationException(GetErrorMessage(error));

            // Print the container metadata (informational only)
            AVDictionaryEntry* tag = null;
            while ((tag = ffmpeg.av_dict_get(pFormatContext->metadata, "", tag, ffmpeg.AV_DICT_IGNORE_SUFFIX)) != null)
            {
                var key = Marshal.PtrToStringAnsi((IntPtr)tag->key);
                var value = Marshal.PtrToStringAnsi((IntPtr)tag->value);
                Console.WriteLine($"{key} = {value}");
            }

            // Find the video stream (the audio stream is located but not used here)
            AVStream* pStream = null, aStream = null;
            for (var i = 0; i < pFormatContext->nb_streams; i++)
            {
                if (pFormatContext->streams[i]->codec->codec_type == AVMediaType.AVMEDIA_TYPE_VIDEO)
                    pStream = pFormatContext->streams[i];
                else if (pFormatContext->streams[i]->codec->codec_type == AVMediaType.AVMEDIA_TYPE_AUDIO)
                    aStream = pFormatContext->streams[i];
            }
            if (pStream == null) throw new ApplicationException(@"Could not find video stream.");

            // Copy the stream's codec context (AVStream.codec is deprecated in FFmpeg 3.4 but still available)
            var codecContext = *pStream->codec;
            Console.WriteLine($"codec name: {ffmpeg.avcodec_get_name(codecContext.codec_id)}");

            // Frame width, height, and pixel format
            var width = codecContext.width;
            var height = codecContext.height;
            var sourcePixFmt = codecContext.pix_fmt;

            // Codec ID
            var codecId = codecContext.codec_id;

            // Destination pixel format: BGR24 matches the in-memory layout of GDI+ Format24bppRgb
            var destinationPixFmt = AVPixelFormat.AV_PIX_FMT_BGR24;

            // Some H.264 streams report AV_PIX_FMT_NONE here; treat them as YUV420P
            if (sourcePixFmt == AVPixelFormat.AV_PIX_FMT_NONE && codecId == AVCodecID.AV_CODEC_ID_H264)
            {
                sourcePixFmt = AVPixelFormat.AV_PIX_FMT_YUV420P;
            }

            // SwsContext performs image scaling and pixel-format conversion
            var pConvertContext = ffmpeg.sws_getContext(width, height, sourcePixFmt,
                width, height, destinationPixFmt,
                ffmpeg.SWS_FAST_BILINEAR, null, null, null);
            if (pConvertContext == null) throw new ApplicationException(@"Could not initialize the conversion context.");

            // Allocate a frame object (AVFrame); it is not used further in this example
            var pConvertedFrame = ffmpeg.av_frame_alloc();

            // Bytes needed for the destination image. Rows are aligned to 4 bytes because the
            // GDI+ Bitmap(width, height, stride, format, scan0) constructor requires stride % 4 == 0.
            var convertedFrameBufferSize = ffmpeg.av_image_get_buffer_size(destinationPixFmt, width, height, 4);

            // Allocate unmanaged memory for the destination image
            var convertedFrameBufferPtr = Marshal.AllocHGlobal(convertedFrameBufferSize);
            var dstData = new byte_ptrArray4();
            var dstLinesize = new int_array4();

            // Point dstData/dstLinesize at the buffer, using the same 4-byte row alignment
            ffmpeg.av_image_fill_arrays(ref dstData, ref dstLinesize, (byte*)convertedFrameBufferPtr, destinationPixFmt, width, height, 4);
            #endregion

            #region FFmpeg decoding
            // Find the decoder for the codec ID
            var pCodec = ffmpeg.avcodec_find_decoder(codecId);
            if (pCodec == null) throw new ApplicationException(@"Unsupported codec.");

            var pCodecContext = &codecContext;

            // If the decoder supports it, accept packets that do not end on frame boundaries
            if ((pCodec->capabilities & ffmpeg.AV_CODEC_CAP_TRUNCATED) == ffmpeg.AV_CODEC_CAP_TRUNCATED)
                pCodecContext->flags |= ffmpeg.AV_CODEC_FLAG_TRUNCATED;

            // Open the codec context with the decoder
            error = ffmpeg.avcodec_open2(pCodecContext, pCodec, null);
            if (error < 0) throw new ApplicationException(GetErrorMessage(error));

            // Allocate the frame that receives decoded pictures
            var pDecodedFrame = ffmpeg.av_frame_alloc();

            // Initialize the packet
            var packet = new AVPacket();
            var pPacket = &packet;
            ffmpeg.av_init_packet(pPacket);

            var frameNumber = 0;
            while (CanRun)
            {
                try
                {
                    do
                    {
                        // Release the previous packet before reading the next one
                        ffmpeg.av_packet_unref(pPacket);

                        // Read one undecoded packet
                        error = ffmpeg.av_read_frame(pFormatContext, pPacket);
                        if (error == ffmpeg.AVERROR_EOF) break;
                        if (error < 0) throw new ApplicationException(GetErrorMessage(error));

                        // Skip packets from other streams (e.g. audio) and keep reading
                        if (pPacket->stream_index != pStream->index)
                        {
                            error = ffmpeg.AVERROR(ffmpeg.EAGAIN);
                            continue;
                        }

                        // Send the packet to the decoder
                        error = ffmpeg.avcodec_send_packet(pCodecContext, pPacket);
                        if (error < 0) throw new ApplicationException(GetErrorMessage(error));

                        // Receive a decoded frame; AVERROR(EAGAIN) means the decoder needs more input
                        error = ffmpeg.avcodec_receive_frame(pCodecContext, pDecodedFrame);
                    } while (error == ffmpeg.AVERROR(ffmpeg.EAGAIN) && CanRun);

                    // End of stream, or Stop() was called while waiting for input
                    if (error == ffmpeg.AVERROR_EOF || error == ffmpeg.AVERROR(ffmpeg.EAGAIN)) break;
                    if (error < 0) throw new ApplicationException(GetErrorMessage(error));

                    Console.WriteLine($@"frame: {frameNumber}");

                    // YUV -> BGR24, written into convertedFrameBufferPtr
                    ffmpeg.sws_scale(pConvertContext, pDecodedFrame->data, pDecodedFrame->linesize, 0, height, dstData, dstLinesize);
                }
                finally
                {
                    ffmpeg.av_packet_unref(pPacket);      // release the packet's data
                    ffmpeg.av_frame_unref(pDecodedFrame); // release the decoded frame's data
                }

                // Wrap the converted buffer in a Bitmap. The Bitmap does not copy the pixels,
                // so its contents change when the next frame is converted into the same buffer.
                var bitmap = new Bitmap(width, height, dstLinesize[0], PixelFormat.Format24bppRgb, convertedFrameBufferPtr);

                // Hand the frame to the UI
                show(bitmap);

                //bitmap.Save(AppDomain.CurrentDomain.BaseDirectory + "\\264\\frame.buffer." + frameNumber + ".jpg", ImageFormat.Jpeg);

                frameNumber++;
            }

            // Clear the displayed image when playback ends
            show(null);
            #endregion

            #region Release resources
            Marshal.FreeHGlobal(convertedFrameBufferPtr);
            ffmpeg.av_frame_free(&pConvertedFrame);
            ffmpeg.sws_freeContext(pConvertContext);
            ffmpeg.av_frame_free(&pDecodedFrame);
            ffmpeg.avcodec_close(pCodecContext);
            ffmpeg.avformat_close_input(&pFormatContext);
            #endregion
        }

        /// <summary>
        /// Asks the decoding loop in Start() to exit.
        /// </summary>
        public void Stop()
        {
            CanRun = false;
        }

        /// <summary>
        /// Gets the FFmpeg error message for an error code.
        /// </summary>
        /// <param name="error">FFmpeg error code (negative).</param>
        /// <returns>The error description.</returns>
        private static unsafe string GetErrorMessage(int error)
        {
            var bufferSize = 1024;
            var buffer = stackalloc byte[bufferSize];
            ffmpeg.av_strerror(error, buffer, (ulong)bufferSize);
            var message = Marshal.PtrToStringAnsi((IntPtr)buffer);
            return message;
        }
    }
}
```
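One detail the class above depends on: the GDI+ `Bitmap(width, height, stride, format, scan0)` constructor requires `stride` to be a multiple of 4. With `align = 1`, each BGR24 row is exactly width × 3 bytes, which is a multiple of 4 only when the width is (an 854-pixel-wide stream gives 2562 bytes per row), and GDI+ then rejects the stride, typically with an `ArgumentException`. Passing `align = 4` to `av_image_get_buffer_size` and `av_image_fill_arrays` pads each row up to a multiple of 4. The sketch below reproduces that rounding in plain C#; `StrideMath` is a hypothetical helper modelled on FFmpeg's `FFALIGN` macro.

```csharp
public static class StrideMath
{
    // Rounds value up to the next multiple of align (align must be a power of two),
    // the same rounding FFmpeg's FFALIGN macro performs.
    public static int AlignUp(int value, int align) => (value + align - 1) & ~(align - 1);

    // Bytes per row of a packed BGR24 image with the given row alignment.
    public static int Bgr24Stride(int width, int align) => AlignUp(width * 3, align);

    // Total size of a single-plane BGR24 image: padded row size times the number of rows.
    public static int Bgr24BufferSize(int width, int height, int align) =>
        Bgr24Stride(width, align) * height;

    // GDI+ Bitmap(width, height, stride, format, scan0) requires stride % 4 == 0.
    public static bool IsValidGdiStride(int stride) => stride % 4 == 0;
}
```

For common widths such as 640, 1280, or 1920 the two alignments give the same stride, which is why the problem tends to appear only with unusual resolutions.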

- 4. Calling code:

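```csharp
using System;
using System.Drawing;
using System.Threading;
using System.Windows.Forms;

namespace FFmpegDemo
{
    public partial class frmPlayer : Form
    {
        public frmPlayer()
        {
            InitializeComponent();
        }

        tstRtmp rtmp = new tstRtmp();
        Thread thPlayer;

        private void btnStart_Click(object sender, EventArgs e)
        {
            btnStart.Enabled = false;
            if (thPlayer != null)
            {
                // Stop: the decoding thread re-enables the button when it exits
                rtmp.Stop();
                thPlayer = null;
            }
            else
            {
                // Start decoding on a background thread
                thPlayer = new Thread(DeCoding);
                thPlayer.IsBackground = true;
                thPlayer.Start();
                btnStart.Text = "Stop playback";
                btnStart.Enabled = true;
            }
        }

        /// <summary>
        /// Playback thread entry point.
        /// </summary>
        private unsafe void DeCoding()
        {
            try
            {
                Console.WriteLine("DeCoding run...");
                Bitmap oldBmp = null;

                // Update the displayed image on the UI thread and dispose the previous frame
                tstRtmp.ShowBitmap show = (bmp) =>
                {
                    this.Invoke(new MethodInvoker(() =>
                    {
                        this.pic.Image = bmp;
                        if (oldBmp != null)
                        {
                            oldBmp.Dispose();
                        }
                        oldBmp = bmp;
                    }));
                };

                rtmp.Start(show, txtUrl.Text.Trim());
            }
            catch (Exception ex)
            {
                Console.WriteLine(ex);
            }
            finally
            {
                Console.WriteLine("DeCoding exit");
                this.Invoke(new MethodInvoker(() =>
                {
                    // Reset the thread reference so the next click starts playback again,
                    // even when the stream ended or failed on its own
                    thPlayer = null;
                    btnStart.Text = "Start playback";
                    btnStart.Enabled = true;
                }));
            }
        }
    }
}
```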
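The form and the decoding class coordinate through a single flag: `Start()` loops while `CanRun` is true, `Stop()` clears it from the UI thread, and the thread's `finally` block restores the button. Below is a minimal sketch of that handshake without WinForms or FFmpeg, using a hypothetical `StoppableWorker` class in which a short sleep stands in for one read/decode/display iteration. The flag is set before the thread starts, so a `Stop()` issued right after `Start()` cannot be overwritten by the worker, and `volatile` ensures the loop observes the change.

```csharp
using System;
using System.Threading;

// Hypothetical stand-in for tstRtmp: a loop controlled by a flag that another thread clears.
public sealed class StoppableWorker
{
    private volatile bool canRun;
    private Thread thread;
    private int frames;

    // Number of loop iterations completed so far.
    public int Frames => Volatile.Read(ref frames);

    public void Start(Action onExit)
    {
        canRun = true; // set before the thread exists, so Stop() can never be lost
        thread = new Thread(() =>
        {
            try
            {
                while (canRun)
                {
                    // Stand-in for one read/decode/convert/show iteration.
                    Interlocked.Increment(ref frames);
                    Thread.Sleep(1);
                }
            }
            finally
            {
                // Counterpart of the form's finally block that re-enables the button.
                onExit();
            }
        });
        thread.IsBackground = true;
        thread.Start();
    }

    public void Stop() => canRun = false;

    public bool Join(int millisecondsTimeout) => thread.Join(millisecondsTimeout);
}
```

In the form, `onExit` would marshal back to the UI thread with `Invoke`, as `DeCoding` does in its `finally` block.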

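- 5. Final result

- Other notes:

- Building a Bitmap for every frame and handing it to a PictureBox is fairly inefficient; the SDL_NET version can render YUV directly.

- Audio is not handled here; for audio playback you can use DirectSound or SDL_NET.

- Because the code uses pointers, "Allow unsafe code" must be enabled in the project's build settings.

- There are few examples online of calling the FFmpeg API from C#; most are in C/C++. Because FFmpeg.AutoGen uses exactly the same names as FFmpeg, in most cases you can copy C/C++ code into C# and prefix the FFmpeg functions with `ffmpeg.`.

- FFmpeg.AutoGen also supports .NET Core and Mono.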
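Copying C code works directly for functions, but some FFmpeg "constants" are C macros. FFmpeg.AutoGen exposes the ones used above as `ffmpeg.AVERROR_EOF` and `ffmpeg.AVERROR(...)`, so C code such as `if (ret == AVERROR(EAGAIN))` becomes `if (ret == ffmpeg.AVERROR(ffmpeg.EAGAIN))`. To make these numbers less mysterious when they show up in logs, the sketch below re-derives them from the macro definitions in libavutil's headers (`MKTAG`, `FFERRTAG`, and `AVERROR` on platforms with positive errno values). `FFmpegErrorTags` is a hypothetical name, and the code is for illustration only.

```csharp
public static class FFmpegErrorTags
{
    // C: #define MKTAG(a,b,c,d) ((a) | ((b) << 8) | ((c) << 16) | ((unsigned)(d) << 24))
    public static int MkTag(char a, char b, char c, char d) =>
        unchecked((int)((uint)a | ((uint)b << 8) | ((uint)c << 16) | ((uint)d << 24)));

    // C: #define FFERRTAG(a, b, c, d) (-(int)MKTAG(a, b, c, d))
    // AVERROR_EOF is defined as FFERRTAG('E','O','F',' ').
    public static int FfErrTag(char a, char b, char c, char d) => unchecked(-MkTag(a, b, c, d));

    // C: #define AVERROR(e) (-(e))   (when errno values are positive)
    public static int AvError(int errorNumber) => -errorNumber;
}
```

So a log line reporting error -541478725 is AVERROR_EOF, i.e. the end of the stream was reached.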

The code is on GitHub:

https://github.com/vanjoge/CSharpVideoDemo
