Hadoop single-node and multi-node clusters: Hadoop + ZooKeeper + YARN + HBase

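Hadoop implements a distributed file system, the Hadoop Distributed File System (HDFS).

HDFS is highly fault tolerant and is designed to run on low-cost hardware. It provides high-throughput access to application data, which suits applications that work with very large data sets. HDFS relaxes some POSIX requirements so that file system data can be read as a stream (streaming access).

The core of the Hadoop framework is HDFS plus MapReduce: HDFS stores massive data sets, and MapReduce runs computations over them.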

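Lab environment:

Operating system: Red Hat Enterprise Linux 6.5, with iptables and SELinux disabled

Software: Hadoop 2.7.3 and JDK 7 (jdk-7u79, the tarball unpacked below)

Installing Hadoop and setting up the Java environment

Unpack the tarballs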

[root@server1 mnt]# useradd -u 800 hadoop

[root@server1 mnt]# id hadoop

uid=800(hadoop) gid=800(hadoop) groups=800(hadoop)

[root@server1 mnt]# mv hadoop-2.7.3.tar.gz jdk-7u79-linux-x64.tar.gz /home/hadoop/

[root@server1 mnt]# cd /home/hadoop/

[root@server1 hadoop]# su hadoop

[hadoop@server1 ~]$ ls

hadoop-2.7.3.tar.gz jdk-7u79-linux-x64.tar.gz

[hadoop@server1 ~]$ tar zxf jdk-7u79-linux-x64.tar.gz

[hadoop@server1 ~]$ ln -s jdk1.7.0_79/ java

[hadoop@server1 ~]$ vim .bash_profile

PATH=$PATH:$HOME/bin:~/java/bin

[hadoop@server1 ~]$ source .bash_profile

[hadoop@server1 ~]$ tar zxf hadoop-2.7.3.tar.gz

[hadoop@server1 ~]$ ln -s hadoop-2.7.3 hadoop

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim hadoop-env.sh

export JAVA_HOME=/home/hadoop/java

[hadoop@server1 hadoop]$ cd ..

[hadoop@server1 etc]$ ls

hadoop

[hadoop@server1 etc]$ cd ..

[hadoop@server1 hadoop]$ ls

bin etc include lib libexec LICENSE.txt NOTICE.txt README.txt sbin share

[hadoop@server1 hadoop]$ mkdir input

[hadoop@server1 hadoop]$ cp etc/hadoop/*.xml input/

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar grep input output 'dfs[a-z.]+'

[hadoop@server1 hadoop]$ cd output/

[hadoop@server1 output]$ ls

part-r-00000 _SUCCESS

[hadoop@server1 output]$ cat *

1 dfsadmin
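
For intuition, the bundled grep example extracts every match of the regular expression from the input files and counts how often each distinct match occurs. The same idea can be approximated locally with standard tools. This is only a sketch: the function name `count_matches` is made up here, and GNU grep's `-o` and `-h` options are assumed.

```shell
# Local approximation of the MapReduce "grep" example:
# "map" emits every regex match, "reduce" counts identical matches.
count_matches() {
  local pattern=$1
  shift
  grep -ohE "$pattern" "$@" | sort | uniq -c | sort -rn |
    awk '{ print $1 "\t" $2 }'
}

# Usage (from the Hadoop directory, after "cp etc/hadoop/*.xml input/"):
#   count_matches 'dfs[a-z.]+' input/*.xml
```

Comparing this with `cat output/*` is a quick sanity check that the standalone job ran over the files you expected.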

[hadoop@server1 hadoop]$ vim core-site.xml

[hadoop@server1 hadoop]$ vim hdfs-site.xml

[hadoop@server1 hadoop]$ vim slaves

172.25.20.1
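
The contents of the two XML files are not shown above. A minimal sketch of what a single-node (pseudo-distributed) setup typically needs follows. The NameNode port 9000 and the replication factor of 1 are assumptions, not values taken from the original, and the files are written to a scratch directory unless `CONF_DIR` is set.

```shell
# Write a minimal single-node HDFS configuration.
# CONF_DIR defaults to a scratch directory so the sketch can be tried safely;
# set CONF_DIR=~/hadoop/etc/hadoop to apply it for real.
CONF_DIR=${CONF_DIR:-$(mktemp -d)}

cat > "$CONF_DIR/core-site.xml" <<'EOF'
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <!-- assumption: NameNode on server1, RPC port 9000 -->
    <value>hdfs://172.25.20.1:9000</value>
  </property>
</configuration>
EOF

cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<configuration>
  <property>
    <name>dfs.replication</name>
    <!-- assumption: a single DataNode, so a single replica -->
    <value>1</value>
  </property>
</configuration>
EOF

# The only worker at this stage is server1 itself.
echo 172.25.20.1 > "$CONF_DIR/slaves"
echo "wrote configuration to $CONF_DIR"
```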

[hadoop@server1 hadoop]$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

[hadoop@server1 hadoop]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[hadoop@server1 hadoop]$ chmod 0600 ~/.ssh/authorized_keys
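
The `chmod 0600` step matters because `start-dfs.sh` logs in to every host in `slaves` over SSH, and sshd in its default strict mode ignores an `authorized_keys` file that other users can write to. A small check, sketched under the assumption that GNU `stat` is available (the helper name `has_mode` is hypothetical):

```shell
# Succeed only if the file has exactly the expected octal mode (GNU stat).
has_mode() {
  [ "$(stat -c '%a' "$1")" = "$2" ]
}

# Usage on server1; BatchMode makes ssh fail instead of asking for a password:
#   has_mode ~/.ssh/authorized_keys 600 && ssh -o BatchMode=yes localhost true
```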

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls

[hadoop@server1 hadoop]$ bin/hdfs dfs -put input/

Server1:

[hadoop@server1 hadoop]$ sbin/stop-dfs.sh

Stopping namenodes on [server1]

server1: stopping namenode

172.25.20.1: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

[root@server1 hadoop]# yum install nfs-utils -y

[root@server1 hadoop]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

[root@server1 hadoop]# vim /etc/exports

/home/hadoop *(rw,anonuid=800,anongid=800)

[root@server1 hadoop]# /etc/init.d/nfs start

[root@server1 hadoop]# showmount -e

Export list for server1:

/home/hadoop *

[root@server1 hadoop]# exportfs -v

/home/hadoop

[root@server1 hadoop]# su - hadoop

Server2, server3:

[root@server2 ~]# yum install -y nfs-utils

[root@server2 ~]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

[root@server2 ~]# useradd -u 800 hadoop

[root@server2 ~]# mount 172.25.20.1:/home/hadoop /home/hadoop/

[root@server2 ~]# su - hadoop
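
NFS identifies file owners by numeric UID and GID, which is why `useradd -u 800` is repeated on every node and why the export maps anonymous access to 800. A quick way to confirm the account lines up before mounting, as a sketch (the helper name `uid_matches` is hypothetical):

```shell
# Succeed only if the named account exists with the expected numeric UID.
uid_matches() {
  local user=$1 expected=$2 actual
  actual=$(id -u "$user" 2>/dev/null) || return 1
  [ "$actual" = "$expected" ]
}

# Usage on each node, before mounting the export:
#   uid_matches hadoop 800 || echo "hadoop must have UID 800 on this node" >&2
```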

Server1:

[hadoop@server1 ~]$ ssh 172.25.20.2

[hadoop@server1 ~]$ ssh 172.25.20.3

[hadoop@server1 ~]$ ssh server2

[hadoop@server1 ~]$ ssh server3

[hadoop@server1 ~]$ vim hadoop/etc/hadoop/slaves

172.25.20.2

172.25.20.3

[hadoop@server1 ~]$ cd /tmp/

[hadoop@server1 tmp]$ ls

hadoop-hadoop Jetty_0_0_0_0_50090_secondary____y6aanv

hsperfdata_hadoop Jetty_localhost_57450_datanode____ycac0k

Jetty_0_0_0_0_50070_hdfs____w2cu08

[hadoop@server1 tmp]$ rm -rf *

[hadoop@server1 ~]$ vim hadoop/etc/hadoop/hdfs-site.xml

[hadoop@server1 hadoop]$ vim core-site.xml
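
The edited values are again not shown. With two DataNodes, a replication factor of 2 is a plausible choice, and it is consistent with the `dfsadmin -report` output later in this walkthrough (about 400 MB used after a 200 MB file is stored). A sketch under that assumption, written to a scratch directory unless `CONF_DIR` is set:

```shell
# Multi-node variant: two DataNodes, two replicas per block (assumed value).
CONF_DIR=${CONF_DIR:-$(mktemp -d)}

cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
</configuration>
EOF

# Workers are now server2 and server3; server1 only runs the NameNode.
printf '%s\n' 172.25.20.2 172.25.20.3 > "$CONF_DIR/slaves"
```

Because `/home/hadoop` is shared over NFS, editing the files once on server1 is enough for every node to see them.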

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [server1]

server1: starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-server1.out

172.25.20.2: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server2.out

172.25.20.3: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server3.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-secondarynamenode-server1.out

[hadoop@server1 hadoop]$ jps

3356 Jps

3059 NameNode

3247 SecondaryNameNode

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -put etc/hadoop/ test

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount test output

[hadoop@server1 hadoop]$ bin/hdfs dfs -get output

Server1:

[hadoop@server1 hadoop]$ cp mapred-site.xml.template mapred-site.xml

[hadoop@server1 hadoop]$ vim mapred-site.xml

<property>
<name>mapreduce.framework.name</name>
<value>yarn</value>
</property>

[hadoop@server1 hadoop]$ vim yarn-site.xml

<property>
<name>yarn.nodemanager.aux-services</name>
<value>mapreduce_shuffle</value>
</property>

[hadoop@server1 hadoop]$ cd ..

[hadoop@server1 etc]$ cd ..

[hadoop@server1 hadoop]$ ls

bin include lib LICENSE.txt NOTICE.txt README.txt share

etc input libexec logs output sbin

[hadoop@server1 hadoop]$ sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-resourcemanager-server1.out

172.25.20.3: starting nodemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-server3.out

172.25.20.2: starting nodemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-server2.out

[hadoop@server1 hadoop]$ jps

3901 ResourceManager

4148 Jps

3059 NameNode

3247 SecondaryNameNode

Server2, server3:

[hadoop@server2 ~]$ jps

1559 Jps

1450 NodeManager

1277 DataNode

Adding a DataNode

Server4:

[root@server4 ~]# yum install nfs-utils -y

[root@server4 ~]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

[root@server4 ~]# useradd -u 800 hadoop

[root@server4 ~]# mount 172.25.20.1:/home/hadoop/ /home/hadoop/

[root@server4 ~]# su - hadoop

[hadoop@server4 ~]$ cd hadoop

[hadoop@server4 hadoop]$ cd etc/hadoop/

[hadoop@server4 hadoop]$ vim slaves

172.25.20.2

172.25.20.3

172.25.20.4

[hadoop@server4 hadoop]$ cd ..

[hadoop@server4 etc]$ cd ..

[hadoop@server4 hadoop]$ ls

bin include lib LICENSE.txt NOTICE.txt README.txt share

etc input libexec logs output sbin

[hadoop@server4 hadoop]$ sbin/hadoop-daemon.sh start datanode

starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server4.out

[hadoop@server4 hadoop]$ jps

1184 DataNode

1258 Jps

Storing data from a client:

[root@server5 ~]# yum install -y nfs-utils

[root@server5 ~]# useradd -u 800 hadoop

[root@server5 ~]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

[root@server5 ~]# mount 172.25.20.1:/home/hadoop/ /home/hadoop/

[root@server5 ~]# su - hadoop

[hadoop@server5 ~]$ cd hadoop

[hadoop@server5 hadoop]$ ls

bin include lib LICENSE.txt NOTICE.txt README.txt share

etc input libexec logs output sbin

[hadoop@server5 hadoop]$ dd if=/dev/zero of=bigfile bs=1M count=200

200+0 records in

200+0 records out

209715200 bytes (210 MB) copied, 1.1298 s, 186 MB/s

[hadoop@server5 hadoop]$ bin/hdfs dfs -put bigfile
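
To see what this upload costs the cluster, the arithmetic can be sketched as follows. It assumes the Hadoop 2.x default block size of 128 MiB and a replication factor of 2; neither value is stated in the original configuration.

```shell
# Estimate how a file is stored in HDFS.
# Assumptions: default 128 MiB block size, replication factor 2.
file_bytes=209715200                 # size reported by dd above
block_bytes=$((128 * 1024 * 1024))
replication=2

blocks=$(( (file_bytes + block_bytes - 1) / block_bytes ))  # ceiling division
raw_bytes=$(( file_bytes * replication ))

echo "blocks=$blocks raw_mib=$(( raw_bytes / 1024 / 1024 ))"
```

Under these assumptions the file occupies 2 blocks and 400 MiB of raw DataNode space, which is in line with the DFS Used figures in the report further down.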

Removing a DataNode

Server1:

[hadoop@server1 hadoop]$ vim hdfs-site.xml

[hadoop@server1 hadoop]$ vim hosts-exclude

[hadoop@server1 hadoop]$ vim slaves
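
The three edits are not shown. Decommissioning normally works by pointing `dfs.hosts.exclude` at a file that lists the node to retire, then removing that node from `slaves`. A sketch of the three files follows; the exclude-file path and the replication value are assumptions, and everything is written to a scratch directory unless `CONF_DIR` is set.

```shell
# Files involved in decommissioning server4 (172.25.20.4).
CONF_DIR=${CONF_DIR:-$(mktemp -d)}
# Assumed location; any path the NameNode can read works.
EXCLUDE_FILE=${EXCLUDE_FILE:-/home/hadoop/hadoop/etc/hadoop/hosts-exclude}

cat > "$CONF_DIR/hdfs-site.xml" <<EOF
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.hosts.exclude</name>
    <value>$EXCLUDE_FILE</value>
  </property>
</configuration>
EOF

# Node to retire, one host per line.
echo 172.25.20.4 > "$CONF_DIR/hosts-exclude"
# Remaining workers.
printf '%s\n' 172.25.20.2 172.25.20.3 > "$CONF_DIR/slaves"
```

The NameNode only rereads the exclude file when `dfsadmin -refreshNodes` is run, which is the next step in the transcript.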

[hadoop@server1 hadoop]$ cd ../..

[hadoop@server1 hadoop]$ ls

bigfile etc input libexec logs output sbin

bin include lib LICENSE.txt NOTICE.txt README.txt share

[hadoop@server1 hadoop]$ bin/hdfs dfsadmin -refreshNodes

Refresh nodes successful

[hadoop@server1 hadoop]$ bin/hdfs dfsadmin -report

Configured Capacity: 39322402823 (36.62 GB)

Present Capacity: 35416879111 (32.98 GB)

DFS Remaining: 34993430528 (32.59 GB)

DFS Used: 423448583 (403.83 MB)

DFS Used%: 1.20%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

Live datanodes (3):

Name: 172.25.20.2:50010 (server2)

Hostname: server2

Decommission Status : Normal

Configured Capacity: 19593555968 (18.25 GB)

DFS Used: 211714048 (201.91 MB)

Non DFS Used: 1952763904 (1.82 GB)

DFS Remaining: 17429078016 (16.23 GB)

DFS Used%: 1.08%

DFS Remaining%: 88.95%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Oct 18 15:03:54 CST 2018

Name: 172.25.20.4:50010 (server4)

Hostname: server4

Decommission Status : Decommission in progress

Configured Capacity: 19593555968 (18.25 GB)

DFS Used: 135290887 (129.02 MB)

Non DFS Used: 1948409849 (1.81 GB)

DFS Remaining: 17509855232 (16.31 GB)

DFS Used%: 0.69%

DFS Remaining%: 89.37%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Oct 18 15:03:54 CST 2018

Name: 172.25.20.3:50010 (server3)

Hostname: server3

Decommission Status : Normal

Configured Capacity: 19593555968 (18.25 GB)

DFS Used: 76443648 (72.90 MB)

Non DFS Used: 1952759808 (1.82 GB)

DFS Remaining: 17564352512 (16.36 GB)

DFS Used%: 0.39%

DFS Remaining%: 89.64%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Oct 18 15:03:53 CST 2018

Decommissioning datanodes (1):

Name: 172.25.20.4:50010 (server4)

Hostname: server4

Decommission Status : Decommission in progress

Configured Capacity: 19593555968 (18.25 GB)

DFS Used: 135290887 (129.02 MB)

Non DFS Used: 1948409849 (1.81 GB)

DFS Remaining: 17509855232 (16.31 GB)

DFS Used%: 0.69%

DFS Remaining%: 89.37%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Oct 18 15:03:54 CST 2018

[hadoop@server1 hadoop]$ bin/hdfs dfsadmin -report

Configured Capacity: 39322419200 (36.62 GB)

Present Capacity: 35416891399 (32.98 GB)

DFS Remaining: 34858160128 (32.46 GB)

DFS Used: 558731271 (532.85 MB)

DFS Used%: 1.58%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

Live datanodes (3):

Name: 172.25.20.2:50010 (server2)

Hostname: server2

Decommission Status : Normal

Configured Capacity: 19593555968 (18.25 GB)

DFS Used: 211714048 (201.91 MB)

Non DFS Used: 1952763904 (1.82 GB)

DFS Remaining: 17429078016 (16.23 GB)

DFS Used%: 1.08%

DFS Remaining%: 88.95%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Oct 18 15:04:45 CST 2018

Name: 172.25.20.4:50010 (server4)

Hostname: server4

Decommission Status : Decommissioned

Configured Capacity: 19593555968 (18.25 GB)

DFS Used: 135307264 (129.04 MB)

Non DFS Used: 1948393472 (1.81 GB)

DFS Remaining: 17509855232 (16.31 GB)

DFS Used%: 0.69%

DFS Remaining%: 89.37%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Oct 18 15:04:45 CST 2018

Name: 172.25.20.3:50010 (server3)

Hostname: server3

Decommission Status : Normal

Configured Capacity: 19593555968 (18.25 GB)

DFS Used: 211709959 (201.90 MB)

Non DFS Used: 1952763897 (1.82 GB)

DFS Remaining: 17429082112 (16.23 GB)

DFS Used%: 1.08%

DFS Remaining%: 88.95%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Oct 18 15:04:47 CST 2018
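
The two reports differ mainly in server4's status, which moves from "Decommission in progress" to "Decommissioned". Rather than reading the full output each time, the relevant lines can be filtered out. A sketch, assuming the report format shown above (the function name `decommission_status` is made up here):

```shell
# Read "hdfs dfsadmin -report" output on stdin and print one
# "<hostname> <decommission status>" line per DataNode.
decommission_status() {
  awk -F': *' '
    /^Hostname:/           { host = $2 }
    /^Decommission Status/ { print host, $2 }
  ' | sort -u   # a node being decommissioned is listed twice in the report
}

# Usage on server1:
#   bin/hdfs dfsadmin -report | decommission_status
```

It is safe to stop the DataNode on server4 only once its line reads "Decommissioned".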

Server4:

[hadoop@server4 hadoop]$ sbin/hadoop-daemon.sh stop datanode

stopping datanode

Server1:

[hadoop@server1 hadoop]$ sbin/stop-dfs.sh

[hadoop@server1 hadoop]$ sbin/stop-yarn.sh

[hadoop@server1 hadoop]$ cd /tmp/

[hadoop@server1 tmp]$ rm -rf *

[hadoop@server1 tmp]$ cd

[hadoop@server1 ~]$ tar zxf zookeeper-3.4.9.tar.gz

[hadoop@server1 ~]$ cd zookeeper-3.4.9

[hadoop@server1 zookeeper-3.4.9]$ cd conf/

[hadoop@server1 conf]$ ls

configuration.xsl log4j.properties zoo_sample.cfg

[hadoop@server1 conf]$ cp zoo_sample.cfg zoo.cfg

[hadoop@server1 conf]$ vim zoo.cfg

server.1=172.25.20.2:2888:3888

server.2=172.25.20.3:2888:3888

server.3=172.25.20.4:2888:3888
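
Only the three `server.N` lines are added; the rest of `zoo.cfg` comes from `zoo_sample.cfg`. For reference, a sketch of the complete file, assuming the sample defaults were left untouched (in particular `dataDir=/tmp/zookeeper`, which matches the `myid` path used below). It is written to a scratch directory unless `CONF_DIR` is set.

```shell
# Complete zoo.cfg for the three-node ensemble.
CONF_DIR=${CONF_DIR:-$(mktemp -d)}

cat > "$CONF_DIR/zoo.cfg" <<'EOF'
# Defaults carried over from zoo_sample.cfg (assumed unchanged).
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/tmp/zookeeper
clientPort=2181
# Ensemble members: server.<myid>=<host>:<peer port>:<election port>
server.1=172.25.20.2:2888:3888
server.2=172.25.20.3:2888:3888
server.3=172.25.20.4:2888:3888
EOF
```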

Server2, server3, server4:

[hadoop@server2 ~]$ cd /tmp/

[hadoop@server2 tmp]$ ls

hadoop-hadoop Jetty_localhost_37472_datanode____y6l3ma

hsperfdata_hadoop Jetty_localhost_39030_datanode____vqwn2m

[hadoop@server2 tmp]$ rm -rf *

[hadoop@server2 tmp]$ mkdir /tmp/zookeeper

[hadoop@server2 tmp]$ vim /tmp/zookeeper/myid

1

[hadoop@server2 tmp]$ cd

[hadoop@server2 ~]$ cd zookeeper-3.4.9

[hadoop@server2 zookeeper-3.4.9]$ bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

Server3:

[hadoop@server3 tmp]$ vim /tmp/zookeeper/myid

2

Server4:

[hadoop@server4 tmp]$ vim /tmp/zookeeper/myid

3
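
Each node's `myid` must equal the N of its own `server.N` line in `zoo.cfg`. Instead of typing the number by hand on every host, it can be looked up from the shared configuration. A sketch (the helper name `myid_for` is hypothetical):

```shell
# Print the ZooKeeper id that zoo.cfg assigns to the given IP address.
myid_for() {
  local cfg=$1 ip=$2
  awk -F= -v ip="$ip" '
    /^server\.[0-9]+=/ {
      split($2, addr, ":")            # addr[1] is the host part
      if (addr[1] == ip) {
        sub(/^server\./, "", $1)      # keep only the numeric id
        print $1
      }
    }' "$cfg"
}

# Usage on server3:
#   mkdir -p /tmp/zookeeper
#   myid_for ~/zookeeper-3.4.9/conf/zoo.cfg 172.25.20.3 > /tmp/zookeeper/myid
```

Once all three nodes are started, `bin/zkServer.sh status` on each one reports whether it is the leader or a follower.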

Server1:

[hadoop@server1 conf]$ cd

[hadoop@server1 ~]$ cd hadoop/etc/hadoop/

[hadoop@server1 hadoop]$ vim core-site.xml

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<property>
<name>dfs.ha.fencing.methods</name>
<value>
sshfence
shell(/bin/true)
</value>
</property>

[hadoop@server1 hadoop]$ vim slaves

172.25.20.2

172.25.20.3

172.25.20.4
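
Apart from the two fencing methods, the HA settings in `core-site.xml` and `hdfs-site.xml` are not shown. The sketch below lists the properties a QJM-based setup with automatic failover usually needs. The nameservice ID `masters`, the NameNode IDs `h1` and `h2`, port 9000, and the JournalNode directory are assumptions; the hosts follow the roles seen in the transcript (NameNodes on server1 and server5; ZooKeeper and JournalNodes on server2 to server4). The fencing methods (`sshfence`, `shell(/bin/true)`) belong in the same `hdfs-site.xml` under `dfs.ha.fencing.methods`.

```shell
# Generate HA core-site.xml and hdfs-site.xml into CONF_DIR (scratch by default).
CONF_DIR=${CONF_DIR:-$(mktemp -d)}
NS=masters   # assumed nameservice ID
ZK=172.25.20.2:2181,172.25.20.3:2181,172.25.20.4:2181
JN="qjournal://172.25.20.2:8485;172.25.20.3:8485;172.25.20.4:8485/$NS"

# Print one <property> element.
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

{
  echo '<configuration>'
  prop fs.defaultFS "hdfs://$NS"
  prop ha.zookeeper.quorum "$ZK"
  echo '</configuration>'
} > "$CONF_DIR/core-site.xml"

{
  echo '<configuration>'
  prop dfs.nameservices "$NS"
  prop "dfs.ha.namenodes.$NS" h1,h2
  prop "dfs.namenode.rpc-address.$NS.h1" 172.25.20.1:9000
  prop "dfs.namenode.rpc-address.$NS.h2" 172.25.20.5:9000
  prop dfs.namenode.shared.edits.dir "$JN"
  prop dfs.journalnode.edits.dir /tmp/journaldata   # assumed path
  prop dfs.ha.automatic-failover.enabled true
  prop "dfs.client.failover.proxy.provider.$NS" \
    org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider
  echo '</configuration>'
} > "$CONF_DIR/hdfs-site.xml"
```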

[hadoop@server1 hadoop]$ cd ../..

Before the NameNode is formatted, ZooKeeper must be running on server2, server3 and server4 (started as shown above), and a JournalNode must be running on each of those three hosts (sbin/hadoop-daemon.sh start journalnode). This is why start-dfs.sh later reports the JournalNodes as already running.

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ cd /tmp/

[hadoop@server1 tmp]$ scp -r hadoop-hadoop 172.25.20.5:/tmp

[hadoop@server1 tmp]$ cd -

/home/hadoop/hadoop

[hadoop@server1 hadoop]$ bin/hdfs zkfc -formatZK

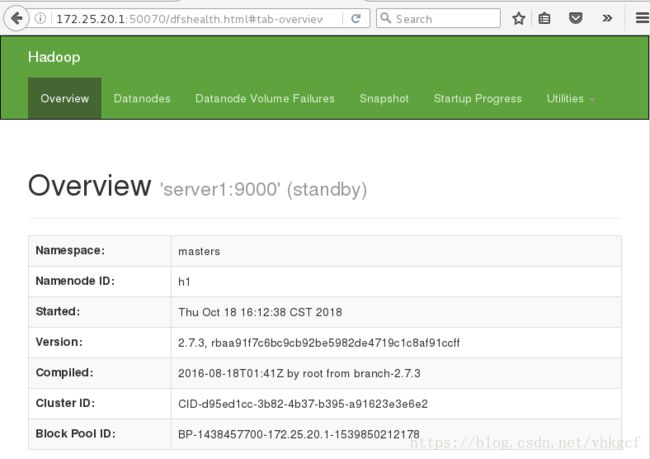

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [server1 server5]

server5: starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-server5.out

server1: starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-server1.out

172.25.20.3: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server3.out

172.25.20.2: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server2.out

172.25.20.4: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server4.out

Starting journal nodes [172.25.20.2 172.25.20.3 172.25.20.4]

172.25.20.3: journalnode running as process 1468. Stop it first.

172.25.20.2: journalnode running as process 1449. Stop it first.

172.25.20.4: journalnode running as process 1463. Stop it first.

Starting ZK Failover Controllers on NN hosts [server1 server5]

server5: starting zkfc, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-zkfc-server5.out

server1: starting zkfc, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-zkfc-server1.out

[hadoop@server1 hadoop]$ jps

2550 NameNode

2844 DFSZKFailoverController

2893 Jps

Server2:

[hadoop@server2 hadoop]$ jps

1387 QuorumPeerMain

1449 JournalNode

1533 DataNode

1633 Jps

Server3:

[hadoop@server3 hadoop]$ jps

1550 DataNode

1370 QuorumPeerMain

1650 Jps

1468 JournalNode

Server4:

[hadoop@server4 hadoop]$ jps

1643 Jps

1372 QuorumPeerMain

1463 JournalNode

1543 DataNode

Server5:

[hadoop@server5 tmp]$ jps

1718 NameNode

1881 Jps

1815 DFSZKFailoverController

Server5:

[hadoop@server5 hadoop]$ kill 1718

[hadoop@server5 hadoop]$ sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-server5.out

Server1:

[hadoop@server1 hadoop]$ vim mapred-site.xml

[hadoop@server1 hadoop]$ vim yarn-site.xml
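
The `yarn-site.xml` changes for ResourceManager HA are not shown. A sketch of the usual property set follows. The cluster ID `RM_CLUSTER` and the IDs `rm1` and `rm2` are assumptions; the hosts follow the transcript, where ResourceManagers run on server1 and server5 and ZooKeeper on server2 to server4.

```shell
# Generate an HA yarn-site.xml into CONF_DIR (scratch by default).
CONF_DIR=${CONF_DIR:-$(mktemp -d)}
ZK=172.25.20.2:2181,172.25.20.3:2181,172.25.20.4:2181

# Print one <property> element.
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

{
  echo '<configuration>'
  prop yarn.nodemanager.aux-services mapreduce_shuffle
  prop yarn.resourcemanager.ha.enabled true
  prop yarn.resourcemanager.cluster-id RM_CLUSTER   # assumed ID
  prop yarn.resourcemanager.ha.rm-ids rm1,rm2
  prop yarn.resourcemanager.hostname.rm1 172.25.20.1
  prop yarn.resourcemanager.hostname.rm2 172.25.20.5
  # Keep application state in ZooKeeper so a new active RM can recover it.
  prop yarn.resourcemanager.recovery.enabled true
  prop yarn.resourcemanager.store.class \
    org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore
  prop yarn.resourcemanager.zk-address "$ZK"
  echo '</configuration>'
} > "$CONF_DIR/yarn-site.xml"
```

`start-yarn.sh` starts a ResourceManager only on the host where it is run, which is why the second one is started by hand on server5 in the next step.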

[hadoop@server1 hadoop]$ cd ../..

[hadoop@server1 hadoop]$ sbin/start-yarn.sh

[hadoop@server1 hadoop]$ jps

3118 ResourceManager

3194 Jps

2550 NameNode

2844 DFSZKFailoverController

Server5:

[hadoop@server5 hadoop]$ sbin/yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-resourcemanager-server5.out

[hadoop@server5 hadoop]$ jps

2597 Jps

2221 NameNode

1815 DFSZKFailoverController

2546 ResourceManager

Server1:

[hadoop@server1 hadoop]$ kill 3118

[hadoop@server1 hadoop]$ sbin/yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-resourcemanager-server1.out

Server1:

[hadoop@server1 ~]$ tar zxf hbase-1.2.4-bin.tar.gz

[hadoop@server1 ~]$ cd hbase-1.2.4

[hadoop@server1 hbase-1.2.4]$ cd conf/

[hadoop@server1 conf]$ vim hbase-env.sh

export JAVA_HOME=/home/hadoop/java

export HBASE_MANAGES_ZK=false

export HADOOP_HOME=/home/hadoop/hadoop

[hadoop@server1 conf]$ vim hbase-site.xml

[hadoop@server1 conf]$ vim regionservers

172.25.20.2

172.25.20.3

172.25.20.4
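
The contents of `hbase-site.xml` are not shown. For a distributed HBase on top of the HA HDFS and the external ZooKeeper ensemble, a minimal sketch could look like this. The nameservice ID `masters` is an assumption and must match whatever `fs.defaultFS` uses; the `/hbase` root directory matches the listing that appears later in HDFS.

```shell
# Generate a minimal distributed hbase-site.xml into CONF_DIR (scratch by default).
CONF_DIR=${CONF_DIR:-$(mktemp -d)}
NS=masters   # assumed HDFS nameservice ID

# Print one <property> element.
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

{
  echo '<configuration>'
  prop hbase.rootdir "hdfs://$NS/hbase"
  prop hbase.cluster.distributed true
  # External ensemble, since HBASE_MANAGES_ZK=false in hbase-env.sh.
  prop hbase.zookeeper.quorum 172.25.20.2,172.25.20.3,172.25.20.4
  echo '</configuration>'
} > "$CONF_DIR/hbase-site.xml"
```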

[hadoop@server1 hbase-1.2.4]$ bin/start-hbase.sh

starting master, logging to /home/hadoop/hbase-1.2.4/bin/../logs/hbase-hadoop-master-server1.out

172.25.20.2: starting regionserver, logging to /home/hadoop/hbase-1.2.4/bin/../logs/hbase-hadoop-regionserver-server2.out

172.25.20.4: starting regionserver, logging to /home/hadoop/hbase-1.2.4/bin/../logs/hbase-hadoop-regionserver-server4.out

172.25.20.3: starting regionserver, logging to /home/hadoop/hbase-1.2.4/bin/../logs/hbase-hadoop-regionserver-server3.out

[hadoop@server1 hbase-1.2.4]$ jps

3454 ResourceManager

4379 HMaster

2550 NameNode

2844 DFSZKFailoverController

4632 Jps

[hadoop@server1 hbase-1.2.4]$ bin/hbase shell

hbase(main):001:0> create 'test', 'cf'

hbase(main):002:0> list 'test'

hbase(main):003:0> put 'test', 'row1', 'cf:a', 'value1'

hbase(main):004:0> put 'test', 'row2', 'cf:b', 'value2'

hbase(main):005:0> put 'test', 'row3', 'cf:c', 'value3'

hbase(main):006:0> scan 'test'

ROW COLUMN+CELL

row1 column=cf:a, timestamp=1539856660230, value=value1

row2 column=cf:b, timestamp=1539856665464, value=value2

row3 column=cf:c, timestamp=1539856669820, value=value3

3 row(s) in 0.1010 seconds

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls /

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2018-10-18 17:55 /hbase

drwxr-xr-x - hadoop supergroup 0 2018-10-18 16:16 /user

[hadoop@server1 hadoop]$ jps

3454 ResourceManager

4882 Jps

4379 HMaster

2550 NameNode

2844 DFSZKFailoverController

[hadoop@server1 hadoop]$ kill 4379

Server5:

[hadoop@server5 hbase-1.2.4]$ bin/hbase-daemon.sh start master