Text analysis in practice: TFIDF/LDA/Word2vec

(I felt the earlier version was not good enough, so I revised it on 2016-07-21.)

A word up front: the best learning material is the official documentation and API reference:

http://radimrehurek.com/gensim/tutorial.html

http://radimrehurek.com/gensim/apiref.html

Parts of the content below are taken from the official documentation.

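This post uses TFIDF/LDA to classify Chinese documents by topic (scikit-learn also ships a TFIDF implementation). Chinese text must first be segmented into words; the segmented text is then turned into vectors, and topic clustering is done on those vectors.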

The scikit-learn documentation:

http://scikit-learn.org/stable/modules/feature_extraction.html#text-feature-extraction

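The idea behind TFIDF: a word's importance to a document grows with the number of times it occurs in that document and shrinks with the number of documents in the corpus that contain it.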
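To make the idea concrete, here is a minimal pure-Python sketch of the weighting scikit-learn's `TfidfVectorizer` applies by default (`smooth_idf=True`, `norm='l2'`): idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) the number of documents containing t, multiplied by the raw count and then L2-normalised per document. The token lists are an assumption: they are simply the non-zero features of each row in the case-1 log further down, i.e. what the author's segmentation helper seems to have produced.

```python
import math
from collections import Counter

# Assumed token lists: the non-zero features of each row in the case-1 output.
docs = [
    ["丈夫", "抢劫", "要判"],
    ["丈夫", "借名", "买车", "离婚", "能否", "要求", "分割"],
    ["妻子", "离婚", "丈夫", "赌债", "是否", "偿还"],
    ["夫妻", "离婚", "请求", "支持"],
]


def smooth_idf(docs):
    """idf(t) = ln((1 + n) / (1 + df(t))) + 1, scikit-learn's default smoothing."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    return {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}


def tfidf_row(doc, idf):
    """Raw term count times idf, then divided by the row's L2 norm."""
    weights = {t: c * idf[t] for t, c in Counter(doc).items()}
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {t: w / norm for t, w in weights.items()}


idf = smooth_idf(docs)
print(round(idf["丈夫"], 8), round(idf["抢劫"], 8))  # 1.22314355 1.91629073
print(tfidf_row(docs[0], idf))
```

'丈夫' occurs in three of the four documents and therefore gets the lower idf, matching the 1.22314355 in the logged `idf_`; words occurring in only one document get 1.91629073.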

scikit-learn provides a TFIDF implementation; I will illustrate it with two cases.

1. Generating the TFIDF vocabulary and weights from the raw corpus

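```python
import logging

import jieba
from sklearn.feature_extraction.text import TfidfVectorizer

logging.basicConfig(level=logging.INFO)


def word_cut(text):
    # Hypothetical stand-in for the author's word_process.word_cut (not shown):
    # segment with jieba and join with spaces so TfidfVectorizer can tokenize it.
    # The original helper apparently also removed stop words, so the feature
    # list may differ from the logged output below.
    return ' '.join(jieba.cut(text))


# Default token_pattern keeps only tokens of 2+ characters, so single-character words are dropped
tfidf_vectorizer = TfidfVectorizer()
real_test_raw = ['丈夫他抢劫杀人罪了跑路的话要判多少年', '丈夫借名买车离婚时能否要求分割',
                 '妻子离婚时丈夫欠的赌债是否要偿还?', '夫妻一方下落不明 离婚请求获支持']
real_documents = [word_cut(item_text) for item_text in real_test_raw]

real_vec = tfidf_vectorizer.fit_transform(real_documents)
logging.info(tfidf_vectorizer.idf_)                     # weight (idf) of each feature
logging.info(tfidf_vectorizer.get_feature_names_out())  # feature words (get_feature_names() in scikit-learn < 1.0)
logging.info(real_vec.toarray())                        # vector representation of the four sentences
```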

Explanation: the Chinese sentences are first segmented into words and then converted into vectors. The logged output is:

```text
[ 1.22314355 1.91629073 1.91629073 1.91629073 1.91629073 1.91629073 1.91629073 1.91629073 1.91629073 1.91629073 1.22314355 1.91629073
  1.91629073 1.91629073 1.91629073 1.91629073]
['丈夫', '买车', '借名', '偿还', '分割', '夫妻', '妻子', '抢劫', '支持', '是否', '离婚', '能否', '要判', '要求', '请求', '赌债']
[[ 0.41137791 0. 0. 0. 0. 0. 0. 0.64450299 0. 0. 0. 0. 0.64450299 0. 0. 0. ]
 [ 0.2646963 0.4146979 0.4146979 0. 0.4146979 0. 0. 0. 0. 0. 0.2646963 0.4146979 0. 0.4146979 0. 0. ]
 [ 0.29088811 0. 0. 0.45573244 0. 0. 0.45573244 0. 0. 0.45573244 0.29088811 0. 0. 0. 0. 0.45573244]
 [ 0. 0. 0. 0. 0. 0.5417361 0. 0. 0.5417361 0. 0.34578314 0. 0. 0. 0.5417361 0. ]]
```

2. Applying the TFIDF model with a vocabulary you specify yourself

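```python
import logging

import jieba
from sklearn.feature_extraction.text import TfidfVectorizer

logging.basicConfig(level=logging.INFO)

# Fixed vocabulary: word -> column index; words outside it are ignored
vocabulary = {'丈夫': 0, '买车': 1, '借名': 2, '偿还': 3, '分割': 4, '夫妻': 5,
              '妻子': 6, '抢劫': 7, '支持': 8, '离婚': 9, '赌债': 10}
tfidf_vectorizer = TfidfVectorizer(vocabulary=vocabulary)

real_test_raw = ['丈夫他抢劫杀人罪了跑路的话要判多少年', '丈夫借名买车离婚时能否要求分割',
                 '妻子离婚时丈夫欠的赌债是否要偿还?', '夫妻一方下落不明 离婚请求获支持']
# jieba segmentation joined with spaces: a stand-in for the author's
# word_process.word_cut helper, which is not shown
real_documents = [' '.join(jieba.cut(item_text)) for item_text in real_test_raw]

real_vec = tfidf_vectorizer.fit_transform(real_documents)
logging.info(tfidf_vectorizer.idf_)
logging.info(tfidf_vectorizer.get_feature_names_out())  # get_feature_names() in scikit-learn < 1.0
logging.info(real_vec.toarray())
```

The logged output: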

```text
[ 1.22314355 1.91629073 1.91629073 1.91629073 1.91629073 1.91629073 1.91629073 1.91629073 1.91629073 1.22314355 1.91629073]
['丈夫', '买车', '借名', '偿还', '分割', '夫妻', '妻子', '抢劫', '支持', '离婚', '赌债']
[[ 0.53802897 0. 0. 0. 0. 0. 0. 0.84292635 0. 0. 0. ]
 [ 0.32679768 0.51199172 0.51199172 0. 0.51199172 0. 0. 0. 0. 0.32679768 0. ]
 [ 0.32679768 0. 0. 0.51199172 0. 0. 0.51199172 0. 0. 0.32679768 0.51199172]
 [ 0. 0. 0. 0. 0. 0.64450299 0. 0. 0.64450299 0.41137791 0. ]]
```
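Comparing the two runs: the idf values of the shared words are identical (idf depends only on how many of the four documents contain a word), but the vector entries change. That is because the L2 normalisation is computed only over the words that survive the fixed vocabulary. A small pure-Python sketch of that effect, using the idf values copied from the logs and assuming the first sentence's features are the three non-zero ones in case 1:

```python
import math

# idf values copied from the logs (identical in both runs).
idf = {"丈夫": 1.22314355, "抢劫": 1.91629073, "要判": 1.91629073}
# The fixed vocabulary from case 2; '要判' is not in it.
fixed_vocab = {"丈夫", "买车", "借名", "偿还", "分割", "夫妻", "妻子", "抢劫", "支持", "离婚", "赌债"}


def l2_tfidf(tokens, idf, vocabulary=None):
    """TF-IDF weights for one document, L2-normalised over the kept words only."""
    kept = [t for t in tokens if vocabulary is None or t in vocabulary]
    weights = {t: kept.count(t) * idf[t] for t in set(kept)}
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {t: w / norm for t, w in weights.items()}


first_doc = ["丈夫", "抢劫", "要判"]  # assumed features of the first sentence
print(l2_tfidf(first_doc, idf))               # case 1: all three words count towards the norm
print(l2_tfidf(first_doc, idf, fixed_vocab))  # case 2: '要判' dropped, so '丈夫'/'抢劫' grow
```

Dropping '要判' shrinks the norm, which is why the first row goes from 0.411/0.645 to 0.538/0.843.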

Here is the gensim example:

```python
import logging

import gensim
import jieba
from gensim import corpora, models

logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)


def word_list(text):
    # Hypothetical stand-in for the author's raw_word_process.word_list (not shown):
    # jieba segmentation, keeping words longer than one character. The original
    # helper apparently also removed stop words, so results will differ somewhat.
    return [w for w in jieba.cut(text) if len(w) > 1]


# Chinese text has to be segmented into words first
raw_documents = [
    '无偿居间介绍买卖毒品的行为应如何定性',
    '吸毒男动态持有大量毒品的行为该如何认定',
    '如何区分是非法种植毒品原植物罪还是非法制造毒品罪',
    '为毒贩贩卖毒品提供帮助构成贩卖毒品罪',
    '将自己吸食的毒品原价转让给朋友吸食的行为该如何认定',
    '为获报酬帮人购买毒品的行为该如何认定',
    '毒贩出狱后再次够买毒品途中被抓的行为认定',
    '虚夸毒品功效劝人吸食毒品的行为该如何认定',
    '妻子下落不明丈夫又与他人登记结婚是否为无效婚姻',
    '一方未签字办理的结婚登记是否有效',
    '夫妻双方1990年按农村习俗举办婚礼没有结婚证 一方可否起诉离婚',
    '结婚前对方父母出资购买的住房写我们二人的名字有效吗',
    '身份证被别人冒用无法登记结婚怎么办?',
    '同居后又与他人登记结婚是否构成重婚罪',
    '未办登记只举办结婚仪式可起诉离婚吗',
    '同居多年未办理结婚登记,是否可以向法院起诉要求离婚'
]

corpora_documents = [word_list(item_text) for item_text in raw_documents]

# Word similarity. gensim >= 4.0 names the dimension `vector_size` (it was `size`)
# and moves most_similar to model.wv. The default min_count=5 drops words seen fewer
# than five times, which is likely why only two neighbours appear in the output.
model = gensim.models.Word2Vec(corpora_documents, vector_size=1000)
print(model.wv.most_similar('毒品', topn=10))

dictionary = corpora.Dictionary(corpora_documents)
print(dictionary)
corpus = [dictionary.doc2bow(text) for text in corpora_documents]
print(corpus)

tfidf = models.TfidfModel(corpus)
corpus_tfidf = tfidf[corpus]

# Judging by the results, LSI gives the better topic classification here
print('#############' * 4)
# Note: both models are trained on the raw bag-of-words `corpus` but applied to
# `corpus_tfidf`; the gensim tutorial trains LSI on corpus_tfidf instead.
lsi = gensim.models.lsimodel.LsiModel(corpus=corpus, id2word=dictionary, num_topics=2)
corpus_lsi = lsi[corpus_tfidf]
lsi.print_topics(2)
for doc in corpus_lsi:
    print(doc)

print('#############' * 4)
lda = gensim.models.ldamodel.LdaModel(corpus=corpus, id2word=dictionary, num_topics=2, update_every=0, passes=1)
corpus_lda = lda[corpus_tfidf]
lda.print_topics(2)
for doc in corpus_lda:
    print(doc)
```

Output (logging and print output got interleaved, so the order differs from the code):

```text
topic #0(4.710): 0.625*"毒品" + 0.487*"行为" + 0.422*"认定" + 0.202*"吸食" + 0.101*"原价" + 0.101*"朋友" + 0.101*"转让" + 0.091*"毒贩" + 0.085*"出狱" + 0.085*"途中"
topic #1(3.763): -0.405*"离婚" + -0.405*"起诉" + -0.295*"登记" + -0.294*"举办" + -0.281*"是否" + -0.205*"结婚" + -0.179*"习俗" + -0.179*"可否" + -0.179*"夫妻" + -0.179*"农村"
[('行为', 0.05141765624284744), ('认定', -0.03172670304775238)]
Dictionary(66 unique tokens: ['离婚', '未办理', '重婚罪', '农村', '父母']...)
[[(0, 1), (1, 1), (2, 1), (3, 1), (4, 1), (5, 1), (6, 1)], [(1, 1), (2, 1), (7, 1), (8, 1), (9, 1), (10, 1), (11, 1)], [(2, 2), (12, 1), (13, 1), (14, 1), (15, 1)], [(16, 1), (17, 1), (18, 1), (19, 1), (20, 2)], [(1, 1), (2, 1), (8, 1), (21, 1), (22, 1), (23, 1), (24, 2)], [(1, 1), (2, 1), (8, 1), (25, 1), (26, 1)], [(1, 1), (2, 1), (8, 1), (17, 1), (27, 1), (28, 1), (29, 1)], [(1, 1), (2, 1), (8, 1), (30, 1), (31, 1)], [(32, 1), (33, 1), (34, 1), (35, 1), (36, 1), (37, 1)], [(36, 1), (38, 1), (39, 1), (40, 1)], [(41, 1), (42, 1), (43, 1), (44, 1), (45, 1), (46, 1), (47, 1), (48, 1), (49, 1), (50, 1), (51, 1)], [(26, 1), (32, 1), (52, 1), (53, 1), (54, 1), (55, 1), (56, 1)], [(32, 1), (35, 1), (57, 1), (58, 1), (59, 1)], [(18, 1), (32, 1), (35, 1), (36, 1), (60, 1)], [(35, 1), (42, 1), (48, 1), (51, 1), (61, 1), (62, 1)], [(36, 1), (40, 1), (42, 1), (48, 1), (63, 1), (64, 1), (65, 1)]]
```
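The printed `corpus` is gensim's sparse bag-of-words format: every document becomes a list of `(token_id, count)` pairs, so `(2, 2)` in the third document means token 2 occurs twice there. A minimal pure-Python sketch of that conversion follows; the two token lists are made up for illustration, and ids are assigned in order of first appearance, which is not necessarily the order gensim's `Dictionary` uses.

```python
from collections import Counter


def build_dictionary(docs):
    """Give every distinct token an integer id, in order of first appearance."""
    token2id = {}
    for doc in docs:
        for token in doc:
            token2id.setdefault(token, len(token2id))
    return token2id


def doc2bow(doc, token2id):
    """Sorted (token_id, count) pairs; tokens missing from the dictionary are skipped."""
    counts = Counter(token2id[t] for t in doc if t in token2id)
    return sorted(counts.items())


# Hypothetical, already-segmented documents
docs = [["毒品", "行为", "认定", "毒品"], ["结婚", "登记", "是否", "有效"]]
token2id = build_dictionary(docs)
corpus = [doc2bow(d, token2id) for d in docs]
print(corpus)  # [[(0, 2), (1, 1), (2, 1)], [(3, 1), (4, 1), (5, 1), (6, 1)]]
```

Like gensim's `Dictionary.doc2bow` with its default `allow_update=False`, unknown words are simply ignored rather than added.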

```text
####################################################
[(0, 0.29872994703278144), (1, 0.0032136955893949659)]
[(0, 0.41683297103876943), (1, 0.0037700369853333315)]
[(0, 0.31015752535889324), (1, 0.0035015644062133941)]
[(0, 0.040983756341177083), (1, -0.047886572020680543)]
[(0, 0.45734029836454099), (1, 0.0052165153698539544)]
[(0, 0.48174895526278899), (1, -0.012419557201146111)]
[(0, 0.43158668228991659), (1, -0.0020950179245666925)]
[(0, 0.44493877711071161), (1, 0.0035142244172158468)]
[(0, 0.0035786086727413857), (1, -0.30967635286082096)]
[(0, 0.0006789248776884302), (1, -0.19066836312240332)]
[(0, 0.00034026900786290383), (1, -0.70247247521765532)]
[(0, 0.037451047357012344), (1, -0.10306778690876126)]
[(0, 0.0031460286359622411), (1, -0.2052231630925769)]
[(0, 0.0073922523004300014), (1, -0.33649372839235459)]
[(0, 0.00096852808835178159), (1, -0.5771922482444436)]
[(0, 0.00081873854163113594), (1, -0.50226371801036518)]
```

```text
####################################################
using symmetric alpha at 0.5
using symmetric eta at 0.5
using serial LDA version on this node
running batch LDA training, 2 topics, 1 passes over the supplied corpus of 16 documents, updating model once every 16 documents, evaluating perplexity every 16 documents, iterating 50x with a convergence threshold of 0.001000
too few updates, training might not converge; consider increasing the number of passes or iterations to improve accuracy
-6.673 per-word bound, 102.1 perplexity estimate based on a held-out corpus of 16 documents with 102 words
PROGRESS: pass 0, at document #16/16
[(0, 0.80598127858443314), (1, 0.19401872141556697)]
[(0, 0.77383580140712671), (1, 0.22616419859287332)]
[(0, 0.16859816614123529), (1, 0.83140183385876465)]
[(0, 0.18313955504413976), (1, 0.8168604449558603)]
[(0, 0.16630754637088993), (1, 0.83369245362911004)]
[(0, 0.18362862636944227), (1, 0.81637137363055767)]
[(0, 0.17929020671331408), (1, 0.82070979328668603)]
[(0, 0.19108829213058723), (1, 0.80891170786941269)]
[(0, 0.82779594048933969), (1, 0.17220405951066026)]
[(0, 0.80335716828914572), (1, 0.1966428317108542)]
[(0, 0.8545679112894532), (1, 0.14543208871054666)]
[(0, 0.15063381779075966), (1, 0.84936618220924043)]
[(0, 0.81997654196261827), (1, 0.18002345803738173)]
[(0, 0.74311114295719272), (1, 0.25688885704280728)]
[(0, 0.83926798629720578), (1, 0.16073201370279427)]
[(0, 0.84457848085659659), (1, 0.15542151914340335)]
topic #0 (0.500): 0.047*登记 + 0.045*是否 + 0.035*起诉 + 0.034*离婚 + 0.034*行为 + 0.030*结婚 + 0.026*毒品 + 0.025*结婚登记 + 0.024*举办 + 0.023*认定
topic #1 (0.500): 0.079*毒品 + 0.049*行为 + 0.048*认定 + 0.030*结婚 + 0.027*购买 + 0.027*吸食 + 0.025*贩卖毒品 + 0.023*毒贩 + 0.022*构成 + 0.017*对方
topic diff=0.590837, rho=1.000000
```
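One rough way to check the claim that LSI separates the topics better is to assign each document to its dominant topic and compare with the obvious ground truth: the first 8 questions are about drugs, the last 8 about marriage. The sketch below does that with the document-topic weights copied (rounded to 3 decimals) from the two logs above. It is only a sanity check on a 16-document toy corpus; the LDA run made a single pass and its log warns that training may not have converged.

```python
# Document-topic weights copied (rounded) from the LSI and LDA logs above.
lsi = [(0.299, 0.003), (0.417, 0.004), (0.310, 0.004), (0.041, -0.048),
       (0.457, 0.005), (0.482, -0.012), (0.432, -0.002), (0.445, 0.004),
       (0.004, -0.310), (0.001, -0.191), (0.000, -0.702), (0.037, -0.103),
       (0.003, -0.205), (0.007, -0.336), (0.001, -0.577), (0.001, -0.502)]
lda = [(0.806, 0.194), (0.774, 0.226), (0.169, 0.831), (0.183, 0.817),
       (0.166, 0.834), (0.184, 0.816), (0.179, 0.821), (0.191, 0.809),
       (0.828, 0.172), (0.803, 0.197), (0.855, 0.145), (0.151, 0.849),
       (0.820, 0.180), (0.743, 0.257), (0.839, 0.161), (0.845, 0.155)]
# First 8 questions are about drugs, last 8 about marriage.
labels = ["drug"] * 8 + ["marriage"] * 8


def dominant_topics(rows):
    """Index of the topic with the largest absolute weight in each document."""
    return [max(range(len(row)), key=lambda k: abs(row[k])) for row in rows]


def agreement(topics, labels):
    """Share of documents matching their label under the better of the two
    possible topic -> label mappings (only meaningful for two topics)."""
    hits = sum((t == 0) == (lab == "drug") for t, lab in zip(topics, labels))
    return max(hits, len(labels) - hits) / len(labels)


print("LSI:", agreement(dominant_topics(lsi), labels))  # LSI: 0.9375
print("LDA:", agreement(dominant_topics(lda), labels))  # LDA: 0.8125
```

LSI misplaces only the fourth question (its two weights are nearly equal), while this one-pass LDA misplaces three.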

About word2vec (this part was not revised)

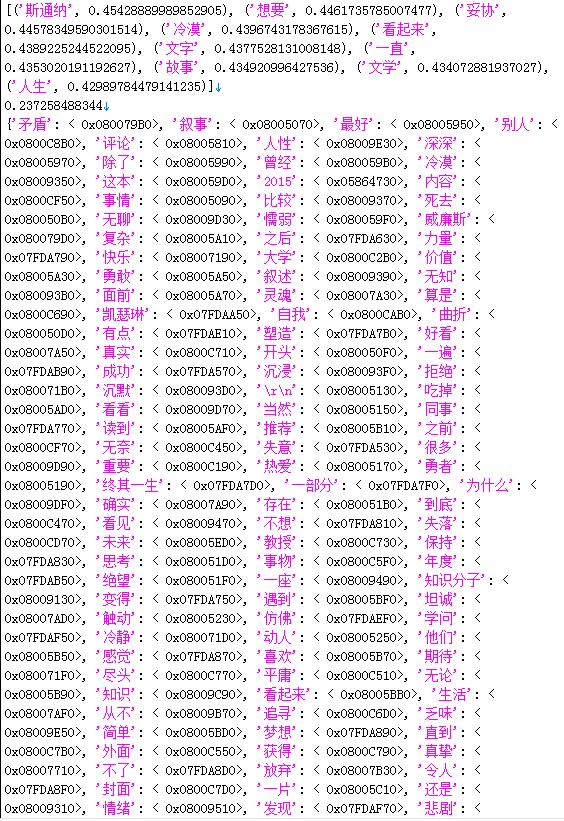

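Here I run word2vec on Douban book reviews I scraped earlier. The goal is to run similarity queries over the reviews. Since the scraped data is mostly Chinese, it must first be segmented into words; a word-vector model is then trained on the segmented data, and the API is used to query how related words in the vocabulary are. The results vary noticeably with the size of the corpus.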
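The "relatedness" returned by gensim's `similarity` is the cosine similarity of two word vectors, and `most_similar` ranks the other words by that same measure. A minimal pure-Python sketch of both queries; the three 3-dimensional vectors are made up purely for illustration (real word2vec vectors have 100 dimensions by default).

```python
import math


def cosine(u, v):
    """Cosine similarity: dot(u, v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def most_similar(word, vectors, topn=3):
    """Rank every other word by cosine similarity to `word`, highest first."""
    target = vectors[word]
    scores = [(w, cosine(target, v)) for w, v in vectors.items() if w != word]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:topn]


# Toy vectors, invented for this example only
vectors = {
    "小说": [0.9, 0.1, 0.0],
    "故事": [0.8, 0.2, 0.1],
    "教授": [0.1, 0.9, 0.2],
}
print(most_similar("小说", vectors, topn=2))  # '故事' ranks first, '教授' second
```

Because cosine ignores vector length, a frequent word and a rare word can still score as very similar if they point in the same direction.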

I scraped 848 reviews of Stoner (斯通纳). That is nowhere near enough data to really evaluate word2vec; it only serves to help understand how it works. The code:

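```python
import logging

import jieba
import pandas as pd
from gensim.models import Word2Vec

logging.basicConfig(level=logging.INFO)

with open('stop_words.txt', encoding='utf-8') as f:
    stop_words = {line.strip() for line in f}
logging.info(stop_words)

text_list = []
# Read the scraped reviews from the CSV file
reviews = pd.read_csv('26425831.csv', encoding='utf-8')
for idx, row in reviews.iterrows():
    review_content = row['UserComment']
    seg_list = jieba.cut(str(review_content), cut_all=False)
    # Keep words longer than one character that are not stop words. The earlier
    # version used list(set(word_list) - set(stop_words)), which throws away word
    # order and repetitions, i.e. the context windows Word2Vec learns from.
    text_list.append([item for item in seg_list if len(item) > 1 and item not in stop_words])
logging.info(text_list)

model = Word2Vec(text_list)
# model.save("word.model")

# gensim >= 4.0: query methods live on model.wv (older versions allowed model.most_similar)
print(model.wv.most_similar('格蕾丝'))
# print(model.wv['通纳'])
print(model.wv.similarity("通纳", "小说"))
print(model.wv.key_to_index)  # the vocabulary (model.vocab in gensim < 4.0)
```

Below are the values printed by the original run; compare them for yourself.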