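I. Kong itself is not covered in detail here; see the official website.

Since the 1.0 release, Kong has become steadily more complete. Newer versions can act as a service mesh and can also serve as a Kubernetes ingress controller. Kong still differs from Istio as a service mesh, but its prospects look good, and the Kong ingress controller can automatically discover the Ingress resources in a Kubernetes cluster and manage them in one place. Our test cluster is therefore trying out Kong, and this post records the deployment process.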

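II. Deployment

Prerequisites: a Kubernetes cluster (mine runs 1.13.2), persistent volumes (PV, backed by NFS here), and Helm.

Fetch the chart:

With Helm installed, you can fetch it directly:

helm fetch stable/kong

Fetching from this default repo requires a proxy if you are behind the firewall in mainland China.

We use a customized chart based on the official one:

https://github.com/cuishuaigit/k8s-kong

Customize it to your needs before deploying:

Edit values.yaml. Because this is a purely internal network, I disabled HTTPS on the admin API. I also exposed the admin API and the proxy (HTTP and HTTPS) as NodePorts 32344, 32380, and 32343 respectively, and enabled ingressController by default.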
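
As a rough sketch of those customizations, the overrides could be kept in a separate file rather than edited into values.yaml. The key names below (admin.useTLS, admin.nodePort, proxy.http.nodePort, proxy.tls.nodePort, ingressController.enabled) are assumptions modeled on the stable/kong chart layout of that period, and the file name is made up; check them against the values.yaml in your copy of the chart.

```shell
# Write a small override file instead of editing values.yaml in place.
# ASSUMPTION: key names follow the stable/kong chart layout of that period;
# confirm them against the values.yaml shipped with your chart.
override_file="${TMPDIR:-/tmp}/kong-values-override.yaml"
cat > "$override_file" <<'EOF'
admin:
  useTLS: false      # plain HTTP for the admin API (internal network only)
  type: NodePort
  nodePort: 32344
proxy:
  type: NodePort
  http:
    nodePort: 32380
  tls:
    nodePort: 32343
ingressController:
  enabled: true
EOF
echo "wrote $override_file"

# Then, from the chart directory (Helm 2 syntax, as used in this post):
#   helm install -n kong-ingress --tiller-namespace default -f "$override_file" .
```

Keeping the overrides in their own file makes it easier to see what differs from the chart defaults when the chart is updated.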

Deploy Kong:

git clone https://github.com/cuishuaigit/k8s-kong

cd k8s-kong

helm install -n kong-ingress --tiller-namespace default .

In the test environment, Tiller is deployed in the default namespace.

Result after deployment:

root@ku13-1:~# kubectl get pods | grep kong
kong-ingress-kong-5c968fdb74-gsrr8              1/1   Running     0   4h14m
kong-ingress-kong-controller-5896fd6d67-4xcg5   2/2   Running     1   4h14m
kong-ingress-kong-init-migrations-k9ztt         0/1   Completed   0   4h14m
kong-ingress-postgresql-0                       1/1   Running     0   4h14m

root@ku13-1:/data/k8s-kong# kubectl get svc | grep kong
kong-ingress-kong-admin            NodePort    192.103.113.85   <none>   8444:32344/TCP               4h18m
kong-ingress-kong-proxy            NodePort    192.96.47.146    <none>   80:32380/TCP,443:32343/TCP   4h18m
kong-ingress-postgresql            ClusterIP   192.97.113.204   <none>   5432/TCP                     4h18m
kong-ingress-postgresql-headless   ClusterIP   None             <none>   5432/TCP                     4h18m

Then deploy the demo service, following https://github.com/Kong/kubernetes-ingress-controller/blob/master/docs/deployment/minikube.md:

wget https://raw.githubusercontent.com/Kong/kubernetes-ingress-controller/master/deploy/manifests/dummy-application.yaml

# cat dummy-application.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: http-svc
spec:
  replicas: 1
  selector:
    matchLabels:
      app: http-svc
  template:
    metadata:
      labels:
        app: http-svc
    spec:
      containers:
      - name: http-svc
        image: gcr.io/google_containers/echoserver:1.8
        ports:
        - containerPort: 8080
        env:
          - name: NODE_NAME
            valueFrom:
              fieldRef:
                fieldPath: spec.nodeName
          - name: POD_NAME
            valueFrom:
              fieldRef:
                fieldPath: metadata.name
          - name: POD_NAMESPACE
            valueFrom:
              fieldRef:
                fieldPath: metadata.namespace
          - name: POD_IP
            valueFrom:
              fieldRef:
                fieldPath: status.podIP

# cat demo-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: http-svc
  labels:
    app: http-svc
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP
    name: http
  selector:
    app: http-svc

kubectl create -f dummy-application.yaml -f demo-service.yaml

Create the ingress rule:

An Ingress must be deployed in the same namespace as the Service it points to. No namespace is specified here, so demo-service and demo-ingress both land in the default namespace.

# cat demo-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: foo-bar
spec:
  rules:
  - host: foo.bar
    http:
      paths:
      - path: /
        backend:
          serviceName: http-svc
          servicePort: 80

kubectl create -f demo-ingress.yaml

Test with curl:

root@ku13-1:/data/k8s-kong# curl http://192.96.47.146 -H Host:foo.bar

Hostname: http-svc-6f459dc547-qpqmv

Pod Information:
    node name: ku13-2
    pod name: http-svc-6f459dc547-qpqmv
    pod namespace: default
    pod IP: 192.244.32.25

Server values:
    server_version=nginx: 1.13.3 - lua: 10008

Request Information:
    client_address=192.244.6.216
    method=GET
    real path=/
    query=
    request_version=1.1
    request_uri=http://192.244.32.25:8080/

Request Headers:
    accept=*/*
    connection=keep-alive
    host=192.244.32.25:8080
    user-agent=curl/7.47.0
    x-forwarded-for=10.2.6.7
    x-forwarded-host=foo.bar
    x-forwarded-port=8000
    x-forwarded-proto=http
    x-real-ip=10.2.6.7

Request Body:
    -no body in request-

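III. Deploying Konga

Konga is a dashboard for Kong. For deployment details, see https://www.cnblogs.com/cuishuai/p/9378960.html

IV. Kong plugins

Kong has many plugins that extend what it can do as a proxy. Two are covered here; the others are used the same way and differ only in their configuration parameters. For the parameters, see https://docs.konghq.com/1.1.x/admin-api/#plugin-object

The Kong ingress controller provides four CRDs: KongPlugin, KongIngress, KongConsumer, and KongCredential.

1. request-transformer

Create the YAML: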

# cat demo-request-transformer.yaml
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: transform-request-to-dummy
  namespace: default
  labels:
    global: "false"
disabled: false
config:
  replace:
    headers:
    - 'host:llll'
  add:
    headers:
    - "x-myheader:my-header-value"
plugin: request-transformer

Create the plugin:

kubectl create -f demo-request-transformer.yaml

2. file-log

Create the YAML:

# cat demo-file-log.yaml
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: echo-file-log
  namespace: default
  labels:
    global: "false"
disabled: false
plugin: file-log
config:
  path: /tmp/req.log
  reopen: true

Create the plugin:

kubectl create -f demo-file-log.yaml

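3. Applying plugins

Plugins can be bound to a route or a service. The binding is done with an annotation; ingress controller versions 0.2.0 and later use plugins.konghq.com.

1)route

To add plugins at the route level, add the annotation to the Ingress: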

# cat demo-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: foo-bar
  annotations:
    plugins.konghq.com: transform-request-to-dummy,echo-file-log
spec:
  rules:
  - host: foo.bar
    http:
      paths:
      - path: /
        backend:
          serviceName: http-svc
          servicePort: 80

Apply:

kubectl apply -f demo-ingress.yaml

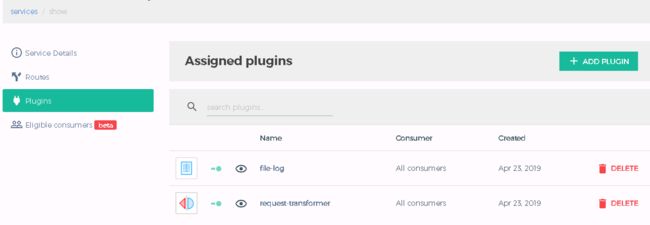

去dashboard上查看效果:

或者使用admin API查看:

curl http://10.1.2.8:32344/plugins | jq

32344是kong admin API映射到node节点的端口, jq格式化输出
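
To see at a glance which plugin is attached where, the JSON can be condensed with a jq filter. This is a sketch that assumes the Kong 1.x response shape, where /plugins returns a data array and each entry carries name plus nested route and service references (null when unset). The function name summarize_plugins is made up for illustration.

```shell
# Condense an admin API /plugins response (read from stdin) into one
# tab-separated line per plugin: name, route id, service id.
# ASSUMPTION: Kong 1.x response shape, i.e. a top-level "data" array whose
# entries have "name" and nested "route"/"service" objects (or null).
summarize_plugins() {
  jq -r '.data[] | [.name, (.route.id // "-"), (.service.id // "-")] | @tsv'
}

# Against the cluster from this post:
#   curl -s http://10.1.2.8:32344/plugins | summarize_plugins
```

A plugin bound through an Ingress annotation should show a route id, and one bound through a Service annotation should show a service id.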

2)service

To add plugins at the service level, add the annotation directly to the dummy Service's YAML:

# cat demo-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: http-svc
  labels:
    app: http-svc
  annotations:
    plugins.konghq.com: transform-request-to-dummy,echo-file-log
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP
    name: http
  selector:
    app: http-svc

Apply:

kubectl apply -f demo-service.yaml

Check the result in the dashboard:

Or use the admin API:

curl http://10.1.2.8:32344/plugins | jq

4. Plugin effects

1)request-transformer

# curl http://10.1.2.8:32380 -H Host:foo.bar

Hostname: http-svc-6f459d7-7qb2n

Pod Information:
    node name: ku13-2
    pod name: http-svc-6f459d7-7qb2n
    pod namespace: default
    pod IP: 192.244.32.37

Server values:
    server_version=nginx: 1.13.3 - lua: 10008

Request Information:
    client_address=192.244.6.216
    method=GET
    real path=/
    query=
    request_version=1.1
    request_uri=http://llll:8080/

Request Headers:
    accept=*/*
    connection=keep-alive
    host=llll
    user-agent=curl/7.47.0
    x-forwarded-for=10.1.2.8
    x-forwarded-host=foo.bar
    x-forwarded-port=8000
    x-forwarded-proto=http
    x-myheader=my-header-value
    x-real-ip=10.1.2.8

Request Body:
    -no body in request-

The plugin settings above took effect: host was replaced with llll, and x-myheader was added.
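
The same check can be scripted instead of read by eye. A minimal sketch: echo_field is a hypothetical helper that pulls one key=value line out of the echoserver response, so the two headers set by the plugin can be compared against the expected values.

```shell
# Print the value of the first "key=value" line in echoserver output (stdin).
# Leading spaces or tabs are stripped first, in case the fields are indented.
echo_field() {
  awk -v key="$1" '
    { sub(/^[ \t]+/, "") }
    index($0, key "=") == 1 { print substr($0, length(key) + 2); exit }
  '
}

# Against the cluster from this post (same curl as above):
#   resp="$(curl -s http://10.1.2.8:32380 -H Host:foo.bar)"
#   [ "$(printf '%s\n' "$resp" | echo_field host)" = "llll" ] && echo "host replaced"
#   [ "$(printf '%s\n' "$resp" | echo_field x-myheader)" = "my-header-value" ] && echo "header added"
```

Matching on the start of the line keeps host from being confused with x-forwarded-host.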

2)file-log

Log in to the Kong pod to check:

kubectl exec -it kong-ingress-kong-5c9lo74-gsrr8 -- grep -c request /tmp/req.log

51

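The log entries are being collected correctly.

5. Caveats

At present, if a plugin is deleted while the annotations still reference it, all plugins stop working. Upstream is working on a fix for this bug, so take extra care when using plugins for now.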
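
Until that fix lands, one way to guard against the problem is to compare an annotation with the KongPlugin objects that actually exist. The sketch below is an illustration only: missing_plugins is a made-up helper, and the kubectl pipeline in the comment assumes the resources live in the default namespace as in this post.

```shell
# Print every plugin named in an annotation value ($1) that is absent from
# the list of existing KongPlugin names (one per line on stdin).
missing_plugins() {
  local annotation="$1" existing name
  existing="$(cat)"
  local IFS=','
  for name in $annotation; do
    name="${name//[[:space:]]/}"          # drop stray whitespace around names
    [ -z "$name" ] && continue
    printf '%s\n' "$existing" | grep -qxF -- "$name" || printf '%s\n' "$name"
  done
  return 0
}

# Against the cluster from this post (default namespace assumed):
#   kubectl get kongplugin -n default -o name | sed 's|.*/||' \
#     | missing_plugins "$(kubectl get ingress foo-bar -n default \
#         -o jsonpath='{.metadata.annotations.plugins\.konghq\.com}')"
```

Empty output means every annotated plugin still exists; any name printed is a stale reference that should be removed from the annotation.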

References:

https://github.com/Kong/kubernetes-ingress-controller/blob/master/docs/custom-resources.md

https://github.com/Kong/kubernetes-ingress-controller/blob/master/docs/external-service/externalnamejwt.md

https://github.com/Kong/kubernetes-ingress-controller/blob/master/docs/deployment/minikube.md

https://github.com/cuishuaigit/k8s-kong