PyTorch 0.4 MNIST Example (GPU Version) with t-SNE

- Introduction to MNIST

- Introduction to PyTorch

- Code

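Introduction to MNIST

MNIST is a dataset for handwritten digit recognition. The training set contains 60,000 images and the test set 10,000 images. Each image is a 28×28 grayscale picture of a digit from 0 to 9.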

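Introduction to PyTorch

PyTorch is a deep learning framework that has become popular in recent years. It descends from Torch, is flexible and easy to use, and is well liked by researchers. As of version 0.4, its C++ API is not yet complete.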

Code

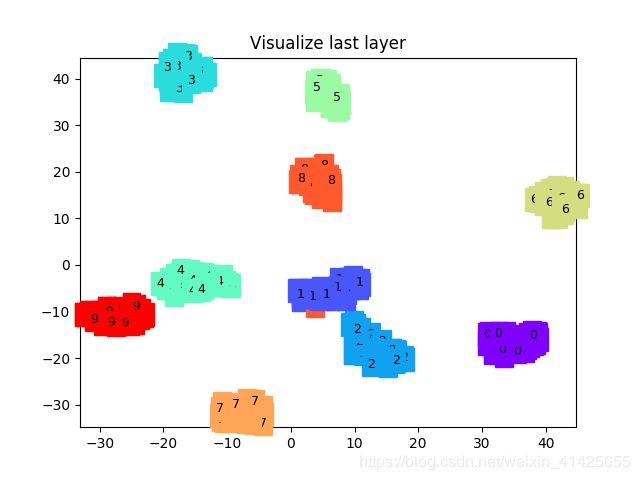

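The code is based on Caffe's MNIST example, with a few extra convolutional layers added to increase the network's expressive power. It also draws on code shared by several other authors, to whom I am grateful. After training, the model reaches over 99% accuracy, and t-SNE is used to visualize the classification results in real time.

The code below is the GPU version.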

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms
from torch.autograd import Variable
import os
import matplotlib.pyplot as plt

#os.environ["CUDA_VISIBLE_DEVICES"] = "0"
#torch.cuda.set_device(0)

# Training settings
batch_size_train = 64
batch_size_test = 500

# MNIST Dataset
# MNIST ships with torchvision's datasets module and can be used directly
train_dataset = datasets.MNIST(root='./data/',
                               train=True,
                               transform=transforms.ToTensor(),
                               download=True)
test_dataset = datasets.MNIST(root='./data/',
                              train=False,
                              transform=transforms.ToTensor())

# Data Loader (Input Pipeline)
train_loader = torch.utils.data.DataLoader(dataset=train_dataset,
                                           batch_size=batch_size_train,
                                           shuffle=True)
test_loader = torch.utils.data.DataLoader(dataset=test_dataset,
                                          batch_size=batch_size_test,
                                          shuffle=False)
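As a quick sanity check on the settings above, the number of batches per epoch can be worked out by hand. This is only a sketch in plain Python; it assumes the `DataLoader` default of keeping the final, smaller batch (`drop_last=False`), and the variable names are illustrative.

```python
import math

# MNIST split sizes and the batch sizes used in this post
train_size, test_size = 60000, 10000
batch_size_train, batch_size_test = 64, 500

# With the last partial batch kept, the batch count is a ceiling division
train_batches = math.ceil(train_size / batch_size_train)
test_batches = math.ceil(test_size / batch_size_test)

# Size of the final training batch, which is smaller than 64
last_train_batch = train_size - (train_batches - 1) * batch_size_train

print(train_batches, test_batches, last_train_batch)
```

This is why the `[64, 1, 28, 28]` shape mentioned in the training loop holds for every batch except the last one of each epoch.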

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # 1 input channel, 40 output channels, 3x3 kernel; padding=1 keeps the spatial size
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=40, kernel_size=3, stride=1, padding=1, dilation=1, groups=1, bias=True)
        self.conv2 = nn.Conv2d(40, 80, 3, 1, 1, 1, 1, True)
        self.conv3 = nn.Conv2d(80, 80, 3, 1, 1, 1, 1, True)
        self.conv4 = nn.Conv2d(80, 80, 3, 1, 1, 1, 1, True)
        self.conv5 = nn.Conv2d(80, 80, 3, 1, 1, 1, 1, True)
        self.mp = nn.MaxPool2d(2)
        self.fc1 = nn.Linear(3920, 500)  # (in_features, out_features); 3920 = 80 * 7 * 7
        self.fc2 = nn.Linear(500, 10)

    def forward(self, x):
        in_size = x.size(0)  # batch size
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = self.conv3(x)
        x = F.relu(x)
        x = self.mp(x)  # 28x28 -> 14x14
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.mp(x)  # 14x14 -> 7x7
        x = x.view(in_size, -1)  # flatten to [batch, 3920]
        x = self.fc1(x)
        x = F.relu(x)
        out = self.fc2(x)
        #return out
        # return log-probabilities plus the 500-d fc1 features used for t-SNE
        return F.log_softmax(out, dim=1), x
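The `3920` input size of `fc1` follows from the layer shapes. The sketch below traces one spatial dimension through the network using the standard output-size formula for convolution and pooling layers. The helper `conv_out` is a hypothetical name introduced here for illustration, not part of PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0, dilation=1):
    """Output length along one spatial axis of a convolution or pooling layer."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

size = 28                                    # MNIST images are 28x28
for _ in range(3):                           # conv1, conv2, conv3: 3x3, stride 1, padding 1
    size = conv_out(size, kernel=3, stride=1, padding=1)
size = conv_out(size, kernel=2, stride=2)    # first MaxPool2d(2)
for _ in range(2):                           # conv4, conv5: 3x3, stride 1, padding 1
    size = conv_out(size, kernel=3, stride=1, padding=1)
size = conv_out(size, kernel=2, stride=2)    # second MaxPool2d(2)

flat_features = 80 * size * size             # 80 channels after conv5
print(size, flat_features)
```

If the channel counts or the number of pooling layers change, the first argument of `fc1` has to be recomputed the same way.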

model = Net()
model = model.cuda()

def plot_with_labels(lowDWeights, labels):
    # draw each 2-D t-SNE point as its digit label, colored by class
    plt.cla()
    X, Y = lowDWeights[:, 0], lowDWeights[:, 1]
    for x, y, s in zip(X, Y, labels):
        c = cm.rainbow(int(255 * s / 9))
        plt.text(x, y, s, backgroundcolor=c, fontsize=9)
    plt.xlim(X.min(), X.max())
    plt.ylim(Y.min(), Y.max())
    plt.title('Visualize last layer')
    plt.show()
    plt.pause(0.01)
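The expression `int(255 * s / 9)` in the plotting function spreads the ten digit labels over the 256 entries of the colormap. A minimal sketch of that mapping in plain Python, with `color_index` as an illustrative name:

```python
# Map digit labels 0..9 onto colormap indices 0..255
color_index = [int(255 * s / 9) for s in range(10)]

for digit, idx in enumerate(color_index):
    print(digit, idx)
```

Label 0 lands on the first colormap entry and label 9 on the last, so each digit gets a distinct color.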

# One parameter group per tensor: weights use the optimizer defaults below,
# biases use a higher learning rate and no weight decay
param_groups = []
for layer in (model.conv1, model.conv2, model.fc1, model.fc2,
              model.conv3, model.conv4, model.conv5):
    param_groups.append({'params': layer.weight})
    param_groups.append({'params': layer.bias, 'lr': 0.002, 'weight_decay': 0})
optimizer = optim.Adam(param_groups, lr=0.001, weight_decay=0.0001)
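The optimizer relies on per-parameter options: any key set in a group overrides the default passed to `optim.Adam`, and any missing key falls back to that default. The sketch below mimics this fallback in plain Python. It illustrates the behavior and is not PyTorch's implementation; `resolve_group` and the string placeholders for tensors are hypothetical.

```python
defaults = {'lr': 0.001, 'weight_decay': 0.0001}

def resolve_group(group, defaults):
    """Return the group's options with missing keys filled from the defaults."""
    resolved = dict(defaults)
    resolved.update({k: v for k, v in group.items() if k != 'params'})
    return resolved

# Placeholder strings stand in for the real parameter tensors
weight_group = {'params': 'conv1.weight'}
bias_group = {'params': 'conv1.bias', 'lr': 0.002, 'weight_decay': 0}

weight_opts = resolve_group(weight_group, defaults)
bias_opts = resolve_group(bias_group, defaults)
print(weight_opts)
print(bias_opts)
```

In this post that means every weight is trained with `lr=0.001` and `weight_decay=0.0001`, while every bias uses `lr=0.002` without weight decay.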

loss_function = nn.CrossEntropyLoss()

def train(epoch):
    # batch_idx is the index supplied by enumerate(), starting at 0
    for batch_idx, (data, target) in enumerate(train_loader):
        # data.size(): [64, 1, 28, 28]
        # target.size(): [64]
        data = data.cuda()
        target = target.cuda()
        output, _ = model(data)
        # output: [64, 10] log-probabilities
        loss = F.nll_loss(output, target)
        #loss = loss_function(output, target)
        if batch_idx % 200 == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))
        optimizer.zero_grad()  # clear the gradients of all parameters
        loss.backward()        # backpropagate to compute gradients
        optimizer.step()       # let the optimizer update the parameters
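The training loop uses `F.nll_loss` because the network already returns log-probabilities. `nn.CrossEntropyLoss` combines log-softmax and negative log-likelihood in one step, so it would only be appropriate together with the commented-out `return out`, which yields raw scores. The relationship can be sketched in plain Python for a single sample. The function names mirror the PyTorch ones, but these are illustrative re-implementations.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over a list of raw scores."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]

def nll_loss(log_probs, target):
    """Negative log-likelihood of the target class."""
    return -log_probs[target]

def cross_entropy(logits, target):
    """Cross entropy on raw scores: log-softmax followed by NLL."""
    return nll_loss(log_softmax(logits), target)

logits = [2.0, 0.5, -1.0]   # made-up raw scores for three classes
target = 0

log_probs = log_softmax(logits)
loss = nll_loss(log_probs, target)
print(loss, cross_entropy(logits, target))
```

Feeding log-probabilities into a cross-entropy loss would apply the softmax twice, which is why only one of the two loss lines in `train` is active.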

from matplotlib import cm

try:
    from sklearn.manifold import TSNE
    HAS_SK = True
except ImportError:
    HAS_SK = False
    print('Please install sklearn for layer visualization')

def test():
    test_loss = 0
    correct = 0
    plt.ion()
    with torch.no_grad():  # no gradients are needed during evaluation
        for data, target in test_loader:
            data = data.cuda()
            target = target.cuda()
            output, last_layer = model(data)
            # sum up batch loss
            #test_loss = loss_function(output, target).item()
            test_loss += F.nll_loss(output, target, reduction='sum').item()
            # get the index of the max log-probability
            pred = output.max(1, keepdim=True)[1]
            #print(pred)
            correct += pred.eq(target.view_as(pred)).sum().item()
    # project the fc1 features of the last test batch to 2-D with t-SNE
    if HAS_SK:
        # newer scikit-learn releases rename n_iter to max_iter
        tsne = TSNE(perplexity=30, n_components=2, init='pca', n_iter=5000)
        plot_only = 500
        low_dim_embs = tsne.fit_transform(last_layer.cpu().numpy()[:plot_only, :])
        labels = target.cpu().numpy()[:plot_only]
        plot_with_labels(low_dim_embs, labels)
    test_loss /= len(test_loader.dataset)
    print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))
    plt.ioff()

for epoch in range(1, 21):
    train(epoch)
    test()
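The accuracy reported by `test` is the share of samples whose highest log-probability falls on the true class. A minimal sketch of that computation in plain Python follows; the function name `accuracy` and the sample values are made up for illustration.

```python
def accuracy(log_probs_batch, targets):
    """Count samples whose arg-max class equals the target; return count and percent."""
    correct = 0
    for log_probs, target in zip(log_probs_batch, targets):
        # index of the largest log-probability is the predicted class
        pred = max(range(len(log_probs)), key=log_probs.__getitem__)
        correct += int(pred == target)
    return correct, 100.0 * correct / len(targets)

# Three made-up samples over three classes
batch = [[-0.1, -2.5, -3.0],
         [-1.8, -0.3, -2.2],
         [-0.9, -1.1, -1.4]]
targets = [0, 1, 2]

correct, percent = accuracy(batch, targets)
print(correct, percent)
```

The third sample is predicted as class 0 instead of 2, so two of the three samples count as correct.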