Semantic Flow for Fast and Accurate Scene Parsing

- Abstract

- 1. Introduction

- 2. Related Work

- 3. Method

- 3.1. Preliminary

- 3.2. Flow Alignment Module

- 3.3. Network Architectures

- 4. Experiment

- 4.1. Experiments on Cityscapes

- 4.2. Experiment on More Datasets

- 5. Conclusion

- References

Abstract

In this paper, we focus on effective methods for fast and accurate scene parsing. A common practice to improve performance is to obtain high-resolution feature maps with strong semantic representation. The two widely used strategies, atrous convolution and feature pyramid fusion, are either computationally intensive or ineffective. Inspired by optical flow for motion alignment between adjacent video frames, we propose a Flow Alignment Module (FAM) that learns Semantic Flow between feature maps of adjacent levels and broadcasts high-level features to high-resolution features effectively and efficiently. Furthermore, integrating our module into a common feature pyramid structure yields superior performance over other real-time methods, even on very light-weight backbone networks such as ResNet-18. Extensive experiments are conducted on several challenging datasets, including Cityscapes, PASCAL Context, ADE20K and CamVid. In particular, our network is the first to achieve 80.4% mIoU on Cityscapes at a frame rate of 26 FPS. The code will be available at https://github.com/donnyyou/torchcv .

Figure 1. Inference speed versus mIoU performance on the Cityscapes test set. Previous models are marked as red points, and our models, shown as blue points, achieve the best speed/accuracy trade-off. Note that our method with the light-weight ResNet-18 backbone even achieves accuracy comparable to all the accurate models at a much faster speed.

1. Introduction

Scene parsing, or semantic segmentation, is a fundamental vision task that aims to classify each pixel in an image correctly. Two factors have a prominent impact on performance: detailed resolution information [42] and strong semantic representation [5, 60]. The seminal work of Long et al. [29] built a deep Fully Convolutional Network (FCN), composed mainly of convolutional layers, to carve a strong semantic representation. However, detailed object boundary information, which is also crucial to performance, is usually missing because of the built-in downsampling pooling and convolutional layers. To alleviate this problem, many state-of-the-art methods [13, 60, 61, 65] apply atrous convolutions [52] in the last several stages of their networks to yield feature maps with strong semantic representation while maintaining high resolution.
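To make the resolution argument concrete, the sketch below applies the standard convolution output-size formula (the one documented for PyTorch's `torch.nn.Conv2d`) to a stride-2 convolution and to a stride-1 atrous (dilated) convolution. The 64-pixel input width and the kernel, padding and dilation values are illustrative assumptions, not settings taken from the paper.

```python
def conv_out_size(size, kernel, stride=1, padding=0, dilation=1):
    """Output length of a convolution along one spatial axis.

    Same formula as documented for PyTorch's Conv2d:
    floor((size + 2 * padding - dilation * (kernel - 1) - 1) / stride) + 1
    """
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def kernel_extent(kernel, dilation=1):
    """Number of input pixels spanned by one (possibly dilated) kernel."""
    return dilation * (kernel - 1) + 1


# Hypothetical 64-pixel-wide feature map entering a late backbone stage.
size = 64
strided = conv_out_size(size, kernel=3, stride=2, padding=1)             # regular downsampling
atrous = conv_out_size(size, kernel=3, stride=1, padding=2, dilation=2)  # atrous, no downsampling

print(strided, atrous)                                 # 32 64
print(kernel_extent(3), kernel_extent(3, dilation=2))  # 3 5
```

The atrous layer enlarges the kernel's extent while keeping the full 64-pixel width. Every later layer then runs on the larger map, which is the source of the extra computation discussed in the text.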

Nevertheless, doing so inevitably requires huge extra computation, since the feature maps in the last several layers can be up to 64 times larger than those in FCNs. For example, a regular FCN with a ResNet-18 [17] backbone runs at 57.2 FPS on a 1024 × 2048 image, whereas after applying atrous convolutions [52] as done in [60, 61], the modified network runs at only 8.7 FPS. Moreover, on a single GTX 1080Ti GPU with no other programs running, the state-of-the-art model PSPNet [60] runs at only 1.6 FPS for 1024 × 2048 input images. This is highly problematic for many advanced real-world applications, such as self-driving cars and robot navigation, which demand real-time online data processing.
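The quoted frame rates are easier to compare as per-frame latency. The short sketch below only converts the reported figures (57.2, 8.7 and 1.6 FPS) into milliseconds per frame and computes the slowdown ratio. It introduces no new measurements, and the helper name is hypothetical.

```python
def latency_ms(fps):
    """Per-frame latency in milliseconds for a given frame rate."""
    return 1000.0 / fps


reported_fps = {
    "FCN, ResNet-18": 57.2,
    "dilated FCN, ResNet-18": 8.7,
    "PSPNet": 1.6,
}

for name, fps in reported_fps.items():
    print(f"{name}: {latency_ms(fps):.1f} ms/frame")

# Slowdown caused by atrous convolutions on the same ResNet-18 backbone.
slowdown = reported_fps["FCN, ResNet-18"] / reported_fps["dilated FCN, ResNet-18"]
print(f"slowdown: {slowdown:.1f}x")
```

This works out to roughly 17.5 ms versus 114.9 ms per frame, about a 6.6× slowdown, and 625 ms per frame for PSPNet.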

On the other hand, recent fast models still have a large accuracy gap to accurate models. For example, DFANet [23] achieves only 71.2% mIoU while running at 112 FPS, as shown in Figure 1. In summary, real-time applications demand scene parsing models that are both fast and accurate.

To both maintain detailed resolution information and obtain features with strong semantic representation, another direction is to build FPN-like [21, 28] models that leverage lateral paths to fuse feature maps in a top-down manner. In this way, the deep features of the last several layers strengthen the high-resolution shallow features, so the refined features can potentially satisfy both factors above and improve accuracy. However, such methods [1, 42] still suffer from unsatisfactory accuracy compared with networks that keep thick, large feature maps in their last several stages.

We believe the main reason lies in the ineffective delivery of semantics from deep layers to shallow layers in these methods. To mitigate this issue, we propose to learn the Semantic Flow between layers with different resolutions. Semantic Flow is inspired by the optical flow method [11] used to align pixels between adjacent frames in video processing tasks [64]. Based on Semantic Flow, we construct a novel network module for scene parsing called the Flow Alignment Module (FAM). It takes features from adjacent levels as inputs, produces an offset field, and then warps the coarse feature to the higher-resolution fine feature according to the offset field. Because FAM effectively transmits semantic information from deep layers to shallow layers through very simple operations, it is highly effective at improving accuracy while remaining very efficient. Moreover, the proposed module is end-to-end trainable and can be plugged into any backbone network to form new networks, called SFNet. As depicted in Figure 1, our method with different backbones outperforms other competitors by a large margin at the same speed. In particular, our method with ResNet-18 as the backbone achieves 80.4% mIoU on the Cityscapes test server at a frame rate of 26 FPS. With DF2 [48] as the backbone, our method achieves 77.8% mIoU at 61 FPS, and with the DF1 backbone it achieves 74.5% mIoU at 121 FPS. Moreover, when equipped with a deeper backbone such as ResNet-101, our method achieves results comparable to the state-of-the-art model DANet [13] while requiring only 33% of DANet's computation. Experiments also clearly illustrate the generality of SFNet across various datasets: Cityscapes [8], Pascal Context [34], ADE20K [62] and CamVid [3].

To conclude, our main contributions are three-fold:

• We propose a novel flow-based alignment module (FAM) to learn Semantic Flow between feature maps of adjacent levels and to broadcast high-level features to high-resolution features effectively and efficiently.

• We insert FAM into the feature pyramid framework and build a feature pyramid aligned network named SFNet for fast and accurate scene parsing.

• Detailed experiments and analysis indicate the efficacy of our proposed module in both improving accuracy and remaining light-weight. We achieve state-of-the-art results on the Cityscapes, Pascal Context, ADE20K and CamVid datasets. Specifically, our network achieves 80.4% mIoU on the Cityscapes test server while attaining a real-time speed of 26 FPS on a single GTX 1080Ti GPU.

2. Related Work

For scene parsing, there are two main paradigms for high-resolution semantic map prediction. One paradigm tries to keep both spatial and semantic information along the main pathway. The other distributes spatial and semantic information to different parts of a network and then merges them back via different strategies.

The first paradigm is mostly based on atrous convolution [52], which keeps high-resolution feature maps in the later network stages. Current state-of-the-art accurate methods [13, 60, 65] follow this paradigm and keep improving performance by designing sophisticated head networks to capture contextual information. PSPNet [60] proposes the pyramid pooling module (PPM) to model multi-scale contexts, whilst the DeepLab series [4–6] uses atrous spatial pyramid pooling (ASPP). In [13, 15, 16, 18, 25, 53, 66], the non-local operator [46] and the self-attention mechanism [45] are adopted to harvest pixel-wise context from the whole image. Meanwhile, graph convolution networks [20, 26] are used to propagate information over the whole image by projecting features into an interaction space.

The second paradigm contains the state-of-the-art fast methods, in which high-level semantics are represented by low-resolution feature maps. A common strategy uses the same backbone networks as image classification, without atrous convolution, and fuses multi-level feature maps for both spatial detail and semantics [1, 29, 38, 42, 47]. ICNet [59] uses multi-scale images as input and a cascade network to raise efficiency. DFANet [24] uses a light-weight backbone to speed up and is equipped with cross-level feature aggregation to boost accuracy. SwiftNet [38] uses lateral connections as a cost-effective way to restore the prediction resolution while maintaining speed. To speed up further, a low-resolution image is used as input for high-level semantics [31, 59]. All these methods reduce feature maps to quite low resolution and upsample them back by a large factor. This causes inferior results, especially for small objects and object boundaries. Guided upsampling [31] is closely related to our method: the semantic map is upsampled back to the input image size, guided by the feature map from an early layer. However, the guidance is insufficient because of both the semantic gap and the resolution gap, so the model still falls short of accurate models. In contrast, our method aligns feature maps from adjacent levels and enhances a feature pyramid towards both high resolution and strong semantics. This results in state-of-the-art models in both accuracy and speed.
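The point that large downsampling factors hurt small objects can be illustrated with a toy 1-D example (entirely hypothetical, not taken from the paper). A 2-pixel-wide object in a 32-pixel binary mask is average-pooled by a factor of 8, upsampled back by nearest-neighbour repetition, and thresholded at 0.5. The helper names are made up for this sketch.

```python
def avg_pool_1d(signal, factor):
    """Average-pool a 1-D list with non-overlapping windows of length `factor`."""
    return [sum(signal[i:i + factor]) / factor for i in range(0, len(signal), factor)]


def upsample_nearest_1d(signal, factor):
    """Repeat each value `factor` times (nearest-neighbour upsampling)."""
    return [v for v in signal for _ in range(factor)]


# Hypothetical 32-pixel row: a thin object occupies pixels 10 and 11.
mask = [0.0] * 32
mask[10] = mask[11] = 1.0

coarse = avg_pool_1d(mask, 8)              # 4 values; the object is diluted to 0.25
restored = upsample_nearest_1d(coarse, 8)  # back to 32 values
prediction = [1 if v >= 0.5 else 0 for v in restored]

print(coarse)           # [0.0, 0.25, 0.0, 0.0]
print(sum(prediction))  # 0 -> the thin object has vanished
```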

Figure 2. Visualization of feature maps and the semantic flow field in FAM. Feature maps are visualized by averaging along the channel dimension, where large values are denoted by hot colors and vice versa. To visualize the semantic flow field, we adopt the color code proposed by [2], shown in the top-right corner, where the orientation and magnitude of flow vectors are represented by hue and saturation, respectively.

There is also a set of works focusing on designing light-weight networks for real-time scene parsing. ESPNets [32, 33] save computation by decomposing standard convolution into point-wise convolution and a spatial pyramid of dilated convolutions. BiSeNet [50] introduces a spatial path and a semantic path to reduce computation. Recently, several methods [35, 48, 58] use AutoML algorithms to search for efficient architectures for scene parsing. Our method is complementary to these works and further boosts segmentation speed, as demonstrated in our experiments.

Our proposed semantic flow is inspired by optical flow [11], which is widely used in video semantic segmentation for both high accuracy and speed. For accurate results, optical flow is used extensively to exploit temporal information: Gadde et al. [14] warp internal feature maps, and Nilsson et al. [37] warp final semantic maps. For faster speed, optical flow is used to bypass the low-level feature computation of some frames by warping features from their preceding frames [27, 64]. Our work differs from these by propagating information hierarchically in another dimension, which is orthogonal to temporal propagation in videos.

3. Method

In this section, we first give some preliminary knowledge about scene parsing and introduce the misalignment problem therein. Then, we propose the Flow Alignment Module (FAM), which resolves the misalignment issue by learning Semantic Flow and warping top-layer feature maps accordingly. Finally, we present the whole network architecture, built on the FPN framework [28] and equipped with FAMs, for fast and accurate scene parsing.

3.1. Preliminary

… downsampling rates of {4, 8, 16, 32} with respect to the input image. The coarsest feature map $F_5$ comes from the deepest layer and has the strongest semantics. FCN-32s predicts directly on it, which produces over-smoothed results without fine details; improvements are achieved by fusing predictions from lower levels [29]. FPN goes a step further and gradually fuses high-level feature maps into low-level feature maps along a top-down pathway through 2× bilinear upsampling. It was originally proposed for object detection [28] and has recently been used for scene parsing [21, 47].
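Below is a minimal plain-Python sketch of one FPN top-down step on single-channel 2-D lists. It assumes the half-pixel bilinear convention that PyTorch's `torch.nn.functional.interpolate(..., mode="bilinear", align_corners=False)` uses, and fusion by element-wise addition as in the original FPN. Real implementations work on multi-channel tensors and usually add 1×1 lateral convolutions, which are omitted. The function names are hypothetical.

```python
def bilinear_upsample_2x(feat):
    """2x bilinear upsampling of a 2-D list (half-pixel, align_corners=False style)."""
    h, w = len(feat), len(feat[0])

    def src(i, n):
        # Map output index i to a fractional source coordinate, clamped to the grid.
        x = max((i + 0.5) / 2 - 0.5, 0.0)
        x0 = min(int(x), n - 1)
        x1 = min(x0 + 1, n - 1)
        return x0, x1, x - x0

    out = []
    for i in range(2 * h):
        y0, y1, wy = src(i, h)
        row = []
        for j in range(2 * w):
            x0, x1, wx = src(j, w)
            top = feat[y0][x0] * (1 - wx) + feat[y0][x1] * wx
            bottom = feat[y1][x0] * (1 - wx) + feat[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


def fpn_top_down_step(coarse, lateral):
    """One FPN fusion: upsample the coarser map 2x and add the lateral (finer) map."""
    up = bilinear_upsample_2x(coarse)
    return [[u + l for u, l in zip(ur, lr)] for ur, lr in zip(up, lateral)]


coarse = [[0, 4], [8, 12]]             # toy 2 x 2 top-level map
lateral = [[1] * 4 for _ in range(4)]  # toy 4 x 4 lateral map
for row in fpn_top_down_step(coarse, lateral):
    print(row)
```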

The whole design looks symmetric, with a downsampling encoder and an upsampling decoder. However, an important issue lies in a common and simple operator, bilinear upsampling, which breaks the symmetry. Bilinear upsampling recovers the resolution of downsampled feature maps by interpolating at a set of uniformly sampled positions (i.e., it can handle only one kind of fixed, predefined misalignment). The misalignment between feature maps caused by a residual connection, in contrast, is far more complex. Therefore, the correspondence between feature maps needs to be explicitly established to resolve their true misalignment.
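The "fixed, predefined" nature of bilinear upsampling can be seen by listing the fractional source positions that a 2× upsampling reads from. The sketch below assumes the half-pixel (`align_corners=False`) convention and shows positions before border clamping. The helper name is hypothetical.

```python
def bilinear_source_coords(out_size, scale=2):
    """Fractional source positions read by bilinear upsampling (half-pixel convention).

    The positions depend only on out_size and scale, never on the feature content.
    """
    return [(i + 0.5) / scale - 0.5 for i in range(out_size)]


print(bilinear_source_coords(6))  # [-0.25, 0.25, 0.75, 1.25, 1.75, 2.25]
```

Every output pixel samples a fixed quarter-pixel offset on a uniform lattice, whatever the features contain. A content-dependent offset field, such as the one FAM predicts, is what relaxes this constraint.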

Figure 3. (a) Details of the Flow Alignment Module. We combine the transformed high-resolution feature map and the low-resolution feature map to generate the semantic flow field, which is used to warp the low-resolution feature map to the high-resolution feature map. (b) Warp procedure of the Flow Alignment Module. Each value of the high-resolution feature map is the bilinear interpolation of neighboring pixels in the low-resolution feature map, where the neighborhoods are defined according to the learned semantic flow field (i.e., offsets).

Figure 4. Overview of our proposed SFNet. A ResNet-18 backbone with four stages is used for illustration. FAM: Flow Alignment Module. PPM: Pyramid Pooling Module [60].

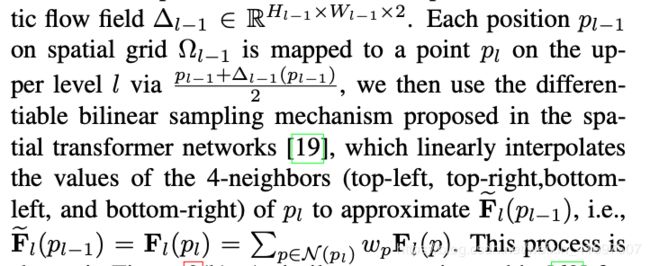

3.2. Flow Alignment Module

The task is formally similar to aligning two video frames via optical flow [11]. This motivates us to design a flow-based alignment module that aligns the feature maps of two adjacent levels by predicting a flow field. We call such a flow field, generated between different levels of a feature pyramid, Semantic Flow. Specifically, we follow the design of FlowNet-S [11] for its efficiency. Given two adjacent feature maps $F_l$ and $F_{l-1}$, we first upsample $F_l$ to the same size as $F_{l-1}$ via bilinear interpolation. We then concatenate them and pass the result through a convolutional layer with two kernels of spatial size 3 × 3 to predict the semantic flow field …

… shown in Figure 3(b). A similar strategy is used in [63] for self-supervised monocular depth learning via view synthesis. The proposed module is light-weight and end-to-end trainable. Figure 3(a) gives its detailed settings, and Figure 3(b) shows the warping process. Figure 2 visualizes the feature maps of two adjacent levels, their learned semantic flow, and the final warped feature map. As shown in Figure 2, the warped feature is more structured than the plain bilinearly upsampled feature and leads to more consistent representations inside objects such as buses and cars.
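The described steps can be put together in a minimal single-channel sketch in plain Python: bilinear upsampling of $F_l$, concatenation with $F_{l-1}$, a convolution with two 3 × 3 output kernels, and flow-guided bilinear warping. Everything beyond those steps is an assumption of this sketch:

- the function names are hypothetical;
- the flow is measured in fine-grid pixels, with channel 0 as the vertical offset and channel 1 as the horizontal offset;
- a displaced fine position p is mapped to p / 2 on the coarse grid;
- samples are clamped at the border.

A framework implementation would use learned multi-channel convolutions and a differentiable sampler such as PyTorch's `torch.nn.functional.grid_sample`.

```python
import math


def bilinear_sample(feat, y, x):
    """Bilinearly sample a 2-D list at fractional (y, x), clamping to the border."""
    h, w = len(feat), len(feat[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = feat[y0][x0] * (1 - wx) + feat[y0][x1] * wx
    bottom = feat[y1][x0] * (1 - wx) + feat[y1][x1] * wx
    return top * (1 - wy) + bottom * wy


def flow_warp(coarse, flow_y, flow_x, scale=2.0):
    """Warp `coarse` onto the flow grid; assumed mapping: (p + flow(p)) / scale."""
    h, w = len(flow_y), len(flow_y[0])
    return [[bilinear_sample(coarse, (y + flow_y[y][x]) / scale, (x + flow_x[y][x]) / scale)
             for x in range(w)] for y in range(h)]


def conv3x3(channels, weights, bias):
    """Zero-padded 3x3 cross-correlation: len(weights) filters over len(channels) inputs."""
    h, w = len(channels[0]), len(channels[0][0])
    outputs = []
    for filt, b in zip(weights, bias):
        out = [[float(b)] * w for _ in range(h)]
        for chan, kernel in zip(channels, filt):
            for y in range(h):
                for x in range(w):
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            yy, xx = y + dy, x + dx
                            if 0 <= yy < h and 0 <= xx < w:
                                out[y][x] += kernel[dy + 1][dx + 1] * chan[yy][xx]
        outputs.append(out)
    return outputs


def flow_alignment(fine, coarse, weights, bias):
    """Hypothetical single-channel FAM: upsample F_l, concat with F_{l-1}, predict flow, warp F_l."""
    h, w = len(fine), len(fine[0])
    zeros = [[0.0] * w for _ in range(h)]
    upsampled = flow_warp(coarse, zeros, zeros)  # zero flow = plain bilinear upsampling here
    flow_y, flow_x = conv3x3([fine, upsampled], weights, bias)  # two 3x3 output kernels
    return flow_warp(coarse, flow_y, flow_x)
```

With all-zero weights and biases, the predicted flow is zero and the module reduces to plain bilinear upsampling. Any non-zero flow changes where each high-resolution position reads from in the low-resolution map, which is the extra freedom the learned flow provides.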

3.3. Network Architectures

Figure 4 illustrates the whole network architecture, which contains a bottom-up pathway as the encoder and a top-down pathway as the decoder. The encoder uses the same backbone as image classification, with the fully connected layers replaced by a contextual modeling module. The decoder is an FPN equipped with FAMs. Each part is described below.

Backbone We choose standard networks pretrained on ImageNet [43] for image classification as our backbone networks, removing the last fully connected layer. Specifically, the ResNet series [17], ShuffleNet v2 [30] and the DF series [48] are used in our experiments. All backbones have four stages with residual blocks. Each stage has stride 2 in its first convolution layer to downsample the feature map, for both computational efficiency and larger receptive fields.
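For a concrete sense of scale, the sketch below lists each stage's output size for a full-resolution 1024 × 2048 Cityscapes image. It assumes the per-stage downsampling rates {4, 8, 16, 32} given in Section 3.1 and exact integer division (real backbones may round differently for sizes not divisible by the rate). The helper name is hypothetical.

```python
def stage_sizes(height, width, rates=(4, 8, 16, 32)):
    """Spatial size (H, W) of each backbone stage output for the given downsampling rates."""
    return [(height // r, width // r) for r in rates]


print(stage_sizes(1024, 2048))
# [(256, 512), (128, 256), (64, 128), (32, 64)]
```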

Contextual Module plays an important role in scene parsing by capturing long-range contextual information [54, 60], and we adopt the Pyramid Pooling Module (PPM) [60] in this work. Since PPM outputs a feature map with the same resolution as the last residual module, we treat PPM and the last residual module together as the last stage for FPN. Other modules, such as ASPP [5], can be readily plugged into our architecture in a similar manner, as verified in the Experiment section.
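PPM itself is defined in [60]. The sketch below only illustrates its multi-scale pooling stage on a single-channel 2-D list. The bin sizes (1, 2, 3, 6) are the PSPNet defaults and are an assumption here, since this section does not list them. The 1×1 convolutions, upsampling and concatenation that follow in a real PPM are omitted, and the function names are hypothetical.

```python
def adaptive_avg_pool(feat, bins):
    """Average-pool a 2-D list onto a bins x bins grid.

    Cell i spans [floor(i * n / bins), ceil((i + 1) * n / bins)), the rule used by
    PyTorch's AdaptiveAvgPool2d.
    """
    h, w = len(feat), len(feat[0])
    pooled = []
    for i in range(bins):
        y0, y1 = (i * h) // bins, -((-(i + 1) * h) // bins)  # integer floor / ceil
        row = []
        for j in range(bins):
            x0, x1 = (j * w) // bins, -((-(j + 1) * w) // bins)
            cell = [feat[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(cell) / len(cell))
        pooled.append(row)
    return pooled


def pyramid_pool(feat, bin_sizes=(1, 2, 3, 6)):
    """Pool one feature map at several grid sizes, one result per PPM branch."""
    return {b: adaptive_avg_pool(feat, b) for b in bin_sizes}


feature = [[6 * y + x for x in range(6)] for y in range(6)]  # toy 6 x 6 map
for bins, grid in pyramid_pool(feature).items():
    print(bins, grid[0][0])
```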

Cascaded Deeply Supervised Learning We use a deeply supervised loss [60] to supervise intermediate outputs of the decoder for easier optimization. In addition, we use online hard example mining [44, 50], training only on the 10% hardest pixels sorted by cross-entropy loss.
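The hard-example rule stated above can be sketched as follows: compute a cross-entropy loss per pixel, keep the 10% largest, and average only those. The sketch works on flat Python lists with hypothetical names. Actual training code would do the same on tensors.

```python
import math


def pixel_cross_entropy(probs, label):
    """Cross-entropy of one pixel: -log of the probability assigned to its true class."""
    return -math.log(probs[label])


def ohem_loss(pixel_losses, keep_ratio=0.10):
    """Average only the hardest `keep_ratio` fraction of per-pixel losses."""
    k = max(1, int(round(len(pixel_losses) * keep_ratio)))
    hardest = sorted(pixel_losses, reverse=True)[:k]
    return sum(hardest) / k


# Hypothetical predictions for 20 pixels of a 2-class problem (true class is 0).
probs = [[0.05 * (i + 1), 1 - 0.05 * (i + 1)] for i in range(20)]
losses = [pixel_cross_entropy(p, 0) for p in probs]
print(ohem_loss(losses))  # mean loss of the 2 least confident pixels
```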

4. Experiment

We first carry out experiments on the Cityscapes [8] dataset, which consists of a large, diverse set of high-resolution (2048 × 1024) images recorded in street scenes. This dataset has 5,000 images with high-quality pixel-wise annotations for 19 classes, divided into 2,975, 500 and 1,525 images for training, validation and testing, respectively. Note that the 20,000 coarsely labeled images provided by this dataset are not used in this work. We also summarize experiments on Pascal Context [12], ADE20K [62] and CamVid [3] to further demonstrate the effectiveness of our method.

4.1. Experiments on Cityscapes

Implementation details: We use the PyTorch [40] framework to carry out the following experiments. All networks are trained with the same setting: stochastic gradient descent (SGD) with a batch size of 16 is used as the optimizer, with momentum of 0.9 and weight decay of 5e-4. All models are trained for 50K iterations with an initial learning rate of 0.01. As is common practice, the “poly” learning rate policy is adopted, decaying the initial learning rate by multiplying it by $(1 - \frac{iter}{total\_iter})^{0.9}$ during training. Data augmentation consists of random horizontal flipping, random resizing with a scale range of [0.75, 2.0], and random cropping with a crop size of 1024 × 1024.
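The "poly" learning-rate policy can be written as a small function. The defaults mirror the stated settings (initial learning rate 0.01, 50K iterations). The exponent 0.9 is the value conventionally used with this policy, and the function name is hypothetical.

```python
def poly_lr(iteration, base_lr=0.01, total_iters=50_000, power=0.9):
    """'Poly' policy: base_lr * (1 - iteration / total_iters) ** power."""
    return base_lr * (1 - iteration / total_iters) ** power


for it in (0, 10_000, 25_000, 40_000, 50_000):
    print(f"iter {it:>6}: lr = {poly_lr(it):.6f}")
```

The rate decays smoothly from 0.01 to exactly 0 at the last iteration, more slowly than linearly at first because the exponent is below 1.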

During inference, we use the whole image as input to report performance unless explicitly mentioned otherwise. For quantitative evaluation, the mean of class-wise intersection over union (mIoU) is used for accuracy comparison. The number of floating-point operations (FLOPs) and frames per second (FPS) are used for speed comparison. Most ablation studies are conducted on the validation set, and we also compare our method with other state-of-the-art methods on the test set.
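For reference, here is a minimal sketch of the mIoU metric. It accumulates a confusion matrix, computes IoU = TP / (TP + FP + FN) per class, and averages over classes. The ignore label 255 and the rule of skipping classes that never appear are assumptions of this sketch (common conventions, not details given in the text), and the function name is hypothetical.

```python
def mean_iou(preds, labels, num_classes, ignore_index=255):
    """Mean of class-wise intersection over union from flat prediction/label lists.

    Pixels labelled `ignore_index` are skipped; classes absent from both the
    predictions and the labels are excluded from the mean.
    """
    conf = [[0] * num_classes for _ in range(num_classes)]  # conf[true][pred]
    for p, t in zip(preds, labels):
        if t != ignore_index:
            conf[t][p] += 1
    ious = []
    for c in range(num_classes):
        tp = conf[c][c]
        fp = sum(conf[t][c] for t in range(num_classes)) - tp
        fn = sum(conf[c]) - tp
        if tp + fp + fn > 0:
            ious.append(tp / (tp + fp + fn))
    return sum(ious) / len(ious) if ious else 0.0


print(mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2))  # (1/2 + 2/3) / 2
```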

Comparison with baseline methods: Table 1 compares our method against baselines on the Cityscapes [8] validation set, with ResNet-18 [17] as the backbone. Compared with the naive FCN, the dilated FCN improves mIoU by 1.1%. Appending the FPN decoder to the naive FCN gives 74.8% mIoU, an improvement of 3.2%. Replacing bilinear upsampling with the proposed FAM boosts mIoU to 77.2%, improving on the naive FCN and the FPN decoder by 5.7% and 2.4%, respectively. Finally, we append PPM (Pyramid Pooling Module) [60] to capture global contextual information; together with FAM, this achieves the best mIoU of 78.7%. FAM is also complementary to PPM: adding FAM improves PPM from 76.6% to 78.7%.

Positions to insert FAM: We insert FAM at different stage positions in the FPN decoder and report the results in Table 2. The first three rows show that FAM improves all stages and gives the greatest improvement at the last stage. This demonstrates that misalignment exists at all stages of FPN and is more severe in coarse layers. The finding is consistent with the fact that coarse layers contain stronger semantics but have lower resolution, so they can greatly boost segmentation performance when appropriately upsampled to high resolution. The best performance is achieved by adding FAM to all stages, as listed in the last row.

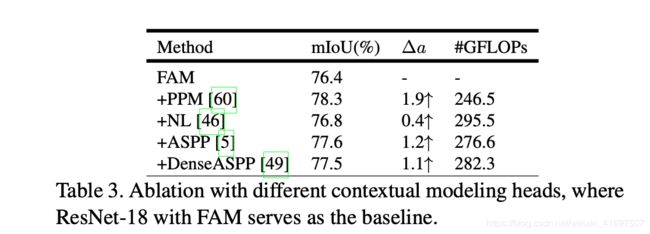

Ablation study on different contextual heads: Current state-of-the-art contextual modules are used as heads on dilated backbone networks [5, 13, 49, 56, 60, 61]. We therefore also try different contextual heads in our method, where the coarse feature map is used for contextual modeling. Table 3 reports the comparison results: PPM [60] delivers the best result, while more recently proposed methods such as Non-Local-based heads [18, 46, 53] perform worse. We therefore choose PPM as our contextual head, given its better performance and lower computational cost.

Table 2. Ablation study on different positions to insert FAM, where $F_l$ denotes the upsampling position between level $l$ and level $l-1$. ResNet-18 with FPN decoder and PPM head serves as the baseline.

Table 3. Ablation with different contextual modeling heads, where ResNet-18 with FAM serves as the baseline.

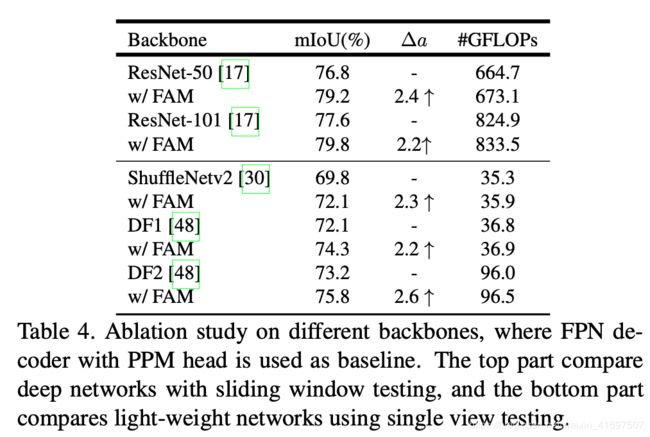

Table 4. Ablation study on different backbones, where an FPN decoder with a PPM head is used as the baseline. The top part compares deep networks with sliding-window testing, and the bottom part compares light-weight networks using single-view testing.

Ablation study on different backbones: We further carry out a set of experiments with different backbone networks, both deep and light-weight, using an FPN decoder with a PPM head as a strong baseline. For heavy networks, we choose ResNet-50 and ResNet-101 [17] as representatives. For light-weight networks, we test ShuffleNetv2 [30] and DF1/DF2 [48]. All these backbones are pretrained on ImageNet [43]. Table 4 reports the results: FAM significantly improves mIoU on all backbones at only a slight extra computational cost.

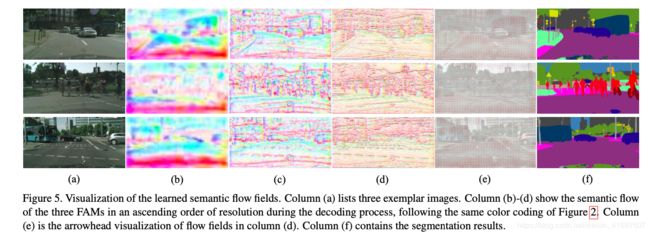

Visualization of Semantic Flow: Figure 5 visualizes the semantic flow from FAM at different stages. As with traditional optical flow, semantic flow is visualized by color coding and bilinearly interpolated to image size for a quick overview. The vector field is also visualized for detailed inspection. The visualization shows that semantic flow tends to converge to certain positions inside objects. These positions are generally near object centers and have better receptive fields for activating top-level features with pure, strong semantics. Top-level features at these positions are then propagated to appropriate high-resolution positions, following the guidance of semantic flow. In addition, semantic flows show a coarse-to-fine trend from the top level to the bottom level. This is consistent with the fact that semantic flows gradually describe offsets between increasingly smaller patterns.
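To illustrate the color coding (hue for direction, saturation for magnitude, as in the Figure 2 caption), the sketch below maps a single flow vector to an RGB triple with Python's standard `colorsys` module. It is a simplified HSV approximation, not the exact color wheel of [2]. Normalizing by a maximum magnitude, the angle-to-hue convention, and the function name are assumptions.

```python
import colorsys
import math


def flow_to_rgb(dx, dy, max_magnitude):
    """Colour-code one flow vector: hue encodes direction, saturation encodes magnitude.

    Simplified HSV stand-in for the colour wheel of [2]; brightness (value) is fixed at 1.
    """
    angle = math.atan2(dy, dx)                 # direction in (-pi, pi]
    hue = (angle / (2 * math.pi)) % 1.0        # map direction to [0, 1)
    saturation = min(math.hypot(dx, dy) / max_magnitude, 1.0)
    return colorsys.hsv_to_rgb(hue, saturation, 1.0)


print(flow_to_rgb(0.0, 0.0, 1.0))  # (1.0, 1.0, 1.0): zero flow is white
print(flow_to_rgb(1.0, 0.0, 1.0))  # (1.0, 0.0, 0.0): full-magnitude flow along +x
```

Applying this per pixel to a dense flow field gives an image in which uniform regions share a color and large offsets appear saturated.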

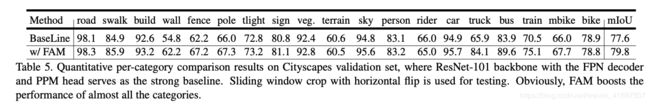

Improvement analysis: Table 5 compares the detailed results for each category on the validation set, with ResNet-101 as the backbone and an FPN decoder with a PPM head as the baseline. Our method improves almost all categories, especially 'truck', with more than 19% mIoU improvement. Figure 6 visualizes the prediction errors of both methods. FAM considerably resolves ambiguities inside large objects (e.g., trucks) and produces more precise boundaries for small and thin objects (e.g., poles, edges of walls).

Comparison with PSPNet: We compare our segmentation results with the previous state-of-the-art model PSPNet [60], using ResNet-101 as the backbone. We re-implement PSPNet using the open-source code provided by the authors and achieve 78.8% mIoU on the validation set. With the same ResNet-101 backbone and no atrous convolution, our method achieves 79.8% mIoU while being about 3 times faster than PSPNet. Figure 7 shows the comparison results: our model gets more consistent results for large objects and keeps more detailed information, benefiting from the well-fused multi-level feature pyramid in our decoder.

Comparison with state-of-the-art real-time models: All compared methods are evaluated with single-scale inference, and input sizes are also listed for fair comparison. Our speed is tested on one GTX 1080Ti GPU with the full-resolution 1024 × 2048 image as input, and we report the speed of two versions, i.e., without and with TensorRT acceleration. As shown in Table 6, our DF1-based method achieves a more accurate result (74.5%) than every method that runs faster. With DF2, our method outperforms all previous methods while running at 60 FPS. With ResNet-18 as the backbone, our method achieves 78.9% mIoU and even matches the performance of accurate models, as discussed in the next experiment. By additionally pretraining on the Mapillary [36] dataset, our ResNet-18-based model achieves 80.4% mIoU at 26 FPS. This sets a new state-of-the-art record for the accuracy/speed trade-off on the Cityscapes benchmark. More details about Mapillary pretraining and TensorRT acceleration can be found in the supplementary file.

对不同骨干网的消融研究:我们进一步对包括深层和轻型网络在内的不同骨干网进行了一组实验,其中使用带有PPM头的FPN解码器作为强基准。对于重型网络,我们选择ResNet-50和ResNet-101 [17]作为表示。对于轻量级网络,已测试ShuffleNetv2 [30]和DF1 / DF2 [48]。所有这些主干都在ImageNet上进行了预训练[43]。表4报告了结果,其中FAM在所有骨干网上仅以略微额外的计算成本即可显着提高mIoU。

语义流的可视化:图5可视化了FAM在不同阶段的语义流。与传统的光流相似,语义流通过颜色编码可视化,并被双线性内插到图像大小,以便快速查看。此外,矢量场也可视化以进行详细检查。从可视化中,我们观察到语义流趋于收敛到对象内部的某些位置,这些位置通常位于对象中心附近,并且具有更好的接受域,可以激活具有纯净,强大语义的顶级功能。然后,根据语义流的指导,将这些位置的顶级功能传播到适当的高分辨率位置。另外,语义流从顶层到底层也有从粗到精的趋势,这一现象与语义流逐渐描述逐渐减小的模式之间的偏移这一事实是一致的。

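Improvement analysis: Table 5 compares detailed per-category results on the validation set, where ResNet-101 is used as the backbone and an FPN decoder with a PPM head serves as the baseline. Our method improves almost all categories, especially truck, with an IoU improvement of more than 19%. Figure 6 visualizes the prediction errors of both methods, where FAM considerably resolves ambiguities inside large objects (e.g., trucks) and produces more precise boundaries for small and thin objects (e.g., poles and the edges of walls).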
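
Per-category numbers such as those in Table 5 are IoU scores. A minimal NumPy sketch, assuming the Cityscapes convention that label 255 marks ignored pixels: accumulate a confusion matrix over the whole validation set, then compute IoU_c = TP_c / (TP_c + FP_c + FN_c) for each class and average over the classes that occur. The function names are hypothetical.

```python
import numpy as np


def confusion_matrix(pred: np.ndarray, gt: np.ndarray, num_classes: int,
                     ignore_index: int = 255) -> np.ndarray:
    """Rows index ground truth, columns index prediction; ignored pixels are skipped."""
    valid = gt != ignore_index
    idx = gt[valid].astype(np.int64) * num_classes + pred[valid].astype(np.int64)
    counts = np.bincount(idx, minlength=num_classes * num_classes)
    return counts.reshape(num_classes, num_classes)


def per_class_iou(conf: np.ndarray) -> np.ndarray:
    """IoU_c = TP / (TP + FP + FN); NaN for classes absent from both gt and pred."""
    tp = np.diag(conf).astype(np.float64)
    fp = conf.sum(axis=0) - tp  # predicted as c, labelled otherwise
    fn = conf.sum(axis=1) - tp  # labelled c, predicted otherwise
    denom = tp + fp + fn
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom > 0, tp / denom, np.nan)


def mean_iou(conf: np.ndarray) -> float:
    return float(np.nanmean(per_class_iou(conf)))
```

Summing `confusion_matrix(pred, gt, 19)` over all validation images before calling `per_class_iou` gives dataset-level IoU; averaging per-image IoU instead would weight small images and rare classes differently.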

Comparison with state-of-the-art accurate models:

State-of-the-art accurate models [13, 49, 60, 65] perform multi-scale and horizontal flip inference to achieve better results on the Cityscapes test server. Although our model can run fast in real-time scenarios with single-scale inference, for fair comparison we also report results with multi-scale and flip testing, a common setting in previous methods [13, 60]. The number of model parameters and computation FLOPs are also listed for comparison. Table 7 summarizes the results, where our models achieve state-of-the-art accuracy while costing much less computation. In particular, our method based on ResNet-18 is 1.1% mIoU higher than PSPNet [60] while requiring only 11% of its computation. Our ResNet-101 based model achieves results comparable to DANet [13] while requiring only 32% of its computation.
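
Multi-scale and flip testing averages class probabilities over rescaled and mirrored copies of the input. The sketch below assumes a hypothetical `model` callable that maps a (3, H, W) image to (C, H, W) probabilities at the same resolution; the scale set is an illustrative assumption, since the exact scales are not given here.

```python
import numpy as np
from typing import Callable, Sequence


def resize(x: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Bilinearly resize a (C, H, W) array (align-corners convention)."""
    _, h, w = x.shape
    ys, xs = np.linspace(0, h - 1, out_h), np.linspace(0, w - 1, out_w)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = (ys - y0)[None, :, None], (xs - x0)[None, None, :]
    top = x[:, y0][:, :, x0] * (1 - wx) + x[:, y0][:, :, x1] * wx
    bottom = x[:, y1][:, :, x0] * (1 - wx) + x[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bottom * wy


def multiscale_flip_predict(model: Callable[[np.ndarray], np.ndarray],
                            image: np.ndarray,
                            scales: Sequence[float] = (0.75, 1.0, 1.25, 1.5, 1.75)
                            ) -> np.ndarray:
    """Average class probabilities over scaled and horizontally flipped inputs."""
    _, h, w = image.shape
    total = None
    for s in scales:
        img_s = resize(image, max(1, round(h * s)), max(1, round(w * s)))
        for flip in (False, True):
            inp = img_s[:, :, ::-1] if flip else img_s
            prob = model(inp)
            if flip:
                prob = prob[:, :, ::-1]  # un-mirror before accumulating
            prob = resize(prob, h, w)    # back to the input resolution
            total = prob if total is None else total + prob
    return total / (2 * len(scales))
```

The final label map is `multiscale_flip_predict(model, image).argmax(axis=0)`. Each extra scale costs a full forward pass, which is why this setting is reserved for the accuracy-oriented comparison rather than the real-time one.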

Figure 5. Visualization of the learned semantic flow fields. Column (a) lists three exemplar images. Columns (b)-(d) show the semantic flow of the three FAMs in ascending order of resolution during the decoding process, following the same color coding as Figure 2. Column (e) is the arrow visualization of the flow fields in column (d). Column (f) contains the segmentation results.

Table 5. Quantitative per-category comparison on the Cityscapes validation set, where a ResNet-101 backbone with the FPN decoder and PPM head serves as the strong baseline. Sliding-window crop with horizontal flip is used for testing. FAM improves the performance of almost all categories.
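
Sliding-window testing, as used for Table 5, runs the network on overlapping crops and averages predictions where crops overlap. The following sketch assumes a hypothetical `model` returning (C, h, w) probabilities for a (3, h, w) crop; the crop and stride defaults are placeholders, not values taken from the paper.

```python
import numpy as np
from typing import Callable, List, Tuple


def window_starts(length: int, crop: int, stride: int) -> List[int]:
    """Start offsets so that windows of size `crop` cover [0, length)."""
    if length <= crop:
        return [0]
    starts = list(range(0, length - crop, stride))
    starts.append(length - crop)  # last window flush with the border
    return starts


def sliding_window_predict(model: Callable[[np.ndarray], np.ndarray],
                           image: np.ndarray, num_classes: int,
                           crop: Tuple[int, int] = (768, 768),
                           stride: Tuple[int, int] = (512, 512),
                           flip: bool = True) -> np.ndarray:
    """Average per-pixel probabilities over overlapping (optionally flipped) crops."""
    assert stride[0] <= crop[0] and stride[1] <= crop[1], "stride > crop leaves gaps"
    _, h, w = image.shape
    ch, cw = min(crop[0], h), min(crop[1], w)
    prob = np.zeros((num_classes, h, w))
    count = np.zeros((1, h, w))
    for y in window_starts(h, ch, stride[0]):
        for x in window_starts(w, cw, stride[1]):
            patch = image[:, y:y + ch, x:x + cw]
            p = model(patch)
            n = 1
            if flip:
                # Mirror the crop, predict, and mirror the prediction back.
                p = p + model(patch[:, :, ::-1])[:, :, ::-1]
                n = 2
            prob[:, y:y + ch, x:x + cw] += p
            count[:, y:y + ch, x:x + cw] += n
    return prob / count
```

Keeping a per-pixel `count` rather than dividing by a fixed number handles the uneven overlap near borders, where the last window is shifted to sit flush with the image edge.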

Figure 6. Qualitative comparison in terms of prediction errors, where correctly predicted pixels are shown as black background while wrongly predicted pixels are colored with their ground-truth label color codes.
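
Error maps like those in Figure 6 can be produced with a simple mask. A sketch, assuming `palette` is a (num_classes, 3) uint8 array of label colors and that ignored pixels (label 255, an assumption) are drawn black like correct ones; `error_map` is a hypothetical name.

```python
import numpy as np


def error_map(pred: np.ndarray, gt: np.ndarray, palette: np.ndarray,
              ignore_index: int = 255) -> np.ndarray:
    """Black where pred == gt (or gt is ignored); ground-truth color where wrong."""
    out = np.zeros(gt.shape + (3,), dtype=np.uint8)
    wrong = (pred != gt) & (gt != ignore_index)
    out[wrong] = palette[gt[wrong]]  # color each error by its true class
    return out
```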

Figure 7. Scene parsing results comparison against PSPNet [60], where significantly improved regions are marked with red dashed boxes. Our method performs better on both small scale and large scale objects.

4.2. Experiment on More Datasets

To further demonstrate the effectiveness of our method, we perform more experiments on three other datasets: PASCAL Context [34], ADE20K [62] and CamVid [3]. The standard settings of each benchmark are used, which are summarized in the supplementary material.

PASCAL Context: This dataset provides pixel-wise segmentation annotations for 59 classes and contains 4,998 training images and 5,105 testing images. As shown in Table 8, our method outperforms the corresponding baselines by 1.7% mIoU and 2.6% mIoU with ResNet-50 and ResNet-101 as backbones, respectively. In addition, our method on both ResNet-50 and ResNet-101 outperforms existing counterparts by large margins with significantly lower computational cost.

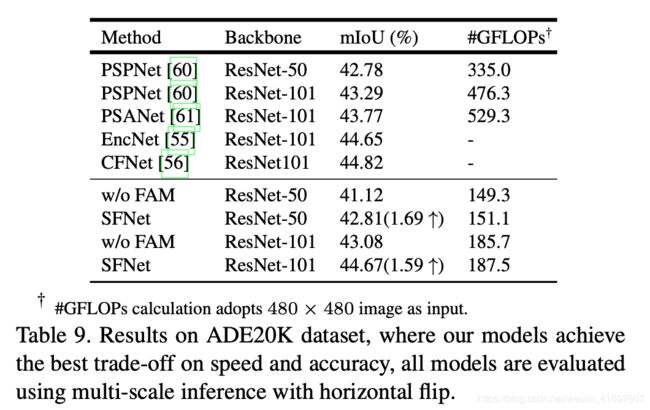

ADE20K: This is a challenging scene parsing dataset annotated with 150 classes, containing 20K/2K images for training and validation. Images in this dataset come from diverse scenes with larger scale variations. Table 9 reports the performance comparison: our method improves the baselines by 1.69% mIoU and 1.59% mIoU, respectively, and outperforms previous state-of-the-art methods [60, 61] with much less computation.

Table 6. Comparison with state-of-the-art real-time models on the Cityscapes test set. For fair comparison, input size is also considered, and all models use single-scale inference.

Table 7. Comparison on Cityscapes test set with state-of-the-art accurate models. For better accuracy, all models use multi-scale inference.

CamVid: This is another road scene dataset for autonomous driving. It contains 367 training images, 101 validation images and 233 testing images with a resolution of 480 × 360. We apply our method with different light-weight backbones on this dataset and report the comparison results in Table 10. With DF2 as the backbone, FAM improves its baseline by 3.4% mIoU. Our method based on ResNet-18 performs best, with 72.4% mIoU while running at 45.2 FPS.

Table 8. Comparison with state-of-the-art methods on the PASCAL Context test set [34]. All models use multi-scale inference with horizontal flip.

Table 9. Results on the ADE20K dataset, where our models achieve the best trade-off between speed and accuracy. All models are evaluated using multi-scale inference with horizontal flip.

Table 10. Accuracy and efficiency comparison with previous state-of-the-art real-time models on the CamVid [3] test set, where the input size is 360 × 480 and single-scale inference is used.

5. Conclusion

In this paper, we propose to use learned Semantic Flow to align the multi-level feature maps generated by a feature pyramid for the task of scene parsing. With the proposed flow alignment module, high-level features flow effectively into low-level feature maps with high resolution. By discarding atrous convolutions to reduce computation overhead and employing the flow alignment module to enrich the semantic representation of low-level features, our network achieves the best trade-off between semantic segmentation accuracy and running time efficiency. Experiments on multiple challenging datasets illustrate the efficacy of our method. Since our network is highly efficient and shares the same spirit as optical flow for aligning different maps (i.e., feature maps of different video frames), it can be naturally extended to video semantic segmentation to align feature maps both hierarchically and temporally. Besides, we are also interested in extending the idea of semantic flow to other related areas such as panoptic segmentation.
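
The alignment step the conclusion refers to amounts to sampling the coarse, high-level feature map at locations displaced by the learned flow. The NumPy sketch below assumes that each fine-grid position p reads the coarse map at (p + Δ(p)) / s with bilinear interpolation, where s is the resolution ratio and coordinates are clamped at the border; in a PyTorch implementation this kind of warp is typically done with `torch.nn.functional.grid_sample`. The function name `flow_warp` is hypothetical, and this is an illustration rather than the authors' implementation.

```python
import numpy as np


def flow_warp(feat: np.ndarray, flow: np.ndarray, stride: float) -> np.ndarray:
    """Warp a coarse (C, h, w) feature map onto the (H, W) grid of `flow`.

    Position p = (x, y) on the fine grid reads the coarse map at
    (p + flow[:, y, x]) / stride via bilinear interpolation (assumed convention).
    """
    _, h, w = feat.shape
    _, H, W = flow.shape
    ys, xs = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    sx = np.clip((xs + flow[0]) / stride, 0, w - 1)  # clamp to the border
    sy = np.clip((ys + flow[1]) / stride, 0, h - 1)
    x0 = np.floor(sx).astype(int)
    y0 = np.floor(sy).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx, wy = sx - x0, sy - y0
    # Weighted sum of the four coarse-grid neighbours of each sampling point.
    return (feat[:, y0, x0] * (1 - wx) * (1 - wy)
            + feat[:, y0, x1] * wx * (1 - wy)
            + feat[:, y1, x0] * (1 - wx) * wy
            + feat[:, y1, x1] * wx * wy)
```

With zero flow this reduces to plain bilinear upsampling; a learned flow lets each high-resolution position pull semantics from a better-matched coarse location instead.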

References

[1] Vijay Badrinarayanan, Alex Kendall, and Roberto Cipolla. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. PAMI, 2017.

[2] Simon Baker, Daniel Scharstein, J. P. Lewis, Stefan Roth, Michael J. Black, and Richard Szeliski. A database and evaluation methodology for optical flow. International Journal of Computer Vision, 92(1):1–31, Mar 2011.

[3] Gabriel J. Brostow, Julien Fauqueur, and Roberto Cipolla. Semantic object classes in video: A high-definition ground truth database. Pattern Recognition Letters, xx(x):xx–xx, 2008.

[4] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L. Yuille. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. PAMI, 2018.

[5] Liang-Chieh Chen, George Papandreou, Florian Schroff, and Hartwig Adam. Rethinking atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587, 2017.

[6] Liang-Chieh Chen, Yukun Zhu, George Papandreou, Florian Schroff, and Hartwig Adam. Encoder-decoder with atrous separable convolution for semantic image segmentation. In ECCV, 2018.

[7] Bowen Cheng, Liang-Chieh Chen, Yunchao Wei, Yukun Zhu, Zilong Huang, Jinjun Xiong, Thomas S. Huang, Wen-Mei Hwu, and Honghui Shi. Spgnet: Semantic prediction guidance for scene parsing. In ICCV, October 2019.

[8] Marius Cordts, Mohamed Omran, Sebastian Ramos, Timo Rehfeld, Markus Enzweiler, Rodrigo Benenson, Uwe Franke, Stefan Roth, and Bernt Schiele. The cityscapes dataset for semantic urban scene understanding. In CVPR, 2016.

[9] Henghui Ding, Xudong Jiang, Ai Qun Liu, Nadia Magnenat-Thalmann, and Gang Wang. Boundary-aware feature propagation for scene segmentation. 2019.

[10] Henghui Ding, Xudong Jiang, Bing Shuai, Ai Qun Liu, and Gang Wang. Context contrasted feature and gated multi-scale aggregation for scene segmentation. In CVPR, 2018.

[11] Alexey Dosovitskiy, Philipp Fischer, Eddy Ilg, Philip Hausser, Caner Hazirbas, Vladimir Golkov, Patrick Van Der Smagt, Daniel Cremers, and Thomas Brox. Flownet: Learning optical flow with convolutional networks. In CVPR, 2015.

[12] Mark Everingham, Luc Van Gool, Christopher KI Williams, John Winn, and Andrew Zisserman. The pascal visual object classes (voc) challenge. IJCV, 2010.

[13] Jun Fu, Jing Liu, Haijie Tian, Zhiwei Fang, and Hanqing Lu. Dual attention network for scene segmentation. arXiv preprint arXiv:1809.02983, 2018.

[14] Raghudeep Gadde, Varun Jampani, and Peter V. Gehler. Semantic video cnns through representation warping. In ICCV, Oct 2017.

[15] Junjun He, Zhongying Deng, and Yu Qiao. Dynamic multi-scale filters for semantic segmentation. In ICCV, October 2019.

[16] Junjun He, Zhongying Deng, Lei Zhou, Yali Wang, and Yu Qiao. Adaptive pyramid context network for semantic segmentation. In CVPR, June 2019.

[17] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, 2016.

[18] Zilong Huang, Xinggang Wang, Lichao Huang, Chang Huang, Yunchao Wei, and Wenyu Liu. Ccnet: Criss-cross attention for semantic segmentation. 2019.

[19] Max Jaderberg, Karen Simonyan, Andrew Zisserman, and Koray Kavukcuoglu. Spatial transformer networks. ArXiv, abs/1506.02025, 2015.

[20] Thomas N. Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. ArXiv, abs/1609.02907, 2016.

[21] Alexander Kirillov, Ross Girshick, Kaiming He, and Piotr Dollár. Panoptic feature pyramid networks. In CVPR, June 2019.

[22] Shu Kong and Charless C. Fowlkes. Recurrent scene parsing with perspective understanding in the loop. In CVPR, 2018.

[23] Hanchao Li, Pengfei Xiong, Haoqiang Fan, and Jian Sun. Dfanet: Deep feature aggregation for real-time semantic segmentation. In CVPR, June 2019.

[24] Hanchao Li, Pengfei Xiong, Haoqiang Fan, and Jian Sun. Dfanet: Deep feature aggregation for real-time semantic segmentation. In CVPR, June 2019.

[25] Xia Li, Zhisheng Zhong, Jianlong Wu, Yibo Yang, Zhouchen Lin, and Hong Liu. Expectation-maximization attention networks for semantic segmentation. In ICCV, 2019.

[26] Yin Li and Abhinav Gupta. Beyond grids: Learning graph representations for visual recognition. In NIPS, 2018.

[27] Yule Li, Jianping Shi, and Dahua Lin. Low-latency video semantic segmentation. In CVPR, June 2018.

[28] Tsung-Yi Lin, Piotr Dollár, Ross B. Girshick, Kaiming He, Bharath Hariharan, and Serge J. Belongie. Feature pyramid networks for object detection. In CVPR, 2017.

[29] Jonathan Long, Evan Shelhamer, and Trevor Darrell. Fully convolutional networks for semantic segmentation. In CVPR, 2015.

[30] Ningning Ma, Xiangyu Zhang, Hai-Tao Zheng, and Jian Sun. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In ECCV, September 2018.

[31] Davide Mazzini. Guided upsampling network for real-time semantic segmentation. In BMVC, 2018.

[32] Sachin Mehta, Mohammad Rastegari, Anat Caspi, Linda Shapiro, and Hannaneh Hajishirzi. Espnet: Efficient spatial pyramid of dilated convolutions for semantic segmentation. In ECCV, September 2018.

[33] Sachin Mehta, Mohammad Rastegari, Linda Shapiro, and Hannaneh Hajishirzi. Espnetv2: A light-weight, power efficient, and general purpose convolutional neural network. In CVPR, June 2019.

[34] Roozbeh Mottaghi, Xianjie Chen, Xiaobai Liu, Nam-Gyu Cho, Seong-Whan Lee, Sanja Fidler, Raquel Urtasun, and Alan Yuille. The role of context for object detection and semantic segmentation in the wild. In CVPR, 2014.

[35] Vladimir Nekrasov, Hao Chen, Chunhua Shen, and Ian Reid. Fast neural architecture search of compact semantic segmentation models via auxiliary cells. In CVPR, June 2019.

[36] Gerhard Neuhold, Tobias Ollmann, Samuel Rota Bulo, and Peter Kontschieder. The mapillary vistas dataset for semantic understanding of street scenes. In ICCV, 2017.

[37] David Nilsson and Cristian Sminchisescu. Semantic video segmentation by gated recurrent flow propagation. In CVPR, June 2018.

[38] Marin Orsic, Ivan Kreso, Petra Bevandic, and Sinisa Segvic. In defense of pre-trained imagenet architectures for real-time semantic segmentation of road-driving images. In CVPR, June 2019.

[39] Adam Paszke, Abhishek Chaurasia, Sangpil Kim, and Eugenio Culurciello. Enet: A deep neural network architecture for real-time semantic segmentation.

[40] Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. Automatic differentiation in pytorch. In NIPS-W, 2017.

[41] Eduardo Romera, Jose M. Alvarez, Luis Miguel Bergasa, and Roberto Arroyo. Erfnet: Efficient residual factorized convnet for real-time semantic segmentation. IEEE Trans. Intelligent Transportation Systems, pages 263–272, 2018.

[42] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for biomedical image segmentation. MICCAI, 2015.

[43] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei. Imagenet large scale visual recognition challenge. IJCV, 2015.

[44] Abhinav Shrivastava, Abhinav Gupta, and Ross Girshick. Training region-based object detectors with online hard example mining. In CVPR, 2016.

[45] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin. Attention is all you need. In NIPS, 2017.

[46] Xiaolong Wang, Ross Girshick, Abhinav Gupta, and Kaiming He. Non-local neural networks. In CVPR, June 2018.

[47] Tete Xiao, Yingcheng Liu, Bolei Zhou, Yuning Jiang, and Jian Sun. Unified perceptual parsing for scene understanding. In ECCV, 2018.

[48] Xin Li, Yiming Zhou, Zheng Pan, and Jiashi Feng. Partial order pruning: for best speed/accuracy trade-off in neural architecture search. In CVPR, 2019.

[49] Maoke Yang, Kun Yu, Chi Zhang, Zhiwei Li, and Kuiyuan Yang. Denseaspp for semantic segmentation in street scenes. In CVPR, 2018.

[50] Changqian Yu, Jingbo Wang, Chao Peng, Changxin Gao, Gang Yu, and Nong Sang. Bisenet: Bilateral segmentation network for real-time semantic segmentation. In ECCV, 2018.

[51] Changqian Yu, Jingbo Wang, Chao Peng, Changxin Gao, Gang Yu, and Nong Sang. Learning a discriminative feature network for semantic segmentation. In CVPR, 2018.

[52] Fisher Yu and Vladlen Koltun. Multi-scale context aggregation by dilated convolutions. ICLR, 2016.

[53] Yuhui Yuan and Jingdong Wang. Ocnet: Object context network for scene parsing. arXiv preprint arXiv:1809.00916, 2018.

[54] Hang Zhang, Kristin Dana, Jianping Shi, Zhongyue Zhang, Xiaogang Wang, Ambrish Tyagi, and Amit Agrawal. Context encoding for semantic segmentation. In CVPR, June 2018.

[55] Hang Zhang, Kristin Dana, Jianping Shi, Zhongyue Zhang, Xiaogang Wang, Ambrish Tyagi, and Amit Agrawal. Context encoding for semantic segmentation. In CVPR, 2018.

[56] Hang Zhang, Han Zhang, Chenguang Wang, and Junyuan Xie. Co-occurrent features in semantic segmentation. In CVPR, June 2019.

[57] Rui Zhang, Sheng Tang, Yongdong Zhang, Jintao Li, and Shuicheng Yan. Scale-adaptive convolutions for scene parsing. In ICCV, 2017.

[58] Yiheng Zhang, Zhaofan Qiu, Jingen Liu, Ting Yao, Dong Liu, and Tao Mei. Customizable architecture search for semantic segmentation. In CVPR, June 2019.

[59] Hengshuang Zhao, Xiaojuan Qi, Xiaoyong Shen, Jianping Shi, and Jiaya Jia. Icnet for real-time semantic segmentation on high-resolution images. In ECCV, September 2018.

[60] Hengshuang Zhao, Jianping Shi, Xiaojuan Qi, Xiaogang Wang, and Jiaya Jia. Pyramid scene parsing network. In CVPR, 2017.

[61] Hengshuang Zhao, Yi Zhang, Shu Liu, Jianping Shi, Chen Change Loy, Dahua Lin, and Jiaya Jia. Psanet: Point-wise spatial attention network for scene parsing. In ECCV, 2018.

[62] Bolei Zhou, Hang Zhao, Xavier Puig, Sanja Fidler, Adela Barriuso, and Antonio Torralba. Semantic understanding of scenes through the ADE20K dataset. arXiv preprint arXiv:1608.05442, 2016.

[63] Tinghui Zhou, Matthew Brown, Noah Snavely, and David G Lowe. Unsupervised learning of depth and ego-motion from video. In CVPR, 2017.

[64] Xizhou Zhu, Yuwen Xiong, Jifeng Dai, Lu Yuan, and Yichen Wei. Deep feature flow for video recognition. In CVPR, July 2017.

[65] Yi Zhu, Karan Sapra, Fitsum A. Reda, Kevin J. Shih, Shawn Newsam, Andrew Tao, and Bryan Catanzaro. Improving semantic segmentation via video propagation and label relaxation. In CVPR, June 2019.

[66] Zhen Zhu, Mengde Xu, Song Bai, Tengteng Huang, and Xiang Bai. Asymmetric non-local neural networks for semantic segmentation. In ICCV, 2019.