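- Java Backend Development Conventions

糖心何包蛋爱编程

java, programming language

In Java backend development, following a clear set of conventions and best practices matters: it improves code quality and maintainability, and it supports team collaboration and a project's long-term health. Anyone learning Java should therefore build good coding habits from the very start. I. Development environment: 1. Java 8; 2. IntelliJ IDEA. II. Java naming: 1. Maven module names are lowercase, with multiple words joined by "-". Correct: example-moud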

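- Common Techniques for Optimizing Java Backend Service API Performance

南波塞文

Java basics, MySQL, database, java, performance optimization

Common techniques for API performance optimization. Contents: Preface; 1. Database indexes; 2. Slow SQL optimization; 3. Asynchronous execution; 4. Batch processing; 5. Data preloading; 6. Pooling (multithreading); 8. Event callbacks; 9. Turning serial calls into parallel calls; 10. Deep pagination. Preface: For programmers with high standards, delivering high-performance service APIs is the goal. Below are some techniques for improving API performance, which I hope you find useful. 1. Database indexes: when an API responds slowly, we may check whether the database queries are slow and then turn to query optimization, and index optimization is the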

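- Java Backend Stock Interview Questions (八股文): A Systematic Study Guide, No More Scattered Knowledge

钢板兽

high-frequency interview questions, java, interview, backend, jvm, redis, mysql, linux

For Java backend interview prep, most people turn to 小林 or JavaGuide, but that material is huge, tens of thousands of characters, and we mostly forget it as soon as we have read it. I once compiled my own question bank from interview write-ups found online; it ran to 50,000 characters, and the topics were scattered. So I want to write up each area systematically as articles. For the JVM, for example, I will write two or three articles to help myself understand that material as a whole. This article therefore collects, by topic, every interview-prep article I have published; it will keep being updated, and it also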

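- Java Backend Stock Interview Questions Roundup (2)

使峹行者

java, interview, backend

II. Java multithreading. 1. Briefly describe the Java Memory Model (JMM). The Java Memory Model defines the rules for accessing the variables in a program. It specifies that all variables are stored in main memory and that every thread has its own working memory. Working memory holds copies of the main-memory variables the thread uses; all of a thread's operations on variables must happen in working memory, and a thread cannot read or write main memory directly. After an operation completes, the thread's working memory flushes the updated data back to main memory via the cache coherence protocol. 2. Briefly describe as-if-serial. Compilers and similar components apply instruction reordering to the original program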

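- Proxying Vue and a Java Backend with Nginx

入职啦

hands-on projects, knowledge, vue.js, nginx, java

This article was published on 入职啦 (WeChat official account: ruzhila); visit 入职啦 for more technical articles. Site architecture: 入职啦 is built on a hybrid of Nuxt.js, Vue3, and Go, covering the official site, a résumé tool, job search, résumé search, and more. It has three parts. Official site: Nuxt.js + Vue3 + TailwindCSS, server-side rendered (SSR). Résumé tool: Vue3 + TailwindCSS, a single-page application (SPA). Backend: Gin + Gorm + MySQL, providing the API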

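- Intelligent Knowledge Management: AI for Optimizing and Innovating Java Backend Development! Exploring Future AI Development Trends!

小南AI学院

artificial intelligence, big data

How Java backend developers can use AI to improve knowledge management. 1. Intelligent management of business materials. Automatic collection and classification: use AI crawlers to collect project documents and industry materials automatically; an intelligent classifier files content under the appropriate knowledge domain; extract key business terms and build a business glossary to align the team's understanding. Building contextual links: AI analyzes the relationships between documents to build a network of related knowledge; automatically tags business rules and key decision points; turns unstructured meeting notes into structured business knowledge. 2. Intelligent requirements management. Multi-source requirement extraction: automatically extract requirements from meeting notes, email, and product documents; identify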

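- Huawei OD: No Experience Requirement, Urgent Hiring, Coding-Test Materials, Interview Tips, Re-Referral If You Fail, Second-Chance Picks

2301_79125642

java

超星 (学习通) Java backend first-round interview; NetEase Games, 40 min (I think I failed); interview notes that do not read much like interview notes; 2023 wrap-up: a front-end sophomore joins Xiaohongshu in the first semester; autumn-recruitment interview notes, first batch; Hikvision infrared image algorithm internship (微影) interview notes; test engineer, experienced hire: testing interview questions; a fool employed at a big tech company; TP-Link image algorithm engineer, rounds one to three; Shenzhen Hikvision infrared image algorithm internship (微影) interview notes; TP-Link early-batch interview notes (offer received); moving from traditional automotive to a planning-and-control algorithm role: autumn-recruitment log; Tencent TEG testing and quality management, full record; Luckin Coffee Java developer campus hire, first round; Tencent FinTech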

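- 2024 Huawei OD Coding Test Question: Extract the Longest Mathematical Expression from a String

2301_79125642

java

I hope people can pick up some experience from my posts #许愿池# #牛客在线求职答疑中心(35799)# #牛客在线求职答疑中心# There is Germany's Fraunhofer logistics research institute and a company called 帝欧; is this internship worth taking? Does anyone know? Offer choice (java backend): Huawei or Bank of Hangzhou? Opinions welcome. Huawei: Huawei Cloud department, work on base platform development (software-leaning), no conversion to full-time. Bank of Hangzhou #牛客在线求职答疑中心(35799)# #牛客在线求职答疑中心# https://www.nowc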

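- [Java Backend Learning Path 4] A SpringBoot + MyBatisPlus + Redis Study Guide from a Self-Taught Career Changer (985 Undergraduate, Overseas Master's)

程序员城南

java backend learning path, java, springboot, mybatis, redis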

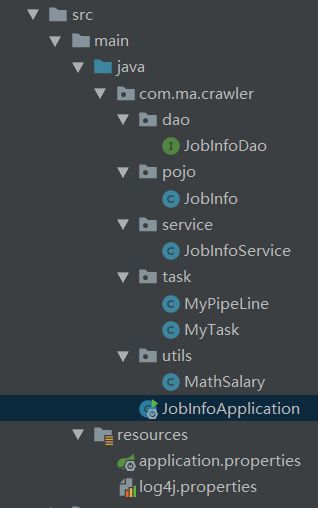

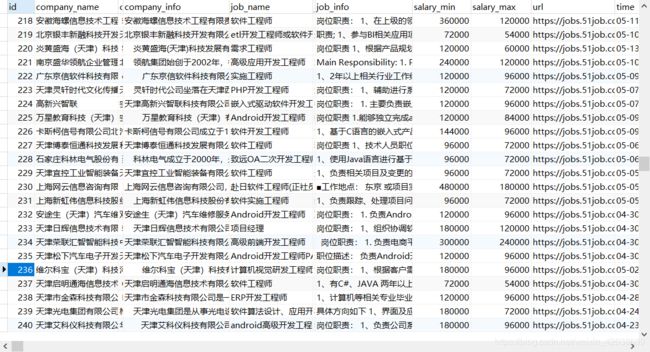

Java backend learning path. Overview: javase -> Mysql -> computer networking -> JavaWeb -> Maven (1) -> Spring -> SpringMVC -> Mybatis -> Maven (2) -> Linux -> Git -> SpringBoot -> MyBatisPlus -> Redis -> JVM -> JUC -> Nginx -> Docker -> RabbitMQ -> SpringCloud -> projects (谷粒商城 / 仿牛客网). Method

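- How Should You Actually Prepare for a Java Backend Interview?

财高八斗者

Java programmer, Java, Java programming, java, interview, jvm

I break interview preparation into these steps: 1. Write your résumé. 2. Prepare the project you know best, one that is reasonably representative. 3. Organize your tech stack. 4. Prepare your self-introduction. 5. Memorize the stock interview questions. 6. Do mock interviews. 7. Fix the problems the mock interviews exposed. 8. Start sending out résumés. I have 10 years of development experience; I have been a coder, a team lead, and an architect, and I have interviewed 500+ people. I now do technical sharing and interview coaching full-time. Drawing on years of interviewing and being interviewed, I put together an interview cheat sheet: 《面试小抄》《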

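- Java Interview Questions

阿芯爱编程

interview, java, java, programming language

Here are some Java backend interview questions. I. Basics. What are Java's primitive data types, and what are their default values? Answer: there are 8 primitive types. Integer types: byte (default 0), short (default 0), int (default 0), long (default 0L). Floating-point types: float (default 0.0f), double (default 0.0d). Character type: char (default '\u0000', the null character). Boolean type: boolean (default fa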
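The default values listed in this excerpt apply to fields, not to local variables, which must be assigned before use. A minimal sketch that prints them; the class name `PrimitiveDefaults` and its field names are made up for illustration:

```java
// Illustrative only: uninitialized instance fields take the type's default value.
class PrimitiveDefaults {
    byte b;
    short s;
    int i;
    long l;
    float f;
    double d;
    char c;
    boolean flag;

    public static void main(String[] args) {
        PrimitiveDefaults p = new PrimitiveDefaults();
        // Integer types default to zero.
        System.out.println(p.b + " " + p.s + " " + p.i + " " + p.l);
        // Floating-point types default to positive zero.
        System.out.println(p.f + " " + p.d);
        // char defaults to '\u0000'; print its numeric code to make it visible.
        System.out.println((int) p.c);
        // boolean defaults to false.
        System.out.println(p.flag);
    }
}
```

Reading an unassigned local variable is a compile-time error, so the same check cannot be written with locals.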

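- Java Backend Development Day 25: Stage Project (II)

元亓亓亓

java backend development, java, programming language

(All content below comes from the course mentioned above.) 1. Polishing the UI. private void initImage() { // Paths come in two kinds: // 1. Absolute path: starts from the drive letter, D:\\aaa\\bbb\\ccc.jpg // 2. Relative path: starts from the current project, aaa\\bbb\\ccc.jpg // When adding images, add them according to the data managed in the 2D array // Outer loop: repeats the inner loop's code 4 times for (int i = 0; iActionList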

- LayoutInflater & Factory2

Android西红柿

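Android basics, java, programming language, android

About the author: CSDN content partner and technical expert who built an app from scratch to ten million daily active users. Focused on sharing original article series across many fields; experienced in java backend, mobile development, monetization, and AI. Your support is appreciated. Unauthorized reproduction is prohibited. Contents: I. Introduction; II. Overview; III. Usage: 3.1 Obtaining a LayoutInflater instance, 3.2 Parsing with the inflate method, 3.3; IV. LayoutInflater.Factory(2): 4.1 Usage, 4.2 Caveats; V. Recommended reading. I. Introduction: we continue reviewing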

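- UML Class Diagrams

Android西红柿

tools and productivity, android, flutter

About the author: CSDN content partner and technical expert who built an app from scratch to ten million daily active users and led a team to more than ten million in single-day revenue. Focused on sharing original article series across many fields; experienced in java backend, mobile development, monetization, and AI. Your support is appreciated. Contents: I. Introduction; II. Overview; III. Recommended reading. I. Introduction: we continue reviewing the fundamentals, learning something new by revisiting the old. II. Overview: nothing more to it, just keeping a record! public void test() { System.out.println("HelloWorld"); } fun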

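- Java Backend Development Day 19: Upgrading the Student Management System

元亓亓亓

java backend development, java, programming language

(All content below comes from the course mentioned above.) 1. Requirements and approach: 1. Overall framework; 2. Registration; 3. Login; 4. Forgotten password. 2. Code. 1. javabean: public class User1 { private String username; private String password; private String personID; private String phoneNumber; public User1() {} public User1(String user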

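- Java Backend Development Day 17: ArrayList and Collections

元亓亓亓

java backend development, java, programming language

(All content below comes from the course mentioned above.) 1. Collections are much like arrays, but they grow automatically. 1. What collections can store: reference types; primitive types too, but only as wrapper classes. 2. Collections versus arrays. 1. Length: arrays have a fixed length; collections have a variable length. 2. Element types: arrays can store primitives and reference types; collections store reference types, and primitives only via wrapper classes. 3. Definition. Generic (E): restricts the type of data stored in the collection. ArrayListlist=newArrayListlis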
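The points in this excerpt (automatic growth, a generic type parameter, primitives stored through wrapper classes) can be seen together in a short sketch. The class name `ArrayListDemo` and the helper `build` are illustrative, not taken from the course:

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch: a generic ArrayList that stores int values as Integer.
class ArrayListDemo {
    // Builds a list of 1..count; the list grows on its own as elements are added.
    static List<Integer> build(int count) {
        List<Integer> list = new ArrayList<>(); // <Integer>, because <int> is not allowed
        for (int i = 1; i <= count; i++) {
            list.add(i); // autoboxing converts int to Integer
        }
        return list;
    }

    public static void main(String[] args) {
        List<Integer> list = build(20);
        int first = list.get(0); // auto-unboxing converts Integer back to int
        System.out.println("size=" + list.size() + ", first=" + first);
    }
}
```

An array of the same data would need its length fixed up front (`new int[20]`), which is the contrast the excerpt draws.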

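- Integrating Deepseek with SpringAI

java技术小馆

java, spring, cloud

Question-answering systems are a common requirement in modern application development, especially in customer service, education, and technical support. This article shows how to build a simple question-answering system with SpringBoot, Deepseek, and SpringAI, and how to call its API with Postman. You will learn how to combine these technologies to get an efficient question-answering system running quickly. 1. Tech stack. SpringBoot: for quickly building Java backend services. Deepseek: a high-performance deep learning inference framework, used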

- Downloading Files with vue3 naive ui + java

weixin_42485982

java, vue

Java backend code: import org.springframework.http.HttpHeaders; import org.springframework.http.MediaType; import org.springframework.http.ResponseEntity; import org.springframework.web.bind.annotation.GetMapping; impor

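- Java Backend Interview Essentials: What Is the Difference Between == and equals in Java?

刘小炮吖i

Java backend development interview questions, interview, java

Corrections are welcome and appreciated. My ability is limited and some parts are incomplete; I have only written down the more practical material. If anything is wrong, I hope the experts will point it out. Thank you! If you are interested in Java backend interview questions, you can follow the interview-question column. Introduction: In Java backend interviews, "What is the difference between == and equals in Java?" comes up often. It looks basic, but the principles and details behind it are essential to a deep understanding of Java's memory management and object comparison. Next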
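A small sketch of the distinction this excerpt introduces, assuming the usual `String` case: `==` compares references, while `String.equals` compares character content. The class and method names are invented for the example:

```java
// Illustrative sketch: reference comparison versus content comparison.
class EqualsDemo {
    // True only when both variables point at the same object.
    static boolean sameReference(String a, String b) {
        return a == b;
    }

    // True when the two strings hold the same characters.
    static boolean sameContent(String a, String b) {
        return a.equals(b);
    }

    public static void main(String[] args) {
        // new String(...) forces two distinct objects with equal content.
        String a = new String("java");
        String b = new String("java");
        System.out.println(sameReference(a, b)); // distinct objects
        System.out.println(sameContent(a, b));   // same characters
    }
}
```

For a class that does not override `equals`, the inherited `Object.equals` falls back to reference comparison, so the two checks agree.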

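- Python Automatic Shift-Scheduling Spreadsheet (Code Sharing)

趣享先生

Python case-sharing column, python, programming language

✅ About the author: ranked eighth among the 2022 rising blog stars (博客新星). A Java backend developer who loves Chinese classical studies and works on character and technique together. Home page: JavaFans的博客. Personal creed: never vent anger on others, never repeat a mistake. Small knowledge, great wisdom. Current column: Java案例分享专栏. ✨ Featured column: 国学周更-心性养成之路. This article: Python automatic shift-scheduling spreadsheet (code sharing). Contents: Preface; Problem description; Solution steps 1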

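- Frequently Asked Java Backend Development Interview Questions

躲在没风的地方

java, interview questions, java, interview, spring

Frequently asked interview questions. 1. Spring. (1) Situations in which @Transactional does not take effect. By default, @Transactional rolls back only on unchecked exceptions (RuntimeException and Error); if the method throws any other exception, the transaction is not rolled back (the data is still inserted into the database). Use @Transactional(rollbackFor=Exception.class). If the method contains a try/catch statement and the code that throws is caught by it, then the method's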

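- Java Backend Interview Questions: Keywords, Data Types, and Operators in Java

青灯文案

interview questions, java, python, programming language

In Java, keywords, data types, and operators are the basic building blocks of programming. I. Java keywords. Java keywords are words with special meaning; they cannot be used as identifiers such as variable, method, or class names. Java has 53 keywords in total. 1. Control-flow keywords: if, else: conditional branching. switch, case, default: multi-way selection. for, while, do, break, continue: loop control. 2. Class and object keywords: class: defines a class. in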

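- Java Backend in 21 Days for Beginners, Day 4: Oracle Database

大人物i

java

Oracle database. Preface. Oracle SQL. Chapter 1: Selecting Rows. Chapter 2: Sorting & Limiting Selected Rows. Chapter 3: Single Row Functions. Chapter 4: Displaying Data from Multiple Tables. Chapter 5: Group Function. Chapter 6: Subqueries. Chapter 7: Specifying Variables at Runtime. Chapter 8: OverviewofData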

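- Java Backend Development Day 14: Summary and Reflections on the Exercises So Far

元亓亓亓

java backend development, java, programming language

1. Impressions. These past two days I have been learning something new and typing code right away, and it is exhausting. My one takeaway: the lectures are done and the code is typed (yes, typed along with the instructor, not written on my own), and my head is a mess. As for how the code is divided up and what each part does, my understanding feels like it is on the tip of my tongue, perhaps thanks to what I picked up in earlier coursework. After a few days of lectures I feel I have some structure, but when it comes to writing it out myself I am clearly not ready. Pressing on would just pile new material on top of the old, so I am taking a day to let things settle. Think it through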

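- Classic Java Backend Development Interview Questions, Summary Part 2

Netty711

java, interview, big data, redis, spring

Four ways to read an XML file. 1. DOM: generating and parsing XML documents. It defines a set of interfaces for the parsed version of an XML document. The parser reads in the whole document and then builds a tree structure that resides in memory. Pros: the whole document tree is in memory, which makes it easy to work with; it supports deleting, modifying, rearranging, and so on. Cons: loading the whole document into memory brings in many unused nodes, wasting time and space. 2. SAX: created to address DOM. (1) Parses while reading; suited to large XML documents. (2) Read-only. (3) Low access efficiency. (4) Sequential access. 3. DOM4J: generating and parsing XM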

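- Database Sharding in a Java Backend Microservice Architecture: Sharding-Sphere

微赚淘客机器人开发者联盟@聚娃科技

architecture, java, microservices

Database sharding in a Java backend microservice architecture: Sharding-Sphere. Hi everyone, I am the editor at 微赚淘客返利系统3.0, a programmer who skips long johns in winter and puts style before warmth! As microservice architectures have spread, scalability problems at the database layer have become more visible. Sharding-Sphere, a distributed database middleware, provides database and table sharding to help developers split data horizontally. Overview of database sharding: sharding distributes data across different databases and tables, in order to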

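- Service Routing in Java Backend Distributed Systems: Smart DNS and Service Mesh

微赚淘客机器人开发者联盟@聚娃科技

java, programming language

Service routing in Java backend distributed systems: smart DNS and service mesh. Hi everyone, I am the editor at 微赚淘客返利系统3.0, a programmer who skips long johns in winter and puts style before warmth! In a distributed system, service routing is the key technique for getting requests to the target service efficiently and reliably. Smart DNS and service mesh are two different ways to implement it. Overview of service routing: service routing distributes requests to different service instances or clusters according to a policy. Smart DNS: smart DNS uses domain name resolution to point requests at the best service node, usually based on geographic loc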

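- Aspect-Oriented Programming on Android with AspectJ

ljt2724960661

Android basics, android, java

This section looks at AspectJ, an AOP (Aspect Oriented Programming) technology: a way to maintain program functionality in one place through precompilation and runtime dynamic proxies. It was first used in Java backends, for example in Spring. On Android, typical uses are method timing, analytics event tracking, and logging. It is used because it reduces coupling, improves reusability, and speeds up development. Common AOP A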

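- 2023 Ele.me Java Backend Interview Notes and NetEase Java Interview Notes [Give Them a Try!]_Ele.me Interview

2501_90326065

java, interview, programming language

2023 Ele.me Java backend interview notes and NetEase Java interview notes. Ele.me Java backend interview notes (felt a bit hard). NetEase regular (日常) Java interview notes. Previous posts >>> The most complete Java interview questions [read in five minutes]; Selected Java backend interview questions; Classic Java interview questions with answers (5); Classic Java interview questions with answers (4); Classic Java interview questions with answers (3); 2023 spring-recruitment Java multiple-choice questions with answer explanations… Ele.me Java backend interview notes (felt a bit hard): 1. Self-introduction. 2. What year did you start learning Java? 3. The JVM's memory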

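- git-submodule-python: Managing Subprojects

U_U

submodule, git, python

Recently a java project was split into several submodules: the main project holds the java backend code, and the subprojects hold db scripts and h5 code. The submodules are fairly stable and are rarely added or removed. We often have to build packages locally and send them to customers for deployment, without an automated build. The submodules frequently end up on a detached HEAD, and commits keep flagging the submodule with "new commits"; there are plenty of pitfalls and it gets annoying. So I wrote a simple python script to manage projects that contain git submodules, and for mac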

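- Configuring Environment Variables for the jdk and tomcat

Array_06

java, jdk, tomcat

How to configure java environment variables on Win7

1. Get the jdk package, a win7 system, and the tomcat installer (all can be downloaded online).

2. Install the jdk, preferably to the default path (but remember it; you will need it for configuration later).

3. Configure each of the advanced environment variables.

Computer --> right-click Properties --> Advanced environment variables --> Environment Variables.

Configure each of:

path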

- java.lang.NoSuchFieldError When Calling an SDK Package from Spring

bijian1013

java, spring

While calling another system's SDK package at work, the following java.lang.NoSuchFieldError occurred.

org.springframework.web.util.NestedServletException: Handler processing failed; nested exception is java.l

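- LeetCode [Bit Manipulation] - #136 Single Number

Cwind

java, solutions, bit manipulation, LeetCode, Algorithm

Original problem: #136 Single Number

Requirements:

Given an integer array in which every element appears twice except for one, find that element.

Note: the algorithm should run in O(n) time, preferably without extra memory.

Difficulty: Medium

Analysis:

The problem demands linear time and no extra space, so the result must come from a single pass over the array. Because XOR satisfies n XOR n = 0 and n XOR 0 = n, taking every element of the array and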
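The analysis is cut off here, but the two identities it quotes point to the standard approach: XOR every element together, so that paired values cancel and only the unpaired one remains. A minimal sketch under that assumption; the class and method names are illustrative and not taken from the original post:

```java
// Illustrative sketch of the XOR approach to LeetCode #136.
class SingleNumberDemo {
    // One pass, O(n) time, O(1) extra space.
    static int singleNumber(int[] nums) {
        int result = 0;          // n XOR 0 = n, so 0 is the neutral starting value
        for (int n : nums) {
            result ^= n;         // n XOR n = 0, so every pair cancels out
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(singleNumber(new int[] {4, 1, 2, 1, 2}));
    }
}
```

XOR is commutative and associative, which is why the order in which the pairs appear does not matter.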

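- Building a QQ Login UI

15700786134

qq

Today we will build a QQ login UI. Start with a UI program: a UI begins with a Frame object, that is, a window. Other components are then placed on this window. The code is as follows: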

public class First { public void initul(){ jf=ne

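- RPM, the Linux Package Manager

被触发

linux

In the early days, installing software from source meant first compiling the source code into an executable binary installer and then installing it. Every installation therefore went through preprocessing --> compilation --> assembly --> linking --> building the installer --> installation, a complicated and painful process. To simplify installation and make deployment easier for users, software vendors compile the installation files for a specific system ahead of time and package them for download; we only need to, according to our own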

- EOFException During Socket Communication

肆无忌惮_

EOFException

java.io.EOFException

at java.io.ObjectInputStream$PeekInputStream.readFully(ObjectInputStream.java:2281)

at java.io.ObjectInputStream$BlockDataInputStream.readShort(ObjectInputStream.java:

- Scheduled Tasks in a Spring-Based Web Project

知了ing

javaWeb

No preamble, straight to the code. It is simple: configure it and start the project.

1. web.xml

<?xml version="1.0" encoding="UTF-8"?>

<web-app xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns="h

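- Database Table Schema Design for Tree Structures

矮蛋蛋

schema

Original article:

http://blog.csdn.net/MONKEY_D_MENG/article/details/6647488

In software design we often use tree structures to represent relationships in data, such as a company's department hierarchy, site section structure, or product categories. These trees usually need to be persisted in a database. However, today's relational databases all store data as two-dimensional tables,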

- Installing a jar Together with Its Sources into the Local maven Repository

alleni123

maven

http://stackoverflow.com/questions/4031987/how-to-upload-sources-to-local-maven-repository

<project>

...

<build>

<plugins>

<plugin>

<groupI

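- Java IO and File: Getting a File's or Folder's Size, Readability, and Other Properties!!!

百合不是茶

class File

File is an abstract representation of file and directory pathnames.

1. What counts as a file:

Regular files (txt doc mp3...)

Directory files (folders)

Virtual memory files

2. The File class has a createNewFile() method for creating a file; creating a new file needs try{} catch(){} because it may throw an exception. It also has isFile(), which checks whether a file is a regular file. These methods all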
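The two methods named in this excerpt, `createNewFile()` and `isFile()`, can be tried out as below. This is a sketch under stated assumptions: the file is created in the system temp directory and deleted afterwards, and the class name `FileDemo` and helper `createAndCheck` are made up for the example.

```java
import java.io.File;
import java.io.IOException;
import java.io.UncheckedIOException;

// Illustrative sketch: create a file, then check that it is a regular file.
class FileDemo {
    // Returns true if the file was newly created and is a regular file.
    static boolean createAndCheck(File f) {
        try {
            // createNewFile() returns false when the file already exists
            // and throws IOException on an I/O error.
            boolean created = f.createNewFile();
            return created && f.isFile();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        File dir = new File(System.getProperty("java.io.tmpdir"));
        File f = new File(dir, "file-demo-" + System.nanoTime() + ".txt");
        try {
            System.out.println("created regular file: " + createAndCheck(f));
            System.out.println("length=" + f.length() + ", canRead=" + f.canRead());
            System.out.println("temp dir is a regular file: " + dir.isFile());
        } finally {
            f.delete(); // clean up the demo file
        }
    }
}
```

`length()` and `canRead()` cover the size and readability properties mentioned in the post's title.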

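- Injecting Classes with an Inheritance Relationship in Spring (2)

bijian1013

java, spring

When the parent class of the injected class has the corresponding properties, Spring can inject them directly, as in the following example: 1. The AClass class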

package com.bijian.spring.test4;

public class AClass {

private String a;

private String b;

public String getA() {

retu

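- Can You Become a Success During Your Transition at 30?

bijian1013

personal growth, motivation

Many people take wrong turns when young and have nothing to show for it by 30; there are plenty of such cases. Others do well throughout their careers and by 30 are already among the professional elite. As headhunters, we meet many managers around 30 and find that they often have serious problems along their career paths. Before 30 their careers looked excellent, but between 30 and 40, many people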

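- [Velocity 4] Velocity and Java Interoperation

bit1129

velocity

Velocity was created to simplify MVC-based web application development and to replace JSP tag technology. So how does Velocity access Java code? This post builds on the example in Velocity 3, http://bit1129.iteye.com/blog/2106142,

POJO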

package com.tom.servlets;

public

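- [Hive 11] Optimizing Hive Data Skew

bit1129

hive

What is the Hive data skew problem?

Operations: join, group by, count distinct

Symptom: job progress stays at 99% (or 100%) for a long time. The job monitoring page shows that only a few reduce subtasks (one or several) are unfinished. Those unfinished subtasks show a very large accumulated volume of local reads and writes; more than 10GB can usually be taken as a sign of data skew.

Cause: uneven key distribution

Measuring skew: the average record count exceeds 500,000 and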

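- Integrating lua Scripts into nginx: Adding Custom Http Headers, Blocking IPs, and More

ronin47

nginx lua csrf

Lua is a dynamic scripting language that can be embedded in Nginx configuration files, so Lua code can run at any stage of Nginx request processing. At first we only used Lua to route requests to backend servers, but its effect on our architecture exceeded our expectations. Here is what we did. Forcing search engines to index only mixlr.com

Google treats subdomains as completely separate sites. We do not want crawlers to fetch pages on our subdomains and lower our Page rank.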

location /{

- java-3. Maximum Subarray Sum

bylijinnan

java

package beautyOfCoding;

public class MaxSubArraySum {

/**

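* 3. Maximum subarray sum

Problem:

The input is an integer array containing both positive and negative numbers.

One or more consecutive integers in the array form a subarray, and every subarray has a sum.

Find the maximum sum over all subarrays. The required time complexity is O(n).

For example, given the input array 1, -2, 3, 10, -4,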
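The original solution is cut off before its code. One well-known way to meet the O(n) requirement is Kadane's algorithm, sketched here as an assumption about the intended approach rather than a reconstruction of the author's class. The name `MaxSubArrayDemo` is illustrative, and the sample array uses only the five values visible in the truncated example:

```java
// Illustrative sketch: Kadane's algorithm for the maximum subarray sum.
class MaxSubArrayDemo {
    // Assumes a non-empty array; runs in O(n) time with O(1) extra space.
    static int maxSubArraySum(int[] a) {
        int best = a[0];     // best sum seen so far
        int current = a[0];  // best sum of a subarray ending at the current index
        for (int i = 1; i < a.length; i++) {
            // Either extend the previous subarray or start a new one at a[i].
            current = Math.max(a[i], current + a[i]);
            best = Math.max(best, current);
        }
        return best;
    }

    public static void main(String[] args) {
        // Only the visible prefix of the truncated example: the best subarray is {3, 10}.
        System.out.println(maxSubArraySum(new int[] {1, -2, 3, 10, -4})); // 13
    }
}
```

Starting `best` and `current` at `a[0]` instead of 0 keeps the result correct when every element is negative.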

- Studying the Netty Source: FileRegion

bylijinnan

java, netty

Today I read org.jboss.netty.example.http.file.HttpStaticFileServerHandler.java

You can write a FileRegion object straight to the channel without a corresponding encoder:

//pipeline (there is no handler such as "FileRegionEncoder"):

public ChannelPipeline ge

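- Using ZeroClipboard for Cross-Browser Copy to Clipboard

cngolon

cross-browser, copy to clipboard, Zero Clipboard

How Zero Clipboard works

Zero Clipboard floats a transparent Flash movie over the copy button, so a click actually lands on the Flash movie rather than the button. The content to copy is passed into Flash, and Flash's copy capability places it on the clipboard.

Installing Zero Clipboard

First download the Zero Clipboard archive. After extracting it, take the two files in the folder: ZeroClipboard.js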

- The Singleton Pattern

cuishikuan

singleton pattern

Variant 1 (lazy initialization, not thread-safe):

public class Singleton { private static Singleton instance; pri
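The snippet above is cut off after the `instance` field. A minimal sketch of the lazy, non-thread-safe variant it names could look like the following; the private constructor and the `getInstance` method are assumptions about the rest of the original class, and the class is renamed `LazySingleton` to mark it as an illustration:

```java
// Illustrative sketch: lazy initialization without synchronization.
class LazySingleton {
    private static LazySingleton instance;

    // A private constructor prevents instantiation from outside the class.
    private LazySingleton() {}

    // Not thread-safe: two threads can both see null and create two instances.
    static LazySingleton getInstance() {
        if (instance == null) {
            instance = new LazySingleton();
        }
        return instance;
    }

    public static void main(String[] args) {
        // In a single thread, repeated calls return the same object.
        System.out.println(getInstance() == getInstance());
    }
}
```

The race sits between the null check and the assignment, which is why this variant is described as not thread-safe.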

- Using spring + websocket

dalan_123

I. Spring configuration file

<?xml version="1.0" encoding="UTF-8"?><beans xmlns="http://www.springframework.org/schema/beans" xmlns:xsi="http://www.w3.or

- A Detail: Where ZEROFILL Applies

dcj3sjt126com

mysql

1. zerofill pads single-digit months such as 1, 2, 3 with a leading 0

mysql> CREATE TABLE t1 (year YEAR(4), month INT(2) UNSIGNED ZEROFILL, -> day

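- Android Development 10: Navigating Between Activities and Passing Data

dcj3sjt126com

Android development

Navigating between Activities and passing data is done mainly through the Intent class, whose job is to activate components and carry data.

I. Navigating between Activities

Method 1: Intent intent = new Intent(A.this, B.class); startActivity(intent)

Method 2: Intent intent = new Intent();intent.setCla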

- jdbc: Getting Table Structure and Primary Keys

eksliang

jdbc, getting table structure and primary keys

Reposted from: http://blog.csdn.net/ocean1010/article/details/7266042

Given a connection con: DatabaseMetaData dbmd = con.getMetaData(); rs = dbmd.getColumns(con.getCatalog(), schema, tableName, null); rs.getSt

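- Toggling GPS from an Android App

gqdy365

android

Toggling the GPS switch from an app requires this permission:

<uses-permission android:name="android.permission.WRITE_SECURE_SETTINGS" />

But adding this permission to the manifest causes an error, and the project no longer compiles in eclipse. What can be done?

1. Option 1: build the project inside the Android source tree;

2. Option 2: some people online say cl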

- Debugging MapReduce on Windows

zhiquanliu

mapreduce

1. Download hadoop2x-eclipse-plugin https://github.com/winghc/hadoop2x-eclipse-plugin.git and put hadoop2.6.0-eclipse-plugin.jar into the eclipse plugin directory. 2. Download hadoop2.6_x64_.zip http://dl.iteye.com/topics/download/d2b

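- What Do You Make of Well-Known Blogs Publishing Advertorials?

justjavac

blog

This post comes from an answer I wrote on Zhihu: http://www.zhihu.com/question/23431810/answer/24588621

Two typical attitudes on the internet:

Begged for the link like a dog back then; now that you are done, you call it ugly

Hunted for the post like a hound back then; now that you have read it, you call it an advertorial

Why does it make you uncomfortable?

Must the author hand over the fruits of their labor for free before you feel comfortable?

Just as when Google shut down Google Reader, that was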

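- SQL Optimization Summary

macroli

sql

To give myself sounder principles for SQL optimization, I am summarizing them here. If any of my personal principles are wrong, please correct me. Thank you!

To know how efficiently a simple SQL statement runs, you need a way to measure it, so that you can optimize more effectively.

I. Simple timing of statement execution

declare @d datetime ---declare a datetime variable set @d=getdate() ---get the time before the query starts select user_id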

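- Common Problems and Commands with Oracle on Linux: A Summary

超声波

oracle, linux

1. Changing the hostname on linux

(1) #hostname oracledb temporarily changes the hostname

(2) vi /etc/sysconfig/network change the hostname

(3) vi /etc/hosts change the hostname mapped to the IP

2. Ways to restart the oracle instance and listener on linux

(Note the order of operations: the listener first, then the database instance)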

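- Hive Function Reference with Usage Examples

superlxw1234

hadoop, hive, functions

See the attached document for details and examples.

Document contents:

I. Relational operators:

1. Equality: =

2. Inequality: <>

3. Less than: <

4. Less than or equal: <=

5. Greater than: >

6. Greater than or equal: >=

7. Null check: IS NULL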

- New in Spring 4.2: Using @Order to Control the Load Order of Configuration Classes

wiselyman

spring 4

4.1 @Order

Spring 4.2 uses @Order to control the load order of configuration classes

4.2 Demo

Two demo beans

package com.wisely.spring4_2.order;

public class Demo1Service {

}

package com.wisely.spring4_2.order;

public class