Installing Flink 1.10 on YARN

A Flink cluster can be deployed in three modes:

Local mode:

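Local mode is simple and intended for local testing, so it is only covered briefly here. Extracting the installation package on the master node is all the installation takes. From the Flink installation directory, run ./bin/start-cluster.sh, and the cluster status can then be monitored at master:8081. Stop the cluster with ./bin/stop-cluster.sh.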

Standalone mode:

Edit flink-conf.yaml, configure the masters and slaves files, and start the cluster; high availability can also be configured.

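As the name suggests, Standalone mode schedules and executes jobs on the cluster's own machines and does not depend on an external resource manager such as YARN.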

Flink on YARN mode:

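There is no need to configure masters and slaves or to start a cluster in advance: as with Spark, you simply run the launch command when submitting a job.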

The Flink client machine must have the Hadoop environment variables set, otherwise Flink cannot communicate with YARN.
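To check which Hadoop configuration the client will actually see, the lookup order can be sketched in shell. This is a minimal illustration, assuming the order described in the Flink 1.10 YARN setup documentation (YARN_CONF_DIR, then HADOOP_CONF_DIR, then HADOOP_CONF_PATH, then $HADOOP_HOME/etc/hadoop or $HADOOP_HOME/conf); the function name resolve_hadoop_conf is hypothetical and not part of Flink.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Hadoop configuration directory that the
# Flink YARN client would pick up, following the documented lookup order.
resolve_hadoop_conf() {
  # 1. Explicit environment variables, checked in this order.
  if [ -n "${YARN_CONF_DIR:-}" ]; then
    echo "$YARN_CONF_DIR"
  elif [ -n "${HADOOP_CONF_DIR:-}" ]; then
    echo "$HADOOP_CONF_DIR"
  elif [ -n "${HADOOP_CONF_PATH:-}" ]; then
    echo "$HADOOP_CONF_PATH"
  # 2. Fall back to HADOOP_HOME: Hadoop 2 layout first, then Hadoop 1.
  elif [ -n "${HADOOP_HOME:-}" ] && [ -d "$HADOOP_HOME/etc/hadoop" ]; then
    echo "$HADOOP_HOME/etc/hadoop"
  elif [ -n "${HADOOP_HOME:-}" ] && [ -d "$HADOOP_HOME/conf" ]; then
    echo "$HADOOP_HOME/conf"
  else
    echo "no Hadoop configuration found; set HADOOP_CONF_DIR" >&2
    return 1
  fi
}
```

For example, after `export HADOOP_CONF_DIR=/etc/hadoop/conf` (an example path), `resolve_hadoop_conf` prints that directory; if it prints the error instead, `flink run -m yarn-cluster` is unlikely to find the ResourceManager.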

References:

Link 1 https://blog.csdn.net/a_drjiaoda/article/details/88037282

Link 2 https://blog.csdn.net/a_drjiaoda/article/details/88203323

Installation. The node on which Flink is installed needs Hadoop; installing Hadoop is not covered here:

1. Dependency JARs

flink-hadoop-compatibility_2.12-1.7.2.jar

javax.ws.rs-api-2.0.1.jar

jersey-common-2.28.jar

jersey-core-1.19.4.jar

flink-shaded-hadoop-2-uber-2.8.3-10.0.jar

2. Download the Flink installation package

flink-1.10.0-bin-scala_2.12.tgz

3. Extract the installation package

tar -xzvf flink-1.10.0-bin-scala_2.12.tgz

4. Upload the dependency JARs above to the lib directory of the Flink installation
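Step 4 can be scripted so that a missing JAR is reported instead of being silently skipped. A minimal sketch, assuming the five JARs from step 1 were downloaded into a single directory; the array FLINK_DEP_JARS and the function install_flink_deps are hypothetical names used only for illustration.

```shell
#!/usr/bin/env bash
# JARs listed in step 1 (versions as used in this article).
FLINK_DEP_JARS=(
  flink-hadoop-compatibility_2.12-1.7.2.jar
  javax.ws.rs-api-2.0.1.jar
  jersey-common-2.28.jar
  jersey-core-1.19.4.jar
  flink-shaded-hadoop-2-uber-2.8.3-10.0.jar
)

# Hypothetical helper: copy every dependency JAR from SRC_DIR into LIB_DIR.
# Copies nothing and returns non-zero if any JAR is missing.
install_flink_deps() {
  local src_dir=$1 lib_dir=$2 jar missing=0
  for jar in "${FLINK_DEP_JARS[@]}"; do
    if [ ! -f "$src_dir/$jar" ]; then
      echo "missing: $src_dir/$jar" >&2
      missing=1
    fi
  done
  [ "$missing" -eq 0 ] || return 1
  mkdir -p "$lib_dir" || return 1
  for jar in "${FLINK_DEP_JARS[@]}"; do
    cp "$src_dir/$jar" "$lib_dir/" || return 1
  done
}
```

Usage, with an example download directory: `install_flink_deps ~/downloads "$FLINK_HOME/lib"`. Checking all files first keeps lib from ending up with only part of the set.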

5. Configure environment variables

#FLINK_HOME

export FLINK_HOME=/opt/module/flink-1.10.0

export PATH=$PATH:$FLINK_HOME/bin
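For these variables to survive a new login they are usually appended to a shell profile. A minimal sketch that appends the block only once, assuming a profile file such as ~/.bashrc or /etc/profile; the function name add_flink_env is hypothetical, and /opt/module/flink-1.10.0 is the install path used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the FLINK_HOME block to PROFILE_FILE once.
add_flink_env() {
  local profile_file=$1 flink_home=$2
  # Skip if a FLINK_HOME export is already present.
  if [ -f "$profile_file" ] && grep -q '^export FLINK_HOME=' "$profile_file"; then
    return 0
  fi
  # Single quotes keep $PATH and $FLINK_HOME unexpanded in the profile file.
  {
    echo '#FLINK_HOME'
    echo "export FLINK_HOME=$flink_home"
    echo 'export PATH=$PATH:$FLINK_HOME/bin'
  } >> "$profile_file"
}
```

Usage: `add_flink_env ~/.bashrc /opt/module/flink-1.10.0 && source ~/.bashrc`, after which `flink` should be on the PATH.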

6. Edit the configuration file

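For Flink on YARN we do not need to configure masters and slaves in the conf directory, because the number of TaskManagers to start can be specified with the "-n" parameter (since Flink 1.5, TaskManager containers are requested from YARN on demand, so this parameter no longer fixes the count); the machine running Flink only needs a Hadoop environment. Set rest.port to 8082: the Flink web UI listens on 8081 by default, which conflicts with port 8081 used by Spark.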

By default, the settings in conf/flink-conf.yaml are used.

Flink on YARN overrides several parameters:

jobmanager.rpc.address: the JobManager's location in the cluster is not known in advance; it is the address of the ApplicationMaster (AM);

taskmanager.tmp.dirs: the temporary directories provided by YARN are used;

parallelism.default: also overridden if the number of slots is specified on the command line.
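To see which of these settings in an existing flink-conf.yaml will not take effect on YARN, a quick check like the following can help. It is a sketch that assumes the three keys listed above are the ones of interest and that the file uses the plain "key: value" layout; the function name yarn_overridden_keys is hypothetical. Keep in mind that parallelism.default is only overridden when the slot count is given on the command line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the keys set (uncommented) in CONF_FILE
# that Flink on YARN overrides.
yarn_overridden_keys() {
  local conf_file=$1 key
  for key in jobmanager.rpc.address taskmanager.tmp.dirs parallelism.default; do
    # The first field must start with "key:", so commented lines ("#key:") do not match.
    if awk -v k="$key" 'index($1, k ":") == 1 { found = 1 } END { exit !found }' "$conf_file"; then
      echo "$key"
    fi
  done
}
```

Usage: `yarn_overridden_keys "$FLINK_HOME/conf/flink-conf.yaml"`.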

vim flink-conf.yaml

#==============================================================================

# Common

#==============================================================================

#For Flink on YARN this parameter does not need to be set; just leave it as localhost

jobmanager.rpc.address: localhost

# The RPC port where the JobManager is reachable.

jobmanager.rpc.port: 6123

# The heap size for the JobManager JVM

jobmanager.heap.size: 1024m

# The total process memory size for the TaskManager.

taskmanager.memory.process.size: 1568m

# It is not recommended to set both 'taskmanager.memory.process.size' and Flink memory.

# taskmanager.memory.flink.size: 1280m

# The number of task slots that each TaskManager offers. Each slot runs one parallel pipeline.

taskmanager.numberOfTaskSlots: 2

# The parallelism used for programs that did not specify any other parallelism.

parallelism.default: 2

#==============================================================================

# High Availability

#==============================================================================

# The high-availability mode. Possible options are 'NONE' or 'zookeeper'.

#high-availability: zookeeper

# The path where metadata for master recovery is persisted. While ZooKeeper stores

# the small ground truth for checkpoint and leader election, this location stores

# the larger objects, like persisted dataflow graphs.

# Must be a durable file system that is accessible from all nodes

# (like HDFS, S3, Ceph, nfs, ...)

#high-availability.storageDir: hdfs://yantian/flink/recovery

# The list of ZooKeeper quorum peers that coordinate the high-availability

# "host1:clientPort,host2:clientPort,..." (default clientPort: 2181)

#high-availability.zookeeper.quorum: master:2181,segment01:2181,segment02:2181

# It can be either "creator" (ZOO_CREATE_ALL_ACL) or "open" (ZOO_OPEN_ACL_UNSAFE)

# The default value is "open" and it can be changed to "creator" if ZK security is enabled

# high-availability.zookeeper.client.acl: open

#high-availability.zookeeper.path.root: /flink

#==============================================================================

# Fault tolerance and checkpointing

#==============================================================================

# The backend that will be used to store operator state checkpoints if

# checkpointing is enabled.

# Directory for checkpoints filesystem, when using any of the default bundled state backends.

#state.checkpoints.dir: hdfs://yantian/flink/checkpoints

# Default target directory for savepoints, optional.

#state.savepoints.dir: hdfs://yantian/flink/savepoints

# Flag to enable/disable incremental checkpoints for backends that

# support incremental checkpoints (like the RocksDB state backend).

# state.backend.incremental: false

# The failover strategy, i.e., how the job computation recovers from task failures.

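# Only restart tasks that may have been affected by the task failure, which typically includes downstream tasks and potentially upstream tasks if their produced data is no longer available for consumption.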

#jobmanager.execution.failover-strategy: region

#==============================================================================

# Rest & web frontend

#==============================================================================

# The port to which the REST client connects to. If rest.bind-port has

# not been specified, then the server will bind to this port as well.

rest.port: 8082

# The address to which the REST client will connect to

#rest.address: 0.0.0.0

# The address that the REST & web server binds to

#rest.bind-address: 0.0.0.0

# Flag to specify whether job submission is enabled from the web-based

# runtime monitor. Uncomment to disable.

#web.submit.enable: false

#==============================================================================

# Advanced

#==============================================================================

# Override the directories for temporary files. If not specified, the

# system-specific Java temporary directory (java.io.tmpdir property) is taken.

# For framework setups on Yarn or Mesos, Flink will automatically pick up the

# containers' temp directories without any need for configuration.

# Add a delimited list for multiple directories, using the system directory

# delimiter (colon ':' on unix) or a comma, e.g.:

# /data1/tmp:/data2/tmp:/data3/tmp

# Note: Each directory entry is read from and written to by a different I/O

# thread. You can include the same directory multiple times in order to create

# multiple I/O threads against that directory. This is for example relevant for

# high-throughput RAIDs.

# io.tmp.dirs: /tmp

#These directories must be created manually, otherwise the cluster will not start

#io.tmp.dirs: /home/flink/data/flinkdata/tmp

#env.log.dir: /home/flink/data/flinkdata/logs

# The amount of memory going to the network stack. These numbers usually need

# no tuning. Adjusting them may be necessary in case of an "Insufficient number

# of network buffers" error. The default min is 64MB, the default max is 1GB.

# taskmanager.memory.network.fraction: 0.1

# taskmanager.memory.network.min: 64mb

# taskmanager.memory.network.max: 1gb

#==============================================================================

# Flink Cluster Security Configuration

#==============================================================================

# Kerberos authentication for various components - Hadoop, ZooKeeper, and connectors -

# may be enabled in four steps:

# 1. configure the local krb5.conf file

# 2. provide Kerberos credentials (either a keytab or a ticket cache w/ kinit)

# 3. make the credentials available to various JAAS login contexts

# 4. configure the connector to use JAAS/SASL

# The below configure how Kerberos credentials are provided. A keytab will be used instead of

# a ticket cache if the keytab path and principal are set.

# security.kerberos.login.use-ticket-cache: true

# security.kerberos.login.keytab: /path/to/kerberos/keytab

# security.kerberos.login.principal: flink-user

# The configuration below defines which JAAS login contexts

# security.kerberos.login.contexts: Client,KafkaClient

#==============================================================================

# ZK Security Configuration

#==============================================================================

# Below configurations are applicable if ZK ensemble is configured for security

# Override below configuration to provide custom ZK service name if configured

# zookeeper.sasl.service-name: zookeeper

# The configuration below must match one of the values set in "security.kerberos.login.contexts"

# zookeeper.sasl.login-context-name: Client

#==============================================================================

#HistoryServer

#==============================================================================

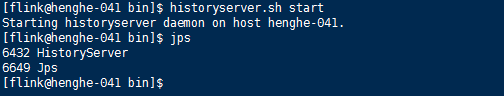

# The HistoryServer is started and stopped via bin/historyserver.sh (start|stop)

# Directory to upload completed jobs to. Add this directory to the list of

# monitored directories of the HistoryServer as well (see below).

#jobmanager.archive.fs.dir: hdfs://yantian/flink/completed_jobs/

# The address under which the web-based HistoryServer listens.

#historyserver.web.address: 0.0.0.0

# The port under which the web-based HistoryServer listens.

#historyserver.web.port: 18082

# Comma separated list of directories to monitor for completed jobs.

#Keep this value the same as "jobmanager.archive.fs.dir"

#historyserver.archive.fs.dir: hdfs://yantian/flink/completed_jobs/

# Interval in milliseconds for refreshing the monitored directories.

#Default refresh interval of the HistoryServer page

#historyserver.archive.fs.refresh-interval: 10000
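The comment in the Advanced section notes that the io.tmp.dirs and env.log.dir directories must be created by hand before the cluster will start. A minimal sketch that reads those two keys from flink-conf.yaml and creates every listed directory; it assumes the simple "key: value" layout used in this file and splits io.tmp.dirs on ':' or ',' as the file's own comment describes. The function name create_flink_local_dirs is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the local directories named by io.tmp.dirs
# and env.log.dir in CONF_FILE (commented-out keys are ignored).
create_flink_local_dirs() {
  local conf_file=$1 value dir
  local -a dirs
  while IFS= read -r value; do
    # io.tmp.dirs may hold several directories separated by ':' or ','.
    IFS=':,' read -r -a dirs <<< "$value"
    for dir in "${dirs[@]}"; do
      [ -n "$dir" ] || continue
      mkdir -p "$dir" || return 1
      echo "created $dir"
    done
  # awk prints the value part of each uncommented io.tmp.dirs / env.log.dir line.
  done < <(awk '/^[ \t]*(io\.tmp\.dirs|env\.log\.dir):/ { sub(/^[^:]*:[ \t]*/, ""); print }' "$conf_file")
}
```

Usage: `create_flink_local_dirs "$FLINK_HOME/conf/flink-conf.yaml"`, run as the user that starts Flink so the directories get the right owner.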

7. Test:

[flink@master flink-1.10.0]$ ./bin/flink run -m yarn-cluster -yn 2 -yjm 1024 -ytm 1024 ./examples/batch/WordCount.jar

Configuration file explained:

#IP address of the JobManager

jobmanager.rpc.address: localhost

#Port of the JobManager

jobmanager.rpc.port: 6123

# JobManager JVM heap size (can also be set at job submission, which takes precedence over the config file)

jobmanager.heap.mb: 1024

# TaskManager JVM heap size (can also be set at job submission, which takes precedence over the config file)

taskmanager.heap.mb: 2048

#Number of task slots provided by each TaskManager (can also be set at job submission, which takes precedence over the config file)

taskmanager.numberOfTaskSlots: 1

#Default parallelism of Flink jobs (with Kafka, the number of Kafka partitions is usually a good choice; p = slot * tm)

parallelism.default: 1

#Port of the web runtime monitor

web.port: 8081

#Directory to which completed jobs are uploaded (helps find log information from the job's run)

jobmanager.archive.fs.dir: hdfs://nameservice/flink/flink-jobs/

#Port of the web-based HistoryServer

historyserver.web.port: 8082

#Comma-separated list of directories containing archived jobs

historyserver.archive.fs.dir: hdfs://nameservice/flink/flink-jobs/

#Interval for refreshing the archived job directories (in milliseconds)

historyserver.archive.fs.refresh-interval: 10000

#State backend used to store state and checkpoints: jobmanager, filesystem or rocksdb

state.backend: rocksdb

#Default directory for checkpoint data files and metadata

state.backend.fs.checkpointdir: hdfs://nameservice/flink/pointsdata/

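#Directory for checkpoints (can be overridden in user job code; the nameservice is omitted here, so if jobs need to move from cluster A to cluster B, a relative path without the nameservice works)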

state.checkpoints.dir: hdfs:///flink/checkpoints/

#Savepoint directory (a savepoint generally requires the previous checkpoint to have succeeded; relative path as above)

state.savepoints.dir: hdfs:///flink/savepoints/

#Number of most recent checkpoints to retain; adjust to business needs

state.checkpoints.num-retained: 20

#Enable incremental checkpoints; this applies globally and can also be set in user code

state.backend.incremental: true

#Timeout

akka.ask.timeout: 300s

#Akka heartbeat interval used to detect failed TaskManagers; decrease this value if TaskManagers are falsely reported as failed

akka.watch.heartbeat.interval: 30s

#Increase this value if TaskManagers are wrongly marked as dead because of lost or delayed heartbeat messages

akka.watch.heartbeat.pause: 120s

#Maximum memory size for network buffers

taskmanager.network.memory.max: 4gb

#Minimum memory size for network buffers

taskmanager.network.memory.min: 256mb

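#Fraction of JVM memory used for network buffers. This determines how many streaming data exchange channels a TaskManager can have at the same time and how well buffered the channels are.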

taskmanager.network.memory.fraction: 0.5

#Location of the Hadoop configuration files

fs.hdfs.hadoopconf: /etc/ecm/hadoop-conf/

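#Number of YARN application attempts, i.e. how many times the ApplicationMaster (JobManager) may be restarted after a failure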

yarn.application-attempts: 10

#High availability

high-availability: zookeeper

high-availability.zookeeper.path.root: /flink

high-availability.zookeeper.quorum: zk1,zk2,zk3

high-availability.storageDir: hdfs://nameservice/flink/ha/

#Metrics collection

metrics.reporters: prom

#Reporter implementation class

metrics.reporter.prom.class: org.apache.flink.metrics.prometheus.PrometheusReporter

#Port range on which the metrics are exposed

metrics.reporter.prom.port: 9250-9260

Or:

metrics.reporter.influxdb.class: org.apache.flink.metrics.influxdb.InfluxdbReporter

metrics.reporter.influxdb.host: xx.xx.xx.xx

metrics.reporter.influxdb.port: 8086

metrics.reporter.influxdb.db: flink

metrics.reporter.influxdb.username:

metrics.reporter.influxdb.password:
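The comment on parallelism.default gives the rule of thumb p = slot * tm. Read the other way round, it tells you roughly how many TaskManager containers YARN has to start for a job of a given parallelism. A small sketch of that arithmetic, assuming each slot runs one parallel instance and every TaskManager has the same taskmanager.numberOfTaskSlots; the function name taskmanagers_needed is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: number of TaskManagers needed for PARALLELISM when each
# TaskManager offers SLOTS slots, i.e. ceil(parallelism / slots).
taskmanagers_needed() {
  local parallelism=$1 slots=$2
  if [ "$slots" -le 0 ]; then
    echo "slots must be positive" >&2
    return 1
  fi
  # Integer ceiling division.
  echo $(( (parallelism + slots - 1) / slots ))
}
```

For example, a Kafka topic with 6 partitions (parallelism 6) and taskmanager.numberOfTaskSlots: 2 gives `taskmanagers_needed 6 2`, which prints 3.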