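torchtext supplement: reading and processing json, csv, and tsv with torchtext

This article is based on:

Using TorchText with Your Own Datasets

Some details have been changed slightly, and the code comments reflect my own understanding. The article is a personal record of what I learned rather than a full translation of the tutorial, so it may not suit every beginner, but it can still serve as a reference.

Previous post: Using torchtext: IMDB sentiment analysis with a Transformer

This time we use torchtext to read and process our own datasets instead of the built-in datasets.

This is the last post in the series and a necessary supplement on torchtext. The two remaining supplementary tutorials are fairly basic, and a quick read of them is enough.

First, read the json format

Let's see what the data looks like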

import glob

def find_file(path, tar):
    # Return every file in `path` whose extension is `tar`, e.g. data/*.json
    return glob.glob("".join(["".join([path, "*."]), tar]))

files = find_file("data/", 'json')

with open(files[0]) as f:
    for line in f:
        print(line)

{"name": "Craig", "location": "Finland", "age": 29, "quote": ["go", "baseball", "team", "!"]}

{"name": "Janet", "location": "Hong Kong", "age": 24, "quote": ["knowledge", "is", "great"]}

A tidier version:

import json
import os

def text_name(path_):
    # Strip the directory and the extension, e.g. "data/train.json" -> "train"
    (filepath, tempfilename) = os.path.split(path_)
    (filename, extension) = os.path.splitext(tempfilename)
    return filename

for i in range(len(files)):
    with open(files[i]) as f:
        name = text_name(files[i])
        for line in f:
            content = json.loads(line)
            print(f'datasets : {name}, content : {content}')
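The two helpers above can also be written with `pathlib`. The following is only a minimal sketch and not part of the original tutorial: the function name `read_json_lines`, the temporary directory, and the single sample record are assumptions made so the snippet runs on its own.

```python
import json
import tempfile
from pathlib import Path

def read_json_lines(data_dir):
    """Return {dataset name: list of parsed records} for every *.json file in data_dir."""
    records = {}
    for path in sorted(Path(data_dir).glob("*.json")):
        with path.open() as f:
            # One JSON object per line; blank lines are skipped.
            records[path.stem] = [json.loads(line) for line in f if line.strip()]
    return records

# Hypothetical sample data written to a temporary directory for the demo.
with tempfile.TemporaryDirectory() as tmp:
    sample = {"name": "John", "location": "United Kingdom", "age": 42,
              "quote": ["i", "love", "the", "united kingdom"]}
    (Path(tmp) / "train.json").write_text(json.dumps(sample) + "\n")
    loaded = read_json_lines(tmp)

for name, rows in loaded.items():
    for content in rows:
        print(f"datasets : {name}, content : {content}")
```

`Path.stem` does the same job as `text_name`: it drops both the directory and the extension.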

datasets : test, content : {'name': 'Craig', 'location': 'Finland', 'age': 29, 'quote': ['go', 'baseball', 'team', '!']}

datasets : test, content : {'name': 'Janet', 'location': 'Hong Kong', 'age': 24, 'quote': ['knowledge', 'is', 'great']}

datasets : train, content : {'name': 'John', 'location': 'United Kingdom', 'age': 42, 'quote': ['i', 'love', 'the', 'united kingdom']}

datasets : train, content : {'name': 'Mary', 'location': 'United States', 'age': 36, 'quote': ['i', 'want', 'more', 'telescopes']}

datasets : valid, content : {'name': 'Fred', 'location': 'France', 'age': 21, 'quote': ['what', 'am', 'i', 'doing', '?']}

datasets : valid, content : {'name': 'Pauline', 'location': 'Canada', 'age': 44, 'quote': ['hello', 'world']}

As you can see, the json format is identical across the three datasets

Next, construct a Field for each json attribute:

from torchtext import data

from torchtext import datasets

T=lambda x:list(str(x))

NAME=data.Field()

LOCATION=data.Field()

AGE=data.Field(preprocessing=T)

QUOTE=data.Field()

Next, build a Python dictionary that specifies which json attribute each of the Fields above applies to

field={'name':('n',NAME),'location':('p',LOCATION),'age':('gg',AGE),'quote':('q',QUOTE)}

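The field dictionary above states which Field processes each json attribute and what the attribute is called afterwards. For example, 'name' is processed by NAME and renamed to 'n'. Once the iterator is built, it can be accessed as batch.n

Note that every json object in the dataset must contain all the keys listed in this dictionary (the dictionary may cover fewer attributes than the json objects have, but never more)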
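To make the renaming and the "fewer keys, never more" rule concrete, here is a standard-library sketch of the idea. It is not torchtext's implementation: `field_spec` and `to_example` are hypothetical names, a plain function stands in for a Field, and the exception torchtext raises for a missing key may differ from the `KeyError` used here.

```python
import json

# Preprocessing used for the age field in the text: a value becomes a list of characters.
T = lambda x: list(str(x))

# Hypothetical stand-in for the fields dictionary:
# json key -> (new attribute name, preprocessing function or None).
field_spec = {
    "name": ("n", None),
    "age": ("gg", T),
}

def to_example(line, spec):
    """Parse one JSON line and keep only the keys listed in spec, under their new names."""
    obj = json.loads(line)
    example = {}
    for key, (new_name, preprocess) in spec.items():
        value = obj[key]  # raises KeyError if the JSON object lacks this key
        example[new_name] = preprocess(value) if preprocess else value
    return example

# The JSON object has more keys than the spec: the extra "location" is simply ignored.
example = to_example('{"name": "John", "location": "United Kingdom", "age": 42}', field_spec)
print(example)

# The spec names a key ("age") that the JSON object does not have: this fails.
try:
    to_example('{"name": "John"}', field_spec)
    missing_key_error = False
except KeyError:
    missing_key_error = True
print(missing_key_error)
```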

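Next, construct the datasets. If you want to use your own dataset and it is in one of the following three formats:

1.json

2.csv

3.tsv

then you can use TabularDataset

The official description of TabularDataset: Defines a Dataset of columns stored in CSV, TSV, or JSON format.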

train_data, valid_data, test_data=data.TabularDataset.splits(path='data',

train = 'train.json',

validation = 'valid.json',

test = 'test.json',

format = 'json',

fields = field)

print(type(train_data))

print(type(valid_data))

print(type(test_data))

<class 'torchtext.data.dataset.TabularDataset'>

<class 'torchtext.data.dataset.TabularDataset'>

<class 'torchtext.data.dataset.TabularDataset'>

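Because the three datasets have the same format, they can share one fields dictionary and be built in a single call; otherwise the datasets would have to be constructed in three separate calls

For example: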

train_data2=data.TabularDataset.splits(path="data",

train="train.json",

format='json',

fields=field

)

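However, a single dataset built this way turns out to be a tuple. No matter how many datasets are passed in, splits always returns a tuple: with several datasets the tuple is unpacked automatically by the assignment, while with one dataset you get a tuple containing a single element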

type(train_data2)

tuple

Index into the tuple instead:

train_data2=data.TabularDataset.splits(path="data",

train="train.json",

format='json',

fields=field

)[0]

type(train_data2)

torchtext.data.dataset.TabularDataset
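The tuple behaviour described above can be mimicked without torchtext. In this sketch, `splits` is a hypothetical stand-in that returns the file names it was given rather than real datasets; it only illustrates why one requested split still comes back wrapped in a tuple, and two ways to unwrap it.

```python
def splits(train=None, validation=None, test=None):
    """Hypothetical stand-in: one entry per requested split, always returned as a tuple."""
    return tuple(name for name in (train, validation, test) if name is not None)

three = splits(train="train.json", validation="valid.json", test="test.json")
one = splits(train="train.json")

# Three entries unpack directly into three names.
train_part, valid_part, test_part = three

# A single entry is still a tuple; either index it or unpack with a trailing comma.
by_index = one[0]
(by_unpacking,) = one

print(type(one).__name__, by_index, by_unpacking)
```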

Let's see what the dataset looks like

vars(train_data.examples[0])

{'n': ['John'],

'p': ['United', 'Kingdom'],

'gg': ['4', '2'],

'q': ['i', 'love', 'the', 'united kingdom']}

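Notice that the quote attribute was not tokenized, because it was already a list ('united kingdom' stays a single token). Also, the same attribute may hold different kinds of values in different json objects: either a string or a list works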
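The behaviour seen in this output can be modelled in a few lines. This is a simplified sketch under my own assumptions, not the library's code: the hypothetical `preprocess` function tokenizes only string values with a whitespace split and then applies an optional preprocessing step, which is how the three kinds of values above (string, list, number) end up as they do.

```python
def preprocess(value, tokenize=str.split, preprocessing=None):
    """Tokenize strings, leave other values (lists, numbers) alone, then apply preprocessing."""
    if isinstance(value, str):
        value = tokenize(value)
    if preprocessing is not None:
        value = preprocessing(value)
    return value

# Same preprocessing as the AGE field in the text.
T = lambda x: list(str(x))

location = preprocess("United Kingdom")                      # string: gets tokenized
quote = preprocess(["i", "love", "the", "united kingdom"])   # list: left as it is
age = preprocess(42, preprocessing=T)                        # number: only T is applied

print(location)
print(quote)
print(age)
```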

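Next is the vocab

def build_vocab(datasets, fields):
    for field in fields:
        for dataset in datasets:
            # Each call rebuilds field.vocab from scratch, so after the loop the
            # vocab comes from the last dataset only (test_data here). To merge all
            # datasets into one vocab, call field.build_vocab(*datasets) once instead.
            field.build_vocab(dataset)

build_vocab([train_data, valid_data, test_data],[NAME,LOCATION,AGE,QUOTE])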
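One thing worth checking in this loop: as far as I can tell, every `build_vocab` call on a Field creates a fresh vocabulary, so calling it once per dataset keeps only the result of the last call. The sketch below reproduces that effect with `collections.Counter`. The helpers are hypothetical, and the ordering rule (special tokens first, then descending frequency with alphabetical ties) is an assumption of the sketch; the ages are the ones in the sample data shown earlier.

```python
from collections import Counter

def build_vocab(*datasets):
    """Count tokens over all given datasets and return a token -> index dict."""
    counter = Counter()
    for dataset in datasets:
        for tokens in dataset:
            counter.update(tokens)
    # Specials first, then tokens by descending frequency (ties in alphabetical order).
    ordered = sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))
    itos = ["<unk>", "<pad>"] + [tok for tok, _ in ordered]
    return {tok: i for i, tok in enumerate(itos)}

def numericalize(tokens, stoi):
    """Map tokens to indices; unknown tokens fall back to <unk> (index 0)."""
    return [stoi.get(tok, stoi["<unk>"]) for tok in tokens]

# Ages from the sample data, already split into characters.
train_ages = [["4", "2"], ["3", "6"]]
valid_ages = [["2", "1"], ["4", "4"]]
test_ages = [["2", "9"], ["2", "4"]]

# Rebuilding once per dataset leaves only the vocab of the last one (test).
for dataset in (train_ages, valid_ages, test_ages):
    last_only = build_vocab(dataset)

# Passing every dataset in a single call merges the counts.
merged = build_vocab(train_ages, valid_ages, test_ages)

john_last = numericalize(["4", "2"], last_only)
mary_last = numericalize(["3", "6"], last_only)
mary_merged = numericalize(["3", "6"], merged)
print(john_last, mary_last, mary_merged)
```

With the test-only vocabulary, John's age maps to [3, 2] and Mary's to [0, 0], which matches the `gg` tensors printed below; with the merged vocabulary no training token falls back to `<unk>`.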

Next comes the usual iterator

import torch

train_it,valid_it,test_it=data.BucketIterator.splits((train_data,valid_data,test_data),

batch_size=1,

device=torch.device(0),

)

next(iter(train_it))

[torchtext.data.batch.Batch of size 1]

[.n]:[torch.cuda.LongTensor of size 1x1 (GPU 0)]

[.p]:[torch.cuda.LongTensor of size 2x1 (GPU 0)]

[.gg]:[torch.cuda.LongTensor of size 2x1 (GPU 0)]

[.q]:[torch.cuda.LongTensor of size 4x1 (GPU 0)]

The attributes can be accessed as batch.n, batch.q, and so on, just like batch.text and batch.label in the earlier tutorials

for i, batch in enumerate(train_it):
    print(f"batch{i}.n: {batch.n}\nbatch{i}.p: {batch.p}\nbatch{i}.gg: {batch.gg}\nbatch{i}.q: {batch.q}\n")

batch0.n: tensor([[0]], device='cuda:0')

batch0.p: tensor([[0],

[0]], device='cuda:0')

batch0.gg: tensor([[3],

[2]], device='cuda:0')

batch0.q: tensor([[0],

[0],

[0],

[0]], device='cuda:0')

batch1.n: tensor([[0]], device='cuda:0')

batch1.p: tensor([[0],

[0]], device='cuda:0')

batch1.gg: tensor([[0],

[0]], device='cuda:0')

batch1.q: tensor([[0],

[0],

[0],

[0]], device='cuda:0')

Print every batch of the dataset:

print('Train:')

for batch in train_it:
    print(batch)

Train:

[torchtext.data.batch.Batch of size 1]

[.n]:[torch.cuda.LongTensor of size 1x1 (GPU 0)]

[.p]:[torch.cuda.LongTensor of size 2x1 (GPU 0)]

[.gg]:[torch.cuda.LongTensor of size 2x1 (GPU 0)]

[.q]:[torch.cuda.LongTensor of size 4x1 (GPU 0)]

[torchtext.data.batch.Batch of size 1]

[.n]:[torch.cuda.LongTensor of size 1x1 (GPU 0)]

[.p]:[torch.cuda.LongTensor of size 2x1 (GPU 0)]

[.gg]:[torch.cuda.LongTensor of size 2x1 (GPU 0)]

[.q]:[torch.cuda.LongTensor of size 4x1 (GPU 0)]

csv&tsv

import pandas as pd

files=find_file("data/",'csv')

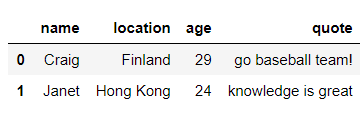

pd.read_csv(files[0])

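Unlike the json case, the fields here are no longer a dictionary but must be a list. Each element of the list is a tuple whose first element is the new attribute name and whose second element is the Field. Because the original column names are not given, the order of the tuples must match the order of the columns one to one. To drop a column (for example age), put (None, None) in its place.

Because the first row of these files holds the column names, pass skip_header=True to skip it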

fields=[('n',NAME),('P',LOCATION),(None,None),('q',QUOTE)]

train_data, valid_data, test_data = data.TabularDataset.splits(

path = 'data',

train = 'train.csv',

validation = 'valid.csv',

test = 'test.csv',

format = 'csv',

fields = fields,

skip_header = True

)

vars(train_data.examples[0])

{'n': ['John'],

'P': ['United', 'Kingdom'],

'q': ['i', 'love', 'the', 'united', 'kingdom']}
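The positional matching and the `(None, None)` placeholder can be illustrated with the standard `csv` module. This is a sketch under assumptions, not what TabularDataset does internally: the CSV text is made up to mirror the columns above, and `str.split` stands in for a Field's tokenizer.

```python
import csv
import io

# Hypothetical CSV content with the same four columns as in the text.
raw = "name,location,age,quote\nJohn,United Kingdom,42,i love the united kingdom\n"

# (new name, tokenizer) per column, in column order; (None, None) drops the column.
fields = [("n", str.split), ("P", str.split), (None, None), ("q", str.split)]

reader = csv.reader(io.StringIO(raw))
next(reader)  # skip the header row, like skip_header=True

examples = []
for row in reader:
    example = {}
    # Columns and fields are paired purely by position.
    for (name, tokenize), cell in zip(fields, row):
        if name is None:
            continue  # this column (age) is ignored
        example[name] = tokenize(cell)
    examples.append(example)

print(examples[0])
```

Swapping two tuples in `fields` would silently attach the wrong name to a column, which is why the order matters.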

tsv works exactly the same way:

train_data, valid_data, test_data = data.TabularDataset.splits(

path = 'data',

train = 'train.tsv',

validation = 'valid.tsv',

test = 'test.tsv',

format = 'tsv',

fields = fields,

skip_header = True)

vars(train_data.examples[0])

{'n': ['John'],

'P': ['United', 'Kingdom'],

'q': ['i', 'love', 'the', 'united', 'kingdom']}

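The tutorial's author argues that json is a better fit for torchtext than csv and tsv, for two reasons:

1. No way to tokenize in advance. csv and tsv cannot store lists, so even if you have already tokenized the data yourself, the Field will still tokenize it again when torchtext loads it. A json attribute value can be a list, which the Field leaves untouched, and that saves time.

2. Incorrect splitting. csv and tsv split each line on ',' and '\t' respectively, so a delimiter that appears inside the data is treated as a column boundary; json has no such restriction.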
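Both reasons can be seen in a small standard-library sketch. The record below is made up for illustration, and the csv line is assumed to be unquoted and split naively on the delimiter.

```python
import json

# A pre-tokenized quote that contains a comma token (hypothetical data).
tokens = ["hello", ",", "world"]

# json stores the list as a list, so the tokens come back exactly as they went in.
json_line = json.dumps({"name": "Pauline", "quote": tokens})
restored = json.loads(json_line)["quote"]

# An unquoted csv line has to flatten the tokens into text, and a naive split
# on the delimiter cannot tell a column boundary from a comma inside the text.
csv_line = "Pauline,Canada,44,hello , world"
columns = csv_line.split(",")

print(restored)
print(len(columns), columns)
```

The csv line was meant to have four columns but splits into five, while the json record keeps both the token boundaries and the comma.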

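Closing remarks:

torchtext makes text processing and data cleaning simple, but in many cases it is not flexible enough to meet the user's needs. I therefore suggest treating torchtext as a tool for quick prototyping rather than relying on it too heavily.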
