Deploying Ceph (Luminous) on CentOS 7


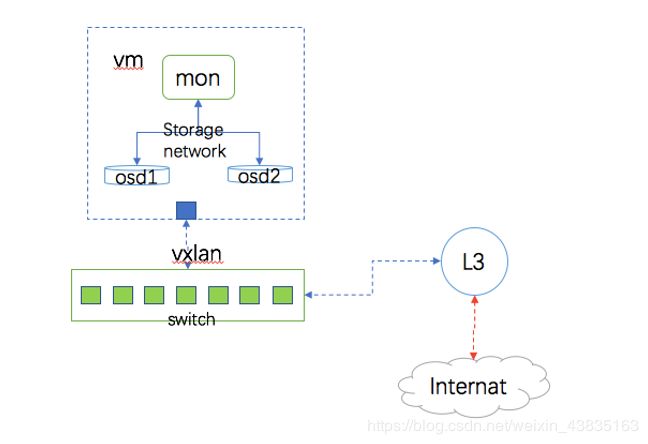

1. Deployment Architecture

2. Preparing the Base Environment

2.1 System Information

| Item | Value |
|---|---|
| OS version | CentOS 7.6 |
| Memory | 2 GB |
| CPU | 2 cores |
| Ceph version | Luminous |
| Number of VMs | 1 |
| Number of OSDs | 1 |

2.2 Network Configuration

This host's IP address is 172.16.14.227/24, and it reaches the internet through NAT.

2.3 Installing the Basic Tools

Back up the local yum repository file:

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo_bak

Switch to the Alibaba Cloud yum mirror:

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

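Configure the Ceph repository (yum only reads files with a .repo suffix in /etc/yum.repos.d/):

vi /etc/yum.repos.d/ceph.repo

With the following contents: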

[ceph]
name=Ceph packages for $basearch
baseurl=https://download.ceph.com/rpm-luminous/el7/$basearch
enabled=1
priority=2
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-noarch]
name=Ceph noarch packages
baseurl=https://download.ceph.com/rpm-luminous/el7/noarch
enabled=1
priority=2
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]
name=Ceph source packages
baseurl=https://download.ceph.com/rpm-luminous/el7/SRPMS
enabled=0
priority=2
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc
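Instead of editing the file in vi, the repository file can also be written in one scripted step. The following is a minimal sketch; `write_ceph_repo` is a hypothetical helper name, not part of any Ceph tooling. The heredoc delimiter is quoted (`<<'EOF'`) so the shell leaves `$basearch` alone and yum expands it itself. Note that the `priority=` lines only take effect when the `yum-plugin-priorities` package is installed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the Luminous repo definitions to a .repo file.
# Usage: write_ceph_repo [TARGET]   (default: /etc/yum.repos.d/ceph.repo)
write_ceph_repo() {
  local target=${1:-/etc/yum.repos.d/ceph.repo}
  # Quoted delimiter: $basearch is written literally for yum to expand.
  cat > "$target" <<'EOF'
[ceph]
name=Ceph packages for $basearch
baseurl=https://download.ceph.com/rpm-luminous/el7/$basearch
enabled=1
priority=2
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-noarch]
name=Ceph noarch packages
baseurl=https://download.ceph.com/rpm-luminous/el7/noarch
enabled=1
priority=2
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]
name=Ceph source packages
baseurl=https://download.ceph.com/rpm-luminous/el7/SRPMS
enabled=0
priority=2
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc
EOF
}
```

Run it as root with no argument to write /etc/yum.repos.d/ceph.repo, then continue with the EPEL step.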

Install the EPEL repository:

yum install epel-release -y

*** Note: without the EPEL repository, some Ceph dependencies, such as leveldb and libbabeltrace, cannot be found.

Build the metadata cache for the ceph and EPEL repositories:

yum makecache

Edit /etc/hosts and add the following line:

172.16.14.227 ceph-traing
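If this step is scripted, appending the line blindly adds a duplicate on every run. A small sketch that appends the mapping only when the hostname is not already listed; `add_host_entry` is a hypothetical name, and the check treats any non-comment line that names the host as a match.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add "IP NAME" to a hosts file unless NAME is already mapped.
# Usage: add_host_entry IP NAME [FILE]   (default FILE: /etc/hosts)
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # awk exits 0 when a non-comment line lists NAME among its host names.
  if [ -f "$file" ] && awk -v n="$name" '
      /^[ \t]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For this guide: `add_host_entry 172.16.14.227 ceph-traing` (as root).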

Disable the firewall and SELinux:

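vi /etc/selinux/config

Change the SELINUX line to:

SELINUX=disabled

The setting in the file takes effect after a reboot; to switch SELinux to permissive mode for the current boot right away, run:

setenforce 0

Stop firewalld and keep it from starting again at boot:

systemctl stop firewalld

systemctl disable firewalld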
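The vi edit can also be done non-interactively with sed. A minimal sketch; `disable_selinux_config` is a hypothetical name. It edits /etc/selinux/config directly, because running `sed -i` on the /etc/sysconfig/selinux symlink would replace the link with a regular file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Usage: disable_selinux_config [FILE]   (default: /etc/selinux/config)
disable_selinux_config() {
  local file=${1:-/etc/selinux/config}
  # Only a line starting with "SELINUX=" matches; SELINUXTYPE= is untouched.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}
```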

3. Installing Ceph

3.1 Install the Ceph packages

yum install ceph -y

3.2 Deploy the monitor (mon)

Generate an fsid (the cluster's unique ID):

uuidgen

Create /etc/ceph/ceph.conf:

[global]
# Replace with your own fsid
fsid = f461dce6-1256-4f11-b91b-2188dd36f72e
mon initial members = ceph-traing
mon host = 172.16.14.227
public network = 172.16.14.0/24
auth cluster required = cephx
auth service required = cephx
auth client required = cephx
osd journal size = 1024
osd pool default size = 1
osd pool default min size = 1
osd pool default pg num = 256
osd pool default pgp num = 256
osd crush chooseleaf type = 1
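The `osd pool default pg num` value above is worth sanity-checking for a single OSD. A commonly cited Luminous-era rule of thumb is: total PGs across all pools ≈ (number of OSDs × 100) / replica count, rounded up to the next power of two. The sketch below only encodes that rule of thumb (`pg_count` is a hypothetical name); for this one-OSD, size-1 cluster it gives 128 rather than 256. Newer Luminous point releases also cap PGs per OSD (`mon_max_pg_per_osd`), so an oversized default can make pool creation fail.

```bash
#!/usr/bin/env bash
# Rule-of-thumb PG count: (OSDs * 100) / replicas, rounded up to a power of two.
# Usage: pg_count NUM_OSDS POOL_SIZE
pg_count() {
  local osds=$1 size=$2
  # Ceiling division so a fractional target is not rounded down.
  local target=$(( (osds * 100 + size - 1) / size ))
  local pg=1
  while [ "$pg" -lt "$target" ]; do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}
```

For example, `pg_count 1 1` prints 128 and `pg_count 10 3` prints 512.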

Create the monitor keyring:

ceph-authtool --create-keyring /tmp/ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'

Create the administrator keyring:

ceph-authtool --create-keyring /etc/ceph/ceph.client.admin.keyring --gen-key -n client.admin --cap mon 'allow *' --cap osd 'allow *' --cap mds 'allow *' --cap mgr 'allow *'

Create the bootstrap-osd keyring:

ceph-authtool --create-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring --gen-key -n client.bootstrap-osd --cap mon 'profile bootstrap-osd'

Import the bootstrap-osd keyring and the administrator keyring into the monitor keyring:

ceph-authtool /tmp/ceph.mon.keyring --import-keyring /etc/ceph/ceph.client.admin.keyring

ceph-authtool /tmp/ceph.mon.keyring --import-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring

Create the monmap:

***Note: replace the fsid with your own; it must match the fsid in ceph.conf exactly

monmaptool --create --add ceph-traing 172.16.14.227 --fsid f461dce6-1256-4f11-b91b-2188dd36f72e /tmp/monmap
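Retyping the fsid by hand is easy to get wrong: a single missing hyphen leaves a value that no longer matches ceph.conf. A minimal sketch that reads the fsid back from ceph.conf (ignoring a trailing `#` or `;` comment) and checks that it is a canonical lowercase UUID, as printed by uuidgen, before it is used. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the fsid value from a ceph.conf file.
# Usage: conf_fsid [FILE]   (default: /etc/ceph/ceph.conf)
conf_fsid() {
  awk -F '=' '/^[ \t]*fsid[ \t]*=/ {
    v = $2
    sub(/[#;].*/, "", v)      # drop a trailing comment
    gsub(/[ \t\r]/, "", v)    # drop surrounding whitespace
    print v
    exit
  }' "${1:-/etc/ceph/ceph.conf}"
}

# Hypothetical helper: succeed only for an 8-4-4-4-12 lowercase hex UUID.
is_valid_fsid() {
  printf '%s\n' "$1" | grep -Eq '^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$'
}
```

Usage: `FSID=$(conf_fsid) && is_valid_fsid "$FSID" && monmaptool --create --add ceph-traing 172.16.14.227 --fsid "$FSID" /tmp/monmap`.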

Create the monitor's data directory (the default path is /var/lib/ceph/mon/{cluster-name}-{hostname}):

sudo -u ceph mkdir /var/lib/ceph/mon/ceph-ceph-traing

Give the ceph user ownership of the monitor keyring:

chown ceph:ceph /tmp/ceph.mon.keyring

Initialize the monitor as the ceph user. The ceph-mon service runs as ceph, so running this step as root would leave root-owned files that the service cannot write:

sudo -u ceph ceph-mon --mkfs -i ceph-traing --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring

Start the ceph-mon daemon and enable it at boot:

systemctl enable ceph-mon@ceph-traing

systemctl start ceph-mon@ceph-traing

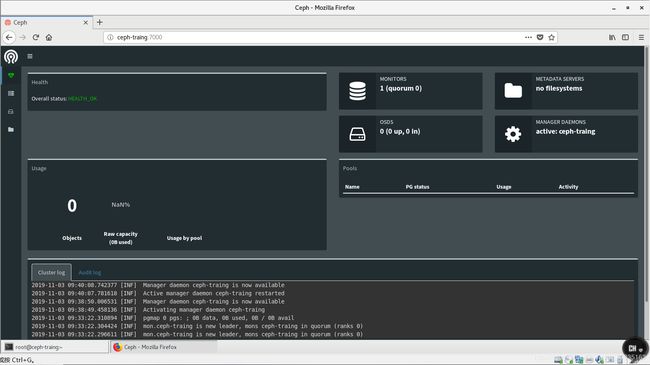

Verify that the mon started:

ceph -s

#Output:

cluster:
id: f461dce6-1256-4f11-b91b-2188dd36f72e
health: HEALTH_OK

services:
mon: 1 daemons, quorum ceph-traing #the mon is up
mgr: no daemon (active)
osd: 0 osds: 0 up, 0 in

data:
pools: 0 pools, 0 pgs
objects: 0 objects, 0B
usage: 0B used, 0B / 0B avail
pgs:
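Right after `systemctl start`, the first `ceph -s` can stall or fail while the monitor is still forming quorum. A generic polling sketch; `wait_until` is a hypothetical helper that re-runs a command once per second until it succeeds or a timeout in seconds runs out.

```bash
#!/usr/bin/env bash
# Hypothetical helper: re-run a command once per second until it succeeds.
# Usage: wait_until TIMEOUT_SECONDS COMMAND [ARGS...]
# Returns 0 as soon as COMMAND exits 0, or 1 once the timeout is used up.
wait_until() {
  local timeout=$1
  shift
  local waited=0
  until "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    waited=$(( waited + 1 ))
  done
  return 0
}
```

For example: `wait_until 60 sh -c 'timeout 10 ceph health | grep -q HEALTH_OK'`, where coreutils `timeout` keeps a single hung `ceph` call from blocking the loop.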

3.3 Deploy the manager (mgr)

Create the mgr data directory:

mkdir /var/lib/ceph/mgr/ceph-ceph-traing

Create the mgr keyring:

ceph auth get-or-create mgr.ceph-traing mon 'allow profile mgr' osd 'allow *' mds 'allow *'

#Output:

[mgr.ceph-traing]

key = AQCx76FbnNkrAhAAPlS2W7wWXIlqGgSR0+c7Yw==

Save the output above to /var/lib/ceph/mgr/ceph-ceph-traing/keyring:

vim /var/lib/ceph/mgr/ceph-ceph-traing/keyring
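Copying the key by hand in vim is error-prone. The `ceph` CLI can write the keyring directly with its `-o` option: `ceph auth get-or-create mgr.ceph-traing mon 'allow profile mgr' osd 'allow *' mds 'allow *' -o /var/lib/ceph/mgr/ceph-ceph-traing/keyring`. If the key has already been printed, the hypothetical helper below writes it in the same `[entity]` / `key = ...` layout with owner-only permissions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a single-entity Ceph keyring file that only
# its owner can read. Usage: write_keyring FILE ENTITY KEY
write_keyring() {
  local file=$1 entity=$2 key=$3
  # Subshell keeps the umask change from leaking into the caller.
  (
    umask 077
    printf '[%s]\n\tkey = %s\n' "$entity" "$key" > "$file"
  )
  # umask only applies to newly created files, so also fix an existing one.
  chmod 600 "$file"
}
```

For this guide: `write_keyring /var/lib/ceph/mgr/ceph-ceph-traing/keyring mgr.ceph-traing '<key from the output>'`, followed by the chown step.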

Change the owner and group to ceph:

chown -R ceph:ceph /var/lib/ceph/mgr/

Start the ceph-mgr daemon and enable it at boot:

systemctl enable ceph-mgr@ceph-traing

systemctl start ceph-mgr@ceph-traing

Verify that the mgr started:

ceph -s

#Output:

cluster:
id: f461dce6-1256-4f11-b91b-2188dd36f72e
health: HEALTH_OK

services:
mon: 1 daemons, quorum ceph-traing
mgr: ceph-traing(active) #the mgr is up
osd: 0 osds: 0 up, 0 in

data:
pools: 0 pools, 0 pgs
objects: 0 objects, 0B
usage: 0B used, 0B / 0B avail
pgs:

List the modules the mgr provides:

ceph mgr module ls

#Output:

{
    "enabled_modules": [
        "balancer",
        "restful",
        "status"
    ],
    "disabled_modules": [
        "influx",
        "dashboard",
        "localpool",
        "prometheus",
        "selftest",
        "zabbix"
    ]
}

Enable the dashboard module:

ceph mgr module enable dashboard

Look up the dashboard login URL:

ceph mgr services

#Output:

{

"dashboard": "http://ceph-traing

}

3.4 Deploy the OSD

Prepare a 20 GB disk (here /dev/sdb).

Partition the disk, creating a single partition /dev/sdb1:

fdisk /dev/sdb

Create the file system:

mkfs.xfs /dev/sdb1

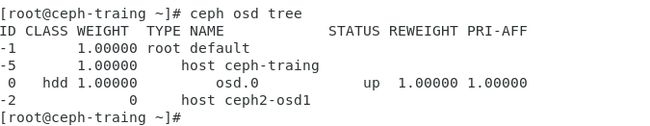
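fdisk is interactive. A non-interactive sketch of the same two steps uses `parted` in script mode (`-s`) followed by `mkfs.xfs`. It assumes the whole disk is dedicated to the OSD and that its first partition is `${DEV}1`, which holds for /dev/sdX but not for NVMe devices (their partitions end in `p1`). `prepare_osd_disk` and `run` are hypothetical helpers; setting DRY_RUN prints the commands instead of running them, which is worth doing before touching a real disk.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: one GPT partition spanning the disk, formatted as XFS.
# WARNING: destroys all data on DEVICE. Usage: prepare_osd_disk DEVICE
prepare_osd_disk() {
  local dev=$1
  run parted -s "$dev" mklabel gpt mkpart primary xfs 0% 100%
  run mkfs.xfs -f "${dev}1"
}
```

Preview with `(DRY_RUN=1; prepare_osd_disk /dev/sdb)`, then run `prepare_osd_disk /dev/sdb` as root.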

Allocate an OSD number:

ceph osd create

#Note the number it prints; this is the osd-number

Create the OSD directory and mount the new partition on it:

mkdir /var/lib/ceph/osd/ceph-0/ #ceph-{osd-number}

mount -o rw,noatime,attr2,inode64,noquota /dev/sdb1 /var/lib/ceph/osd/ceph-0

Add the mount to /etc/fstab so it persists across reboots:

#Line to add:

/dev/sdb1 /var/lib/ceph/osd/ceph-0 xfs rw,noatime,attr2,inode64,noquota 0 0
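Device names such as /dev/sdb1 can change between boots when disks are added or removed, so referencing the file system UUID (from `blkid -s UUID -o value /dev/sdb1`) is more robust. A sketch that appends the entry only if no non-comment line already mounts that directory; `add_fstab_entry` is a hypothetical name, and the mount point must be written the same way each time (for example without a trailing slash) for the duplicate check to match.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append an xfs fstab entry unless MOUNTPOINT already has one.
# Usage: add_fstab_entry FSTAB SOURCE MOUNTPOINT OPTIONS
add_fstab_entry() {
  local fstab=$1 src=$2 mnt=$3 opts=$4
  # awk exits 0 when a non-comment line uses MOUNTPOINT as its second field.
  if [ -f "$fstab" ] && awk -v m="$mnt" '
      $1 !~ /^#/ && $2 == m { found = 1 }
      END { exit !found }' "$fstab"; then
    return 0
  fi
  printf '%s %s xfs %s 0 0\n' "$src" "$mnt" "$opts" >> "$fstab"
}
```

For this guide: `add_fstab_entry /etc/fstab "UUID=$(blkid -s UUID -o value /dev/sdb1)" /var/lib/ceph/osd/ceph-0 rw,noatime,attr2,inode64,noquota`.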

Configure the OSD:

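***Note: osd-number is the number printed by the ceph osd create command above; cluster-name is the cluster name used when setting up the mon (ceph by default, as in this guide); hostname is the hostname of the OSD node; id-or-name is osd.{osd-number}; weight is the CRUSH weight, conventionally 1 for 1 TB of capacity.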
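Following the "weight 1 per 1 TB" convention, the weight can be derived from the partition size. A minimal sketch, assuming capacity is measured in TiB (2^40 bytes); `crush_weight` is a hypothetical name. For the 20 GB disk in this guide it gives about 0.02 rather than the 1.0 used below; with a single OSD only the relative weights matter, so either value works here, but on a mixed cluster the weights should reflect capacity.

```bash
#!/usr/bin/env bash
# Hypothetical helper: CRUSH weight as capacity in TiB, 5 decimals (1 TiB -> 1.00000).
# Usage: crush_weight SIZE_IN_BYTES
crush_weight() {
  awk -v b="$1" 'BEGIN { printf "%.5f\n", b / (1024 ^ 4) }'
}
```

On the OSD node: `crush_weight "$(blockdev --getsize64 /dev/sdb1)"`.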

#Command template:

chown -R ceph:ceph /var/lib/ceph/osd/

sudo -u ceph ceph-osd -i {osd-number} --mkfs --mkkey

ceph auth add osd.{osd-number} osd 'allow *' mon 'allow rwx' -i /var/lib/ceph/osd/ceph-{osd-number}/keyring

ceph [--cluster {cluster-name}] osd crush add-bucket {hostname} host

ceph osd crush move {hostname} root=default

ceph [--cluster {cluster-name}] osd crush add {id-or-name} {weight} host={hostname}

#In this guide:

chown -R ceph:ceph /var/lib/ceph/osd/

sudo -u ceph ceph-osd -i 0 --mkfs --mkkey

ceph auth add osd.0 osd 'allow *' mon 'allow rwx' -i /var/lib/ceph/osd/ceph-0/keyring

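#The host bucket is named after this node's hostname, ceph-traing; with the default osd crush update on start = true, the OSD would otherwise move itself into a ceph-traing bucket when it starts

ceph --cluster ceph osd crush add-bucket ceph-traing host

ceph osd crush move ceph-traing root=default

ceph osd crush add osd.0 1.0 host=ceph-traing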

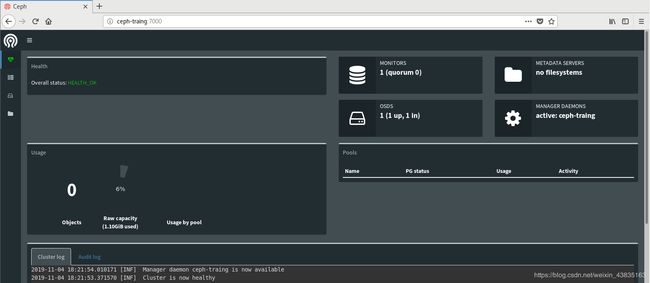

Enable and start the OSD service:

systemctl enable ceph-osd@{osd-number}

systemctl start ceph-osd@{osd-number}

#In this guide:

systemctl enable ceph-osd@0

systemctl start ceph-osd@0

***If ceph -s does not show the new OSD as up and in after the service starts, reboot the machine.
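Before rebooting, the OSD counters can be checked in a script. A sketch that reads `ceph -s` text from stdin and succeeds only when there is at least one OSD and every OSD is both up and in. It assumes the Luminous line format `osd: N osds: U up, I in` seen in the outputs above; `osds_all_up_in` is a hypothetical name. If the check fails, `journalctl -u ceph-osd@0` usually shows why the daemon did not come up.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ceph -s` output on stdin; succeed only if at
# least one OSD exists and all of them are up and in.
# Assumes the Luminous line format "osd: N osds: U up, I in".
osds_all_up_in() {
  local status total n_up n_in
  local re='osd: ([0-9]+) osds: ([0-9]+) up, ([0-9]+) in'
  status=$(cat)
  [[ $status =~ $re ]] || return 1
  total=${BASH_REMATCH[1]}
  n_up=${BASH_REMATCH[2]}
  n_in=${BASH_REMATCH[3]}
  [ "$total" -gt 0 ] && [ "$n_up" -eq "$total" ] && [ "$n_in" -eq "$total" ]
}
```

Usage: `ceph -s | osds_all_up_in && echo "all OSDs up and in"`.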