Kubernetes Storage: Setting Up Ceph with Rook

Environment:

| Hostname | IP | Role |
|---|---|---|
| master01 | 192.168.200.182 | k8s-master, Rook release-0.9 |
| node01 | 192.168.200.183 | k8s-node01 |
| node02 | 192.168.200.184 | k8s-node02 |

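Introduction to Ceph:

Ceph is an open-source distributed storage system that provides object storage, block devices, and a file system. It is highly reliable, easy to install and manage, and can handle massive amounts of data. A Ceph storage cluster offers enterprise-grade storage: it organizes a large number of nodes that communicate with each other to replicate data and redistribute it dynamically, which yields highly available distributed storage.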

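Introduction to Rook:

Rook provides file, block, and object storage services for cloud-native environments. It implements a distributed storage service that manages, scales, and heals itself. Rook supports automated deployment, bootstrapping, configuration, provisioning, scaling up and down, upgrades, migration, disaster recovery, monitoring, and resource management. To do all this, Rook relies on an underlying container orchestration platform such as Kubernetes or CoreOS. Rook currently supports deploying Ceph, NFS, Minio Object Store, EdgeFS, Cassandra, and CockroachDB, with more storage solutions planned.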

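Rook has two main components:

Rook Operator:

- The component through which Rook interacts with Kubernetes
- There is only one per Rook cluster

Rook Agent:

- Interacts with the Rook Operator and executes commands
- One is started on every Kubernetes node
- Different storage systems start different agents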

Fetch the Rook source and switch to the release-0.9 branch:

```
[root@master01 ~]# git clone https://github.com/rook/rook.git
[root@master01 ~]# cd rook/
[root@master01 rook]# git checkout -b release-0.9 remotes/origin/release-0.9
Branch release-0.9 set up to track remote branch release-0.9 from origin.
Switched to a new branch 'release-0.9'
[root@master01 rook]# git branch -a
  master
* release-0.9
  release-1.1
  remotes/origin/HEAD -> origin/master
  remotes/origin/master
  remotes/origin/release-0.4
  remotes/origin/release-0.5
  remotes/origin/release-0.6
  remotes/origin/release-0.7
  remotes/origin/release-0.8
  remotes/origin/release-0.9
```
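
The choice of branch can also be made from the `git branch -a` listing. The following Python sketch is illustrative only: `release_branches` and `latest_release` are hypothetical helper names, and it assumes the listing is passed in as text. It keeps only `remotes/origin/release-X.Y` entries and sorts them numerically, because a plain string sort would put `release-0.10` before `release-0.9`.

```python
import re
from typing import List, Optional

# Matches remote release branches such as "remotes/origin/release-0.9".
_RELEASE = re.compile(r"^remotes/origin/(release-(\d+)\.(\d+))$")


def release_branches(branch_output: str) -> List[str]:
    """Return remote release-X.Y branch names sorted by numeric version."""
    found = []
    for line in branch_output.splitlines():
        # git indents each entry; strip it before matching.
        m = _RELEASE.match(line.strip())
        if m:
            found.append((int(m.group(2)), int(m.group(3)), m.group(1)))
    found.sort()
    return [name for _, _, name in found]


def latest_release(branch_output: str) -> Optional[str]:
    """Highest remote release branch, or None if there is none."""
    branches = release_branches(branch_output)
    return branches[-1] if branches else None
```

Applied to the listing above, this returns `release-0.9`: the local `release-1.1` branch is not under `remotes/origin`, so it is ignored.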

Why switch branches? Because there is a nasty trap here if you deploy straight from the default branch:

```
[root@master01 ~]# git clone https://github.com/rook/rook.git
[root@master01 ~]# cd rook/cluster/examples/kubernetes/ceph/
[root@master01 ceph]# kubectl create -f operator.yaml
[root@master01 ceph]# kubectl create -f cluster.yaml
```

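If everything worked, Rook would create all the resources it needs. Unfortunately, you will find that after cluster.yaml has been applied, no rook-ceph-mgr, rook-ceph-mon, rook-ceph-osd, or similar resources are created. Following https://github.com/rook/rook/issues/2338, we can analyze the problem by checking the logs of the rook-ceph-operator Pod: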

```
[root@master01 ~]# kubectl logs -n rook-ceph-system rook-ceph-operator-68576ff976-m9m6l
......
E0107 12:06:23.272607 6 reflector.go:205] github.com/rook/rook/vendor/github.com/rook/operator-kit/watcher.go:76: Failed to list *v1beta1.Cluster: the server could not find the requested resource (get clusters.ceph.rook.io)
E0107 12:06:24.274364 6 reflector.go:205] github.com/rook/rook/vendor/github.com/rook/operator-kit/watcher.go:76: Failed to list *v1beta1.Cluster: the server could not find the requested resource (get clusters.ceph.rook.io)
E0107 12:06:25.288800 6 reflector.go:205] github.com/rook/rook/vendor/github.com/rook/operator-kit/watcher.go:76: Failed to list *v1beta1.Cluster: the server could not find the requested resource (get clusters.ceph.rook.io)
```

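Log output like this means the operator is trying to list `clusters.ceph.rook.io` (v1beta1), a resource that does not exist in the cluster: the CRDs that were created do not match the version the operator expects. The fix is to switch to the latest fixed release branch instead of deploying from master.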
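
When the operator log is long, the missing resource names can be pulled out mechanically. A minimal Python sketch, assuming the log lines look like the ones above (the message format may differ in other Rook or client-go versions); `missing_resources` and `not_installed` are hypothetical helpers, and the installed CRD names would come from the NAME column of `kubectl get crd`.

```python
import re
from typing import Iterable, Set

# The reflector error ends with "(get <plural>.<group>)", naming the
# resource the operator tried to list.
_MISSING = re.compile(r"could not find the requested resource \(get ([\w.-]+)\)")


def missing_resources(log_lines: Iterable[str]) -> Set[str]:
    """Resource names the operator failed to list, deduplicated."""
    found = set()
    for line in log_lines:
        m = _MISSING.search(line)
        if m:
            found.add(m.group(1))
    return found


def not_installed(missing: Set[str], installed_crds: Iterable[str]) -> Set[str]:
    """Missing resources that no installed CRD provides."""
    return missing - set(installed_crds)
```

If a name reported here is absent from the CRDs that operator.yaml created, the manifests and the operator image are out of step, which is the situation described above.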

Deploy the Rook Operator

```
[root@master01 ~]# cd rook/cluster/examples/kubernetes/ceph/
[root@master01 ceph]# kubectl create -f operator.yaml
namespace/rook-ceph-system created
customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/volumes.rook.io created
clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created
role.rbac.authorization.k8s.io/rook-ceph-system created
clusterrole.rbac.authorization.k8s.io/rook-ceph-global created
clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created
serviceaccount/rook-ceph-system created
rolebinding.rbac.authorization.k8s.io/rook-ceph-system created
clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created
deployment.apps/rook-ceph-operator created
[root@master01 ceph]# kubectl get pods -n rook-ceph-system
NAME                                 READY   STATUS              RESTARTS   AGE
rook-ceph-operator-9f9c5ffd7-zzpzn   0/1     ContainerCreating   0          22s
[root@master01 ceph]# kubectl get pods -n rook-ceph-system
NAME                                 READY   STATUS    RESTARTS   AGE
rook-ceph-agent-765kj                1/1     Running   0          50s
rook-ceph-agent-9bk72                1/1     Running   0          50s
rook-ceph-operator-9f9c5ffd7-85btz   1/1     Running   0          1m
rook-discover-4b5qh                  1/1     Running   0          50s
rook-discover-gkt67                  1/1     Running   0          50s
```

Note that rook-ceph-operator is created first, and it then creates rook-ceph-agent and rook-discover on each node. Next, we can deploy the CephCluster.
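
The operator-then-agents layout can be checked from pod placement. A Python sketch under stated assumptions: the input is a list of (pod name, node name) pairs, for example taken from the NAME and NODE columns of `kubectl get pods -n rook-ceph-system -o wide`; the name prefixes come from the output above; `check_rook_system` is a hypothetical helper. The node assignments in the test data are illustrative, since the listing above does not show them.

```python
from collections import Counter
from typing import Iterable, List, Tuple

Pod = Tuple[str, str]  # (pod name, node name)


def check_rook_system(pods: Iterable[Pod], nodes: Iterable[str]) -> List[str]:
    """Return a list of problems; an empty list means the layout looks right."""
    pods = list(pods)
    nodes = list(nodes)
    problems = []
    # Exactly one operator for the whole Rook cluster.
    operators = [name for name, _ in pods if name.startswith("rook-ceph-operator-")]
    if len(operators) != 1:
        problems.append(f"expected 1 operator, found {len(operators)}")
    # One agent and one discover pod on every node.
    for prefix in ("rook-ceph-agent-", "rook-discover-"):
        per_node = Counter(node for name, node in pods if name.startswith(prefix))
        for node in nodes:
            if per_node[node] != 1:
                problems.append(f"{prefix}* on {node}: {per_node[node]}")
    return problems
```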

```
[root@master01 ceph]# kubectl create -f cluster.yaml
namespace/rook-ceph created
serviceaccount/rook-ceph-osd created
serviceaccount/rook-ceph-mgr created
role.rbac.authorization.k8s.io/rook-ceph-osd created
role.rbac.authorization.k8s.io/rook-ceph-mgr-system created
role.rbac.authorization.k8s.io/rook-ceph-mgr created
rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created
rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created
rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created
rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created
rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created
cephcluster.ceph.rook.io/rook-ceph created
[root@master01 ceph]# kubectl get cephcluster -n rook-ceph
NAME        DATADIRHOSTPATH   MONCOUNT   AGE   STATE
rook-ceph   /var/lib/rook     3          34s
[root@master01 ceph]# kubectl get pod -n rook-ceph
NAME                               READY   STATUS     RESTARTS   AGE
rook-ceph-mon-a-ddbc448d6-5q466    0/1     Init:1/3   0          2m
rook-ceph-mon-d-5d696f6fbb-t2pd2   1/1     Running    0          1m
[root@master01 ceph]# kubectl get pod -n rook-ceph -o wide
NAME                                 READY   STATUS      RESTARTS   AGE   IP            NODE
rook-ceph-mgr-a-56d5cbc754-p9gsn     1/1     Running     0          1m    10.244.2.12   node02
rook-ceph-mon-a-ddbc448d6-5q466      1/1     Running     0          12m   10.244.1.10   node01
rook-ceph-mon-d-5d696f6fbb-t2pd2     1/1     Running     0          10m   10.244.2.11   node02
rook-ceph-mon-f-576847df6c-ntwjf     1/1     Running     0          8m    10.244.1.11   node01
rook-ceph-osd-0-66469b499d-ls9pg     1/1     Running     0          19s   10.244.1.13   node01
rook-ceph-osd-1-757c69cfcf-th667     1/1     Running     0          17s   10.244.2.14   node02
rook-ceph-osd-prepare-node01-bgk4j   0/2     Completed   1          34s   10.244.1.12   node01
rook-ceph-osd-prepare-node02-d5q54   0/2     Completed   1          34s   10.244.2.13   node02
```

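CephCluster is a CRD (custom resource type) through which Rook creates the whole set of Ceph daemons: mon, mgr, osd, and so on. We can use the default configuration as-is: it starts 3 mons and stores data under the dataDirHostPath /var/lib/rook. These settings (the data directory path, the mon count, and others) can also be customized; see https://rook.github.io/docs/rook/v0.9/ceph-cluster-crd.html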
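
In the CephCluster manifest, the two settings mentioned above live under `spec.dataDirHostPath` and `spec.mon.count`. A Python sketch, assuming cluster.yaml has already been loaded into a dict (for example with PyYAML, not shown); `customize_cluster` and `quorum_size` are hypothetical helpers. The odd-count check is this sketch's own rule, not something Rook is claimed to enforce: Ceph mons need a majority to keep quorum, so going from 3 to 4 mons still tolerates only one failed mon.

```python
import copy
from typing import Any, Dict


def customize_cluster(cluster: Dict[str, Any], mon_count: int = 3,
                      data_dir_host_path: str = "/var/lib/rook") -> Dict[str, Any]:
    """Return a copy of a CephCluster manifest with mon count and data dir set."""
    if mon_count < 1 or mon_count % 2 == 0:
        # Own sanity check: an even count adds a daemon without
        # tolerating one more failure.
        raise ValueError("mon_count should be a positive odd number")
    patched = copy.deepcopy(cluster)  # leave the caller's dict untouched
    spec = patched.setdefault("spec", {})
    spec["dataDirHostPath"] = data_dir_host_path
    spec.setdefault("mon", {})["count"] = mon_count
    return patched


def quorum_size(mon_count: int) -> int:
    """Number of mons that must be up for the cluster to keep quorum."""
    return mon_count // 2 + 1
```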

Configure the Rook Dashboard

```
[root@master01 ceph]# kubectl get svc -n rook-ceph
NAME                      TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
rook-ceph-mgr             ClusterIP   10.102.154.66    <none>        9283/TCP   4m
rook-ceph-mgr-dashboard   ClusterIP   10.105.132.253   <none>        8443/TCP   4m
rook-ceph-mon-a           ClusterIP   10.109.149.87    <none>        6790/TCP   15m
rook-ceph-mon-d           ClusterIP   10.99.79.25      <none>        6790/TCP   13m
rook-ceph-mon-f           ClusterIP   10.96.202.164    <none>        6790/TCP   12m
```

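The dashboard Service is of type ClusterIP, so it is only reachable from inside the cluster. To access it from outside, edit dashboard-external-https.yaml so that it defines a Service of type NodePort: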

```
[root@master01 ceph]# vim dashboard-external-https.yaml
[root@master01 ceph]# cat dashboard-external-https.yaml
apiVersion: v1
kind: Service
metadata:
  name: rook-ceph-mgr-dashboard-external-https
  namespace: rook-ceph
  labels:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
spec:
  ports:
  - name: dashboard
    port: 8443
    protocol: TCP
    targetPort: 8443
    nodePort: 30007 # fixed port for external access
  selector:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
  sessionAffinity: None
  type: NodePort # changed type
```
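
The same Service can be generated from code, which makes the port range easy to check. A Python sketch; `dashboard_nodeport_service` is a hypothetical helper, it assumes `rook_cluster` equals the cluster namespace as in the YAML above, and it assumes the default Kubernetes NodePort range of 30000-32767 (a cluster whose kube-apiserver uses a different `--service-node-port-range` needs other bounds). kubectl accepts JSON manifests, so `json.dumps` of the result can be piped to `kubectl apply -f -`.

```python
from typing import Any, Dict

# Default range for Service nodePort values; adjust if your cluster changed it.
NODE_PORT_MIN, NODE_PORT_MAX = 30000, 32767


def dashboard_nodeport_service(node_port: int = 30007,
                               namespace: str = "rook-ceph") -> Dict[str, Any]:
    """Build the NodePort Service for the Ceph dashboard shown above."""
    if not NODE_PORT_MIN <= node_port <= NODE_PORT_MAX:
        raise ValueError(
            f"nodePort {node_port} is outside {NODE_PORT_MIN}-{NODE_PORT_MAX}")
    # The selector must match the labels on the mgr pods.
    labels = {"app": "rook-ceph-mgr", "rook_cluster": namespace}
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": "rook-ceph-mgr-dashboard-external-https",
            "namespace": namespace,
            "labels": dict(labels),
        },
        "spec": {
            "ports": [{
                "name": "dashboard",
                "port": 8443,
                "protocol": "TCP",
                "targetPort": 8443,
                "nodePort": node_port,
            }],
            "selector": dict(labels),
            "sessionAffinity": "None",
            "type": "NodePort",
        },
    }
```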

Deploy it:

```
[root@master01 ceph]# kubectl create -f dashboard-external-https.yaml
service/rook-ceph-mgr-dashboard-external-https created
[root@master01 ceph]# kubectl get svc -n rook-ceph
NAME                                     TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
rook-ceph-mgr                            ClusterIP   10.102.154.66    <none>        9283/TCP         6m
rook-ceph-mgr-dashboard                  ClusterIP   10.105.132.253   <none>        8443/TCP         6m
rook-ceph-mgr-dashboard-external-https   NodePort    10.99.174.253    <none>        8443:30007/TCP   7s
rook-ceph-mon-a                          ClusterIP   10.109.149.87    <none>        6790/TCP         18m
rook-ceph-mon-d                          ClusterIP   10.99.79.25      <none>        6790/TCP         16m
rook-ceph-mon-f                          ClusterIP   10.96.202.164    <none>        6790/TCP         14m
```

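The dashboard is now reachable over HTTPS on port 30007 of any node (for example https://192.168.200.183:30007). There are two ways to get the login password (the default user is admin):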

Method 1: rook-ceph creates a secret named rook-ceph-dashboard-password by default, and the password can be read from it:

```
[root@master01 ceph]# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath='{.data.password}' | base64 --decode
QIOiG58xcR
```
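
The jsonpath/base64 pipeline above reads `.data.password` from the Secret and base64-decodes it, because Kubernetes stores Secret values base64-encoded. The same step in Python, as a sketch that assumes the JSON printed by `kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o json` is passed in; `dashboard_password` is a hypothetical helper.

```python
import base64
import json


def dashboard_password(secret_json: str) -> str:
    """Decode the password field from a Secret's JSON representation."""
    secret = json.loads(secret_json)
    # Values under .data are base64-encoded; decode them to plain text.
    return base64.b64decode(secret["data"]["password"]).decode("utf-8")
```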

Method 2: get it from the logs of the rook-ceph-mgr Pod, which print the username and password:

```
[root@master01 ceph]# kubectl get pod -n rook-ceph | grep mgr
rook-ceph-mgr-a-56d5cbc754-p9gsn   1/1     Running   0          10m
[root@master01 ceph]# kubectl -n rook-ceph logs rook-ceph-mgr-a-56d5cbc754-p9gsn | grep password
2019-11-29 08:26:11.446 7f25e6654700 0 log_channel(audit) log [DBG] : from='client.4139 10.244.1.8:0/1021643349' entity='client.admin' cmd=[{"username": "admin", "prefix": "dashboard set-login-credentials", "password": "QIOiG58xcR", "target": ["mgr", ""], "format": "json"}]: dispatch
```
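
The mgr log line is an audit record of the `dashboard set-login-credentials` command, and its `cmd=` field is a JSON array. A Python sketch that extracts the credentials, assuming the line format shown above (other Ceph releases may log this differently); `login_credentials` is a hypothetical helper.

```python
import json
import re
from typing import Optional, Tuple

# Captures the JSON array between "cmd=" and ": dispatch".
_CMD = re.compile(r"cmd=(\[.*\]): dispatch")


def login_credentials(log_line: str) -> Optional[Tuple[str, str]]:
    """Return (username, password) from a set-login-credentials audit line."""
    m = _CMD.search(log_line)
    if not m:
        return None
    for cmd in json.loads(m.group(1)):
        if cmd.get("prefix") == "dashboard set-login-credentials":
            return cmd["username"], cmd["password"]
    return None
```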

To run Ceph commands, deploy the Rook toolbox:

```
[root@master01 ceph]# kubectl create -f toolbox.yaml
deployment.apps/rook-ceph-tools created
[root@master01 ceph]# kubectl -n rook-ceph get pod -l "app=rook-ceph-tools"
NAME                               READY   STATUS    RESTARTS   AGE
rook-ceph-tools-76bf8448f6-lhk26   1/1     Running   0          35s
```

Verify that the deployment succeeded (the setlocale warnings can be ignored):

```
[root@master01 ceph]# kubectl -n rook-ceph exec -it rook-ceph-tools-76bf8448f6-lhk26 -- bash
bash: warning: setlocale: LC_CTYPE: cannot change locale (en_US.UTF-8): No such file or directory
bash: warning: setlocale: LC_COLLATE: cannot change locale (en_US.UTF-8): No such file or directory
bash: warning: setlocale: LC_MESSAGES: cannot change locale (en_US.UTF-8): No such file or directory
bash: warning: setlocale: LC_NUMERIC: cannot change locale (en_US.UTF-8): No such file or directory
bash: warning: setlocale: LC_TIME: cannot change locale (en_US.UTF-8): No such file or directory
```

Run some checks inside the toolbox:

```
[root@node02 /]# ceph status
  cluster:
    id:     8104f7e4-26fc-4b45-b67b-6fa7a128dce6
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum f,d,a
    mgr: a(active)
    osd: 2 osds: 2 up, 2 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   12 GiB used, 22 GiB / 34 GiB avail
    pgs:

[root@node02 /]# ceph osd status
+----+--------+-------+-------+--------+---------+--------+---------+-----------+
| id |  host  |  used | avail | wr ops | wr data | rd ops | rd data |   state   |
+----+--------+-------+-------+--------+---------+--------+---------+-----------+
| 0  | node01 | 6049M | 11.0G |    0   |     0   |    0   |     0   | exists,up |
| 1  | node02 | 6007M | 11.1G |    0   |     0   |    0   |     0   | exists,up |
+----+--------+-------+-------+--------+---------+--------+---------+-----------+
[root@node02 /]# ceph df
GLOBAL:
    SIZE       AVAIL      RAW USED     %RAW USED
    34 GiB     22 GiB       12 GiB         34.66
POOLS:
    NAME     ID     USED     %USED     MAX AVAIL     OBJECTS
[root@node02 /]# rados df
POOL_NAME USED OBJECTS CLONES COPIES MISSING_ON_PRIMARY UNFOUND DEGRADED RD_OPS RD WR_OPS WR

total_objects    0
total_used       12 GiB
total_avail      22 GiB
total_space      34 GiB
```
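
The first things to read in `ceph status` are the health flag, the mon quorum, and the OSD up/in counts. A Python sketch that pulls those out of the text output shown above; `summarize_status` and `looks_healthy` are hypothetical helpers, and the regular expressions assume this release's text layout. The ceph CLI can also emit JSON (`--format json`), which is sturdier to parse, but its field names vary between Ceph releases, so the sketch sticks to the text shown here.

```python
import re
from typing import Any, Dict


def summarize_status(text: str) -> Dict[str, Any]:
    """Extract health, mon quorum and OSD counts from `ceph status` text."""
    health = re.search(r"health:\s*(\S+)", text)
    mons = re.search(r"mon:\s*(\d+) daemons, quorum (\S+)", text)
    osds = re.search(r"osd:\s*(\d+) osds:\s*(\d+) up,\s*(\d+) in", text)
    if not (health and mons and osds):
        raise ValueError("unrecognized `ceph status` output")
    return {
        "health": health.group(1),
        "mons": int(mons.group(1)),
        "quorum": mons.group(2).split(","),
        "osds": int(osds.group(1)),
        "osds_up": int(osds.group(2)),
        "osds_in": int(osds.group(3)),
    }


def looks_healthy(s: Dict[str, Any]) -> bool:
    """HEALTH_OK, every mon in quorum, and every OSD both up and in."""
    return (s["health"] == "HEALTH_OK"
            and len(s["quorum"]) == s["mons"]
            and s["osds_up"] == s["osds_in"] == s["osds"])
```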

Create a pool:

```
[root@node02 /]# ceph osd pool create pool1 5
pool 'pool1' created
[root@node02 /]# ceph df
GLOBAL:
    SIZE       AVAIL      RAW USED     %RAW USED
    34 GiB     22 GiB       12 GiB         34.65
POOLS:
    NAME      ID     USED     %USED     MAX AVAIL     OBJECTS
    pool1     1       0 B         0        20 GiB           0
```
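
The `5` in `ceph osd pool create pool1 5` is the pool's pg_num. The Ceph placement-group documentation for releases of this era gives a rule of thumb for the total PG count across pools: (number of OSDs × 100) / replica count, rounded up to the nearest power of two. A Python sketch of that arithmetic; `suggested_pg_total` is a hypothetical helper, the target of 100 PGs per OSD is the commonly cited figure rather than a hard limit, and `pool_size` must match the pool's replica count (see `ceph osd pool get pool1 size`).

```python
def suggested_pg_total(num_osds: int, pool_size: int, pgs_per_osd: int = 100) -> int:
    """Rule-of-thumb total PG count, rounded up to a power of two."""
    if num_osds < 1 or pool_size < 1:
        raise ValueError("num_osds and pool_size must be positive")
    target = num_osds * pgs_per_osd / pool_size
    pg = 1
    while pg < target:  # round up to the next power of two
        pg *= 2
    return pg
```

With the two OSDs in this cluster and 2 replicas, the target is 100, which rounds up to 128.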

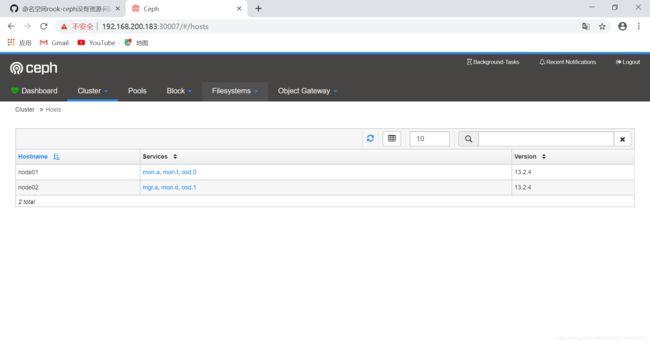

View the pool in the dashboard: