Integrating Spring Boot with Flume and Kafka for Log Collection

I. Installing Flume

- Install JDK 1.8+ and set the JAVA_HOME environment variable (omitted here)

- Download Flume from http://mirrors.tuna.tsinghua.edu.cn/apache/flume/1.9.0/apache-flume-1.9.0-bin.tar.gz

- Extract Flume and check the version:

[root@CentOS ~]# tar -zxf apache-flume-1.9.0-bin.tar.gz -C /usr/

[root@CentOS ~]# cd /usr/apache-flume-1.9.0-bin/

[root@CentOS apache-flume-1.9.0-bin]# ./bin/flume-ng version

Flume 1.9.0

Source code repository: https://git-wip-us.apache.org/repos/asf/flume.git

Revision: d4fcab4f501d41597bc616921329a4339f73585e

Compiled by fszabo on Mon Dec 17 20:45:25 CET 2018

From source with checksum 35db629a3bda49d23e9b3690c80737f9

The Flume agent configuration file:

[root@mycat conf]# vi usermodel.properties

# Declare the basic components: Source, Channel, Sink

a1.sources = s1

a1.sinks = sk1

a1.channels = c1

# Configure the Source: receive Avro events over the network

a1.sources.s1.type = avro

a1.sources.s1.bind = mycat

a1.sources.s1.port = 44444

# Configure the Sink: write the received events to Kafka

a1.sinks.sk1.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.sk1.kafka.bootstrap.servers = mycat:9092

a1.sinks.sk1.kafka.topic = topic01

a1.sinks.sk1.kafka.flumeBatchSize = 20

a1.sinks.sk1.kafka.producer.acks = 1

a1.sinks.sk1.kafka.producer.linger.ms = 1

a1.sinks.sk1.kafka.producer.compression.type = snappy

# Configure the Channel, which buffers events between source and sink

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the components together

a1.sources.s1.channels = c1

a1.sinks.sk1.channel = c1

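Every property above is described in the Flume User Guide on the official website.

In a1.sources.s1.bind = mycat, mycat is the hostname of my virtual machine; replace it with your own hostname (the same goes for mycat:9092 in the sink).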
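The agent only works if the source and the sink are both bound to the declared channel, so an offline check can save a restart cycle. Below is a minimal sketch, not part of Flume: the class FlumeAgentCheck and its methods are hypothetical, it only handles one source, one channel and one sink, and it encodes two size rules as I understand the memory channel to enforce them (transactionCapacity must not exceed capacity, and one sink batch should fit into one transaction).

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical helper (not part of Flume): checks the wiring of an agent with
// exactly one source, one channel and one sink, such as usermodel.properties.
class FlumeAgentCheck {

    // Parse properties text the same way a .properties file is read.
    static Properties parse(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    private static String get(Properties p, String key) {
        String v = p.getProperty(key);
        return v == null ? null : v.trim(); // values may carry trailing blanks
    }

    private static Integer number(Properties p, String key) {
        String v = get(p, key);
        return v == null ? null : Integer.valueOf(v);
    }

    // Returns the problems found; an empty list means the wiring looks consistent.
    static List<String> check(Properties p, String agent) {
        List<String> problems = new ArrayList<>();
        String source = get(p, agent + ".sources");
        String sink = get(p, agent + ".sinks");
        String channel = get(p, agent + ".channels");
        if (source == null || sink == null || channel == null) {
            problems.add(agent + " must declare sources, sinks and channels");
            return problems;
        }
        // A source lists its channels under the plural key "channels",
        // a sink names its single channel under the singular key "channel".
        if (!channel.equals(get(p, agent + ".sources." + source + ".channels"))) {
            problems.add("source " + source + " is not bound to channel " + channel);
        }
        if (!channel.equals(get(p, agent + ".sinks." + sink + ".channel"))) {
            problems.add("sink " + sink + " is not bound to channel " + channel);
        }
        // Size rules: a transaction may not exceed the channel capacity,
        // and one sink batch should fit into one channel transaction.
        Integer capacity = number(p, agent + ".channels." + channel + ".capacity");
        Integer txCapacity = number(p, agent + ".channels." + channel + ".transactionCapacity");
        Integer batch = number(p, agent + ".sinks." + sink + ".kafka.flumeBatchSize");
        if (capacity != null && txCapacity != null && txCapacity > capacity) {
            problems.add("transactionCapacity " + txCapacity + " exceeds capacity " + capacity);
        }
        if (txCapacity != null && batch != null && batch > txCapacity) {
            problems.add("flumeBatchSize " + batch + " exceeds transactionCapacity " + txCapacity);
        }
        return problems;
    }
}
```

Fed the contents of usermodel.properties, check(parse(text), "a1") should return an empty list; pointing a1.sinks.sk1.channel at a name other than c1 yields exactly one problem.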

Start the Flume agent:

[root@CentOS apache-flume-1.9.0-bin]# ./bin/flume-ng agent --conf conf/ --name a1 --conf-file conf/usermodel.properties -Dflume.root.logger=INFO,console

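Flume ships with an Avro client that can be used for testing. Create a test file /root/t_emp (each line is sent as one event) and send it to the agent to check that the configuration works; --host must point to the host the Avro source is bound to.

[root@CentOS apache-flume-1.9.0-bin]# ./bin/flume-ng avro-client --host CentOS --port 44444 --filename /root/t_emp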

II. Spring Boot Project Configuration

Create a Spring Boot project and add the following dependencies:

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<!-- Flume SDK for log collection -->

<dependency>

<groupId>org.apache.flume</groupId>

<artifactId>flume-ng-sdk</artifactId>

<version>1.9.0</version>

</dependency>

<!-- logback appender that sends logs to Flume -->

<dependency>

<groupId>com.teambytes.logback</groupId>

<artifactId>logback-flume-appender_2.10</artifactId>

<version>0.0.9</version>

</dependency>

Then create a logback.xml file in the resources directory with the following content:

<?xml version="1.0" encoding="UTF-8"?>

<configuration scan="true" scanPeriod="60 seconds" debug="false">

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender" >

<encoder>

<pattern>%p %c#%M %d{yyyy-MM-dd HH:mm:ss} %m%n</pattern>

<charset>UTF-8</charset>

</encoder>

</appender>

<!-- Flume appender: ships log events to the Flume Avro source -->

<appender name="flume" class="com.teambytes.logback.flume.FlumeLogstashV1Appender">

<!-- Host and port of the Flume Avro source; a hostname works if it is mapped to an IP -->

<flumeAgents>

mycat:44444

</flumeAgents>

<flumeProperties>

connect-timeout=4000;

request-timeout=8000

</flumeProperties>

<batchSize>1</batchSize>

<reportingWindow>1</reportingWindow>

<additionalAvroHeaders>

myHeader=myValue

</additionalAvroHeaders>

<application>smapleapp</application>

<layout class="ch.qos.logback.classic.PatternLayout">

<pattern>%p %c#%M %d{yyyy-MM-dd HH:mm:ss} %m%n</pattern>

</layout>

</appender>

<!-- Root logger: ERROR and above, console only -->

<root level="ERROR">

<appender-ref ref="STDOUT" />

</root>

<logger name="com.wushuang.interceptor" level="INFO" additivity="false">

<appender-ref ref="STDOUT" />

<!-- Reference the flume appender here, otherwise Flume receives none of these logs -->

<appender-ref ref="flume" />

</logger>

<logger name="com.wushuang.flume" level="DEBUG" additivity="false">

<appender-ref ref="STDOUT" />

<appender-ref ref="flume" />

</logger>

</configuration>


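Once the flume appender is defined, it must be referenced with <appender-ref ref="flume" /> in every logger whose output you want to collect, as in the com.wushuang.interceptor logger above. Otherwise Flume receives none of the logs!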
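Why this matters comes down to how logback routes an event. The sketch below is a simplified model (the class AppenderRouting is hypothetical, not logback's code) of two rules: an event passes only if its level is at least the logger's effective level, inherited from the nearest configured ancestor; it is then written to the appenders of that logger and of each ancestor, stopping after the first logger with additivity="false".

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simplified model of logback's routing rules (not logback's own code).
// The root logger is stored under the empty name "".
class AppenderRouting {
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR }

    static final class LoggerConf {
        final Level level;            // null = inherit from the nearest ancestor
        final boolean additive;       // the additivity attribute
        final List<String> appenders; // appender-ref names

        LoggerConf(Level level, boolean additive, List<String> appenders) {
            this.level = level;
            this.additive = additive;
            this.appenders = appenders;
        }
    }

    private final Map<String, LoggerConf> loggers = new HashMap<>();

    void set(String name, Level level, boolean additive, String... appenders) {
        loggers.put(name, new LoggerConf(level, additive, Arrays.asList(appenders)));
    }

    // "a.b.C" -> "a.b" -> "a" -> "" (root) -> null
    private static String parent(String name) {
        if (name.isEmpty()) return null;
        int dot = name.lastIndexOf('.');
        return dot < 0 ? "" : name.substring(0, dot);
    }

    // Effective level: the level of the nearest configured ancestor.
    Level effectiveLevel(String name) {
        for (String n = name; n != null; n = parent(n)) {
            LoggerConf c = loggers.get(n);
            if (c != null && c.level != null) return c.level;
        }
        return Level.DEBUG; // logback's default root level
    }

    // Names of the appenders that receive an event logged at eventLevel.
    List<String> route(String loggerName, Level eventLevel) {
        List<String> out = new ArrayList<>();
        if (eventLevel.compareTo(effectiveLevel(loggerName)) < 0) {
            return out; // rejected by the level check before any appender runs
        }
        for (String n = loggerName; n != null; n = parent(n)) {
            LoggerConf c = loggers.get(n);
            if (c == null) continue;
            out.addAll(c.appenders);
            if (!c.additive) break; // additivity="false" stops the walk here
        }
        return out;
    }
}
```

With the configuration above, a logger.info(...) call in com.wushuang.interceptor.LoginFeatuersInterceptor reaches STDOUT and flume, while its logger.debug(...) calls are dropped by the INFO level, so only the INFO line is shipped to Flume. A logger that has no appender-ref to flume and inherits from root only reaches STDOUT, and only at ERROR.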

Once configured, test it, for example with an interceptor that logs login data:

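import java.net.URLDecoder;
import java.util.HashMap;

import javax.servlet.http.Cookie;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;
import org.springframework.web.client.RestTemplate;
import org.springframework.web.servlet.HandlerInterceptor;

@Component
public class LoginFeatuersInterceptor implements HandlerInterceptor {

    // A RestTemplate bean must be defined elsewhere in the application
    @Autowired
    private RestTemplate restTemplate;

    private static final Logger logger = LoggerFactory.getLogger(LoginFeatuersInterceptor.class);

    @Override
    public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler) throws Exception {
        // Keyboard-input features are stored in a URL-encoded cookie;
        // getCookies() returns null when the request carries no cookies
        String features = "";
        Cookie[] cookies = request.getCookies();
        if (cookies != null) {
            for (Cookie cookie : cookies) {
                if ("userKeyFeatures".equals(cookie.getName())) {
                    features = URLDecoder.decode(cookie.getValue(), "UTF-8");
                }
            }
        }
        logger.debug("Keyboard features: " + features);

        // Username and password of the user logging in
        String name = request.getParameter("name");
        String password = request.getParameter("password");
        logger.debug("Username: " + name);
        logger.debug("Password: " + password);

        // Client IP address
        String ip = request.getRemoteAddr();
        logger.debug("Client IP: " + ip);

        // Look up latitude/longitude for the IP. The address is hard-coded here,
        // presumably because a local test request (e.g. 127.0.0.1) cannot be
        // geolocated; pass ip instead in a real deployment.
        String ipUrl = "http://ip-api.com/json/" + "115.48.115.83";
        // Receive the JSON response as a map
        HashMap<?, ?> map = restTemplate.getForObject(ipUrl, HashMap.class);
        Object lat = map != null ? map.get("lat") : null;
        Object lon = map != null ? map.get("lon") : null;
        logger.debug("Login coordinates: " + lat + "\t" + lon);

        // Login device, taken from the User-Agent header
        String userEquipment = request.getHeader("User-Agent");
        logger.debug("Login device: " + userEquipment);

        // Line collected by Flume (logged at INFO):
        // username password features ip lat lon device
        logger.info(name + " " + password + " " + features + " " + ip + " " + lat + " " + lon + " " + userEquipment);

        return true;
    }
}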

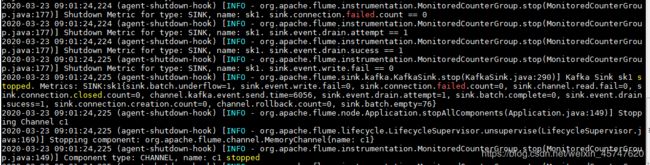

My example collects user login behavior. For a simple test, it is enough to check that the logged lines are received by Flume.
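Downstream, the logged line has to be split back into its fields. Because the User-Agent value contains spaces, a plain split on spaces would break it apart; the hypothetical LoginRecord parser below splits into at most seven pieces so the device string stays whole. It assumes the first six fields contain no spaces, that lat/lon can be the string null when the IP lookup fails, and that it receives only the %m part of the event. With the layout pattern configured above, the record arriving in Kafka probably also carries the level, logger#method and timestamp in front, which would have to be stripped first.

```java
// Hypothetical parser for the message the interceptor logs with logger.info:
// "name password features ip lat lon userAgent", separated by single spaces.
final class LoginRecord {
    final String name;
    final String password;
    final String features;
    final String ip;
    final double lat; // NaN when the lookup produced no value (logged as "null")
    final double lon;
    final String userAgent;

    private LoginRecord(String[] f) {
        name = f[0];
        password = f[1];
        features = f[2];
        ip = f[3];
        lat = parseCoordinate(f[4]);
        lon = parseCoordinate(f[5]);
        userAgent = f[6];
    }

    static LoginRecord parse(String message) {
        // trim() drops the trailing newline added by %n; the limit of 7 keeps
        // the User-Agent, which contains spaces, in the last field.
        String[] f = message.trim().split(" ", 7);
        if (f.length != 7) {
            throw new IllegalArgumentException("expected 7 fields but got " + f.length);
        }
        return new LoginRecord(f);
    }

    private static double parseCoordinate(String s) {
        try {
            return Double.parseDouble(s);
        } catch (NumberFormatException e) {
            return Double.NaN;
        }
    }
}
```

An empty features value simply shows up as an empty field, since split keeps empty strings between two consecutive spaces.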

At this point Spring Boot is integrated with Flume, and the generated logs are collected by Flume. Next, set up Kafka to receive the logs that Flume writes out.

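III. Installing and Configuring Kafka

Single-node environment

- Install the JDK and set JAVA_HOME

- Set the hostname

- Map the hostname to the IP address

- Disable the firewall

- Install and configure ZooKeeper (Kafka depends on ZooKeeper to run)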

[root@CentOS ~]# tar -zxf zookeeper-3.4.6.tar.gz -C /usr/

[root@CentOS ~]# cd /usr/zookeeper-3.4.6/

[root@CentOS zookeeper-3.4.6]# cp conf/zoo_sample.cfg conf/zoo.cfg

[root@CentOS zookeeper-3.4.6]# vi conf/zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/root/zkdata

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

[root@CentOS ~]# mkdir /root/zkdata
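initLimit and syncLimit are counted in ticks, so the effective timeouts are the limit multiplied by tickTime. The hypothetical ZkTimeouts class below works the numbers for this zoo.cfg: 10 × 2000 ms = 20,000 ms for initLimit and 5 × 2000 ms = 10,000 ms for syncLimit. The session-timeout bounds fall back to ZooKeeper's documented defaults of 2 × and 20 × tickTime (4,000 and 40,000 ms here). In standalone mode initLimit and syncLimit are not used; they only matter once a replicated ensemble runs.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper: converts the tick-based settings of zoo.cfg into milliseconds.
class ZkTimeouts {
    final long tickMs;
    final long initLimitMs;  // time a follower has to connect and sync to the leader
    final long syncLimitMs;  // how far a follower may fall behind the leader
    final long minSessionMs; // lower bound for client session timeouts
    final long maxSessionMs; // upper bound for client session timeouts

    ZkTimeouts(String zooCfg) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(zooCfg)); // '#' comment lines are ignored
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        tickMs = number(p, "tickTime");
        initLimitMs = number(p, "initLimit") * tickMs;
        syncLimitMs = number(p, "syncLimit") * tickMs;
        // Explicit values win; otherwise use the defaults of 2 and 20 ticks.
        minSessionMs = p.containsKey("minSessionTimeout") ? number(p, "minSessionTimeout") : 2 * tickMs;
        maxSessionMs = p.containsKey("maxSessionTimeout") ? number(p, "maxSessionTimeout") : 20 * tickMs;
    }

    private static long number(Properties p, String key) {
        String v = p.getProperty(key);
        if (v == null) {
            throw new IllegalArgumentException(key + " is missing");
        }
        return Long.parseLong(v.trim());
    }
}
```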

- Start the ZooKeeper service

[root@CentOS zookeeper-3.4.6]# ./bin/zkServer.sh

JMX enabled by default

Using config: /usr/zookeeper-3.4.6/bin/../conf/zoo.cfg

Usage: ./bin/zkServer.sh {start|start-foreground|stop|restart|status|upgrade|print-cmd}

[root@CentOS zookeeper-3.4.6]# ./bin/zkServer.sh start zoo.cfg

JMX enabled by default

Using config: /usr/zookeeper-3.4.6/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@CentOS zookeeper-3.4.6]#

[root@CentOS zookeeper-3.4.6]# ./bin/zkServer.sh status zoo.cfg

JMX enabled by default

Using config: /usr/zookeeper-3.4.6/bin/../conf/zoo.cfg

Mode: standalone

[root@CentOS zookeeper-3.4.6]# jps

1778 Jps

1733 QuorumPeerMain

- Install and configure Kafka

[root@CentOS ~]# tar -zxf kafka_2.11-2.2.0.tgz -C /usr/

[root@CentOS ~]# cd /usr/kafka_2.11-2.2.0/

[root@CentOS kafka_2.11-2.2.0]# vi config/server.properties

############################# Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=0

############################# Socket Server Settings #############################

listeners=PLAINTEXT://CentOS:9092

############################# Log Basics #############################

log.dirs=/usr/kafka-logs

############################# Log Retention Policy #############################

log.retention.hours=168

############################# Zookeeper #############################

zookeeper.connect=CentOS:2181

[root@CentOS kafka_2.11-2.2.0]# ./bin/kafka-server-start.sh -daemon config/server.properties

- Test the Kafka service

Create a topic

[root@CentOS kafka_2.11-2.2.0]# ./bin/kafka-topics.sh \
--bootstrap-server CentOS:9092 \
--create --topic topic01 \
--partitions 1 \
--replication-factor 1

Since Kafka 2.2.0, topics are managed with --bootstrap-server instead of --zookeeper. --partitions sets the number of partitions and --replication-factor sets the replication factor, which cannot be larger than the number of available brokers.

List topics

[root@CentOS kafka_2.11-2.2.0]# ./bin/kafka-topics.sh \
--bootstrap-server CentOS:9092 \
--list

topic01

Describe a topic

[root@CentOS kafka_2.11-2.2.0]# ./bin/kafka-topics.sh --bootstrap-server CentOS:9092 --describe --topic topic01

Topic:topic01 PartitionCount:3 ReplicationFactor:1 Configs:segment.bytes=1073741824

Topic: topic01 Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: topic01 Partition: 1 Leader: 0 Replicas: 0 Isr: 0

Topic: topic01 Partition: 2 Leader: 0 Replicas: 0 Isr: 0

Topic management

Create

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-topics.sh \
--bootstrap-server CentOSA:9092,CentOSB:9092,CentOSC:9092 \
--create \
--topic topic02 \
--partitions 3 \
--replication-factor 3

List

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-topics.sh \
--bootstrap-server CentOSA:9092,CentOSB:9092,CentOSC:9092 \
--list

topic01

topic02

Describe

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-topics.sh \
--bootstrap-server CentOSA:9092,CentOSB:9092,CentOSC:9092 \
--describe \
--topic topic01

Topic:topic01 PartitionCount:3 ReplicationFactor:3 Configs:segment.bytes=1073741824

Topic: topic01 Partition: 0 Leader: 0 Replicas: 0,2,3 Isr: 0,2,3

Topic: topic01 Partition: 1 Leader: 2 Replicas: 2,3,0 Isr: 2,3,0

Topic: topic01 Partition: 2 Leader: 0 Replicas: 3,0,2 Isr: 0,2,3
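The replica lists in this output rotate across brokers 0, 2 and 3. The hypothetical ReplicaRotation sketch below reproduces that pattern with a plain rotation and enforces the rule stated earlier that the replication factor cannot exceed the number of brokers. It is a simplification, not Kafka's assignment code: Kafka randomizes the starting broker and the shift between replicas, so a real cluster will not always show this exact layout.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified replica placement: partition p starts at broker index p % n and
// takes the next replicationFactor brokers in rotation. Not Kafka's algorithm.
class ReplicaRotation {
    static List<List<Integer>> assign(int[] brokerIds, int partitions, int replicationFactor) {
        int n = brokerIds.length;
        if (partitions < 1) {
            throw new IllegalArgumentException("partitions must be at least 1");
        }
        if (replicationFactor < 1 || replicationFactor > n) {
            // Kafka rejects this case as well (InvalidReplicationFactorException)
            throw new IllegalArgumentException("replication factor " + replicationFactor
                    + " larger than available brokers " + n);
        }
        List<List<Integer>> result = new ArrayList<>();
        for (int p = 0; p < partitions; p++) {
            List<Integer> replicas = new ArrayList<>();
            for (int r = 0; r < replicationFactor; r++) {
                replicas.add(brokerIds[(p + r) % n]);
            }
            result.add(replicas); // the first replica is the preferred leader
        }
        return result;
    }
}
```

For brokers {0, 2, 3} with 3 partitions and a replication factor of 3 this yields [0, 2, 3], [2, 3, 0] and [3, 0, 2], matching the Replicas column above; asking for a replication factor of 3 on a single broker is rejected.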

Alter (create topic03 with one partition, then increase it to two)

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-topics.sh \
--bootstrap-server CentOSA:9092,CentOSB:9092,CentOSC:9092 \
--create \
--topic topic03 \
--partitions 1 \
--replication-factor 1

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-topics.sh \
--bootstrap-server CentOSA:9092,CentOSB:9092,CentOSC:9092 \
--alter \
--topic topic03 \
--partitions 2

Delete

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-topics.sh \
--bootstrap-server CentOSA:9092,CentOSB:9092,CentOSC:9092 \
--delete \
--topic topic03

Consume (subscribe)

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-console-consumer.sh \
--bootstrap-server CentOSA:9092,CentOSB:9092,CentOSC:9092 \
--topic topic01 \
--group g1 \
--property print.key=true \
--property print.value=true \
--property key.separator=,

Consumer groups

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-consumer-groups.sh \
--bootstrap-server CentOSA:9092,CentOSB:9092,CentOSC:9092 \
--list

g1

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-consumer-groups.sh \
--bootstrap-server CentOSA:9092,CentOSB:9092,CentOSC:9092 \
--describe \
--group g1

TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-ID

topic01 1 0 0 0 consumer-1-** /192.168.52.130 consumer-1

topic01 0 0 0 0 consumer-1-** /192.168.52.130 consumer-1

topic01 2 1 1 0 consumer-1-** /192.168.52.130 consumer-1
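The LAG column is LOG-END-OFFSET minus CURRENT-OFFSET for each partition. A minimal sketch (the ConsumerLag class is hypothetical) that sums the lag from the text printed by kafka-consumer-groups.sh --describe, skipping the header and any partition without a committed offset (shown as -):

```java
// Hypothetical helper: total lag of a consumer group, computed from the
// plain-text output of kafka-consumer-groups.sh --describe.
class ConsumerLag {
    static long totalLag(String describeOutput) {
        long total = 0;
        for (String line : describeOutput.split("\n")) {
            String[] f = line.trim().split("\\s+");
            if (f.length < 4 || f[0].equals("TOPIC")) {
                continue; // blank line or header row
            }
            if (f[2].equals("-") || f[3].equals("-")) {
                continue; // no committed offset for this partition yet
            }
            long currentOffset = Long.parseLong(f[2]);
            long logEndOffset = Long.parseLong(f[3]);
            total += logEndOffset - currentOffset; // same value as the LAG column
        }
        return total;
    }
}
```

For the output above it returns 0: group g1 has consumed every message written to topic01.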

Produce

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-console-producer.sh \
--broker-list CentOSA:9092,CentOSB:9092,CentOSC:9092 \
--topic topic01

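That covers the basic Kafka operations. Next, connect Flume to Kafka.

First create a topic whose name matches the topic configured in the Flume file (a1.sinks.sk1.kafka.topic = topic01). A replication factor of 3 requires at least three brokers; on a single node use 1.

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-topics.sh \
--bootstrap-server CentOSA:9092 \
--create \
--topic topic01 \
--partitions 3 \
--replication-factor 3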

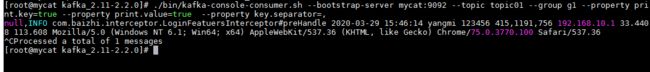

Then subscribe to the topic, run the Spring Boot project, and check that the log lines arrive in the consumer.

[root@CentOSA kafka_2.11-2.2.0]# ./bin/kafka-console-consumer.sh \
--bootstrap-server CentOSA:9092 \
--topic topic01 \
--group g1 \
--property print.key=true \
--property print.value=true \
--property key.separator=,