Collecting MySQL Slow Query Logs with ELK

Collecting MySQL slow logs with ELK 6.6.1 + Filebeat 6.6.1

I. A brief introduction to ELK

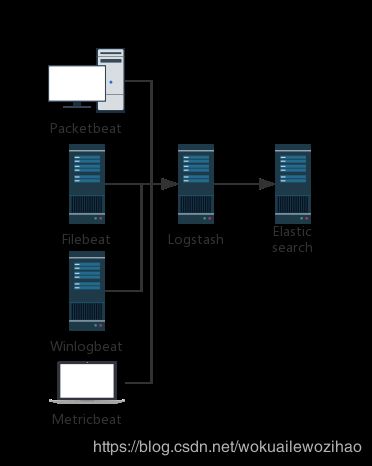

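1. What used to be called ELK has been consolidated by Elastic into the Elastic Stack (https://www.elastic.co/cn/products). The official architecture is shown in the figure above. Beats is a family of lightweight data shippers; in ELK, E stands for Elasticsearch, L for Logstash, and K for Kibana.

For the description and features of each product, see the official site.

2. Common deployment architectures

a. Single Logstash. Logstash acts as the log collector: it reads from the data source, filters and formats the data, and hands it to Elasticsearch for storage. Kibana visualizes the logs.

b. Logstash on multiple nodes. This architecture suits cases where only a few clients need log collection and each server has plenty of CPU and memory, because installing Logstash on every node is resource-hungry. Logstash on each node sends that node's data to Elasticsearch for storage, and Kibana handles visualization and analysis.

c. Beats are a family of lightweight log shippers that use very few system resources, and they quickly reshaped the original ELK architecture after their release. Filebeat sends the collected data to Logstash for parsing and filtering, and the link between Filebeat and Logstash can be secured with SSL authentication. Logstash then sends the data to Elasticsearch for storage, and Kibana visualizes it.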

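II. Installation and deployment: collecting and analyzing the MySQL slow log

To keep things simple, the test environment uses a single server, so every component runs on the same machine. My environment:

| Item | Value |
| --- | --- |
| OS | CentOS 7 |
| MySQL | 5.6 |
| JDK | 1.8 |
| ELK + Filebeat | 6.6.1 |
| Static IP | 192.168.8.254 |

Installing Elasticsearch and Kibana is not covered here; the focus is on the Filebeat and Logstash configuration.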

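1. First, enable the slow log in MySQL 5.6.

Method 1: change the settings from the mysql client. This is temporary and is lost when MySQL restarts. Note that SET GLOBAL long_query_time only applies to connections opened afterwards, which is likely why the current session below still reports its earlier value (0.1) when queried with show variables.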

mysql> set global slow_query_log='ON';

Query OK, 0 rows affected (0.00 sec)

mysql> set global slow_query_log_file='/home/mysql5.6/mysql-slow.log';

Query OK, 0 rows affected (0.00 sec)

mysql> set global long_query_time=1;

Query OK, 0 rows affected (0.00 sec)

mysql> show variables like 'slow_query%';

+---------------------+-------------------------------+

| Variable_name | Value |

+---------------------+-------------------------------+

| slow_query_log | ON |

| slow_query_log_file | /home/mysql5.6/mysql-slow.log |

+---------------------+-------------------------------+

2 rows in set (0.00 sec)

mysql> show variables like 'long_query_time';

+-----------------+----------+

| Variable_name | Value |

+-----------------+----------+

| long_query_time | 0.100000 |

+-----------------+----------+

1 row in set (0.00 sec)

Method 2: permanent change

Edit the configuration file /etc/my.cnf and add the following:

[mysqld]

slow_query_log = ON

slow_query_log_file = /home/mysql5.6/mysql-slow.log

long_query_time = 1

After editing, run: systemctl restart mysqld
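Before relying on the restart, the three settings can be sanity-checked offline. The following is only a sketch: it assumes the fragment is simple enough for Python's `configparser` (no `!include` or `!includedir` directives), and the helper name `slow_log_settings` is made up for this illustration.

```python
import configparser

# The [mysqld] fragment from this article, as it would appear in /etc/my.cnf.
MY_CNF = """\
[mysqld]
slow_query_log = ON
slow_query_log_file = /home/mysql5.6/mysql-slow.log
long_query_time = 1
"""


def slow_log_settings(text):
    """Return the slow-log related settings found in a my.cnf-style text."""
    # allow_no_value tolerates bare options such as "skip-name-resolve";
    # interpolation=None keeps "%" characters in values literal.
    parser = configparser.ConfigParser(allow_no_value=True, interpolation=None)
    parser.read_string(text)
    section = parser["mysqld"]
    return {
        "enabled": section.get("slow_query_log", fallback="OFF").upper() in ("ON", "1"),
        "file": section.get("slow_query_log_file", fallback=None),
        # MySQL's built-in default for long_query_time is 10 seconds.
        "threshold_seconds": section.getfloat("long_query_time", fallback=10.0),
    }


if __name__ == "__main__":
    print(slow_log_settings(MY_CNF))
```

The authoritative check is still the `show variables` query against the running server; this only catches typos in the file.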

Log in to MySQL again and verify with the following command:

mysql> show variables like 'slow_query%';

+---------------------+-------------------------------+

| Variable_name | Value |

+---------------------+-------------------------------+

| slow_query_log | ON |

| slow_query_log_file | /home/mysql5.6/mysql-slow.log |

+---------------------+-------------------------------+

2 rows in set (0.00 sec)

For newer versions such as MySQL 5.7 and MySQL 8.0: 5.7 should behave the same as 5.6; for MySQL 8.0, see this article: https://blog.csdn.net/phker/article/details/83146676

To verify, run a statement: select sleep(1);

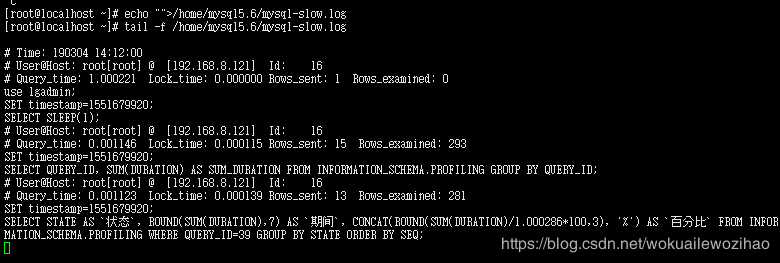

The test succeeds. The log produced by running this one statement looks like this:

# Time: 190304 14:12:00

# User@Host: root[root] @ [192.168.8.121] Id: 16

# Query_time: 1.000221 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 0

use lgadmin;

SET timestamp=1551679920;

SELECT SLEEP(1);

# User@Host: root[root] @ [192.168.8.121] Id: 16

# Query_time: 0.001146 Lock_time: 0.000115 Rows_sent: 15 Rows_examined: 293

SET timestamp=1551679920;

SELECT QUERY_ID, SUM(DURATION) AS SUM_DURATION FROM INFORMATION_SCHEMA.PROFILING GROUP BY QUERY_ID;

# User@Host: root[root] @ [192.168.8.121] Id: 16

# Query_time: 0.001123 Lock_time: 0.000139 Rows_sent: 13 Rows_examined: 281

SET timestamp=1551679920;

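SELECT STATE AS `状态`, ROUND(SUM(DURATION),7) AS `期间`, CONCAT(ROUND(SUM(DURATION)/1.000286*100,3), '%') AS `百分比` FROM INFORMATION_SCHEMA.PROFILING WHERE QUERY_ID=39 GROUP BY STATE ORDER BY SEQ;

Pay close attention to the log format. The slow log format differs slightly between MySQL versions, and both Filebeat and Logstash have to match against it.

As the sample shows, the "# Time:" line is of little use. Each record is a group that starts with "# User@Host: ..." followed by "# Query_time: ...", so running this one slow statement produced three log records.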
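One reason the "# Time:" line can be dropped safely is that the "SET timestamp=" line in every record carries the same moment as a UNIX timestamp. The sketch below checks this for the sample above. It assumes the server clock is UTC+8 (the Filebeat startup log later in this article reports timezone CST with an offset of 28800 seconds); the function names are made up for this illustration.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the MySQL server writes "# Time:" in local time, UTC+8.
SERVER_TZ = timezone(timedelta(hours=8))


def header_to_unix(header_line):
    """Convert a MySQL 5.6 '# Time: YYMMDD HH:MM:SS' header to UNIX seconds."""
    stamp = header_line.split(": ", 1)[1]
    parsed = datetime.strptime(stamp, "%y%m%d %H:%M:%S").replace(tzinfo=SERVER_TZ)
    return int(parsed.timestamp())


def set_timestamp_to_unix(line):
    """Extract the integer from a 'SET timestamp=...;' line."""
    return int(line.split("=", 1)[1].rstrip(";"))


if __name__ == "__main__":
    from_header = header_to_unix("# Time: 190304 14:12:00")
    from_set = set_timestamp_to_unix("SET timestamp=1551679920;")
    print(from_header, from_set, from_header == from_set)
```

Both lines resolve to the same second, so nothing is lost by keeping only the "SET timestamp=" value.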

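2. Install the Elasticsearch plugins that the Filebeat modules depend on

# Restart Elasticsearch after the plugins are installed

cd /home/elasticsearch-6.6.1/bin

./elasticsearch-plugin install ingest-geoip

./elasticsearch-plugin install ingest-user-agent

3. Install and configure Filebeat 6.6.1

// Download Filebeat. The latest version when this article was published was 6.6.1; keep all Elastic Stack products on the same version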

[root@localhost home]# curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.6.1-linux-x86_64.tar.gz

// Extract the archive

[root@localhost home]# tar -zvxf filebeat-6.6.1-linux-x86_64.tar.gz

// Enter the filebeat-6.6.1-linux-x86_64 directory

[root@localhost home]# cd filebeat-6.6.1-linux-x86_64/

[root@localhost filebeat-6.6.1-linux-x86_64]# ls

data filebeat filebeat.yml LICENSE.txt module NOTICE.txt

fields.yml filebeat.reference.yml kibana logs modules.d README.md

[root@localhost filebeat-6.6.1-linux-x86_64]# vi filebeat.yml

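// Then edit filebeat.yml as follows. It has been trimmed down and can be copied as is:

# Note: Filebeat reads log files line by line, so lines often need to be excluded or merged. Filebeat handles both with regular expressions.
# Beats mainly collect data and have limited processing ability; filtering is usually done with Logstash filters.
filebeat.inputs:
- type: log
  # The paths below are only collected when this is true. It is left off here because Filebeat
  # ships ready-made modules (nginx, apache, mysql and others) in the module directory.
  enabled: false
  # Log files to collect; multiple entries are allowed
  paths:
    #- /home/mysql5.6/mysql-slow.log
    #- c:\programdata\elasticsearch\logs\*
  # Drop unwanted lines with a regex, for example DBG lines
  #exclude_lines: ['^DBG']
  # Keep only matching lines, for example ERR and WARN
  #include_lines: ['^ERR', '^WARN']
  # Exclude files. When a path ends in /* it covers every file in the directory, and some of them may not be wanted
  #exclude_files: ['.gz$']
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
setup.template.settings:
  index.number_of_shards: 3
setup.kibana:
# Send output to Logstash
output.logstash:
  # Address of the Logstash server
  hosts: ["192.168.8.254:5044"]
processors:
  - add_host_metadata: ~
  - add_cloud_metadata: ~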

Save the file, then edit the bundled mysql module:

[root@localhost filebeat-6.6.1-linux-x86_64]# cd module/mysql/

[root@localhost mysql]# ls

error module.yml slowlog

[root@localhost mysql]# cd slowlog/

[root@localhost slowlog]# ls

config ingest manifest.yml

[root@localhost slowlog]# vi manifest.yml

In manifest.yml, only the path of the MySQL slow log needs to change:

module_version: "1.0"
var:
  - name: paths
    default:
      # The MySQL slow log path set in step 1
      - /home/mysql5.6/mysql-slow.log
ingest_pipeline: ingest/pipeline.json
input: config/slowlog.yml

Save the file, then edit ingest/pipeline.json

[root@localhost slowlog]# cd ingest/

[root@localhost ingest]# ls

pipeline.json

[root@localhost ingest]#vi pipeline.json

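This file is fairly long, but only one place needs to change. Set patterns to:

"patterns":[

"^# User@Host: %{USER:mysql.slowlog.user}(\\[[^\\]]+\\])? @ %{HOSTNAME:mysql.slowlog.host} \\[(%{IP:mysql.slowlog.ip})?\\](\\s*Id:\\s* %{NUMBER:mysql.slowlog.id})?\n# Query_time: %{NUMBER:mysql.slowlog.query_time.sec}\\s* Lock_time: %{NUMBER:mysql.slowlog.lock_time.sec}\\s* Rows_sent: %{NUMBER:mysql.slowlog.rows_sent}\\s* Rows_examined: %{NUMBER:mysql.slowlog.rows_examined}\n(SET timestamp=%{NUMBER:mysql.slowlog.timestamp};\n)?%{GREEDYMULTILINE:mysql.slowlog.query}"

]

Note: patterns holds grok expressions (regular expressions) that must match the log format. The slow log format varies between MySQL versions, so the pattern may need adjusting. Also note that this setup sends output to Logstash rather than directly to Elasticsearch, so Filebeat does not load this ingest pipeline (see the warning in the Filebeat startup log further below); here the actual field extraction is done by the Logstash grok filter configured in step 4.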
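A detail that is easy to trip over when editing pipeline.json: the pattern is a JSON string, so every regex backslash is written twice and `\n` stands for a real newline. The sketch below decodes a shortened pattern to show what the grok processor actually receives. The shortened field names (`user`, `ip`, `query_time`) and the helper `decoded_pattern` are only for illustration and are not the names used by the module.

```python
import json

# A shortened "patterns" entry exactly as it would be typed in pipeline.json.
# The raw string keeps the doubled backslashes and the \n escape untouched.
FILE_TEXT = r'{"patterns": ["^# User@Host: %{USER:user}(\\[[^\\]]+\\])? @ \\[(%{IP:ip})?\\]\n# Query_time: %{NUMBER:query_time}"]}'


def decoded_pattern(file_text):
    """Return the first pattern after JSON decoding, i.e. what grok sees."""
    return json.loads(file_text)["patterns"][0]


if __name__ == "__main__":
    pattern = decoded_pattern(FILE_TEXT)
    # Doubled backslashes collapse to single ones, and \n becomes a line break.
    print(pattern)
```

When copying a pattern between pipeline.json and a Logstash config, the backslashes therefore have to be halved or doubled accordingly.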

Save the file, then edit ../config/slowlog.yml

[root@localhost ingest]# cd ../config/

[root@localhost config]# ls

slowlog.yml

[root@localhost config]#vi slowlog.yml

# Add '^# Time' to the multiline pattern and to exclude_lines on the last line, so that the line starting with "# Time" in the log format above is ignored
type: log
paths:
{{ range $i, $path := .paths }}
 - {{$path}}
{{ end }}
exclude_files: ['.gz$']
multiline:
  pattern: '^# Time|^# User@Host: '
  negate: true
  match: after
exclude_lines: ['^[\/\w\.]+, Version: .* started with:.*', '^# Time'] # Exclude the header
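To make the effect of these settings concrete, here is a small Python emulation. It is a sketch of the documented behavior, not Filebeat's implementation: with `negate: true` and `match: after`, a line that matches the pattern starts a new event and every other line is appended to the current one; `exclude_lines` is then applied to the combined events. The function names are made up for this illustration.

```python
import re


def combine_multiline(lines, pattern):
    """Emulate multiline with negate: true and match: after."""
    start = re.compile(pattern)
    events = []
    for line in lines:
        if start.search(line) or not events:
            events.append(line)           # a matching line opens a new event
        else:
            events[-1] += "\n" + line     # other lines join the current event
    return events


def drop_excluded(events, exclude_patterns):
    """Emulate exclude_lines, applied after the lines have been combined."""
    compiled = [re.compile(p) for p in exclude_patterns]
    return [e for e in events if not any(c.search(e) for c in compiled)]


PATTERN = r"^# Time|^# User@Host: "
EXCLUDE = [r"^[\/\w\.]+, Version: .* started with:.*", r"^# Time"]
LINES = [
    "# Time: 190304 14:12:00",
    "# User@Host: root[root] @ [192.168.8.121] Id: 16",
    "# Query_time: 1.000221 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 0",
    "use lgadmin;",
    "SET timestamp=1551679920;",
    "SELECT SLEEP(1);",
    "# User@Host: root[root] @ [192.168.8.121] Id: 16",
    "# Query_time: 0.001146 Lock_time: 0.000115 Rows_sent: 15 Rows_examined: 293",
    "SET timestamp=1551679920;",
    "SELECT QUERY_ID, SUM(DURATION) AS SUM_DURATION FROM INFORMATION_SCHEMA.PROFILING GROUP BY QUERY_ID;",
]

if __name__ == "__main__":
    for event in drop_excluded(combine_multiline(LINES, PATTERN), EXCLUDE):
        print(repr(event))
```

Because "# Time" is part of the multiline pattern, that line becomes an event of its own instead of being glued to the previous query, and the `'^# Time'` entry in exclude_lines then discards it.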

Save the changes, then enable the mysql module that was just modified:

[root@localhost config]# cd /home/filebeat-6.6.1-linux-x86_64/

[root@localhost filebeat-6.6.1-linux-x86_64]# ./filebeat modules enable mysql

Enabled mysql

// List the enabled modules

[root@localhost filebeat-6.6.1-linux-x86_64]# ./filebeat modules list

Enabled:

mysql

Disabled:

apache2

auditd

elasticsearch

haproxy

icinga

iis

kafka

kibana

logstash

mongodb

nginx

osquery

postgresql

redis

suricata

system

traefik

// Filebeat can now be tested

// PS: everything configured in the module could also be placed directly in filebeat.yml, but that is harder to manage

To test Filebeat, run:

[root@localhost filebeat-6.6.1-linux-x86_64]# ./filebeat -e -c filebeat.yml -d "publish"

2019-03-04T14:29:53.589+0800 INFO instance/beat.go:616 Home path: [/home/filebeat-6.6.1-linux-x86_64] Config path: [/home/filebeat-6.6.1-linux-x86_64] Data path: [/home/filebeat-6.6.1-linux-x86_64/data] Logs path: [/home/filebeat-6.6.1-linux-x86_64/logs]

2019-03-04T14:29:53.590+0800 INFO instance/beat.go:623 Beat UUID: e07be1a2-2174-46e5-aa0b-f636b56a3d30

2019-03-04T14:29:53.590+0800 INFO [seccomp] seccomp/seccomp.go:116 Syscall filter successfully installed

2019-03-04T14:29:53.590+0800 INFO [beat] instance/beat.go:936 Beat info {"system_info": {"beat": {"path": {"config": "/home/filebeat-6.6.1-linux-x86_64", "data": "/home/filebeat-6.6.1-linux-x86_64/data", "home": "/home/filebeat-6.6.1-linux-x86_64", "logs": "/home/filebeat-6.6.1-linux-x86_64/logs"}, "type": "filebeat", "uuid": "e07be1a2-2174-46e5-aa0b-f636b56a3d30"}}}

2019-03-04T14:29:53.590+0800 INFO [beat] instance/beat.go:945 Build info {"system_info": {"build": {"commit": "928f5e3f35fe28c1bd73513ff1cc89406eb212a6", "libbeat": "6.6.1", "time": "2019-02-13T16:12:26.000Z", "version": "6.6.1"}}}

2019-03-04T14:29:53.590+0800 INFO [beat] instance/beat.go:948 Go runtime info {"system_info": {"go": {"os":"linux","arch":"amd64","max_procs":4,"version":"go1.10.8"}}}

2019-03-04T14:29:53.591+0800 INFO [beat] instance/beat.go:952 Host info {"system_info": {"host": {"architecture":"x86_64","boot_time":"2019-02-28T14:39:30+08:00","containerized":true,"name":"localhost.localdomain","ip":["127.0.0.1/8","::1/128","192.168.8.106/24","fe80::872c:6ca:3666:3f88/64","192.168.8.254/22","fe80::aae2:b334:2fd9:6f63/64","192.168.122.1/24"],"kernel_version":"3.10.0-862.14.4.el7.x86_64","mac":["50:9a:4c:77:88:91","50:9a:4c:77:88:92","52:54:00:01:54:80","52:54:00:01:54:80"],"os":{"family":"redhat","platform":"centos","name":"CentOS Linux","version":"7 (Core)","major":7,"minor":5,"patch":1804,"codename":"Core"},"timezone":"CST","timezone_offset_sec":28800,"id":"808d566509d84563b4db09739872d798"}}}

2019-03-04T14:29:53.591+0800 INFO [beat] instance/beat.go:981 Process info {"system_info": {"process": {"capabilities": {"inheritable":null,"permitted":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend"],"effective":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend"],"bounding":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend"],"ambient":null}, "cwd": "/home/filebeat-6.6.1-linux-x86_64", "exe": "/home/filebeat-6.6.1-linux-x86_64/filebeat", "name": "filebeat", "pid": 8650, "ppid": 3937, "seccomp": {"mode":"filter"}, "start_time": "2019-03-04T14:29:52.860+0800"}}}

2019-03-04T14:29:53.591+0800 INFO instance/beat.go:281 Setup Beat: filebeat; Version: 6.6.1

2019-03-04T14:29:56.592+0800 INFO add_cloud_metadata/add_cloud_metadata.go:319 add_cloud_metadata: hosting provider type not detected.

2019-03-04T14:29:56.593+0800 DEBUG [publish] pipeline/consumer.go:137 start pipeline event consumer

2019-03-04T14:29:56.593+0800 INFO [publisher] pipeline/module.go:110 Beat name: localhost.localdomain

2019-03-04T14:29:56.593+0800 INFO [monitoring] log/log.go:117 Starting metrics logging every 30s

2019-03-04T14:29:56.593+0800 INFO instance/beat.go:403 filebeat start running.

2019-03-04T14:29:56.594+0800 INFO registrar/registrar.go:134 Loading registrar data from /home/filebeat-6.6.1-linux-x86_64/data/registry

2019-03-04T14:29:56.594+0800 INFO registrar/registrar.go:141 States Loaded from registrar: 12

2019-03-04T14:29:56.594+0800 WARN beater/filebeat.go:367 Filebeat is unable to load the Ingest Node pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the Ingest Node pipelines or are using Logstash pipelines, you can ignore this warning.

2019-03-04T14:29:56.594+0800 INFO crawler/crawler.go:72 Loading Inputs: 1

2019-03-04T14:29:56.597+0800 INFO log/input.go:138 Configured paths: [/var/log/mysql/error.log* /var/log/mysqld.log*]

2019-03-04T14:29:56.598+0800 INFO log/input.go:138 Configured paths: [/home/mysql5.6/mysql-slow.log]

2019-03-04T14:29:56.598+0800 INFO crawler/crawler.go:106 Loading and starting Inputs completed. Enabled inputs: 0

2019-03-04T14:29:56.598+0800 INFO cfgfile/reload.go:150 Config reloader started

2019-03-04T14:29:56.600+0800 INFO log/input.go:138 Configured paths: [/var/log/mysql/error.log* /var/log/mysqld.log*]

2019-03-04T14:29:56.600+0800 INFO log/input.go:138 Configured paths: [/home/mysql5.6/mysql-slow.log]

2019-03-04T14:29:56.600+0800 INFO input/input.go:114 Starting input of type: log; ID: 4895000611580543503

2019-03-04T14:29:56.600+0800 INFO input/input.go:114 Starting input of type: log; ID: 17864741715548327178

2019-03-04T14:29:56.600+0800 INFO cfgfile/reload.go:205 Loading of config files completed.

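2019-03-04T14:29:56.643+0800 INFO log/harvester.go:255 Harvester started for file: /home/mysql5.6/mysql-slow.log

Logstash has not been started yet, so a connection error will appear; ignore it for now. As long as events are printed to the screen, Filebeat is working. Run the same statement again, select sleep(1); the screen prints the following: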

2019-03-04T14:33:05.834+0800 DEBUG [publish] pipeline/processor.go:308 Publish event: {

"@timestamp": "2019-03-04T06:33:05.834Z",

"@metadata": {

"beat": "filebeat",

"type": "doc",

"version": "6.6.1",

"pipeline": "filebeat-6.6.1-mysql-slowlog-pipeline"

},

"offset": 25,

"log": {

"file": {

"path": "/home/mysql5.6/mysql-slow.log"

},

"flags": [

"multiline"

]

},

"event": {

"dataset": "mysql.slowlog"

},

"input": {

"type": "log"

},

"host": {

"name": "localhost.localdomain",

"architecture": "x86_64",

"os": {

"platform": "centos",

"version": "7 (Core)",

"family": "redhat",

"name": "CentOS Linux",

"codename": "Core"

},

"id": "808d566509d84563b4db09739872d798",

"containerized": true

},

"message": "# User@Host: root[root] @ [192.168.8.121] Id: 16\n# Query_time: 1.000215 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 0\nSET timestamp=1551681183;\nSELECT SLEEP(1);",

"source": "/home/mysql5.6/mysql-slow.log",

"fileset": {

"module": "mysql",

"name": "slowlog"

},

"prospector": {

"type": "log"

},

"beat": {

"version": "6.6.1",

"name": "localhost.localdomain",

"hostname": "localhost.localdomain"

}

}

2019-03-04T14:33:05.835+0800 DEBUG [publish] pipeline/processor.go:308 Publish event: {

"@timestamp": "2019-03-04T06:33:05.834Z",

"@metadata": {

"beat": "filebeat",

"type": "doc",

"version": "6.6.1",

"pipeline": "filebeat-6.6.1-mysql-slowlog-pipeline"

},

"beat": {

"hostname": "localhost.localdomain",

"version": "6.6.1",

"name": "localhost.localdomain"

},

"host": {

"architecture": "x86_64",

"os": {

"version": "7 (Core)",

"family": "redhat",

"name": "CentOS Linux",

"codename": "Core",

"platform": "centos"

},

"id": "808d566509d84563b4db09739872d798",

"containerized": true,

"name": "localhost.localdomain"

},

"source": "/home/mysql5.6/mysql-slow.log",

"message": "# User@Host: root[root] @ [192.168.8.121] Id: 16\n# Query_time: 0.001150 Lock_time: 0.000097 Rows_sent: 15 Rows_examined: 282\nSET timestamp=1551681183;\nSELECT QUERY_ID, SUM(DURATION) AS SUM_DURATION FROM INFORMATION_SCHEMA.PROFILING GROUP BY QUERY_ID;",

"input": {

"type": "log"

},

"fileset": {

"module": "mysql",

"name": "slowlog"

},

"event": {

"dataset": "mysql.slowlog"

},

"prospector": {

"type": "log"

},

"offset": 197,

"log": {

"file": {

"path": "/home/mysql5.6/mysql-slow.log"

},

"flags": [

"multiline"

]

}

}

2019-03-04T14:33:10.835+0800 DEBUG [publish] pipeline/processor.go:308 Publish event: {

"@timestamp": "2019-03-04T06:33:05.835Z",

"@metadata": {

"beat": "filebeat",

"type": "doc",

"version": "6.6.1",

"pipeline": "filebeat-6.6.1-mysql-slowlog-pipeline"

},

"offset": 455,

"log": {

"file": {

"path": "/home/mysql5.6/mysql-slow.log"

},

"flags": [

"multiline"

]

},

"source": "/home/mysql5.6/mysql-slow.log",

"event": {

"dataset": "mysql.slowlog"

},

"prospector": {

"type": "log"

},

"input": {

"type": "log"

},

"fileset": {

"module": "mysql",

"name": "slowlog"

},

"message": "# User@Host: root[root] @ [192.168.8.121] Id: 16\n# Query_time: 0.001018 Lock_time: 0.000137 Rows_sent: 13 Rows_examined: 278\nSET timestamp=1551681183;\nSELECT STATE AS `状态`, ROUND(SUM(DURATION),7) AS `期间`, CONCAT(ROUND(SUM(DURATION)/1.000268*100,3), '%') AS `百分比` FROM INFORMATION_SCHEMA.PROFILING WHERE QUERY_ID=46 GROUP BY STATE ORDER BY SEQ;",

"beat": {

"name": "localhost.localdomain",

"hostname": "localhost.localdomain",

"version": "6.6.1"

},

"host": {

"os": {

"family": "redhat",

"name": "CentOS Linux",

"codename": "Core",

"platform": "centos",

"version": "7 (Core)"

},

"id": "808d566509d84563b4db09739872d798",

"containerized": true,

"name": "localhost.localdomain",

"architecture": "x86_64"

}

}

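This shows that Filebeat is configured and running correctly.

4. Install and configure Logstash 6.6.1

// Download Logstash. The latest version when this article was published was 6.6.1; keep all Elastic Stack products on the same version

[root@localhost home]# curl -L -O https://artifacts.elastic.co/downloads/logstash/logstash-6.6.1.tar.gz

// Extract the archive

[root@localhost home]# tar -zvxf logstash-6.6.1.tar.gz

[root@localhost home]# cd logstash-6.6.1/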

[root@localhost logstash-6.6.1]# ls

bin CONTRIBUTORS Gemfile lib logs logstash-core-plugin-api NOTICE.TXT vendor

config data Gemfile.lock LICENSE.txt logstash-core modules tools x-pack

[root@localhost logstash-6.6.1]# cd config/

[root@localhost config]# ls

jvm.options logstash.yml pipelines.yml

log4j2.properties logstash-sample.conf startup.options

// Create a new configuration file

[root@localhost config]# vi mysql-stdout.conf

# Sample Logstash configuration for creating a simple
# Beats -> Logstash -> Elasticsearch pipeline.
input {
  beats {
    port => 5044
  }
}
# Filter and clean the events sent by Filebeat: split out the key fields such as User, Host, Query_time, Lock_time and timestamp
filter {
  grok {
    match => [ "message", "^#\s+User@Host:\s+%{USER:user}\[[^\]]+\]\s+@\s+(?:(?<clienthost>\S*) )?\[(?:%{IP:clientip})?\]\s+Id:\s+%{NUMBER:id}\n# Query_time: %{NUMBER:query_time}\s+Lock_time: %{NUMBER:lock_time}\s+Rows_sent: %{NUMBER:rows_sent}\s+Rows_examined: %{NUMBER:rows_examined}\nSET\s+timestamp=%{NUMBER:timestamp_mysql};\n(?<query>[\s\S]*)"
    ]
  }
  date {
    # The field named here must be the one captured by grok (timestamp_mysql);
    # otherwise the date filter is skipped and @timestamp keeps the time the event was read.
    match => ["timestamp_mysql", "UNIX", "YYYY-MM-dd HH:mm:ss"]
    target => "@timestamp"
    timezone => "Asia/Chongqing"
  }
  mutate {
    remove_field => ["@version","message","timestamp_mysql"]
  }
}
# Output to Elasticsearch
output {
  elasticsearch {
    hosts => ["192.168.8.254:9200"]
    # Name of the index in Elasticsearch. One index per day here; it could also be split by month
    index => "mysql-slow-%{+YYYY.MM.dd}"
  }
}
# Also print to the screen, which makes verification easier
output { stdout {} }

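Save and exit. Once more, pay special attention to the message match in grok: this is where the message field sent by Filebeat is parsed. If your MySQL version is not 5.6, check the format of its slow log first and adjust the regular expression accordingly.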
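When adapting the grok expression to another MySQL version, it can help to try it outside Logstash first. The sketch below is a plain Python approximation of the pattern, not grok itself: `%{USER}`, `%{IP}` and `%{NUMBER}` are replaced with simplified character classes, and the group name `clienthost` for the optional host name is an assumption. The field names otherwise follow the Logstash output shown in this article.

```python
import re

# Simplified Python counterpart of the grok expression in the Logstash filter.
SLOWLOG_RE = re.compile(
    r"^#\s+User@Host:\s+(?P<user>[a-zA-Z0-9._-]+)\[[^\]]+\]\s+@\s+"
    r"(?:(?P<clienthost>\S*) )?\[(?P<clientip>[0-9a-fA-F.:]*)\]\s+Id:\s+(?P<id>\d+)\n"
    r"# Query_time: (?P<query_time>[\d.]+)\s+Lock_time: (?P<lock_time>[\d.]+)\s+"
    r"Rows_sent: (?P<rows_sent>\d+)\s+Rows_examined: (?P<rows_examined>\d+)\n"
    r"SET\s+timestamp=(?P<timestamp_mysql>\d+);\n(?P<query>[\s\S]*)"
)


def parse_slowlog(message):
    """Return the extracted fields, or None when the message does not match."""
    match = SLOWLOG_RE.match(message)
    if match is None:
        return None
    fields = match.groupdict()
    # grok captures are strings unless a type is requested; convert them here.
    for name in ("query_time", "lock_time"):
        fields[name] = float(fields[name])
    for name in ("id", "rows_sent", "rows_examined", "timestamp_mysql"):
        fields[name] = int(fields[name])
    return fields


# The message field of the first event published by Filebeat above.
MESSAGE = (
    "# User@Host: root[root] @ [192.168.8.121] Id: 16\n"
    "# Query_time: 1.000215 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 0\n"
    "SET timestamp=1551681183;\n"
    "SELECT SLEEP(1);"
)

if __name__ == "__main__":
    print(parse_slowlog(MESSAGE))
```

One thing this makes visible: the expression expects "SET timestamp=" directly after the "# Query_time" line. A record that contains a "use lgadmin;" line in between, as the first record in the raw log sample does, does not match this expression, so it may be worth extending the pattern if such records matter.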

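The first attempt below was started from /home/logstash-6.6.1, where mysql-stdout.conf does not exist, so Logstash reports "No config files found". The second attempt, run from the config directory, succeeds.

[root@localhost logstash-6.6.1]# bin/logstash -f mysql-stdout.conf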

Sending Logstash logs to /home/logstash-6.6.1/logs which is now configured via log4j2.properties

[2019-03-04T15:09:14,077][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2019-03-04T15:09:14,086][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.6.1"}

[2019-03-04T15:09:14,530][INFO ][logstash.config.source.local.configpathloader] No config files found in path {:path=>"/home/logstash-6.6.1/mysql-stdout.conf"}

[2019-03-04T15:09:14,535][ERROR][logstash.config.sourceloader] No configuration found in the configured sources.

[2019-03-04T15:09:14,703][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

[root@localhost logstash-6.6.1]# cd config/

[root@localhost config]# ../bin/logstash -f mysql-stdout.conf

Sending Logstash logs to /home/logstash-6.6.1/logs which is now configured via log4j2.properties

[2019-03-04T15:10:28,792][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2019-03-04T15:10:28,802][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.6.1"}

[2019-03-04T15:10:33,896][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2019-03-04T15:10:34,195][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://192.168.8.254:9200/]}}

[2019-03-04T15:10:34,324][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://192.168.8.254:9200/"}

[2019-03-04T15:10:34,357][INFO ][logstash.outputs.elasticsearch] ES Output version determined {:es_version=>6}

[2019-03-04T15:10:34,360][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>6}

[2019-03-04T15:10:34,388][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//192.168.8.254:9200"]}

[2019-03-04T15:10:34,394][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2019-03-04T15:10:34,407][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

[2019-03-04T15:10:34,709][INFO ][logstash.inputs.beats ] Beats inputs: Starting input listener {:address=>"0.0.0.0:5044"}

[2019-03-04T15:10:34,733][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#"}

[2019-03-04T15:10:34,778][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2019-03-04T15:10:34,789][INFO ][org.logstash.beats.Server] Starting server on port: 5044

[2019-03-04T15:10:34,943][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

Logstash started successfully. To verify, run a statement again: select sleep(1); then wait a moment and check whether the data is printed to the screen:

{

"log" => {

"file" => {

"path" => "/home/mysql5.6/mysql-slow.log"

},

"flags" => [

[0] "multiline"

]

},

"query" => "SELECT SLEEP(1);",

"@timestamp" => 2019-03-04T07:13:15.891Z,

"user" => "root",

"prospector" => {

"type" => "log"

},

"source" => "/home/mysql5.6/mysql-slow.log",

"fileset" => {

"name" => "slowlog",

"module" => "mysql"

},

"offset" => 844,

"rows_sent" => "1",

"lock_time" => "0.000000",

"event" => {

"dataset" => "mysql.slowlog"

},

"clientip" => "192.168.8.121",

"query_time" => "1.000223",

"rows_examined" => "0",

"beat" => {

"version" => "6.6.1",

"name" => "localhost.localdomain",

"hostname" => "localhost.localdomain"

},

"input" => {

"type" => "log"

},

"host" => {

"os" => {

"version" => "7 (Core)",

"family" => "redhat",

"codename" => "Core",

"platform" => "centos",

"name" => "CentOS Linux"

},

"containerized" => true,

"name" => "localhost.localdomain",

"architecture" => "x86_64",

"id" => "808d566509d84563b4db09739872d798"

},

"id" => "16",

"tags" => [

[0] "beats_input_codec_plain_applied"

]

}

{

"log" => {

"file" => {

"path" => "/home/mysql5.6/mysql-slow.log"

},

"flags" => [

[0] "multiline"

]

},

"query" => "SELECT QUERY_ID, SUM(DURATION) AS SUM_DURATION FROM INFORMATION_SCHEMA.PROFILING GROUP BY QUERY_ID;",

"@timestamp" => 2019-03-04T07:13:15.891Z,

"user" => "root",

"prospector" => {

"type" => "log"

},

"fileset" => {

"name" => "slowlog",

"module" => "mysql"

},

"source" => "/home/mysql5.6/mysql-slow.log",

"offset" => 1016,

"rows_sent" => "15",

"lock_time" => "0.000097",

"event" => {

"dataset" => "mysql.slowlog"

},

"clientip" => "192.168.8.121",

"query_time" => "0.001123",

"rows_examined" => "279",

"beat" => {

"version" => "6.6.1",

"name" => "localhost.localdomain",

"hostname" => "localhost.localdomain"

},

"input" => {

"type" => "log"

},

"host" => {

"os" => {

"platform" => "centos",

"version" => "7 (Core)",

"codename" => "Core",

"family" => "redhat",

"name" => "CentOS Linux"

},

"containerized" => true,

"name" => "localhost.localdomain",

"architecture" => "x86_64",

"id" => "808d566509d84563b4db09739872d798"

},

"id" => "16",

"tags" => [

[0] "beats_input_codec_plain_applied"

]

}

{

"log" => {

"file" => {

"path" => "/home/mysql5.6/mysql-slow.log"

},

"flags" => [

[0] "multiline"

]

},

"query" => "SELECT STATE AS `状态`, ROUND(SUM(DURATION),7) AS `期间`, CONCAT(ROUND(SUM(DURATION)/1.000283*100,3), '%') AS `百分比` FROM INFORMATION_SCHEMA.PROFILING WHERE QUERY_ID=53 GROUP BY STATE ORDER BY SEQ;",

"@timestamp" => 2019-03-04T07:13:15.893Z,

"user" => "root",

"prospector" => {

"type" => "log"

},

"source" => "/home/mysql5.6/mysql-slow.log",

"fileset" => {

"name" => "slowlog",

"module" => "mysql"

},

"offset" => 1274,

"rows_sent" => "13",

"lock_time" => "0.000127",

"event" => {

"dataset" => "mysql.slowlog"

},

"clientip" => "192.168.8.121",

"query_time" => "0.000997",

"rows_examined" => "281",

"beat" => {

"version" => "6.6.1",

"name" => "localhost.localdomain",

"hostname" => "localhost.localdomain"

},

"input" => {

"type" => "log"

},

"host" => {

"os" => {

"platform" => "centos",

"version" => "7 (Core)",

"codename" => "Core",

"family" => "redhat",

"name" => "CentOS Linux"

},

"containerized" => true,

"name" => "localhost.localdomain",

"architecture" => "x86_64",

"id" => "808d566509d84563b4db09739872d798"

},

"id" => "16",

"tags" => [

[0] "beats_input_codec_plain_applied"

]

}

Verification succeeded. There are three records in total, and in each of them user, query, clientip, query_time and the other fields have been extracted.

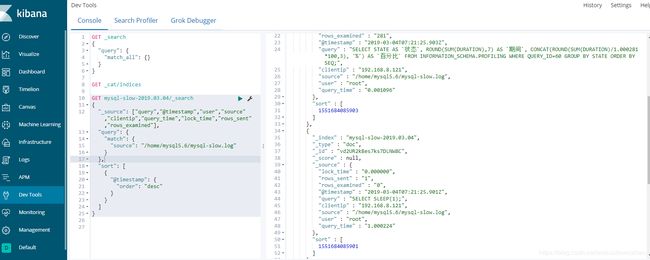

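5. Open Kibana and verify that Elasticsearch contains the data

OK, the key fields of the MySQL slow log are all there. Once enough entries accumulate, they can be used for analysis.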

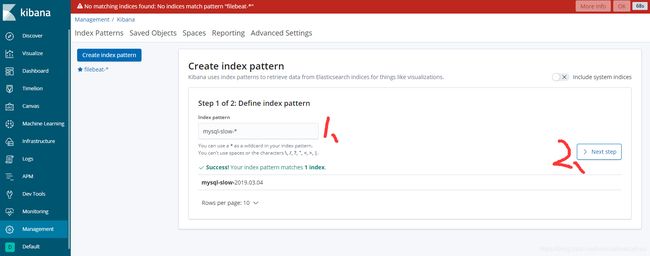

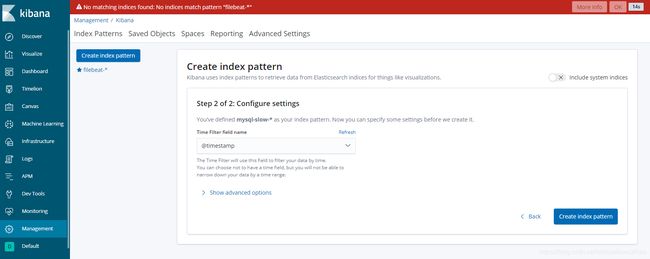

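A side note: Discover in Kibana only works with index patterns. For example, if the indices to analyze are named mysql-slow-*, add an index pattern for them under Management.

Back in Discover, the index pattern can then be selected.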
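The daily index names come from the `index => "mysql-slow-%{+YYYY.MM.dd}"` setting, which formats the event's @timestamp in UTC. The sketch below reproduces the naming for a given UNIX timestamp and checks it against the `mysql-slow-*` pattern. The helper name `daily_index` is made up, and `fnmatchcase` is used here only as a stand-in for Kibana's wildcard matching.

```python
from datetime import datetime, timezone
from fnmatch import fnmatchcase


def daily_index(unix_seconds, prefix="mysql-slow-"):
    """Build the index name the way %{+YYYY.MM.dd} would: from the UTC date."""
    stamp = datetime.fromtimestamp(unix_seconds, tz=timezone.utc)
    return prefix + stamp.strftime("%Y.%m.%d")


if __name__ == "__main__":
    # 1551679920 is the SET timestamp value from the slow log sample
    # (2019-03-04 14:12:00 local time, UTC+8).
    name = daily_index(1551679920)
    print(name, fnmatchcase(name, "mysql-slow-*"))

    # An event at 07:30 local time on the same day is still 2019-03-03 in UTC,
    # so it lands in the previous day's index.
    print(daily_index(1551655800))
```

This UTC boundary is worth keeping in mind when a query that ran early in the morning (local time) shows up in the index for the previous day.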

All done!