Tensorflow2.0学习笔记(八)Resnet

目录

1 退化问题?

2 ResNet的残差学习单元(Residual Unit)

3 Resnet18

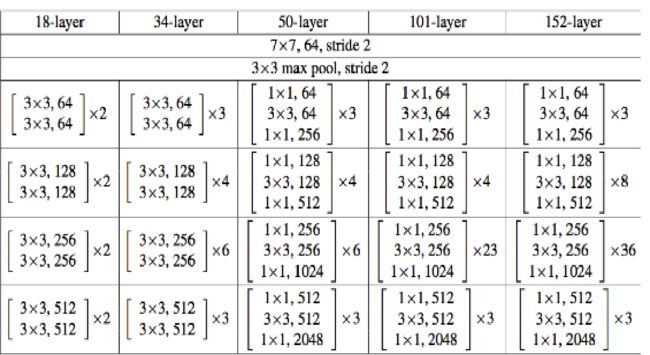

4 Resnet在不同层数时的网络配置

1 退化问题?

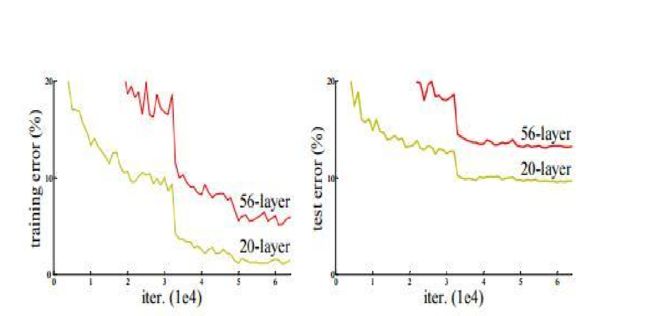

在不断增加神经网络的深度时,会出现一个退化的问题:

准确率会先上升然后达到饱和,再持续增加网络的深度则会导致准确率下降。

这并不是过拟合的问题,原因是不光在测试集上误差增大,训练集本身误差也会增大。

2 ResNet的残差学习单元(Residual Unit)

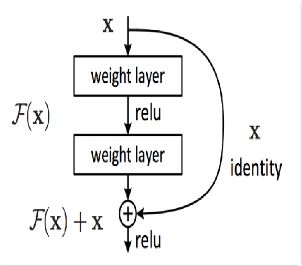

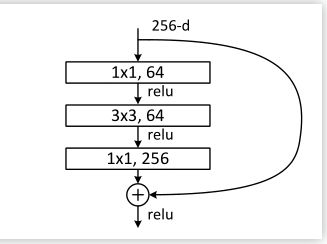

这个Residual block通过shortcut connection实现,通过shortcut将这个block的输入和输出进行加叠,这个简单的加法并不会给网络增加额外的参数和计算量,同时却可以大大增加模型的训练速度、提高训练效果,并且当模型的层数加深时,这个简单的结构能够很好的解决退化问题。

class BasicBlock(layers.Layer):

def __init__(self, filter_num, stride=1):

super(BasicBlock, self).__init__()

self.conv1 = layers.Conv2D(filter_num, (3, 3), strides=stride, padding='same')

self.bn1 = layers.BatchNormalization()

self.relu = layers.Activation('relu')

self.conv2 = layers.Conv2D(filter_num, (3, 3), strides=1, padding='same')

self.bn2 = layers.BatchNormalization()

if stride != 1:

self.downsample = Sequential()

self.downsample.add(layers.Conv2D(filter_num, (1, 1), strides=stride))

else:

self.downsample = lambda x: x

def call(self, inputs, training=None):

out = self.conv1(inputs)

out = self.bn1(out, training=training)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out, training=training)

identity = self.downsample(inputs)

output = layers.add([out, identity])

output = tf.nn.relu(output)

return output3 Resnet18

class ResNet(keras.Model):

def __init__(self, layer_dims, num_classes=2):

super(ResNet, self).__init__()

self.stem = Sequential([layers.Conv2D(64, (3, 3), strides=(1, 1)),

layers.BatchNormalization(),

layers.Activation('relu'),

layers.MaxPool2D(pool_size=(2, 2), strides=(1, 1), padding='same')

])

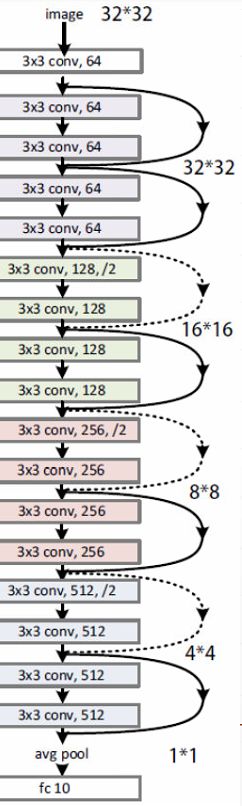

self.layer1 = self.build_resblock(64, layer_dims[0])

self.layer2 = self.build_resblock(128, layer_dims[1], stride=2)

self.layer3 = self.build_resblock(256, layer_dims[2], stride=2)

self.layer4 = self.build_resblock(512, layer_dims[3], stride=2)

self.avgpool = layers.GlobalAveragePooling2D()

self.fc = layers.Dense(num_classes)

def call(self, inputs, training=None):

x = self.stem(inputs, training=training)

x = self.layer1(x, training=training)

x = self.layer2(x, training=training)

x = self.layer3(x, training=training)

x = self.layer4(x, training=training)

x = self.avgpool(x)

x = self.fc(x)

return x

def build_resblock(self, filter_num, blocks, stride=1):

res_blocks = Sequential()

res_blocks.add(BasicBlock(filter_num, stride))

for _ in range(1, blocks):

res_blocks.add(BasicBlock(filter_num, stride=1))

return res_blocks

def resnet18():

return ResNet([2, 2, 2, 2])