Kubernetes High-Availability Cluster (3): Building the k8s Cluster

Environment:

| Server IP | Hostname | Installed software |
| --- | --- | --- |
| 192.168.1.101 | etcd-node01 | etcd/k8s_master/flannel/docker-ce |
| 192.168.1.102 | etcd-node02 | etcd/k8s_master/flannel/docker-ce |
| 192.168.1.103 | etcd-node03 | etcd/k8s_master/flannel/docker-ce |
| 192.168.1.104(105) | nginx-proxy | Nginx |
| 192.168.10.156 | docker-node01 | K8s_node01/flannel/docker-ce |
| 192.168.10.159 | docker-node09 | K8s_node02/flannel/docker-ce |

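Preface: the previous two articles installed the etcd cluster and set up the flannel network and the Docker environment. This part installs Kubernetes.

Before continuing, make sure etcd, flannel, and docker are all working properly; if they are not, fix those problems first.

Kubernetes binary package download: https://dl.k8s.io/v1.13.2/kubernetes-server-linux-amd64.tar.gz. This package contains every component needed by both master and node.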

I. Deploy components on the k8s_master nodes

1. Create the certificates

1.1 Create and generate the CA certificate

# cat ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

# cat ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Shenzhen",

"ST": "Shenzhen",

"O": "k8s",

"OU": "System"

}

]

}

#cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

1.2 Create and generate the apiserver certificate

# cat apiserver-csr.json

{

"CN": "kubernetes",

"hosts": [

"172.0.0.1",

"127.0.0.1",

"192.168.1.101",

"192.168.1.102",

"192.168.1.103",

"192.168.1.104",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Shenzhen",

"ST": "Shenzhen",

"O": "k8s",

"OU": "System"

}

]

}

#cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes apiserver-csr.json | cfssljson -bare apiserver
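A certificate that is missing an address clients connect to will fail TLS verification later, so it can help to check the `hosts` list before signing. The following is a minimal sketch, not part of the original procedure: `check_hosts` is a hypothetical helper that only greps for quoted strings in the CSR file, and the address list passed to it is taken from the environment table above.

```shell
#!/bin/sh
# check_hosts FILE HOST...
# Hypothetical helper: reports every HOST that does not appear as a quoted
# string in FILE. Returns 0 if all are present, 1 otherwise.
check_hosts() {
    file=$1
    shift
    missing=0
    for h in "$@"; do
        if ! grep -qF "\"$h\"" "$file"; then
            echo "missing from $file: $h"
            missing=1
        fi
    done
    return "$missing"
}

# Only run the check when the CSR file from step 1.2 is in the current directory.
if [ -f apiserver-csr.json ]; then
    check_hosts apiserver-csr.json \
        127.0.0.1 192.168.1.101 192.168.1.102 192.168.1.103 192.168.1.104 \
        && echo "all expected hosts present"
fi
```

This is a plain text match rather than a JSON parse, so it is only a quick sanity check before running cfssl.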

1.3 Create and generate the kube-proxy certificate

# cat kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Shenzhen",

"ST": "Shenzhen",

"O": "k8s",

"OU": "System"

}

]

}

#cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

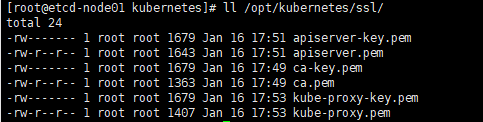

1.4最终生成如下证书文件

2、部署apiserver组件

2.1创建token文件

#head -c 16 /dev/urandom | od -An -t x | tr -d ' '

Write the generated token value into the token.csv file

#cat /opt/kubernetes/cfg/token.csv

56eac0727192ad56dad58c24e4f40eb8,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

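Note: column 1 is the random string generated by the head command above

Column 2 is the user name

Column 3 is the UID

Column 4 is the user group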
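The two steps above (generate a token, then write the four-column line) can be combined into one script. This is a sketch under stated assumptions: `TOKEN_FILE` is a hypothetical variable that defaults to the current directory, whereas the article stores the file at /opt/kubernetes/cfg/token.csv.

```shell
#!/bin/sh
# TOKEN_FILE is a hypothetical override; point it at
# /opt/kubernetes/cfg/token.csv on a real master.
TOKEN_FILE="${TOKEN_FILE:-./token.csv}"

# 16 random bytes rendered as 32 hexadecimal characters.
BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')

# Columns: token, user name, UID, user group.
printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' \
    "$BOOTSTRAP_TOKEN" > "$TOKEN_FILE"

# The token is a credential, so restrict access to the file owner.
chmod 600 "$TOKEN_FILE"
cat "$TOKEN_FILE"
```

The same `BOOTSTRAP_TOKEN` value has to be used later when the bootstrap kubeconfig is created.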

2.2 Deploy apiserver

#tar -zxvf kubernetes-server-linux-amd64.tar.gz

#cd kubernetes/server/bin

#cp kube-apiserver kube-scheduler kube-controller-manager kubectl /opt/kubernetes/bin

2.3 Create the apiserver configuration file

#cat /opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTIONS="--logtostderr=true \

--v=4 \

--bind-address=192.168.1.101 \

--secure-port=6443 \

--advertise-address=192.168.1.101 \

--allow-privileged=true \

--service-cluster-ip-range=172.0.0.0/16 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=10000-65500 \

--tls-cert-file=/opt/kubernetes/ssl/apiserver.pem \

--tls-private-key-file=/opt/kubernetes/ssl/apiserver-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-servers=https://192.168.1.101:2379,https://192.168.1.102:2379,https://192.168.1.103:2379 \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

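Parameter notes:

▲--logtostderr=true writes logs to stderr; --v=4 sets the log verbosity level

▲--bind-address listen address

▲--secure-port HTTPS secure port

▲--advertise-address address advertised to the rest of the cluster

▲--allow-privileged allows privileged containers

▲--service-cluster-ip-range virtual IP range for Services

▲--enable-admission-plugins admission control plugins

▲--authorization-mode authorization modes; enables RBAC and Node authorization

▲--enable-bootstrap-token-auth enables bootstrap token authentication for TLS bootstrapping

▲--token-auth-file path to the token file

▲--service-node-port-range port range allocated to NodePort Services

▲--etcd-servers etcd cluster endpoints

2.4 Manage apiserver with systemd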

# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver

ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTIONS

Restart=on-failure

RestartSec=3

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

3. Deploy the scheduler component

3.1 Create the scheduler configuration file

#cat /opt/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTIONS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

--leader-elect=true"

3.2 Manage the scheduler with systemd

# cat /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler

ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTIONS

Restart=on-failure

RestartSec=3

[Install]

WantedBy=multi-user.target

4. Deploy the controller-manager component

4.1 Create the controller-manager configuration file

# cat /opt/kubernetes/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTIONS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

--leader-elect=true \

--address=127.0.0.1 \

--service-cluster-ip-range=172.0.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \

--root-ca-file=/opt/kubernetes/ssl/ca.pem "

4.2 Manage controller-manager with systemd

# cat /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager

ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTIONS

Restart=on-failure

RestartSec=3

[Install]

WantedBy=multi-user.target

5. Start the k8s_master components

#systemctl daemon-reload

#systemctl enable kube-apiserver

#systemctl enable kube-scheduler

#systemctl enable kube-controller-manager

#systemctl start kube-apiserver

#systemctl start kube-scheduler

#systemctl start kube-controller-manager

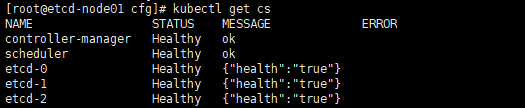

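6. Check the current cluster status with kubectl

The other master nodes are deployed the same way; set --bind-address and --advertise-address to each node's own IP.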

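II. Deploy components on the k8s_node nodes

Because the master apiserver has TLS authentication enabled, the kubelet on a Node must use a valid certificate issued by the CA to communicate with the apiserver before it can join the cluster.

Note: perform steps 1, 2, and 3 below on any one master node

1. Bind the kubelet-bootstrap user to the system cluster role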

#kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

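2. Create the kubelet bootstrap kubeconfig file

Run the following commands in /opt/kubernetes/ssl, the directory where the Kubernetes certificates were generated, to create the kubeconfig files:

2.1 Specify the apiserver internal load balancer address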

#KUBE_APISERVER="https://192.168.1.104:6443"

#BOOTSTRAP_TOKEN=56eac0727192ad56dad58c24e4f40eb8

2.2 Set the cluster parameters

#kubectl config set-cluster kubernetes --certificate-authority=./ca.pem --embed-certs=true --server=${KUBE_APISERVER} --kubeconfig=bootstrap.kubeconfig

2.3 Set the client authentication parameters

#kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=bootstrap.kubeconfig

2.4 Set the context parameters

#kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig

2.5 Set the default context

#kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
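Steps 2.2 through 2.5 always run together, so they can be wrapped in a single function. The sketch below is an illustration, not the author's script: `make_bootstrap_kubeconfig` and the `KUBECTL` override are hypothetical names, and the override exists only so the commands can be dry-run with `KUBECTL=echo` before touching a real file.

```shell
#!/bin/sh
# KUBECTL is a hypothetical override; set KUBECTL=echo to print the
# commands instead of running them.
KUBECTL="${KUBECTL:-kubectl}"

# make_bootstrap_kubeconfig SERVER TOKEN OUTFILE
# Runs the four "kubectl config" steps in order and stops at the first failure.
# Expects ca.pem in the current directory, as in the article.
make_bootstrap_kubeconfig() {
    server=$1
    token=$2
    out=$3
    $KUBECTL config set-cluster kubernetes \
        --certificate-authority=./ca.pem --embed-certs=true \
        --server="$server" --kubeconfig="$out" &&
    $KUBECTL config set-credentials kubelet-bootstrap \
        --token="$token" --kubeconfig="$out" &&
    $KUBECTL config set-context default \
        --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig="$out" &&
    $KUBECTL config use-context default --kubeconfig="$out"
}

# Example call with the values from steps 2.1 (left commented out on purpose):
# make_bootstrap_kubeconfig "$KUBE_APISERVER" "$BOOTSTRAP_TOKEN" bootstrap.kubeconfig
```

Chaining with `&&` means a failed `set-cluster` (for example, a missing ca.pem) does not leave a half-written kubeconfig being treated as complete.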

3. Create the kube-proxy kubeconfig file

3.1 Set the cluster parameters

#kubectl config set-cluster kubernetes --certificate-authority=./ca.pem --embed-certs=true --server=${KUBE_APISERVER} --kubeconfig=kube-proxy.kubeconfig

3.2 Set the client authentication parameters

#kubectl config set-credentials kube-proxy --client-key=./kube-proxy-key.pem --client-certificate=./kube-proxy.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

3.3 Set the context parameters

#kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

3.4 Set the default context

#kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

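After these three steps, two new kubeconfig files appear in /opt/kubernetes/ssl on that master node: bootstrap.kubeconfig and kube-proxy.kubeconfig. Copy both files to /opt/kubernetes/cfg on each Node.

4. Deploy the kubelet component on the Node

Copy kubelet and kube-proxy from the binary package downloaded earlier into /opt/kubernetes/bin

4.1 Create the kubelet configuration file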

# cat /opt/kubernetes/cfg/kubelet

KUBELET_OPTIONS="--logtostderr=true --v=4 --hostname-override=192.168.10.156 \

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \

--config=/opt/kubernetes/cfg/kubelet.config \

--cert-dir=/opt/kubernetes/ssl \

--pod-infra-container-image=192.168.10.154/library/pause-amd64:3.1"

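Parameter notes:

▲--hostname-override host name shown in the cluster

▲--kubeconfig location of the kubeconfig file; the file is generated automatically

▲--bootstrap-kubeconfig the bootstrap.kubeconfig file generated earlier

▲--cert-dir directory where issued certificates are stored

▲--pod-infra-container-image base container image that manages the Pod network

The contents of /opt/kubernetes/cfg/kubelet.config are as follows: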

# cat /opt/kubernetes/cfg/kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 192.168.10.156

port: 10250

readOnlyPort: 10255

cgroupDriver: cgroupfs

clusterDNS: ["172.0.0.2"]

clusterDomain: cluster.local.

failSwapOn: false

authentication:

  anonymous:

    enabled: true

  webhook:

    enabled: false

4.2 Manage the kubelet with systemd

# cat /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kubelet

ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTIONS

Restart=on-failure

KillMode=process

RestartSec=15s

[Install]

WantedBy=multi-user.target

5. Deploy the kube-proxy component on the Node

5.1 Create the kube-proxy configuration file

# cat /opt/kubernetes/cfg/kube-proxy

KUBE_PROXY_OPTIONS="--logtostderr=true --v=4 \

--hostname-override=192.168.10.156 \

--cluster-cidr=172.0.0.0/16 \

--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"

5.2 Manage kube-proxy with systemd

# cat /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy

ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTIONS

Restart=on-failure

[Install]

WantedBy=multi-user.target

5.3 Start the k8s_node components

#systemctl daemon-reload

#systemctl enable kubelet

#systemctl enable kube-proxy

#systemctl start kubelet

#systemctl start kube-proxy

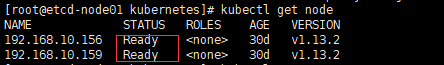

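6. After the kubelet starts, approve the Node on the master

After it starts, the node has not yet joined the cluster; it has to be approved manually.

On a master node, list the Nodes that have requested a certificate signature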

#kubectl get csr

#kubectl certificate approve XXXXID

#kubectl get node
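When several Nodes join at once, copying each request ID by hand gets tedious. The following sketch approves every pending request in one pass. It assumes that the status is the last column of the `kubectl get csr` table and that the first row is a header; `pending_csr_names`, `approve_pending`, and the `KUBECTL` override are hypothetical names introduced for illustration.

```shell
#!/bin/sh
# KUBECTL is a hypothetical override, useful for dry runs (KUBECTL=echo).
KUBECTL="${KUBECTL:-kubectl}"

# pending_csr_names: reads "kubectl get csr" output on stdin and prints the
# name (first column) of each row whose last column is exactly "Pending".
# Assumes the first line is a header row.
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# approve_pending: approves every CSR that is still pending.
approve_pending() {
    $KUBECTL get csr | pending_csr_names | while read -r name; do
        $KUBECTL certificate approve "$name"
    done
}

# Run on a master node (left commented out on purpose):
# approve_pending
```

Approving everything blindly is only reasonable on a closed network where every requesting kubelet is known; otherwise review the list from `kubectl get csr` first.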

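III. Configure Master high availability

Master HA really means apiserver HA. The other master components, controller-manager and scheduler, already handle this themselves through leader election (--leader-elect). The apiserver is designed to scale horizontally, so making it highly available is straightforward: put a load balancer in front of it that distributes requests round-robin.

In this setup, for example, 192.168.1.104 runs nginx as the load balancer. The nginx configuration is as follows: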

# cat /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.1.101:6443;

server 192.168.1.102:6443;

server 192.168.1.103:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}
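If masters are added or removed, the upstream block has to list the new set of apiservers. As a small illustration, the block can be generated from a list of IPs instead of being edited by hand. `gen_upstream` is a hypothetical helper, and the upstream name is copied from the configuration above.

```shell
#!/bin/sh
# gen_upstream PORT IP...
# Hypothetical helper: prints an nginx "upstream" block with one server
# line per master IP, all on the same port.
gen_upstream() {
    port=$1
    shift
    echo "upstream k8s-apiserver {"
    for ip in "$@"; do
        echo "    server $ip:$port;"
    done
    echo "}"
}

# The three masters from the environment table.
gen_upstream 6443 192.168.1.101 192.168.1.102 192.168.1.103
```

After changing the configuration, `nginx -t` checks the syntax before nginx is reloaded.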

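For real high availability, add a second nginx load balancer node and combine keepalived with nginx. When generating the apiserver certificate, add the load balancer IP address (the VIP once keepalived is in place) to apiserver-csr.json. To avoid unnecessary problems, it is best to add both the real IP addresses of the nginx nodes and the VIP.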