Intel OpenVINO Deep Learning Framework: Installation Notes for Ubuntu 16.04

Overview

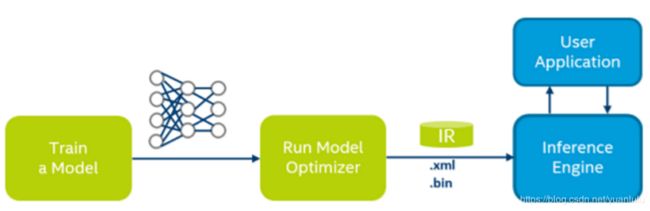

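OpenVINO is Intel's deep learning toolkit. At its core it is an inference engine that supports a range of Intel hardware (CPU, integrated GPU, FPGA, and Movidius VPU).

The toolkit does not do training itself, but it can convert model files trained in other deep learning frameworks (such as Caffe, TensorFlow, and MXNet) into a format it supports.

OpenVINO therefore consists of two parts (the source tree on GitHub is split into the same two directories):

- Inference Engine: runs inference, taking a model file and producing results.

- Model Optimizer: converts model files from other frameworks into the intermediate format that OpenVINO supports (Intermediate Representation, IR for short).

From what I have read, the toolkit supports Ubuntu*, CentOS*, Yocto* OS, and Windows, and even the official Raspberry Pi OS. I expect that a Raspberry Pi running Ubuntu could also use the Neural Compute Stick 1/2.

In my view OpenVINO's biggest advantage is the large set of pretrained models it ships with; if your requirements are modest you can use them as they are. See Pretrained Models in the references.

This post mainly covers installing from the GitHub sources.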

Introduction to Model Optimizer

Model Optimizer performs model format conversion along with some optimizations. The supported input file formats are:

- caffemodel - Caffe models

- pb - TensorFlow models

- params - MXNet models

- onnx - ONNX models

- nnet - Kaldi models.
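The list above can be read as a lookup from file extension to source framework. Here is a minimal sketch of that lookup. The helper name `guess_framework` is hypothetical, and the returned strings are assumed to match the values accepted by mo.py's `--framework` option, so check `mo.py --help` for your version.

```python
import os

# File extension -> framework name, following the list above.
EXT_TO_FRAMEWORK = {
    ".caffemodel": "caffe",
    ".pb": "tf",
    ".params": "mxnet",
    ".onnx": "onnx",
    ".nnet": "kaldi",
}


def guess_framework(model_path):
    """Return the framework suggested by the file extension, or None."""
    ext = os.path.splitext(model_path)[1].lower()
    return EXT_TO_FRAMEWORK.get(ext)


print(guess_framework("./model/frozen_graph.pb"))
```

An extension is only a hint; if a file uses an unusual name, pass the framework to the conversion script explicitly.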

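Model Optimizer has two purposes:

- Produce a valid IR (.xml and .bin files): this step must succeed before the Inference Engine can run.

- Optimize the model: some operators used in training (such as dropout) serve no purpose at inference time and are removed. Some groups of layers are equivalent to a single mathematical operation, so those layers are merged.

Technical description of the specific optimizations: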

- BatchNormalization and ScaleShift decomposition: on this stage, BatchNormalization layer is decomposed to Mul → Add → Mul → Add sequence, and ScaleShift layer is decomposed to Mul → Add layers sequence.

- Linear operations merge: on this stage, we merge sequences of Mul and Add operations to the single Mul → Add instance.

For example, if we have BatchNormalization → ScaleShift sequence in our topology, it is replaced with Mul → Add (by the first stage). On the next stage, the latter will be replaced with ScaleShift layer if we have no available Convolution or FullyConnected layer to fuse into (next).

- Linear operations fusion: on this stage, the tool fuses Mul and Add operations to Convolution or FullyConnected layers. Notice that it searches for Convolution and FullyConnected layers both backward and forward in the graph (except for Add operation that cannot be fused to Convolution layer in forward direction).
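The three stages can be checked numerically on a toy case. The sketch below is not Model Optimizer code: it uses scalar parameters, a single-output FullyConnected layer, made-up values, and an assumed epsilon. It also collapses BatchNormalization straight into one Mul → Add pair rather than the four-step sequence described above.

```python
import math

EPS = 1e-5  # assumed BatchNormalization epsilon


def bn_to_mul_add(gamma, beta, mean, var):
    """Rewrite BatchNormalization as y = x * a + b (one Mul, one Add)."""
    a = gamma / math.sqrt(var + EPS)
    return a, beta - mean * a


def merge_mul_add(a1, b1, a2, b2):
    """Merge (x * a1 + b1) * a2 + b2 into a single x * a + b."""
    return a1 * a2, b1 * a2 + b2


def fuse_into_fc(weights, bias, a, b):
    """Fuse a following Mul -> Add into a one-output FullyConnected layer."""
    return [w * a for w in weights], bias * a + b


# Reference computation: FullyConnected -> BatchNormalization -> ScaleShift.
x = [1.0, -2.0, 0.5]
weights, bias = [0.2, 0.4, -0.1], 0.3
gamma, beta, mean, var = 1.5, 0.1, 0.2, 4.0
scale, shift = 2.0, -1.0

fc = sum(w * xi for w, xi in zip(weights, x)) + bias
bn = gamma * (fc - mean) / math.sqrt(var + EPS) + beta
reference = bn * scale + shift

# Optimized computation: decompose, merge, then fuse into the FC weights.
a, b = bn_to_mul_add(gamma, beta, mean, var)
a, b = merge_mul_add(a, b, scale, shift)
fused_w, fused_b = fuse_into_fc(weights, bias, a, b)
optimized = sum(w * xi for w, xi in zip(fused_w, x)) + fused_b

print(abs(reference - optimized) < 1e-9)
```

After fusion only the FullyConnected layer is left, with rescaled weights and an adjusted bias, so the two extra layers cost nothing at inference time.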

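Some of these optimizations can be disabled with the corresponding options at conversion time. See the parameter documentation of the conversion script for details.

Installing model-optimizer

Preparing the environment

Prepare a clean 64-bit Ubuntu 16.04 system. All operations below are run as root.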

Refresh the package index, upgrade the installed packages, and install git:

$ apt-get update

$ apt-get upgrade

$ apt-get install git

Downloading the source

git clone https://github.com/opencv/dldt.git

Setting up the environment model-optimizer needs

cd dldt/model-optimizer/install_prerequisites/

Since everything runs as root, remove every 'sudo -E' from install_prerequisites.sh (do not delete the whole line, only the 'sudo -E' part), then run:

./install_prerequisites.sh

This script installs the various dependencies, including python, tensorflow, caffe, onnx, and so on.

Result:

Installing collected packages: six, protobuf, numpy, markdown, werkzeug, tensorboard, astor, gast, absl-py, grpcio, termcolor, tensorflow, graphviz, idna, certifi, chardet, urllib3, requests, mxnet, decorator, networkx, typing, typing-extensions, onnx

Successfully installed absl-py-0.7.0 astor-0.7.1 certifi-2018.11.29 chardet-3.0.4 decorator-4.3.0 gast-0.2.2 graphviz-0.10.1 grpcio-1.18.0 idna-2.8 markdown-3.0.1 mxnet-1.0.0 networkx-2.2 numpy-1.16.0 onnx-1.3.0 protobuf-3.5.1 requests-2.21.0 six-1.12.0 tensorboard-1.9.0 tensorflow-1.9.0 termcolor-1.1.0 typing-3.6.6 typing-extensions-3.7.2 urllib3-1.24.1 werkzeug-0.14.1

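A quick test of model-optimizer

Test it with the model generated in my earlier post "tensorflow 20: Building a Network, Exporting the Model, and Running the Model" (run one of the two commands below):

# Plain conversion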

$ python3 ./mo.py --input_model ./model/frozen_graph.pb --input_shape [1,28,28,1]

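# Convert the FP32 model to FP16, which halves the model size

$ python3 ./mo.py --input_model ./model/frozen_graph.pb --input_shape [1,28,28,1] --data_type FP16

After either command finishes, two files, frozen_graph.xml and frozen_graph.bin, are generated in the current directory. These two files are what the Inference Engine consumes.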
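Why FP16 halves the size can be seen with a few numbers: each value takes 2 bytes instead of 4, at the cost of a small rounding error. This is only a sketch with made-up weight values, and it assumes the .bin file consists mostly of raw weights. The `e` (half precision) format of `struct` needs Python 3.6 or later.

```python
import struct

weights = [0.1, -1.25, 3.0, 0.007]  # made-up example values

fp32_blob = struct.pack("<%df" % len(weights), *weights)  # 4 bytes per value
fp16_blob = struct.pack("<%de" % len(weights), *weights)  # 2 bytes per value

# Read the FP16 values back to see how much precision was lost.
restored = struct.unpack("<%de" % len(weights), fp16_blob)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(len(fp32_blob), len(fp16_blob))
print(max_error)
```

Whether that rounding error matters depends on the model, so it is worth comparing the outputs of the FP32 and FP16 IR on a few inputs.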

Installing inference-engine

Initializing git

Fetch the associated git submodules:

$ cd dldt/inference-engine/

$ git submodule init # initialize the submodules

$ git submodule update --recursive # update the submodules

Installing the dependency packages

Run install_dependencies.sh:

$ ./install_dependencies.sh

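If it complains that the sudo command is missing, just remove sudo from install_dependencies.sh.

Downloading and extracting MKL

Download the optimized MKL-ML* GEMM implementation from here.

Extract the archive. On my machine the extracted directory is /home/user/mklml_lnx_2019.0.1.20180928.

Building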

$ mkdir build

$ cd build

Choose one of the two build configurations below.

Without the Python bindings:

$ cmake -DCMAKE_BUILD_TYPE=Release -DGEMM=MKL -DMKLROOT=/home/user/mklml_lnx_2019.0.1.20180928 ..

$ make -j8

With the Python bindings:

$ pip3 install -r ../ie_bridges/python/requirements.txt

$ cmake -DCMAKE_BUILD_TYPE=Release -DGEMM=MKL -DMKLROOT=/home/user/mklml_lnx_2019.0.1.20180928 -DENABLE_PYTHON=ON -DPYTHON_EXECUTABLE=`which python3.5` -DPYTHON_LIBRARY=/usr/lib/x86_64-linux-gnu/libpython3.5m.so -DPYTHON_INCLUDE_DIR=/usr/include/python3.5 ..

$ make -j8

The built files are placed under dldt/inference-engine/bin/intel64/Release/:

# ls dldt/inference-engine/bin/intel64/Release/

InferenceEngineUnitTests hello_classification

benchmark_app hello_request_classification

calibration_tool hello_shape_infer_ssd

classification_sample lib

classification_sample_async object_detection_sample_ssd

hello_autoresize_classification style_transfer_sample

# ls dldt/inference-engine/bin/intel64/Release/lib/

cldnn_global_custom_kernels libformat_reader.so libinference_engine.so

libHeteroPlugin.so libgflags_nothreads.a libinference_engine_s.a

libMKLDNNPlugin.so libgmock.a libmkldnn.a

libclDNN64.so libgmock_main.a libmock_engine.so

libclDNNPlugin.so libgtest.a libpugixml.a

libcldnn_kernel_selector64.a libgtest_main.a libstb_image.a

libcpu_extension.so libhelpers.a libtest_MKLDNNPlugin.a

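At this point the build is essentially done, although it may seem that nothing is usable yet. Not to worry: the next step is to download the model zoo.

The OpenCV directory produced during the build is /home/user/dldt/inference-engine/temp/opencv_4.0.0_ubuntu/; it will be needed later.

Adding the Python path to the environment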

$ export PYTHONPATH=$PYTHONPATH:/home/user/dldt/inference-engine/bin/intel64/Release/lib/python_api/python3.5/openvino

Now openvino can be imported in Python, and the Python scripts under open_model_zoo/demos can be run as a test.
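A quick way to confirm that the path took effect is to try the import from the same shell. The sketch below only checks importability. The module names `openvino` and `openvino.inference_engine` are assumptions about how the bindings are laid out in this build, so adjust them if your tree differs.

```python
import importlib


def can_import(module_name):
    """Return True if the module can be imported in this interpreter."""
    try:
        importlib.import_module(module_name)
    except ImportError:
        return False
    return True


# Assumed module names; change them to match your build if needed.
for name in ("openvino", "openvino.inference_engine"):
    print(name, "OK" if can_import(name) else "not found, check PYTHONPATH")
```

If a module is reported as not found, check that PYTHONPATH was exported in the current shell and that the build was configured with -DENABLE_PYTHON=ON.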

Installing model_zoo

Downloading

$ git clone https://github.com/opencv/open_model_zoo

$ cd open_model_zoo/demos

Building the demos

Here the locations of the Inference Engine and OpenCV must be given through environment variables.

$ mkdir build

$ export InferenceEngine_DIR=/home/user/dldt/inference-engine/build

$ export OpenCV_DIR=/home/user/dldt/inference-engine/temp/opencv_4.0.0_ubuntu/cmake

$ cd build

$ cmake -DCMAKE_BUILD_TYPE=Release ../

$ make

Once the build completes, all binaries are in the intel64/Release directory under the current directory:

# ls /home/user/open_model_zoo/demos/build/intel64/Release

crossroad_camera_demo object_detection_demo

end2end_video_analytics_ie object_detection_demo_ssd_async

end2end_video_analytics_opencv object_detection_demo_yolov3_async

human_pose_estimation_demo pedestrian_tracker_demo

interactive_face_detection_demo security_barrier_camera_demo

lib segmentation_demo

mask_rcnn_demo smart_classroom_demo

multi-channel-demo super_resolution_demo

Downloading the models

First install the required Python packages:

$ cd ../../model_downloader/

$ pip3 install pyyaml requests

Then print the list of supported models:

# ./downloader.py --print_all

Please choose either "--all" or "--name"

usage: downloader.py [-h] [-c CONFIG] [--name NAME] [--all] [--print_all]

[-o OUTPUT_DIR]

optional arguments:

-h, --help show this help message and exit

-c CONFIG, --config CONFIG

path to YML configuration file

--name NAME names of topologies for downloading with comma

separation

--all download all topologies from the configuration file

--print_all print all available topologies

-o OUTPUT_DIR, --output_dir OUTPUT_DIR

path where to save topologies

list_topologies.yml - default configuration file

========== All available topologies ==========

densenet-121

densenet-161

densenet-169

densenet-201

squeezenet1.0

squeezenet1.1

mtcnn-p

mtcnn-r

mtcnn-o

mobilenet-ssd

vgg19

vgg16

ssd512

ssd300

inception-resnet-v2

dilation

googlenet-v1

googlenet-v2

googlenet-v4

alexnet

ssd_mobilenet_v2_coco

resnet-50

resnet-101

resnet-152

googlenet-v3

se-inception

se-resnet-101

se-resnet-152

se-resnet-50

se-resnext-50

se-resnext-101

Sphereface

license-plate-recognition-barrier-0007

age-gender-recognition-retail-0013

age-gender-recognition-retail-0013-fp16

emotions-recognition-retail-0003

emotions-recognition-retail-0003-fp16

face-detection-adas-0001

face-detection-adas-0001-fp16

face-detection-retail-0004

face-detection-retail-0004-fp16

face-person-detection-retail-0002

face-person-detection-retail-0002-fp16

face-reidentification-retail-0095

face-reidentification-retail-0095-fp16

facial-landmarks-35-adas-0001

facial-landmarks-35-adas-0001-fp16

head-pose-estimation-adas-0001

head-pose-estimation-adas-0001-fp16

human-pose-estimation-0001

human-pose-estimation-0001-fp16

landmarks-regression-retail-0009

landmarks-regression-retail-0009-fp16

license-plate-recognition-barrier-0001

license-plate-recognition-barrier-0001-fp16

pedestrian-and-vehicle-detector-adas-0001

pedestrian-and-vehicle-detector-adas-0001-fp16

pedestrian-detection-adas-0002

pedestrian-detection-adas-0002-fp16

person-attributes-recognition-crossroad-0200

person-attributes-recognition-crossroad-0200-fp16

person-detection-action-recognition-0004

person-detection-action-recognition-0004-fp16

person-detection-retail-0002

person-detection-retail-0002-fp16

person-detection-retail-0013

person-detection-retail-0013-fp16

person-reidentification-retail-0031

person-reidentification-retail-0031-fp16

person-reidentification-retail-0076

person-reidentification-retail-0076-fp16

person-reidentification-retail-0079

person-reidentification-retail-0079-fp16

person-vehicle-bike-detection-crossroad-0078

person-vehicle-bike-detection-crossroad-0078-fp16

road-segmentation-adas-0001

road-segmentation-adas-0001-fp16

semantic-segmentation-adas-0001

semantic-segmentation-adas-0001-fp16

single-image-super-resolution-0063

single-image-super-resolution-0063-fp16

single-image-super-resolution-1011

single-image-super-resolution-1011-fp16

single-image-super-resolution-1021

single-image-super-resolution-1021-fp16

text-detection-0001

text-detection-0001-fp16

vehicle-attributes-recognition-barrier-0039

vehicle-attributes-recognition-barrier-0039-fp16

vehicle-detection-adas-0002

vehicle-detection-adas-0002-fp16

vehicle-license-plate-detection-barrier-0106

vehicle-license-plate-detection-barrier-0106-fp16

You can also pick a single model; here I simply download everything:

$ ./downloader.py -o ../pretrained_models --all

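Without access to Google-hosted sites, some of the downloads will certainly fail. The exact URLs are all in the file list_topologies.yml. I removed every entry that downloads from a Google site and saved the result as a new file, china_list.yml. Then run again: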

$ ./downloader.py -o ../pretrained_models --all -c china_list.yml

Or download a single model by name:

$ ./downloader.py -o ../pretrained_models --name facial-landmarks-35-adas-0001
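The manual filtering that produced china_list.yml could also be scripted. The sketch below works on plain Python data and assumes each topology is a dict with a `name` and a list of `files`, each carrying a `url`. That layout, the blocked-domain list, and the sample entries are all illustrative assumptions, so check the real list_topologies.yml before adapting it.

```python
from urllib.parse import urlparse

BLOCKED_DOMAINS = ("google.com", "googleapis.com")  # assumption: adjust as needed


def is_blocked(url):
    """True if the URL's host is a blocked domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS)


def filter_topologies(topologies):
    """Keep only topologies none of whose files come from a blocked host."""
    return [
        t for t in topologies
        if not any(is_blocked(f["url"]) for f in t["files"])
    ]


# Hypothetical entries, not copied from the real configuration file.
sample = [
    {"name": "model-a", "files": [{"url": "https://example.com/a.caffemodel"}]},
    {"name": "model-b", "files": [{"url": "https://drive.google.com/uc?id=placeholder"}]},
]
print([t["name"] for t in filter_topologies(sample)])
```

To use it on the real file, load the YAML with pyyaml (installed earlier), filter the list, and write the result to the file passed with -c.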

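Testing

So far, all downloaded model files are in /home/user/open_model_zoo/pretrained_models. The compiled samples and executables are in the two directories /home/user/dldt/inference-engine/bin/intel64/Release/ and /home/user/open_model_zoo/demos/build/intel64/Release/. For usage, see the sample documentation in the Intel® Distribution of OpenVINO™ Toolkit Documentation.

For example, my tests: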

$ ./dldt/inference-engine/bin/intel64/Release/object_detection_sample_ssd -i ./test/1.jpg -m ./open_model_zoo/pretrained_models/Retail/object_detection/pedestrian/rmnet_ssd/0013/dldt/person-detection-retail-0013.xml -d CPU

[ INFO ] Image out_0.bmp created!

total inference time: 49.4939

Average running time of one iteration: 49.4939 ms

Throughput: 20.2045 FPS
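The throughput line follows directly from the average iteration time. The sketch below reproduces the figure, assuming a batch size of 1 (one image per iteration); the function name is illustrative.

```python
def throughput_fps(latency_ms, batch_size=1):
    """Images per second for a given per-iteration latency in milliseconds."""
    return batch_size * 1000.0 / latency_ms


print(round(throughput_fps(49.4939), 4))
```

With a larger batch the same formula applies, but the latency per iteration grows as well, so throughput does not scale linearly.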

$ ./open_model_zoo/demos/build/intel64/Release/interactive_face_detection_demo -i ./test/right.avi -m ./open_model_zoo/pretrained_models/Transportation/object_detection/face/pruned_mobilenet_reduced_ssd_shared_weights/dldt/face-detection-adas-0001.xml -m_ag ./open_model_zoo/pretrained_models/Retail/object_attributes/age_gender/dldt/age-gender-recognition-retail-0013.xml -m_hp ./open_model_zoo/pretrained_models/Transportation/object_attributes/headpose/vanilla_cnn/dldt/head-pose-estimation-adas-0001.xml -m_em ./open_model_zoo/pretrained_models/Retail/object_attributes/emotions_recognition/0003/dldt/emotions-recognition-retail-0003.xml -m_lm ./open_model_zoo/pretrained_models/Transportation/object_attributes/facial_landmarks/custom-35-facial-landmarks/dldt/facial-landmarks-35-adas-0001.xml -d CPU -pc -no_show -r

Pedestrian detection result (the boxes are quite tight):

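References

OpenVINO™ Toolkit official site

OpenVINO github

Documentation index

Install the Intel® Distribution of OpenVINO™ toolkit for Linux*

Model Optimizer Developer Guide

Inference Engine Developer Guide

Pretrained Models (these are impressive)

List of supported TensorFlow operators

tensorflow 20: Building a Network, Exporting the Model, and Running the Model

Intel® Distribution of OpenVINO™ Toolkit Documentation: sample documentation

Intel Open-Sources OpenVINO™: The Bundled Pretrained Models Are the Biggest Highlight

A Step-by-Step Guide to Deploying the yolov3-tiny Detection Model on NCS2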