Spring Boot and MyBatis with multiple data sources: integrating ODPS (MaxCompute)

1. Integrating ODPS into MyBatis as an additional data source

1.1 Add the configuration file

# datasource-saas

spring.datasource.names = odps

spring.datasource.type = com.alibaba.druid.pool.DruidDataSource

spring.datasource.driver-class-name = com.mysql.cj.jdbc.Driver

spring.datasource.url = jdbc:mysql://localhost:3306/test?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull&serverTimezone=GMT%2B8&allowMultiQueries=true

spring.datasource.username = test

spring.datasource.password = test

spring.datasource.max-total = 100

# datasource-odps

spring.datasource.odps.type = com.alibaba.druid.pool.DruidDataSource

spring.datasource.odps.driver-class-name = com.aliyun.odps.jdbc.OdpsDriver

spring.datasource.odps.url = jdbc:odps:http://10.0.0.0/api?project=test&characterEncoding=UTF-8

spring.datasource.odps.username = accessId

spring.datasource.odps.password = accessKey

spring.datasource.odps.druid.defaultAutoCommit = true

spring.datasource.odps.druid.initialSize = 5

spring.datasource.odps.druid.minIdle = 5

spring.datasource.odps.druid.maxActive = 20

spring.datasource.odps.druid.maxWait = 60000

spring.datasource.odps.druid.timeBetweenEvictionRunsMillis = 60000

spring.datasource.odps.druid.minEvictableIdleTimeMillis = 300000

spring.datasource.odps.druid.validationQuery = SELECT 1

spring.datasource.odps.druid.testWhileIdle = true

spring.datasource.odps.druid.testOnBorrow = false

spring.datasource.odps.druid.testOnReturn = false

spring.datasource.odps.druid.poolPreparedStatements = true

spring.datasource.odps.druid.maxPoolPreparedStatementPerConnectionSize = 20

spring.datasource.odps.druid.filters = stat,wall,log4j

spring.datasource.odps.druid.connectionProperties = druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000

spring.datasource.odps.druid.useGlobalDataSourceStat = true

1.2 Add the code configuration

Add four classes and one annotation interface:

package com.rada.framework.datasource;

import org.springframework.jdbc.datasource.lookup.AbstractRoutingDataSource;

public class DynamicDataSource extends AbstractRoutingDataSource {

@Override

protected Object determineCurrentLookupKey() {

return DynamicDataSourceContextHolder.getDataSourceType();

}

}
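
The routing idea behind DynamicDataSource can be illustrated without Spring. The following is a minimal sketch, assuming the default lenient behaviour of AbstractRoutingDataSource, where a missing or unknown key falls back to the default target. RoutingSketch and the pool names are hypothetical, and plain strings stand in for real DataSource objects.

```java
import java.util.HashMap;
import java.util.Map;

// Stand-in for the routing idea: look the current key up in a map of
// targets and fall back to a default when nothing matches.
// Strings replace real DataSource objects so the sketch runs without Spring.
class RoutingSketch {
    private final Map<String, String> targets = new HashMap<>();
    private final String defaultTarget;

    RoutingSketch(String defaultTarget) {
        this.defaultTarget = defaultTarget;
    }

    void register(String key, String target) {
        targets.put(key, target);
    }

    // A null key means "no data source was selected on this thread".
    String resolve(String key) {
        if (key == null) {
            return defaultTarget;
        }
        String target = targets.get(key);
        return target != null ? target : defaultTarget;
    }

    public static void main(String[] args) {
        RoutingSketch router = new RoutingSketch("mysql-pool");
        router.register("odps", "odps-pool");
        System.out.println(router.resolve("odps")); // odps-pool
        System.out.println(router.resolve(null));   // mysql-pool
    }
}
```

In the real class, the key comes from determineCurrentLookupKey() and the map is the targetDataSources property.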

package com.rada.framework.datasource;

import org.springframework.core.annotation.Order;

import org.aspectj.lang.JoinPoint;

import org.aspectj.lang.annotation.After;

import org.aspectj.lang.annotation.Aspect;

import org.aspectj.lang.annotation.Before;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.stereotype.Component;

@Aspect

@Order(-1) // ensure this aspect runs before @Transactional

@Component

public class DynamicDataSourceAspect {

private static final Logger logger = LoggerFactory.getLogger(DynamicDataSourceAspect.class);

@Before("@annotation(ds)")

public void changeDataSource(JoinPoint point, TargetDataSource ds) throws Throwable {

String dsId = ds.name();

if (!DynamicDataSourceContextHolder.containsDataSource(dsId)) {

logger.error("Data source [{}] does not exist, using the default data source > {}", ds.name(), point.getSignature());

} else {

logger.info("Use DataSource : {} > {}", ds.name(), point.getSignature());

DynamicDataSourceContextHolder.setDataSourceType(ds.name());

}

}

@After("@annotation(ds)")

public void restoreDataSource(JoinPoint point, TargetDataSource ds) {

logger.info("Revert DataSource : {} > {}", ds.name(), point.getSignature());

DynamicDataSourceContextHolder.clearDataSourceType();

}

}

package com.rada.framework.datasource;

import java.util.ArrayList;

import java.util.List;

public class DynamicDataSourceContextHolder {

private static final ThreadLocal<String> contextHolder = new ThreadLocal<>();

public static List<String> dataSourceIds = new ArrayList<>();

public static void setDataSourceType(String dataSourceType) {

contextHolder.set(dataSourceType);

}

public static String getDataSourceType() {

return contextHolder.get();

}

public static void clearDataSourceType() {

contextHolder.remove();

}

/**

* Checks whether the given data source is currently registered.

* @param dataSourceId

* @return

*/

public static boolean containsDataSource(String dataSourceId) {

return dataSourceIds.contains(dataSourceId);

}

}
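
The holder relies on ThreadLocal so that each request thread carries its own data source key. The sketch below is only an illustration of that property, not part of the original code: a key set on one thread is not visible from another. The class and method names are hypothetical.

```java
// Shows that a value stored in a ThreadLocal on one thread is not visible
// from another thread.
class ThreadLocalIsolationDemo {
    private static final ThreadLocal<String> KEY = new ThreadLocal<>();

    // Sets a key on the calling thread, then returns what a fresh thread sees.
    static String readFromOtherThread(String keyOnCaller) {
        KEY.set(keyOnCaller);
        String[] seen = new String[1];
        Thread worker = new Thread(() -> seen[0] = KEY.get());
        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        } finally {
            KEY.remove(); // clear the key, pooled threads are reused
        }
        return seen[0];
    }

    public static void main(String[] args) {
        // The worker thread never set a key, so it sees null.
        System.out.println(readFromOtherThread("odps"));
    }
}
```

The same reasoning explains why clearDataSourceType() must run after every annotated call: a pooled thread would otherwise keep the previous key.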

package com.rada.framework.datasource;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.MutablePropertyValues;

import org.springframework.beans.factory.support.BeanDefinitionRegistry;

import org.springframework.beans.factory.support.GenericBeanDefinition;

import org.springframework.boot.jdbc.DataSourceBuilder;

import org.springframework.context.EnvironmentAware;

import org.springframework.context.annotation.ImportBeanDefinitionRegistrar;

import org.springframework.core.env.Environment;

import org.springframework.core.type.AnnotationMetadata;

import javax.sql.DataSource;

import java.util.HashMap;

import java.util.Map;

/**

* Created by LZ on 2019/10/11

*/

public class DynamicDataSourceRegister implements ImportBeanDefinitionRegistrar, EnvironmentAware {

private static final Logger logger = LoggerFactory.getLogger(DynamicDataSourceRegister.class);

// Default data source type, used when the configuration file does not specify one

private static final Object DATASOURCE_TYPE_DEFAULT = "com.alibaba.druid.pool.DruidDataSource";

// Data sources

private DataSource defaultDataSource;

private Map<String, DataSource> customDataSources = new HashMap<>();

private static String DB_NAME = "names";

private static String DB_DEFAULT_VALUE = "spring.datasource";

/**

* Loads the configuration of all data sources.

*/

@Override

public void setEnvironment(Environment env) {

initDefaultDataSource(env);

initCustomDataSources(env);

}

/**

* 2.0.4: initializes the primary data source.

*/

private void initDefaultDataSource(Environment env) {

// Read the primary data source settings

Map<String, Object> dsMap = new HashMap<>();

dsMap.put("type", env.getProperty(DB_DEFAULT_VALUE + "." + "type"));

dsMap.put("driver-class-name", env.getProperty(DB_DEFAULT_VALUE + "." + "driver-class-name"));

dsMap.put("url", env.getProperty(DB_DEFAULT_VALUE + "." + "url"));

dsMap.put("username", env.getProperty(DB_DEFAULT_VALUE + "." + "username"));

dsMap.put("password", env.getProperty(DB_DEFAULT_VALUE + "." + "password"));

defaultDataSource = buildDataSource(dsMap);

}

// Initialize the additional data sources

private void initCustomDataSources(Environment env) {

// Read the additional data sources from the configuration file; they could also be loaded from the database through defaultDataSource

String dsPrefixs = env.getProperty(DB_DEFAULT_VALUE + "." + DB_NAME);

// One entry per configured data source name

for (String dsPrefix : dsPrefixs.split(",")) {

Map<String, Object> dsMap = new HashMap<>();

dsMap.put("type", env.getProperty(DB_DEFAULT_VALUE + "." + dsPrefix + ".type"));

dsMap.put("driver-class-name", env.getProperty(DB_DEFAULT_VALUE + "." + dsPrefix + ".driver-class-name"));

dsMap.put("url", env.getProperty(DB_DEFAULT_VALUE + "." + dsPrefix + ".url"));

dsMap.put("username", env.getProperty(DB_DEFAULT_VALUE + "." + dsPrefix + ".username"));

dsMap.put("password", env.getProperty(DB_DEFAULT_VALUE + "." + dsPrefix + ".password"));

DataSource ds = buildDataSource(dsMap);

customDataSources.put(dsPrefix, ds);

}

}

@Override

public void registerBeanDefinitions(AnnotationMetadata importingClassMetadata, BeanDefinitionRegistry registry) {

Map<Object, Object> targetDataSources = new HashMap<>();

// Add the primary data source to the target data sources

targetDataSources.put("dataSource", defaultDataSource);

DynamicDataSourceContextHolder.dataSourceIds.add("dataSource");

// Add the additional data sources

targetDataSources.putAll(customDataSources);

for (String key : customDataSources.keySet()) {

DynamicDataSourceContextHolder.dataSourceIds.add(key);

}

// Create the DynamicDataSource bean definition

GenericBeanDefinition beanDefinition = new GenericBeanDefinition();

beanDefinition.setBeanClass(DynamicDataSource.class);

beanDefinition.setSynthetic(true);

MutablePropertyValues mpv = beanDefinition.getPropertyValues();

mpv.addPropertyValue("defaultTargetDataSource", defaultDataSource);

mpv.addPropertyValue("targetDataSources", targetDataSources);

registry.registerBeanDefinition("dataSource", beanDefinition);

logger.info("Dynamic DataSource Registry");

}

// Build a DataSource from the given settings

@SuppressWarnings("unchecked")

public DataSource buildDataSource(Map<String, Object> dsMap) {

try {

Object type = dsMap.get("type");

if (type == null) {

// Fall back to the default DataSource type

type = DATASOURCE_TYPE_DEFAULT;

}

Class<? extends DataSource> dataSourceType;

dataSourceType = (Class<? extends DataSource>) Class.forName((String) type);

String driverClassName = dsMap.get("driver-class-name").toString();

String url = dsMap.get("url").toString();

String username = dsMap.get("username").toString();

String password = dsMap.get("password").toString();

DataSourceBuilder factory = DataSourceBuilder.create().driverClassName(driverClassName).url(url)

.username(username).password(password).type(dataSourceType);

return factory.build();

} catch (ClassNotFoundException e) {

// Fail fast instead of registering a null data source

throw new IllegalStateException("Data source type class not found", e);

}

}

}
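
The key-building logic of initCustomDataSources can be tried outside Spring. The following is a minimal sketch under stated assumptions: java.util.Properties stands in for Environment, the second data source name "report" is made up for the demo, and the trimming and the empty-list check are additions that the original method does not perform.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Properties;

// Collects the per-source settings for every name listed in
// spring.datasource.names, using Properties in place of Environment.
class DataSourceNamesSketch {
    private static final String PREFIX = "spring.datasource";
    private static final String[] FIELDS =
            {"type", "driver-class-name", "url", "username", "password"};

    static Map<String, Map<String, String>> collect(Properties props) {
        Map<String, Map<String, String>> result = new LinkedHashMap<>();
        String names = props.getProperty(PREFIX + ".names");
        if (names == null || names.trim().isEmpty()) {
            return result; // no additional data sources configured
        }
        for (String raw : names.split(",")) {
            String name = raw.trim(); // tolerate "odps, report"
            if (name.isEmpty()) {
                continue;
            }
            Map<String, String> settings = new LinkedHashMap<>();
            for (String field : FIELDS) {
                // Missing keys are stored as null, as env.getProperty would return
                settings.put(field, props.getProperty(PREFIX + "." + name + "." + field));
            }
            result.put(name, settings);
        }
        return result;
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("spring.datasource.names", "odps, report");
        props.setProperty("spring.datasource.odps.url",
                "jdbc:odps:http://10.0.0.0/api?project=test");
        System.out.println(collect(props).keySet()); // [odps, report]
    }
}
```

Note that only type, driver-class-name, url, username and password are read this way; the spring.datasource.odps.druid.* entries from section 1.1 are not consumed by the register class as written.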

package com.rada.framework.datasource;

import java.lang.annotation.Documented;

import java.lang.annotation.ElementType;

import java.lang.annotation.Retention;

import java.lang.annotation.RetentionPolicy;

import java.lang.annotation.Target;

@Target({ElementType.METHOD, ElementType.TYPE})

@Retention(RetentionPolicy.RUNTIME)

@Documented

public @interface TargetDataSource {

String name();

}

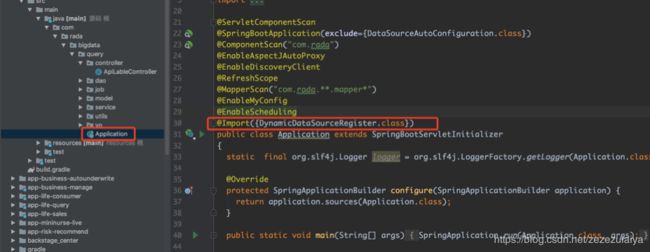

Import the DynamicDataSourceRegister class on the Application class with @Import(DynamicDataSourceRegister.class):

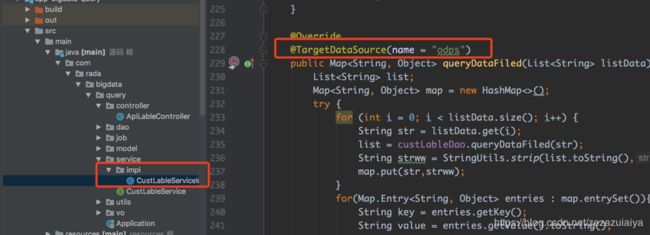

Usage:
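
In the Spring application, switching to ODPS amounts to annotating a bean method with @TargetDataSource(name = "odps"); methods without the annotation stay on the default data source. The sketch below shows that behaviour without Spring by emulating the aspect with a JDK dynamic proxy. ReportService, its two methods, and the local copy of the annotation are hypothetical stand-ins so that the example compiles on its own.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;

class TargetDataSourceUsageSketch {
    // Local stand-in for the article's annotation.
    @Target(ElementType.METHOD)
    @Retention(RetentionPolicy.RUNTIME)
    @interface TargetDataSource {
        String name();
    }

    // Hypothetical service: one method on the default source, one routed to ODPS.
    interface ReportService {
        String queryLocal();

        @TargetDataSource(name = "odps")
        String queryOdps();
    }

    private static final ThreadLocal<String> CURRENT = new ThreadLocal<>();

    // Emulates the aspect: set the key before the call, clear it afterwards.
    static ReportService wrap(ReportService target) {
        InvocationHandler handler = (proxy, method, args) -> {
            TargetDataSource ds = method.getAnnotation(TargetDataSource.class);
            if (ds != null) {
                CURRENT.set(ds.name());
            }
            try {
                return method.invoke(target, args);
            } finally {
                CURRENT.remove();
            }
        };
        return (ReportService) Proxy.newProxyInstance(
                ReportService.class.getClassLoader(),
                new Class<?>[] {ReportService.class},
                handler);
    }

    // Key a query would see; "dataSource" is the default key used in the article.
    static String currentKey() {
        String key = CURRENT.get();
        return key != null ? key : "dataSource";
    }

    // Builds a service whose methods simply report the active key.
    static ReportService demoService() {
        return wrap(new ReportService() {
            @Override public String queryLocal() { return currentKey(); }
            @Override public String queryOdps() { return currentKey(); }
        });
    }

    public static void main(String[] args) {
        ReportService service = demoService();
        System.out.println(service.queryLocal()); // dataSource
        System.out.println(service.queryOdps());  // odps
    }
}
```

With the real aspect, the annotated method would typically be a service method that calls a MyBatis mapper, so every statement executed inside it goes to the ODPS pool.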

2. Because ODPS returns at most 5,000 rows per query, export more than 10,000 rows from ODPS through TunnelTask

package com.rada.bigdata.query.test;

import com.aliyun.odps.Instance;

import com.aliyun.odps.Odps;

import com.aliyun.odps.OdpsException;

import com.aliyun.odps.account.Account;

import com.aliyun.odps.account.AliyunAccount;

import com.aliyun.odps.data.Record;

import com.aliyun.odps.data.RecordReader;

import com.aliyun.odps.task.SQLTask;

import com.aliyun.odps.tunnel.TableTunnel;

import com.aliyun.odps.tunnel.TunnelException;

import java.io.IOException;

import java.util.*;

/**

* Created by LZ on 2019/11/18

*/

public class Tunnel {

private static final String accessId = "";

private static final String accessKey = "";

private static final String endPoint = "";

private static final String project = "";

private static final String sql = "select * from test;";

private static final String table = "Tmp_" + UUID.randomUUID().toString().replace("-", "_"); // a random string is used as the name of the temporary table that holds the exported data

private static final Odps odps = getOdps();

public static void main(String[] args) {

System.out.println(table);

runSql();

tunnel();

}

/*

* Download the result of the SQLTask.

* */

private static List<Map<String, String>> tunnel() {

TableTunnel tunnel = new TableTunnel(odps);

try {

TableTunnel.DownloadSession downloadSession = tunnel.createDownloadSession(project, table);

System.out.println("Session Status is : "+ downloadSession.getStatus().toString());

long count = downloadSession.getRecordCount();

System.out.println("RecordCount is: " + count);

RecordReader recordReader = downloadSession.openRecordReader(0, count);

Record record;

List<Map<String, String>> list = new ArrayList<>();

while ((record = recordReader.read()) != null) {

list = consumeRecord(record,list);

}

recordReader.close();

return list;

} catch (TunnelException e) {

e.printStackTrace();

} catch (IOException e1) {

e1.printStackTrace();

}

return null;

}

/*

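* Save this record.

* For small result sets the output can simply be printed and copied. In real use the data can also be written to a local file with java.io, or stored on a remote server.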

* */

private static List<Map<String, String>> consumeRecord(Record record, List<Map<String, String>> list) {

Map<String, String> map = new HashMap<>();

map.put("uuid",record.getString("uuid"));

map.put("holder_id",record.getString("holder_id"));

map.put("agent_id",record.getString("agent_id"));

map.put("datasource",record.getString("datasource"));

map.put("tx_date",record.getString("tx_date"));

list.add(map);

return list;

}

/*

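* Run the SQL and save the query result into a temporary table so that it can be downloaded through Tunnel afterwards.

* The lifecycle of the table is set to 1 day here, so little storage is wasted even if the cleanup step fails.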

* */

private static void runSql() {

Instance i;

StringBuilder sb = new StringBuilder("Create Table ").append(table)

.append(" lifecycle 1 as ").append(sql);

try {

System.out.println(sb.toString());

i = SQLTask.run(getOdps(), sb.toString());

i.waitForSuccess();

} catch (OdpsException e) {

e.printStackTrace();

}

}

/*

* Initialize the MaxCompute connection settings.

* */

private static Odps getOdps() {

Account account = new AliyunAccount(accessId, accessKey);

Odps odps = new Odps(account);

odps.setEndpoint(endPoint);

odps.setDefaultProject(project);

return odps;

}

}
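
The Tunnel class above reads the whole table with a single openRecordReader(0, count) call and keeps every row in memory. If the table is large, one option is to read it in several ranges instead. The helper below is a hypothetical sketch of that idea: it only computes the (start, count) pairs and does not call the ODPS SDK, and the batch size of 10,000 is an arbitrary assumption.

```java
import java.util.ArrayList;
import java.util.List;

// Splits a record count into {start, count} pairs, the same two arguments
// that openRecordReader(start, count) takes in the Tunnel class.
class DownloadRanges {
    static List<long[]> split(long total, long batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<long[]> ranges = new ArrayList<>();
        for (long start = 0; start < total; start += batchSize) {
            // The last range may be shorter than batchSize
            ranges.add(new long[] {start, Math.min(batchSize, total - start)});
        }
        return ranges;
    }

    public static void main(String[] args) {
        // Prints three ranges: (0, 10000), (10000, 10000), (20000, 5000)
        for (long[] range : split(25_000, 10_000)) {
            System.out.println("start=" + range[0] + ", count=" + range[1]);
        }
    }
}
```

Each pair could then be passed to downloadSession.openRecordReader(range[0], range[1]), with the rows of one range processed before the next reader is opened.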

If you have any questions, please send an email to: [email protected]