【kubernetes/k8s source code analysis】 ceph csi cephfs plugin source code analysis

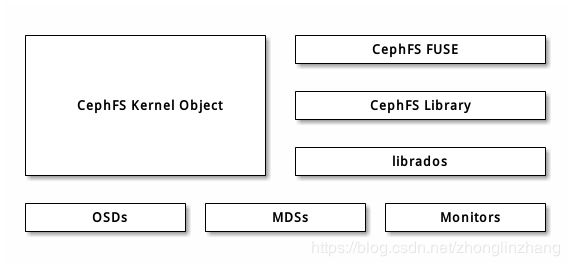

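CephFS is a POSIX-compliant file system that stores its data in a Ceph storage cluster. Clients can easily mount it locally.

CephFS uses the ceph-fuse module to provide the file system in user space (FUSE). The libcephfs library lets applications interact directly with the RADOS cluster.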

Ways to use CephFS

- Cephfs kernel module

- Cephfs-fuse

As the architecture diagram above shows, the I/O path of ceph-fuse is longer. Its performance is therefore somewhat worse than that of the CephFS kernel module.

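A Ceph file system needs at least two RADOS pools: one for data and one for metadata. Keep the following in mind:

- Give the metadata pool a higher replication level. Losing any data in this pool can make the whole file system unusable.

- Put the metadata pool on low-latency storage (such as SSDs). Its latency directly affects the latency of client operations.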

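```shell
# the trailing 128 is the number of placement groups (pg_num)
ceph osd pool create cephfs_data 128
ceph osd pool create cephfs_metadata 128
```

Once the pools exist, create the file system with the fs new command:

```shell
ceph fs new cephfs cephfs_metadata cephfs_data
```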

Image source: "一篇文章让你理解Ceph的三种存储接口(块设备、文件系统、对象存储)" (Understand Ceph's three storage interfaces: block device, file system, object storage)

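Ceph block devices offer excellent read/write performance. However, they cannot be mounted in several places for simultaneous read/write. They are mainly used as virtual disks in OpenStack.

CephFS reads and writes more slowly than block devices, but it is much better suited to sharing.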

Startup command

- --endpoint: CSI endpoint (default "unix://tmp/csi.sock")

- --metadatastorage: metadata persistence method [node|k8s_configmap]

```shell
cephfsplugin --nodeid=master-node --endpoint=unix:///csi/csi.sock --v=5 --drivername=cephfs.csi.ceph.com --metadatastorage=k8s_configmap
```
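The sketch below parses these flags with Go's standard flag package. It is a minimal sketch, not the plugin's own code. It uses a dedicated FlagSet so it can be tested in isolation, and it leaves out klog's --v flag. It also rejects an unknown --metadatastorage value up front, whereas the plugin performs that check later, in NewCachePersister. The pluginFlags type and the parsePluginFlags function are hypothetical names.

```go
package main

import (
	"flag"
	"fmt"
)

// pluginFlags mirrors a subset of the cephfsplugin flags shown above (hypothetical type).
type pluginFlags struct {
	NodeID          string
	Endpoint        string
	DriverName      string
	MetadataStorage string
}

// parsePluginFlags parses args with its own FlagSet so it can be tested in isolation.
func parsePluginFlags(args []string) (*pluginFlags, error) {
	fs := flag.NewFlagSet("cephfsplugin", flag.ContinueOnError)
	pf := &pluginFlags{}
	fs.StringVar(&pf.NodeID, "nodeid", "", "node id")
	fs.StringVar(&pf.Endpoint, "endpoint", "unix://tmp/csi.sock", "CSI endpoint")
	fs.StringVar(&pf.DriverName, "drivername", "", "name of the driver")
	fs.StringVar(&pf.MetadataStorage, "metadatastorage", "", "metadata persistence method [node|k8s_configmap]")
	if err := fs.Parse(args); err != nil {
		return nil, err
	}
	// Only the two persistence methods from the help text are accepted.
	switch pf.MetadataStorage {
	case "node", "k8s_configmap":
	default:
		return nil, fmt.Errorf("unsupported --metadatastorage %q", pf.MetadataStorage)
	}
	return pf, nil
}

func main() {
	pf, err := parsePluginFlags([]string{
		"--nodeid=master-node", "--endpoint=unix:///csi/csi.sock",
		"--drivername=cephfs.csi.ceph.com", "--metadatastorage=k8s_configmap",
	})
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", *pf)
}
```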

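1. main function

For the cephfs type, the driver name is cephfs.csi.ceph.com. The plugin folder becomes /var/lib/kubelet/plugins/cephfs.csi.ceph.com, which is the base plugin folder plus the driver name.

```go
// excerpt from the driver-type switch in main
case cephfsType:
	// append the driver name to the base plugin folder
	cephfs.PluginFolder = cephfs.PluginFolder + dname
	// create <PluginFolder>/controller and <PluginFolder>/node, then pick the cache backend
	cp, err := util.CreatePersistanceStorage(
		cephfs.PluginFolder, *metadataStorage, dname)
	if err != nil {
		os.Exit(1)
	}
	driver := cephfs.NewDriver()
	driver.Run(dname, *nodeID, *endpoint, *volumeMounter, *mountCacheDir, cp)
```

1.1 CreatePersistanceStorage: create the storage paths and initialize the cache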

Creates the controller and node directories under /var/lib/kubelet/plugins/cephfs.csi.ceph.com.

```go
// CreatePersistanceStorage creates storage path and initializes new cache
func CreatePersistanceStorage(sPath, metaDataStore, driverName string) (CachePersister, error) {
	var err error
	// <sPath>/controller
	if err = createPersistentStorage(path.Join(sPath, "controller")); err != nil {
		klog.Errorf("failed to create persistent storage for controller: %v", err)
		return nil, err
	}
	// <sPath>/node
	if err = createPersistentStorage(path.Join(sPath, "node")); err != nil {
		klog.Errorf("failed to create persistent storage for node: %v", err)
		return nil, err
	}
	// choose the metadata cache backend from --metadatastorage
	cp, err := NewCachePersister(metaDataStore, driverName)
	if err != nil {
		klog.Errorf("failed to define cache persistence method: %v", err)
		return nil, err
	}
	return cp, err
}
```

2. NewCachePersister: return the cache

Creates the cache according to the --metadatastorage startup flag.

```go
// NewCachePersister returns CachePersister based on store
func NewCachePersister(metadataStore, driverName string) (CachePersister, error) {
	if metadataStore == "k8s_configmap" {
		klog.Infof("cache-perister: using kubernetes configmap as metadata cache persister")
		// metadata is kept in ConfigMaps in the plugin's namespace
		k8scm := &K8sCMCache{}
		k8scm.Client = NewK8sClient()
		k8scm.Namespace = GetK8sNamespace()
		return k8scm, nil
	} else if metadataStore == "node" {
		klog.Infof("cache-persister: using node as metadata cache persister")
		// metadata is kept as files under <PluginFolder>/<driverName>/controller
		nc := &NodeCache{}
		nc.BasePath = PluginFolder + "/" + driverName
		nc.CacheDir = "controller"
		return nc, nil
	}
	return nil, errors.New("cache-persister: couldn't parse metadatastorage flag")
}
```

2.1 If metadatastore is k8s_configmap

Stores the Kubernetes client connection and the namespace:

```go
// K8sCMCache to store metadata
type K8sCMCache struct {
	Client    *k8s.Clientset
	Namespace string
}
```

2.2 If metadatastore is node

Uses the /var/lib/kubelet/plugins/cephfs.csi.ceph.com directory (BasePath). Cache files go in its controller subdirectory (CacheDir):

```go
// NodeCache to store metadata
type NodeCache struct {
	BasePath string
	CacheDir string
}
```

3. Driver Run function

Located in pkg/cephfs/driver.go. It starts a non-blocking gRPC server that provides the node, identity and controller services.

```go
// Run start a non-blocking grpc controller,node and identityserver for
// ceph CSI driver which can serve multiple parallel requests
func (fs *Driver) Run(driverName, nodeID, endpoint, volumeMounter, mountCacheDir string, cachePersister util.CachePersister) {
	klog.Infof("Driver: %v version: %v", driverName, version)
	// ... (remainder omitted in this excerpt)
}
```

3.1 loadAvailableMounters

```shell
$ ceph-fuse --version
ceph version 12.2.11 (26dc3775efc7bb286a1d6d66faee0ba30ea23eee) luminous (stable)
```

```go
// Load available ceph mounters installed on system into availableMounters
// Called from driver.go's Run()
func loadAvailableMounters() error {
	// #nosec
	fuseMounterProbe := exec.Command("ceph-fuse", "--version")
	// #nosec
	kernelMounterProbe := exec.Command("mount.ceph")

	// Run returns nil only if the probe command exits with status 0
	if fuseMounterProbe.Run() == nil {
		availableMounters = append(availableMounters, volumeMounterFuse)
	}
	if kernelMounterProbe.Run() == nil {
		availableMounters = append(availableMounters, volumeMounterKernel)
	}

	if len(availableMounters) == 0 {
		return errors.New("no ceph mounters found on system")
	}
	return nil
}
```

3.2 Write the configuration to /etc/ceph/ceph.conf

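```go
// contents written to cephConfigPath (/etc/ceph/ceph.conf); monitors are not
// listed here, the commands in this article pass them with -m instead
var cephConfig = []byte(`[global]
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx

# Workaround for http://tracker.ceph.com/issues/23446
fuse_set_user_groups = false
`)

// writeCephConfig creates the config directory and writes cephConfig with mode 0640
func writeCephConfig() error {
	if err := createCephConfigRoot(); err != nil {
		return err
	}
	return ioutil.WriteFile(cephConfigPath, cephConfig, 0640)
}
```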

The rest of the flow is the same as in the RBD plugin.

4. CreateVolume

CreateVolume receives the CreateVolumeRequest gRPC call from external-provisioner.

validateCreateVolumeRequest checks two things: the controller must have the CREATE_DELETE_VOLUME capability, and the request must carry a volume name.

```go
// CreateVolume creates a reservation and the volume in backend, if it is not already present
func (cs *ControllerServer) CreateVolume(ctx context.Context, req *csi.CreateVolumeRequest) (*csi.CreateVolumeResponse, error) {
	if err := cs.validateCreateVolumeRequest(req); err != nil {
		klog.Errorf("CreateVolumeRequest validation failed: %v", err)
		return nil, err
	}
	// ... (remainder omitted in this excerpt)
}
```

4.1 newVolumeOptions function

This function extracts the configuration parameters, such as mon, secret, mounter, pool and metadata_pool. A few of the helper functions it calls are described below.

```go
// newVolumeOptions generates a new instance of volumeOptions from the provided
// CSI request parameters
func newVolumeOptions(requestName string, size int64, volOptions, secret map[string]string) (*volumeOptions, error) {
	var (
		opts volumeOptions
		err  error
	)
	// ... (remainder omitted in this excerpt)
}
```

4.1.1 getMonsAndClusterID function

The monitor list is generated from the following ConfigMap and read from the file /etc/ceph-csi-config/config.json:

```yaml
---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    [
      {
        "clusterID": "aaaa",
        "monitors": [
          "192.168.73.64:6789"
        ]
      }
    ]
metadata:
  name: ceph-csi-config
```
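The article does not show util.Mons. Below is a minimal sketch of the lookup it presumably performs on config.json. monsForCluster is a hypothetical name. Joining several monitors with commas is also an assumption, based on the ceph CLI's -m option accepting a comma-separated list.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// clusterInfo matches one entry of config.json in the ConfigMap above.
type clusterInfo struct {
	ClusterID string   `json:"clusterID"`
	Monitors  []string `json:"monitors"`
}

// monsForCluster returns the comma-separated monitor list for clusterID (hypothetical helper).
func monsForCluster(configJSON []byte, clusterID string) (string, error) {
	var clusters []clusterInfo
	if err := json.Unmarshal(configJSON, &clusters); err != nil {
		return "", err
	}
	for _, c := range clusters {
		if c.ClusterID == clusterID {
			if len(c.Monitors) == 0 {
				return "", fmt.Errorf("empty monitor list for clusterID (%s)", clusterID)
			}
			return strings.Join(c.Monitors, ","), nil
		}
	}
	return "", fmt.Errorf("missing configuration for clusterID (%s)", clusterID)
}

func main() {
	cfg := []byte(`[{"clusterID":"aaaa","monitors":["192.168.73.64:6789"]}]`)
	mons, err := monsForCluster(cfg, "aaaa")
	fmt.Println(mons, err)
}
```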

```go
func getMonsAndClusterID(options map[string]string) (string, string, error) {
	// clusterID comes from the request (StorageClass) parameters
	clusterID, ok := options["clusterID"]
	if !ok {
		err := fmt.Errorf("clusterID must be set")
		return "", "", err
	}
	if err := validateNonEmptyField(clusterID, "clusterID"); err != nil {
		return "", "", err
	}
	// look up the monitors for this clusterID in csiConfigFile (config.json)
	monitors, err := util.Mons(csiConfigFile, clusterID)
	if err != nil {
		err = fmt.Errorf("failed to fetch monitor list using clusterID (%s)", clusterID)
		return "", "", err
	}
	return monitors, clusterID, err
}
```

4.1.2 getFscID: get the ID of fsName from Ceph

The command executed is `ceph fs get <fsName> --format=json`. Sample output:

```json
{
  "mdsmap": {
    "epoch": 23,
    "flags": 18,
    "ever_allowed_features": 0,
    "explicitly_allowed_features": 0,
    "created": "2019-06-26 18:10:38.833748",
    "modified": "2019-06-26 19:04:55.938919",
    "tableserver": 0,
    "root": 0,
    "session_timeout": 60,
    "session_autoclose": 300,
    "min_compat_client": "-1 (unspecified)",
    "max_file_size": 1099511627776,
    "last_failure": 0,
    "last_failure_osd_epoch": 12,
    "compat": {
      "compat": {},
      "ro_compat": {},
      "incompat": {
        "feature_1": "base v0.20",
        "feature_2": "client writeable ranges",
        "feature_3": "default file layouts on dirs",
        "feature_4": "dir inode in separate object",
        "feature_5": "mds uses versioned encoding",
        "feature_6": "dirfrag is stored in omap",
        "feature_8": "no anchor table",
        "feature_9": "file layout v2",
        "feature_10": "snaprealm v2"
      }
    },
    "max_mds": 1,
    "in": [0],
    "up": {
      "mds_0": 14111
    },
    "failed": [],
    "damaged": [],
    "stopped": [],
    "info": {
      "gid_14111": {
        "gid": 14111,
        "name": "lin",
        "rank": 0,
        "incarnation": 20,
        "state": "up:active",
        "state_seq": 10,
        "addr": "192.168.73.64:6801/557824889",
        "addrs": {
          "addrvec": [
            {
              "type": "v2",
              "addr": "192.168.73.64:6800",
              "nonce": 557824889
            },
            {
              "type": "v1",
              "addr": "192.168.73.64:6801",
              "nonce": 557824889
            }
          ]
        },
        "export_targets": [],
        "features": 4611087854031667000,
        "flags": 0
      }
    },
    "data_pools": [1],
    "metadata_pool": 2,
    "enabled": true,
    "fs_name": "cephfs",
    "balancer": "",
    "standby_count_wanted": 0
  },
  "id": 1
}
```
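getFscID only needs the top-level id. The sketch below decodes this output with encoding/json and keeps a few mdsmap fields for illustration. fsDetails and parseFsDetails are hypothetical, and the field selection is ours. encoding/json silently ignores fields the struct does not declare, so the rest of the mdsmap is simply skipped.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// fsDetails keeps only a few fields of `ceph fs get <fsName> --format=json` (hypothetical type).
type fsDetails struct {
	ID     int64 `json:"id"`
	MDSMap struct {
		FSName       string  `json:"fs_name"`
		MetadataPool int64   `json:"metadata_pool"`
		DataPools    []int64 `json:"data_pools"`
	} `json:"mdsmap"`
}

// parseFsDetails decodes the command output; unknown fields are ignored.
func parseFsDetails(out []byte) (fsDetails, error) {
	var d fsDetails
	err := json.Unmarshal(out, &d)
	return d, err
}

func main() {
	out := []byte(`{"mdsmap":{"fs_name":"cephfs","metadata_pool":2,"data_pools":[1],"epoch":23},"id":1}`)
	d, err := parseFsDetails(out)
	if err != nil {
		panic(err)
	}
	fmt.Println(d.ID, d.MDSMap.FSName, d.MDSMap.MetadataPool, d.MDSMap.DataPools) // 1 cephfs 2 [1]
}
```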

```go
func getFscID(monitors string, cr *util.Credentials, fsName string) (int64, error) {
	// ceph fs get myfs --format=json
	// {"mdsmap":{...},"id":2}
	var fsDetails CephFilesystemDetails
	err := execCommandJSON(&fsDetails,
		"ceph",
		"-m", monitors,
		"--id", cr.ID,
		"--key="+cr.Key,
		"-c", util.CephConfigPath,
		"fs", "get", fsName, "--format=json",
	)
	if err != nil {
		return 0, err
	}
	// the top-level "id" field is the file system ID
	return fsDetails.ID, nil
}
```

4.1.3 getMetadataPool: get the metadata pool

Runs `ceph fs ls --format=json`. Sample output:

```json
[
  {
    "name": "cephfs",
    "metadata_pool": "cephfs_metadata",
    "metadata_pool_id": 2,
    "data_pool_ids": [1],
    "data_pools": ["cephfs_data"]
  }
]
```

```go
func getMetadataPool(monitors string, cr *util.Credentials, fsName string) (string, error) {
	// ./tbox ceph fs ls --format=json
	// [{"name":"myfs","metadata_pool":"myfs-metadata","metadata_pool_id":4,...},...]
	var filesystems []CephFilesystem
	err := execCommandJSON(&filesystems,
		"ceph",
		"-m", monitors,
		"--id", cr.ID,
		"--key="+cr.Key,
		"-c", util.CephConfigPath,
		"fs", "ls", "--format=json",
	)
	if err != nil {
		return "", err
	}
	// pick the entry whose name matches fsName
	for _, fs := range filesystems {
		if fs.Name == fsName {
			return fs.MetadataPool, nil
		}
	}
	return "", fmt.Errorf("fsName (%s) not found in Ceph cluster", fsName)
}
```

5. createBackingVolume function

5.1 mountCephRoot function

This simply creates a directory and mounts the CephFS root on it. With the ceph-fuse mounter, the command takes this form:

```shell
ceph-fuse [ -m monaddr:port ] mountpoint [ fuse options ]
```

For example:

```shell
ceph-fuse /var/lib/kubelet/plugins/cephfs.csi.ceph.com/controller/volumes/root-csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c -m 192.168.73.64:6789 -c /etc/ceph/ceph.conf -n client.admin --key=***stripped*** -r / -o nonempty --client_mds_namespace=cephfs
```

```go
func mountCephRoot(volID volumeID, volOptions *volumeOptions, adminCr *util.Credentials) error {
	// local mount point, e.g. .../controller/volumes/root-<volID>
	cephRoot := getCephRootPathLocal(volID)

	// Root path is not set for dynamically provisioned volumes
	// Access to cephfs's / is required
	volOptions.RootPath = "/"

	if err := createMountPoint(cephRoot); err != nil {
		return err
	}
	// ceph-fuse or kernel mounter, depending on volOptions
	m, err := newMounter(volOptions)
	if err != nil {
		return fmt.Errorf("failed to create mounter: %v", err)
	}
	// mount the CephFS root with the admin credentials
	if err = m.mount(cephRoot, adminCr, volOptions); err != nil {
		return fmt.Errorf("error mounting ceph root: %v", err)
	}
	return nil
}
```

5.2 Set the volume quota

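CephFS lets you set a quota on any directory in the file system. The quota limits the number of bytes or the number of files stored below that point in the directory tree. The user-space clients (libcephfs, ceph-fuse) already support quotas.

Reference: http://docs.ceph.org.cn/cephfs/quota/

Here a 2 GiB (2147483648-byte) quota is set:

```shell
setfattr -n ceph.quota.max_bytes -v 2147483648 /var/lib/kubelet/plugins/cephfs.csi.ceph.com/controller/volumes/root-csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c/csi-volumes/csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c-creating
```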

```go
// only set a byte quota when the request specifies a capacity
if bytesQuota > 0 {
	if err := setVolumeAttribute(volRootCreating, "ceph.quota.max_bytes", fmt.Sprintf("%d", bytesQuota)); err != nil {
		return err
	}
}
```

5.3 Bind the directory to a Ceph pool

Reference: http://docs.ceph.com/docs/master/cephfs/file-layouts/

New files created under the directory store their data in the given pool:

```shell
setfattr -n ceph.dir.layout.pool -v cephfs_data /var/lib/kubelet/plugins/cephfs.csi.ceph.com/controller/volumes/root-csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c/csi-volumes/csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c-creating
```

```go
if err := setVolumeAttribute(volRootCreating, "ceph.dir.layout.pool", volOptions.Pool); err != nil {
	return fmt.Errorf("%v\ncephfs: Does pool '%s' exist?", err, volOptions.Pool)
}
```

5.4 Set the RADOS namespace on the directory

Reference: http://docs.ceph.com/docs/master/cephfs/file-layouts/

```shell
setfattr -n ceph.dir.layout.pool_namespace -v ns-csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c /var/lib/kubelet/plugins/cephfs.csi.ceph.com/controller/volumes/root-csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c/csi-volumes/csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c-creating
```

```go
// objects of this volume go into their own RADOS namespace: ns-<volID>
if err := setVolumeAttribute(volRootCreating, "ceph.dir.layout.pool_namespace", getVolumeNamespace(volID)); err != nil {
	return err
}
```
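Sections 5.2 to 5.4 all come down to setfattr calls on the new volume directory. The sketch below lists those calls in the same order. It also shows the arithmetic for a 2 GiB quota: 2 * 1024^3 = 2147483648 bytes. setfattrArgs and backingVolumeAttrs are hypothetical names, and the ns- prefix is taken from the log in 5.4.

```go
package main

import (
	"fmt"
	"strconv"
)

// setfattrArgs returns the arguments for `setfattr -n <name> -v <value> <path>`.
func setfattrArgs(path, name, value string) []string {
	return []string{"-n", name, "-v", value, path}
}

// backingVolumeAttrs lists, in order, the xattrs applied in 5.2 to 5.4 (hypothetical helper).
func backingVolumeAttrs(dir, pool, volID string, bytesQuota int64) [][]string {
	var cmds [][]string
	// a quota is only set when a positive size was requested
	if bytesQuota > 0 {
		cmds = append(cmds, setfattrArgs(dir, "ceph.quota.max_bytes", strconv.FormatInt(bytesQuota, 10)))
	}
	cmds = append(cmds,
		setfattrArgs(dir, "ceph.dir.layout.pool", pool),
		setfattrArgs(dir, "ceph.dir.layout.pool_namespace", "ns-"+volID),
	)
	return cmds
}

func main() {
	// 2<<30 == 2 * 1024^3 == 2147483648 bytes (2 GiB)
	for _, c := range backingVolumeAttrs("/tmp/vol", "cephfs_data", "csi-vol-1", 2<<30) {
		fmt.Println(append([]string{"setfattr"}, c...))
	}
}
```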

6. Create the user and grant capabilities

Each volume gets its own Ceph user, restricted to the volume's directory and RADOS namespace:

```
client.user-csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c
	key: AQB6ZxVdtbiKFRAA5rmmasFJP4oUZNRRorSExQ==
	caps: [mds] allow rw path=/csi-volumes/csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c
	caps: [mon] allow r
	caps: [osd] allow rw pool=cephfs_data namespace=ns-csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c
```

The command that creates it:

```shell
ceph -m 192.168.73.64:6789 -n client.admin --key=***stripped*** -c /etc/ceph/ceph.conf -f json auth get-or-create client.user-csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c mds 'allow rw path=/csi-volumes/csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c' mon 'allow r' osd 'allow rw pool=cephfs_data namespace=ns-csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c'
```

```go
func createCephUser(volOptions *volumeOptions, adminCr *util.Credentials, volID volumeID) (*cephEntity, error) {
	// e.g. adminID = client.admin, userID = client.user-<volID>
	adminID, userID := genUserIDs(adminCr, volID)
	return getSingleCephEntity(
		"-m", volOptions.Monitors,
		"-n", adminID,
		"--key="+adminCr.Key,
		"-c", util.CephConfigPath,
		"-f", "json",
		"auth", "get-or-create", userID,
		// User capabilities
		"mds", fmt.Sprintf("allow rw path=%s", getVolumeRootPathCeph(volID)),
		"mon", "allow r",
		"osd", fmt.Sprintf("allow rw pool=%s namespace=%s", volOptions.Pool, getVolumeNamespace(volID)),
	)
}
```

7. NodeStageVolume function

Its main job is to mount the Ceph directory onto the node.

It is fairly simple. After gathering a pile of parameters, it ultimately calls ceph-fuse to do the mount. This time it uses the per-volume user and mounts only the volume's directory (-r):

```shell
ceph-fuse /var/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-9a6c28bf-993c-11e9-a85f-080027603363/globalmount -m 192.168.73.64:6789 -c /etc/ceph/ceph.conf -n client.user-csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c --key=***stripped*** -r /csi-volumes/csi-vol-95828fc0-9940-11e9-be2e-469b72e7fa8c -o nonempty --client_mds_namespace=cephfs
```

7.1 Caching the volume mount

The mount is also recorded in the file /mount-cache-dir/cephfs.csi.ceph.com/cephfs-mount-cache-XXXXXXXXXXXXXXX.json:

```json
{
  "driverVersion": "1.0.0",
  "volumeID": "0001-0004-aaaa-0000000000000001-95828fc0-9940-11e9-be2e-469b72e7fa8c",
  "mounter": "",
  "secrets": {
    "YWRtaW5JRA==": "YWRtaW4=",
    "YWRtaW5LZXk=": "QVFDVVB4TmRpYmRGTmhBQXJmQ1FoS2R5NlJNMDZVZFdibUtpTUE9PQ=="
  },
  "stagingPath": "/var/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-9a6c28bf-993c-11e9-a85f-080027603363/globalmount",
  "targetPaths": {
    "/var/lib/kubelet/pods/65331fcb-9941-11e9-a85f-080027603363/volumes/kubernetes.io~csi/pvc-9a6c28bf-993c-11e9-a85f-080027603363/mount": false
  },
  "createTime": "2019-06-28T01:09:42.276407161Z"
}
```

```go
func (mc *volumeMountCacheMap) nodeStageVolume(volID, stagingTargetPath, mounter string, secrets map[string]string) error {
	lastTargetPaths := make(map[string]bool)
	me, ok := volumeMountCache.volumes[volID]
	if ok {
		// ... handling of an existing cache entry for volID is omitted in this excerpt
	}

	// build a fresh cache entry for this staging
	me = volumeMountCacheEntry{DriverVersion: version}
	me.VolumeID = volID
	me.Secrets = encodeCredentials(secrets) // stored base64-encoded, see the JSON above
	me.StagingPath = stagingTargetPath
	me.TargetPaths = lastTargetPaths
	me.Mounter = mounter
	me.CreateTime = time.Now()
	volumeMountCache.volumes[volID] = me
	// persist the entry as a JSON file in the mount cache directory
	return mc.nodeCacheStore.Create(genVolumeMountCacheFileName(volID), me)
}
```

After it runs, the mount looks like this:

```
ceph-fuse on /var/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-9a6c28bf-993c-11e9-a85f-080027603363/globalmount type fuse.ceph-fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)
```
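The secrets map in the cache file becomes readable once decoded. Both keys and values are plain base64: YWRtaW5JRA== decodes to adminID, and YWRtaW4= decodes to admin. The sketch below reproduces that encoding. That encodeCredentials works exactly this way is an assumption inferred from the sample file; the article does not show its source. Note that base64 is an encoding, not encryption, so the cache file effectively holds the admin key in clear text.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// encodeSecrets base64-encodes both keys and values (hypothetical stand-in for encodeCredentials).
func encodeSecrets(in map[string]string) map[string]string {
	out := make(map[string]string, len(in))
	for k, v := range in {
		out[base64.StdEncoding.EncodeToString([]byte(k))] = base64.StdEncoding.EncodeToString([]byte(v))
	}
	return out
}

// decodeSecrets reverses encodeSecrets.
func decodeSecrets(in map[string]string) (map[string]string, error) {
	out := make(map[string]string, len(in))
	for k, v := range in {
		dk, err := base64.StdEncoding.DecodeString(k)
		if err != nil {
			return nil, err
		}
		dv, err := base64.StdEncoding.DecodeString(v)
		if err != nil {
			return nil, err
		}
		out[string(dk)] = string(dv)
	}
	return out, nil
}

func main() {
	enc := encodeSecrets(map[string]string{"adminID": "admin"})
	fmt.Println(enc) // map[YWRtaW5JRA==:YWRtaW4=]
	dec, err := decodeSecrets(enc)
	if err != nil {
		panic(err)
	}
	fmt.Println(dec["adminID"]) // admin
}
```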

8. NodePublishVolume function

Mounts the directory staged on the node into the pod's path, so the container can use it directly when it starts.

8.1 bindMount function

This one is blunt and simple. It just runs the mount command to bind-mount the node's staging directory into the pod's directory. The result is:

```
ceph-fuse on /var/lib/kubelet/pods/65331fcb-9941-11e9-a85f-080027603363/volumes/kubernetes.io~csi/pvc-9a6c28bf-993c-11e9-a85f-080027603363/mount type fuse.ceph-fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)
```

```go
func bindMount(from, to string, readOnly bool, mntOptions []string) error {
	// the caller passes the mount options, e.g. "bind" (plus "ro" for read-only volumes)
	mntOptionSli := strings.Join(mntOptions, ",")
	if err := execCommandErr("mount", "-o", mntOptionSli, from, to); err != nil {
		return fmt.Errorf("failed to bind-mount %s to %s: %v", from, to, err)
	}
	// the kernel has historically ignored "ro" on the initial bind mount,
	// so a remount is issued to apply the read-only flag
	if readOnly {
		mntOptionSli += ",remount"
		if err := execCommandErr("mount", "-o", mntOptionSli, to); err != nil {
			return fmt.Errorf("failed read-only remount of %s: %v", to, err)
		}
	}
	return nil
}
```

CreateVolume summary

- Mount the CephFS root with ceph-fuse or the kernel client

- Create the volume directory and set its attributes: quota, data pool and RADOS namespace

- Unmount the CephFS root

Dockerfile

```dockerfile
FROM centos:7
LABEL maintainers="Kubernetes Authors"
LABEL description="CephFS CSI Plugin"
ENV CEPH_VERSION "mimic"
RUN yum install -y centos-release-ceph && \
    yum install -y kmod ceph-common ceph-fuse attr && \
    yum clean all
COPY cephfsplugin /cephfsplugin
RUN chmod +x /cephfsplugin && \
    mkdir -p /var/log/ceph
ENTRYPOINT ["/cephfsplugin"]
```