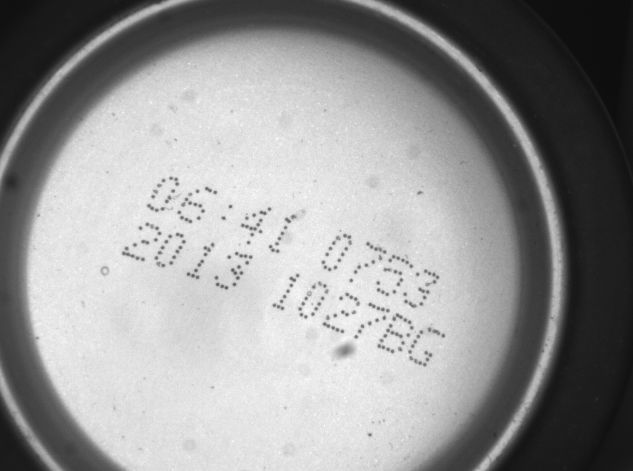

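Printed inkjet-code and segmented character recognition: development manual for recognizing the characters on the bottom of beverage cans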

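The system consists of four modules: image preprocessing, single-can localization, character-region localization, and character recognition.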

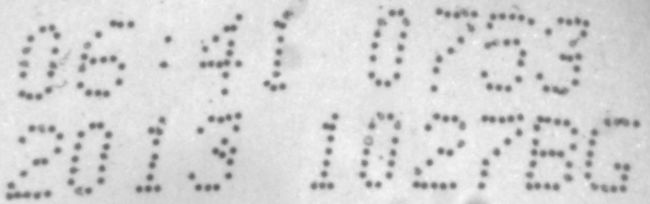

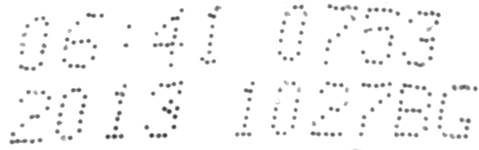

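1. Image preprocessing: compensate for uneven illumination and use histogram stretching and similar techniques to select the relevant brightness range, so that the brightness distribution of the character region stands out. (Note: because the system must run in real time, preprocessing is kept to a minimum.)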
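
The histogram stretching mentioned in step 1 can be sketched in plain C++ as a lookup table. This is a minimal illustration, not the project's implementation: the function name `stretchContrast` and the bounds `lo` and `hi` are assumptions chosen for the example, and the project's own `dehist` function builds its table from brightness and contrast settings instead.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Linearly stretch the gray levels in [lo, hi] to the full range [0, 255].
// Values below lo map to 0 and values above hi map to 255.
std::vector<std::uint8_t> stretchContrast(const std::vector<std::uint8_t>& gray,
                                          int lo, int hi)
{
    assert(0 <= lo && lo < hi && hi <= 255);
    // Build the table once so that the per-pixel cost is a single lookup.
    std::uint8_t lut[256];
    for (int v = 0; v < 256; ++v) {
        int clamped = std::min(std::max(v, lo), hi);
        lut[v] = static_cast<std::uint8_t>((clamped - lo) * 255 / (hi - lo));
    }
    std::vector<std::uint8_t> out(gray.size());
    for (std::size_t i = 0; i < gray.size(); ++i) out[i] = lut[gray[i]];
    return out;
}
```

Using a table keeps the work per pixel constant, which fits the real-time constraint noted above.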

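2. Single-can localization: when an image contains several cans, a single can must be located first. Based on the shape of the can, this can be done either by shape matching against the can outline or by circle detection, for example Hough circle detection or wavelet-based circle detection.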
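
To illustrate the circle-detection option in step 2, here is a minimal Hough voting sketch for a circle of known radius. It assumes a binary edge map is already available. The function name `houghCircleCentre` and the fixed-radius simplification are assumptions made for this example; a production system would more likely call a library routine and search over a range of radii.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Vote for circle centres of a known radius. edge is a row-major binary edge
// map (non-zero = edge pixel). Returns the (x, y) cell with the most votes.
std::pair<int, int> houghCircleCentre(const std::vector<unsigned char>& edge,
                                      int width, int height, int radius)
{
    assert(width > 0 && height > 0 && radius > 0);
    assert(edge.size() == static_cast<std::size_t>(width) * height);
    std::vector<int> acc(edge.size(), 0);
    const double kPi = 3.14159265358979323846;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (!edge[y * width + x]) continue;
            // Each edge pixel votes for every centre at distance radius from it.
            for (int deg = 0; deg < 360; ++deg) {
                double a = deg * kPi / 180.0;
                int cx = static_cast<int>(std::lround(x - radius * std::cos(a)));
                int cy = static_cast<int>(std::lround(y - radius * std::sin(a)));
                if (cx >= 0 && cx < width && cy >= 0 && cy < height)
                    ++acc[cy * width + cx];
            }
        }
    }
    int best = 0;
    for (std::size_t i = 1; i < acc.size(); ++i)
        if (acc[i] > acc[best]) best = static_cast<int>(i);
    return { best % width, best / width };
}
```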

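3. Character-region localization: the character region may be rotated, so a morphological approach is used. The characters are first dilated until they merge into one connected blob. Contours are then detected and filtered by aspect ratio and area to find the character region. Finally, the tilt angle of the rectangle fitted to the region's contour is used to rotate the region back to horizontal.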
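
The contour filtering in step 3 can be sketched as a predicate over bounding boxes. The types `Box` and `BlockLimits` and both function names are assumptions made for this illustration. The limits used in the usage note are the size thresholds that appear in the `processingTotal` listing later in this document, plus the aspect-ratio bound of 5 that is commented out there.

```cpp
#include <cassert>
#include <vector>

struct Box { int x, y, width, height; };

struct BlockLimits {
    int minWidth, maxWidth;
    int minHeight, maxHeight;
    int minArea;
    double maxAspect;  // largest allowed width / height
};

// True when the box is plausible as the merged character block.
bool isCharacterBlock(const Box& b, const BlockLimits& lim)
{
    if (b.width <= 0 || b.height <= 0) return false;
    if (b.width < lim.minWidth || b.width > lim.maxWidth) return false;
    if (b.height < lim.minHeight || b.height > lim.maxHeight) return false;
    if (b.width * b.height < lim.minArea) return false;
    double aspect = static_cast<double>(b.width) / b.height;
    return aspect <= lim.maxAspect;
}

// Keep only the boxes that pass the size and aspect-ratio checks.
std::vector<Box> keepCharacterBlocks(const std::vector<Box>& boxes,
                                     const BlockLimits& lim)
{
    assert(lim.minWidth <= lim.maxWidth && lim.minHeight <= lim.maxHeight);
    std::vector<Box> kept;
    for (const Box& b : boxes)
        if (isCharacterBlock(b, lim)) kept.push_back(b);
    return kept;
}
```

With `BlockLimits lim = {100, 440, 50, 300, 4500, 5.0};` a 300x120 box is kept, while a 20x20 box and a 430x60 box are rejected.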

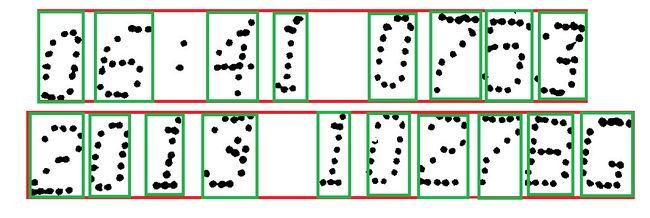

4. Character recognition: this covers segmentation of individual characters, sample selection and processing, training, and recognition.

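(1) Single-character segmentation: on the deskewed image from step 3, first project the pixels onto the Y axis (one sum per image row) to separate the text lines. Then, for each text line, project the pixels onto the X axis (one sum per image column) and split the line into single characters, relying on the fact that all characters have the same width.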
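
The projection-based segmentation can be sketched as follows. The names `Span`, `rowProfile`, `findRuns` and `splitFixedWidth` are assumptions made for this example, and the image is taken to be a row-major binary buffer in which non-zero means ink. The project's own `projectY` and `projectX` functions work on `IplImage` data and add noise handling that is left out here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Span { int begin; int end; };  // half-open interval [begin, end)

// Count ink (non-zero) pixels in every row of a row-major binary image.
std::vector<int> rowProfile(const std::vector<unsigned char>& img,
                            int width, int height)
{
    assert(img.size() == static_cast<std::size_t>(width) * height);
    std::vector<int> profile(height, 0);
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x)
            if (img[y * width + x]) ++profile[y];
    return profile;
}

// Runs of consecutive profile entries holding at least minCount ink pixels.
std::vector<Span> findRuns(const std::vector<int>& profile, int minCount)
{
    std::vector<Span> runs;
    int start = -1;
    const int n = static_cast<int>(profile.size());
    for (int i = 0; i < n; ++i) {
        bool on = profile[i] >= minCount;
        if (on && start < 0) start = i;
        if (!on && start >= 0) { runs.push_back({start, i}); start = -1; }
    }
    if (start >= 0) runs.push_back({start, n});
    return runs;
}

// Split one text line with a known character count into equal-width cells.
std::vector<Span> splitFixedWidth(Span line, int numChars)
{
    assert(numChars > 0 && line.end > line.begin);
    std::vector<Span> cells;
    int width = line.end - line.begin;
    for (int i = 0; i < numChars; ++i)
        cells.push_back({line.begin + i * width / numChars,
                         line.begin + (i + 1) * width / numChars});
    return cells;
}
```

`findRuns(rowProfile(img, w, h), 1)` yields the text lines, and `splitFixedWidth` then cuts each line into character cells.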

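(2) Sort the single-character images by class, then apply basic preprocessing to the samples: enhancement and normalization.

All samples are normalized to a size of 28*28 pixels.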
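
A minimal sketch of the size normalization, using nearest-neighbour sampling. The function name `resizeNearest` is an assumption. The recognition code in this document resizes with OpenCV's `cvResize` and cubic interpolation, so this only illustrates the idea of mapping every sample onto a fixed 28*28 grid.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Resize a row-major 8-bit image to dstW x dstH by nearest-neighbour sampling.
std::vector<unsigned char> resizeNearest(const std::vector<unsigned char>& src,
                                         int srcW, int srcH, int dstW, int dstH)
{
    assert(srcW > 0 && srcH > 0 && dstW > 0 && dstH > 0);
    assert(src.size() == static_cast<std::size_t>(srcW) * srcH);
    std::vector<unsigned char> dst(static_cast<std::size_t>(dstW) * dstH);
    for (int y = 0; y < dstH; ++y) {
        int sy = y * srcH / dstH;          // source row for this output row
        for (int x = 0; x < dstW; ++x) {
            int sx = x * srcW / dstW;      // source column for this output column
            dst[y * dstW + x] = src[sy * srcW + sx];
        }
    }
    return dst;
}
```

Calling `resizeNearest(sample, w, h, 28, 28)` gives every sample the same 784-pixel layout.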

(3) Train a convolutional neural network (CNN) on the characters.

The convolutional structure used in this project:

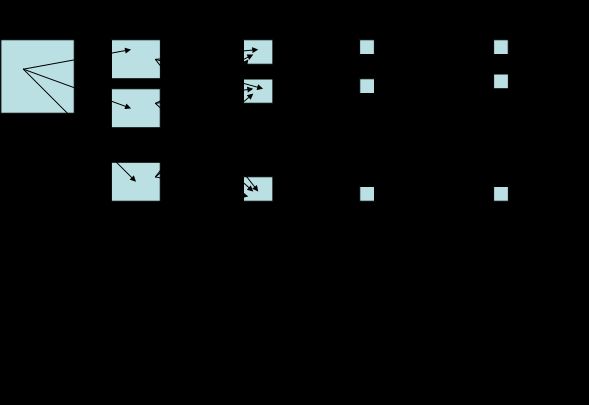

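The first layer has a single feature map, which is the input image itself. In each subsequent layer, every feature map holds a set of distinct kernels (2-D arrays of weights), one for each feature map in the previous layer. All kernels within a layer have the same size, which is a design parameter. The pixel values of a feature map are obtained by convolving its kernels with the corresponding feature maps of the previous layer. The number of feature maps in the last layer equals the number of output classes. For example, for recognizing the digits 0-9 the output layer has 10 feature maps, and the feature map with the highest pixel value gives the result.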
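
As a worked example of how the feature-map sizes follow from the kernel size and the sampling factor, the sketch below uses the same loop bound as `FeatureMap::Calculate` in this document: the kernel is placed at offsets 0, step, 2*step, ... while it still fits. The names `LayerSpec`, `outputSize` and `featureSizes` are assumptions. The layer parameters in the usage note are the ones passed to `Construct` in `ConstructNN`.

```cpp
#include <cassert>
#include <vector>

struct LayerSpec {
    int featureMaps;
    int kernelSize;
    int samplingFactor;
};

// Number of kernel positions along one axis: offsets 0, step, 2*step, ...
// for as long as the kernel still fits inside the input.
int outputSize(int inputSize, int kernelSize, int step)
{
    assert(kernelSize > 0 && step > 0 && inputSize >= kernelSize);
    return (inputSize - kernelSize) / step + 1;
}

// Feature-map side length of the input layer and of every following layer.
std::vector<int> featureSizes(int inputSize, const std::vector<LayerSpec>& layers)
{
    std::vector<int> sizes;
    sizes.push_back(inputSize);
    for (const LayerSpec& l : layers)
        sizes.push_back(outputSize(sizes.back(), l.kernelSize, l.samplingFactor));
    return sizes;
}
```

For a 29x29 input and the layers {6, 5, 2}, {50, 5, 2}, {100, 5, 1}, {10, 1, 1}, this gives side lengths 29, 13, 5, 1, 1, which matches the `FeatureSize` arguments in `ConstructNN`.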

#include ".\cnn.h"

#include <time.h> /* needed for the time() function */

CCNN::CCNN(void)

{

ConstructNN();

}

CCNN::~CCNN(void)

{

DeleteNN();

}

#ifndef max

#define max(a,b) (((a) > (b)) ? (a) : (b))

#endif

////////////////////////////////////

void CCNN::ConstructNN()

////////////////////////////////////

{

int i;

m_nLayer = 5;

m_Layer = new Layer[m_nLayer];

m_Layer[0].pLayerPrev = NULL;

for(i=1; i<m_nLayer; i++) m_Layer[i].pLayerPrev = &m_Layer[i-1];

m_Layer[0].Construct ( INPUT_LAYER, 1, 29, 0, 0 );

m_Layer[1].Construct ( CONVOLUTIONAL, 6, 13, 5, 2 );

m_Layer[2].Construct ( CONVOLUTIONAL, 50, 5, 5, 2 );

m_Layer[3].Construct ( FULLY_CONNECTED, 100, 1, 5, 1 );

m_Layer[4].Construct ( FULLY_CONNECTED, 10, 1, 1, 1 );

}

///////////////////////////////

void CCNN::DeleteNN()

///////////////////////////////

{

//SaveWeights("weights_updated.txt");

for(int i=0; i<m_nLayer; i++) m_Layer[i].Delete();

delete[] m_Layer;

}

//////////////////////////////////////////////

void CCNN::LoadWeightsRandom()

/////////////////////////////////////////////

{

int i, j, k, m;

srand((unsigned)time(0));

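for ( i=1; i<m_nLayer; i++ )

{

for( j=0; j<m_Layer[i].m_nFeatureMap; j++ )

{

m_Layer[i].m_FeatureMap[j].bias = 0.05 * RANDOM_PLUS_MINUS_ONE;

for(k=0; k<m_Layer[i].pLayerPrev->m_nFeatureMap; k++)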

for(m=0; m < m_Layer[i].m_KernelSize * m_Layer[i].m_KernelSize; m++)

m_Layer[i].m_FeatureMap[j].kernel[k][m] = 0.05 * RANDOM_PLUS_MINUS_ONE;

}

}

}

//////////////////////////////////////////////

void CCNN::LoadWeights(char *FileName)

/////////////////////////////////////////////

{

int i, j, k, m, n;

FILE *f;

if((f = fopen(FileName, "r")) == NULL) return;

for ( i=1; i<m_nLayer; i++ )

{

for( j=0; j<m_Layer[i].m_nFeatureMap; j++ )

{

fscanf(f, "%lg ", &m_Layer[i].m_FeatureMap[j].bias);

for(k=0; k<m_Layer[i].pLayerPrev->m_nFeatureMap; k++)

for(m=0; m < m_Layer[i].m_KernelSize * m_Layer[i].m_KernelSize; m++)

fscanf(f, "%lg ", &m_Layer[i].m_FeatureMap[j].kernel[k][m]);

}

}

fclose(f);

}

//////////////////////////////////////////////

void CCNN::SaveWeights(char *FileName)

/////////////////////////////////////////////

{

int i, j, k, m;

FILE *f;

if((f = fopen(FileName, "w")) == NULL) return;

for ( i=1; i<m_nLayer; i++ )

{

for( j=0; j<m_Layer[i].m_nFeatureMap; j++ )

{

fprintf(f, "%lg ", m_Layer[i].m_FeatureMap[j].bias);

for(k=0; k<m_Layer[i].pLayerPrev->m_nFeatureMap; k++)

for(m=0; m < m_Layer[i].m_KernelSize * m_Layer[i].m_KernelSize; m++)

{

fprintf(f, "%lg ", m_Layer[i].m_FeatureMap[j].kernel[k][m]);

}

}

}

fclose(f);

}

//////////////////////////////////////////////////////////////////////////

int CCNN::Calculate(double *input, double *output)

//////////////////////////////////////////////////////////////////////////

{

int i, j;

//copy input to layer 0

for(i=0; i<m_Layer[0].m_nFeatureMap; i++)

for(j=0; j < m_Layer[0].m_FeatureSize * m_Layer[0].m_FeatureSize; j++)

m_Layer[0].m_FeatureMap[0].value[j] = input[j];

//forward propagation

//calculate values of neurons in each layer

for(i=1; i<m_nLayer; i++)

{

//initialization of feature maps to ZERO

for(j=0; j<m_Layer[i].m_nFeatureMap; j++) m_Layer[i].m_FeatureMap[j].Clear();

//forward propagation from layer[i-1] to layer[i]

m_Layer[i].Calculate();

}

//copy last layer values to output

for(i=0; i<m_Layer[m_nLayer-1].m_nFeatureMap; i++)

output[i] = m_Layer[m_nLayer-1].m_FeatureMap[i].value[0];

///================================

/* debug output of the feature maps (disabled)

FILE *f;

char fileName[100];

*/

///==================================

//get index of highest scoring output feature

j = 0;

for(i=1; i<m_Layer[m_nLayer-1].m_nFeatureMap; i++)

if(output[i] > output[j]) j = i;

return j;

}

///////////////////////////////////////////////////////////

void CCNN::BackPropagate(double *desiredOutput, double eta)

///////////////////////////////////////////////////////////

{

int i ;

//derivative of the error in last layer

//calculated as difference between actual and desired output (eq. 2)

for(i=0; i<m_Layer[m_nLayer-1].m_nFeatureMap; i++)

{

m_Layer[m_nLayer-1].m_FeatureMap[i].dError[0] =

m_Layer[m_nLayer-1].m_FeatureMap[i].value[0] - desiredOutput[i];

}

double mse=0.0;

for ( i=0; i<m_Layer[m_nLayer-1].m_nFeatureMap; i++ )

{

mse += m_Layer[m_nLayer-1].m_FeatureMap[i].dError[0] * m_Layer[m_nLayer-1].m_FeatureMap[i].dError[0];

}

//backpropagate through rest of the layers

for(i=m_nLayer-1; i>0; i--)

{

m_Layer[i].BackPropagate(1, eta);

}

///================================

//for debugging: write dError for each feature map in each layer to a file

/*

FILE *f;

char fileName[100];

for(i=0; im_nFeatureMap; k++)

{

for(int m=0; m < m_Layer[i].m_KernelSize * m_Layer[i].m_KernelSize; m++)

{

fprintf(f, "%10.7lg\n", m_Layer[i].m_FeatureMap[j].dErr_wrtw[k][m]);

}

fprintf(f, "\n\n\n");

}

}

fclose(f);

}

///==================================

*/

int t=0;

}

////////////////////////////////////////////////

void CCNN::CalculateHessian( )

////////////////////////////////////////////////

{

int i, j, k ;

//2nd derivative of the error wrt Xn in last layer

//it is always 1

//Xn is the output after applying SIGMOID

for(i=0; i<m_Layer[m_nLayer-1].m_nFeatureMap; i++)

{

m_Layer[m_nLayer-1].m_FeatureMap[i].dError[0] = 1.0;

}

//backpropagate through rest of the layers

for(i=m_nLayer-1; i>0; i--)

{

m_Layer[i].BackPropagate(2, 0);

}

//average over the number of samples used

for(i=1; i<m_nLayer; i++)

{

for(j=0; j<m_Layer[i].m_nFeatureMap; j++)

{

for(k=0; k<m_Layer[i].pLayerPrev->m_nFeatureMap; k++)

{

for(int m=0; m < m_Layer[i].m_KernelSize * m_Layer[i].m_KernelSize; m++)

{

m_Layer[i].m_FeatureMap[j].diagHessian[k][m] /= g_cCountHessianSample;

}

}

}

}

////-------------------------------

//saving to files for debugging

/*FILE *f;

char fileName[100];

for(i=1; im_nFeatureMap; k++)

{

for(int m=0; m < m_Layer[i].m_KernelSize * m_Layer[i].m_KernelSize; m++)

{

fprintf(f, "%10.7lg\n", m_Layer[i].m_FeatureMap[j].diagHessian[k][m]);

}

fprintf(f, "\n\n\n");

}

}

fclose(f);

}*/

///---------------------------------------

int t=0;

}

/////////////////////////////////////////////////////////////////////////////////////////////////////

void Layer::Construct(int type, int nFeatureMap, int FeatureSize, int KernelSize, int SamplingFactor)

/////////////////////////////////////////////////////////////////////////////////////////////////////

{

m_type = type;

m_nFeatureMap = nFeatureMap;

m_FeatureSize = FeatureSize;

m_KernelSize = KernelSize;

m_SamplingFactor = SamplingFactor;

m_FeatureMap = new FeatureMap[ m_nFeatureMap ];

for(int j=0; j<m_nFeatureMap; j++)

{

m_FeatureMap[j].pLayer = this;

m_FeatureMap[j].Construct( );

}

}

/////////////////////////

void Layer::Delete()

/////////////////////////

{

for(int j=0; j<m_nFeatureMap; j++) m_FeatureMap[j].Delete();

delete[] m_FeatureMap;

}

///////////////////////////////////////////////////

void Layer::Calculate() //forward propagation

///////////////////////////////////////////////////

{

for(int i=0; i<m_nFeatureMap; i++)

{

//initialize feature map to bias

for(int k=0; k < m_FeatureSize * m_FeatureSize; k++)

{

m_FeatureMap[i].value[k] = m_FeatureMap[i].bias;

}

//calculate effect of each feature map in previous layer

//on this feature map in this layer

for(int j=0; j<pLayerPrev->m_nFeatureMap; j++)

{

m_FeatureMap[i].Calculate(

pLayerPrev->m_FeatureMap[j].value, //input feature map

j //index of input feature map

);

}

//SIGMOID function (scaled tanh)

for(int j=0; j < m_FeatureSize * m_FeatureSize; j++)

{

m_FeatureMap[i].value[j] = 1.7159 * tanh(0.66666667 * m_FeatureMap[i].value[j]);

}

//print(i); //for debugging

}

}

///////////////////////////////////////////////////////////////

void Layer::BackPropagate(int dOrder, double etaLearningRate)

//////////////////////////////////////////////////////////////

{

//find dError (2nd derivative) wrt the actual output Yn of this layer

//Note that SIGMOID was applied to Yn to get Xn during forward propagation

//We already have dErr_wrt_dXn calculated in CCNN :: BackPropagate and

//use the following equation to get dErr_wrt_dYn

//dErr_wrt_dYn = InverseSIGMOID(Xn)^2 * dErr_wrt_dXn

for(int i=0; i<m_nFeatureMap; i++)

{

for(int j=0; j < m_FeatureSize * m_FeatureSize; j++)

{

double temp = DSIGMOID(m_FeatureMap[i].value[j]);

if(dOrder == 2) temp *= temp;

m_FeatureMap[i].dError[j] = temp * m_FeatureMap[i].dError[j];

}

}

//clear dError wrt weights

for(int i=0; i<m_nFeatureMap; i++) m_FeatureMap[i].ClearDErrWRTW();

//clear dError wrt Xn in previous layer.

//This is input to the previous layer for backpropagation

for(int i=0; i<pLayerPrev->m_nFeatureMap; i++)

pLayerPrev->m_FeatureMap[i].ClearDError();

//Backpropagate

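for(int i=0; i<m_nFeatureMap; i++)

{

//derivative of error wrt bias

for(int j=0; j<m_FeatureSize * m_FeatureSize; j++) m_FeatureMap[i].dErr_wrtb += m_FeatureMap[i].dError[j];

//calculate effect of this feature map on each feature map in the previous layer

for(int j=0; j<pLayerPrev->m_nFeatureMap; j++)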

{

m_FeatureMap[i].BackPropagate(

pLayerPrev->m_FeatureMap[j].value, //input feature map

j, //index of input feature map

pLayerPrev->m_FeatureMap[j].dError, //dErr_wrt_Xn for previous layer

dOrder //order of derivative

);

}

}

//update weights (for backpropagation) or diagonal Hessian (for 2nd-order backpropagation)

double epsilon, divisor;

for(int i=0; i<m_nFeatureMap; i++)

{

if(dOrder == 1)

{

divisor = max(0, m_FeatureMap[i].diagHessianBias) + dMicronLimitParameter;

epsilon = etaLearningRate / divisor;

m_FeatureMap[i].bias -= epsilon * m_FeatureMap[i].dErr_wrtb;

}

else

{

m_FeatureMap[i].diagHessianBias += m_FeatureMap[i].dErr_wrtb;

}

for(int j=0; j<pLayerPrev->m_nFeatureMap; j++)

{

for(int k=0; k < m_KernelSize * m_KernelSize; k++)

{

if(dOrder == 1)

{

divisor = max(0, m_FeatureMap[i].diagHessian[j][k]) + dMicronLimitParameter;

epsilon = etaLearningRate / divisor;

m_FeatureMap[i].kernel[j][k] -= epsilon * m_FeatureMap[i].dErr_wrtw[j][k];

}

else

{

m_FeatureMap[i].diagHessian[j][k] += m_FeatureMap[i].dErr_wrtw[j][k];

}

}

}

}

}

//////////////////////////////////////////////////////////////////////////////////////////

void FeatureMap::Construct( )

//////////////////////////////////////////////////////////////////////////////////////////

{

if(pLayer->m_type == INPUT_LAYER) m_nFeatureMapPrev = 0;

else m_nFeatureMapPrev = pLayer->pLayerPrev->m_nFeatureMap;

int FeatureSize = pLayer->m_FeatureSize;

int KernelSize = pLayer->m_KernelSize;

//neuron values

value = new double [ FeatureSize * FeatureSize ];

//error in neuron values

dError = new double [ FeatureSize * FeatureSize ];

//weights kernel

kernel = new double* [ m_nFeatureMapPrev ];

for(int i=0; i<m_nFeatureMapPrev; i++)

{

kernel[i] = new double [KernelSize * KernelSize];

//initialize

bias = 0.05 * RANDOM_PLUS_MINUS_ONE;

for(int j=0; j < KernelSize * KernelSize; j++) kernel[i][j] = 0.05 * RANDOM_PLUS_MINUS_ONE;

}

//diagHessian

diagHessian = new double* [ m_nFeatureMapPrev ];

for(int i=0; i<m_nFeatureMapPrev; i++) diagHessian[i] = new double [KernelSize * KernelSize];

//derivative of error wrt kernel weights

dErr_wrtw = new double* [ m_nFeatureMapPrev ];

for(int i=0; i<m_nFeatureMapPrev; i++) dErr_wrtw[i] = new double [KernelSize * KernelSize];

}

///////////////////////////

void FeatureMap::Delete()

///////////////////////////

{

delete[] value;

delete[] dError;

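for(int i=0; i<m_nFeatureMapPrev; i++)

{

delete[] kernel[i];

delete[] diagHessian[i];

delete[] dErr_wrtw[i];

}

delete[] kernel;

delete[] diagHessian;

delete[] dErr_wrtw;

}

////////////////////////////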

void FeatureMap::Clear()

/////////////////////////////

{

for(int i=0; i < pLayer->m_FeatureSize * pLayer->m_FeatureSize; i++) value[i] = 0.0;

}

////////////////////////////////

void FeatureMap::ClearDError()

/////////////////////////////////

{

for(int i=0; i < pLayer->m_FeatureSize * pLayer->m_FeatureSize; i++) dError[i] = 0.0;

}

///////////////////////////////////

void FeatureMap::ClearDiagHessian()

///////////////////////////////////

{

diagHessianBias = 0;

for(int i=0; i < m_nFeatureMapPrev; i++)

for(int j=0; j < pLayer->m_KernelSize * pLayer->m_KernelSize; j++) diagHessian[i][j] = 0.0;

}

////////////////////////////////

void FeatureMap::ClearDErrWRTW()

////////////////////////////////

{

dErr_wrtb = 0;

for(int i=0; i < m_nFeatureMapPrev; i++)

for(int j=0; j < pLayer->m_KernelSize * pLayer->m_KernelSize; j++) dErr_wrtw[i][j] = 0.0;

}

//////////////////////////////////////////////////////////////////////////////////////////////////////

void FeatureMap::Calculate(double *valueFeatureMapPrev, int idxFeatureMapPrev )

//////////////////////////////////////////////////////////////////////////////////////////////////////

//calculate effect of a feature map in previous layer on this feature map in this layer

// valueFeatureMapPrev: feature map in previous layer

// idxFeatureMapPrev : index of feature map in previous layer

{

int isize = pLayer->pLayerPrev->m_FeatureSize; //feature size in previous layer

int ksize = pLayer->m_KernelSize;

int step_size = pLayer->m_SamplingFactor;

int k = 0;

for(int row0 = 0; row0 <= isize - ksize; row0 += step_size)

for(int col0 = 0; col0 <= isize - ksize; col0 += step_size)

value[k++] += Convolute(valueFeatureMapPrev, isize, row0, col0, kernel[idxFeatureMapPrev], ksize);

}

//////////////////////////////////////////////////////////////////////////////////////////////////////

double FeatureMap::Convolute(double *input, int size, int r0, int c0, double *weight, int kernel_size)

//////////////////////////////////////////////////////////////////////////////////////////////////////

{

int i, j, k = 0;

double summ = 0;

for(i = r0; i < r0 + kernel_size; i++)

for(j = c0; j < c0 + kernel_size; j++)

summ += input[i * size + j] * weight[k++];

return summ;

}

/////////////////////////////////////////////////////////////////////////////////////

void FeatureMap::BackPropagate(double *valueFeatureMapPrev, int idxFeatureMapPrev,

double *dErrorFeatureMapPrev, int dOrder )

/////////////////////////////////////////////////////////////////////////////////////

//calculate effect of this feature map on a feature map in previous layer

//note that previous layer is next in backpropagation

// valueFeatureMapPrev: feature map in previous layer

// idxFeatureMapPrev : index of feature map in previous layer

// dErrorFeatureMapPrev: dError wrt neuron values in the FM in prev layer

{

int isize = pLayer->pLayerPrev->m_FeatureSize; //size of FM in previous layer

int ksize = pLayer->m_KernelSize; //kernel size

int step_size = pLayer->m_SamplingFactor; //subsampling factor

int row0, col0, k;

k = 0;

for(row0 = 0; row0 <= isize - ksize; row0 += step_size)

{

for(col0 = 0; col0 <= isize - ksize; col0 += step_size)

{

for(int i=0; i<ksize; i++)

{

for(int j=0; j<ksize; j++)

{

//get dError wrt output for feature map in the previous layer

double temp = kernel[idxFeatureMapPrev][i * ksize + j];

if(dOrder == 1)

dErrorFeatureMapPrev[(row0 + i) * isize + (j + col0)] += dError[k] * temp;

else

dErrorFeatureMapPrev[(row0 + i) * isize + (j + col0)] += dError[k] * temp * temp;

//get dError wrt kernel wights

temp = valueFeatureMapPrev[(row0 + i) * isize + (j + col0)];

if(dOrder == 1)

dErr_wrtw[idxFeatureMapPrev][i * ksize + j] += dError[k] * temp;

else

dErr_wrtw[idxFeatureMapPrev][i * ksize + j] += dError[k] * temp * temp;

}

}

k++;

}

}

}

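The network implemented in this project is shown in the figure above. The number of feature maps (FMs) in each layer and their size are listed under each layer. For example, layer 1 has 6 feature maps, each of size 13×13. The number of kernels held by each feature map in a layer is also shown in the figure. For example, W[6][25] written under layer 2 means that each feature map in that layer has 6 kernels (equal to the number of FMs in the previous layer), each with 25 weights (a 5x5 array). In addition to these weights, each FM has a bias weight (see any neural-network textbook for its role). Each layer has another parameter, SF (sampling factor), which is explained in the forward-propagation part. The three other parameters, DBIAS, dErrorW and dErrorFM, are explained in the back-propagation part. The last two lines under each layer in the figure give the total number of pixels (neurons) and weights in the whole layer.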
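
The per-layer totals can be recomputed from the layer parameters. The sketch below assumes the storage layout of the listing above: each feature map holds one kernel per feature map of the previous layer, plus one bias. The names `LayerShape`, `neuronCount` and `weightCount` are assumptions, and the totals printed in the figure are not reproduced here.

```cpp
#include <cassert>

struct LayerShape {
    int featureMaps;   // number of feature maps in the layer
    int featureSize;   // side length of each feature map
    int kernelSize;    // side length of each kernel
};

// Total number of neurons (pixels) in a layer.
long neuronCount(const LayerShape& l)
{
    assert(l.featureMaps > 0 && l.featureSize > 0);
    return static_cast<long>(l.featureMaps) * l.featureSize * l.featureSize;
}

// Total number of trainable weights in a layer: for every feature map,
// one kernel per feature map of the previous layer, plus one bias.
long weightCount(const LayerShape& l, int prevFeatureMaps)
{
    assert(l.kernelSize > 0 && prevFeatureMaps > 0);
    long perMap = static_cast<long>(prevFeatureMaps) * l.kernelSize * l.kernelSize + 1;
    return l.featureMaps * perMap;
}
```

For layer 1 (6 maps of 13×13, 5x5 kernels, one input map) this gives 1014 neurons and 156 weights. For layer 2 (50 maps of 5×5, six 5x5 kernels each) it gives 1250 neurons and 7550 weights.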

The weights obtained from CNN training are saved in a txt or xml file.
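
A minimal sketch of storing weights as whitespace-separated text, the same general layout that `SaveWeights` and `LoadWeights` use with `"%lg "`. The function names `weightsToText` and `readWeights` are assumptions. The flat `std::vector<double>` stands in for the bias and kernel values, which the real code writes layer by layer.

```cpp
#include <cassert>
#include <cstddef>
#include <iomanip>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Serialize weights as space-separated decimal text.
// 17 significant digits are enough to restore every double exactly.
std::string weightsToText(const std::vector<double>& w)
{
    std::ostringstream out;
    out << std::setprecision(17);
    for (double v : w) out << v << ' ';
    assert(out.good());
    return out.str();
}

// Read exactly count weights; returns false if the stream holds fewer values.
bool readWeights(std::istream& in, std::size_t count, std::vector<double>& w)
{
    w.assign(count, 0.0);
    for (std::size_t i = 0; i < count; ++i)
        if (!(in >> w[i])) return false;
    return true;
}
```

Checking the return value of `readWeights` catches a weight file that does not match the network layout, a case the `fscanf` loop in `LoadWeights` does not detect.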

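(4) Character recognition: each input character is preprocessed as in step (2) and then fed to the convolutional network trained in step (3). The output obtained by forward propagation through the network is the recognition result for that character.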
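
The recognition step reduces to three small operations: scale the pixels, pick the strongest output, and map its index to a character. The scaling formula below is the one used in the `Calculate` function in this document. The function names and the label string passed in the usage note are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Scale an 8-bit gray value to the network input range, as in Calculate():
// 255 maps to -1.0 and 0 maps to 0.9921875.
double toNetworkInput(unsigned char gray)
{
    return (255 - gray) / 128.0 - 1.0;
}

// Index of the output feature map with the highest value.
std::size_t argmax(const std::vector<double>& scores)
{
    assert(!scores.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < scores.size(); ++i)
        if (scores[i] > scores[best]) best = i;
    return best;
}

// Character for a class index, or '\0' when the index has no label.
char labelFor(std::size_t index, const std::string& labels)
{
    return index < labels.size() ? labels[index] : '\0';
}
```

For example, `labelFor(argmax(outputs), "0123456789")` returns the recognized digit for a ten-class network.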

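#include <iostream>

#include <string>

#include <vector>

#include <io.h> /* _findfirst, _findnext, _findclose */

#include <windows.h> /* SYSTEMTIME, GetLocalTime */

#include "opencv2/core/core.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <time.h> /* needed for the time() function */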

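//this check is important: skip the "." and ".." entries

if( (strcmp(FileInfo.name,".") != 0 ) &&(strcmp(FileInfo.name,"..") != 0)) {

string newPath = folderPath + "\\" + FileInfo.name;

cout << FileInfo.name << " " << newPath << endl;

//the name of each subdirectory under the root is the label; without subdirectories the files sit directly under the root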

labelTemp = atoi(FileInfo.name);

// printf("%d\n",labelTemp);

dfsFolder(newPath);

}

}else {

string finalName = folderPath + "\\" + FileInfo.name;

//write all file names to a txt file

// cout << FileInfo.name << "\t";

// printf("%d\t",label);

// cout << folderPath << "\\" << FileInfo.name << " " <

//store the file name (the label is the subdirectory name) in the vector

imgNames.push_back(finalName);

}

}while (_findnext(Handle, &FileInfo) == 0);

_findclose(Handle);

}

void initTrainImage(){

readConfig(configFile, trainSetPosPath);

string folderPath = trainSetPosPath;

dfsFolder(folderPath);

}

//initialization for CNN recognition

void init(){

m_bTestSetOpen = m_bTrainingSetOpen = false;

m_bStatusTraining = false;

m_bStatusTesting = false;

m_iCountTotal = 0;

m_iCountError = 0;

m_dDispX = new double[g_cVectorSize * g_cVectorSize];

m_dDispY = new double[g_cVectorSize * g_cVectorSize];

m_etaLearningRate = 0.00005;

}

void Calculate(IplImage *img){

//// Also create a color image (for display)

//IplImage *colorimg = cvCreateImage( inputsz, IPL_DEPTH_8U, 3 );

//get image data

int index = 0;

for(int i = 0; i < img->height; ++i){

for(int j = 0; j < img->width; ++j){

index = i*img->width + j;

grayArray[index] = ((uchar*)(img->imageData + i*img->widthStep))[j] ; //copy the pixel value

}

}

int ii, jj;

//copy gray scale image to a double input vector in -1 to 1 range

// one is white, -one is black

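for ( ii=0; ii<g_cVectorSize * g_cVectorSize; ++ii ) inputVector[ ii ] = 1.0 ;

for ( ii=0; ii<g_cImageSize; ++ii )

{

for ( jj=0; jj<g_cImageSize; ++jj )

{

int idxVector = 1 + jj + g_cVectorSize * (1 + ii);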

int idxImage = jj + g_cImageSize * ii;

inputVector[ idxVector ] = double(255 - grayArray[ idxImage ])/128.0 - 1.0;

}

}

//call forward propagation function of CNN

m_iOutput = m_cnn.Calculate(inputVector, outputVector);

}

uchar lut[256];

CvMat* lut_mat;

IplImage * dehist(IplImage * src_image, int _brightness, int _contrast){

IplImage *dst_image = cvCreateImage(cvGetSize(src_image),8,src_image->nChannels);

cvCopy(src_image,dst_image);

int brightness = _brightness - 100;

int contrast = _contrast - 100;

int i;

float max_value = 0;

if( contrast > 0 )

{

double delta = 127.*contrast/100;

double a = 255./(255. - delta*2);

double b = a*(brightness - delta);

for( i = 0; i < 256; i++ )

{

int v = cvRound(a*i + b);

if( v < 0 )

v = 0;

if( v > 255 )

v = 255;

lut[i] = (uchar)v;

}

}

else

{

double delta = -128.*contrast/100;

double a = (256.-delta*2)/255.;

double b = a*brightness + delta;

for( i = 0; i < 256; i++ )

{

int v = cvRound(a*i + b);

if( v < 0 )

v = 0;

if( v > 255 )

v = 255;

lut[i] = (uchar)v;

}

}

lut_mat = cvCreateMatHeader( 1, 256, CV_8UC1 );

cvSetData( lut_mat, lut, 0 );

cvLUT( src_image, dst_image, lut_mat );

if(showSteps)

cvShowImage( "dst_image", dst_image );

IplImage* gray_image = cvCreateImage(cvGetSize(src_image),8,1);

cvCvtColor(dst_image, gray_image, CV_BGR2GRAY);

IplImage* bin_image = cvCreateImage(cvGetSize(src_image),8,1);

// int blockSize = 11;

// int constValue = 9;

// cvAdaptiveThreshold(gray_image, bin_image, 255, CV_ADAPTIVE_THRESH_MEAN_C, CV_THRESH_BINARY, blockSize, constValue);

cvThreshold(gray_image,bin_image,1,255,CV_THRESH_BINARY+CV_THRESH_OTSU);

if(showSteps)

cvShowImage( "bin_image", bin_image );

// OpenClose(bin_image,bin_image,-1);

//cvErode(bin_image,bin_image,element,1);

//cvDilate(bin_image,bin_image,element,8);

cvReleaseImage(&dst_image);

cvReleaseImage(&gray_image);

return bin_image;

}

IplImage * projectY(IplImage * src){

IplImage *imgBin = cvCreateImage(cvGetSize(src),8,src->nChannels);

cvCopy(src,imgBin);

cvNot(src,imgBin);

//project onto the Y axis to find the rows occupied by the characters

IplImage* painty=cvCreateImage( cvGetSize(imgBin),IPL_DEPTH_8U, 1 );

cvZero(painty);

int* h=new int[imgBin->height];

memset(h,0,imgBin->height*sizeof(int));

int x,y;

CvScalar s,t;

for(y=0;y<imgBin->height;y++)

{

for(x=0;x<imgBin->width;x++)

{

s=cvGet2D(imgBin,y,x);

if(s.val[0]==0)

h[y]++;

}

}

//after the Y projection, rows with at most 13 non-zero pixels are treated as empty

for(y=0;y<imgBin->height;y++) {

if((imgBin->width-h[y]) <= 13)

h[y] = imgBin->width;

// printf("%d ",imgBin->width-h[y]);

}

//erase very narrow segments on the Y axis, i.e. long thin horizontal lines

for(x=0;x<painty->height;x++) {

for(y=x;y<painty->height;y++) {

if( (h[x] == h[y])&&(h[y] == painty->width)&&(y-x <= 3) ){

for(int i=x;i<=y;i++){

h[i] = painty->width;

}

}

if( (h[x] != painty->width)&&(h[y] == painty->width)&&(y-x <= 3) ){

for(int i=x;i<=y;i++){

h[i] = painty->width;

}

}

}

}

for(y=0;y<painty->height;y++)

{

for(x=0;x<h[y];x++)

{

t.val[0]=255;

cvSet2D(painty,y,x,t);

}

}

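//find the first and last character rows on the Y axis to get the height of the character region

//find the coordinates of the two ends in painty

int xLeft = 0;

for(x=0;x<painty->height-2;x++){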

if ( cvGet2D(painty,x,painty->width - 1).val[0]== 0 ){

xLeft = x;

break;

}

if( (cvGet2D(painty,x,painty->width - 1).val[0] == 255)&&(cvGet2D(painty,x+1,painty->width - 1).val[0] == 0) ){

xLeft = x;

break;

}

}

if(showSteps)

cout<<"first row of the character region: "<<xLeft<<endl;

int xRight = 0;

for(x=painty->height-1; x>0 ;x--){

if ( cvGet2D(painty,x,painty->width - 1).val[0]== 0 ){

xRight = x;

break;

}

if( (cvGet2D(painty,x,painty->width - 1).val[0]== 255)&&(cvGet2D(painty,x-1,painty->width - 1).val[0] == 0) ){

xRight = x;

break;

}

}

if(xRight == 0) xRight = painty->height;

if(showSteps)

cout<<"last row of the character region: "<<xRight<<endl;

if(showSteps){

cvNamedWindow("horizontal projection",1);

cvShowImage("horizontal projection",painty);

}

IplImage * image = cvCreateImage(cvSize((src->width),xRight - xLeft + 4),8,src->nChannels);

CvRect rect;

rect.height = xRight - xLeft+4 ;

rect.width = src->width;

rect.x = 0;

rect.y = xLeft-2;

cvSetImageROI(src,rect);

cvCopy(src,image);

cvResetImageROI(src);

cvReleaseImage(&imgBin);

// cvReleaseImage(&src);

cvReleaseImage(&painty);

cvNamedWindow("image1",1);

cvShowImage("image1",image);

return image;

}

IplImage* projectX(IplImage * src){

// cvSmooth(src,src,CV_BLUR,3,3,0,0);

IplImage * srcTemp = cvCreateImage(cvGetSize(src),8,src->nChannels);

cvCopy(src,srcTemp);

cvThreshold(src,src,50,255,CV_THRESH_BINARY_INV+CV_THRESH_OTSU);

IplImage* paintx=cvCreateImage(cvGetSize(src),IPL_DEPTH_8U, 1 );

cvZero(paintx);

int* v=new int[src->width];

int tempx=src->height;

// CvPoint xPoint;

int tempy=src->width;

memset(v,0,src->width*sizeof(int));

int x,y;

CvScalar s,t;

for(x=0;x<src->width;x++){

for(y=0;y<src->height;y++){

s=cvGet2D(src,y,x);

if(s.val[0]==0)

v[x]++;

}

}

//after the X projection, columns with at most 3 non-zero pixels are treated as empty

for(x=0;x<src->width;x++) {

if((src->height-v[x]) <= 3)

v[x] = src->height;

// printf("%d ",src->height-v[x]);

}

//remove very thin vertical black lines that appear after the projection

for(x=0;x<paintx->width;x++) {

for(y=x;y<paintx->width;y++) {

if( (v[x] == v[y])&&(v[y] == paintx->height)&&(y-x < 3) ){

for(int i=x;i<=y;i++){

v[i] = paintx->height;

}

}

if( (v[x] != paintx->height)&&(v[y] == paintx->height)&&(y-x <= 3) ){

for(int i=x;i<=y;i++){

v[i] = paintx->height;

}

}

}

}

for(x=0;x<paintx->width;x++){

for(y=0;y<v[x];y++){

t.val[0]=255;

cvSet2D(paintx,y,x,t);

}

}

//find the x coordinates of the two ends in paintx

int xLeft = 0;

for(x=0;x<paintx->width-1;x++){

if( (cvGet2D(paintx,paintx->height - 3,x).val[0] == 255)&&(cvGet2D(paintx,paintx->height - 3,x+1).val[0] == 0) ){

xLeft = x;

break;

}

}

if(showSteps)

cout<<"left edge of the character line: "<<xLeft<<endl;

int xRight = 0;

for(x=paintx->width-1; x>0 ;x--){

if( (cvGet2D(paintx,paintx->height - 1,x).val[0]== 255)&&(cvGet2D(paintx,paintx->height - 1,x-1).val[0] == 0) ){

xRight = x;

break;

}

}

if(showSteps)

cout<<"right edge of the character line: "<<xRight<<endl;

if(showSteps){

cvNamedWindow("binary image",1);

cvNamedWindow("vertical projection",1);

cvShowImage("binary image",src);

cvShowImage("vertical projection",paintx);

}

IplImage * image = cvCreateImage(cvSize((xRight - xLeft),src->height),8,src->nChannels);

CvRect rect;

rect.height = src->height;

rect.width = xRight - xLeft;

rect.x = xLeft;

rect.y = 0;

cvSetImageROI(srcTemp,rect);

cvCopy(srcTemp,image);

cvResetImageROI(srcTemp);

cvReleaseImage(&src);

cvReleaseImage(&srcTemp);

cvReleaseImage(&paintx);

//cvNamedWindow("image",1);

//cvShowImage("image",image);

//cvWaitKey(0);

return image;

}

char recVifcode(IplImage *src){

// Grayscale img pointer

if (!src){

cout << "ERROR: Bad image file: " << endl;

// continue;

}

CvSize inputsz = cvSize(28,28);

// Grayscale img pointer

IplImage* img = cvCreateImage( inputsz, IPL_DEPTH_8U, 1 );

// create some GUI

if(showSteps){

cvNamedWindow("Image", CV_WINDOW_AUTOSIZE);

cvMoveWindow("Image", inputsz.height, inputsz.width);

}

cvResize(src,img,CV_INTER_CUBIC);

Calculate(img);

if(showSteps){

cvShowImage("Image",img);

}

/*IplImage* imgDistorted = cvCreateImage( inputsz, IPL_DEPTH_8U, 1 );

cvCopy(img,imgDistorted);

for(int i = 0; i < imgDistorted->height; ++i){

for(int j = 0; j < imgDistorted->width; ++j){

((uchar*)(imgDistorted->imageData + i*imgDistorted->widthStep))[j] =

(unsigned char) int (255 - 255 * (inputVector[(i+1)*g_cVectorSize + j + 1] + 1)/2);

}

}

if(showSteps){

cvNamedWindow("imgDistorted", CV_WINDOW_AUTOSIZE);

cvShowImage("imgDistorted",imgDistorted);

}*/

char outputChar = '\0';

if(m_iOutput == 0) outputChar = '0';

if(m_iOutput == 1) outputChar = '1';

if(m_iOutput == 2) outputChar = '2';

if(m_iOutput == 3) outputChar = '3';

if(m_iOutput == 4) outputChar = '4';

if(m_iOutput == 5) outputChar = '5';

if(m_iOutput == 6) outputChar = '6';

if(m_iOutput == 7) outputChar = '7';

if(m_iOutput == 8) outputChar = '8';

if(m_iOutput == 9) outputChar = '9';

if(m_iOutput == 10) outputChar = 'A';

if(m_iOutput == 11) outputChar = 'B';

if(m_iOutput == 12) outputChar = 'U';

if(m_iOutput == 13) outputChar = 'D';

if(m_iOutput == 14) outputChar = 'P';

if(m_iOutput == 15) outputChar = 'R';

if(m_iOutput == 16) outputChar = 'S';

if(m_iOutput == 17) outputChar = 'T';

if(m_iOutput == 18) outputChar = 'U';

if(m_iOutput == 19) outputChar = 'X';

if(m_iOutput == 20) outputChar = 'Y';

return outputChar;

}

void saveImages(IplImage * image){

SYSTEMTIME stTime;

GetLocalTime(&stTime);

char pVideoName[256];

sprintf(pVideoName, ".\\%d_%d_%d_%d_%d_%d_%d", stTime.wYear, stTime.wMonth, stTime.wDay, stTime.wHour, stTime.wMinute, stTime.wSecond, stTime.wMilliseconds);

char image_name[500] ;

sprintf_s(image_name,500, "%s%s%s", "result\\", pVideoName, ".bmp");//name of the saved image

cvSaveImage(image_name, image);

}

vector<char> segChar(IplImage * src,int x){

vector<char>imageRecLine1; //recognition results for the first line

vector<char>imageRecLine2;//recognition results for the second line

if((x!=1)&&(x!=2)){

printf("error! x must be 1 (first line) or 2 (second line)!");

return vector<char>();

}

// cvErode(src,src,element,1);

if(showSteps)

cvShowImage( "src", src );

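//project in the vertical direction to find the start and end of the character line, used to split out single characters

IplImage * image = projectX(src);

if(x == 1){//first line

//================ check whether the first character is 1 ===========================/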

CvRect rectFirst;

rectFirst.height = image->height;

rectFirst.width = image->width/numLine1;

rectFirst.x = 0;

rectFirst.y = 0;

IplImage * imageRoiFirst = cvCreateImage(cvSize(rectFirst.width,rectFirst.height),8,image->nChannels);

cvSetImageROI(image,rectFirst);

cvCopy(image,imageRoiFirst);

cvResetImageROI(image);

if(saveImage)

saveImages(imageRoiFirst);

char recResultFirst = recVifcode(imageRoiFirst);

if(recResultFirst == '1'){

imageRecLine1.push_back(recResultFirst);//store the leading '1' of the first line

const int firstLen = 20;

for(int i=0;i<numLine1-1 ;i++){

CvRect rect;

rect.height = image->height;

rect.width = (image->width-firstLen)/(numLine1-1);

rect.x = i*(image->width-firstLen)/(numLine1-1) + firstLen;

rect.y = 0;

IplImage * imageRoi = cvCreateImage(cvSize(rect.width,rect.height),8,image->nChannels);

cvSetImageROI(image,rect);

cvCopy(image,imageRoi);

cvResetImageROI(image);

cvShowImage("1",imageRoi);

cvWaitKey(0);

if(saveImage)

saveImages(imageRoi);

//call the recognition code

char recResult = recVifcode(imageRoi);

imageRecLine1.push_back(recResult);//store the first-line results

}

}else{

for(int i=0;i<numLine1;i++){

CvRect rect;

rect.height = image->height;

rect.width = image->width/numLine1;

rect.x = i*image->width/numLine1;

rect.y = 0;

IplImage * imageRoi = cvCreateImage(cvSize(rect.width,rect.height),8,image->nChannels);

cvSetImageROI(image,rect);

cvCopy(image,imageRoi);

cvResetImageROI(image);

cvShowImage("1",imageRoi);

cvWaitKey(0);

if(saveImage)

saveImages(imageRoi);

//call the recognition code

char recResult = recVifcode(imageRoi);

imageRecLine1.push_back(recResult);//store the first-line results

}

}

return imageRecLine1;

}

if(x == 2){//second line

//================ check whether the first character is 1 ===========================/

CvRect rectFirst;

rectFirst.height = image->height;

rectFirst.width = image->width/numLine2;

rectFirst.x = 0;

rectFirst.y = 0;

IplImage * imageRoiFirst = cvCreateImage(cvSize(rectFirst.width,rectFirst.height),8,image->nChannels);

cvSetImageROI(image,rectFirst);

cvCopy(image,imageRoiFirst);

cvResetImageROI(image);

if(saveImage)

saveImages(imageRoiFirst);

char recResultFirst = recVifcode(imageRoiFirst);

if(recResultFirst == '1'){

imageRecLine2.push_back(recResultFirst);//store the leading '1' of the second line

const int firstLen = 20;

for(int i=0;i<numLine2-1 ;i++){

CvRect rect;

rect.height = image->height;

rect.width = (image->width-firstLen)/(numLine2-1);

rect.x = i*(image->width-firstLen)/(numLine2-1) + firstLen;

rect.y = 0;

IplImage * imageRoi = cvCreateImage(cvSize(rect.width,rect.height),8,image->nChannels);

cvSetImageROI(image,rect);

cvCopy(image,imageRoi);

cvResetImageROI(image);

cvShowImage("1",imageRoi);

cvWaitKey(0);

if(saveImage)

saveImages(imageRoi);

//call the recognition code

char recResult = recVifcode(imageRoi);

imageRecLine2.push_back(recResult);//store the second-line results

}

}else{

for(int i=0;i<numLine2;i++){

CvRect rect;

rect.height = image->height;

rect.width = image->width/numLine2;

rect.x = i*image->width/numLine2;

rect.y = 0;

IplImage * imageRoi = cvCreateImage(cvSize(rect.width,rect.height),8,image->nChannels);

cvSetImageROI(image,rect);

cvCopy(image,imageRoi);

cvResetImageROI(image);

cvShowImage("1",imageRoi);

cvWaitKey(0);

if(saveImage)

saveImages(imageRoi);

//call the recognition code

char recResult = recVifcode(imageRoi);

imageRecLine2.push_back(recResult);//store the second-line results

}

}

return imageRecLine2;

}

}

void printResult(vector<char> imageRecLine){

int recNum = imageRecLine.size();

for(int iNum=0;iNum<recNum;iNum++){

cout << imageRecLine[iNum];

}

cout << endl;

}

void processingTotal(){

initTrainImage();

char * weightFileName = "Weight.txt";

m_cnn.LoadWeights(weightFileName);

int imgNum = imgNames.size();

for(int iNum=0;iNum<imgNum;iNum++){

cout<<imgNames[iNum].c_str()<<endl;

IplImage * src = cvLoadImage(imgNames[iNum].c_str(),1);

if(!src) continue;

// //////////////////////////////////////////////////////////////////////////////

// Mat image(src);

// Mat dst = image.clone();

//// for ( int i = 1; i < 480; i = i + 2 ){

// int i = 30;

// bilateralFilter ( image, dst, i, i*2, i/2 );

// imshow( "image", image );

// imshow( "dst", dst );

// waitKey ( 0 );

//// }

// ///////////////////////////////////////////////////////////////////////////

getStartTime();

IplImage * tgray = cvCreateImage(cvGetSize(src),8,1);

cvCvtColor(src,tgray,CV_BGR2GRAY);

IplImage * bin_image = dehist(src,83,134);

cvErode(bin_image,bin_image,element,12);

if(showSteps)

cvShowImage( "bin_image1", bin_image );

CvMemStorage *mems=cvCreateMemStorage();

CvSeq *contours;

cvFindContours( bin_image, mems,

&contours,sizeof(CvContour),CV_RETR_LIST, CV_CHAIN_APPROX_SIMPLE);

// cvDrawContours(src, contours, CV_RGB(0,0,255), CV_RGB(255, 0, 0), 2, 2, 8, cvPoint(0,0));

cvClearMemStorage( mems );

if(showSteps){

cvNamedWindow("cvDrawContours",1);

cvShowImage("cvDrawContours",src);

}

CvRect rect;

// char image_name[100];

CvSeq* first_seq = contours;

// int image_num = 0;

for( contours=first_seq; contours != 0; contours = contours->h_next ){

rect = cvBoundingRect(contours);

cout<<rect.x<<" "<<rect.y<<" "<<rect.width<<" "<<rect.height<<endl;

//filter the contours by size

if( (rect.width > 440)||(rect.height > 300)||

// (rect.width/rect.height > 5)||

(rect.width*rect.height < 4500)||

(rect.width < 100)||(rect.height < 50)||

(rect.x < 0)||(rect.y < 0)||

(rect.x > src->width)||(rect.y > src->height)

) continue;

else{

CvPoint pt1;pt1.x = rect.x; pt1.y = rect.y;

CvPoint pt2;pt2.x = rect.x + rect.width; pt2.y = rect.y + rect.height;

if(showSteps){

cvRectangle(src,pt1,pt2,CV_RGB(255,0,255),2/*CV_FILLED*/,CV_AA,0);

cout<<rect.x<<" "<<rect.y<<" "<<rect.width<<" "<<rect.height<<endl;

}

if(showSteps){

cvNamedWindow("cvDrawContours",1);

cvShowImage("cvDrawContours",src);

}

//after cropping the character region, binarize and filter the small image, then take the ROI and deskew it

CvRect rectTemple;

rectTemple.x = rect.x - 5;

rectTemple.y = rect.y - 5;

rectTemple.width = rect.width + 10;

rectTemple.height = rect.height + 10;

IplImage* tempImage = cvCreateImage(cvSize(rectTemple.width,rectTemple.height),8,1);

cvSetImageROI(tgray,rectTemple);

cvCopy(tgray,tempImage);

cvResetImageROI(tgray);

cvThreshold(tempImage,tempImage,1,255,CV_THRESH_BINARY+CV_THRESH_OTSU);

if(showSteps){

cvNamedWindow("tempImage",1);

cvShowImage("tempImage",tempImage);

}

CvBox2D box_outer = cvMinAreaRect2(contours);

//deskew tempImage by rotation

IplImage* tempImageRotate = cvCreateImage(cvGetSize(tempImage),8,1);

float m[6];

// Matrix m looks like:

//

// [ m0 m1 m2 ] ===> [ A11 A12 b1 ]

// [ m3 m4 m5 ] [ A21 A22 b2 ]

//

CvMat M = cvMat (2, 3, CV_32F, m);

int w = tempImage->width;

int h = tempImage->height;

if( (box_outer.angle+90.)<45 ){

// rotation only

int factor = 1;

m[0] = (float) (factor * cos ((-1)*(box_outer.angle+90.)/2. * 2 * CV_PI / 180.));

m[1] = (float) (factor * sin ((-1)*(box_outer.angle+90.)/2. * 2 * CV_PI / 180.));

m[3] = (-1)*m[1];

m[4] = m[0];

// move the rotation centre to the middle of the image

m[2] = w * 0.5f;

m[5] = h * 0.5f;

// dst(x,y) = A * src(x,y) + b

}

if( (box_outer.angle+90.)>45 ){

// rotation only

int factor = 1;

m[0] = (float) (factor * cos ((-1)*(box_outer.angle)/2. * 2 * CV_PI / 180.));

m[1] = (float) (factor * sin ((-1)*(box_outer.angle)/2. * 2 * CV_PI / 180.));

m[3] = (-1)*m[1];

m[4] = m[0];

// move the rotation centre to the middle of the image

m[2] = w * 0.5f;

m[5] = h * 0.5f;

// dst(x,y) = A * src(x,y) + b

}

cvZero (tempImageRotate);

cvGetQuadrangleSubPix (tempImage, tempImageRotate, &M);

if(showSteps){

cvNamedWindow("ROI",1);

cvShowImage("ROI",tempImageRotate);

}

cvReleaseImage( &tempImage );

//==================== project tempImageRotate onto the Y axis ===================//

IplImage* imageCharOk = projectY(tempImageRotate);

//split into the top and bottom lines by halving the height

CvRect rectTop;

rectTop.x = 0;

rectTop.y = 0;

rectTop.width = imageCharOk->width ;

rectTop.height = imageCharOk->height/2;

IplImage* imageCharOkTop = cvCreateImage(cvSize(rectTop.width,rectTop.height),8,1);

cvSetImageROI(imageCharOk,rectTop);

cvCopy(imageCharOk,imageCharOkTop);

cvResetImageROI(imageCharOk);

if(showSteps){

cvNamedWindow("imageCharOkTop",1);

cvShowImage("imageCharOkTop",imageCharOkTop);

}

vector<char> results = segChar(imageCharOkTop,1);

printResult(results);

CvRect rectBottom;

rectBottom.x = 0;

rectBottom.y = 0 + imageCharOk->height/2;

rectBottom.width = imageCharOk->width ;

rectBottom.height = imageCharOk->height/2;

IplImage* imageCharOkBottom = cvCreateImage(cvSize(rectBottom.width,rectBottom.height),8,1);

cvSetImageROI(imageCharOk,rectBottom);

cvCopy(imageCharOk,imageCharOkBottom);

cvResetImageROI(imageCharOk);

if(showSteps){

cvNamedWindow("imageCharOkBottom",1);

cvShowImage("imageCharOkBottom",imageCharOkBottom);

}

vector<char> results2 = segChar(imageCharOkBottom,2);

printResult(results2);

}

}

getEndTime();

printf("%f ms\n",dfTim);

cvWaitKey(0);

cvReleaseImage( &src );

cvReleaseImage( &tgray );

cvReleaseImage( &bin_image );

}

}

int main(){

init();

processingTotal();

}