(2) V4L2: the order of operations in the application layer

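Workflow:

Open the device --> query and set the device properties --> set the frame format --> choose an I/O method (buffer management) --> fetch data in a loop --> close the device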

1. Open the video device

fd = open("/dev/video0", O_RDWR);

2. Query the video device capabilities: VIDIOC_QUERYCAP

struct v4l2_capability cap;

ioctl(fd, VIDIOC_QUERYCAP, &cap);

if (!(cap.capabilities & V4L2_CAP_VIDEO_CAPTURE)) {

fprintf(stderr, "not a video capture device\n");

return -1;

}

struct v4l2_capability {

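__u8 driver[16]; /* driver name */

__u8 card[32]; /* device name */

__u8 bus_info[32]; /* location of the device in the system */

__u32 version; /* driver version number */

__u32 capabilities; /* operations the device supports */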

__u32 device_caps;

__u32 reserved[3];

};

/* Values for 'capabilities' field */

#define V4L2_CAP_VIDEO_CAPTURE 0x00000001 /* Is a video capture device */

#define V4L2_CAP_VIDEO_OUTPUT 0x00000002 /* Is a video output device */

#define V4L2_CAP_VIDEO_OVERLAY 0x00000004 /* Can do video overlay */

#define V4L2_CAP_VBI_CAPTURE 0x00000010 /* Is a raw VBI capture device */

#define V4L2_CAP_VBI_OUTPUT 0x00000020 /* Is a raw VBI output device */

#define V4L2_CAP_SLICED_VBI_CAPTURE 0x00000040 /* Is a sliced VBI capture device */

#define V4L2_CAP_SLICED_VBI_OUTPUT 0x00000080 /* Is a sliced VBI output device */

#define V4L2_CAP_RDS_CAPTURE 0x00000100 /* RDS data capture */

#define V4L2_CAP_VIDEO_OUTPUT_OVERLAY 0x00000200 /* Can do video output overlay */

#define V4L2_CAP_HW_FREQ_SEEK 0x00000400 /* Can do hardware frequency seek */

#define V4L2_CAP_RDS_OUTPUT 0x00000800 /* Is an RDS encoder */

The flag we usually need is V4L2_CAP_VIDEO_CAPTURE.

The underlying driver call, for example:

static int vidioc_querycap(struct file *file, void *priv,

struct v4l2_capability *cap)

{

struct vivid_dev *dev = video_drvdata(file);

strcpy(cap->driver, "vivid");

strcpy(cap->card, "vivid");

snprintf(cap->bus_info, sizeof(cap->bus_info),

"platform:%s", dev->v4l2_dev.name);

cap->capabilities = dev->vid_cap_caps | dev->vid_out_caps |

dev->vbi_cap_caps | dev->vbi_out_caps |

dev->radio_rx_caps | dev->radio_tx_caps |

dev->sdr_cap_caps | V4L2_CAP_DEVICE_CAPS;

return 0;

}

3. Enumerate the formats supported by the video device // usually optional; you can set the format directly

VIDIOC_ENUM_FMT

int ioctl(int fd, int request, struct v4l2_fmtdesc *argp);

/*

* F O R M A T E N U M E R A T I O N

*/

struct v4l2_fmtdesc {

__u32 index; /* Format number */

__u32 type; /* enum v4l2_buf_type */

__u32 flags;

__u8 description[32]; /* Description string */

__u32 pixelformat; /* Format fourcc */

__u32 reserved[4];

};

4. Set the video capture format

1. Set the camera format

2. Set the image width, height, and pixel format

// VIDIOC_S_FMT

my_fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE ;

my_fmt.fmt.pix.width = 720;

my_fmt.fmt.pix.height = 576;

my_fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;

ioctl(fd, VIDIOC_S_FMT, &my_fmt);

/**

* struct v4l2_format - stream data format

* @type: enum v4l2_buf_type; type of the data stream

* @pix: definition of an image format

* @pix_mp: definition of a multiplanar image format

* @win: definition of an overlaid image

* @vbi: raw VBI capture or output parameters

* @sliced: sliced VBI capture or output parameters

* @raw_data: placeholder for future extensions and custom formats

*/

struct v4l2_format {

__u32 type;

union {

struct v4l2_pix_format pix; /* V4L2_BUF_TYPE_VIDEO_CAPTURE */

struct v4l2_pix_format_mplane pix_mp; /* V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE */

struct v4l2_window win; /* V4L2_BUF_TYPE_VIDEO_OVERLAY */

struct v4l2_vbi_format vbi; /* V4L2_BUF_TYPE_VBI_CAPTURE */

struct v4l2_sliced_vbi_format sliced; /* V4L2_BUF_TYPE_SLICED_VBI_CAPTURE */

struct v4l2_sdr_format sdr; /* V4L2_BUF_TYPE_SDR_CAPTURE */

struct v4l2_meta_format meta; /* V4L2_BUF_TYPE_META_CAPTURE */

__u8 raw_data[200]; /* user-defined */

} fmt;

};

/*

* V I D E O I M A G E F O R M A T

*/

struct v4l2_pix_format {

__u32 width;

__u32 height;

__u32 pixelformat;

__u32 field; /* enum v4l2_field */

__u32 bytesperline; /* for padding, zero if unused */

__u32 sizeimage;

__u32 colorspace; /* enum v4l2_colorspace */

__u32 priv; /* private data, depends on pixelformat */

__u32 flags; /* format flags (V4L2_PIX_FMT_FLAG_*) */

union {

/* enum v4l2_ycbcr_encoding */

__u32 ycbcr_enc;

/* enum v4l2_hsv_encoding */

__u32 hsv_enc;

};

__u32 quantization; /* enum v4l2_quantization */

__u32 xfer_func; /* enum v4l2_xfer_func */

};

/* RGB formats */

#define V4L2_PIX_FMT_RGB332 v4l2_fourcc('R', 'G', 'B', '1') /* 8 RGB-3-3-2 */

#define V4L2_PIX_FMT_RGB444 v4l2_fourcc('R', '4', '4', '4') /* 16 xxxxrrrr ggggbbbb */

#define V4L2_PIX_FMT_ARGB444 v4l2_fourcc('A', 'R', '1', '2') /* 16 aaaarrrr ggggbbbb */

#define V4L2_PIX_FMT_XRGB444 v4l2_fourcc('X', 'R', '1', '2') /* 16 xxxxrrrr ggggbbbb */

#define V4L2_PIX_FMT_RGB555 v4l2_fourcc('R', 'G', 'B', 'O') /* 16 RGB-5-5-5 */

#define V4L2_PIX_FMT_ARGB555 v4l2_fourcc('A', 'R', '1', '5') /* 16 ARGB-1-5-5-5 */

#define V4L2_PIX_FMT_XRGB555 v4l2_fourcc('X', 'R', '1', '5') /* 16 XRGB-1-5-5-5 */

#define V4L2_PIX_FMT_RGB565 v4l2_fourcc('R', 'G', 'B', 'P') /* 16 RGB-5-6-5 */

....

/* Grey formats */

#define V4L2_PIX_FMT_GREY v4l2_fourcc('G', 'R', 'E', 'Y') /* 8 Greyscale */

#define V4L2_PIX_FMT_Y4 v4l2_fourcc('Y', '0', '4', ' ') /* 4 Greyscale */

#define V4L2_PIX_FMT_Y6 v4l2_fourcc('Y', '0', '6', ' ') /* 6 Greyscale */

#define V4L2_PIX_FMT_Y10 v4l2_fourcc('Y', '1', '0', ' ') /* 10 Greyscale */

#define V4L2_PIX_FMT_Y12 v4l2_fourcc('Y', '1', '2', ' ') /* 12 Greyscale */

....

The underlying driver call, for example:

static int vidioc_s_fmt_vid_cap(struct file *file, void *priv,

struct v4l2_format *f)

{

struct comp_fh *fh = priv;

struct most_video_dev *mdev = fh->mdev;

return comp_set_format(mdev, VIDIOC_S_FMT, f);

}

static int comp_set_format(struct most_video_dev *mdev, unsigned int cmd,

struct v4l2_format *format)

{

if (format->fmt.pix.pixelformat != V4L2_PIX_FMT_MPEG)

return -EINVAL;

if (cmd == VIDIOC_TRY_FMT)

return 0;

comp_set_format_struct(format);

return 0;

}

static void comp_set_format_struct(struct v4l2_format *f)

{

f->fmt.pix.width = 8;

f->fmt.pix.height = 8;

f->fmt.pix.pixelformat = V4L2_PIX_FMT_MPEG;

f->fmt.pix.bytesperline = 0;

f->fmt.pix.sizeimage = 188 * 2;

f->fmt.pix.colorspace = V4L2_COLORSPACE_REC709;

f->fmt.pix.field = V4L2_FIELD_NONE;

f->fmt.pix.priv = 0;

}

5. Get the video capture format // check that your setting took effect; this step is also optional

VIDIOC_G_FMT

The underlying driver call, for example:

static int vidioc_g_fmt_vid_cap(struct file *file, void *priv,

struct v4l2_format *f)

{

comp_set_format_struct(f);

return 0;

}

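Note that this driver simply fills in the same fixed format again here!

6. Request video buffers in kernel space

1. The number of buffers to request

2. The buffer type and the memory mapping method

// VIDIOC_REQBUFS

struct v4l2_requestbuffers my_requestbuffer;

memset(&my_requestbuffer, 0, sizeof(my_requestbuffer));

my_requestbuffer.count = 4;

my_requestbuffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

my_requestbuffer.memory = V4L2_MEMORY_MMAP;

ioctl(fd, VIDIOC_REQBUFS, &my_requestbuffer);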

struct v4l2_requestbuffers {

__u32 count;

__u32 type; /* enum v4l2_buf_type */

__u32 memory; /* enum v4l2_memory */

__u32 reserved[2];

};

enum v4l2_buf_type { /* see the structures above */

V4L2_BUF_TYPE_VIDEO_CAPTURE = 1,

V4L2_BUF_TYPE_VIDEO_OUTPUT = 2,

V4L2_BUF_TYPE_VIDEO_OVERLAY = 3,

V4L2_BUF_TYPE_VBI_CAPTURE = 4,

V4L2_BUF_TYPE_VBI_OUTPUT = 5,

V4L2_BUF_TYPE_SLICED_VBI_CAPTURE = 6,

V4L2_BUF_TYPE_SLICED_VBI_OUTPUT = 7,

V4L2_BUF_TYPE_VIDEO_OUTPUT_OVERLAY = 8,

V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE = 9,

V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE = 10,

V4L2_BUF_TYPE_SDR_CAPTURE = 11,

V4L2_BUF_TYPE_SDR_OUTPUT = 12,

V4L2_BUF_TYPE_META_CAPTURE = 13,

/* Deprecated, do not use */

V4L2_BUF_TYPE_PRIVATE = 0x80,

};

enum v4l2_memory { /* see the structures above */

V4L2_MEMORY_MMAP = 1,

V4L2_MEMORY_USERPTR = 2,

V4L2_MEMORY_OVERLAY = 3,

V4L2_MEMORY_DMABUF = 4,

};

The underlying driver call, for example:

/* vb2 ioctl helpers */

int vb2_ioctl_reqbufs(struct file *file, void *priv,

struct v4l2_requestbuffers *p)

{

struct video_device *vdev = video_devdata(file);

int res = vb2_verify_memory_type(vdev->queue, p->memory, p->type);

if (res)

return res;

if (vb2_queue_is_busy(vdev, file))

return -EBUSY;

res = vb2_core_reqbufs(vdev->queue, p->memory, &p->count);

/* If count == 0, then the owner has released all buffers and he

is no longer owner of the queue. Otherwise we have a new owner. */

if (res == 0)

vdev->queue->owner = p->count ? file->private_data : NULL;

return res;

}

EXPORT_SYMBOL_GPL(vb2_ioctl_reqbufs);

7. Query information about the kernel-space video buffers

struct v4l2_buffer v_buffer;

ioctl(fd, VIDIOC_QUERYBUF, &v_buffer);

struct v4l2_buffer {

__u32 index;

__u32 type;

__u32 bytesused;

__u32 flags;

__u32 field;

struct timeval timestamp;

struct v4l2_timecode timecode;

__u32 sequence;

/* memory location */

__u32 memory;

union {

__u32 offset;

unsigned long userptr;

struct v4l2_plane *planes;

__s32 fd;

} m;

__u32 length;

__u32 reserved2;

__u32 reserved;

};

/**

* struct v4l2_buffer - video buffer info

* @index: id number of the buffer

* @type: enum v4l2_buf_type; buffer type (type == *_MPLANE for

* multiplanar buffers);

* @bytesused: number of bytes occupied by data in the buffer (payload);

* unused (set to 0) for multiplanar buffers

* @flags: buffer informational flags

* @field: enum v4l2_field; field order of the image in the buffer

* @timestamp: frame timestamp

* @timecode: frame timecode

* @sequence: sequence count of this frame

* @memory: enum v4l2_memory; the method, in which the actual video data is

* passed

* @offset: for non-multiplanar buffers with memory == V4L2_MEMORY_MMAP;

* offset from the start of the device memory for this plane,

* (or a "cookie" that should be passed to mmap() as offset)

* @userptr: for non-multiplanar buffers with memory == V4L2_MEMORY_USERPTR;

* a userspace pointer pointing to this buffer

* @fd: for non-multiplanar buffers with memory == V4L2_MEMORY_DMABUF;

* a userspace file descriptor associated with this buffer

* @planes: for multiplanar buffers; userspace pointer to the array of plane

* info structs for this buffer

* @length: size in bytes of the buffer (NOT its payload) for single-plane

* buffers (when type != *_MPLANE); number of elements in the

* planes array for multi-plane buffers

*

* Contains data exchanged by application and driver using one of the Streaming

* I/O methods.

*/

The underlying driver call is as follows:

int vb2_ioctl_querybuf(struct file *file, void *priv, struct v4l2_buffer *p)

{

struct video_device *vdev = video_devdata(file);

/* No need to call vb2_queue_is_busy(), anyone can query buffers. */

return vb2_querybuf(vdev->queue, p);

}

8. Memory-map the buffers with mmap, based on the queried buffer information

struct v4l2_buffer my_buffer; // structure defined by the kernel headers

struct v4l2_buffer {

__u32 index;

__u32 type;

__u32 bytesused;

__u32 flags;

__u32 field;

struct timeval timestamp;

struct v4l2_timecode timecode;

__u32 sequence;

/* memory location */

__u32 memory;

union {

__u32 offset; // offset (one per buffer, 4 buffers here)

unsigned long userptr;

struct v4l2_plane *planes;

__s32 fd;

} m;

__u32 length; // buffer length in bytes

__u32 reserved2;

__u32 reserved;

};

struct mmap_addr{

void *addr;

int length;

};

struct mmap_addr *addr;

addr = calloc(4, sizeof(struct mmap_addr));

for (i = 0; i < 4; i++)

{

memset(&my_buffer, 0, sizeof(my_buffer));

my_buffer.index = i;

my_buffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

my_buffer.memory = V4L2_MEMORY_MMAP;

ioctl(fd, VIDIOC_QUERYBUF, &my_buffer); // query the kernel buffer information

// mmap

addr[i].length = my_buffer.length;

addr[i].addr = mmap(NULL, my_buffer.length, PROT_READ | PROT_WRITE, MAP_SHARED, fd, my_buffer.m.offset);

ioctl(fd, VIDIOC_QBUF, &my_buffer); // queue this empty buffer so the driver can fill it

}

9. Queue an empty video buffer

ioctl(fd, VIDIOC_QBUF, &my_buffer); // queue an empty video buffer

The underlying driver call, for example:

int vb2_ioctl_qbuf(struct file *file, void *priv, struct v4l2_buffer *p)

{

struct video_device *vdev = video_devdata(file);

if (vb2_queue_is_busy(vdev, file))

return -EBUSY;

return vb2_qbuf(vdev->queue, p);

}

int vb2_qbuf(struct vb2_queue *q, struct v4l2_buffer *b)

{

int ret;

if (vb2_fileio_is_active(q)) {

dprintk(1, "file io in progress\n");

return -EBUSY;

}

ret = vb2_queue_or_prepare_buf(q, b, "qbuf");

return ret ? ret : vb2_core_qbuf(q, b->index, b);

}

10. Start video capture

//VIDIOC_STREAMON

int v4l2_type;

v4l2_type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

ioctl(fd, VIDIOC_STREAMON, &v4l2_type);

The underlying driver call, for example:

int vb2_ioctl_streamon(struct file *file, void *priv, enum v4l2_buf_type i)

{

struct video_device *vdev = video_devdata(file);

if (vb2_queue_is_busy(vdev, file))

return -EBUSY;

return vb2_streamon(vdev->queue, i);

}

int vb2_core_streamon(struct vb2_queue *q, unsigned int type):

if (q->queued_count >= q->min_buffers_needed) {

ret = v4l_vb2q_enable_media_source(q);

if (ret)

return ret;

ret = vb2_start_streaming(q);

if (ret)

return ret;

}

static int vb2_start_streaming(struct vb2_queue *q) :

list_for_each_entry(vb, &q->queued_list, queued_entry)

__enqueue_in_driver(vb);

/* Tell the driver to start streaming */

q->start_streaming_called = 1;

ret = call_qop(q, start_streaming, q,

atomic_read(&q->owned_by_drv_count));

/*

* Forcefully reclaim buffers if the driver did not

* correctly return them to vb2.

*/

for (i = 0; i < q->num_buffers; ++i) {

vb = q->bufs[i];

if (vb->state == VB2_BUF_STATE_ACTIVE)

vb2_buffer_done(vb, VB2_BUF_STATE_QUEUED);

}

11. Wait for video capture to complete: select

fd_set rfd;

FD_ZERO(&rfd);

FD_SET(fd, &rfd);

ret = select(fd+1, &rfd, NULL, NULL, NULL);

if(ret > 0){

get_buffer(fd);

}else{

return -1;

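}

12. Dequeue a buffer that already holds video data from the buffer queue and process the data

memset(&my_buffer, 0, sizeof(my_buffer));

my_buffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

my_buffer.memory = V4L2_MEMORY_MMAP;

ioctl(fd, VIDIOC_DQBUF, &my_buffer);

At this point the data can be processed, for example by writing it to a file.

Then queue the now-empty buffer back into the kernel:

ioctl(fd, VIDIOC_QBUF, &my_buffer);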

The underlying driver call, for example:

int vb2_ioctl_dqbuf(struct file *file, void *priv, struct v4l2_buffer *p)

{

struct video_device *vdev = video_devdata(file);

if (vb2_queue_is_busy(vdev, file))

return -EBUSY;

return vb2_dqbuf(vdev->queue, p, file->f_flags & O_NONBLOCK);

}

int vb2_dqbuf(struct vb2_queue *q, struct v4l2_buffer *b, bool nonblocking)

{

int ret;

if (vb2_fileio_is_active(q)) {

dprintk(1, "file io in progress\n");

return -EBUSY;

}

if (b->type != q->type) {

dprintk(1, "invalid buffer type\n");

return -EINVAL;

}

ret = vb2_core_dqbuf(q, NULL, b, nonblocking);

/*

* After calling the VIDIOC_DQBUF V4L2_BUF_FLAG_DONE must be

* cleared.

*/

b->flags &= ~V4L2_BUF_FLAG_DONE;

return ret;

}

EXPORT_SYMBOL_GPL(vb2_dqbuf);

13. Close the video device

// stop streaming, then free the resources

v4l2_type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

ioctl(fd, VIDIOC_STREAMOFF, &v4l2_type);

for(i = 0; i < 4; i++){

munmap(addr[i].addr, addr[i].length);

}

free(addr);

close(fd);

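Other related items:

Setting the video standard and the frame format: standards include PAL and NTSC; the frame format includes the width, height, and so on.

int ioctl(int fd, int request, struct v4l2_fmtdesc *argp);

int ioctl(int fd, int request, struct v4l2_format *argp);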

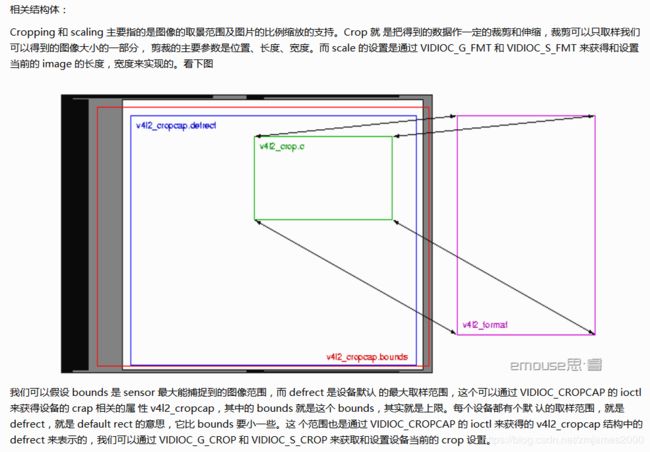

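The v4l2_cropcap structure describes the camera's capture (cropping) capabilities. Before capturing video, first set the type field of v4l2_cropcap.

Then use the VIDIOC_CROPCAP command to obtain the device's capture capability parameters, which are stored in the v4l2_cropcap structure. They include bounds (the top-left coordinates, width, and height of the largest capture rectangle) and defrect (the top-left coordinates, width, and height of the default capture rectangle).

The v4l2_format structure sets the frame format (width, height, pixel format) and is applied with VIDIOC_S_FMT. The video standard (PAL, NTSC) is selected separately with VIDIOC_S_STD.

Image cropping and scaling: VIDIOC_CROPCAP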

int ioctl(int fd, int request, struct v4l2_cropcap *argp);

Reference source: 思睿