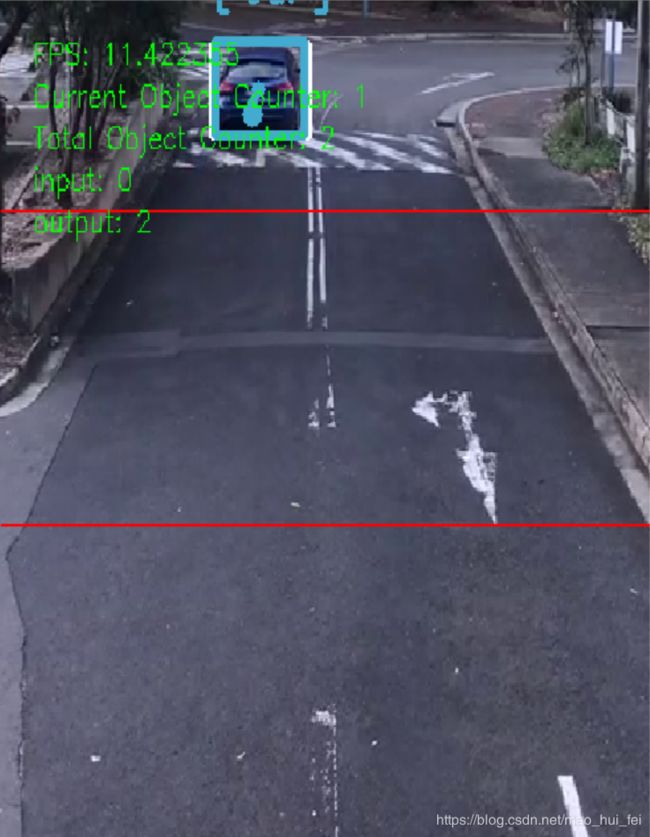

车流统计系统demo暂存

2020.6.27.2:16

加入丢帧策略,可以通过Frame_jiange控制送帧间隔。目前我的显卡2060,1280*720d的图,丢两帧后,几乎可以实时。

#! /usr/bin/env python

# -*- coding: utf-8 -*-

'''

卡尔曼滤波算法分为两个过程,预测和更新。该算法将目标的运动状态定义为8个正态分布的向量。

预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

匈牙利算法:解决的是一个分配问题,在MOT主要步骤中的计算相似度的,得到了前后两帧的相似度矩阵。匈牙利算法就是通过求解这个相似度矩阵,从而解决前后两帧真正匹配的目标。这部分sklearn库有对应的函数linear_assignment来进行求解。

SORT算法中是通过前后两帧IOU来构建相似度矩阵

'''

from __future__ import division, print_function, absolute_import

import os

import datetime

from timeit import time

import warnings

import cv2

import numpy as np

import argparse

from PIL import Image

from yolo import YOLO

from deep_sort import preprocessing

from deep_sort import nn_matching

from deep_sort.detection import Detection

from deep_sort.tracker import Tracker

from tools import generate_detections as gdet

from deep_sort.detection import Detection as ddet

from collections import deque

from keras import backend

from PIL import Image, ImageDraw, ImageFont#显示汉字用

backend.clear_session()

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--input",help="path to input video", default = "./test_video/test.avi")

ap.add_argument("-c", "--class",help="name of class", default = "car")

args = vars(ap.parse_args())

pts = [deque(maxlen=30) for _ in range(9999)]

warnings.filterwarnings('ignore')

# initialize a list of colors to represent each possible class label

np.random.seed(100)

COLORS = np.random.randint(0, 255, size=(200, 3),

dtype="uint8")

'''

#功能函数,只是用来往图片中显示汉字

#示例 img = cv2ImgAddText(cv2.imread('img1.jpg'), "大家好,我是片天边的云彩", 10, 65, (0, 0, 139), 20)

参数说明:

img:OpenCV图片格式的图片

text:要写入的汉字

left:字符坐标x值

top:字符坐标y值

textColor:字体颜色

:textSize:字体大小

'''

def cv2ImgAddText(img, text, left, top, textColor=(0, 255, 0), textSize=20):

if (isinstance(img, np.ndarray)): # 判断是否OpenCV图片类型

img = Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

# 创建一个可以在给定图像上绘图的对象

draw = ImageDraw.Draw(img)

# 字体的格式

fontStyle = ImageFont.truetype(

"font/simsun.ttc", textSize, encoding="utf-8")

# 绘制文本

draw.text((left, top), text, textColor, font=fontStyle)

# 转换回OpenCV格式

return cv2.cvtColor(np.asarray(img), cv2.COLOR_RGB2BGR)

#主处理函数

def main(yolo):

start = time.time()

#Definition of the parameters

max_cosine_distance = 0.5 #余弦距离的控制阈值

nn_budget = None

nms_max_overlap = 0.3 #非极大抑制的阈值

counter = []

#deep_sort

model_filename = 'model_data/market1501.pb'

encoder = gdet.create_box_encoder(model_filename,batch_size=1)

metric = nn_matching.NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)

tracker = Tracker(metric)

#写视频是否使能

writeVideo_flag = True

#writeVideo_flag = False

Frame_jiange = 3 #每2帧取一幅图片

NumFrame=0

#video_path = "./output/output.avi"

#video_capture = cv2.VideoCapture(args["input"])

video_capture = cv2.VideoCapture('./input/diyun_1.mp4')

# 其中0表示打开内置摄像头,1表示打开外接摄像头

if video_capture.isOpened(): # VideoCaputre对象是否成功打开

print('打开摄像头或者视频成功')

readVideo_flag = True

else:

print('打开摄像头或者视频失败')

readVideo_flag = False

if writeVideo_flag:

# Define the codec and create VideoWriter object

w = int(video_capture.get(3))# 返回视频的宽

h = int(video_capture.get(4))# 返回视频的高

w1 =1280 # 返回视频的宽

h1 =720 # 返回视频的高

#fourcc = cv2.VideoWriter_fourcc(*'MJPG') #avi格式

fourcc = cv2.VideoWriter_fourcc(*'mp4v')#MP4格式

#out = cv2.VideoWriter('./output/'+args["input"][43:57]+ "_" + args["class"] + '_output.avi', fourcc, 15, (w, h))

out = cv2.VideoWriter('./output/' + "diyun_1" + '_output.mp4', fourcc, 15, (w1, h1))

list_file = open('detection.txt', 'w')

frame_index = -1

fps = 0.0

#车辆记数一些参数

flag_fangxiang_threshold_0 =[] # 表示进来,且过了远处临界点(下面代称:第一个临界点)

flag_fangxiang_threshold_1 =[] # 表示出去,且过了近处临界点(下面代称:第二个临界点)

for i in range(1000):

flag_fangxiang_threshold_0.append(0)

flag_fangxiang_threshold_1.append(0)

input_counter = 0 # 进来车辆记数

output_counter = 0 # 出去车辆记数

flag_fangxiang = 2 # 临时调试用出去车辆用,为1代表进来,为2代表出去,这个值应该判断根据车的运动方向进行判断,和ID绑定在一起,且注意鲁棒性

detections=[]

boxs=[]

while readVideo_flag:

ret, frame00 = video_capture.read() # frame shape 640*480*3

if ret != True:

break

NumFrame=NumFrame+1

#print('绝对帧数NumFrame', NumFrame)

t1 = time.time()

count=0

i = int(0)

#缩放,缩放到一定比例

frame0 = cv2.resize(frame00, (1280,720), interpolation=cv2.INTER_AREA)

[height0, width0, tongdao0] = frame0.shape

frame = frame0[200:678, 267:920] # 裁剪坐标为[y0:y1, x0:x1 1280*720视频

# 按照视频高度比例

threshold_00 = (int)(height0 * 1 / 4)

threshold_10 = (int)(height0 * 1 / 2)

threshold_0 = (int)(threshold_00 * 720 / 1080)

threshold_1 = (int)(threshold_10 * 720 / 1080)

if NumFrame % Frame_jiange == 0: # 取余每间隔 Frame_jiange 帧处理一次

#print('不取该帧', NumFrame)

#continue

#frame = frame0[450:850, 50:450] # 裁剪坐标为[y0:y1, x0:x1 540*960视频

#frame = frame0[900:1700, 100:900] # 裁剪坐标为[y0:y1, x0:x1 1920*1080视频

#frame = frame0[200:678, 267:920] # 裁剪坐标为[y0:y1, x0:x1 1280*720视频

#cv2.rectangle(frame0, (267, 200), (867, 678), (0, 0, 255), 2)

[height,width,tongdao]=frame.shape

#print('宽度', width)

#print('高度', height)

# # print('通道数', tongdao)

#540*960视频

# threshold_0 = 100

# threshold_1 = 250

#1920*1080视频

# threshold_0 = 200

# threshold_1 = 500

#print('threshold_0', threshold_0)

#print('threshold_1', threshold_1)

#画临界线

#cv2.line(frame, (0, threshold_0), (width, threshold_0), (0, 0, 255), 2)

#cv2.line(frame, (0, threshold_1), (width, threshold_1), (0, 0, 255), 2)

#原图

#cv2.line(frame0, (0, threshold_0), (width0, threshold_0), (0, 0, 255), 2)

#cv2.line(frame0, (0, threshold_1), (width0, threshold_1), (0, 0, 255), 2)

# image = Image.fromarray(frame)

image = Image.fromarray(frame[...,::-1]) #bgr to rgb

boxs,class_names = yolo.detect_image(image)

features = encoder(frame,boxs)

# score to 1.0 here).

detections = [Detection(bbox, 1.0, feature) for bbox, feature in zip(boxs, features)]

# Run non-maxima suppression.

boxes = np.array([d.tlwh for d in detections])

scores = np.array([d.confidence for d in detections])

#NMS:非极大值抑制

indices = preprocessing.non_max_suppression(boxes, nms_max_overlap, scores)

detections = [detections[i] for i in indices]

# Call the tracker

#预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

#卡尔曼滤波可以根据Tracks状态预测下一帧的目标框状态

tracker.predict()

#更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

#卡尔曼滤波更新是对观测值(匹配上的Track)和估计值更新所有track的状态

tracker.update(detections)

#i = int(0)

indexIDs = []

c = []

boxes = []

center_zong = []#将所有检测出的框的中点放入center总中

#画yolo v3检测出的矩形框

for det in detections:

bbox = det.to_tlbr()

cv2.rectangle(frame,(int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(255,255,255), 2)

for track in tracker.tracks:

if not track.is_confirmed() or track.time_since_update > 1:

continue

#boxes.append([track[0], track[1], track[2], track[3]])

#print('track.track_id', track.track_id)

indexIDs.append(int(track.track_id))

#print('indexIDs', indexIDs)

counter.append(int(track.track_id))#这里将每一帧的id都放入,且不清除(因为初始化是在while外面),在下面用set函数进行去除相同元素

#print('counter', counter)

bbox = track.to_tlbr()

color = [int(c) for c in COLORS[indexIDs[i] % len(COLORS)]]

# 画deepsort 预测出的矩形框

cv2.rectangle(frame, (int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(color), 3)

cv2.putText(frame,str(track.track_id),(int(bbox[0]), int(bbox[1] -50)),0, 5e-3 * 150, (color),2)

if len(class_names) > 0:

class_name = class_names[0]

cv2.putText(frame, str(class_names[0]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (color),2)

i += 1

#bbox_center_point(x,y)

# 每个多目标的center[1]表示纵坐标

center = (int(((bbox[0])+(bbox[2]))/2),int(((bbox[1])+(bbox[3]))/2))

center_zong.append(center)

#print('center_zong', center_zong)

#track_id[center]

pts[track.track_id].append(center)

thickness = 5

#center point

cv2.circle(frame, (center), 1, color, thickness)

#draw motion path画运动轨迹

for j in range(1, len(pts[track.track_id])):

if pts[track.track_id][j - 1] is None or pts[track.track_id][j] is None:

continue

thickness = int(np.sqrt(64 / float(j + 1)) * 2)

cv2.line(frame,(pts[track.track_id][j-1]), (pts[track.track_id][j]),(color),thickness)

#cv2.putText(frame, str(class_names[j]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (255,255,255),2)

#新一个循环,用来进行多目标框的计数

for indexID in indexIDs:

#print('209 indexID',indexID)

weizhi=indexIDs.index(indexID)#该方法返回查找对象的索引位置,如果没有找到对象则抛出异常。

#print('211 weizhi', weizhi)

if flag_fangxiang==2: #如果这辆车方向是出去的

if center_zong[weizhi][1] < threshold_1 and center_zong[weizhi][1] > threshold_0: #当出去方向的车进入第二个临界线

flag_fangxiang_threshold_1[indexID] = 1 #标志位置1

flag_fangxiang_threshold_0[indexID] = 1 #标志位置1

print('出去车辆经过第二个临界点且未经过第一个临界点')

if flag_fangxiang_threshold_1[indexID] == 1: #当进来方向车越过第二个临界线

if center_zong[weizhi][1] < threshold_0: #判断是否小于第一个临界线

print('出去车辆经过第一个临界点')

output_counter = output_counter+1 #如果小于,出去车辆加1

flag_fangxiang_threshold_1[indexID] = 0

print('出去车辆个数',output_counter)

if flag_fangxiang_threshold_0[indexID] == 1: # 当进来方向车越过第一个临界线

if center_zong[weizhi][1] > threshold_1: #判断是否大于第二个临界线

print('进来车辆经过第二个临界点')

input_counter = input_counter+1 #如果大于,进来车辆加1

flag_fangxiang_threshold_0[indexID] = 0

print('进来车辆个数',input_counter)

count = len(set(counter)) #set() 函数创建一个无序不重复元素集,可进行关系测试,删除重复数据,还可以计算交集、差集、并集等。

#截取的小图显示 # 参数解释:图像,文字内容, 坐标 , 字体,大小,颜色,字体厚度

# cv2.putText(frame, "FPS: %f"%(fps), (int(20), int(30)), 0, 0.5, (0,255,0),1)

# cv2.putText(frame, "Current Object Counter: "+str(i), (int(20), int(50)), 0, 0.5, (0,255,0),1)

# cv2.putText(frame, "Total Object Counter: "+str(count),(int(20), int(70)),0, 0.5, (0,255,0),1)

# cv2.putText(frame, "input: "+str(input_counter), (int(20), int(90)),0, 0.5, (0,255,0),1)

# cv2.putText(frame, "output: " + str(output_counter), (int(20), int(110)),0, 0.5, (0,255,0), 1)

# cv2.putText(frame, "FPS: %f" % (fps), (int(20), int(30)), 0, 1.5, (0, 255, 0), 2)

# cv2.putText(frame, "Current Object Counter: " + str(i), (int(20), int(60)), 0, 1.5, (0, 255, 0), 2)

# cv2.putText(frame, "Total Object Counter: " + str(count), (int(20), int(90)), 0, 1.5, (0, 255, 0), 2)

# cv2.putText(frame, "input: " + str(input_counter), (int(20), int(120)), 0, 1.5, (0, 255, 0), 2)

# cv2.putText(frame, "output: " + str(output_counter), (int(20), int(150)), 0, 1.5, (0, 255, 0), 2)

#画临界线

cv2.line(frame, (0, threshold_0), (width0, threshold_0), (0, 255, 255), 2)

cv2.line(frame, (0, threshold_1), (width0, threshold_1), (0, 255, 255), 2)

#标题

frame0 = cv2ImgAddText(frame0, "车流统计系统", 900, 30, (255, 255, 255), 50)

frame0 = cv2ImgAddText(frame0, "地点:杭州博奥路某大桥", 20, 670, (0, 255, 255), 20)

frame0 = cv2ImgAddText(frame0, "——by 狄云", 1150, 670, (0, 255, 255), 20)

#送入yolo v3的框

cv2.rectangle(frame0, (267, 200), (920, 678), (255, 255, 255), 2)

cv2.putText(frame0, "FPS: %f" % (fps), (int(20), int(40)), 0, 1.2, (0, 255, 0), 2)

cv2.putText(frame0, "Current Object Counter: " + str(i), (int(20), int(70)), 0, 0.8, (0, 255, 0), 2)

cv2.putText(frame0, "Total Object Counter: " + str(count), (int(20), int(100)), 0, 0.8, (0, 255, 0), 2)

cv2.putText(frame0, "input: " + str(input_counter), (int(20), int(150)), 0, 1.2, (0, 255, 0), 2)

cv2.putText(frame0, "output: " + str(output_counter), (int(20), int(200)), 0, 1.2, (0, 255, 0), 2)

cv2.namedWindow("YOLO3_Deep_SORT", 0);

# cv2.resizeWindow('YOLO3_Deep_SORT', 1024, 768);

cv2.imshow('YOLO3_Deep_SORT', frame0)

if writeVideo_flag:

#save a frame

out.write(frame0)

frame_index = frame_index + 1

list_file.write(str(frame_index)+' ')

if len(boxs) != 0:

for i in range(0,len(boxs)):

list_file.write(str(boxs[i][0]) + ' '+str(boxs[i][1]) + ' '+str(boxs[i][2]) + ' '+str(boxs[i][3]) + ' ')

list_file.write('\n')

fps = ( fps + (1./(time.time()-t1)) ) / 2

#fps = 30

#print(set(counter))

# Press Q to stop!

if cv2.waitKey(1) & 0xFF == ord('q'):

break

print(" ")

print("[Finish]")

end = time.time()

if len(pts[track.track_id]) != None:

print(args["input"][43:57]+": "+ str(count) + " " + str(class_name) +' Found')

else:

print("[No Found]")

video_capture.release()

if writeVideo_flag:

out.release()

list_file.close()

cv2.destroyAllWindows()

if __name__ == '__main__':

main(YOLO())

2020.6.27.1:32

可视化显示为全图(全部处理为1280*720),送入yolo v3为截取有用的部分矩形图,白色框,并加入一些汉字显示。

#! /usr/bin/env python

# -*- coding: utf-8 -*-

'''

卡尔曼滤波算法分为两个过程,预测和更新。该算法将目标的运动状态定义为8个正态分布的向量。

预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

匈牙利算法:解决的是一个分配问题,在MOT主要步骤中的计算相似度的,得到了前后两帧的相似度矩阵。匈牙利算法就是通过求解这个相似度矩阵,从而解决前后两帧真正匹配的目标。这部分sklearn库有对应的函数linear_assignment来进行求解。

SORT算法中是通过前后两帧IOU来构建相似度矩阵

'''

from __future__ import division, print_function, absolute_import

import os

import datetime

from timeit import time

import warnings

import cv2

import numpy as np

import argparse

from PIL import Image

from yolo import YOLO

from deep_sort import preprocessing

from deep_sort import nn_matching

from deep_sort.detection import Detection

from deep_sort.tracker import Tracker

from tools import generate_detections as gdet

from deep_sort.detection import Detection as ddet

from collections import deque

from keras import backend

from PIL import Image, ImageDraw, ImageFont#显示汉字用

backend.clear_session()

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--input",help="path to input video", default = "./test_video/test.avi")

ap.add_argument("-c", "--class",help="name of class", default = "car")

args = vars(ap.parse_args())

pts = [deque(maxlen=30) for _ in range(9999)]

warnings.filterwarnings('ignore')

# initialize a list of colors to represent each possible class label

np.random.seed(100)

COLORS = np.random.randint(0, 255, size=(200, 3),

dtype="uint8")

'''

#功能函数,只是用来往图片中显示汉字

#示例 img = cv2ImgAddText(cv2.imread('img1.jpg'), "大家好,我是片天边的云彩", 10, 65, (0, 0, 139), 20)

参数说明:

img:OpenCV图片格式的图片

text:要写入的汉字

left:字符坐标x值

top:字符坐标y值

textColor:字体颜色

:textSize:字体大小

'''

def cv2ImgAddText(img, text, left, top, textColor=(0, 255, 0), textSize=20):

if (isinstance(img, np.ndarray)): # 判断是否OpenCV图片类型

img = Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

# 创建一个可以在给定图像上绘图的对象

draw = ImageDraw.Draw(img)

# 字体的格式

fontStyle = ImageFont.truetype(

"font/simsun.ttc", textSize, encoding="utf-8")

# 绘制文本

draw.text((left, top), text, textColor, font=fontStyle)

# 转换回OpenCV格式

return cv2.cvtColor(np.asarray(img), cv2.COLOR_RGB2BGR)

#主处理函数

def main(yolo):

start = time.time()

#Definition of the parameters

max_cosine_distance = 0.5 #余弦距离的控制阈值

nn_budget = None

nms_max_overlap = 0.3 #非极大抑制的阈值

counter = []

#deep_sort

model_filename = 'model_data/market1501.pb'

encoder = gdet.create_box_encoder(model_filename,batch_size=1)

metric = nn_matching.NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)

tracker = Tracker(metric)

#写视频是否使能

#writeVideo_flag = True

writeVideo_flag = False

#video_path = "./output/output.avi"

#video_capture = cv2.VideoCapture(args["input"])

video_capture = cv2.VideoCapture('./input/diyun_1.mp4')

# 其中0表示打开内置摄像头,1表示打开外接摄像头

if video_capture.isOpened(): # VideoCaputre对象是否成功打开

print('打开摄像头或者视频成功')

readVideo_flag = True

else:

print('打开摄像头或者视频失败')

readVideo_flag = False

if writeVideo_flag:

# Define the codec and create VideoWriter object

w = int(video_capture.get(3))# 返回视频的宽

h = int(video_capture.get(4))# 返回视频的高

w1 =400 # 返回视频的宽

h1 =400 # 返回视频的高

#fourcc = cv2.VideoWriter_fourcc(*'MJPG') #avi格式

fourcc = cv2.VideoWriter_fourcc(*'mp4v')#MP4格式

#out = cv2.VideoWriter('./output/'+args["input"][43:57]+ "_" + args["class"] + '_output.avi', fourcc, 15, (w, h))

out = cv2.VideoWriter('./output/' + "diyun_1" + '_output.mp4', fourcc, 15, (w1, h1))

list_file = open('detection.txt', 'w')

frame_index = -1

fps = 0.0

#车辆记数一些参数

flag_fangxiang_threshold_0 =[] # 表示进来,且过了远处临界点(下面代称:第一个临界点)

flag_fangxiang_threshold_1 =[] # 表示出去,且过了近处临界点(下面代称:第二个临界点)

for i in range(1000):

flag_fangxiang_threshold_0.append(0)

flag_fangxiang_threshold_1.append(0)

input_counter = 0 # 进来车辆记数

output_counter = 0 # 出去车辆记数

flag_fangxiang = 2 # 临时调试用出去车辆用,为1代表进来,为2代表出去,这个值应该判断根据车的运动方向进行判断,和ID绑定在一起,且注意鲁棒性

while readVideo_flag:

ret, frame00 = video_capture.read() # frame shape 640*480*3

if ret != True:

break

#缩放,缩放到一定比例

frame0 = cv2.resize(frame00, (1280,720), interpolation=cv2.INTER_AREA)

[height0, width0, tongdao0] = frame0.shape

#frame = frame0[450:850, 50:450] # 裁剪坐标为[y0:y1, x0:x1 540*960视频

#frame = frame0[900:1700, 100:900] # 裁剪坐标为[y0:y1, x0:x1 1920*1080视频

frame = frame0[200:678, 267:920] # 裁剪坐标为[y0:y1, x0:x1 1280*720视频

#cv2.rectangle(frame0, (267, 200), (867, 678), (0, 0, 255), 2)

[height,width,tongdao]=frame.shape

#print('宽度', width)

#print('高度', height)

# # print('通道数', tongdao)

t1 = time.time()

#540*960视频

# threshold_0 = 100

# threshold_1 = 250

#1920*1080视频

# threshold_0 = 200

# threshold_1 = 500

#按照视频高度比例

threshold_00 = (int) (height0*1/3)

threshold_10 = (int) (height0*2/5)

threshold_0 = (int) (threshold_00*720/1080)

threshold_1 = (int) (threshold_10*720/1080)

#print('threshold_0', threshold_0)

#print('threshold_1', threshold_1)

#画临界线

#cv2.line(frame, (0, threshold_0), (width, threshold_0), (0, 0, 255), 2)

#cv2.line(frame, (0, threshold_1), (width, threshold_1), (0, 0, 255), 2)

#原图

#cv2.line(frame0, (0, threshold_0), (width0, threshold_0), (0, 0, 255), 2)

#cv2.line(frame0, (0, threshold_1), (width0, threshold_1), (0, 0, 255), 2)

# image = Image.fromarray(frame)

image = Image.fromarray(frame[...,::-1]) #bgr to rgb

boxs,class_names = yolo.detect_image(image)

features = encoder(frame,boxs)

# score to 1.0 here).

detections = [Detection(bbox, 1.0, feature) for bbox, feature in zip(boxs, features)]

# Run non-maxima suppression.

boxes = np.array([d.tlwh for d in detections])

scores = np.array([d.confidence for d in detections])

#NMS:非极大值抑制

indices = preprocessing.non_max_suppression(boxes, nms_max_overlap, scores)

detections = [detections[i] for i in indices]

# Call the tracker

#预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

#卡尔曼滤波可以根据Tracks状态预测下一帧的目标框状态

tracker.predict()

#更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

#卡尔曼滤波更新是对观测值(匹配上的Track)和估计值更新所有track的状态

tracker.update(detections)

i = int(0)

indexIDs = []

c = []

boxes = []

center_zong = []#将所有检测出的框的中点放入center总中

#画yolo v3检测出的矩形框

for det in detections:

bbox = det.to_tlbr()

cv2.rectangle(frame,(int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(255,255,255), 2)

for track in tracker.tracks:

if not track.is_confirmed() or track.time_since_update > 1:

continue

#boxes.append([track[0], track[1], track[2], track[3]])

#print('track.track_id', track.track_id)

indexIDs.append(int(track.track_id))

#print('indexIDs', indexIDs)

counter.append(int(track.track_id))#这里将每一帧的id都放入,且不清除(因为初始化是在while外面),在下面用set函数进行去除相同元素

#print('counter', counter)

bbox = track.to_tlbr()

color = [int(c) for c in COLORS[indexIDs[i] % len(COLORS)]]

# 画deepsort 预测出的矩形框

cv2.rectangle(frame, (int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(color), 3)

cv2.putText(frame,str(track.track_id),(int(bbox[0]), int(bbox[1] -50)),0, 5e-3 * 150, (color),2)

if len(class_names) > 0:

class_name = class_names[0]

cv2.putText(frame, str(class_names[0]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (color),2)

i += 1

#bbox_center_point(x,y)

# 每个多目标的center[1]表示纵坐标

center = (int(((bbox[0])+(bbox[2]))/2),int(((bbox[1])+(bbox[3]))/2))

center_zong.append(center)

#print('center_zong', center_zong)

#track_id[center]

pts[track.track_id].append(center)

thickness = 5

#center point

cv2.circle(frame, (center), 1, color, thickness)

#draw motion path画运动轨迹

for j in range(1, len(pts[track.track_id])):

if pts[track.track_id][j - 1] is None or pts[track.track_id][j] is None:

continue

thickness = int(np.sqrt(64 / float(j + 1)) * 2)

cv2.line(frame,(pts[track.track_id][j-1]), (pts[track.track_id][j]),(color),thickness)

#cv2.putText(frame, str(class_names[j]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (255,255,255),2)

#新一个循环,用来进行多目标框的计数

for indexID in indexIDs:

#print('209 indexID',indexID)

weizhi=indexIDs.index(indexID)#该方法返回查找对象的索引位置,如果没有找到对象则抛出异常。

#print('211 weizhi', weizhi)

if flag_fangxiang==2: #如果这辆车方向是出去的

if center_zong[weizhi][1] < threshold_1 and center_zong[weizhi][1] > threshold_0: #当出去方向的车进入第二个临界线

flag_fangxiang_threshold_1[indexID] = 1 #标志位置1

flag_fangxiang_threshold_0[indexID] = 1 #标志位置1

print('出去车辆经过第二个临界点且未经过第一个临界点')

if flag_fangxiang_threshold_1[indexID] == 1: #当进来方向车越过第二个临界线

if center_zong[weizhi][1] < threshold_0: #判断是否小于第一个临界线

print('出去车辆经过第一个临界点')

output_counter = output_counter+1 #如果小于,出去车辆加1

flag_fangxiang_threshold_1[indexID] = 0

print('出去车辆个数',output_counter)

if flag_fangxiang_threshold_0[indexID] == 1: # 当进来方向车越过第一个临界线

if center_zong[weizhi][1] > threshold_1: #判断是否大于第二个临界线

print('进来车辆经过第二个临界点')

input_counter = input_counter+1 #如果大于,进来车辆加1

flag_fangxiang_threshold_0[indexID] = 0

print('进来车辆个数',input_counter)

count = len(set(counter)) #set() 函数创建一个无序不重复元素集,可进行关系测试,删除重复数据,还可以计算交集、差集、并集等。

#截取的小图显示 # 参数解释:图像,文字内容, 坐标 , 字体,大小,颜色,字体厚度

# cv2.putText(frame, "FPS: %f"%(fps), (int(20), int(30)), 0, 0.5, (0,255,0),1)

# cv2.putText(frame, "Current Object Counter: "+str(i), (int(20), int(50)), 0, 0.5, (0,255,0),1)

# cv2.putText(frame, "Total Object Counter: "+str(count),(int(20), int(70)),0, 0.5, (0,255,0),1)

# cv2.putText(frame, "input: "+str(input_counter), (int(20), int(90)),0, 0.5, (0,255,0),1)

# cv2.putText(frame, "output: " + str(output_counter), (int(20), int(110)),0, 0.5, (0,255,0), 1)

# cv2.putText(frame, "FPS: %f" % (fps), (int(20), int(30)), 0, 1.5, (0, 255, 0), 2)

# cv2.putText(frame, "Current Object Counter: " + str(i), (int(20), int(60)), 0, 1.5, (0, 255, 0), 2)

# cv2.putText(frame, "Total Object Counter: " + str(count), (int(20), int(90)), 0, 1.5, (0, 255, 0), 2)

# cv2.putText(frame, "input: " + str(input_counter), (int(20), int(120)), 0, 1.5, (0, 255, 0), 2)

# cv2.putText(frame, "output: " + str(output_counter), (int(20), int(150)), 0, 1.5, (0, 255, 0), 2)

#画临界线

cv2.line(frame, (0, threshold_0), (width0, threshold_0), (0, 255, 255), 2)

cv2.line(frame, (0, threshold_1), (width0, threshold_1), (0, 255, 255), 2)

#标题

frame0 = cv2ImgAddText(frame0, "车流统计系统", 900, 30, (255, 255, 255), 50)

frame0 = cv2ImgAddText(frame0, "地点:杭州博奥路某大桥", 20, 670, (0, 255, 255), 20)

frame0 = cv2ImgAddText(frame0, "——by 狄云", 1150, 670, (0, 255, 255), 20)

#送入yolo v3的框

cv2.rectangle(frame0, (267, 200), (920, 678), (255, 255, 255), 2)

cv2.putText(frame0, "FPS: %f" % (fps), (int(20), int(40)), 0, 1.2, (0, 255, 0), 2)

cv2.putText(frame0, "Current Object Counter: " + str(i), (int(20), int(70)), 0, 0.8, (0, 255, 0), 2)

cv2.putText(frame0, "Total Object Counter: " + str(count), (int(20), int(100)), 0, 0.8, (0, 255, 0), 2)

cv2.putText(frame0, "input: " + str(input_counter), (int(20), int(150)), 0, 1.2, (0, 255, 0), 2)

cv2.putText(frame0, "output: " + str(output_counter), (int(20), int(200)), 0, 1.2, (0, 255, 0), 2)

cv2.namedWindow("YOLO3_Deep_SORT", 0);

# cv2.resizeWindow('YOLO3_Deep_SORT', 1024, 768);

cv2.imshow('YOLO3_Deep_SORT', frame0)

if writeVideo_flag:

#save a frame

out.write(frame)

frame_index = frame_index + 1

list_file.write(str(frame_index)+' ')

if len(boxs) != 0:

for i in range(0,len(boxs)):

list_file.write(str(boxs[i][0]) + ' '+str(boxs[i][1]) + ' '+str(boxs[i][2]) + ' '+str(boxs[i][3]) + ' ')

list_file.write('\n')

fps = ( fps + (1./(time.time()-t1)) ) / 2

#fps = 30

#print(set(counter))

# Press Q to stop!

if cv2.waitKey(1) & 0xFF == ord('q'):

break

print(" ")

print("[Finish]")

end = time.time()

if len(pts[track.track_id]) != None:

print(args["input"][43:57]+": "+ str(count) + " " + str(class_name) +' Found')

else:

print("[No Found]")

video_capture.release()

if writeVideo_flag:

out.release()

list_file.close()

cv2.destroyAllWindows()

if __name__ == '__main__':

main(YOLO())

2020.6.26.17:50

进出的车辆都能计数

#! /usr/bin/env python

# -*- coding: utf-8 -*-

'''

卡尔曼滤波算法分为两个过程,预测和更新。该算法将目标的运动状态定义为8个正态分布的向量。

预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

匈牙利算法:解决的是一个分配问题,在MOT主要步骤中的计算相似度的,得到了前后两帧的相似度矩阵。匈牙利算法就是通过求解这个相似度矩阵,从而解决前后两帧真正匹配的目标。这部分sklearn库有对应的函数linear_assignment来进行求解。

SORT算法中是通过前后两帧IOU来构建相似度矩阵

'''

from __future__ import division, print_function, absolute_import

import os

import datetime

from timeit import time

import warnings

import cv2

import numpy as np

import argparse

from PIL import Image

from yolo import YOLO

from deep_sort import preprocessing

from deep_sort import nn_matching

from deep_sort.detection import Detection

from deep_sort.tracker import Tracker

from tools import generate_detections as gdet

from deep_sort.detection import Detection as ddet

from collections import deque

from keras import backend

backend.clear_session()

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--input",help="path to input video", default = "./test_video/test.avi")

ap.add_argument("-c", "--class",help="name of class", default = "car")

args = vars(ap.parse_args())

pts = [deque(maxlen=30) for _ in range(9999)]

warnings.filterwarnings('ignore')

# initialize a list of colors to represent each possible class label

np.random.seed(100)

COLORS = np.random.randint(0, 255, size=(200, 3),

dtype="uint8")

def main(yolo):

start = time.time()

#Definition of the parameters

max_cosine_distance = 0.5 #余弦距离的控制阈值

nn_budget = None

nms_max_overlap = 0.3 #非极大抑制的阈值

counter = []

#deep_sort

model_filename = 'model_data/market1501.pb'

encoder = gdet.create_box_encoder(model_filename,batch_size=1)

metric = nn_matching.NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)

tracker = Tracker(metric)

#video_path = "./output/output.avi"

#video_capture = cv2.VideoCapture(args["input"])

video_capture = cv2.VideoCapture('./input/14.mp4')

# 其中0表示打开内置摄像头,1表示打开外接摄像头

if video_capture.isOpened(): # VideoCaputre对象是否成功打开

print('打开摄像头或者视频成功')

writeVideo_flag = True

else:

print('打开摄像头或者视频失败')

writeVideo_flag = False

if writeVideo_flag:

# Define the codec and create VideoWriter object

w = int(video_capture.get(3))# 返回视频的宽

h = int(video_capture.get(4))# 返回视频的高

fourcc = cv2.VideoWriter_fourcc(*'MJPG')

#out = cv2.VideoWriter('./output/'+args["input"][43:57]+ "_" + args["class"] + '_output.avi', fourcc, 15, (w, h))

#out = cv2.VideoWriter('./output/' + "14" + '_output.avi', fourcc, 15, (w, h))

list_file = open('detection.txt', 'w')

frame_index = -1

fps = 0.0

#车辆记数一些参数

flag_fangxiang_threshold_0 =[] # 表示进来,且过了远处临界点(下面代称:第一个临界点)

flag_fangxiang_threshold_1 =[] # 表示出去,且过了近处临界点(下面代称:第二个临界点)

for i in range(1000):

flag_fangxiang_threshold_0.append(0)

flag_fangxiang_threshold_1.append(0)

input_counter = 0 # 进来车辆记数

output_counter = 0 # 出去车辆记数

flag_fangxiang = 2 # 临时调试用出去车辆用,为1代表进来,为2代表出去,这个值应该判断根据车的运动方向进行判断,和ID绑定在一起,且注意鲁棒性

while writeVideo_flag:

ret, frame0 = video_capture.read() # frame shape 640*480*3

if ret != True:

break

# frame = frame0.crop((90, 450, 450, 850)) # (left, upper, right, lower)

frame = frame0[450:850, 50:450] # 裁剪坐标为[y0:y1, x0:x1 540*960视频

#frame = frame0[900:1700, 100:900] # 裁剪坐标为[y0:y1, x0:x1 1920*1080视频

[width,height,tongdao]=frame.shape

# print('宽度', width)

# # print('高度', height)

# # print('通道数', tongdao)

t1 = time.time()

#540*960视频

threshold_0 = 100

threshold_1 = 250

#1920*1080视频

# threshold_0 = 200

# threshold_1 = 500

#画临界线

cv2.line(frame, (1, threshold_0), (399, threshold_0), (0, 0, 255), 1)

cv2.line(frame, (1, threshold_1), (399, threshold_1), (0, 0, 255), 1)

# image = Image.fromarray(frame)

image = Image.fromarray(frame[...,::-1]) #bgr to rgb

boxs,class_names = yolo.detect_image(image)

features = encoder(frame,boxs)

# score to 1.0 here).

detections = [Detection(bbox, 1.0, feature) for bbox, feature in zip(boxs, features)]

# Run non-maxima suppression.

boxes = np.array([d.tlwh for d in detections])

scores = np.array([d.confidence for d in detections])

#NMS:非极大值抑制

indices = preprocessing.non_max_suppression(boxes, nms_max_overlap, scores)

detections = [detections[i] for i in indices]

# Call the tracker

#预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

#卡尔曼滤波可以根据Tracks状态预测下一帧的目标框状态

tracker.predict()

#更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

#卡尔曼滤波更新是对观测值(匹配上的Track)和估计值更新所有track的状态

tracker.update(detections)

i = int(0)

indexIDs = []

c = []

boxes = []

center_zong = []#将所有检测出的框的中点放入center总中

#画yolo v3检测出的矩形框

for det in detections:

bbox = det.to_tlbr()

cv2.rectangle(frame,(int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(255,255,255), 2)

for track in tracker.tracks:

if not track.is_confirmed() or track.time_since_update > 1:

continue

#boxes.append([track[0], track[1], track[2], track[3]])

print('track.track_id', track.track_id)

indexIDs.append(int(track.track_id))

print('indexIDs', indexIDs)

counter.append(int(track.track_id))#这里将每一帧的id都放入,且不清除(因为初始化是在while外面),在下面用set函数进行去除相同元素

#print('counter', counter)

bbox = track.to_tlbr()

color = [int(c) for c in COLORS[indexIDs[i] % len(COLORS)]]

# 画deepsort 预测出的矩形框

cv2.rectangle(frame, (int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(color), 3)

cv2.putText(frame,str(track.track_id),(int(bbox[0]), int(bbox[1] -50)),0, 5e-3 * 150, (color),2)

if len(class_names) > 0:

class_name = class_names[0]

cv2.putText(frame, str(class_names[0]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (color),2)

i += 1

#bbox_center_point(x,y)

# 每个多目标的center[1]表示纵坐标

center = (int(((bbox[0])+(bbox[2]))/2),int(((bbox[1])+(bbox[3]))/2))

center_zong.append(center)

print('center_zong', center_zong)

#track_id[center]

pts[track.track_id].append(center)

thickness = 5

#center point

cv2.circle(frame, (center), 1, color, thickness)

#draw motion path画运动轨迹

for j in range(1, len(pts[track.track_id])):

if pts[track.track_id][j - 1] is None or pts[track.track_id][j] is None:

continue

thickness = int(np.sqrt(64 / float(j + 1)) * 2)

cv2.line(frame,(pts[track.track_id][j-1]), (pts[track.track_id][j]),(color),thickness)

#cv2.putText(frame, str(class_names[j]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (255,255,255),2)

#新一个循环,用来进行多目标框的计数

for indexID in indexIDs:

print('209 indexID',indexID)

weizhi=indexIDs.index(indexID)#该方法返回查找对象的索引位置,如果没有找到对象则抛出异常。

print('211 weizhi', weizhi)

if flag_fangxiang==2: #如果这辆车方向是出去的

if center_zong[weizhi][1] < threshold_1 and center_zong[weizhi][1] > threshold_0: #当出去方向的车进入第二个临界线

flag_fangxiang_threshold_1[indexID] = 1 #标志位置1

flag_fangxiang_threshold_0[indexID] = 1 #标志位置1

print('出去车辆经过第二个临界点且未经过第一个临界点')

if flag_fangxiang_threshold_1[indexID] == 1: #当进来方向车越过第二个临界线

if center_zong[weizhi][1] < threshold_0: #判断是否小于第一个临界线

print('出去车辆经过第一个临界点')

output_counter = output_counter+1 #如果小于,出去车辆加1

flag_fangxiang_threshold_1[indexID] = 0

print('出去车辆个数',output_counter)

if flag_fangxiang_threshold_0[indexID] == 1: # 当进来方向车越过第一个临界线

if center_zong[weizhi][1] > threshold_1: #判断是否大于第二个临界线

print('进来车辆经过第二个临界点')

input_counter = input_counter+1 #如果大于,进来车辆加1

flag_fangxiang_threshold_0[indexID] = 0

print('进来车辆个数',input_counter)

count = len(set(counter)) #set() 函数创建一个无序不重复元素集,可进行关系测试,删除重复数据,还可以计算交集、差集、并集等。

#截取的小图显示 # 参数解释:图像,文字内容, 坐标 , 字体,大小,颜色,字体厚度

cv2.putText(frame, "FPS: %f"%(fps), (int(20), int(30)), 0, 0.5, (0,255,0),1)

cv2.putText(frame, "Current Object Counter: "+str(i), (int(20), int(50)), 0, 0.5, (0,255,0),1)

cv2.putText(frame, "Total Object Counter: "+str(count),(int(20), int(70)),0, 0.5, (0,255,0),1)

cv2.putText(frame, "input: "+str(input_counter), (int(20), int(90)),0, 0.5, (0,255,0),1)

cv2.putText(frame, "output: " + str(output_counter), (int(20), int(110)),0, 0.5, (0,255,0), 1)

cv2.namedWindow("YOLO3_Deep_SORT", 0);

# cv2.resizeWindow('YOLO3_Deep_SORT', 1024, 768);

cv2.imshow('YOLO3_Deep_SORT', frame)

# if writeVideo_flag:

# #save a frame

# #out.write(frame)

# frame_index = frame_index + 1

# list_file.write(str(frame_index)+' ')

# if len(boxs) != 0:

# for i in range(0,len(boxs)):

# list_file.write(str(boxs[i][0]) + ' '+str(boxs[i][1]) + ' '+str(boxs[i][2]) + ' '+str(boxs[i][3]) + ' ')

# list_file.write('\n')

fps = ( fps + (1./(time.time()-t1)) ) / 2

#print(set(counter))

# Press Q to stop!

if cv2.waitKey(1) & 0xFF == ord('q'):

break

print(" ")

print("[Finish]")

end = time.time()

if len(pts[track.track_id]) != None:

print(args["input"][43:57]+": "+ str(count) + " " + str(class_name) +' Found')

else:

print("[No Found]")

#video_capture.release()

if writeVideo_flag:

#out.release()

list_file.close()

#cv2.destroyAllWindows()

if __name__ == '__main__':

main(YOLO())

2020.6.25.17:02

已经实现多目标下,出去车辆计数,但是进来车辆并不能计数(还未写)。

#! /usr/bin/env python

# -*- coding: utf-8 -*-

'''

卡尔曼滤波算法分为两个过程,预测和更新。该算法将目标的运动状态定义为8个正态分布的向量。

预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

匈牙利算法:解决的是一个分配问题,在MOT主要步骤中的计算相似度的,得到了前后两帧的相似度矩阵。匈牙利算法就是通过求解这个相似度矩阵,从而解决前后两帧真正匹配的目标。这部分sklearn库有对应的函数linear_assignment来进行求解。

SORT算法中是通过前后两帧IOU来构建相似度矩阵

'''

from __future__ import division, print_function, absolute_import

import os

import datetime

from timeit import time

import warnings

import cv2

import numpy as np

import argparse

from PIL import Image

from yolo import YOLO

from deep_sort import preprocessing

from deep_sort import nn_matching

from deep_sort.detection import Detection

from deep_sort.tracker import Tracker

from tools import generate_detections as gdet

from deep_sort.detection import Detection as ddet

from collections import deque

from keras import backend

backend.clear_session()

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--input",help="path to input video", default = "./test_video/test.avi")

ap.add_argument("-c", "--class",help="name of class", default = "car")

args = vars(ap.parse_args())

pts = [deque(maxlen=30) for _ in range(9999)]

warnings.filterwarnings('ignore')

# initialize a list of colors to represent each possible class label

np.random.seed(100)

COLORS = np.random.randint(0, 255, size=(200, 3),

dtype="uint8")

def main(yolo):

start = time.time()

#Definition of the parameters

max_cosine_distance = 0.5 #余弦距离的控制阈值

nn_budget = None

nms_max_overlap = 0.3 #非极大抑制的阈值

counter = []

#deep_sort

model_filename = 'model_data/market1501.pb'

encoder = gdet.create_box_encoder(model_filename,batch_size=1)

metric = nn_matching.NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)

tracker = Tracker(metric)

#video_path = "./output/output.avi"

#video_capture = cv2.VideoCapture(args["input"])

video_capture = cv2.VideoCapture('./input/14_1.mp4')

# 其中0表示打开内置摄像头,1表示打开外接摄像头

if video_capture.isOpened(): # VideoCaputre对象是否成功打开

print('打开摄像头或者视频成功')

writeVideo_flag = True

else:

print('打开摄像头或者视频失败')

writeVideo_flag = False

if writeVideo_flag:

# Define the codec and create VideoWriter object

w = int(video_capture.get(3))# 返回视频的宽

h = int(video_capture.get(4))# 返回视频的高

fourcc = cv2.VideoWriter_fourcc(*'MJPG')

#out = cv2.VideoWriter('./output/'+args["input"][43:57]+ "_" + args["class"] + '_output.avi', fourcc, 15, (w, h))

#out = cv2.VideoWriter('./output/' + "14" + '_output.avi', fourcc, 15, (w, h))

list_file = open('detection.txt', 'w')

frame_index = -1

fps = 0.0

#车辆记数一些参数

flag_fangxiang_threshold_0 =[] # 表示进来,且过了远处临界点(下面代称:第一个临界点)

flag_fangxiang_threshold_1 =[] # 表示出去,且过了近处临界点(下面代称:第二个临界点)

for i in range(1000):

flag_fangxiang_threshold_0.append(0)

flag_fangxiang_threshold_1.append(0)

input_counter = 0 # 进来车辆记数

output_counter = 0 # 出去车辆记数

flag_fangxiang = 2 # 临时调试用出去车辆用,为1代表进来,为2代表出去,这个值应该判断根据车的运动方向进行判断,和ID绑定在一起,且注意鲁棒性

while writeVideo_flag:

ret, frame0 = video_capture.read() # frame shape 640*480*3

if ret != True:

break

# frame = frame0.crop((90, 450, 450, 850)) # (left, upper, right, lower)

frame = frame0[450:850, 50:450] # 裁剪坐标为[y0:y1, x0:x1

[width,height,tongdao]=frame.shape

# print('宽度', width)

# # print('高度', height)

# # print('通道数', tongdao)

t1 = time.time()

threshold_0 = 100

threshold_1 = 250

#画临界线

cv2.line(frame, (1, threshold_0), (399, threshold_0), (0, 0, 255), 1)

cv2.line(frame, (1, threshold_1), (399, threshold_1), (0, 0, 255), 1)

# image = Image.fromarray(frame)

image = Image.fromarray(frame[...,::-1]) #bgr to rgb

boxs,class_names = yolo.detect_image(image)

features = encoder(frame,boxs)

# score to 1.0 here).

detections = [Detection(bbox, 1.0, feature) for bbox, feature in zip(boxs, features)]

# Run non-maxima suppression.

boxes = np.array([d.tlwh for d in detections])

scores = np.array([d.confidence for d in detections])

#NMS:非极大值抑制

indices = preprocessing.non_max_suppression(boxes, nms_max_overlap, scores)

detections = [detections[i] for i in indices]

# Call the tracker

#预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

#卡尔曼滤波可以根据Tracks状态预测下一帧的目标框状态

tracker.predict()

#更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

#卡尔曼滤波更新是对观测值(匹配上的Track)和估计值更新所有track的状态

tracker.update(detections)

i = int(0)

indexIDs = []

c = []

boxes = []

center_zong = []#将所有检测出的框的中点放入center总中

#画yolo v3检测出的矩形框

for det in detections:

bbox = det.to_tlbr()

cv2.rectangle(frame,(int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(255,255,255), 2)

for track in tracker.tracks:

if not track.is_confirmed() or track.time_since_update > 1:

continue

#boxes.append([track[0], track[1], track[2], track[3]])

print('track.track_id', track.track_id)

indexIDs.append(int(track.track_id))

print('indexIDs', indexIDs)

counter.append(int(track.track_id))#这里将每一帧的id都放入,且不清除(因为初始化是在while外面),在下面用set函数进行去除相同元素

#print('counter', counter)

bbox = track.to_tlbr()

color = [int(c) for c in COLORS[indexIDs[i] % len(COLORS)]]

# 画deepsort 预测出的矩形框

cv2.rectangle(frame, (int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(color), 3)

cv2.putText(frame,str(track.track_id),(int(bbox[0]), int(bbox[1] -50)),0, 5e-3 * 150, (color),2)

if len(class_names) > 0:

class_name = class_names[0]

cv2.putText(frame, str(class_names[0]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (color),2)

i += 1

#bbox_center_point(x,y)

# 每个多目标的center[1]表示纵坐标

center = (int(((bbox[0])+(bbox[2]))/2),int(((bbox[1])+(bbox[3]))/2))

center_zong.append(center)

print('center_zong', center_zong)

# if flag_fangxiang==1: #如果这辆车方向是进来的

# if center[1] > threshold_0: #当进来方向的车进入第一个临界线

# flag_fangxiang_threshold_0=1 #标志位置1

# if flag_fangxiang_threshold_0 == 1: #当进来方向车越过第一个临界线

# if center[1] > threshold_1: #判断是否大于第二个临界线

# input_counter = input_counter+1 #如果大于,进来车辆加1

# flag_fangxiang_threshold_0=0

# print('进来车辆个数:',input_counter)

# if flag_fangxiang==2: #如果这辆车方向是出去的

# if center[1] < threshold_1 and center[1] > threshold_0: #当出去方向的车进入第二个临界线

# flag_fangxiang_threshold_1[track.track_id]=1 #标志位置1

# print('出去车辆经过第二个临界点且未经过第一个临界点')

# if flag_fangxiang_threshold_1[track.track_id] == 1: #当进来方向车越过第一个临界线

# if center[1] < threshold_0: #判断是否大于第二个临界线

# print('出去车辆经过第一个临界点')

# output_counter = output_counter+1 #如果大于,进来车辆加1

# flag_fangxiang_threshold_1[track.track_id]=0

# print('出去车辆个数',output_counter)

#track_id[center]

pts[track.track_id].append(center)

thickness = 5

#center point

cv2.circle(frame, (center), 1, color, thickness)

#draw motion path画运动轨迹

for j in range(1, len(pts[track.track_id])):

if pts[track.track_id][j - 1] is None or pts[track.track_id][j] is None:

continue

thickness = int(np.sqrt(64 / float(j + 1)) * 2)

cv2.line(frame,(pts[track.track_id][j-1]), (pts[track.track_id][j]),(color),thickness)

#cv2.putText(frame, str(class_names[j]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (255,255,255),2)

#新一个循环,用来进行多目标框的计数

for indexID in indexIDs:

print('209 indexID',indexID)

weizhi=indexIDs.index(indexID)#该方法返回查找对象的索引位置,如果没有找到对象则抛出异常。

print('211 weizhi', weizhi)

if flag_fangxiang==2: #如果这辆车方向是出去的

if center_zong[weizhi][1] < threshold_1 and center_zong[weizhi][1] > threshold_0: #当出去方向的车进入第二个临界线

flag_fangxiang_threshold_1[indexID]=1 #标志位置1

print('出去车辆经过第二个临界点且未经过第一个临界点')

if flag_fangxiang_threshold_1[indexID] == 1: #当进来方向车越过第一个临界线

if center_zong[weizhi][1] < threshold_0: #判断是否大于第二个临界线

print('出去车辆经过第一个临界点')

output_counter = output_counter+1 #如果大于,进来车辆加1

flag_fangxiang_threshold_1[indexID] = 0

print('出去车辆个数',output_counter)

count = len(set(counter)) #set() 函数创建一个无序不重复元素集,可进行关系测试,删除重复数据,还可以计算交集、差集、并集等。

#截取的小图显示 # 参数解释:图像,文字内容, 坐标 , 字体,大小,颜色,字体厚度

cv2.putText(frame, "FPS: %f"%(fps), (int(20), int(30)), 0, 0.5, (0,255,0),1)

cv2.putText(frame, "Current Object Counter: "+str(i), (int(20), int(50)), 0, 0.5, (0,255,0),1)

cv2.putText(frame, "Total Object Counter: "+str(count),(int(20), int(70)),0, 0.5, (0,255,0),1)

cv2.putText(frame, "input: "+str(input_counter), (int(20), int(90)),0, 0.5, (0,255,0),1)

cv2.putText(frame, "output: " + str(output_counter), (int(20), int(110)),0, 0.5, (0,255,0), 1)

cv2.namedWindow("YOLO3_Deep_SORT", 0);

# cv2.resizeWindow('YOLO3_Deep_SORT', 1024, 768);

cv2.imshow('YOLO3_Deep_SORT', frame)

# if writeVideo_flag:

# #save a frame

# #out.write(frame)

# frame_index = frame_index + 1

# list_file.write(str(frame_index)+' ')

# if len(boxs) != 0:

# for i in range(0,len(boxs)):

# list_file.write(str(boxs[i][0]) + ' '+str(boxs[i][1]) + ' '+str(boxs[i][2]) + ' '+str(boxs[i][3]) + ' ')

# list_file.write('\n')

fps = ( fps + (1./(time.time()-t1)) ) / 2

#print(set(counter))

# Press Q to stop!

if cv2.waitKey(1) & 0xFF == ord('q'):

break

print(" ")

print("[Finish]")

end = time.time()

if len(pts[track.track_id]) != None:

print(args["input"][43:57]+": "+ str(count) + " " + str(class_name) +' Found')

else:

print("[No Found]")

video_capture.release()

if writeVideo_flag:

#out.release()

list_file.close()

cv2.destroyAllWindows()

if __name__ == '__main__':

main(YOLO())

2020.6.25.14:48

已经实现单目标下,出去车辆计数,但是多目标和进来车辆并不能计数。

#! /usr/bin/env python

# -*- coding: utf-8 -*-

'''

卡尔曼滤波算法分为两个过程,预测和更新。该算法将目标的运动状态定义为8个正态分布的向量。

预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

匈牙利算法:解决的是一个分配问题,在MOT主要步骤中的计算相似度的,得到了前后两帧的相似度矩阵。匈牙利算法就是通过求解这个相似度矩阵,从而解决前后两帧真正匹配的目标。这部分sklearn库有对应的函数linear_assignment来进行求解。

SORT算法中是通过前后两帧IOU来构建相似度矩阵

'''

from __future__ import division, print_function, absolute_import

import os

import datetime

from timeit import time

import warnings

import cv2

import numpy as np

import argparse

from PIL import Image

from yolo import YOLO

from deep_sort import preprocessing

from deep_sort import nn_matching

from deep_sort.detection import Detection

from deep_sort.tracker import Tracker

from tools import generate_detections as gdet

from deep_sort.detection import Detection as ddet

from collections import deque

from keras import backend

backend.clear_session()

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--input",help="path to input video", default = "./test_video/test.avi")

ap.add_argument("-c", "--class",help="name of class", default = "car")

args = vars(ap.parse_args())

pts = [deque(maxlen=30) for _ in range(9999)]

warnings.filterwarnings('ignore')

# initialize a list of colors to represent each possible class label

np.random.seed(100)

COLORS = np.random.randint(0, 255, size=(200, 3),

dtype="uint8")

def main(yolo):

start = time.time()

#Definition of the parameters

max_cosine_distance = 0.5 #余弦距离的控制阈值

nn_budget = None

nms_max_overlap = 0.3 #非极大抑制的阈值

counter = []

#deep_sort

model_filename = 'model_data/market1501.pb'

encoder = gdet.create_box_encoder(model_filename,batch_size=1)

metric = nn_matching.NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)

tracker = Tracker(metric)

#video_path = "./output/output.avi"

#video_capture = cv2.VideoCapture(args["input"])

video_capture = cv2.VideoCapture('./input/14.mp4')

# 其中0表示打开内置摄像头,1表示打开外接摄像头

if video_capture.isOpened(): # VideoCaputre对象是否成功打开

print('打开摄像头或者视频成功')

writeVideo_flag = True

else:

print('打开摄像头或者视频失败')

writeVideo_flag = False

if writeVideo_flag:

# Define the codec and create VideoWriter object

w = int(video_capture.get(3))# 返回视频的宽

h = int(video_capture.get(4))# 返回视频的高

fourcc = cv2.VideoWriter_fourcc(*'MJPG')

#out = cv2.VideoWriter('./output/'+args["input"][43:57]+ "_" + args["class"] + '_output.avi', fourcc, 15, (w, h))

#out = cv2.VideoWriter('./output/' + "14" + '_output.avi', fourcc, 15, (w, h))

list_file = open('detection.txt', 'w')

frame_index = -1

fps = 0.0

#车辆记数一些参数

flag_fangxiang_threshold_0 = 0 # 表示进来,且过了远处临界点(下面代称:第一个临界点)

flag_fangxiang_threshold_1 = 0 # 表示出去,且过了近处临界点(下面代称:第二个临界点)

input_counter = 0 # 进来车辆记数

output_counter = 0 # 出去车辆记数

flag_fangxiang = 2 # 临时调试用出去车辆用,为1代表进来,为2代表出去,这个值应该判断根据车的运动方向进行判断,和ID绑定在一起,且注意鲁棒性

while writeVideo_flag:

ret, frame0 = video_capture.read() # frame shape 640*480*3

if ret != True:

break

# frame = frame0.crop((90, 450, 450, 850)) # (left, upper, right, lower)

frame = frame0[450:850, 50:450] # 裁剪坐标为[y0:y1, x0:x1

[width,height,tongdao]=frame.shape

# print('宽度', width)

# # print('高度', height)

# # print('通道数', tongdao)

t1 = time.time()

threshold_0 = 100

threshold_1 = 250

#画临界线

cv2.line(frame, (1, threshold_0), (399, threshold_0), (0, 0, 255), 1)

cv2.line(frame, (1, threshold_1), (399, threshold_1), (0, 0, 255), 1)

# image = Image.fromarray(frame)

image = Image.fromarray(frame[...,::-1]) #bgr to rgb

boxs,class_names = yolo.detect_image(image)

features = encoder(frame,boxs)

# score to 1.0 here).

detections = [Detection(bbox, 1.0, feature) for bbox, feature in zip(boxs, features)]

# Run non-maxima suppression.

boxes = np.array([d.tlwh for d in detections])

scores = np.array([d.confidence for d in detections])

#NMS:非极大值抑制

indices = preprocessing.non_max_suppression(boxes, nms_max_overlap, scores)

detections = [detections[i] for i in indices]

# Call the tracker

#预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

#卡尔曼滤波可以根据Tracks状态预测下一帧的目标框状态

tracker.predict()

#更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

#卡尔曼滤波更新是对观测值(匹配上的Track)和估计值更新所有track的状态

tracker.update(detections)

i = int(0)

indexIDs = []

c = []

boxes = []

for det in detections:

bbox = det.to_tlbr()

cv2.rectangle(frame,(int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(255,255,255), 2)

for track in tracker.tracks:

if not track.is_confirmed() or track.time_since_update > 1:

continue

#boxes.append([track[0], track[1], track[2], track[3]])

indexIDs.append(int(track.track_id))

counter.append(int(track.track_id))

bbox = track.to_tlbr()

color = [int(c) for c in COLORS[indexIDs[i] % len(COLORS)]]

cv2.rectangle(frame, (int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(color), 3)

cv2.putText(frame,str(track.track_id),(int(bbox[0]), int(bbox[1] -50)),0, 5e-3 * 150, (color),2)

if len(class_names) > 0:

class_name = class_names[0]

cv2.putText(frame, str(class_names[0]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (color),2)

i += 1

#bbox_center_point(x,y)

# center[1]表示纵坐标

center = (int(((bbox[0])+(bbox[2]))/2),int(((bbox[1])+(bbox[3]))/2))

if flag_fangxiang==1: #如果这辆车方向是进来的

if center[1] > threshold_0: #当进来方向的车进入第一个临界线

flag_fangxiang_threshold_0=1 #标志位置1

if flag_fangxiang_threshold_0 == 1: #当进来方向车越过第一个临界线

if center[1] > threshold_1: #判断是否大于第二个临界线

input_counter = input_counter+1 #如果大于,进来车辆加1

flag_fangxiang_threshold_0=0

print('进来车辆个数:',input_counter)

if flag_fangxiang==2: #如果这辆车方向是出去的

if center[1] < threshold_1 and center[1] > threshold_0: #当出去方向的车进入第二个临界线

flag_fangxiang_threshold_1=1 #标志位置1

print('出去车辆经过第二个临界点且未经过第一个临界点')

if flag_fangxiang_threshold_1 == 1: #当进来方向车越过第一个临界线

if center[1] < threshold_0: #判断是否大于第二个临界线

print('出去车辆经过第一个临界点')

output_counter = output_counter+1 #如果大于,进来车辆加1

flag_fangxiang_threshold_1=0

print('出去车辆个数',output_counter)

#track_id[center]

pts[track.track_id].append(center)

thickness = 5

#center point

cv2.circle(frame, (center), 1, color, thickness)

#draw motion path画运动轨迹

for j in range(1, len(pts[track.track_id])):

if pts[track.track_id][j - 1] is None or pts[track.track_id][j] is None:

continue

thickness = int(np.sqrt(64 / float(j + 1)) * 2)

cv2.line(frame,(pts[track.track_id][j-1]), (pts[track.track_id][j]),(color),thickness)

#cv2.putText(frame, str(class_names[j]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (255,255,255),2)

count = len(set(counter))

#截取的小图显示 # 参数解释:图像,文字内容, 坐标 , 字体,大小,颜色,字体厚度

cv2.putText(frame, "FPS: %f"%(fps), (int(20), int(30)), 0, 0.5, (0,255,0),1)

cv2.putText(frame, "Current Object Counter: "+str(i), (int(20), int(50)), 0, 0.5, (0,255,0),1)

cv2.putText(frame, "Total Object Counter: "+str(count),(int(20), int(70)),0, 0.5, (0,255,0),1)

cv2.putText(frame, "input: "+str(input_counter), (int(20), int(90)),0, 0.5, (0,255,0),1)

cv2.putText(frame, "output: " + str(output_counter), (int(20), int(110)),0, 0.5, (0,255,0), 1)

cv2.namedWindow("YOLO3_Deep_SORT", 0);

# cv2.resizeWindow('YOLO3_Deep_SORT', 1024, 768);

cv2.imshow('YOLO3_Deep_SORT', frame)

if writeVideo_flag:

#save a frame

#out.write(frame)

frame_index = frame_index + 1

list_file.write(str(frame_index)+' ')

if len(boxs) != 0:

for i in range(0,len(boxs)):

list_file.write(str(boxs[i][0]) + ' '+str(boxs[i][1]) + ' '+str(boxs[i][2]) + ' '+str(boxs[i][3]) + ' ')

list_file.write('\n')

fps = ( fps + (1./(time.time()-t1)) ) / 2

#print(set(counter))

# Press Q to stop!

if cv2.waitKey(1) & 0xFF == ord('q'):

break

print(" ")

print("[Finish]")

end = time.time()

if len(pts[track.track_id]) != None:

print(args["input"][43:57]+": "+ str(count) + " " + str(class_name) +' Found')

else:

print("[No Found]")

video_capture.release()

if writeVideo_flag:

#out.release()

list_file.close()

cv2.destroyAllWindows()

if __name__ == '__main__':

main(YOLO())

2020.6.24.17:02

修改center纵坐标bug,调整可视化界面

#! /usr/bin/env python

# -*- coding: utf-8 -*-

'''

卡尔曼滤波算法分为两个过程,预测和更新。该算法将目标的运动状态定义为8个正态分布的向量。

预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

匈牙利算法:解决的是一个分配问题,在MOT主要步骤中的计算相似度的,得到了前后两帧的相似度矩阵。匈牙利算法就是通过求解这个相似度矩阵,从而解决前后两帧真正匹配的目标。这部分sklearn库有对应的函数linear_assignment来进行求解。

SORT算法中是通过前后两帧IOU来构建相似度矩阵

'''

from __future__ import division, print_function, absolute_import

import os

import datetime

from timeit import time

import warnings

import cv2

import numpy as np

import argparse

from PIL import Image

from yolo import YOLO

from deep_sort import preprocessing

from deep_sort import nn_matching

from deep_sort.detection import Detection

from deep_sort.tracker import Tracker

from tools import generate_detections as gdet

from deep_sort.detection import Detection as ddet

from collections import deque

from keras import backend

backend.clear_session()

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--input",help="path to input video", default = "./test_video/test.avi")

ap.add_argument("-c", "--class",help="name of class", default = "car")

args = vars(ap.parse_args())

pts = [deque(maxlen=30) for _ in range(9999)]

warnings.filterwarnings('ignore')

# initialize a list of colors to represent each possible class label

np.random.seed(100)

COLORS = np.random.randint(0, 255, size=(200, 3),

dtype="uint8")

def main(yolo):

start = time.time()

#Definition of the parameters

max_cosine_distance = 0.5 #余弦距离的控制阈值

nn_budget = None

nms_max_overlap = 0.3 #非极大抑制的阈值

counter = []

#deep_sort

model_filename = 'model_data/market1501.pb'

encoder = gdet.create_box_encoder(model_filename,batch_size=1)

metric = nn_matching.NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)

tracker = Tracker(metric)

#video_path = "./output/output.avi"

#video_capture = cv2.VideoCapture(args["input"])

video_capture = cv2.VideoCapture('./input/14.mp4')

# 其中0表示打开内置摄像头,1表示打开外接摄像头

if video_capture.isOpened(): # VideoCaputre对象是否成功打开

print('打开摄像头或者视频成功')

writeVideo_flag = True

else:

print('打开摄像头或者视频失败')

writeVideo_flag = False

if writeVideo_flag:

# Define the codec and create VideoWriter object

w = int(video_capture.get(3))# 返回视频的宽

h = int(video_capture.get(4))# 返回视频的高

fourcc = cv2.VideoWriter_fourcc(*'MJPG')

#out = cv2.VideoWriter('./output/'+args["input"][43:57]+ "_" + args["class"] + '_output.avi', fourcc, 15, (w, h))

#out = cv2.VideoWriter('./output/' + "14" + '_output.avi', fourcc, 15, (w, h))

list_file = open('detection.txt', 'w')

frame_index = -1

fps = 0.0

#车辆记数一些参数

flag_fangxiang_threshold_0 = 0 # 表示进来,且过了远处临界点(下面代称:第一个临界点)

flag_fangxiang_threshold_1 = 0 # 表示出去,且过了近处临界点(下面代称:第二个临界点)

input_counter = 0 # 进来车辆记数

output_counter = 0 # 出去车辆记数

flag_fangxiang = 2 # 临时调试用出去车辆用,为1代表进来,为2代表出去,这个值应该判断根据车的运动方向进行判断,和ID绑定在一起,且注意鲁棒性

while writeVideo_flag:

ret, frame0 = video_capture.read() # frame shape 640*480*3

if ret != True:

break

# frame = frame0.crop((90, 450, 450, 850)) # (left, upper, right, lower)

frame = frame0[450:850, 50:450] # 裁剪坐标为[y0:y1, x0:x1

[width,height,tongdao]=frame.shape

# print('宽度', width)

# # print('高度', height)

# # print('通道数', tongdao)

t1 = time.time()

threshold_0 = 100

threshold_1 = 250

#画临界线

cv2.line(frame, (1, threshold_0), (399, threshold_0), (0, 0, 255), 1)

cv2.line(frame, (1, threshold_1), (399, threshold_1), (0, 0, 255), 1)

# image = Image.fromarray(frame)

image = Image.fromarray(frame[...,::-1]) #bgr to rgb

boxs,class_names = yolo.detect_image(image)

features = encoder(frame,boxs)

# score to 1.0 here).

detections = [Detection(bbox, 1.0, feature) for bbox, feature in zip(boxs, features)]

# Run non-maxima suppression.

boxes = np.array([d.tlwh for d in detections])

scores = np.array([d.confidence for d in detections])

#NMS:非极大值抑制

indices = preprocessing.non_max_suppression(boxes, nms_max_overlap, scores)

detections = [detections[i] for i in indices]

# Call the tracker

#预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

#卡尔曼滤波可以根据Tracks状态预测下一帧的目标框状态

tracker.predict()

#更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

#卡尔曼滤波更新是对观测值(匹配上的Track)和估计值更新所有track的状态

tracker.update(detections)

i = int(0)

indexIDs = []

c = []

boxes = []

for det in detections:

bbox = det.to_tlbr()

cv2.rectangle(frame,(int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(255,255,255), 2)

for track in tracker.tracks:

if not track.is_confirmed() or track.time_since_update > 1:

continue

#boxes.append([track[0], track[1], track[2], track[3]])

indexIDs.append(int(track.track_id))

counter.append(int(track.track_id))

bbox = track.to_tlbr()

color = [int(c) for c in COLORS[indexIDs[i] % len(COLORS)]]

cv2.rectangle(frame, (int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(color), 3)

cv2.putText(frame,str(track.track_id),(int(bbox[0]), int(bbox[1] -50)),0, 5e-3 * 150, (color),2)

if len(class_names) > 0:

class_name = class_names[0]

cv2.putText(frame, str(class_names[0]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (color),2)

i += 1

#bbox_center_point(x,y)

# center[1]表示纵坐标

center = (int(((bbox[0])+(bbox[2]))/2),int(((bbox[1])+(bbox[3]))/2))

if flag_fangxiang==1: #如果这辆车方向是进来的

if center[1] > threshold_0: #当进来方向的车进入第一个临界线

flag_fangxiang_threshold_0=1 #标志位置1

if flag_fangxiang_threshold_0 == 1: #当进来方向车越过第一个临界线

if center[1] > threshold_1: #判断是否大于第二个临界线

input_counter = input_counter+1 #如果大于,进来车辆加1

print('进来车辆个数:',input_counter)

if flag_fangxiang==2: #如果这辆车方向是出去的

if center[1] < threshold_1: #当出去方向的车进入第二个临界线

flag_fangxiang_threshold_1=1 #标志位置1

print('出去车辆经过第二个临界点', flag_fangxiang_threshold_1)

if flag_fangxiang_threshold_1 == 1: #当进来方向车越过第一个临界线

if center[1] < threshold_0: #判断是否大于第二个临界线

output_counter = output_counter+1 #如果大于,进来车辆加1

print('出去车辆个数',output_counter)

#track_id[center]

pts[track.track_id].append(center)

thickness = 5

#center point

cv2.circle(frame, (center), 1, color, thickness)

#draw motion path

for j in range(1, len(pts[track.track_id])):

if pts[track.track_id][j - 1] is None or pts[track.track_id][j] is None:

continue

thickness = int(np.sqrt(64 / float(j + 1)) * 2)

cv2.line(frame,(pts[track.track_id][j-1]), (pts[track.track_id][j]),(color),thickness)

#cv2.putText(frame, str(class_names[j]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (255,255,255),2)

count = len(set(counter))

#截取的小图显示 # 参数解释:图像,文字内容, 坐标 , 字体,大小,颜色,字体厚度

cv2.putText(frame, "FPS: %f"%(fps), (int(20), int(30)), 0, 0.5, (0,255,0),1)

cv2.putText(frame, "Current Object Counter: "+str(i), (int(20), int(50)), 0, 0.5, (0,255,0),1)

cv2.putText(frame, "Total Object Counter: "+str(count),(int(20), int(70)),0, 0.5, (0,255,0),1)

cv2.putText(frame, "input: "+str(input_counter), (int(20), int(90)),0, 0.5, (0,255,0),1)

cv2.putText(frame, "output: " + str(output_counter), (int(20), int(110)),0, 0.5, (0,255,0), 1)

cv2.namedWindow("YOLO3_Deep_SORT", 0);

# cv2.resizeWindow('YOLO3_Deep_SORT', 1024, 768);

cv2.imshow('YOLO3_Deep_SORT', frame)

if writeVideo_flag:

#save a frame

#out.write(frame)

frame_index = frame_index + 1

list_file.write(str(frame_index)+' ')

if len(boxs) != 0:

for i in range(0,len(boxs)):

list_file.write(str(boxs[i][0]) + ' '+str(boxs[i][1]) + ' '+str(boxs[i][2]) + ' '+str(boxs[i][3]) + ' ')

list_file.write('\n')

fps = ( fps + (1./(time.time()-t1)) ) / 2

#print(set(counter))

# Press Q to stop!

if cv2.waitKey(1) & 0xFF == ord('q'):

break

print(" ")

print("[Finish]")

end = time.time()

if len(pts[track.track_id]) != None:

print(args["input"][43:57]+": "+ str(count) + " " + str(class_name) +' Found')

else:

print("[No Found]")

video_capture.release()

if writeVideo_flag:

#out.release()

list_file.close()

cv2.destroyAllWindows()

if __name__ == '__main__':

main(YOLO())

2020.6.24.16:43

加入显示临界线

#! /usr/bin/env python

# -*- coding: utf-8 -*-

'''

卡尔曼滤波算法分为两个过程,预测和更新。该算法将目标的运动状态定义为8个正态分布的向量。

预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

匈牙利算法:解决的是一个分配问题,在MOT主要步骤中的计算相似度的,得到了前后两帧的相似度矩阵。匈牙利算法就是通过求解这个相似度矩阵,从而解决前后两帧真正匹配的目标。这部分sklearn库有对应的函数linear_assignment来进行求解。

SORT算法中是通过前后两帧IOU来构建相似度矩阵

'''

from __future__ import division, print_function, absolute_import

import os

import datetime

from timeit import time

import warnings

import cv2

import numpy as np

import argparse

from PIL import Image

from yolo import YOLO

from deep_sort import preprocessing

from deep_sort import nn_matching

from deep_sort.detection import Detection

from deep_sort.tracker import Tracker

from tools import generate_detections as gdet

from deep_sort.detection import Detection as ddet

from collections import deque

from keras import backend

backend.clear_session()

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--input",help="path to input video", default = "./test_video/test.avi")

ap.add_argument("-c", "--class",help="name of class", default = "car")

args = vars(ap.parse_args())

pts = [deque(maxlen=30) for _ in range(9999)]

warnings.filterwarnings('ignore')

# initialize a list of colors to represent each possible class label

np.random.seed(100)

COLORS = np.random.randint(0, 255, size=(200, 3),

dtype="uint8")

def main(yolo):

start = time.time()

#Definition of the parameters

max_cosine_distance = 0.5 #余弦距离的控制阈值

nn_budget = None

nms_max_overlap = 0.3 #非极大抑制的阈值

counter = []

#deep_sort

model_filename = 'model_data/market1501.pb'

encoder = gdet.create_box_encoder(model_filename,batch_size=1)

metric = nn_matching.NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)

tracker = Tracker(metric)

#video_path = "./output/output.avi"

#video_capture = cv2.VideoCapture(args["input"])

video_capture = cv2.VideoCapture('./input/14.mp4')

# 其中0表示打开内置摄像头,1表示打开外接摄像头

if video_capture.isOpened(): # VideoCaputre对象是否成功打开

print('打开摄像头或者视频成功')

writeVideo_flag = True

else:

print('打开摄像头或者视频失败')

writeVideo_flag = False

if writeVideo_flag:

# Define the codec and create VideoWriter object

w = int(video_capture.get(3))# 返回视频的宽

h = int(video_capture.get(4))# 返回视频的高

fourcc = cv2.VideoWriter_fourcc(*'MJPG')

#out = cv2.VideoWriter('./output/'+args["input"][43:57]+ "_" + args["class"] + '_output.avi', fourcc, 15, (w, h))

#out = cv2.VideoWriter('./output/' + "14" + '_output.avi', fourcc, 15, (w, h))

list_file = open('detection.txt', 'w')

frame_index = -1

fps = 0.0

#车辆记数一些参数

flag_fangxiang_threshold_0 = 0 # 表示进来,且过了远处临界点(下面代称:第一个临界点)

flag_fangxiang_threshold_1 = 0 # 表示出去,且过了近处临界点(下面代称:第二个临界点)

input_counter = 0 # 进来车辆记数

output_counter = 0 # 出去车辆记数

flag_fangxiang = 2 # 临时调试用出去车辆用,为1代表进来,为2代表出去,这个值应该判断根据车的运动方向进行判断,和ID绑定在一起,且注意鲁棒性

while writeVideo_flag:

ret, frame0 = video_capture.read() # frame shape 640*480*3

if ret != True:

break

# frame = frame0.crop((90, 450, 450, 850)) # (left, upper, right, lower)

frame = frame0[450:850, 50:450] # 裁剪坐标为[y0:y1, x0:x1

[width,height,tongdao]=frame.shape

# print('宽度', width)

# # print('高度', height)

# # print('通道数', tongdao)

t1 = time.time()

threshold_0 = 100

threshold_1 = 250

#画临界线

cv2.line(frame, (1, threshold_0), (399, threshold_0), (0, 0, 255), 1)

cv2.line(frame, (1, threshold_1), (399, threshold_1), (0, 0, 255), 1)

# image = Image.fromarray(frame)

image = Image.fromarray(frame[...,::-1]) #bgr to rgb

boxs,class_names = yolo.detect_image(image)

features = encoder(frame,boxs)

# score to 1.0 here).

detections = [Detection(bbox, 1.0, feature) for bbox, feature in zip(boxs, features)]

# Run non-maxima suppression.

boxes = np.array([d.tlwh for d in detections])

scores = np.array([d.confidence for d in detections])

#NMS:非极大值抑制

indices = preprocessing.non_max_suppression(boxes, nms_max_overlap, scores)

detections = [detections[i] for i in indices]

# Call the tracker

#预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

#卡尔曼滤波可以根据Tracks状态预测下一帧的目标框状态

tracker.predict()

#更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

#卡尔曼滤波更新是对观测值(匹配上的Track)和估计值更新所有track的状态

tracker.update(detections)

i = int(0)

indexIDs = []

c = []

boxes = []

for det in detections:

bbox = det.to_tlbr()

cv2.rectangle(frame,(int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(255,255,255), 2)

for track in tracker.tracks:

if not track.is_confirmed() or track.time_since_update > 1:

continue

#boxes.append([track[0], track[1], track[2], track[3]])

indexIDs.append(int(track.track_id))

counter.append(int(track.track_id))

bbox = track.to_tlbr()

color = [int(c) for c in COLORS[indexIDs[i] % len(COLORS)]]

cv2.rectangle(frame, (int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(color), 3)

cv2.putText(frame,str(track.track_id),(int(bbox[0]), int(bbox[1] -50)),0, 5e-3 * 150, (color),2)

if len(class_names) > 0:

class_name = class_names[0]

cv2.putText(frame, str(class_names[0]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (color),2)

i += 1

#bbox_center_point(x,y)

center = (int(((bbox[0])+(bbox[2]))/2),int(((bbox[1])+(bbox[3]))/2))

if flag_fangxiang==1: #如果这辆车方向是进来的

if center[0] > threshold_0: #当进来方向的车进入第一个临界线

flag_fangxiang_threshold_0=1 #标志位置1

if flag_fangxiang_threshold_0 == 1: #当进来方向车越过第一个临界线

if center[0] > threshold_1: #判断是否大于第二个临界线

input_counter = input_counter+1 #如果大于,进来车辆加1

print('进来车辆个数:',input_counter)

if flag_fangxiang==2: #如果这辆车方向是出去的

if center[0] < threshold_1: #当出去方向的车进入第二个临界线

flag_fangxiang_threshold_1=1 #标志位置1

print('出去车辆经过第二个临界点', flag_fangxiang_threshold_1)

if flag_fangxiang_threshold_0 == 1: #当进来方向车越过第一个临界线

if center[0] < threshold_0: #判断是否大于第二个临界线

output_counter = output_counter+1 #如果大于,进来车辆加1

print('出去车辆个数',output_counter)

#track_id[center]

pts[track.track_id].append(center)

thickness = 5

#center point

cv2.circle(frame, (center), 1, color, thickness)

#draw motion path

for j in range(1, len(pts[track.track_id])):

if pts[track.track_id][j - 1] is None or pts[track.track_id][j] is None:

continue

thickness = int(np.sqrt(64 / float(j + 1)) * 2)

cv2.line(frame,(pts[track.track_id][j-1]), (pts[track.track_id][j]),(color),thickness)

#cv2.putText(frame, str(class_names[j]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (255,255,255),2)

count = len(set(counter))

# cv2.putText(frame, "Total Object Counter: "+str(count),(int(20), int(120)),0, 5e-3 * 200, (0,255,0),2)

# cv2.putText(frame, "Current Object Counter: "+str(i),(int(20), int(80)),0, 5e-3 * 200, (0,255,0),2)

# cv2.putText(frame, "FPS: %f"%(fps),(int(20), int(40)),0, 5e-3 * 200, (0,255,0),3)

#截取的小图显示 # 参数解释:图像,文字内容, 坐标 , 字体,大小,颜色,字体厚度

cv2.putText(frame, "Total Object Counter: "+str(count),(int(20), int(120)),0, 0.5, (0,255,0),1)

cv2.putText(frame, "Current Object Counter: "+str(i), (int(20), int(80)), 0, 0.5, (0,255,0),1)

cv2.putText(frame, "FPS: %f"%(fps), (int(20), int(40)), 0, 1.2, (0,255,0),1)

cv2.namedWindow("YOLO3_Deep_SORT", 0);

# cv2.resizeWindow('YOLO3_Deep_SORT', 1024, 768);

cv2.imshow('YOLO3_Deep_SORT', frame)

if writeVideo_flag:

#save a frame

#out.write(frame)

frame_index = frame_index + 1

list_file.write(str(frame_index)+' ')

if len(boxs) != 0:

for i in range(0,len(boxs)):

list_file.write(str(boxs[i][0]) + ' '+str(boxs[i][1]) + ' '+str(boxs[i][2]) + ' '+str(boxs[i][3]) + ' ')

list_file.write('\n')

fps = ( fps + (1./(time.time()-t1)) ) / 2

#print(set(counter))

# Press Q to stop!

if cv2.waitKey(1) & 0xFF == ord('q'):

break

print(" ")

print("[Finish]")

end = time.time()

if len(pts[track.track_id]) != None:

print(args["input"][43:57]+": "+ str(count) + " " + str(class_name) +' Found')

else:

print("[No Found]")

video_capture.release()

if writeVideo_flag:

#out.release()

list_file.close()

cv2.destroyAllWindows()

if __name__ == '__main__':

main(YOLO())

2020.6.24.16.10

#! /usr/bin/env python

# -*- coding: utf-8 -*-

'''

卡尔曼滤波算法分为两个过程,预测和更新。该算法将目标的运动状态定义为8个正态分布的向量。

预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

匈牙利算法:解决的是一个分配问题,在MOT主要步骤中的计算相似度的,得到了前后两帧的相似度矩阵。匈牙利算法就是通过求解这个相似度矩阵,从而解决前后两帧真正匹配的目标。这部分sklearn库有对应的函数linear_assignment来进行求解。

SORT算法中是通过前后两帧IOU来构建相似度矩阵

'''

from __future__ import division, print_function, absolute_import

import os

import datetime

from timeit import time

import warnings

import cv2

import numpy as np

import argparse

from PIL import Image

from yolo import YOLO

from deep_sort import preprocessing

from deep_sort import nn_matching

from deep_sort.detection import Detection

from deep_sort.tracker import Tracker

from tools import generate_detections as gdet

from deep_sort.detection import Detection as ddet

from collections import deque

from keras import backend

backend.clear_session()

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--input",help="path to input video", default = "./test_video/test.avi")

ap.add_argument("-c", "--class",help="name of class", default = "car")

args = vars(ap.parse_args())

pts = [deque(maxlen=30) for _ in range(9999)]

warnings.filterwarnings('ignore')

# initialize a list of colors to represent each possible class label

np.random.seed(100)

COLORS = np.random.randint(0, 255, size=(200, 3),

dtype="uint8")

def main(yolo):

start = time.time()

#Definition of the parameters

max_cosine_distance = 0.5 #余弦距离的控制阈值

nn_budget = None

nms_max_overlap = 0.3 #非极大抑制的阈值

counter = []

#deep_sort

model_filename = 'model_data/market1501.pb'

encoder = gdet.create_box_encoder(model_filename,batch_size=1)

metric = nn_matching.NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)

tracker = Tracker(metric)

#video_path = "./output/output.avi"

#video_capture = cv2.VideoCapture(args["input"])

video_capture = cv2.VideoCapture('./input/14.mp4')

# 其中0表示打开内置摄像头,1表示打开外接摄像头

if video_capture.isOpened(): # VideoCaputre对象是否成功打开

print('打开摄像头或者视频成功')

writeVideo_flag = True

else:

print('打开摄像头或者视频失败')

writeVideo_flag = False

if writeVideo_flag:

# Define the codec and create VideoWriter object

w = int(video_capture.get(3))# 返回视频的宽

h = int(video_capture.get(4))# 返回视频的高

fourcc = cv2.VideoWriter_fourcc(*'MJPG')

#out = cv2.VideoWriter('./output/'+args["input"][43:57]+ "_" + args["class"] + '_output.avi', fourcc, 15, (w, h))

#out = cv2.VideoWriter('./output/' + "14" + '_output.avi', fourcc, 15, (w, h))

list_file = open('detection.txt', 'w')

frame_index = -1

fps = 0.0

while writeVideo_flag:

ret, frame0 = video_capture.read() # frame shape 640*480*3

if ret != True:

break

# frame = frame0.crop((90, 450, 450, 850)) # (left, upper, right, lower)

frame = frame0[450:850, 50:450] # 裁剪坐标为[y0:y1, x0:x1

t1 = time.time()

# image = Image.fromarray(frame)

image = Image.fromarray(frame[...,::-1]) #bgr to rgb

boxs,class_names = yolo.detect_image(image)

features = encoder(frame,boxs)

# score to 1.0 here).

detections = [Detection(bbox, 1.0, feature) for bbox, feature in zip(boxs, features)]

# Run non-maxima suppression.

boxes = np.array([d.tlwh for d in detections])

scores = np.array([d.confidence for d in detections])

#NMS:非极大值抑制

indices = preprocessing.non_max_suppression(boxes, nms_max_overlap, scores)

detections = [detections[i] for i in indices]

# Call the tracker

#预测:当目标经过移动,通过上一帧的目标框和速度等参数,预测出当前帧的目标框位置和速度等参数。

#卡尔曼滤波可以根据Tracks状态预测下一帧的目标框状态

tracker.predict()

#更新:预测值和观测值,两个正态分布的状态进行线性加权,得到目前系统预测的状态。

#卡尔曼滤波更新是对观测值(匹配上的Track)和估计值更新所有track的状态

tracker.update(detections)

i = int(0)

indexIDs = []

c = []

boxes = []

for det in detections:

bbox = det.to_tlbr()

cv2.rectangle(frame,(int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(255,255,255), 2)

for track in tracker.tracks:

if not track.is_confirmed() or track.time_since_update > 1:

continue

#boxes.append([track[0], track[1], track[2], track[3]])

indexIDs.append(int(track.track_id))

counter.append(int(track.track_id))

bbox = track.to_tlbr()

color = [int(c) for c in COLORS[indexIDs[i] % len(COLORS)]]

cv2.rectangle(frame, (int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(color), 3)

cv2.putText(frame,str(track.track_id),(int(bbox[0]), int(bbox[1] -50)),0, 5e-3 * 150, (color),2)

if len(class_names) > 0:

class_name = class_names[0]

cv2.putText(frame, str(class_names[0]),(int(bbox[0]), int(bbox[1] -20)),0, 5e-3 * 150, (color),2)

i += 1

#bbox_center_point(x,y)

center = (int(((bbox[0])+(bbox[2]))/2),int(((bbox[1])+(bbox[3]))/2))

flag_fangxiang=0 #表示进来

flag_fangxiang_threshold_0=0 #表示进来,且过了远处临界点(下面代称:第一个临界点)

flag_fangxiang=1 #表示出去

flag_fangxiang_threshold_1 = 0 # 表示出去,且过了近处临界点(下面代称:第二个临界点)

input_counter=0 #进来车辆记数

output_counter = 0 #出去车辆记数

threshold_0=50

threshold_1=100

cv2.line(frame, (0,threshold_0),(0,threshold_1), (color), thickness)

flag_fangxiang=1#调试用,这个值应该判断

if flag_fangxiang==1: #如果这辆车方向是进来的

if center > threshold_0: #当进来方向的车进入第一个临界线

flag_fangxiang_threshold_0=1 #标志位置1

if flag_fangxiang_threshold_0 == 1: #当进来方向车越过第一个临界线

if center > threshold_1: #判断是否大于第二个临界线

input_counter = input_counter+1 #如果大于,进来车辆加1

print('进来车辆个数:',input_counter)