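I understand how Binder works in principle, but I forget the details of the call path every time I read them. So I'm writing one more article to walk through it again, which should also make it easier to look things up later.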

I. Definition

Binder: an inter-process communication (IPC) mechanism on the Android platform.

II. Why Binder?

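- One memory copy: better than a socket's two, worse than shared memory's zero; overall a good trade-off;

- Client/server architecture: the structure is clear and the two ends are relatively independent, which suits Android's architecture;

- Security: the driver attaches the caller's UID/PID to each transaction, so the receiver can verify who is calling;

Taking all of this into account, Android chose Binder.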

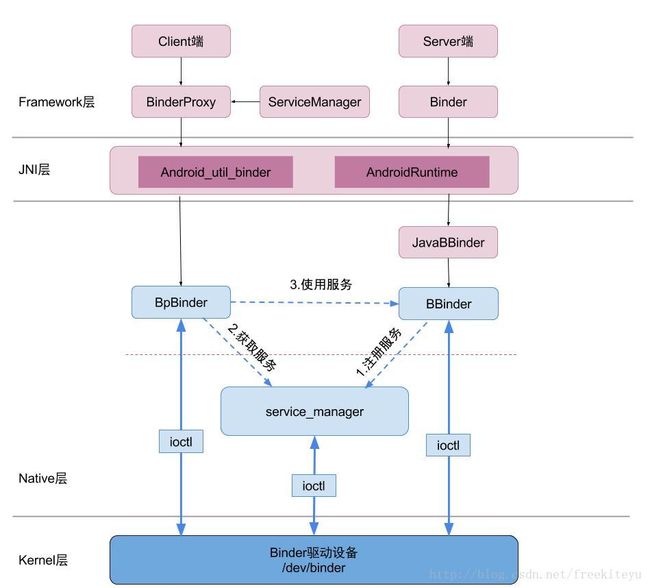

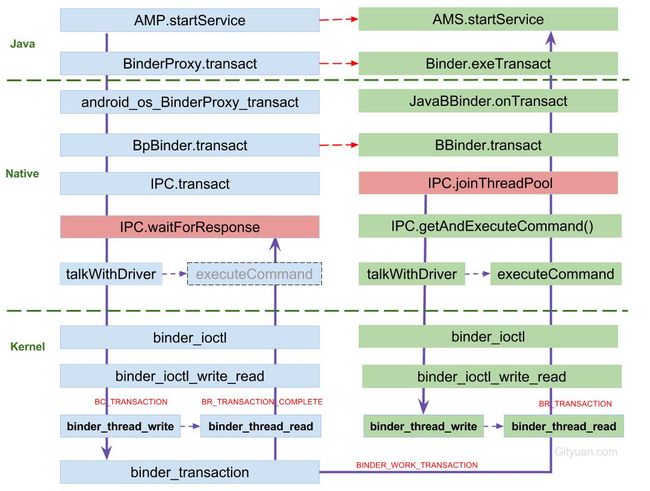

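III. Call flow from the application layer to the Binder driver

Let's trace the Binder call flow using getMemoryInfo as an example, based on Android N code. Why N? Starting with O, AMS's Binder calls were switched to AIDL, so the concrete Stub files are only generated at build time. That's a hassle; reading N is more direct, and the principle is the same anyway.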

#ActivityManager

```java
public void getMemoryInfo(MemoryInfo outInfo) {
    try {
        ActivityManagerNative.getDefault().getMemoryInfo(outInfo);
    } catch (RemoteException e) {
        throw e.rethrowFromSystemServer();
    }
}
```

#ActivityManagerNative

```java
static public IActivityManager getDefault() {
    return gDefault.get();
}

private static final Singleton<IActivityManager> gDefault = new Singleton<IActivityManager>() {
    protected IActivityManager create() {
        // Look up the binder that AMS registered under the name "activity"
        IBinder b = ServiceManager.getService("activity");
        if (false) {
            Log.v("ActivityManager", "default service binder = " + b);
        }
        IActivityManager am = asInterface(b);
        if (false) {
            Log.v("ActivityManager", "default service = " + am);
        }
        return am;
    }
};
```

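First we obtain the BinderProxy that AMS registered with ServiceManager. A system service must register with ServiceManager before it can be looked up and used, whereas an app's Service component only needs to be registered with AMS.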
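The lookup-then-cache pattern above (register by name, look up by name, build the interface once) can be modeled in a few lines. This is a minimal C++ sketch under simplifying assumptions, not AOSP code: `ServiceRegistry`, `LazySingleton` and the integer handle are hypothetical stand-ins for ServiceManager, `android.util.Singleton` and the BinderProxy.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <optional>
#include <string>
#include <utility>

// Hypothetical stand-in for ServiceManager: a system service registers a
// name -> handle mapping once; clients then look the handle up by name.
class ServiceRegistry {
public:
    void addService(const std::string& name, int handle) { services_[name] = handle; }

    std::optional<int> getService(const std::string& name) const {
        auto it = services_.find(name);
        if (it == services_.end()) return std::nullopt;  // not registered (yet)
        return it->second;
    }

private:
    std::map<std::string, int> services_;
};

// Hypothetical analogue of the Singleton behind gDefault: create() runs on
// the first get() only; later calls return the cached value.
// Single-threaded for brevity (the Java helper synchronizes get()).
template <typename T>
class LazySingleton {
public:
    explicit LazySingleton(std::function<T()> create) : create_(std::move(create)) {}

    const T& get() {
        if (!instance_) {
            instance_ = create_();
            ++createCount;
        }
        return *instance_;
    }

    int createCount = 0;  // how many times create() has run

private:
    std::function<T()> create_;
    std::optional<T> instance_;
};
```

Usage: the first `get()` runs the lookup and every later call returns the cached value, which mirrors why `getDefault()` only pays for the ServiceManager query once per process.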

```java
static public IActivityManager asInterface(IBinder obj) {
    if (obj == null) {
        return null;
    }
    // Same process: the binder object is the service implementation itself
    IActivityManager in =
        (IActivityManager)obj.queryLocalInterface(descriptor);
    if (in != null) {
        return in;
    }

    // Different process: wrap the BinderProxy in a proxy
    return new ActivityManagerProxy(obj);
}
```

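This creates an ActivityManagerProxy and passes in the BinderProxy. In effect, asInterface returns either the local service or a proxy for the remote service: if queryLocalInterface returns a non-null object, the service lives in the same process and is used directly.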
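The local-or-proxy decision in asInterface can be sketched as follows. All types are hypothetical simplifications (C++ instead of Java, `shared_ptr` instead of garbage collection); only the descriptor string follows IActivityManager.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Minimal model of the asInterface() pattern. All types are hypothetical
// simplifications of IBinder, IActivityManager and ActivityManagerProxy.
const std::string kDescriptor = "android.app.IActivityManager";

struct IActivityManagerLike {
    virtual ~IActivityManagerLike() = default;
    virtual std::string kind() const = 0;
};

struct IBinderLike {
    virtual ~IBinderLike() = default;
    // Non-null only when the implementation lives in this process.
    virtual IActivityManagerLike* queryLocalInterface(const std::string& descriptor) = 0;
};

// Same-process service: the binder object *is* the implementation.
struct LocalService : IBinderLike, IActivityManagerLike {
    IActivityManagerLike* queryLocalInterface(const std::string& d) override {
        return d == kDescriptor ? this : nullptr;
    }
    std::string kind() const override { return "local"; }
};

// Reference to a service in another process (the BinderProxy role).
struct RemoteBinder : IBinderLike {
    IActivityManagerLike* queryLocalInterface(const std::string&) override { return nullptr; }
};

// The ActivityManagerProxy role: forwards calls through mRemote.
struct ManagerProxy : IActivityManagerLike {
    explicit ManagerProxy(IBinderLike* remote) : mRemote(remote) {}
    std::string kind() const override { return "proxy"; }
    IBinderLike* mRemote;
};

std::shared_ptr<IActivityManagerLike> asInterface(IBinderLike* obj) {
    if (obj == nullptr) return nullptr;
    if (IActivityManagerLike* in = obj->queryLocalInterface(kDescriptor)) {
        // Local object: hand it back as-is (non-owning, no-op deleter).
        return std::shared_ptr<IActivityManagerLike>(in, [](IActivityManagerLike*) {});
    }
    return std::make_shared<ManagerProxy>(obj);  // remote: wrap in a proxy
}
```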

ActivityManagerProxy implements the corresponding methods; mRemote is the BinderProxy passed in when the proxy was constructed.

#ActivityManagerProxy

```java
public void getMemoryInfo(ActivityManager.MemoryInfo outInfo) throws RemoteException {
    Parcel data = Parcel.obtain();
    Parcel reply = Parcel.obtain();
    data.writeInterfaceToken(IActivityManager.descriptor);
    mRemote.transact(GET_MEMORY_INFO_TRANSACTION, data, reply, 0);
    reply.readException();
    outInfo.readFromParcel(reply);
    data.recycle();
    reply.recycle();
}
```

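The data is packed into a Parcel and passed on through the binder's transact call. A Parcel is used here for two main reasons:

- A cross-process service exposes different methods, each with its own number and types of parameters. Parcel provides read/write methods for all primitive types; non-primitive types must be broken down by the developer into primitives before being written to the Parcel (and rebuilt the same way when reading).

- A Parcel can be packaged as a single unit and sent between processes.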
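To make the two points above concrete, here is a toy Parcel in C++: typed writes append to one flat buffer, reads must happen in the same order, and a non-primitive type is flattened field by field. `ToyParcel` and `MemoryInfoLite` are hypothetical; the real `ActivityManager.MemoryInfo` has more fields.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <vector>

// Toy Parcel: a flat byte buffer with typed write/read calls. Reads must
// happen in exactly the order of the writes.
class ToyParcel {
public:
    void writeInt32(int32_t v) { append(&v, sizeof v); }
    void writeInt64(int64_t v) { append(&v, sizeof v); }
    int32_t readInt32() { int32_t v; take(&v, sizeof v); return v; }
    int64_t readInt64() { int64_t v; take(&v, sizeof v); return v; }
    size_t dataSize() const { return data_.size(); }

private:
    void append(const void* p, size_t n) {
        const auto* b = static_cast<const uint8_t*>(p);
        data_.insert(data_.end(), b, b + n);
    }
    void take(void* p, size_t n) {
        if (pos_ + n > data_.size()) throw std::out_of_range("read past end of parcel");
        std::memcpy(p, data_.data() + pos_, n);
        pos_ += n;
    }
    std::vector<uint8_t> data_;
    size_t pos_ = 0;
};

// Hypothetical subset of MemoryInfo: split into primitives on write,
// rebuilt field by field (same order) on read.
struct MemoryInfoLite {
    int64_t availMem = 0;
    int64_t totalMem = 0;
    bool lowMemory = false;

    void writeToParcel(ToyParcel& p) const {
        p.writeInt64(availMem);
        p.writeInt64(totalMem);
        p.writeInt32(lowMemory ? 1 : 0);  // this sketch encodes the bool as an int32
    }
    void readFromParcel(ToyParcel& p) {
        availMem = p.readInt64();
        totalMem = p.readInt64();
        lowMemory = p.readInt32() != 0;
    }
};
```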

frameworks/base/core/java/android/os/Binder.java

```java
final class BinderProxy implements IBinder {
    ...
    public boolean transact(int code, Parcel data, Parcel reply, int flags) throws RemoteException {
        Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");
        if (Binder.isTracingEnabled()) { Binder.getTransactionTracker().addTrace(); }
        return transactNative(code, data, reply, flags);
    }
    ...
}
```

transactNative is a native method, so the call goes through JNI.

frameworks/base/core/jni/android_util_Binder.cpp

```cpp
static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,
        jint code, jobject dataObj, jobject replyObj, jint flags) // throws RemoteException
{
    Parcel* data = parcelForJavaObject(env, dataObj);
    if (data == NULL) {
        return JNI_FALSE;
    }
    Parcel* reply = parcelForJavaObject(env, replyObj);
    if (reply == NULL && replyObj != NULL) {
        return JNI_FALSE;
    }
    ...
    IBinder* target = (IBinder*)
        env->GetLongField(obj, gBinderProxyOffsets.mObject);
    ...
    status_t err = target->transact(code, *data, reply, flags);
    ...
    return JNI_FALSE;
}
```

This converts the Java objects into their C++ counterparts and hands the call to BpBinder. After JNI, we are officially in the native layer.
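The `GetLongField(obj, gBinderProxyOffsets.mObject)` line works because, in the N code, the Java BinderProxy keeps the address of its native peer in a `long` field. A minimal sketch of that round trip, with hypothetical `NativeBinder` and `JavaBinderProxyFields` types standing in for BpBinder and the Java object:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for BpBinder: just a handle and a fake transact().
struct NativeBinder {
    int handle;
    int transact(int code) const { return handle * 1000 + code; }  // fake result
};

// Hypothetical stand-in for the Java object's field (a jlong in real JNI).
struct JavaBinderProxyFields {
    int64_t mObject;
};

// Store the native pointer as a 64-bit integer...
JavaBinderProxyFields wrap(NativeBinder* native) {
    return JavaBinderProxyFields{static_cast<int64_t>(reinterpret_cast<intptr_t>(native))};
}

// ...and cast it back when the Java side calls into native code.
NativeBinder* unwrap(const JavaBinderProxyFields& javaObj) {
    return reinterpret_cast<NativeBinder*>(static_cast<intptr_t>(javaObj.mObject));
}
```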

frameworks/native/libs/binder/BpBinder.cpp

```cpp
status_t BpBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    // Once a binder has died, it will never come back to life.
    if (mAlive) {
        status_t status = IPCThreadState::self()->transact(
            mHandle, code, data, reply, flags);
        if (status == DEAD_OBJECT) mAlive = 0;
        return status;
    }

    return DEAD_OBJECT;
}
```

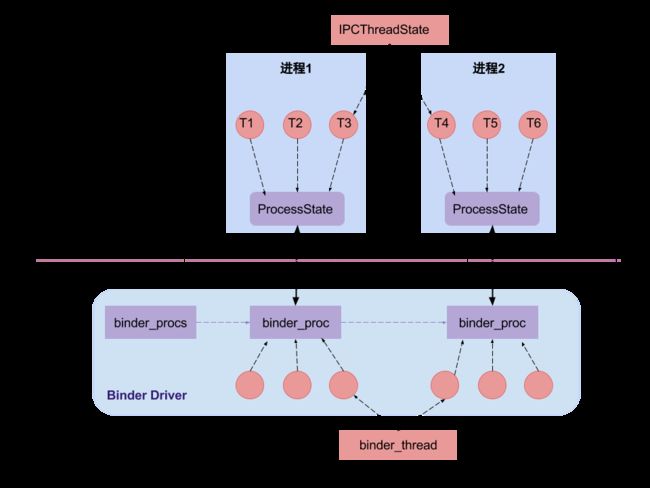

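BpBinder simply calls the corresponding method on IPCThreadState to send the request to the Binder driver. IPCThreadState is a per-thread object: each thread that talks to Binder gets its own instance through IPCThreadState::self().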
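BpBinder itself is thin: it keeps a handle, forwards to the per-thread IPCThreadState, and latches a dead state. A hypothetical C++ model of that logic (the `Transport` callback stands in for `IPCThreadState::self()->transact`, and `Status` for `status_t`):

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>

enum class Status { Ok, DeadObject };

// Hypothetical model of BpBinder: it stores only a handle and forwards each
// call to a transport (IPCThreadState in the real code).
class BpBinderModel {
public:
    using Transport = std::function<Status(int32_t handle, uint32_t code)>;

    BpBinderModel(int32_t handle, Transport transport)
        : mHandle(handle), mTransport(std::move(transport)) {}

    Status transact(uint32_t code) {
        // Once a binder has died, it never comes back: don't even try.
        if (!mAlive) return Status::DeadObject;
        Status status = mTransport(mHandle, code);
        if (status == Status::DeadObject) mAlive = false;
        return status;
    }

private:
    int32_t mHandle;
    Transport mTransport;
    bool mAlive = true;
};
```

Usage: after one `DeadObject` result, later calls return `DeadObject` immediately without touching the transport, even if the transport would now succeed.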

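Let's briefly sort out the relationship between processes and threads:

ProcessState represents the process that the current Binder request belongs to (there is one instance per process).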

frameworks/native/libs/binder/ProcessState.cpp

```cpp
#define DEFAULT_MAX_BINDER_THREADS 15

ProcessState::ProcessState(const char *driver)
    : mDriverName(String8(driver))
    , mDriverFD(open_driver(driver)) // open the /dev/binder driver
    , mVMStart(MAP_FAILED)
    , mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)
    , mThreadCountDecrement(PTHREAD_COND_INITIALIZER)
    , mExecutingThreadsCount(0)
    , mMaxThreads(DEFAULT_MAX_BINDER_THREADS) // maximum number of binder threads: 15
    , mStarvationStartTimeMs(0)
    , mManagesContexts(false)
    , mBinderContextCheckFunc(NULL)
    , mBinderContextUserData(NULL)
    , mThreadPoolStarted(false)
    , mThreadPoolSeq(1)
{
    if (mDriverFD >= 0) {
        // Map the binder area (read-only from user space) into this process
        mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);
        if (mVMStart == MAP_FAILED) {
            // *sigh*
            ALOGE("Using /dev/binder failed: unable to mmap transaction memory.\n");
            close(mDriverFD);
            mDriverFD = -1;
            mDriverName.clear();
        }
    }

    LOG_ALWAYS_FATAL_IF(mDriverFD < 0, "Binder driver could not be opened. Terminating.");
}
```

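The key rule here: any process that uses Binder must open and mmap the /dev/binder device. You obviously have to open the driver to talk to it; opening it also does some initialization, such as creating the process's binder_proc object, which I won't expand on here. You also need a buffer for the data: mmap sets up a region whose physical pages are mapped into both the process's user space and the kernel's address space. When another process sends data, the driver copies it once, directly into those pages, and the receiver reads it there without a second copy. That is why Binder needs only one memory copy.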
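A toy model of the copy counting above, assuming a simplified world where a `std::vector<char>` plays the role of the physical pages. It is not driver code; it only shows why mapping the receiver's buffer into both address spaces saves one copy compared with a socket.

```cpp
#include <cassert>
#include <cstring>
#include <string>
#include <vector>

// ReceiverMapping stands in for the receiver's mmap'd area: one block of
// memory reachable through a kernel-side view and a user-side view.
struct ReceiverMapping {
    std::vector<char> pages;                              // the shared physical pages
    char* kernelView() { return pages.data(); }           // where the driver writes
    const char* userView() const { return pages.data(); } // where the receiver reads
};

struct CopyCounter { int copies = 0; };

// Binder-style: one copy_from_user() straight into the receiver's mapping.
bool binderDeliver(const std::string& sent, ReceiverMapping& rx, CopyCounter& c) {
    if (sent.size() > rx.pages.size()) return false;  // does not fit in the mapping
    std::memcpy(rx.kernelView(), sent.data(), sent.size());
    ++c.copies;
    return true;  // the receiver reads via userView(): no second copy
}

// Socket-style: user -> kernel buffer, then kernel buffer -> receiver.
void socketDeliver(const std::string& sent, std::string& rxBuf, CopyCounter& c) {
    std::string kernelBuf(sent);  // copy_from_user
    ++c.copies;
    rxBuf = kernelBuf;            // copy_to_user
    ++c.copies;
}
```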

With the process covered, back to the thread: IPCThreadState. The actual communication details are handled by the thread.
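One way to picture the process/thread split is two singletons with different scopes. A hypothetical C++ model: the real IPCThreadState::self() uses a pthread TLS key, while this sketch swaps in C++ `thread_local`, and the fd value is a placeholder.

```cpp
#include <cassert>

// One ProcessState per process: owns the driver fd and the mmap'd area.
class ProcessStateModel {
public:
    static ProcessStateModel& self() {
        static ProcessStateModel instance;  // one per process
        return instance;
    }
    int driverFd = 3;  // placeholder for the fd from open("/dev/binder")

private:
    ProcessStateModel() = default;
};

// One IPCThreadState per thread: owns that thread's mIn/mOut (omitted here).
class IPCThreadStateModel {
public:
    static IPCThreadStateModel& self() {
        thread_local IPCThreadStateModel instance;  // one per thread
        return instance;
    }
    ProcessStateModel& mProcess = ProcessStateModel::self();  // shared by all threads

private:
    IPCThreadStateModel() = default;
};
```

Calling `IPCThreadStateModel::self()` from another `std::thread` would return a different object, while its `mProcess` would still refer to the same ProcessStateModel.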

Here is one more relationship diagram, and that's it!

Next, let's see what IPCThreadState::transact does.

```cpp
status_t IPCThreadState::transact(int32_t handle,
                                  uint32_t code, const Parcel& data,
                                  Parcel* reply, uint32_t flags)
{
    status_t err = data.errorCheck();
    ...
    if (err == NO_ERROR) {
        // Pack the request as BC_TRANSACTION into mOut
        err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);
    }
    ...
    if ((flags & TF_ONE_WAY) == 0) {
        // Synchronous call: wait for the reply
        ...
        if (reply) {
            err = waitForResponse(reply);
        } else {
            Parcel fakeReply;
            err = waitForResponse(&fakeReply);
        }
        ...
    } else {
        // One-way call: no reply to wait for
        err = waitForResponse(NULL, NULL);
    }

    return err;
}
```

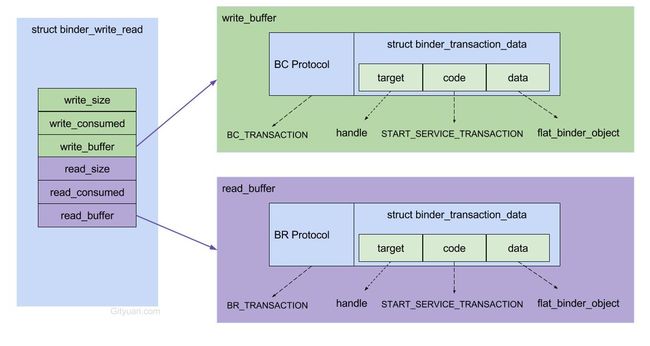

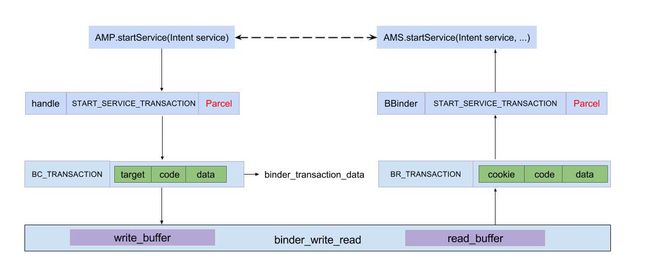

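There are two main steps here: writeTransactionData packs the data into a binder_transaction_data structure, and waitForResponse then waits for the result. For a one-way call (TF_ONE_WAY), it does not wait for a reply; it only waits for the driver to acknowledge the write (BR_TRANSACTION_COMPLETE).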
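The branch on `TF_ONE_WAY` can be traced without a driver. A hypothetical sketch that records which calls transact would make; `TF_ONE_WAY` uses the value from the binder UAPI header (0x01), everything else is illustrative.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

constexpr uint32_t TF_ONE_WAY = 0x01;

// Hypothetical trace of IPCThreadState::transact(), recorded as strings so
// the control flow can be checked without a driver.
std::vector<std::string> transactTrace(uint32_t flags, bool hasReplyParcel) {
    std::vector<std::string> steps{"writeTransactionData(BC_TRANSACTION)"};
    if ((flags & TF_ONE_WAY) == 0) {
        // Synchronous call: block until BR_REPLY arrives.
        steps.push_back(hasReplyParcel ? "waitForResponse(reply)"
                                       : "waitForResponse(&fakeReply)");
    } else {
        // One-way call: only wait for the driver's BR_TRANSACTION_COMPLETE ack.
        steps.push_back("waitForResponse(NULL, NULL)");
    }
    return steps;
}
```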

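Also, this is where the Binder protocol comes in: we are about to talk to the kernel's Binder driver, and on its territory we have to play by its rules. The Binder protocol has two parts, the control protocol and the driver protocol. The control protocol consists of the commands (such as BINDER_WRITE_READ) passed to the driver through the ioctl syscall; the driver protocol consists of the BC_* commands written to the driver and the BR_* returns read back from it, such as the BC_TRANSACTION used here.

For details of the protocol, see my earlier article: Android通信方式篇(五)-Binder机制(Kernel层) ("Android IPC, Part 5: the Binder Mechanism, Kernel Layer").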
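A sketch of how the driver protocol plays out for one synchronous call, assuming a toy driver with exactly two parties and string-valued commands (the real BC_*/BR_* values are ioctl-style codes).

```cpp
#include <cassert>
#include <deque>
#include <string>

// Toy driver (hypothetical, two parties only): BC_* commands written by one
// side turn into BR_* returns queued for each side to read.
struct ToyDriver {
    std::deque<std::string> clientReads, serverReads;

    void write(const std::string& who, const std::string& bc) {
        auto& self = (who == "client") ? clientReads : serverReads;
        auto& peer = (who == "client") ? serverReads : clientReads;
        self.push_back("BR_TRANSACTION_COMPLETE");                     // ack to the writer
        if (bc == "BC_TRANSACTION") peer.push_back("BR_TRANSACTION");  // request
        if (bc == "BC_REPLY") peer.push_back("BR_REPLY");              // result
    }

    static std::string next(std::deque<std::string>& q) {
        std::string front = q.front();
        q.pop_front();
        return front;
    }
};
```

Usage: the client writes BC_TRANSACTION and reads BR_TRANSACTION_COMPLETE; the server reads BR_TRANSACTION, writes BC_REPLY and reads its own BR_TRANSACTION_COMPLETE; finally the client reads BR_REPLY.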

Continuing:

```cpp
status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
    int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
{
    binder_transaction_data tr;

    tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */
    tr.target.handle = handle;
    tr.code = code;
    tr.flags = binderFlags;
    tr.cookie = 0;
    tr.sender_pid = 0;
    tr.sender_euid = 0;

    const status_t err = data.errorCheck();
    if (err == NO_ERROR) {
        // Only pointers to the Parcel's payload and object offsets are recorded
        tr.data_size = data.ipcDataSize();
        tr.data.ptr.buffer = data.ipcData();
        tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);
        tr.data.ptr.offsets = data.ipcObjects();
    } else if (statusBuffer) {
        tr.flags |= TF_STATUS_CODE;
        *statusBuffer = err;
        tr.data_size = sizeof(status_t);
        tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);
        tr.offsets_size = 0;
        tr.data.ptr.offsets = 0;
    } else {
        return (mLastError = err);
    }

    mOut.writeInt32(cmd);
    mOut.write(&tr, sizeof(tr));

    return NO_ERROR;
}
```

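writeTransactionData packs the data into a binder_transaction_data and appends the command and the structure to mOut via mOut.write. Note that mOut is an ordinary user-space Parcel of the calling thread, not the mmap'd buffer: nothing has reached the driver yet, and binder_transaction_data only records a pointer to the Parcel's payload (data.ipcData()). The payload is copied later by the driver, directly into the receiving process's mmap'd buffer.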

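Each IPCThreadState holds a pair of Parcel members, mIn and mOut, which act like two data pipes:

- mIn receives data coming from the Binder device; its initial capacity is 256 bytes;

- mOut stores data to be sent to the Binder device; its initial capacity is 256 bytes.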
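The layout of what lands in mOut can be sketched as below. `TransactionDataLite` is a hypothetical, trimmed-down binder_transaction_data and the command value is a placeholder; the point is that mOut receives the command plus a small header, while the Parcel payload is only referenced by pointer.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, trimmed layout: the real binder_transaction_data has more
// fields (target union, cookie, sender_pid/euid, offsets, ...).
struct TransactionDataLite {
    uint32_t handle;    // tr.target.handle: which remote binder
    uint32_t code;      // tr.code: e.g. GET_MEMORY_INFO_TRANSACTION
    uint32_t flags;     // tr.flags: e.g. TF_ONE_WAY
    uint64_t dataSize;  // tr.data_size: bytes of Parcel payload
    uint64_t dataPtr;   // tr.data.ptr.buffer: address of the payload in this process
};

// Placeholder command value (the real BC_TRANSACTION is an ioctl-style code).
constexpr int32_t kBcTransactionPlaceholder = 1;

// Mirrors mOut.writeInt32(cmd); mOut.write(&tr, sizeof(tr)); into a plain
// user-space byte buffer. Nothing reaches the driver here.
void writeTransactionDataLite(std::vector<uint8_t>& mOut, int32_t cmd,
                              const TransactionDataLite& tr) {
    const auto* c = reinterpret_cast<const uint8_t*>(&cmd);
    mOut.insert(mOut.end(), c, c + sizeof cmd);
    const auto* t = reinterpret_cast<const uint8_t*>(&tr);
    mOut.insert(mOut.end(), t, t + sizeof tr);
}
```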

Finally, waitForResponse calls talkWithDriver:

```cpp
status_t IPCThreadState::talkWithDriver(bool doReceive)
{
    ...
    ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr);
    ...
}
```

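mProcess->mDriverFD is the fd obtained when the Binder device was opened; BINDER_WRITE_READ is the control-protocol command (it tells the Binder driver what you want it to do); and bwr holds data of type binder_write_read, whose detailed layout is shown in the figure below:

The binder_ioctl function handles the ioctl system call on the driver side.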
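For reference, binder_write_read has six fields, and talkWithDriver points its write half at mOut and its read half at mIn. A simplified sketch: the struct mirrors the UAPI field order with the binder types written as `uint64_t` (as in the 64-bit binder interface), and the hypothetical `prepareBwr` ignores the real function's check for unread data still sitting in mIn.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Same field order as struct binder_write_read.
struct binder_write_read_model {
    uint64_t write_size;      // bytes to write
    uint64_t write_consumed;  // bytes consumed by driver
    uint64_t write_buffer;
    uint64_t read_size;       // bytes to read
    uint64_t read_consumed;   // bytes consumed by driver
    uint64_t read_buffer;
};

// Roughly how talkWithDriver() fills bwr before ioctl(fd, BINDER_WRITE_READ, &bwr).
binder_write_read_model prepareBwr(const std::vector<uint8_t>& mOut,
                                   std::vector<uint8_t>& mIn, bool doReceive) {
    binder_write_read_model bwr{};  // consumed counters start at 0
    bwr.write_size = mOut.size();
    bwr.write_buffer = reinterpret_cast<uintptr_t>(mOut.data());
    if (doReceive) {
        bwr.read_size = mIn.size();
        bwr.read_buffer = reinterpret_cast<uintptr_t>(mIn.data());
    }
    return bwr;
}
```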

With that, we officially enter the kernel layer.

kernel/msm-3.18/drivers/staging/android/binder.c

```c
static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
	...
	switch (cmd) {
	case BINDER_WRITE_READ:
		ret = binder_ioctl_write_read(filp, cmd, arg, thread);
		if (ret)
			goto err;
		break;
	...
	}
	...
}
```

The BINDER_WRITE_READ operation is handled in binder_ioctl_write_read:

```c
static int binder_ioctl_write_read(struct file *filp,
				unsigned int cmd, unsigned long arg,
				struct binder_thread *thread)
{
	int ret = 0;
	struct binder_proc *proc = filp->private_data;
	unsigned int size = _IOC_SIZE(cmd);
	void __user *ubuf = (void __user *)arg;
	struct binder_write_read bwr;
	...
	if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
		ret = -EFAULT;
		goto out;
	}
	...
	if (bwr.write_size > 0) {
		ret = binder_thread_write(proc, thread,
					  bwr.write_buffer,
					  bwr.write_size,
					  &bwr.write_consumed);
		...
	}
	if (bwr.read_size > 0) {
		ret = binder_thread_read(proc, thread, bwr.read_buffer,
					 bwr.read_size,
					 &bwr.read_consumed,
					 filp->f_flags & O_NONBLOCK);
		...
	}
	...
	return ret;
}
```

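If bwr.write_size > 0, binder_thread_write is called; if bwr.read_size > 0, binder_thread_read is called. Both can happen in the same ioctl call: the write half runs first, then the read half.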
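The write-then-read ordering inside a single BINDER_WRITE_READ call can be modeled like this; `ToyBwr` and `toyWriteRead` are hypothetical and use `int` values in place of real commands and returns.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

struct ToyBwr {
    std::vector<int> writeBuf;   // commands from user space
    size_t writeConsumed = 0;
    size_t readSize = 0;         // room the caller offers for returns
    std::vector<int> readBuf;    // filled by the "driver"
};

// Each half runs only if its size is non-zero; write always comes first.
std::vector<std::string> toyWriteRead(ToyBwr& bwr, std::vector<int>& pendingReturns) {
    std::vector<std::string> steps;
    if (!bwr.writeBuf.empty()) {   // bwr.write_size > 0
        steps.push_back("binder_thread_write");
        bwr.writeConsumed = bwr.writeBuf.size();
    }
    if (bwr.readSize > 0) {        // bwr.read_size > 0
        steps.push_back("binder_thread_read");
        while (!pendingReturns.empty() && bwr.readBuf.size() < bwr.readSize) {
            bwr.readBuf.push_back(pendingReturns.front());
            pendingReturns.erase(pendingReturns.begin());
        }
    }
    return steps;
}
```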

Here we follow binder_thread_write. The driver-protocol command we packed earlier is BC_TRANSACTION, so the relevant part is:

```c
static int binder_thread_write(struct binder_proc *proc,
			struct binder_thread *thread,
			binder_uintptr_t binder_buffer, size_t size,
			binder_size_t *consumed)
{
	...
		case BC_TRANSACTION:
		case BC_REPLY: {
			struct binder_transaction_data tr;

			/* copy the binder_transaction_data header written by writeTransactionData */
			if (copy_from_user(&tr, ptr, sizeof(tr)))
				return -EFAULT;
			ptr += sizeof(tr);
			binder_transaction(proc, thread, &tr,
					   cmd == BC_REPLY, 0);
			break;
		}
	...
}
```
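The command loop around this `case` walks the write buffer record by record. A hypothetical sketch of that parsing, with made-up command values and payload sizes, and a plain `break` where the driver would return an error on a truncated record:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command values: the write buffer is a sequence of
// [uint32 cmd][payload] records.
constexpr uint32_t kToyTransaction = 1;  // stands in for BC_TRANSACTION (8-byte payload here)
constexpr uint32_t kToyIncRefs = 2;      // stands in for a command with a 4-byte payload

size_t payloadSize(uint32_t cmd) {
    return cmd == kToyTransaction ? 8 : 4;
}

// Returns the commands seen and advances consumed past each complete record.
std::vector<uint32_t> parseWriteBuffer(const std::vector<uint8_t>& buf, size_t& consumed) {
    std::vector<uint32_t> seen;
    size_t ptr = consumed;
    while (ptr + sizeof(uint32_t) <= buf.size()) {
        uint32_t cmd;
        std::memcpy(&cmd, buf.data() + ptr, sizeof cmd);  // like get_user(cmd, ptr)
        size_t need = payloadSize(cmd);
        if (ptr + sizeof cmd + need > buf.size()) break;   // truncated record
        ptr += sizeof cmd + need;                          // skip cmd + payload
        seen.push_back(cmd);
        consumed = ptr;                                    // like *consumed = ptr - buffer
    }
    return seen;
}
```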

It then calls binder_transaction, which handles a single Binder transaction (simplified excerpt):

```c
static void binder_transaction(struct binder_proc *proc,
			       struct binder_thread *thread,
			       struct binder_transaction_data *tr, int reply)
{
	// Determined through various checks:
	struct binder_thread *target_thread; // target thread
	struct binder_proc *target_proc;     // target process
	struct binder_node *target_node;     // target binder node
	struct list_head *target_list;       // target todo list
	wait_queue_head_t *target_wait;      // target wait queue
	...
	// Allocate the two structures
	struct binder_transaction *t = kzalloc(sizeof(*t), GFP_KERNEL);
	struct binder_work *tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

	// Allocate a buffer from target_proc
	t->buffer = binder_alloc_buf(target_proc, tr->data_size, ...);
	...
	// Translate each binder object embedded in the data for the target process
	for (; offp < off_end; offp++) {
		switch (fp->type) {
		case BINDER_TYPE_BINDER: ...
		case BINDER_TYPE_WEAK_BINDER: ...
		case BINDER_TYPE_HANDLE: ...
		case BINDER_TYPE_WEAK_HANDLE: ...
		case BINDER_TYPE_FD: ...
		}
	}

	// Add a BINDER_WORK_TRANSACTION item to the target's target_list
	t->work.type = BINDER_WORK_TRANSACTION;
	list_add_tail(&t->work.entry, target_list);

	// Add a BINDER_WORK_TRANSACTION_COMPLETE item to the current thread's todo list
	tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;
	list_add_tail(&tcomplete->entry, &thread->todo);

	if (target_wait)
		wake_up_interruptible(target_wait);
	return;
}
```

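This copies the sender's data into a buffer allocated in the receiving process (the copy_from_user into t->buffer is among the elided steps, and it is the single copy mentioned at the start), queues the work item, and wakes the server side so it can read it. That completes exactly half of the round trip. I don't plan to write up the rest, since the return path follows the same pattern.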
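What binder_transaction leaves behind for a synchronous call can be summarized with a toy model. The types are hypothetical, and a `std::vector<char>` stands in for the buffer allocated from the receiver's mapping.

```cpp
#include <cassert>
#include <deque>
#include <string>
#include <vector>

struct ToyThread {
    std::deque<std::string> todo;  // work items this thread will handle
    bool woken = false;
};

struct ToyProc {
    std::vector<char> mmapBuffer;  // receiver's mapped area
    ToyThread thread;
};

void toyBinderTransaction(const std::string& payload, ToyThread& sender, ToyProc& target) {
    // binder_alloc_buf + copy_from_user: the one and only copy of the payload.
    target.mmapBuffer.assign(payload.begin(), payload.end());
    // t->work.type = BINDER_WORK_TRANSACTION; list_add_tail(..., target_list)
    target.thread.todo.push_back("BINDER_WORK_TRANSACTION");
    // tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE; added to the sender's todo
    sender.todo.push_back("BINDER_WORK_TRANSACTION_COMPLETE");
    // wake_up_interruptible(target_wait)
    target.thread.woken = true;
}
```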

Here are a few diagrams:

For more details, see the Binder series on gityuan.com. All right, it's getting late, and my health comes first!!!