Hive Environment Setup, Hybrid Edition

Pseudo-distributed Hive on Windows

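1. Hadoop environment configuration:

Environment variables: JAVA_HOME=C:\Program Files\Java\jdk1.8.0_201

HADOOP_HOME=D:\hadoop-2.9.2

Add %HADOOP_HOME%\bin and %HADOOP_HOME%\sbin to PATH

Edit the Hadoop configuration files under hadoop\etc\hadoop in the installation directory: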

hdfs-site.xml

dfs.replication

1

dfs.namenode.name.dir

/D:/hadoop-2.9.2/data/namenode

dfs.datanode.data.dir

/D:/hadoop-2.9.2/data/datanode
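
Written out as XML, the hdfs-site.xml settings listed above would look roughly like this. It is a minimal sketch: the values are the ones from these notes, and the configuration/property wrapper is the usual Hadoop config file layout.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Single machine, so keep only one copy of each block -->
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <!-- Local directory where the NameNode stores its metadata -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/D:/hadoop-2.9.2/data/namenode</value>
  </property>
  <!-- Local directory where the DataNode stores block data -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/D:/hadoop-2.9.2/data/datanode</value>
  </property>
</configuration>
```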

core-site.xml

hadoop.tmp.dir

/D:/hadoop-2.9.2/workplace/tmp

dfs.name.dir

/D:/hadoop-2.9.2/workplace/name

fs.default.name

hdfs://127.0.0.1:9000
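
As a sketch, the core-site.xml entries above would be written like this. The property names are kept exactly as in these notes. Two of them are older names that Hadoop 2.x still accepts but reports as deprecated, as the comments point out.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Base directory for Hadoop's temporary files -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/D:/hadoop-2.9.2/workplace/tmp</value>
  </property>
  <!-- Deprecated alias of dfs.namenode.name.dir, kept as in the notes -->
  <property>
    <name>dfs.name.dir</name>
    <value>/D:/hadoop-2.9.2/workplace/name</value>
  </property>
  <!-- Deprecated alias of fs.defaultFS: the address of the default file system -->
  <property>
    <name>fs.default.name</name>
    <value>hdfs://127.0.0.1:9000</value>
  </property>
</configuration>
```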

mapred-site.xml

mapreduce.framework.name

yarn

mapred.job.tracker

127.0.0.1:9001
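
In XML form, the mapred-site.xml settings above would look roughly like the sketch below. The mapred.job.tracker entry is a setting from the older MapReduce v1 runtime; it is kept only because the notes list it, and it is probably not consulted when the framework is yarn.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Run MapReduce jobs on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- Legacy MapReduce v1 JobTracker address, kept as in the notes -->
  <property>
    <name>mapred.job.tracker</name>
    <value>127.0.0.1:9001</value>
  </property>
</configuration>
```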

yarn-site.xml

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.nodemanager.aux-services.mapreduce.shuffle.class

org.apache.hadoop.mapred.ShuffleHandler
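
The two yarn-site.xml settings above, written out as a sketch in the standard property layout:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Auxiliary service the NodeManager runs so MapReduce can shuffle map output -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <!-- Class implementing the service named above -->
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
</configuration>
```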

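Add the files missing from Hadoop's bin directory; some releases ship without them. Following someone else's notes I first wrote that these were .cmd files, but that was wrong: the missing file appears to be winutils.

Run start-all.cmd to start the HDFS and YARN services, and check the running processes with jps. Run stop-all.cmd to stop them.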

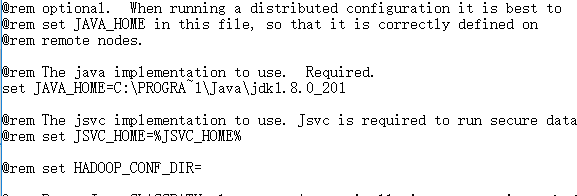

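Fixing the error: Error: JAVA_HOME is incorrectly set.

In hadoop-env.cmd, change the line set JAVA_HOME=%JAVA_HOME% as follows, using PROGRA~1 in place of Program Files so that the space in the path no longer triggers the error:

set JAVA_HOME=C:\PROGRA~1\Java\jdk1.8.0_201

http://localhost:50070/ NameNode web UI (node management); on Hadoop 3.x the port is 9870

http://localhost:8088/ YARN web UI (resource management)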

Create the directories on HDFS

hadoop fs -mkdir /tmp

hadoop fs -chmod g+w /tmp

hadoop fs -mkdir /hive

hadoop fs -mkdir /hive/warehouse

hadoop fs -chmod g+w /hive/warehouse

2. Hive environment configuration

2.1 Hive environment variables

HIVE_HOME=E:\apache-hive-3.1.2-bin

Add to PATH: %HIVE_HOME%\bin

2.2 Rename the following files in the Hive configuration directory hive/conf

Rename hive-default.xml.template to hive-site.xml

Rename hive-env.sh.template to hive-env.sh

Rename hive-exec-log4j.properties.template to hive-exec-log4j.properties

Rename hive-log4j.properties.template to hive-log4j.properties

2.3 In the Hive configuration directory hive/conf, edit hive-env.sh to contain the following

HADOOP_HOME=D:\hadoop-2.9.2

HIVE_CONF_DIR=E:\apache-hive-3.1.2-bin\conf

HIVE_AUX_JARS_PATH=E:\apache-hive-3.1.2-bin\lib

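2.4 In the Hive configuration directory hive/conf, edit hive-site.xml: set each of the parameters below to the value shown. The file paths are the locations of the directories you created.

Create the corresponding folders under the Hive installation directory: scratch_dir, resources_dir, querylog_dir, operation_dir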

hive.metastore.warehouse.dir

E:/apache-hive-3.1.2-bin/warehouse

hive.exec.scratchdir

E:/apache-hive-3.1.2-bin/hive

hive.exec.local.scratchdir

E:/apache-hive-3.1.2-bin/scratch_dir

hive.downloaded.resources.dir

E:/apache-hive-3.1.2-bin/resources_dir

hive.querylog.location

E:/apache-hive-3.1.2-bin/querylog_dir

Location of Hive run time structured log file

hive.server2.logging.operation.log.location

E:/apache-hive-3.1.2-bin/operation_dir

javax.jdo.option.ConnectionURL

# Remember to create the hive database in MySQL (or whichever database you use); its character set must be latin1

jdbc:mysql://127.0.0.1:3306/hive?createDatabaseIfNotExist=true

javax.jdo.option.ConnectionDriverName

com.mysql.jdbc.Driver

# Remember to create the hive user in MySQL (or whichever database you use) and grant it all privileges

javax.jdo.option.ConnectionUserName

hive

javax.jdo.option.ConnectionPassword

hive

hive.metastore.schema.verification

false
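
Because hive-site.xml starts out as a copy of hive-default.xml.template, these properties already exist in the file and only their values change. As a sketch, the edited entries would read as follows, with the values exactly as listed above and every other property in the file omitted. If more parameters are appended to the JDBC URL, each & has to be written as &amp;amp; inside the XML value.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>E:/apache-hive-3.1.2-bin/warehouse</value>
  </property>
  <property>
    <name>hive.exec.scratchdir</name>
    <value>E:/apache-hive-3.1.2-bin/hive</value>
  </property>
  <property>
    <name>hive.exec.local.scratchdir</name>
    <value>E:/apache-hive-3.1.2-bin/scratch_dir</value>
  </property>
  <property>
    <name>hive.downloaded.resources.dir</name>
    <value>E:/apache-hive-3.1.2-bin/resources_dir</value>
  </property>
  <property>
    <name>hive.querylog.location</name>
    <value>E:/apache-hive-3.1.2-bin/querylog_dir</value>
  </property>
  <property>
    <name>hive.server2.logging.operation.log.location</name>
    <value>E:/apache-hive-3.1.2-bin/operation_dir</value>
  </property>
  <!-- The hive database must already exist in MySQL with the latin1 character set -->
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://127.0.0.1:3306/hive?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <!-- The hive user must already exist in MySQL with all privileges granted -->
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive</value>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
</configuration>
```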

Create the file hivemetastore-site.xml in the Hive configuration directory hive/conf

hive.metastore.warehouse.dir

E:/apache-hive-3.1.2-bin/warehouse

hive.metastore.local

false

javax.jdo.option.ConnectionURL

jdbc:mysql://127.0.0.1:3306/hive?serverTimezone=UTC

javax.jdo.option.ConnectionDriverName

com.mysql.jdbc.Driver

javax.jdo.option.ConnectionUserName

hive

javax.jdo.option.ConnectionPassword

hive
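
A sketch of the complete hivemetastore-site.xml built from the values above. The file does not exist beforehand, so the whole document is written from scratch.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>E:/apache-hive-3.1.2-bin/warehouse</value>
  </property>
  <property>
    <name>hive.metastore.local</name>
    <value>false</value>
  </property>
  <!-- Same MySQL database, user and password as in hive-site.xml -->
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://127.0.0.1:3306/hive?serverTimezone=UTC</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive</value>
  </property>
</configuration>
```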

Create the file metastore-site.xml in the Hive configuration directory hive/conf

hive.metastore.warehouse.dir

E:/apache-hive-3.1.2-bin/warehouse

hive.metastore.local

true
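
A sketch of the complete metastore-site.xml with the two values above:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>E:/apache-hive-3.1.2-bin/warehouse</value>
  </property>
  <property>
    <name>hive.metastore.local</name>
    <value>true</value>
  </property>
</configuration>
```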

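Copy hive-log4j2.properties.template to hive-log4j2.properties and replace everything from status = INFO onward with the content below. It looks essentially the same as the template. I have not worked out why this step is needed or what it does and will look into it when I get the chance; for now the goal is simply to get the environment running.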

status = INFO

name = HiveLog4j2

packages = org.apache.hadoop.hive.ql.log

# list of properties

property.hive.log.level = INFO

property.hive.root.logger = DRFA

property.hive.log.dir = hive_log

property.hive.log.file = hive.log

property.hive.perflogger.log.level = INFO

# list of all appenders

appenders = console, DRFA

# console appender

appender.console.type = Console

appender.console.name = console

appender.console.target = SYSTEM_ERR

appender.console.layout.type = PatternLayout

appender.console.layout.pattern = %d{ISO8601} %5p [%t] %c{2}: %m%n

# daily rolling file appender

appender.DRFA.type = RollingRandomAccessFile

appender.DRFA.name = DRFA

appender.DRFA.fileName = ${hive.log.dir}/${hive.log.file}

# Use %pid in the filePattern to append <process-id>@<host-name> to the filename if you want separate log files for different CLI session

appender.DRFA.filePattern = ${hive.log.dir}/${hive.log.file}.%d{yyyy-MM-dd}

appender.DRFA.layout.type = PatternLayout

appender.DRFA.layout.pattern = %d{ISO8601} %5p [%t] %c{2}: %m%n

appender.DRFA.policies.type = Policies

appender.DRFA.policies.time.type = TimeBasedTriggeringPolicy

appender.DRFA.policies.time.interval = 1

appender.DRFA.policies.time.modulate = true

appender.DRFA.strategy.type = DefaultRolloverStrategy

appender.DRFA.strategy.max = 30

# list of all loggers

loggers = NIOServerCnxn, ClientCnxnSocketNIO, DataNucleus, Datastore, JPOX, ApacheHttp, PerfLogger

logger.NIOServerCnxn.name = org.apache.zookeeper.server.NIOServerCnxn

logger.NIOServerCnxn.level = WARN

logger.ClientCnxnSocketNIO.name = org.apache.zookeeper.ClientCnxnSocketNIO

logger.ClientCnxnSocketNIO.level = WARN

logger.DataNucleus.name = DataNucleus

logger.DataNucleus.level = ERROR

logger.Datastore.name = Datastore

logger.Datastore.level = ERROR

logger.JPOX.name = JPOX

logger.JPOX.level = ERROR

logger.ApacheHttp.name=org.apache.http

logger.ApacheHttp.level = INFO

logger.PerfLogger.name = org.apache.hadoop.hive.ql.log.PerfLogger

logger.PerfLogger.level = ${hive.perflogger.log.level}

# root logger

rootLogger.level = ${hive.log.level}

rootLogger.appenderRefs = root

rootLogger.appenderRef.root.ref = ${hive.root.logger}

2.5 Add the missing .cmd files to Hive's bin directory; some releases ship without them (this step follows other people's notes)

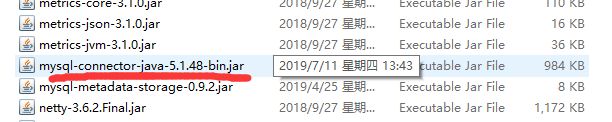

2.6 Add the MySQL JDBC driver JAR to Hive's lib directory; Hive needs it to access the database

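2.7 Initialize the metastore database: hive --service schematool -dbType mysql -initSchema

I spent a long time debugging this and never managed to initialize the metastore database with this command. In the end, following an article, I located the metastore initialization SQL file under apache-hive-2.3.6-bin\scripts\metastore\upgrade\mysql and ran it directly in the database.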

Start the metastore: hive --service metastore

Start HiveServer2: hive --service hiveserver2

Start the console with hive --service cli and Hive is ready to use.

The painful part is that starting HiveServer2 throws all sorts of errors, and newer versions seem to have more of them. Good luck to everyone.