【机器学习】Logistic Regression逻辑回归原理与java实现

【机器学习】Logistic Regression逻辑回归原理与java实现

- 1、基于概率的机器学习算法

- 2、逻辑回归算法原理

- 2.1、分离超平面

- 2.2、阈值函数

- 2.3、样本概率

- 2.4、损失函数

- 3、基于梯度下降法的模型训练

- 4、java实现

1、基于概率的机器学习算法

机器学习算法可以分为基于概率、基于距离、基于树和基于神经网络四类。基于概率的机器学习算法本质上是计算每个样本属于对应类别的概率,然后利用极大似然估计法对模型进行训练。基于概率的机器学习算法的损失函数为负的log似然函数。

基于概率的机器学习算法包括朴素贝叶斯算法、Logistic Regression算法、Softmax Regression算法和Factorization Machine算法等。

2、逻辑回归算法原理

2.1、分离超平面

Logistic Regression算法是二分类线性分类算法,分离超平面采用线性函数:

W x + b = 0 Wx + b = 0 Wx+b=0

x x x是样本特征矩阵,特征数为 m m m,其中 W ( 1 ∗ m ) W(1*m) W(1∗m)是权重矩阵。通过分类超平面可以将数据分成正负两个类别,类别为正的样本标签标记为1,类别为负的样本标签标记为0。

2.2、阈值函数

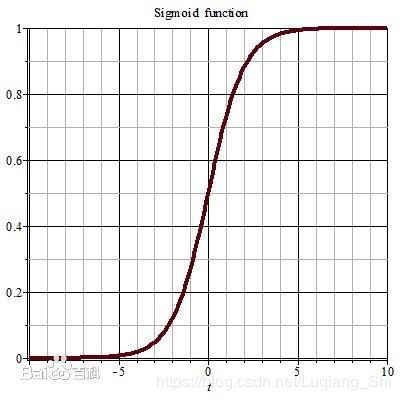

通过阈值函数可以将样本到分离超平面的距离映射到不同的类别,Logistic Regression算法中阈值函数采用Sigmoid函数:

f ( x ) = 1 1 + e − x f(x) = \frac{1}{{1 + {e^{ - x}}}} f(x)=1+e−x1

sigmoid函数的图像如下:

对于样本 x x x,其到分离超平面的几何距离 D D D为:

D = W x + b D = Wx + b D=Wx+b

2.3、样本概率

假设样本 x x x为正类别,则其概率为:

p ( y = 1 ∣ x , W , b ) = σ ( W x + b ) = 1 1 + e − ( W x + b ) p\left( {y = 1\left| {x,W,b} \right.} \right) = \sigma \left( {Wx + b} \right) = \frac{1}{{1 + {e^{ - \left( {Wx + b} \right)}}}} p(y=1∣x,W,b)=σ(Wx+b)=1+e−(Wx+b)1

负类别样本的概率:

p ( y = 0 ∣ x , W , b ) = 1 − p ( y = 1 ∣ x , W , b ) = e − ( W x + b ) 1 + e − ( W x + b ) p\left( {y = 0\left| {x,W,b} \right.} \right) = 1 - p\left( {y = 1\left| {x,W,b} \right.} \right) = \frac{{{e^{ - \left( {Wx + b} \right)}}}}{{1 + {e^{ - \left( {Wx + b} \right)}}}} p(y=0∣x,W,b)=1−p(y=1∣x,W,b)=1+e−(Wx+b)e−(Wx+b)

将两种类别合并,属于类别 y y y的概率为:

p ( y ∣ x , W , b ) = σ ( W x + b ) y ( 1 − σ ( W x + b ) ) 1 − y p\left( {y\left| {x,W,b} \right.} \right) = \sigma {\left( {Wx + b} \right)^y}{\left( {1 - \sigma \left( {Wx + b} \right)} \right)^{1 - y}} p(y∣x,W,b)=σ(Wx+b)y(1−σ(Wx+b))1−y

2.4、损失函数

设训练数据集有 n n n个训练样本 { ( x 1 , y 1 ) , ( x 2 , y 2 ) , ⋯ , ( x n , y n ) } \left\{ {\left( {{x_1},{y_1}} \right),\left( {{x_2},{y_2}} \right), \cdots ,\left( {{x_n},{y_n}} \right)} \right\} {(x1,y1),(x2,y2),⋯,(xn,yn)},其似然函数为:

L W , b = ∏ i = 1 n [ σ ( W x i + b ) y i ( 1 − σ ( W x i + b ) ) 1 − y i ] {L_{W,b}} = \prod\limits_{i = 1}^n {\left[ {\sigma {{\left( {W{x_i} + b} \right)}^{{y_i}}}{{\left( {1 - \sigma \left( {W{x_i} + b} \right)} \right)}^{1 - {y_i}}}} \right]} LW,b=i=1∏n[σ(Wxi+b)yi(1−σ(Wxi+b))1−yi]

Logistic Regression算法的损失函数为负的log似然函数:

l W , b = − 1 n ∑ i = 1 n [ y i log ( σ ( W x i + b ) ) + ( 1 − y i ) log ( 1 − σ ( W x i + b ) ) ] {l_{W,b}} = - \frac{1}{n}\sum\limits_{i = 1}^n {\left[ {{y_i}\log \left( {\sigma \left( {W{x_i} + b} \right)} \right) + \left( {1 - {y_i}} \right)\log \left( {1 - \sigma \left( {W{x_i} + b} \right)} \right)} \right]} lW,b=−n1i=1∑n[yilog(σ(Wxi+b))+(1−yi)log(1−σ(Wxi+b))]

模型训练是为了求取最优的权值矩阵 W W W和偏置 b b b,将模型训练问题转化为最小化损失函数:

min W , b l W , b \mathop {\min }\limits_{W,b} {l_{W,b}} W,bminlW,b

3、基于梯度下降法的模型训练

本博文中,最小化损失函数的求解采用梯度下降法。

第一步:初始化权重矩阵 W = W 0 W=W_0 W=W0和偏置 b = b 0 b=b_0 b=b0。

第二步:重复如下过程:

计算参数的梯度下降方向:

∂ W i = − ∂ l W , b ∂ W ∣ W i \partial {W_i} = - \frac{{\partial {l_{W,b}}}}{{\partial W}}\left| {_{{W_i}}} \right. ∂Wi=−∂W∂lW,b∣Wi

∂ b i = − ∂ l W , b ∂ b ∣ b i \partial {b_i} = - \frac{{\partial {l_{W,b}}}}{{\partial b}}\left| {_{{b_i}}} \right. ∂bi=−∂b∂lW,b∣bi

选择步长 α \alpha α

更新参数:

W i + 1 = W i + α ⋅ ∂ W i {W_{i + 1}} = {W_i} + \alpha \cdot \partial {W_i} Wi+1=Wi+α⋅∂Wi

b i + 1 = b i + α ⋅ ∂ b i {b_{i + 1}} = {b_i} + \alpha \cdot \partial {b_i} bi+1=bi+α⋅∂bi

第三步:判断是否达到终止条件。

假设 x i j x_i^j xij是样本 x i x_i xi的第 j j j个特征分量, w j w_j wj为权重矩阵 W W W的第 j j j个分量,取 w 0 = b {w_0} = b w0=b,则权重矩阵中第 j j j个分量的梯度方向为:

∂ w j = − 1 n ∑ i = 1 n ( y i − σ ( W x i + b ) ) x i j \partial {w_j} = - \frac{1}{n}\sum\limits_{i = 1}^n {\left( {{y_i} - \sigma \left( {W{x_i} + b} \right)} \right)} x_i^j ∂wj=−n1i=1∑n(yi−σ(Wxi+b))xij

4、java实现

完整代码和数据样本地址:https://github.com/shiluqiang/Logistic_Regression_java

首先:导入样本特征和标签。

import java.io.*;

public class LoadData {

//导入样本特征

public static double[][] Loadfeature(String filename) throws IOException{

File f = new File(filename);

FileInputStream fip = new FileInputStream(f);

// 构建FileInputStream对象

InputStreamReader reader = new InputStreamReader(fip,"UTF-8");

// 构建InputStreamReader对象

StringBuffer sb = new StringBuffer();

while(reader.ready()) {

sb.append((char) reader.read());

}

reader.close();

fip.close();

//将读入的数据流转换为字符串

String sb1 = sb.toString();

//按行将字符串分割,计算二维数组行数

String [] a = sb1.split("\n");

int n = a.length;

System.out.println("二维数组行数为:" + n);

//计算二维数组列数

String [] a0 = a[0].split("\t");

int m = a0.length;

System.out.println("二维数组列数为:" + m);

double [][] feature = new double[n][m];

for (int i = 0; i < n; i ++) {

String [] tmp = a[i].split("\t");

for(int j = 0; j < m; j ++) {

if (j == m-1) {

feature[i][j] = (double) 1;

}

else {

feature[i][j] = Double.parseDouble(tmp[j]);

}

}

}

return feature;

}

//导入样本标签

public static double[] LoadLabel(String filename) throws IOException{

File f = new File(filename);

FileInputStream fip = new FileInputStream(f);

// 构建FileInputStream对象

InputStreamReader reader = new InputStreamReader(fip,"UTF-8");

// 构建InputStreamReader对象,编码与写入相同

StringBuffer sb = new StringBuffer();

while(reader.ready()) {

sb.append((char) reader.read());

}

reader.close();

fip.close();

//将读入的数据流转换为字符串

String sb1 = sb.toString();

//按行将字符串分割,计算二维数组行数

String [] a = sb1.split("\n");

int n = a.length;

System.out.println("二维数组行数为:" + n);

//计算二维数组列数

String [] a0 = a[0].split("\t");

int m = a0.length;

System.out.println("二维数组列数为:" + m);

double [] Label = new double[n];

for (int i = 0; i < n; i ++) {

String [] tmp = a[i].split("\t");

Label[i] = Double.parseDouble(tmp[m-1]);

}

return Label;

}

}

然后,利用梯度下降算法优化Logistic Regression模型。

public class LRtrainGradientDescent {

int paraNum; //权重参数的个数

double rate; //学习率

int samNum; //样本个数

double [][] feature; //样本特征矩阵

double [] Label;//样本标签

int maxCycle; //最大迭代次数

public LRtrainGradientDescent(double [][] feature, double [] Label, int paraNum,double rate, int samNum,int maxCycle) {

this.feature = feature;

this.Label = Label;

this.maxCycle = maxCycle;

this.paraNum = paraNum;

this.rate = rate;

this.samNum = samNum;

}

// 权值矩阵初始化

public double [] ParaInitialize(int paraNum) {

double [] W = new double[paraNum];

for (int i = 0; i < paraNum; i ++) {

W[i] = 1.0;

}

return W;

}

//计算每次迭代后的预测误差

public double [] PreVal(int samNum,int paraNum, double [][] feature,double [] W) {

double [] Preval = new double[samNum];

for (int i = 0; i< samNum; i ++) {

double tmp = 0;

for(int j = 0; j < paraNum; j ++) {

tmp += feature[i][j] * W[j];

}

Preval[i] = Sigmoid.sigmoid(tmp);

}

return Preval;

}

//计算误差率

public double error_rate(int samNum, double [] Label, double [] Preval) {

double sum_err = 0.0;

for(int i = 0; i < samNum; i ++) {

sum_err += Math.pow(Label[i] - Preval[i], 2);

}

return sum_err;

}

//LR模型训练

public double[] Updata(double [][] feature, double[] Label, int maxCycle, double rate) {

// 先计算样本个数和特征个数

int samNum = feature.length;

int paraNum = feature[0].length;

//初始化权重矩阵

double [] W = ParaInitialize(paraNum);

// 循环迭代优化权重矩阵

for (int i = 0; i < maxCycle; i ++) {

// 每次迭代后,样本预测值

double [] Preval = PreVal(samNum,paraNum,feature,W);

double sum_err = error_rate(samNum,Label,Preval);

if (i % 10 == 0) {

System.out.println("第" + i + "次迭代的预测误差为:" + sum_err);

}

//预测值与标签的误差

double [] err = new double[samNum];

for(int j = 0; j < samNum; j ++) {

err[j] = Label[j] - Preval[j];

}

// 计算权重矩阵的梯度方向

double [] Delt_W = new double[paraNum];

for (int n = 0 ; n < paraNum; n ++) {

double tmp = 0;

for(int m = 0; m < samNum; m ++) {

tmp += feature[m][n] * err[m];

}

Delt_W[n] = tmp / samNum;

}

for(int m = 0; m < paraNum; m ++) {

W[m] = W[m] + rate * Delt_W[m];

}

}

return W;

}

}

Sigmoid函数

public class Sigmoid {

public static double sigmoid(double x) {

double i = 1.0;

double y = i / (i + Math.exp(-x));

return y;

}

}

Logistic Regression模型参数和测试结果存储。

import java.io.*;

public class SaveModel {

public static void savemodel(String filename, double [] W) throws IOException{

File f = new File(filename);

// 构建FileOutputStream对象

FileOutputStream fip = new FileOutputStream(f);

// 构建OutputStreamWriter对象

OutputStreamWriter writer = new OutputStreamWriter(fip,"UTF-8");

//计算模型矩阵的元素个数

int n = W.length;

StringBuffer sb = new StringBuffer();

for (int i = 0; i < n-1; i ++) {

sb.append(String.valueOf(W[i]));

sb.append("\t");

}

sb.append(String.valueOf(W[n-1]));

String sb1 = sb.toString();

writer.write(sb1);

writer.close();

fip.close();

}

public static void saveresults(String filename, double [] pre_results) throws IOException{

File f = new File(filename);

// 构建FileOutputStream对象

FileOutputStream fip = new FileOutputStream(f);

// 构建OutputStreamWriter对象

OutputStreamWriter writer = new OutputStreamWriter(fip,"UTF-8");

//计算预测结果的个数

int n = pre_results.length;

StringBuffer sb = new StringBuffer();

for (int i = 0; i < n-1; i ++) {

sb.append(String.valueOf(pre_results[i]));

sb.append("\n");

}

sb.append(String.valueOf(pre_results[n-1]));

String sb1 = sb.toString();

writer.write(sb1);

writer.close();

fip.close();

}

}

主类。

import java.io.*;

public class LRMain {

public static void main(String[] args) throws IOException{

// filename

String filename = "data.txt";

// 导入样本特征和标签

double [][] feature = LoadData.Loadfeature(filename);

double [] Label = LoadData.LoadLabel(filename);

// 参数设置

int samNum = feature.length;

int paraNum = feature[0].length;

double rate = 0.01;

int maxCycle = 1000;

// LR模型训练

LRtrainGradientDescent LR = new LRtrainGradientDescent(feature,Label,paraNum,rate,samNum,maxCycle);

double [] W = LR.Updata(feature, Label, maxCycle, rate);

//保存模型

String model_path = "wrights.txt";

SaveModel.savemodel(model_path, W);

//模型测试

double [] pre_results = LRTest.lrtest(paraNum, samNum, feature, W);

//保存测试结果

String results_path = "pre_results.txt";

SaveModel.saveresults(results_path, pre_results);

}

}