iFlytek Voice Wakeup, Recognition, and Synthesis

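Table of Contents

- Using the iFlytek SDK

- Files provided by the official SDK

- Using the official demo

- Steps to import the SDK into your own project

- Voice wakeup

- Speech recognition

- Online speech synthesis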

Using the iFlytek SDK

Demo downloads:

- Voice wakeup: [link](https://download.csdn.net/download/the_only_god/11376540)

- Speech recognition: [link](https://download.csdn.net/download/the_only_god/11376705)

- Speech synthesis: [link](https://download.csdn.net/download/the_only_god/11376705)

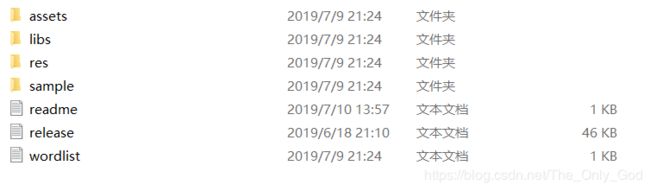

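Files provided by the official SDK

Official site: [link](https://www.xfyun.cn/)

- assets and res: resource files.

- libs: the core library files. All the classes needed for voice wakeup, offline command-word recognition, and online speech synthesis are in Msc.jar.

- sample: the examples provided by the official site.

- readme (heh).

- wordlist: the wakeup words you configured when downloading the SDK from the official site.

- release: not essential, but reading it deepens your understanding of the library (and it may save you while debugging).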

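Using the official demo

Create a new Android project, then go to File → New → Import Module and import mscV5PlusDemo from the sample folder. Follow the prompts in the lower-right corner of Android Studio and the demo will run (it's quite fun to play with). The sample usually contains not only the features you selected but possibly other features as well. From there you can copy whatever you need from mscV5PlusDemo into your own app.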

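Steps to import the SDK into your own project

- Copy the libs folder into the app module (from experience, it's best to copy only armeabi-v7a and Msc.jar), then right-click Msc.jar and choose Add As Library.

- Create a jniLibs folder under main and paste armeabi-v7a into it.

- Copy the assets folder under main.

- Add app_id, the authorization ID iFlytek issued to you, to strings.xml.

After that you can use all the speech-related functions in the package (provided you know how to use them).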
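Besides the strings.xml entry, the SDK has to be initialized with that app_id once, before any of the engine objects below are created. As far as I know the official demo does this in its `Application` subclass via `SpeechUtility.createUtility(context, "appid=" + appId)`; check the demo in your own SDK download to confirm. The helper below is a hypothetical, SDK-free sketch (the class `IflytekInit` is my own) that only builds and sanity-checks that parameter string, so it can be unit-tested without a device:

```java
import java.util.Objects;

/**
 * Hypothetical helper (not part of Msc.jar): builds the parameter string
 * that is handed to SpeechUtility.createUtility(...) at startup.
 */
final class IflytekInit {
    private IflytekInit() {}

    /** Returns "appid=<id>", failing fast if the id from strings.xml is missing or blank. */
    static String initParams(String appId) {
        Objects.requireNonNull(appId, "appId");
        String trimmed = appId.trim();
        if (trimmed.isEmpty()) {
            throw new IllegalArgumentException("app_id in strings.xml is empty");
        }
        return "appid=" + trimmed;
    }
}
```

On Android it would be used roughly as `SpeechUtility.createUtility(this, IflytekInit.initParams(getString(R.string.app_id)));` inside `Application.onCreate()`. Failing fast on a blank id makes a misconfigured strings.xml obvious at startup.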

Voice wakeup

This one is really simple.

- Create the VoiceWakeuper object:

```java
private VoiceWakeuper mIvw;
mIvw = VoiceWakeuper.createWakeuper(this, null);
```

- Set the parameters:

```java
mIvw = VoiceWakeuper.getWakeuper();
if (mIvw != null) {
    resultString = "";
    // Clear previously set parameters
    mIvw.setParameter(SpeechConstant.PARAMS, null);
    // Wakeup threshold: pass one value per wakeup word in the resource, formatted as "id:threshold;id:threshold"
    mIvw.setParameter(SpeechConstant.IVW_THRESHOLD, "0:" + curThresh);
    // Set the wakeup mode
    mIvw.setParameter(SpeechConstant.IVW_SST, "wakeup");
    // Keep listening for wakeups continuously
    mIvw.setParameter(SpeechConstant.KEEP_ALIVE, "1");
    // Set the closed-loop optimization network mode
    mIvw.setParameter(SpeechConstant.IVW_NET_MODE, "0");
    // Set the wakeup resource path
    mIvw.setParameter(SpeechConstant.IVW_RES_PATH, getResource());
    // Set where the wakeup audio is saved; the most recent minute of audio is kept
    mIvw.setParameter(SpeechConstant.IVW_AUDIO_PATH,
            Environment.getExternalStorageDirectory().getPath() + "/msc/ivw.wav");
    mIvw.setParameter(SpeechConstant.AUDIO_FORMAT, "wav");
    // If needed, set NOTIFY_RECORD_DATA so recorded audio bytes are delivered in real time via onEvent
    // mIvw.setParameter(SpeechConstant.NOTIFY_RECORD_DATA, "1");
}
```

- Get the resource path:

```java
private String getResource() {
    final String resPath = ResourceUtil.generateResourcePath(WakeDemo.this,
            RESOURCE_TYPE.assets, "ivw/" + getString(R.string.app_id) + ".jet");
    Log.d(TAG, "resPath: " + resPath);
    return resPath;
}
```

- Set up the wakeup listener:

```java
private WakeuperListener mWakeuperListener = new WakeuperListener() {

    @Override
    public void onResult(WakeuperResult result) {
        Log.d(TAG, "onResult");
        if (!"1".equalsIgnoreCase(keep_alive)) {
            setRadioEnable(true);
        }
        try {
            String text = result.getResultString();
            JSONObject object = new JSONObject(text);
            StringBuilder buffer = new StringBuilder();
            buffer.append("[RAW] ").append(text).append("\n");
            buffer.append("[Operation type] ").append(object.optString("sst")).append("\n");
            buffer.append("[Wakeup word id] ").append(object.optString("id")).append("\n");
            buffer.append("[Score] ").append(object.optString("score")).append("\n");
            buffer.append("[Begin point] ").append(object.optString("bos")).append("\n");
            buffer.append("[End point] ").append(object.optString("eos"));
            resultString = buffer.toString();
        } catch (JSONException e) {
            resultString = "Failed to parse the result";
            e.printStackTrace();
        }
        textView.setText(resultString);
    }

    @Override
    public void onError(SpeechError error) {
        showTip(error.getPlainDescription(true));
        setRadioEnable(true);
    }

    @Override
    public void onBeginOfSpeech() {
    }

    @Override
    public void onEvent(int eventType, int isLast, int arg2, Bundle obj) {
        switch (eventType) {
            // EVENT_RECORD_DATA is only delivered when NOTIFY_RECORD_DATA is set to true
            case SpeechEvent.EVENT_RECORD_DATA:
                final byte[] audio = obj.getByteArray(SpeechEvent.KEY_EVENT_RECORD_DATA);
                Log.i(TAG, "ivw audio length: " + audio.length);
                break;
        }
    }

    @Override
    public void onVolumeChanged(int volume) {
    }
};
```
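The `onResult` callback above only copies five fields (`sst`, `id`, `score`, `bos`, `eos`) from the wakeup JSON into a display string. If you want that part testable off-device, one option (my own suggestion, not how the demo is structured) is to move the formatting into a plain method that takes the already-parsed fields; the class `WakeupResultFormatter` is hypothetical, and inside `onResult` you would still fill the map with `object.optString(...)`:

```java
import java.util.Map;

/**
 * Hypothetical, SDK-free version of the formatting done in onResult():
 * takes fields already read from the wakeup JSON and builds the display text.
 */
final class WakeupResultFormatter {
    private WakeupResultFormatter() {}

    // JSON keys read in onResult(), paired with the labels shown to the user.
    private static final String[][] FIELDS = {
        {"sst", "[Operation type] "},
        {"id", "[Wakeup word id] "},
        {"score", "[Score] "},
        {"bos", "[Begin point] "},
        {"eos", "[End point] "},
    };

    /** Missing keys become "", matching what JSONObject.optString returns for absent keys. */
    static String format(String raw, Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("[RAW] ").append(raw);
        for (String[] field : FIELDS) {
            sb.append('\n').append(field[1]).append(fields.getOrDefault(field[0], ""));
        }
        return sb.toString();
    }
}
```

Keeping the keys and labels in one table also means adding a field is a one-line change.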

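Release the wakeup object when the Activity is destroyed:

```java
@Override
protected void onDestroy() {
    super.onDestroy();
    Log.d(TAG, "onDestroy WakeDemo");
    // Destroy the wakeup object
    mIvw = VoiceWakeuper.getWakeuper();
    if (mIvw != null) {
        mIvw.destroy();
    }
}
```

- Start listening:

```java
mIvw.startListening(mWakeuperListener);
```

That's all there is to it.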
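The threshold comment in the parameter step says `IVW_THRESHOLD` takes one `id:threshold` pair per wakeup word, joined with `;`, while the code only passes `"0:" + curThresh` for a single word. If your resource carries several wakeup words, a small builder keeps the format consistent. `IvwThreshold` is a hypothetical helper, and it assumes word ids start at 0 in resource order, as the `"0:"` above suggests:

```java
import java.util.Map;
import java.util.StringJoiner;
import java.util.TreeMap;

/** Hypothetical helper that builds the IVW_THRESHOLD value as "id:threshold;id:threshold". */
final class IvwThreshold {
    private IvwThreshold() {}

    /** Keys are wakeup-word ids; entries are sorted by id so the output is deterministic. */
    static String build(Map<Integer, Integer> thresholds) {
        if (thresholds.isEmpty()) {
            throw new IllegalArgumentException("need at least one wakeup word");
        }
        StringJoiner joiner = new StringJoiner(";");
        for (Map.Entry<Integer, Integer> e : new TreeMap<>(thresholds).entrySet()) {
            joiner.add(e.getKey() + ":" + e.getValue());
        }
        return joiner.toString();
    }
}
```

It would be used as `mIvw.setParameter(SpeechConstant.IVW_THRESHOLD, IvwThreshold.build(thresholds));`. Sorting by id only makes the output deterministic; I don't know whether the SDK cares about the order.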

Speech recognition

- Create the speech recognition object:

```java
private SpeechRecognizer mAsr;
mAsr = SpeechRecognizer.createRecognizer(this, mInitListener);

private InitListener mInitListener = new InitListener() {
    @Override
    public void onInit(int code) {
        Log.d(TAG, "SpeechRecognizer init() code = " + code);
        if (code != ErrorCode.SUCCESS) {
            showTip("Initialization failed, error code: " + code
                    + ". See https://www.xfyun.cn/document/error-code for solutions");
        }
    }
};
```

- Build the grammar:

```java
// Build the grammar
private void buildGrammar() {
    mLocalGrammar = FucUtil.readFile(this, "function.bnf", "utf-8");
    text.setText(FucUtil.readFile(this, "myfunction", "utf-8"));
    // Local: build the grammar file and obtain a grammar id
    mContent = new String(mLocalGrammar);
    // mAsr.setParameter(SpeechConstant.PARAMS, null);
    // Set the text encoding
    mAsr.setParameter(SpeechConstant.TEXT_ENCODING, "utf-8");
    // Set the engine type
    mAsr.setParameter(SpeechConstant.ENGINE_TYPE, SpeechConstant.TYPE_LOCAL);
    // Set the grammar build path
    mAsr.setParameter(ResourceUtil.GRM_BUILD_PATH, grmPath);
    // Set the resource path
    mAsr.setParameter(ResourceUtil.ASR_RES_PATH, getResourcePath());
    ret = mAsr.buildGrammar(GRAMMAR_TYPE_BNF, mContent, grammarListener);
    if (ret != ErrorCode.SUCCESS) {
        showTip("Grammar build failed, error code: " + ret);
    }
}
```

- Grammar build listener:

```java
// Initialize the grammar build listener
GrammarListener grammarListener = new GrammarListener() {
    @Override
    public void onBuildFinish(String grammarId, SpeechError error) {
        if (error == null) {
            mLocalGrammarID = grammarId;
            // showTip("Grammar built successfully: " + grammarId);
        } else {
            showTip("Grammar build failed, error code: " + error.getErrorCode()
                    + ". See https://www.xfyun.cn/document/error-code for solutions");
        }
    }
};
```

- Set the recognition parameters:

```java
// Set the speech recognition parameters
public void setParam() {
    // Clear parameters
    // mAsr.setParameter(SpeechConstant.PARAMS, null);
    // Set the recognition engine
    mAsr.setParameter(SpeechConstant.ENGINE_TYPE, SpeechConstant.TYPE_LOCAL);
    // Set the local recognition resource
    mAsr.setParameter(ResourceUtil.ASR_RES_PATH, getResourcePath());
    // Set the grammar build path
    mAsr.setParameter(ResourceUtil.GRM_BUILD_PATH, grmPath);
    // Set the result format
    mAsr.setParameter(SpeechConstant.RESULT_TYPE, "json");
    // Use the grammar id produced by the grammar build step for local recognition
    mAsr.setParameter(SpeechConstant.LOCAL_GRAMMAR, mLocalGrammarID);
    // Recording timeout; -1 means no limit
    mAsr.setParameter(SpeechConstant.KEY_SPEECH_TIMEOUT, "-1");
    // Leading silence (ms) allowed before the user starts speaking
    mAsr.setParameter(SpeechConstant.VAD_BOS, "4000");
    // Trailing silence (ms) after which the user is considered done speaking
    mAsr.setParameter(SpeechConstant.VAD_EOS, "1000");
    // Set the recognition threshold
    mAsr.setParameter(SpeechConstant.MIXED_THRESHOLD, "30");
}
```

- Recognition listener:

```java
private RecognizerListener mRecognizerListener = new RecognizerListener() {

    @Override
    public void onVolumeChanged(int volume, byte[] data) {
        showTip("Speaking, current volume: " + volume);
        Log.d(TAG, "Returned audio data: " + data.length);
    }

    @Override
    public void onResult(final RecognizerResult result, boolean isLast) {
        if (null != result && !TextUtils.isEmpty(result.getResultString())) {
            Log.d(TAG, "recognizer result:" + result.getResultString());
            String text;
            if (mResultType.equals("json")) {
                text = JsonParser.parseGrammarResult(result.getResultString(), mEngineType);
            } else if (mResultType.equals("xml")) {
                text = XmlParser.parseNluResult(result.getResultString());
            } else {
                text = result.getResultString();
            }
            // Display the result
            ((EditText) findViewById(R.id.isr_text)).setText(text);
        } else {
            Log.d(TAG, "recognizer result : null");
        }
    }

    @Override
    public void onEndOfSpeech() {
        // The trailing endpoint of speech was detected: recognition has started and no more audio is accepted
        showTip("Speech ended");
    }

    @Override
    public void onBeginOfSpeech() {
        // The SDK's internal recorder is ready and the user can start speaking
        showTip("Start speaking");
    }

    @Override
    public void onError(SpeechError error) {
        showTip("onError Code:" + error.getErrorCode());
    }

    @Override
    public void onEvent(int eventType, int arg1, int arg2, Bundle obj) {
        // The code below gets the cloud session id. When something goes wrong, give it to
        // technical support so they can look up the session log and find the cause.
        // With local capabilities the session id is null.
        // if (SpeechEvent.EVENT_SESSION_ID == eventType) {
        //     String sid = obj.getString(SpeechEvent.KEY_EVENT_SESSION_ID);
        //     Log.d(TAG, "session id =" + sid);
        // }
    }
};
```
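`onEndOfSpeech` depends on the endpoint settings in `setParam()`. My understanding of the iFlytek parameters is that `VAD_BOS` (4000 here) is how many milliseconds of initial silence are tolerated before the session gives up, and `VAD_EOS` (1000 here) is how much trailing silence ends the utterance. The toy below is not the SDK's VAD: `ToyEndpointer` is a hypothetical energy-threshold model over fixed-length frames, meant only to show how the two timeouts interact:

```java
/** Toy endpoint detector; the energy threshold and frame model are assumptions, not the SDK's algorithm. */
final class ToyEndpointer {
    enum Outcome { BOS_TIMEOUT, END_OF_SPEECH, STILL_LISTENING }

    private ToyEndpointer() {}

    /**
     * @param energies  per-frame energy values
     * @param frameMs   duration of one frame in milliseconds
     * @param threshold frames with energy >= threshold count as speech
     * @param vadBosMs  leading silence tolerated before giving up (cf. VAD_BOS)
     * @param vadEosMs  trailing silence that ends the utterance (cf. VAD_EOS)
     */
    static Outcome run(double[] energies, int frameMs, double threshold, int vadBosMs, int vadEosMs) {
        boolean speechStarted = false;
        int silenceMs = 0; // silence since the start (before speech) or since the last speech frame
        for (double e : energies) {
            if (e >= threshold) {
                speechStarted = true;
                silenceMs = 0;
            } else {
                silenceMs += frameMs;
                if (!speechStarted && silenceMs >= vadBosMs) {
                    return Outcome.BOS_TIMEOUT;   // user never started speaking
                }
                if (speechStarted && silenceMs >= vadEosMs) {
                    return Outcome.END_OF_SPEECH; // the situation onEndOfSpeech describes
                }
            }
        }
        return Outcome.STILL_LISTENING;
    }
}
```

With 100 ms frames and the values above, 40 silent frames in a row hit the leading-silence timeout, whereas speech followed by 10 silent frames ends the utterance. Raising `VAD_EOS` tolerates longer pauses mid-sentence at the cost of slower responses.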

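The `showTip` helper used in the listeners:

```java
private void showTip(final String str) {
    runOnUiThread(new Runnable() {
        @Override
        public void run() {
            mToast.setText(str);
            mToast.show();
        }
    });
}
```

- Start listening:

```java
ret = mAsr.startListening(mRecognizerListener);
if (ret != ErrorCode.SUCCESS) {
    showTip("Recognition failed, error code: " + ret);
}
```

Easy, right?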
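One thing the steps above leave implicit is ordering: `buildGrammar` reports its result asynchronously through `onBuildFinish`, and `setParam()` reads `mLocalGrammarID`, which only gets a value there. Starting a session before that callback arrives would pass a null grammar id. A hypothetical way to enforce "build grammar, then set parameters, then listen" is a small gate that remembers a start request until the grammar is ready; `GrammarGate` and its `Runnable` hook are my own, not SDK API:

```java
/**
 * Hypothetical gate (not part of the SDK): remembers a start request until the
 * local grammar id is available, then runs "set parameters + start listening".
 * Not thread-safe; assumes all calls come from one thread, such as the UI thread.
 */
final class GrammarGate {
    private final Runnable applyParamsAndListen;
    private String grammarId;
    private boolean startRequested;

    GrammarGate(Runnable applyParamsAndListen) {
        this.applyParamsAndListen = applyParamsAndListen;
    }

    /** Call from GrammarListener.onBuildFinish when error == null. */
    void onGrammarBuilt(String id) {
        grammarId = id;
        if (startRequested) {
            startRequested = false;
            applyParamsAndListen.run();
        }
    }

    /** Call when the user asks to start; runs now if the grammar is ready, otherwise defers. */
    void requestStart() {
        if (grammarId != null) {
            applyParamsAndListen.run();
        } else {
            startRequested = true;
        }
    }

    boolean isReady() {
        return grammarId != null;
    }
}
```

In the Activity the `Runnable` would be something like `() -> { setParam(); mAsr.startListening(mRecognizerListener); }`, so a tap on "start" that comes in early is simply deferred instead of failing.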

Online speech synthesis

Same routine as before.

- Create the speech synthesis object:

```java
private SpeechSynthesizer mTts;
mTts = SpeechSynthesizer.createSynthesizer(this, mTtsInitListener);

// Initialization listener
private InitListener mTtsInitListener = new InitListener() {
    @Override
    public void onInit(int code) {
        if (code != ErrorCode.SUCCESS) {
            showTip("Initialization failed, error code: " + code
                    + ". See https://www.xfyun.cn/document/error-code for solutions");
        } else {
            // Initialization succeeded; startSpeaking can be called from now on.
            // Note: some developers call startSpeaking right after creating the synthesizer in onCreate;
            // the correct approach is to move that startSpeaking call here.
            // Speaking("Welcome to voice");
        }
    }
};
```

- Set the synthesis parameters:

```java
// Speech synthesis parameters
private void setTtsParam() {
    // Clear parameters
    mTts.setParameter(SpeechConstant.PARAMS, null);
    // Use the cloud engine for synthesis
    mTts.setParameter(SpeechConstant.ENGINE_TYPE, SpeechConstant.TYPE_CLOUD);
    // Set the voice (speaker)
    mTts.setParameter(SpeechConstant.VOICE_NAME, voicerCloud);
    // mTts.setParameter(SpeechConstant.TTS_DATA_NOTIFY, "1"); // real-time audio stream output, only supported with synthesizeToUri
    // Set the speaking rate, pitch, volume, and audio stream type
    mTts.setParameter(SpeechConstant.SPEED, mSharedPreferences.getString("speed_preference", "50"));
    mTts.setParameter(SpeechConstant.PITCH, mSharedPreferences.getString("pitch_preference", "50"));
    mTts.setParameter(SpeechConstant.VOLUME, mSharedPreferences.getString("volume_preference", "50"));
    mTts.setParameter(SpeechConstant.STREAM_TYPE, mSharedPreferences.getString("stream_preference", "3"));
    // Whether playing synthesized audio interrupts music playback (default: true)
    mTts.setParameter(SpeechConstant.KEY_REQUEST_FOCUS, "true");
    // Audio save path; pcm and wav are supported. If saving to the SD card, you need the WRITE_EXTERNAL_STORAGE permission
    mTts.setParameter(SpeechConstant.AUDIO_FORMAT, "wav");
    mTts.setParameter(SpeechConstant.TTS_AUDIO_PATH, Environment.getExternalStorageDirectory() + "/msc/tts.wav");
}
```

- Synthesis listener:

```java
private SynthesizerListener mTtsListener = new SynthesizerListener() {

    @Override
    public void onSpeakBegin() {
        showTip("Playback started");
    }

    @Override
    public void onSpeakPaused() {
        showTip("Playback paused");
    }

    @Override
    public void onSpeakResumed() {
        showTip("Playback resumed");
    }

    @Override
    public void onBufferProgress(int percent, int beginPos, int endPos, String info) {
        // Synthesis (buffering) progress
        mPercentForBuffering = percent;
        showTip(String.format(getString(R.string.tts_toast_format),
                mPercentForBuffering, mPercentForPlaying));
    }

    @Override
    public void onSpeakProgress(int percent, int beginPos, int endPos) {
        // Playback progress
        mPercentForPlaying = percent;
        showTip(String.format(getString(R.string.tts_toast_format),
                mPercentForBuffering, mPercentForPlaying));
    }

    @Override
    public void onCompleted(SpeechError error) {
        if (error == null) {
            showTip("Playback completed");
        } else {
            showTip(error.getPlainDescription(true));
        }
    }

    @Override
    public void onEvent(int eventType, int arg1, int arg2, Bundle obj) {
        // The code below gets the cloud session id. When something goes wrong, give it to
        // technical support so they can look up the session log and find the cause.
        // With local capabilities the session id is null.
        // if (SpeechEvent.EVENT_SESSION_ID == eventType) {
        //     String sid = obj.getString(SpeechEvent.KEY_EVENT_SESSION_ID);
        //     Log.d(TAG, "session id =" + sid);
        // }

        // Reference for real-time audio stream output:
        /* if (SpeechEvent.EVENT_TTS_BUFFER == eventType) {
            byte[] buf = obj.getByteArray(SpeechEvent.KEY_EVENT_TTS_BUFFER);
            Log.e("MscSpeechLog", "buf length = " + buf.length);
        } */
    }
};
```

- Start synthesis:

```java
mTts.startSpeaking(text, mTtsListener);
```

**It's so easy too!**
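The comment in `mTtsInitListener` warns against calling `startSpeaking` straight after `createSynthesizer` in `onCreate`; the call belongs after `onInit` reports success. If text can arrive before that, one hypothetical option is to hold it back until initialization finishes. In this SDK-free sketch the class `PendingSpeaker` is my own, and the `Consumer<String>` stands in for something like `text -> mTts.startSpeaking(text, mTtsListener)`:

```java
import java.util.function.Consumer;

/** Hypothetical wrapper that defers speak requests until the synthesizer has initialized. */
final class PendingSpeaker {
    private final Consumer<String> speak;
    private String pendingText; // only the most recent early request is kept
    private boolean ready;

    PendingSpeaker(Consumer<String> speak) {
        this.speak = speak;
    }

    /** Call from InitListener.onInit with (code == ErrorCode.SUCCESS). */
    void onInit(boolean success) {
        ready = success;
        String text = pendingText;
        pendingText = null; // on failure the pending text is dropped
        if (success && text != null) {
            speak.accept(text);
        }
    }

    /** Speaks immediately once ready; before that, remembers the latest text. */
    void speak(String text) {
        if (ready) {
            speak.accept(text);
        } else {
            pendingText = text;
        }
    }
}
```

Keeping only the latest text is a deliberate simplification: it avoids guessing how several back-to-back `startSpeaking` calls interact inside the SDK.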