Text Preprocessing: Bag of Words (BOW) and TF-IDF

***This post summarizes two text-preprocessing techniques: the bag-of-words model (BOW) and TF-IDF.***

1. Bag of Words (BOW)

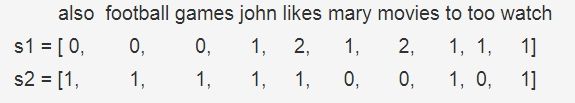

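The bag-of-words model turns a sentence into a vector. It is a simple, direct method: it ignores word order and only counts how many times each word of the vocabulary appears in the sentence. Let's go straight to an example (taken from Wikipedia):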

"John likes to watch movies, Mary likes movies too"

"John also likes to watch football games"

To turn these two sentences into vectors with BOW, we first build the vocabulary from both sentences (stop words are not removed):

['also', 'football', 'games', 'john', 'likes', 'mary', 'movies', 'to', 'too', 'watch']
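The vocabulary above and the count vectors can be built by hand in a few lines of plain Python. This is a minimal sketch, not scikit-learn's implementation. It assumes a tokenizer that approximates CountVectorizer's defaults: lowercasing, and keeping tokens of two or more word characters. `tokenize` and `bow_vector` are hypothetical helper names. The last lines check the "ignores word order" property.

```python
import re
from collections import Counter


def tokenize(text):
    # Assumed approximation of CountVectorizer's defaults:
    # lowercase, keep tokens made of 2+ word characters
    return re.findall(r"\b\w\w+\b", text.lower())


corpus = [
    "John likes to watch movies, Mary likes movies too",
    "John also likes to watch football games",
]

# Vocabulary: every distinct token in the corpus, sorted alphabetically
vocabulary = sorted({tok for doc in corpus for tok in tokenize(doc)})


def bow_vector(text, vocabulary):
    counts = Counter(tokenize(text))
    # Counter returns 0 for words that do not occur in the text
    return [counts[word] for word in vocabulary]


vectors = [bow_vector(doc, vocabulary) for doc in corpus]
print(vocabulary)
for vec in vectors:
    print(vec)

# Word order is ignored: a shuffled sentence maps to the same vector
shuffled = bow_vector("movies watch to likes John", vocabulary)
original = bow_vector("John likes to watch movies", vocabulary)
print(shuffled == original)  # True
```

Because each sentence is reduced to a table of counts, any permutation of its words produces the same vector. This is exactly the information BOW throws away.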

scikit-learn implements BOW in the CountVectorizer class. Here is how to use it:

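```python
from sklearn.feature_extraction.text import CountVectorizer

corpus = [
    "John likes to watch movies, Mary likes movies too",
    "John also likes to watch football games",
]

vectorizer = CountVectorizer()  # defaults: lowercase, tokens of 2+ word characters
X = vectorizer.fit_transform(corpus)  # learn the vocabulary, build a sparse count matrix

# get_feature_names() was removed in scikit-learn 1.2; use get_feature_names_out()
print(vectorizer.get_feature_names_out().tolist())
print(X.toarray())

# Output:
# ['also', 'football', 'games', 'john', 'likes', 'mary', 'movies', 'to', 'too', 'watch']
# [[0 0 0 1 2 1 2 1 1 1]
#  [1 1 1 1 1 0 0 1 0 1]]
```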

2. TF-IDF (Term Frequency-Inverse Document Frequency)

BOW has several drawbacks. First, it ignores word order. Second, it cannot reflect which words are a sentence's keywords. Take the following sentence:

"John likes to play football, Mary likes too"

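With BOW, this sentence's vocabulary is ['football', 'john', 'likes', 'mary', 'play', 'to', 'too'], and its vector is [1 1 2 1 1 1 1]. If we picked the keyword from the BOW vector, it would be "likes", yet the keyword of this sentence should clearly be "football". TF-IDF addresses this problem. As the name suggests, TF-IDF has two parts, TF and IDF. TF (term frequency) measures how often word $w$ occurs in document $d$:

$$\mathrm{TF}_{w,d} = \frac{n_{w,d}}{\sum_k n_{k,d}}$$

where $n_{w,d}$ is the number of times $w$ occurs in $d$, and the denominator is the total number of words in $d$. IDF (inverse document frequency) measures how rare $w$ is across the corpus:

$$\mathrm{IDF}_w = \log\frac{N}{\mathrm{df}_w}$$

where $N$ is the number of documents in the corpus and $\mathrm{df}_w$ is the number of documents that contain $w$. A common variant uses $\mathrm{df}_w + 1$ in the denominator to avoid division by zero. TF-IDF is the product of the two:

$$\mathrm{TF\text{-}IDF}_{w,d} = \mathrm{TF}_{w,d} \times \mathrm{IDF}_w$$

A word that occurs often in one document but rarely across the corpus therefore gets a high weight. A word that appears in most documents is pushed toward zero.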

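The larger a word's TF-IDF value, the more important the word is to the document, that is, the more likely it is a keyword. For a worked keyword-extraction example, see the article "TF-IDF与余弦相似性的应用(一):自动提取关键词" (Applications of TF-IDF and Cosine Similarity, Part 1: Automatic Keyword Extraction).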
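The contrast between raw counts and TF-IDF can be sketched in plain Python. This is a minimal illustration, not the article's own method. It makes several assumptions:

- TF is the count divided by document length, and IDF is the unsmoothed ln(N / df).
- The tokenizer approximates scikit-learn's defaults.
- The corpus is a hypothetical two-document set: the first Wikipedia sentence plus the football sentence.

`tokenize` and `tf_idf` are hypothetical helper names.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Assumed tokenizer: lowercase, keep tokens of 2+ word characters
    return re.findall(r"\b\w\w+\b", text.lower())


# Hypothetical corpus: the Wikipedia sentence and the football sentence
corpus = [
    "John likes to watch movies, Mary likes movies too",
    "John likes to play football, Mary likes too",
]
docs = [Counter(tokenize(d)) for d in corpus]
n_docs = len(docs)

# Document frequency: number of documents each word appears in
df = Counter(word for doc in docs for word in doc)


def tf_idf(doc):
    total = sum(doc.values())  # document length in tokens
    # TF = count / length, IDF = ln(N / df), unsmoothed (assumed variant)
    return {w: (c / total) * math.log(n_docs / df[w]) for w, c in doc.items()}


target = docs[1]  # the football sentence
bow_keyword = max(target, key=target.get)  # word with the highest raw count

scores = tf_idf(target)
best = max(scores.values())
tfidf_keywords = sorted(w for w, s in scores.items() if s == best)

print("BOW keyword:", bow_keyword)         # likes
print("TF-IDF keywords:", tfidf_keywords)  # ['football', 'play']
print("likes:", scores["likes"])           # 0.0
```

In this tiny corpus, "likes" drops to 0 because it appears in both documents. "football" and "play" tie for the top score because each appears only in the football sentence. A larger corpus is needed to separate them.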

Now for practical use. scikit-learn wraps TF-IDF in the TfidfVectorizer class, and its documentation includes an example:

```python
from sklearn.feature_extraction.text import TfidfVectorizer

corpus = [
    'This is the first document.',
    'This document is the second document.',
    'And this is the third one.',
    'Is this the first document?',
]

vectorizer = TfidfVectorizer()  # defaults: smooth_idf=True, norm='l2'
X = vectorizer.fit_transform(corpus)  # sparse 4 x 9 TF-IDF matrix

# get_feature_names() was removed in scikit-learn 1.2; use get_feature_names_out()
print(vectorizer.get_feature_names_out().tolist())
print(X)            # sparse format: (row, column) value
print(X.toarray())  # dense 4 x 9 array

"""
Output (the order of the sparse entries and any header line printed for X
may differ between scikit-learn/SciPy versions; the values are the same):

['and', 'document', 'first', 'is', 'one', 'second', 'the', 'third', 'this']
  (0, 8)  0.38408524091481483
  (0, 3)  0.38408524091481483
  (0, 6)  0.38408524091481483
  (0, 2)  0.5802858236844359
  (0, 1)  0.46979138557992045
  (1, 8)  0.281088674033753
  (1, 3)  0.281088674033753
  (1, 6)  0.281088674033753
  (1, 1)  0.6876235979836938
  (1, 5)  0.5386476208856763
  (2, 8)  0.267103787642168
  (2, 3)  0.267103787642168
  (2, 6)  0.267103787642168
  (2, 0)  0.511848512707169
  (2, 7)  0.511848512707169
  (2, 4)  0.511848512707169
  (3, 8)  0.38408524091481483
  (3, 3)  0.38408524091481483
  (3, 6)  0.38408524091481483
  (3, 2)  0.5802858236844359
  (3, 1)  0.46979138557992045
[[0.         0.46979139 0.58028582 0.38408524 0.         0.
  0.38408524 0.         0.38408524]
 [0.         0.6876236  0.         0.28108867 0.         0.53864762
  0.28108867 0.         0.28108867]
 [0.51184851 0.         0.         0.26710379 0.51184851 0.
  0.26710379 0.51184851 0.26710379]
 [0.         0.46979139 0.58028582 0.38408524 0.         0.
  0.38408524 0.         0.38408524]]
"""
```