Deep Generative Models for Natural Language Processing: Resources, Courses, and Papers

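This collection gathers resources, courses, and recent papers on deep generative models for natural language processing, for anyone who finds them useful.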

Source: https://github.com/FranxYao/Deep-Generative-Models-for-Natural-Language-Processing

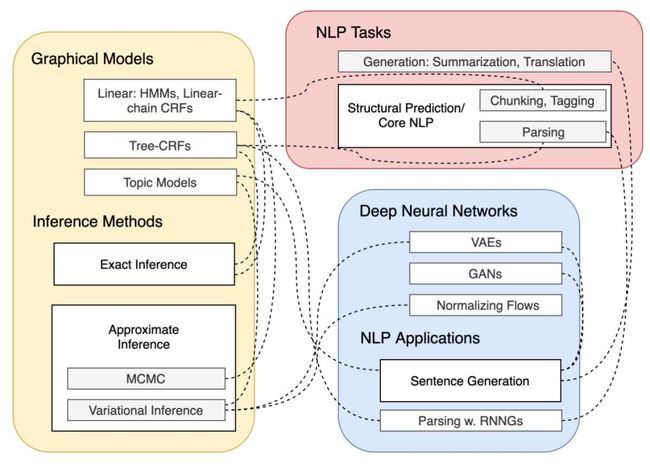

Deep generative models usually refer to three model families: variational autoencoders (VAEs), generative adversarial networks (GANs), and normalizing flows.

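Of these three families, we focus mainly on VAE-related models, because they tend to be more effective for language. Whether GANs really work here is still an open question: their benefit looks more like a regularization of the discriminator than a product of the "generative" part.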

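VAE models for NLP involve many discrete structures, and inference over these structures is both intricate and clever. This collection gathers related resources, courses, and papers.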

Resources

Graphical Model Basics

Before we begin: DGMs are built on probabilistic graphical models, so it pays to learn those first.

Three recommended courses:

Blei's Foundations of Graphical Models course, STAT 6701 at Columbia

Xing's Probabilistic Graphical Models, 10-708 at CMU

Collins' Natural Language Processing, COMS 4995 at Columbia

Two recommended books:

Pattern Recognition and Machine Learning. Christopher M. Bishop. 2006

Machine Learning: A Probabilistic Perspective. Kevin P. Murphy. 2012

Deep Generative Models

Some good DGM resources:

Wilker Aziz's DGM Landscape

A Tutorial on Deep Latent Variable Models of Natural Language, EMNLP 18

Yoon Kim, Sam Wiseman and Alexander M. Rush, Harvard

Deep Generative Models for Natural Language Processing, Ph.D. Thesis 17

Yishu Miao, Oxford

Stanford CS 236, Deep Generative Models

NYU Deep Generative Models

U Toronto CS 2541 Differentiable Inference and Generative Models, CS 2547 Learning Discrete Latent Structures.

Mind Map of Related Concepts

Not necessarily complete or fully correct; to be expanded.

NLP

We focus on two topics: generation and structured inference.

Generation

Generating Sentences from a Continuous Space, CoNLL 16

Samuel R. Bowman, Luke Vilnis, Oriol Vinyals, Andrew M. Dai, Rafal Jozefowicz, Samy Bengio

Spherical Latent Spaces for Stable Variational Autoencoders, EMNLP 18

Jiacheng Xu and Greg Durrett, UT Austin

Semi-amortized variational autoencoders, ICML 18

Yoon Kim, Sam Wiseman, Andrew C. Miller, David Sontag, Alexander M. Rush, Harvard

Lagging Inference Networks and Posterior Collapse in Variational Autoencoders, ICLR 19

Junxian He, Daniel Spokoyny, Graham Neubig, Taylor Berg-Kirkpatrick

Avoiding Latent Variable Collapse with Generative Skip Models, AISTATS 19

Adji B. Dieng, Yoon Kim, Alexander M. Rush, David M. Blei

Structured Inference

This part collects work on structured inference, covering three NLP tasks: chunking, tagging, and parsing.

An introduction to Conditional Random Fields. Charles Sutton and Andrew McCallum. 2012

Covers linear-chain CRFs: modeling, inference, and parameter estimation.
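Inference in a linear-chain CRF rests on the forward algorithm, which computes the log partition function log Z in time linear in sequence length instead of enumerating every label sequence. A minimal sketch, assuming toy hand-picked scores in `unary[t][y]` (label y at position t) and `trans[i][j]` (label i followed by label j); the function names are hypothetical, and a brute-force enumeration is included only to check the result.

```python
import itertools
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def crf_log_partition(unary, trans):
    """Forward algorithm for a linear-chain CRF; returns log Z."""
    num_labels = len(unary[0])
    # alpha[y]: log-sum of scores of all prefixes that end in label y
    alpha = list(unary[0])
    for t in range(1, len(unary)):
        alpha = [
            unary[t][j] + logsumexp([alpha[i] + trans[i][j] for i in range(num_labels)])
            for j in range(num_labels)
        ]
    return logsumexp(alpha)


def brute_force_log_partition(unary, trans):
    """Enumerate every label sequence (exponential cost, for checking only)."""
    num_labels = len(unary[0])
    scores = []
    for seq in itertools.product(range(num_labels), repeat=len(unary)):
        s = sum(unary[t][y] for t, y in enumerate(seq))
        s += sum(trans[a][b] for a, b in zip(seq, seq[1:]))
        scores.append(s)
    return logsumexp(scores)


# Hypothetical scores: 4 positions, 3 labels
unary = [[0.5, -1.0, 0.2], [1.0, 0.3, -0.4], [-0.2, 0.8, 0.1], [0.0, 0.4, 0.9]]
trans = [[0.2, -0.5, 0.1], [0.3, 0.0, -0.2], [-0.1, 0.4, 0.5]]
print(crf_log_partition(unary, trans), brute_force_log_partition(unary, trans))
```

Both calls should print the same value; the forward recursion costs O(n·K²) for n positions and K labels, versus K^n for enumeration.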

Inside-Outside and Forward-Backward Algorithms Are Just Backprop. Jason Eisner. 2016.
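Eisner's point is that differentiating log Z with respect to each score yields the marginals that forward-backward would compute: the derivative of log Z with respect to `unary[t][y]` is P(y_t = y). A minimal sketch, assuming toy scores; central finite differences stand in for reverse-mode autodiff here, so this illustrates the identity rather than the efficient backprop computation. All names are hypothetical.

```python
import itertools
import math


def logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def log_partition(unary, trans):
    """Forward algorithm: log Z of a linear-chain CRF."""
    k = len(unary[0])
    alpha = list(unary[0])
    for t in range(1, len(unary)):
        alpha = [unary[t][j] + logsumexp([alpha[i] + trans[i][j] for i in range(k)])
                 for j in range(k)]
    return logsumexp(alpha)


def grad_log_partition(unary, trans, eps=1e-6):
    """d log Z / d unary[t][y] by central finite differences
    (a stand-in for backprop in this sketch)."""
    grads = []
    for t in range(len(unary)):
        row = []
        for y in range(len(unary[0])):
            plus = [r[:] for r in unary]
            minus = [r[:] for r in unary]
            plus[t][y] += eps
            minus[t][y] -= eps
            row.append((log_partition(plus, trans) - log_partition(minus, trans)) / (2 * eps))
        grads.append(row)
    return grads


def brute_force_marginals(unary, trans):
    """P(y_t = y) by enumerating all label sequences."""
    k, n = len(unary[0]), len(unary)
    seqs = list(itertools.product(range(k), repeat=n))
    scores = [sum(unary[t][y] for t, y in enumerate(s))
              + sum(trans[a][b] for a, b in zip(s, s[1:])) for s in seqs]
    log_z = logsumexp(scores)
    marg = [[0.0] * k for _ in range(n)]
    for s, sc in zip(seqs, scores):
        p = math.exp(sc - log_z)
        for t, y in enumerate(s):
            marg[t][y] += p
    return marg


# Hypothetical scores: 3 positions, 3 labels
unary = [[0.5, -1.0, 0.2], [1.0, 0.3, -0.4], [-0.2, 0.8, 0.1]]
trans = [[0.2, -0.5, 0.1], [0.3, 0.0, -0.2], [-0.1, 0.4, 0.5]]
print(grad_log_partition(unary, trans))
print(brute_force_marginals(unary, trans))
```

The two printed tables should agree to about six decimal places.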

Differentiable Dynamic Programming for Structured Prediction and Attention. Arthur Mensch and Mathieu Blondel. ICML 2018

Shows how to smooth the max operator in dynamic programming so that it becomes differentiable.
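One smoothing discussed in that line of work is entropy regularization, which turns max into a scaled log-sum-exp whose gradient is a softmax. A minimal sketch, assuming a temperature `gamma` (a name chosen here for illustration); the paper covers other regularizers as well, and this shows only the entropic case.

```python
import math


def smoothed_max(x, gamma):
    """Entropy-regularized max: gamma * log(sum(exp(x_i / gamma))).
    Differentiable everywhere; approaches max(x) as gamma -> 0."""
    m = max(x)  # shift by the max for numerical stability
    return m + gamma * math.log(sum(math.exp((v - m) / gamma) for v in x))


def smoothed_argmax(x, gamma):
    """Gradient of smoothed_max with respect to x: softmax(x / gamma)."""
    m = max(x)
    w = [math.exp((v - m) / gamma) for v in x]
    total = sum(w)
    return [v / total for v in w]


scores = [1.0, 2.0, 0.5]
for gamma in (1.0, 0.1, 0.01):
    print(gamma, smoothed_max(scores, gamma), smoothed_argmax(scores, gamma))
```

The smoothed value is bounded by max(x) ≤ smoothed_max(x) ≤ max(x) + gamma·log(n), so gamma trades smoothness of the gradient against fidelity to the hard max.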

Structured Attention Networks. ICLR 2017

Yoon Kim, Carl Denton, Luong Hoang, Alexander M. Rush

Recurrent Neural Network Grammars. NAACL 16

Chris Dyer, Adhiguna Kuncoro, Miguel Ballesteros, and Noah Smith.

Unsupervised Recurrent Neural Network Grammars, NAACL 19

Yoon Kim, Alexander Rush, Lei Yu, Adhiguna Kuncoro, Chris Dyer, and Gabor Melis

Differentiable Perturb-and-Parse: Semi-Supervised Parsing with a Structured Variational Autoencoder, ICLR 19

Caio Corro, Ivan Titov, Edinburgh

Tricks for Discrete Reparameterization

Categorical Reparameterization with Gumbel-Softmax. ICLR 2017

Eric Jang, Shixiang Gu, Ben Poole
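The Gumbel-Softmax (Concrete) relaxation adds Gumbel noise to the logits and replaces the hard argmax with a temperature-controlled softmax. A minimal sketch in pure Python, assuming toy logits and a temperature `tau`; in practice this runs inside an autodiff framework so gradients flow through the relaxed sample.

```python
import math
import random


def sample_gumbel(rng):
    """Standard Gumbel noise g = -log(-log(U)), U ~ Uniform(0, 1)."""
    u = rng.random()
    while u == 0.0:  # random() may return 0.0, and log(0) is undefined
        u = rng.random()
    return -math.log(-math.log(u))


def gumbel_softmax(logits, tau, rng):
    """Relaxed one-hot sample: softmax((logits + g) / tau).
    As tau -> 0 the output approaches a one-hot vector."""
    perturbed = [(x + sample_gumbel(rng)) / tau for x in logits]
    m = max(perturbed)  # subtract the max for numerical stability
    weights = [math.exp(p - m) for p in perturbed]
    total = sum(weights)
    return [wt / total for wt in weights]


rng = random.Random(0)
logits = [math.log(0.6), math.log(0.3), math.log(0.1)]
print(gumbel_softmax(logits, tau=0.5, rng=rng))
```

Because softmax preserves order, the argmax of each relaxed sample is an exact draw from the categorical distribution (the Gumbel-max trick); only the soft values depend on `tau`.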

The Concrete Distribution: A Continuous Relaxation of Discrete Random Variables. ICLR 2017

Chris J. Maddison, Andriy Mnih, and Yee Whye Teh

Reparameterizable Subset Sampling via Continuous Relaxations. IJCAI 2019

Sang Michael Xie and Stefano Ermon

Stochastic Beams and Where to Find Them: The Gumbel-Top-k Trick for Sampling Sequences Without Replacement. ICML 19

Wouter Kool, Herke van Hoof, Max Welling
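The Gumbel-Top-k trick perturbs every log-probability with independent Gumbel noise and keeps the k largest; the ordered indices are a sample of size k drawn without replacement. A minimal sketch for a flat categorical distribution, assuming toy probabilities. The paper extends this to sequences via stochastic beam search, which this sketch does not implement.

```python
import math
import random


def sample_gumbel(rng):
    """Standard Gumbel noise g = -log(-log(U)), U ~ Uniform(0, 1)."""
    u = rng.random()
    while u == 0.0:
        u = rng.random()
    return -math.log(-math.log(u))


def gumbel_top_k(log_probs, k, rng):
    """Indices of the k largest Gumbel-perturbed log-probabilities,
    i.e. an ordered sample of size k without replacement."""
    keys = [lp + sample_gumbel(rng) for lp in log_probs]
    return sorted(range(len(keys)), key=lambda i: keys[i], reverse=True)[:k]


rng = random.Random(0)
log_probs = [math.log(p) for p in (0.5, 0.3, 0.2)]
print(gumbel_top_k(log_probs, k=2, rng=rng))
```

For example, the ordered pair [0, 1] should appear with probability 0.5 · 0.3 / (1 − 0.5) = 0.3, matching sequential sampling without replacement.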

Machine Learning

On the machine learning side, we start with VAEs.

VAEs

Auto-Encoding Variational Bayes, arXiv 13

Diederik P. Kingma, Max Welling
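Two ingredients of the VAE objective are easy to isolate: the reparameterization trick and the closed-form KL term between a diagonal Gaussian posterior and a standard normal prior. A minimal sketch, assuming a diagonal Gaussian encoder output `(mu, log_var)` with toy values; the reconstruction term E_q[log p(x|z)] needs a decoder and is omitted here.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """z = mu + sigma * eps with eps ~ N(0, I). The randomness lives in eps,
    so z is a deterministic, differentiable function of (mu, log_var)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I))."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, math.log(0.25)]
z = reparameterize(mu, log_var, rng)
print(z, kl_to_standard_normal(mu, log_var))
```

The per-example ELBO is then E_q[log p(x|z)] − KL(q(z|x) ‖ p(z)), with the expectation usually estimated from one reparameterized sample.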

Variational Inference: A Review for Statisticians, JASA 17

David M. Blei, Alp Kucukelbir, Jon D. McAuliffe
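For reference, the identity at the heart of variational inference decomposes the log evidence into the evidence lower bound (ELBO) plus a nonnegative KL gap. Here q(z) is any approximating distribution. The second line is the form used in VAEs, with an amortized posterior q(z|x) and prior p(z).

```latex
\log p(x)
  = \underbrace{\mathbb{E}_{q(z)}\big[\log p(x,z)\big] - \mathbb{E}_{q(z)}\big[\log q(z)\big]}_{\mathrm{ELBO}(q)}
  + \mathrm{KL}\big(q(z)\,\|\,p(z \mid x)\big)
  \;\ge\; \mathrm{ELBO}(q)

\mathrm{ELBO} = \mathbb{E}_{q(z \mid x)}\big[\log p(x \mid z)\big] - \mathrm{KL}\big(q(z \mid x)\,\|\,p(z)\big)
```

Maximizing the ELBO over q is therefore equivalent to minimizing KL(q(z) ‖ p(z|x)), since log p(x) does not depend on q.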

Stochastic Backpropagation through Mixture Density Distributions, arXiv 16

Alex Graves

Reparameterization Gradients through Acceptance-Rejection Sampling Algorithms. AISTATS 2017

Christian A. Naesseth, Francisco J. R. Ruiz, Scott W. Linderman, David M. Blei

Reparameterizing the Birkhoff Polytope for Variational Permutation Inference. AISTATS 2018

Scott W. Linderman, Gonzalo E. Mena, Hal Cooper, Liam Paninski, John P. Cunningham.

Implicit Reparameterization Gradients. NeurIPS 2018.

Michael Figurnov, Shakir Mohamed, and Andriy Mnih

GANs

Generative Adversarial Networks, NIPS 14

Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio
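For reference, the original GAN is the two-player minimax game below, where D is the discriminator, G the generator, and p_z the noise prior. For a fixed G, the optimal discriminator has the closed form on the second line, with p_g the generator's distribution.

```latex
\min_G \max_D V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

D^{*}(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
```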

Towards principled methods for training generative adversarial networks, ICLR 2017

Martin Arjovsky and Leon Bottou

Wasserstein GAN

Martin Arjovsky, Soumith Chintala, Léon Bottou
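For comparison, WGAN replaces the discriminator with a critic f restricted to 1-Lipschitz functions, which by Kantorovich-Rubinstein duality estimates the Wasserstein-1 distance. The original paper enforces the Lipschitz constraint approximately by clipping the critic's weights.

```latex
\min_G \max_{\|f\|_L \le 1}
  \mathbb{E}_{x \sim p_{\text{data}}}\big[f(x)\big]
  - \mathbb{E}_{z \sim p_z}\big[f(G(z))\big]
```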

Normalizing Flows

Variational Inference with Normalizing Flows, ICML 15

Danilo Jimenez Rezende, Shakir Mohamed
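A normalizing flow tracks density through invertible maps via the change-of-variables formula: log q(f(z)) = log q(z) − log|det ∂f/∂z|. The planar flow from Rezende and Mohamed, f(z) = z + u·h(wᵀz + b), has a cheap log-determinant log|1 + uᵀψ(z)| with ψ(z) = h′(wᵀz + b)·w. A minimal 2-D sketch, assuming h = tanh and toy hand-picked parameters (invertibility for tanh requires wᵀu ≥ −1, which holds here).

```python
import math


def planar_flow(z, u, w, b):
    """One planar flow f(z) = z + u * tanh(w.z + b); returns (f(z), log|det J|)."""
    a = sum(wi * zi for wi, zi in zip(w, z)) + b
    h = math.tanh(a)
    f = [zi + ui * h for zi, ui in zip(z, u)]
    h_prime = 1.0 - h * h  # derivative of tanh at a
    # det(I + u psi^T) = 1 + u^T psi, with psi = h'(a) * w (matrix determinant lemma)
    det = 1.0 + h_prime * sum(ui * wi for ui, wi in zip(u, w))
    return f, math.log(abs(det))


def log_std_normal(z):
    """Log density of N(0, I) at z."""
    return -0.5 * sum(zi * zi + math.log(2 * math.pi) for zi in z)


# Hypothetical parameters; here w.u = 0.22 >= -1, so the map is invertible
z0 = [0.3, -1.2]
u, w, b = [0.5, -0.4], [1.0, 0.7], 0.1
z1, log_det = planar_flow(z0, u, w, b)
log_q1 = log_std_normal(z0) - log_det  # log density of z1 under the flow
print(z1, log_det, log_q1)
```

Stacking K such layers sums their log-determinants, so the final density stays tractable without ever forming a full Jacobian.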

Improved Variational Inference with Inverse Autoregressive Flow

Diederik P Kingma, Tim Salimans, Rafal Jozefowicz, Xi Chen, Ilya Sutskever, Max Welling

Learning About Language with Normalizing Flows

Graham Neubig, CMU, slides

Latent Normalizing Flows for Discrete Sequences. ICML 2019.

Zachary M. Ziegler and Alexander M. Rush

Reflections and Critiques

More papers to be added.

Do Deep Generative Models Know What They Don't Know? ICLR 2019

Eric Nalisnick, Akihiro Matsukawa, Yee Whye Teh, Dilan Gorur, Balaji Lakshminarayanan

More Applications

Discourse and Diversity

Paraphrase Generation with Latent Bag of Words. NeurIPS 2019.

Yao Fu, Yansong Feng, and John P. Cunningham

A Deep Generative Framework for Paraphrase Generation, AAAI 18

Ankush Gupta, Arvind Agarwal, Prawaan Singh, Piyush Rai

Generating Informative and Diverse Conversational Responses via Adversarial Information Maximization, NIPS 18

Yizhe Zhang, Michel Galley, Jianfeng Gao, Zhe Gan, Xiujun Li, Chris Brockett, Bill Dolan

Topic-Aware Language Generation

Discovering Discrete Latent Topics with Neural Variational Inference, ICML 17

Yishu Miao, Edward Grefenstette, Phil Blunsom. Oxford

Topic-Guided Variational Autoencoders for Text Generation, NAACL 19

Wenlin Wang, Zhe Gan, Hongteng Xu, Ruiyi Zhang, Guoyin Wang, Dinghan Shen, Changyou Chen, Lawrence Carin. Duke & MS & Infinia & U Buffalo

TopicRNN: A Recurrent Neural Network with Long-Range Semantic Dependency, ICLR 17

Adji B. Dieng, Chong Wang, Jianfeng Gao, John William Paisley

Topic Compositional Neural Language Model, AISTATS 18

Wenlin Wang, Zhe Gan, Wenqi Wang, Dinghan Shen, Jiaji Huang, Wei Ping, Sanjeev Satheesh, Lawrence Carin

Topic Aware Neural Response Generation, AAAI 17

Chen Xing, Wei Wu, Yu Wu, Jie Liu, Yalou Huang, Ming Zhou, Wei-Ying Ma
