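Overview

Most database software ships with its own high-availability mechanism and demands very high I/O performance, so local disks are the best fit for databases running in a Kubernetes cloud.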

Kubernetes cluster machines, version 1.13

node1 10.16.16.119 master node

node2 10.16.16.120 master node

node3 10.16.16.68

node4 10.16.16.68

Disk information

/data/disks: 10k RPM HDD, present on node1, node2, node3 and node4

/data/fast-disks: SSD, present on node3 and node4 only

All master nodes are also available for workload pods:

kubectl taint nodes --all node-role.kubernetes.io/master-

The network plugin is Weave.

Deploying the HDD provisioner

git clone https://github.com/kubernetes-sigs/sig-storage-local-static-provisioner.git

cd ./sig-storage-local-static-provisioner/

Creating the StorageClass (Kubernetes 1.9+)

View the default definition (note the name):

more provisioner/deployment/kubernetes/example/default_example_storageclass.yaml

# Only create this for K8s 1.9+
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
# Supported policies: Delete, Retain
reclaimPolicy: Delete

kubectl create -f provisioner/deployment/kubernetes/example/default_example_storageclass.yaml

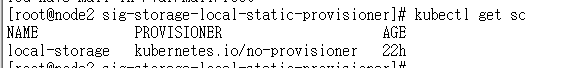

kubectl get sc

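Creating the DaemonSet that manages local storage (Creating local persistent volumes)

Generate the template

helm template ./helm/provisioner > ./provisioner/deployment/kubernetes/provisioner_generated.yaml

Edit the template

Because the DaemonSet sets priorityClassName: system-node-critical, the namespace cannot be default; change it to kube-system.

The keys in storageClassMap must match the name of the StorageClass, and hostDir and mountDir are the directories the provisioner watches. In this example the HDD directory is /data/disks and the SSD directory is /data/fast-disks.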
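The two edits described above can also be scripted instead of made by hand in an editor. The following is a minimal bash sketch under stated assumptions: the generated manifest contains lines of the form `namespace: default`, and the helper names `set_namespace`, `list_host_dirs` and `check_host_dirs` are hypothetical, not part of the provisioner repository.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for editing and sanity-checking the generated manifest.

# Rewrite every "namespace: default" line to the given namespace.
# -i.bak leaves a backup copy next to the edited file.
set_namespace() {
  local file="$1" ns="$2"
  sed -i.bak -E "s/^([[:space:]]*namespace:[[:space:]]*)default[[:space:]]*\$/\1${ns}/" "$file"
}

# Print every hostDir value found in the manifest, one per line.
list_host_dirs() {
  awk '$1 == "hostDir:" { print $2 }' "$1" | sort -u
}

# Report whether each hostDir exists as a directory on the current node.
check_host_dirs() {
  local dir
  list_host_dirs "$1" | while read -r dir; do
    if [ -d "$dir" ]; then
      echo "ok      $dir"
    else
      echo "missing $dir"
    fi
  done
}
```

For example, `set_namespace ./provisioner/deployment/kubernetes/provisioner_generated.yaml kube-system` followed by `check_host_dirs` on the same file, run on each node, gives a quick check before the manifest is created. The sed pattern only touches lines whose value is exactly `default`, so it is worth reviewing the result with a diff against the `.bak` copy.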

vi ./provisioner/deployment/kubernetes/provisioner_generated.yaml

---
# Source: provisioner/templates/provisioner.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: local-provisioner-config
  namespace: kube-system
  labels:
    heritage: "Tiller"
    release: "release-name"
    chart: provisioner-2.3.0
data:
  storageClassMap: |
    local-storage:
      hostDir: /data/disks
      mountDir: /data/disks
      blockCleanerCommand:
        - "/scripts/shred.sh"
        - "2"
      volumeMode: Filesystem
      fsType: ext4
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: local-volume-provisioner
  namespace: kube-system
  labels:
    app: local-volume-provisioner
    heritage: "Tiller"
    release: "release-name"
    chart: provisioner-2.3.0
spec:
  selector:
    matchLabels:
      app: local-volume-provisioner
  template:
    metadata:
      labels:
        app: local-volume-provisioner
    spec:
      serviceAccountName: local-storage-admin
      priorityClassName: system-node-critical
      containers:
        - image: "quay.io/external_storage/local-volume-provisioner:v2.3.0"
          name: provisioner
          securityContext:
            privileged: true
          env:
            - name: MY_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: MY_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: JOB_CONTAINER_IMAGE
              value: "quay.io/external_storage/local-volume-provisioner:v2.3.0"
          volumeMounts:
            - mountPath: /etc/provisioner/config
              name: provisioner-config
              readOnly: true
            - mountPath: /dev
              name: provisioner-dev
            - mountPath: /data/disks/
              name: local-disks
              mountPropagation: "HostToContainer"
      volumes:
        - name: provisioner-config
          configMap:
            name: local-provisioner-config
        - name: provisioner-dev
          hostPath:
            path: /dev
        - name: local-disks
          hostPath:
            path: /data/disks/
---
# Source: provisioner/templates/provisioner-service-account.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-storage-admin
  namespace: kube-system
  labels:
    heritage: "Tiller"
    release: "release-name"
    chart: provisioner-2.3.0
---
# Source: provisioner/templates/provisioner-cluster-role-binding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-storage-provisioner-pv-binding
  labels:
    heritage: "Tiller"
    release: "release-name"
    chart: provisioner-2.3.0
subjects:
  - kind: ServiceAccount
    name: local-storage-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: system:persistent-volume-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-storage-provisioner-node-clusterrole
  labels:
    heritage: "Tiller"
    release: "release-name"
    chart: provisioner-2.3.0
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-storage-provisioner-node-binding
  labels:
    heritage: "Tiller"
    release: "release-name"
    chart: provisioner-2.3.0
subjects:
  - kind: ServiceAccount
    name: local-storage-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: local-storage-provisioner-node-clusterrole
  apiGroup: rbac.authorization.k8s.io
---
# Source: provisioner/templates/namespace.yaml

Once the configuration is complete

Create the resources

kubectl create -f ./provisioner/deployment/kubernetes/provisioner_generated.yaml

Observe the result

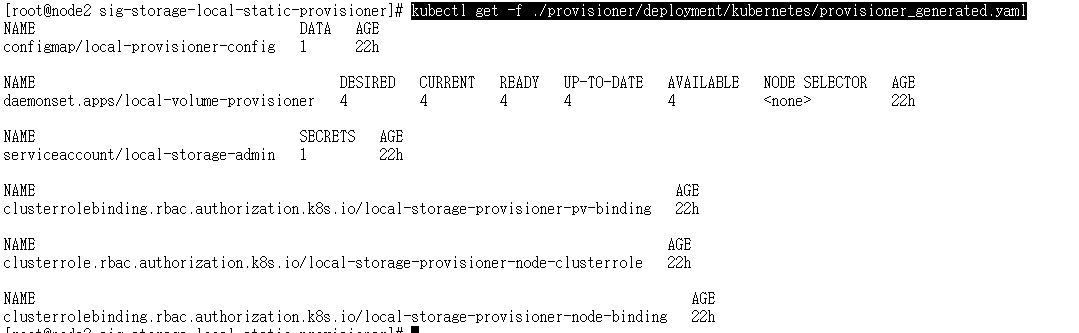

kubectl get -f ./provisioner/deployment/kubernetes/provisioner_generated.yaml
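Beyond listing the created objects, it can help to wait until the DaemonSet has actually rolled out on every node. The sketch below is one way to do that in bash; the function name `wait_for_provisioner` and the `KUBECTL` override variable are assumptions made for illustration, while the namespace, DaemonSet name and `app` label are taken from the manifest above.

```shell
#!/usr/bin/env bash
# KUBECTL can be overridden (for example with "echo kubectl") for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# Wait for the provisioner DaemonSet to finish rolling out, then list its pods.
# Arguments (both optional): namespace, DaemonSet name.
wait_for_provisioner() {
  local ns="${1:-kube-system}" ds="${2:-local-volume-provisioner}"
  # rollout status blocks until every scheduled pod is updated and ready
  $KUBECTL -n "$ns" rollout status "daemonset/$ds" &&
    # the app label comes from the DaemonSet pod template
    $KUBECTL -n "$ns" get pods -l app=local-volume-provisioner -o wide
}
```

Calling `wait_for_provisioner` with no arguments uses the names from this article. With four schedulable nodes one would expect four provisioner pods, one per node.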

Creating the HDD PVs

Run the following on node1 through node4 in turn; size sets the capacity of each volume.

for vol in vol1 vol2 vol3 vol4 vol5 vol6; do
    mkdir -p /data/disks/$vol
    mount -t tmpfs -o size=100g $vol /data/disks/$vol
done
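Note that tmpfs is backed by memory, so the loop above simulates disks rather than using the physical HDD; the provisioner only needs each volume to appear as a mount point under the discovery directory. To review the commands before running them on a node, the loop can be wrapped in a small generator. This is a sketch only, and `gen_tmpfs_mounts` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print (without running) the mkdir/mount commands for tmpfs-backed volumes.
# Arguments: discovery directory, tmpfs size, then one or more volume names.
gen_tmpfs_mounts() {
  local base="$1" size="$2" vol
  shift 2
  for vol in "$@"; do
    printf 'mkdir -p %s/%s\n' "$base" "$vol"
    printf 'mount -t tmpfs -o size=%s %s %s/%s\n' "$size" "$vol" "$base" "$vol"
  done
}
```

For instance, `gen_tmpfs_mounts /data/disks 100g vol1 vol2` prints four commands, which can be inspected and then piped to `sh` as root. The same helper covers the SSD directory by passing `/data/fast-disks` and a different size.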

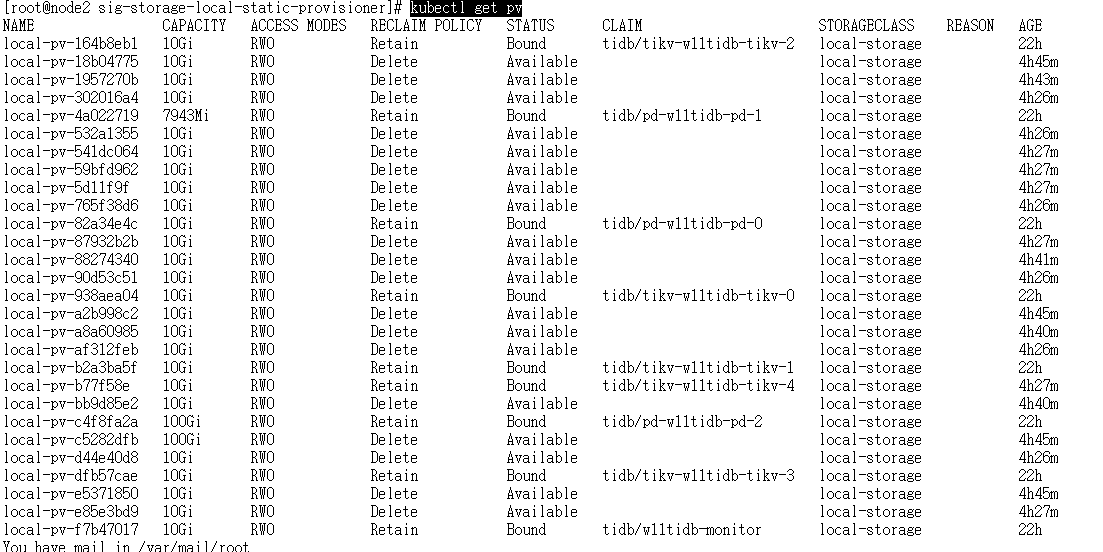

After the loop has run on each node, the PVs have been created:

kubectl get pv

View the details of a PV

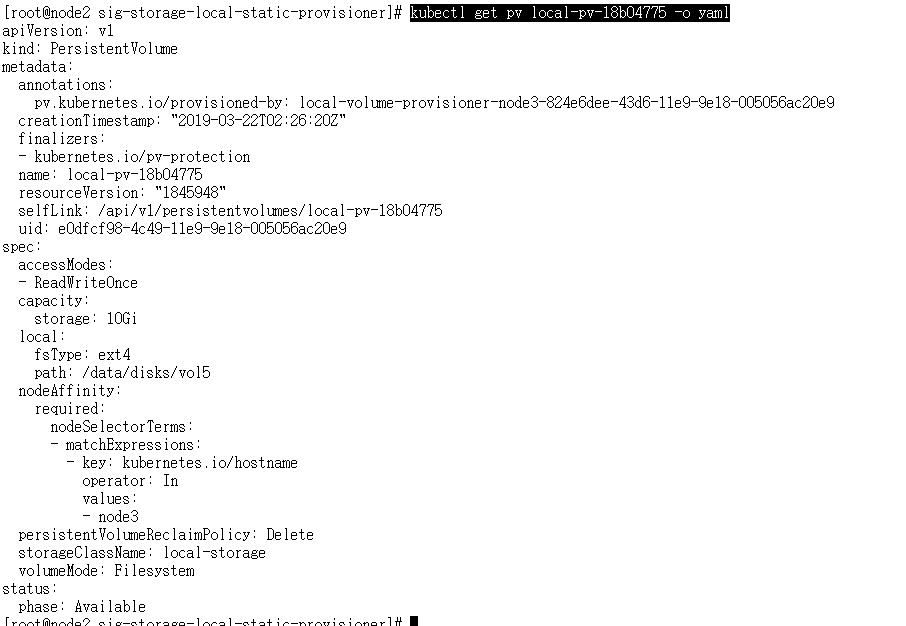

kubectl get pv local-pv-18b04775 -o yaml
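Once PVs exist, a workload consumes one through a PersistentVolumeClaim that names the StorageClass. The bash sketch below prints such a claim; the helper name `pvc_manifest`, the claim name `db-data` and the size `50Gi` are illustrative assumptions, and only the class name `local-storage` comes from this article.

```shell
#!/usr/bin/env bash
# Print a PersistentVolumeClaim manifest for the given StorageClass.
# Arguments: claim name, StorageClass name, requested size (e.g. 50Gi).
pvc_manifest() {
  local name="$1" class="$2" size="$3"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ${class}
  resources:
    requests:
      storage: ${size}
EOF
}
```

A possible invocation is `pvc_manifest db-data local-storage 50Gi | kubectl create -f -`. Because the StorageClass uses volumeBindingMode: WaitForFirstConsumer, the claim stays Pending until a pod that references it is scheduled; only then is a PV on that pod's node bound to it.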

Managing the SSDs with Helm

Label node3 and node4 with an SSD label to mark these machines as having SSDs.

kubectl label nodes node3 disktype.ssd=true

kubectl label nodes node4 disktype.ssd=true

kubectl get nodes --show-labels
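If more SSD nodes are added later, the labeling step can be wrapped so that it is safe to repeat. This is a small bash sketch; `label_ssd_nodes` and the `KUBECTL` override variable are hypothetical names, while the label `disktype.ssd=true` is the one used above.

```shell
#!/usr/bin/env bash
# KUBECTL can be overridden (for example with "echo kubectl") for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# Apply the SSD label to each node given as an argument, then list the
# nodes that carry it.
label_ssd_nodes() {
  local node
  for node in "$@"; do
    # --overwrite makes re-running the command on a labeled node harmless
    $KUBECTL label nodes "$node" disktype.ssd=true --overwrite
  done
  # a label selector is easier to read than scanning --show-labels output
  $KUBECTL get nodes -l disktype.ssd=true
}
```

Running `label_ssd_nodes node3 node4` should end with a listing that contains exactly the nodes the SSD provisioner is meant to run on.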

View the chart's customizable values

helm inspect ./helm/provisioner

The values we need to customize are:

vi w11.config

common:
  namespace: kube-system
  configMapName: "ssd-local-provisioner-config"
classes:
  # mount configuration
  - name: ssd-local-storage
    hostDir: /data/fast-disks
    mountDir: /data/fast-disks
    fsType: ext4
    blockCleanerCommand:
      - "/scripts/shred.sh"
      - "2"
    # whether to create the StorageClass
    storageClass: "true"
    storageClass:
      reclaimPolicy: Delete
daemonset:
  name: "ssd-local-volume-provisioner"
  # node selector: run only on the nodes that have SSDs
  nodeSelector:
    disktype.ssd: "true"
  serviceAccount: ssd-local-storage-admin

helm template ./helm/provisioner -f ./w11.config

Install

helm install --name=ssd-local ./helm/provisioner -f ./w11.config

Check

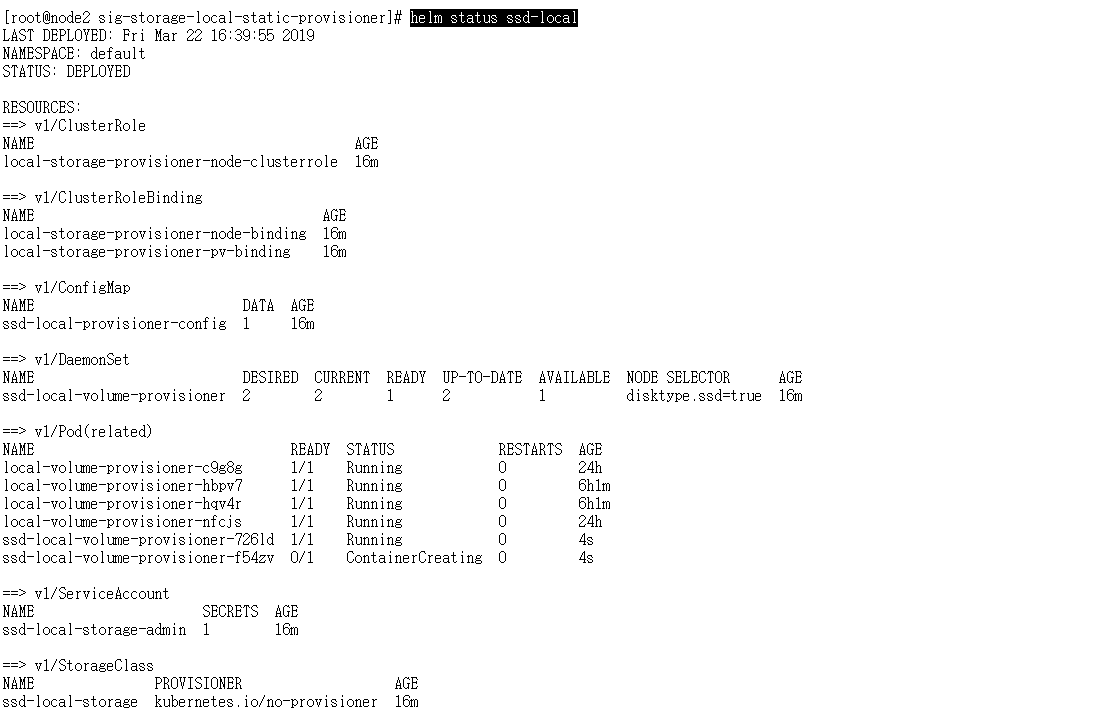

helm status ssd-local

Creating the SSD PVs

Run the following on node3 and node4:

for ssd in ssd1 ssd2 ssd3 ssd4 ssd5 ssd6; do
    mkdir -p /data/fast-disks/$ssd
    mount -t tmpfs -o size=10g $ssd /data/fast-disks/$ssd
done

Check

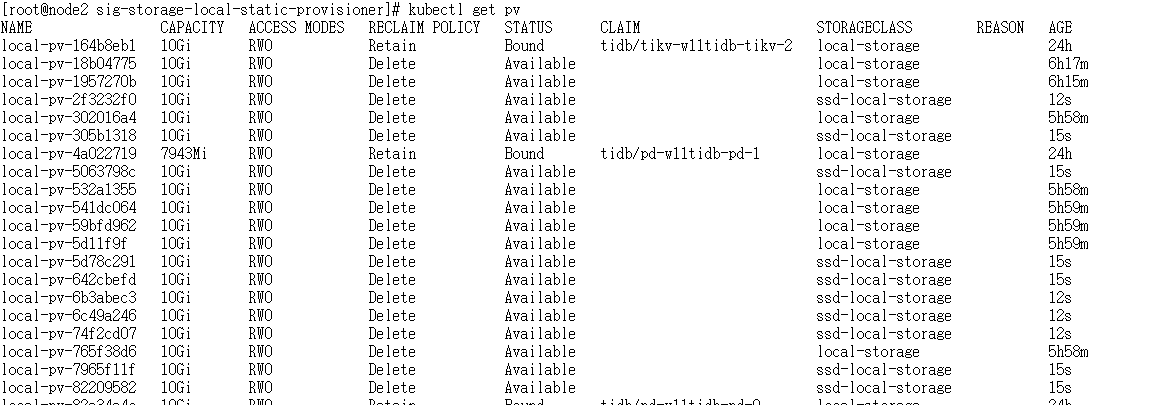

kubectl get pv
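With both provisioners running, the plain `kubectl get pv` listing mixes HDD and SSD volumes. A short summary per StorageClass makes it easier to confirm the expected counts. The bash sketch below assumes its input is three whitespace-separated columns (name, class, phase); `summarize_pvs` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Count PVs per StorageClass and phase.
# Reads "NAME CLASS PHASE" lines on stdin and prints "CLASS PHASE COUNT".
summarize_pvs() {
  awk '{ count[$2 " " $3]++ } END { for (k in count) print k, count[k] }' | sort
}
```

The input can be produced with `kubectl get pv --no-headers -o custom-columns=NAME:.metadata.name,CLASS:.spec.storageClassName,PHASE:.status.phase | summarize_pvs`. With the loops from this article one would expect 24 PVs in local-storage (6 on each of 4 nodes) and 12 in ssd-local-storage (6 on each of 2 nodes).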

Open issues

I/O isolation

Expanding tmpfs volumes