Running the official Pi-calculation demo in various modes on Spark 2.1.1

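1 Submitting a job in single-machine local mode

2 Submitting a job in standalone Spark cluster mode

3 Submitting a job with a Spark cluster + Hadoop cluster

3.1 Running the computation in yarn-client mode

3.1.1 Steps and method

3.1.2 Common errors and fixes

3.1.2.1 Yarn application has already ended!

3.1.2.1.1 Main error message

3.1.2.1.2 Cause of the error

3.1.2.1.3 Solution

3.1.2.2 Required executor memory (1024+384 MB)

3.1.2.2.1 Main error message

3.1.2.2.2 Cause of the error

3.1.2.2.3 Solution

3.2 Running the computation in yarn-cluster mode

3.2.1 Steps and method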

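Keywords: Linux CentOS Hadoop Spark yarn-client yarn-cluster

Versions: CentOS 7, Hadoop 2.8.0, Spark 2.1.1

Note: This article runs the Pi-calculation demo that ships with Spark in single-machine mode (Local mode), standalone Spark cluster mode (Standalone mode), and Spark cluster + Hadoop cluster mode (Yarn-Client mode and Yarn-Cluster mode).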

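1 Submitting a job in single-machine local mode

Local mode runs the program on a single local machine. It needs neither a running Hadoop cluster nor a running Spark cluster; one machine with JDK, Scala, and Spark installed is enough.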
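As a rough sketch of what the local master setting can look like: besides plain `local` (a single worker thread), Spark also accepts `local[N]` for N threads and `local[*]` for one thread per CPU core. The helper name `sparkpi_cmd` is made up for illustration; it only prints the spark-submit command (to be run from the Spark installation directory) so it can be inspected first.

```shell
# Hypothetical helper: print the spark-submit command line for the
# SparkPi example with the given master URL. It does not run Spark.
sparkpi_cmd() {
  printf '%s\n' "./bin/spark-submit --class org.apache.spark.examples.SparkPi --master $1 examples/jars/spark-examples_2.11-2.1.1.jar"
}

sparkpi_cmd 'local'      # one worker thread
sparkpi_cmd 'local[2]'   # two worker threads
sparkpi_cmd 'local[*]'   # one worker thread per CPU core
```

When typing such a command by hand, it is safer to quote the bracketed forms (for example `'local[*]'`) so the shell does not try to expand them as a glob pattern.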

Go to the Spark 2.1.1 installation directory:

cd /opt/spark/spark-2.1.1-bin-hadoop2.7

Run the Pi-calculation demo in local mode:

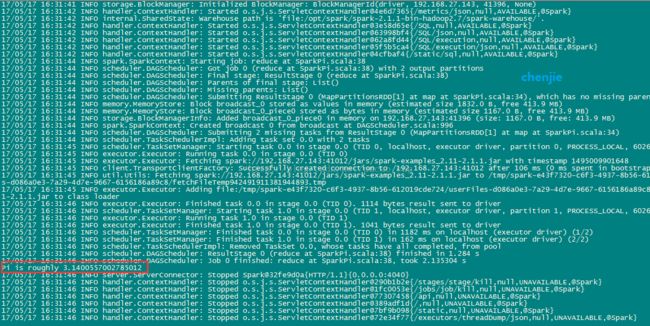

./bin/spark-submit --class org.apache.spark.examples.SparkPi --master local examples/jars/spark-examples_2.11-2.1.1.jar

As shown in the figure:

Wait a few seconds and the calculation completes.
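Most of what spark-submit prints is log output. With Spark's default logging configuration those INFO and WARN lines go to stderr, while the `Pi is roughly` result line is printed to stdout. The following is a small sketch of pulling just the number out of captured output; the function name `extract_pi` is hypothetical, and the two sample lines are copied from the full output listed in this section.

```shell
# Hypothetical helper: read spark-submit output on stdin and print only
# the numeric estimate from the "Pi is roughly ..." line.
extract_pi() {
  sed -n 's/^Pi is roughly \([0-9.][0-9.]*\)$/\1/p'
}

# Sample input: one log line and the result line from the run in this section.
printf '%s\n' \
  '17/05/17 16:31:46 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 2.135304 s' \
  'Pi is roughly 3.1400557002785012' | extract_pi
# prints 3.1400557002785012
```

On a real run, appending `2>/dev/null | extract_pi` to the spark-submit command should give the same kind of result, assuming the logging configuration has not been changed to write to stdout.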

The finished run is shown in the figure:

The full output is:

[root@hserver1 ~]# cd /opt/spark/spark-2.1.1-bin-hadoop2.7

[root@hserver1 spark-2.1.1-bin-hadoop2.7]# ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master local examples/jars/spark-examples_2.11-2.1.1.jar

17/05/17 16:31:38 INFO spark.SparkContext: Running Spark version 2.1.1

17/05/17 16:31:39 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/05/17 16:31:39 INFO spark.SecurityManager: Changing view acls to: root

17/05/17 16:31:39 INFO spark.SecurityManager: Changing modify acls to: root

17/05/17 16:31:39 INFO spark.SecurityManager: Changing view acls groups to:

17/05/17 16:31:39 INFO spark.SecurityManager: Changing modify acls groups to:

17/05/17 16:31:39 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

17/05/17 16:31:40 INFO util.Utils: Successfully started service 'sparkDriver' on port 41012.

17/05/17 16:31:40 INFO spark.SparkEnv: Registering MapOutputTracker

17/05/17 16:31:40 INFO spark.SparkEnv: Registering BlockManagerMaster

17/05/17 16:31:40 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

17/05/17 16:31:40 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

17/05/17 16:31:40 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-48295dbe-32ae-4bdb-a4e2-9fada0294094

17/05/17 16:31:40 INFO memory.MemoryStore: MemoryStore started with capacity 413.9 MB

17/05/17 16:31:40 INFO spark.SparkEnv: Registering OutputCommitCoordinator

17/05/17 16:31:41 INFO util.log: Logging initialized @4545ms

17/05/17 16:31:41 INFO server.Server: jetty-9.2.z-SNAPSHOT

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5118388b{/jobs,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@15a902e7{/jobs/json,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7876d598{/jobs/job,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4a3e3e8b{/jobs/job/json,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5af28b27{/stages,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@71104a4{/stages/json,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4985cbcb{/stages/stage,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@72f46e16{/stages/stage/json,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3c9168dc{/stages/pool,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@332a7fce{/stages/pool/json,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@549621f3{/storage,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@54361a9{/storage/json,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@32232e55{/storage/rdd,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5217f3d0{/storage/rdd/json,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@37ebc9d8{/environment,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@293bb8a5{/environment/json,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2416a51{/executors,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6fa590ba{/executors/json,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6e9319f{/executors/threadDump,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@72e34f77{/executors/threadDump/json,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7bf9b098{/static,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@389adf1d{/,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@77307458{/api,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1fc0053e{/jobs/job/kill,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@290b1b2e{/stages/stage/kill,null,AVAILABLE,@Spark}

17/05/17 16:31:41 INFO server.ServerConnector: Started Spark@32fe9d0a{HTTP/1.1}{0.0.0.0:4040}

17/05/17 16:31:41 INFO server.Server: Started @4957ms

17/05/17 16:31:41 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

17/05/17 16:31:41 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.27.143:4040

17/05/17 16:31:41 INFO spark.SparkContext: Added JAR file:/opt/spark/spark-2.1.1-bin-hadoop2.7/examples/jars/spark-examples_2.11-2.1.1.jar at spark://192.168.27.143:41012/jars/spark-examples_2.11-2.1.1.jar with timestamp 1495009901648

17/05/17 16:31:41 INFO executor.Executor: Starting executor ID driver on host localhost

17/05/17 16:31:41 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 41396.

17/05/17 16:31:41 INFO netty.NettyBlockTransferService: Server created on 192.168.27.143:41396

17/05/17 16:31:41 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

17/05/17 16:31:41 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 192.168.27.143, 41396, None)

17/05/17 16:31:41 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.27.143:41396 with 413.9 MB RAM, BlockManagerId(driver, 192.168.27.143, 41396, None)

17/05/17 16:31:41 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 192.168.27.143, 41396, None)

17/05/17 16:31:41 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, 192.168.27.143, 41396, None)

17/05/17 16:31:42 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4e6d7365{/metrics/json,null,AVAILABLE,@Spark}

17/05/17 16:31:42 INFO internal.SharedState: Warehouse path is 'file:/opt/spark/spark-2.1.1-bin-hadoop2.7/spark-warehouse/'.

17/05/17 16:31:42 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3e58d65e{/SQL,null,AVAILABLE,@Spark}

17/05/17 16:31:42 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@63998bf4{/SQL/json,null,AVAILABLE,@Spark}

17/05/17 16:31:42 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@62a8fd44{/SQL/execution,null,AVAILABLE,@Spark}

17/05/17 16:31:42 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5f5b5ca4{/SQL/execution/json,null,AVAILABLE,@Spark}

17/05/17 16:31:42 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4cfbaf4{/static/sql,null,AVAILABLE,@Spark}

17/05/17 16:31:44 INFO spark.SparkContext: Starting job: reduce at SparkPi.scala:38

17/05/17 16:31:44 INFO scheduler.DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 2 output partitions

17/05/17 16:31:44 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

17/05/17 16:31:44 INFO scheduler.DAGScheduler: Parents of final stage: List()

17/05/17 16:31:44 INFO scheduler.DAGScheduler: Missing parents: List()

17/05/17 16:31:44 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

17/05/17 16:31:44 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1832.0 B, free 413.9 MB)

17/05/17 16:31:44 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1167.0 B, free 413.9 MB)

17/05/17 16:31:44 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.27.143:41396 (size: 1167.0 B, free: 413.9 MB)

17/05/17 16:31:44 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:996

17/05/17 16:31:44 INFO scheduler.DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34)

17/05/17 16:31:44 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

17/05/17 16:31:45 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 6026 bytes)

17/05/17 16:31:45 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0)

17/05/17 16:31:45 INFO executor.Executor: Fetching spark://192.168.27.143:41012/jars/spark-examples_2.11-2.1.1.jar with timestamp 1495009901648

17/05/17 16:31:45 INFO client.TransportClientFactory: Successfully created connection to /192.168.27.143:41012 after 106 ms (0 ms spent in bootstraps)

17/05/17 16:31:45 INFO util.Utils: Fetching spark://192.168.27.143:41012/jars/spark-examples_2.11-2.1.1.jar to /tmp/spark-e43f7320-c6f3-4937-8b56-612019cde724/userFiles-d086a0e3-7a29-4d7e-9667-6156186a89c8/fetchFileTemp942491911381944893.tmp

17/05/17 16:31:45 INFO executor.Executor: Adding file:/tmp/spark-e43f7320-c6f3-4937-8b56-612019cde724/userFiles-d086a0e3-7a29-4d7e-9667-6156186a89c8/spark-examples_2.11-2.1.1.jar to class loader

17/05/17 16:31:46 INFO executor.Executor: Finished task 0.0 in stage 0.0 (TID 0). 1114 bytes result sent to driver

17/05/17 16:31:46 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, executor driver, partition 1, PROCESS_LOCAL, 6026 bytes)

17/05/17 16:31:46 INFO executor.Executor: Running task 1.0 in stage 0.0 (TID 1)

17/05/17 16:31:46 INFO executor.Executor: Finished task 1.0 in stage 0.0 (TID 1). 1041 bytes result sent to driver

17/05/17 16:31:46 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 1182 ms on localhost (executor driver) (1/2)

17/05/17 16:31:46 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 162 ms on localhost (executor driver) (2/2)

17/05/17 16:31:46 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

17/05/17 16:31:46 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 1.284 s

17/05/17 16:31:46 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 2.135304 s

Pi is roughly 3.1400557002785012

17/05/17 16:31:46 INFO server.ServerConnector: Stopped Spark@32fe9d0a{HTTP/1.1}{0.0.0.0:4040}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@290b1b2e{/stages/stage/kill,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1fc0053e{/jobs/job/kill,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@77307458{/api,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@389adf1d{/,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@7bf9b098{/static,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@72e34f77{/executors/threadDump/json,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6e9319f{/executors/threadDump,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6fa590ba{/executors/json,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@2416a51{/executors,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@293bb8a5{/environment/json,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@37ebc9d8{/environment,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5217f3d0{/storage/rdd/json,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@32232e55{/storage/rdd,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@54361a9{/storage/json,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@549621f3{/storage,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@332a7fce{/stages/pool/json,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@3c9168dc{/stages/pool,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@72f46e16{/stages/stage/json,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4985cbcb{/stages/stage,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@71104a4{/stages/json,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5af28b27{/stages,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4a3e3e8b{/jobs/job/json,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@7876d598{/jobs/job,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@15a902e7{/jobs/json,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5118388b{/jobs,null,UNAVAILABLE,@Spark}

17/05/17 16:31:46 INFO ui.SparkUI: Stopped Spark web UI at http://192.168.27.143:4040

17/05/17 16:31:46 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

17/05/17 16:31:46 INFO memory.MemoryStore: MemoryStore cleared

17/05/17 16:31:46 INFO storage.BlockManager: BlockManager stopped

17/05/17 16:31:46 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

17/05/17 16:31:46 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

17/05/17 16:31:46 INFO spark.SparkContext: Successfully stopped SparkContext

17/05/17 16:31:46 INFO util.ShutdownHookManager: Shutdown hook called

17/05/17 16:31:46 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-e43f7320-c6f3-4937-8b56-612019cde724

[root@hserver1 spark-2.1.1-bin-hadoop2.7]#

2 Submitting a job in standalone Spark cluster mode

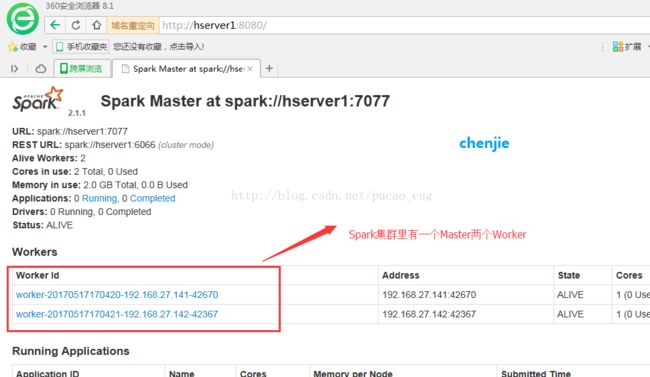

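This is Standalone mode. Submitting a job to a standalone Spark cluster requires starting the Spark cluster first, but the Hadoop cluster does not need to be running. To start the Spark cluster, go to the $SPARK_HOME/sbin directory and run the start-all.sh script. Once it has started, open the following address to check that it is up:

http://<IP of the Spark master machine>:8080/

As shown in the figure:

Note: once the Spark cluster has started successfully, run the commands below.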
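Two addresses matter in this mode: the master web UI on port 8080 and the master URL on port 7077 that is passed to `--master`. The sketch below derives both from the master host, assuming Spark's default standalone ports. The function names are hypothetical, and the host is the master IP that appears in this article's commands.

```shell
# Hypothetical helpers: build the standalone master URL and the web UI
# address from a host, assuming the default ports 7077 and 8080.
master_url() { printf 'spark://%s:7077\n' "$1"; }
master_ui()  { printf 'http://%s:8080/\n' "$1"; }

MASTER_HOST="192.168.27.143"   # master IP used in this article
master_url "$MASTER_HOST"      # value to pass to --master
master_ui  "$MASTER_HOST"      # address to open in a browser

# Optional check, attempted only when curl is installed: report whether
# the master web UI answers within 3 seconds.
if command -v curl >/dev/null 2>&1; then
  if curl -s -o /dev/null --max-time 3 "$(master_ui "$MASTER_HOST")"; then
    echo "master UI reachable"
  else
    echo "master UI not reachable"
  fi
fi
```

If the ports were changed in the cluster's configuration, the two helpers would need the configured values instead of the defaults.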

Go to the Spark 2.1.1 installation directory:

cd /opt/spark/spark-2.1.1-bin-hadoop2.7

Run the Pi-calculation demo in Standalone mode:

./bin/spark-submit --class org.apache.spark.examples.SparkPi --master spark://192.168.27.143:7077 examples/jars/spark-examples_2.11-2.1.1.jar

As shown in the figure:

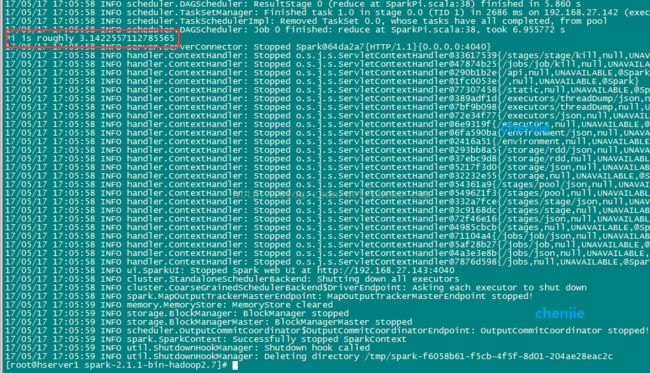

Wait a few seconds and it is done.

The finished run is shown in the figure:

The full output is:

[root@hserver1 sbin]# cd /opt/spark/spark-2.1.1-bin-hadoop2.7

[root@hserver1 spark-2.1.1-bin-hadoop2.7]# ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master spark://192.168.27.143:7077 examples/jars/spark-examples_2.11-2.1.1.jar

17/05/17 17:05:44 INFO spark.SparkContext: Running Spark version 2.1.1

17/05/17 17:05:45 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/05/17 17:05:45 INFO spark.SecurityManager: Changing view acls to: root

17/05/17 17:05:45 INFO spark.SecurityManager: Changing modify acls to: root

17/05/17 17:05:45 INFO spark.SecurityManager: Changing view acls groups to:

17/05/17 17:05:45 INFO spark.SecurityManager: Changing modify acls groups to:

17/05/17 17:05:45 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

17/05/17 17:05:46 INFO util.Utils: Successfully started service 'sparkDriver' on port 39853.

17/05/17 17:05:46 INFO spark.SparkEnv: Registering MapOutputTracker

17/05/17 17:05:46 INFO spark.SparkEnv: Registering BlockManagerMaster

17/05/17 17:05:46 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

17/05/17 17:05:46 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

17/05/17 17:05:46 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-50829b10-3ea0-4feb-b495-c23ada2aa90e

17/05/17 17:05:46 INFO memory.MemoryStore: MemoryStore started with capacity 413.9 MB

17/05/17 17:05:46 INFO spark.SparkEnv: Registering OutputCommitCoordinator

17/05/17 17:05:47 INFO util.log: Logging initialized @4733ms

17/05/17 17:05:47 INFO server.Server: jetty-9.2.z-SNAPSHOT

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7876d598{/jobs,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4a3e3e8b{/jobs/json,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5af28b27{/jobs/job,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@71104a4{/jobs/job/json,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4985cbcb{/stages,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@72f46e16{/stages/json,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3c9168dc{/stages/stage,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@332a7fce{/stages/stage/json,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@549621f3{/stages/pool,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@54361a9{/stages/pool/json,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@32232e55{/storage,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5217f3d0{/storage/json,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@37ebc9d8{/storage/rdd,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@293bb8a5{/storage/rdd/json,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2416a51{/environment,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6fa590ba{/environment/json,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6e9319f{/executors,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@72e34f77{/executors/json,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7bf9b098{/executors/threadDump,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@389adf1d{/executors/threadDump/json,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@77307458{/static,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1fc0053e{/,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@290b1b2e{/api,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@47874b25{/jobs/job/kill,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@33617539{/stages/stage/kill,null,AVAILABLE,@Spark}

17/05/17 17:05:47 INFO server.ServerConnector: Started Spark@64da2a7{HTTP/1.1}{0.0.0.0:4040}

17/05/17 17:05:47 INFO server.Server: Started @5201ms

17/05/17 17:05:47 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

17/05/17 17:05:47 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.27.143:4040

17/05/17 17:05:47 INFO spark.SparkContext: Added JAR file:/opt/spark/spark-2.1.1-bin-hadoop2.7/examples/jars/spark-examples_2.11-2.1.1.jar at spark://192.168.27.143:39853/jars/spark-examples_2.11-2.1.1.jar with timestamp 1495011947714

17/05/17 17:05:47 INFO client.StandaloneAppClient$ClientEndpoint: Connecting to master spark://192.168.27.143:7077...

17/05/17 17:05:48 INFO client.TransportClientFactory: Successfully created connection to /192.168.27.143:7077 after 73 ms (0 ms spent in bootstraps)

17/05/17 17:05:48 INFO cluster.StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20170517170548-0000

17/05/17 17:05:48 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 42511.

17/05/17 17:05:48 INFO netty.NettyBlockTransferService: Server created on 192.168.27.143:42511

17/05/17 17:05:48 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

17/05/17 17:05:48 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 192.168.27.143, 42511, None)

17/05/17 17:05:48 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.27.143:42511 with 413.9 MB RAM, BlockManagerId(driver, 192.168.27.143, 42511, None)

17/05/17 17:05:48 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 192.168.27.143, 42511, None)

17/05/17 17:05:48 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, 192.168.27.143, 42511, None)

17/05/17 17:05:48 INFO client.StandaloneAppClient$ClientEndpoint: Executor added: app-20170517170548-0000/0 on worker-20170517170420-192.168.27.141-42670 (192.168.27.141:42670) with 1 cores

17/05/17 17:05:48 INFO cluster.StandaloneSchedulerBackend: Granted executor ID app-20170517170548-0000/0 on hostPort 192.168.27.141:42670 with 1 cores, 1024.0 MB RAM

17/05/17 17:05:48 INFO client.StandaloneAppClient$ClientEndpoint: Executor added: app-20170517170548-0000/1 on worker-20170517170421-192.168.27.142-42367 (192.168.27.142:42367) with 1 cores

17/05/17 17:05:48 INFO cluster.StandaloneSchedulerBackend: Granted executor ID app-20170517170548-0000/1 on hostPort 192.168.27.142:42367 with 1 cores, 1024.0 MB RAM

17/05/17 17:05:48 INFO client.StandaloneAppClient$ClientEndpoint: Executor updated: app-20170517170548-0000/0 is now RUNNING

17/05/17 17:05:48 INFO client.StandaloneAppClient$ClientEndpoint: Executor updated: app-20170517170548-0000/1 is now RUNNING

17/05/17 17:05:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6974a715{/metrics/json,null,AVAILABLE,@Spark}

17/05/17 17:05:49 INFO cluster.StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

17/05/17 17:05:49 INFO internal.SharedState: Warehouse path is 'file:/opt/spark/spark-2.1.1-bin-hadoop2.7/spark-warehouse/'.

17/05/17 17:05:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2228db21{/SQL,null,AVAILABLE,@Spark}

17/05/17 17:05:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@241a0c3a{/SQL/json,null,AVAILABLE,@Spark}

17/05/17 17:05:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3f4f9acd{/SQL/execution,null,AVAILABLE,@Spark}

17/05/17 17:05:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4bf324f9{/SQL/execution/json,null,AVAILABLE,@Spark}

17/05/17 17:05:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5ffc5491{/static/sql,null,AVAILABLE,@Spark}

17/05/17 17:05:51 INFO spark.SparkContext: Starting job: reduce at SparkPi.scala:38

17/05/17 17:05:51 INFO scheduler.DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 2 output partitions

17/05/17 17:05:51 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

17/05/17 17:05:51 INFO scheduler.DAGScheduler: Parents of final stage: List()

17/05/17 17:05:51 INFO scheduler.DAGScheduler: Missing parents: List()

17/05/17 17:05:52 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

17/05/17 17:05:52 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1832.0 B, free 413.9 MB)

17/05/17 17:05:52 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1167.0 B, free 413.9 MB)

17/05/17 17:05:52 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.27.143:42511 (size: 1167.0 B, free: 413.9 MB)

17/05/17 17:05:52 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:996

17/05/17 17:05:52 INFO scheduler.DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34)

17/05/17 17:05:52 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

17/05/17 17:05:55 INFO cluster.CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(null) (192.168.27.141:49512) with ID 0

17/05/17 17:05:55 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, 192.168.27.141, executor 0, partition 0, PROCESS_LOCAL, 6030 bytes)

17/05/17 17:05:55 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.27.141:38597 with 413.9 MB RAM, BlockManagerId(0, 192.168.27.141, 38597, None)

17/05/17 17:05:56 INFO cluster.CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(null) (192.168.27.142:42822) with ID 1

17/05/17 17:05:56 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, 192.168.27.142, executor 1, partition 1, PROCESS_LOCAL, 6030 bytes)

17/05/17 17:05:56 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.27.142:36281 with 413.9 MB RAM, BlockManagerId(1, 192.168.27.142, 36281, None)

17/05/17 17:05:57 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.27.141:38597 (size: 1167.0 B, free: 413.9 MB)

17/05/17 17:05:57 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 2273 ms on 192.168.27.141 (executor 0) (1/2)

17/05/17 17:05:58 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.27.142:36281 (size: 1167.0 B, free: 413.9 MB)

17/05/17 17:05:58 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 5.860 s

17/05/17 17:05:58 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 2686 ms on 192.168.27.142 (executor 1) (2/2)

17/05/17 17:05:58 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

17/05/17 17:05:58 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 6.955772 s

Pi is roughly 3.1422557112785565

17/05/17 17:05:58 INFO server.ServerConnector: Stopped Spark@64da2a7{HTTP/1.1}{0.0.0.0:4040}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@33617539{/stages/stage/kill,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@47874b25{/jobs/job/kill,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@290b1b2e{/api,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1fc0053e{/,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@77307458{/static,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@389adf1d{/executors/threadDump/json,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@7bf9b098{/executors/threadDump,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@72e34f77{/executors/json,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6e9319f{/executors,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6fa590ba{/environment/json,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@2416a51{/environment,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@293bb8a5{/storage/rdd/json,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@37ebc9d8{/storage/rdd,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5217f3d0{/storage/json,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@32232e55{/storage,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@54361a9{/stages/pool/json,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@549621f3{/stages/pool,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@332a7fce{/stages/stage/json,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@3c9168dc{/stages/stage,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@72f46e16{/stages/json,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4985cbcb{/stages,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@71104a4{/jobs/job/json,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5af28b27{/jobs/job,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4a3e3e8b{/jobs/json,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@7876d598{/jobs,null,UNAVAILABLE,@Spark}

17/05/17 17:05:58 INFO ui.SparkUI: Stopped Spark web UI at http://192.168.27.143:4040

17/05/17 17:05:58 INFO cluster.StandaloneSchedulerBackend: Shuttingdown all executors

17/05/17 17:05:58 INFO cluster.CoarseGrainedSchedulerBackend$DriverEndpoint:Asking each executor to shut down

17/05/17 17:05:58 INFO spark.MapOutputTrackerMasterEndpoint:MapOutputTrackerMasterEndpoint stopped!

17/05/17 17:05:59 INFO memory.MemoryStore: MemoryStore cleared

17/05/17 17:05:59 INFO storage.BlockManager: BlockManager stopped

17/05/17 17:05:59 INFO storage.BlockManagerMaster:BlockManagerMaster stopped

17/05/17 17:05:59 INFOscheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint:OutputCommitCoordinator stopped!

17/05/17 17:05:59 INFO spark.SparkContext: Successfully stoppedSparkContext

17/05/17 17:05:59 INFO util.ShutdownHookManager: Shutdown hookcalled

17/05/17 17:05:59 INFO util.ShutdownHookManager: Deleting directory/tmp/spark-f6058b61-f5cb-4f5f-8d01-204ae28eac2c

[root@hserver1 spark-2.1.1-bin-hadoop2.7]#

3 Submitting the job with a Spark cluster + Hadoop cluster

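This mode is also called On-Yarn mode and comes in two variants: yarn-client and yarn-cluster. To submit a job in this mode, first start the Hadoop cluster, then start the Spark cluster.

How to start the Hadoop cluster is not covered here; if needed, see this blog post:

http://blog.csdn.net/pucao_cug/article/details/71698903

Once the Hadoop cluster is up, start the Spark cluster as described in Chapter 2 of this article.

For installing the Spark cluster, see this blog post if needed:

http://blog.csdn.net/pucao_cug/article/details/72353701

Once both the Hadoop cluster and the Spark cluster have started successfully, continue with the steps below.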
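Before continuing, it can help to confirm that the expected daemons are really running. The sketch below is not part of the original walkthrough: `missing_daemons` is a hypothetical helper that takes the text printed by the JDK's `jps` tool and reports which expected process names are absent. The names checked in the usage line (NameNode, ResourceManager, Master) are an assumption about what runs on the master node hserver1; adjust them to your own layout.

```shell
# missing_daemons JPS_OUTPUT NAME...
# Hypothetical helper: prints every expected daemon name that does not
# appear in the given jps output (one "<pid> <MainClass>" pair per line).
missing_daemons() {
  jps_output="$1"
  shift
  for name in "$@"; do
    # Match the second column exactly so "Master" does not match "HMaster".
    if ! printf '%s\n' "$jps_output" |
        awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "$name"
    fi
  done
}

# Usage on the master node (the daemon list is an assumption):
#   missing_daemons "$(jps)" NameNode ResourceManager Master
```

If the call prints nothing, every listed daemon was found; any printed name is a service that still needs to be started.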

3.1 Running the computation in yarn-client mode

3.1.1 Steps

进入到Spark2.1.1的安装目录,命令是:

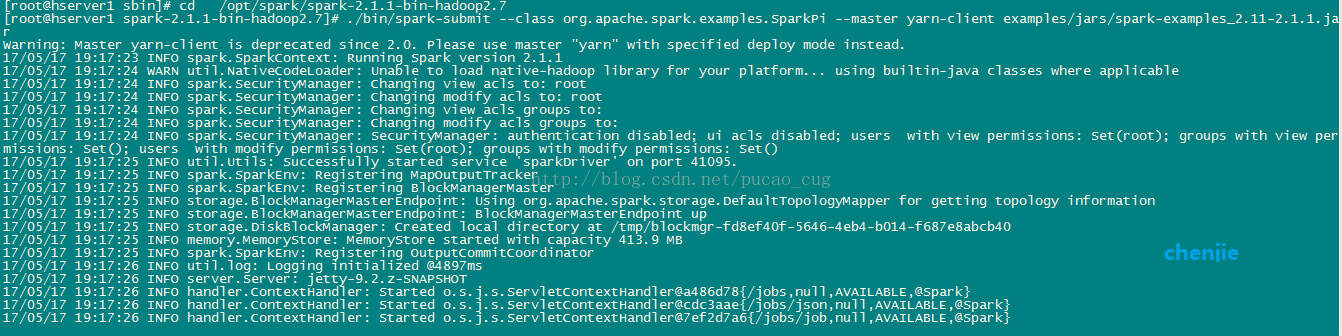

cd /opt/spark/spark-2.1.1-bin-hadoop2.7执行命令,用yarn-client模式运行计算圆周率的Demo:

./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn-client examples/jars/spark-examples_2.11-2.1.1.jar如图:
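The output of this command includes the warning `Master yarn-client is deprecated since 2.0. Please use master "yarn" with specified deploy mode instead.` A minimal sketch of the equivalent non-deprecated invocation follows, using spark-submit's `--master yarn` and `--deploy-mode client` options. The variable names `SPARK_HOME_DIR`, `EXAMPLES_JAR` and `SUBMIT_CMD` are only illustrative; the paths are the ones used in this article.

```shell
# Paths taken from this article; adjust them for your installation.
SPARK_HOME_DIR=/opt/spark/spark-2.1.1-bin-hadoop2.7
EXAMPLES_JAR=examples/jars/spark-examples_2.11-2.1.1.jar

# Same SparkPi submission, with "--master yarn --deploy-mode client"
# replacing the deprecated "--master yarn-client".
SUBMIT_CMD="./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode client $EXAMPLES_JAR"

# Print the command for inspection instead of running it right away.
echo "cd $SPARK_HOME_DIR && $SUBMIT_CMD"

# To actually submit the job:
#   cd "$SPARK_HOME_DIR" && $SUBMIT_CMD
```

The rest of this section keeps the original `yarn-client` form so that the commands match the captured logs.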

稍等几秒后,即可完成计算

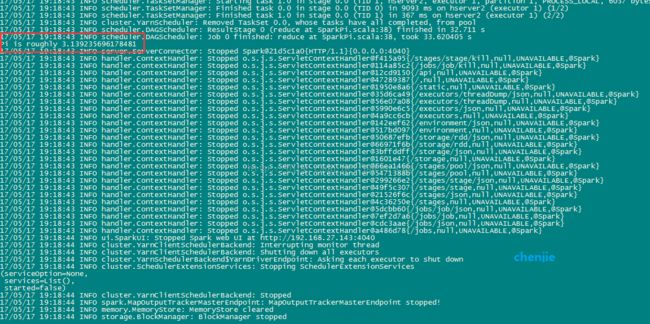

如图:
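The result is a single line of the form `Pi is roughly ...` buried in a long stream of log messages. As a small convenience that is not part of the original article, the hypothetical helper `extract_pi` below filters that line out of whatever text it reads on standard input.

```shell
# extract_pi: hypothetical helper that reads spark-submit output on stdin
# and prints only the number from the "Pi is roughly <number>" line.
extract_pi() {
  sed -n 's/^Pi is roughly \(.*\)$/\1/p'
}

# Usage (merges stderr into stdout so the filter sees everything):
#   ./bin/spark-submit --class org.apache.spark.examples.SparkPi \
#     --master yarn-client examples/jars/spark-examples_2.11-2.1.1.jar 2>&1 | extract_pi
```

Because the filter swallows every other line, drop it again when troubleshooting a failed submission so that the log messages and any stack trace stay visible.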

The complete output is:

[root@hserver1 sbin]# cd /opt/spark/spark-2.1.1-bin-hadoop2.7

[root@hserver1 spark-2.1.1-bin-hadoop2.7]# ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn-client examples/jars/spark-examples_2.11-2.1.1.jar

Warning: Master yarn-client is deprecated since 2.0. Please use master "yarn" with specified deploy mode instead.

17/05/17 19:17:23 INFO spark.SparkContext: Running Spark version 2.1.1

17/05/17 19:17:24 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/05/17 19:17:24 INFO spark.SecurityManager: Changing view acls to: root

17/05/17 19:17:24 INFO spark.SecurityManager: Changing modify acls to: root

17/05/17 19:17:24 INFO spark.SecurityManager: Changing view acls groups to:

17/05/17 19:17:24 INFO spark.SecurityManager: Changing modify acls groups to:

17/05/17 19:17:24 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

17/05/17 19:17:25 INFO util.Utils: Successfully started service 'sparkDriver' on port 41095.

17/05/17 19:17:25 INFO spark.SparkEnv: Registering MapOutputTracker

17/05/17 19:17:25 INFO spark.SparkEnv: Registering BlockManagerMaster

17/05/17 19:17:25 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

17/05/17 19:17:25 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

17/05/17 19:17:25 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-fd8ef40f-5646-4eb4-b014-f687e8abcb40

17/05/17 19:17:25 INFO memory.MemoryStore: MemoryStore started with capacity 413.9 MB

17/05/17 19:17:25 INFO spark.SparkEnv: Registering OutputCommitCoordinator

17/05/17 19:17:26 INFO util.log: Logging initialized @4897ms

17/05/17 19:17:26 INFO server.Server: jetty-9.2.z-SNAPSHOT

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@a486d78{/jobs,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@cdc3aae{/jobs/json,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7ef2d7a6{/jobs/job,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5dcbb60{/jobs/job/json,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4c36250e{/stages,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@21526f6c{/stages/json,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@49f5c307{/stages/stage,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@299266e2{/stages/stage/json,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5471388b{/stages/pool,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@66ea1466{/stages/pool/json,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1601e47{/storage,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3bffddff{/storage/json,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@66971f6b{/storage/rdd,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@50687efb{/storage/rdd/json,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@517bd097{/environment,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@142eef62{/environment/json,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4a9cc6cb{/executors,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5990e6c5{/executors/json,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@56e07a08{/executors/threadDump,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@35d6ca49{/executors/threadDump/json,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1950e8a6{/static,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@47289387{/,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@12cd9150{/api,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@114a85c2{/jobs/job/kill,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@f415a95{/stages/stage/kill,null,AVAILABLE,@Spark}

17/05/17 19:17:26 INFO server.ServerConnector: Started Spark@21d5c1a0{HTTP/1.1}{0.0.0.0:4040}

17/05/17 19:17:26 INFO server.Server: Started @5240ms

17/05/17 19:17:26 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

17/05/17 19:17:26 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.27.143:4040

17/05/17 19:17:26 INFO spark.SparkContext: Added JAR file:/opt/spark/spark-2.1.1-bin-hadoop2.7/examples/jars/spark-examples_2.11-2.1.1.jar at spark://192.168.27.143:41095/jars/spark-examples_2.11-2.1.1.jar with timestamp 1495019846524

17/05/17 19:17:28 INFO client.RMProxy: Connecting to ResourceManager at hserver1/192.168.27.143:8032

17/05/17 19:17:29 INFO yarn.Client: Requesting a new application from cluster with 2 NodeManagers

17/05/17 19:17:29 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (2048 MB per container)

17/05/17 19:17:29 INFO yarn.Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

17/05/17 19:17:29 INFO yarn.Client: Setting up container launch context for our AM

17/05/17 19:17:29 INFO yarn.Client: Setting up the launch environment for our AM container

17/05/17 19:17:29 INFO yarn.Client: Preparing resources for our AM container

17/05/17 19:17:33 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

17/05/17 19:17:39 INFO yarn.Client: Uploading resource file:/tmp/spark-73613bca-fc94-455a-a152-d9b38f3940fd/__spark_libs__4346568075628334835.zip -> hdfs://hserver1:9000/user/root/.sparkStaging/application_1495019556890_0001/__spark_libs__4346568075628334835.zip

17/05/17 19:17:47 INFO yarn.Client: Uploading resource file:/tmp/spark-73613bca-fc94-455a-a152-d9b38f3940fd/__spark_conf__4541417269674378465.zip -> hdfs://hserver1:9000/user/root/.sparkStaging/application_1495019556890_0001/__spark_conf__.zip

17/05/17 19:17:47 INFO spark.SecurityManager: Changing view acls to: root

17/05/17 19:17:47 INFO spark.SecurityManager: Changing modify acls to: root

17/05/17 19:17:47 INFO spark.SecurityManager: Changing view acls groups to:

17/05/17 19:17:47 INFO spark.SecurityManager: Changing modify acls groups to:

17/05/17 19:17:47 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

17/05/17 19:17:47 INFO yarn.Client: Submitting application application_1495019556890_0001 to ResourceManager

17/05/17 19:17:47 INFO impl.YarnClientImpl: Submitted application application_1495019556890_0001

17/05/17 19:17:47 INFO cluster.SchedulerExtensionServices: Starting Yarn extension services with app application_1495019556890_0001 and attemptId None

17/05/17 19:17:49 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:17:49 INFO yarn.Client:

client token: N/A

diagnostics: [Wed May 17 19:17:48 +0800 2017] Scheduler has assigned a container for AM, waiting for AM container to be launched

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1495019867505

final status: UNDEFINED

tracking URL: http://hserver1:8088/proxy/application_1495019556890_0001/

user: root

17/05/17 19:17:50 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:17:51 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:17:52 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:17:53 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:17:54 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:17:55 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:17:56 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:17:57 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:17:58 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:17:59 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:18:00 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:18:01 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:18:02 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:18:03 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:18:04 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:18:05 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:18:06 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:18:06 INFO cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as NettyRpcEndpointRef(null)

17/05/17 19:18:07 INFO cluster.YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> hserver1, PROXY_URI_BASES -> http://hserver1:8088/proxy/application_1495019556890_0001), /proxy/application_1495019556890_0001

17/05/17 19:18:07 INFO ui.JettyUtils: Adding filter: org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter

17/05/17 19:18:07 INFO yarn.Client: Application report for application_1495019556890_0001 (state: ACCEPTED)

17/05/17 19:18:08 INFO yarn.Client: Application report for application_1495019556890_0001 (state: RUNNING)

17/05/17 19:18:08 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 192.168.27.142

ApplicationMaster RPC port: 0

queue: default

start time: 1495019867505

final status: UNDEFINED

tracking URL: http://hserver1:8088/proxy/application_1495019556890_0001/

user: root

17/05/17 19:18:08 INFO cluster.YarnClientSchedulerBackend: Application application_1495019556890_0001 has started running.

17/05/17 19:18:08 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 40716.

17/05/17 19:18:08 INFO netty.NettyBlockTransferService: Server created on 192.168.27.143:40716

17/05/17 19:18:08 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

17/05/17 19:18:08 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 192.168.27.143, 40716, None)

17/05/17 19:18:08 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.27.143:40716 with 413.9 MB RAM, BlockManagerId(driver, 192.168.27.143, 40716, None)

17/05/17 19:18:08 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 192.168.27.143, 40716, None)

17/05/17 19:18:08 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, 192.168.27.143, 40716, None)

17/05/17 19:18:08 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@27960b1e{/metrics/json,null,AVAILABLE,@Spark}

17/05/17 19:18:08 INFO cluster.YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after waiting maxRegisteredResourcesWaitingTime: 30000(ms)

17/05/17 19:18:09 INFO internal.SharedState: Warehouse path is 'file:/opt/spark/spark-2.1.1-bin-hadoop2.7/spark-warehouse/'.

17/05/17 19:18:09 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2fc49538{/SQL,null,AVAILABLE,@Spark}

17/05/17 19:18:09 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@d5e575c{/SQL/json,null,AVAILABLE,@Spark}

17/05/17 19:18:09 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1cd6b1bd{/SQL/execution,null,AVAILABLE,@Spark}

17/05/17 19:18:09 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3b41e1bf{/SQL/execution/json,null,AVAILABLE,@Spark}

17/05/17 19:18:09 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@67e0fd6d{/static/sql,null,AVAILABLE,@Spark}

17/05/17 19:18:10 INFO spark.SparkContext: Starting job: reduce at SparkPi.scala:38

17/05/17 19:18:10 INFO scheduler.DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 2 output partitions

17/05/17 19:18:10 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

17/05/17 19:18:10 INFO scheduler.DAGScheduler: Parents of final stage: List()

17/05/17 19:18:10 INFO scheduler.DAGScheduler: Missing parents: List()

17/05/17 19:18:10 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

17/05/17 19:18:10 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1832.0 B, free 413.9 MB)

17/05/17 19:18:11 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1167.0 B, free 413.9 MB)

17/05/17 19:18:11 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.27.143:40716 (size: 1167.0 B, free: 413.9 MB)

17/05/17 19:18:11 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:996

17/05/17 19:18:11 INFO scheduler.DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34)

17/05/17 19:18:11 INFO cluster.YarnScheduler: Adding task set 0.0 with 2 tasks

17/05/17 19:18:26 WARN cluster.YarnScheduler: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

17/05/17 19:18:34 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(null) (192.168.27.141:44490) with ID 1

17/05/17 19:18:34 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, hserver2, executor 1, partition 0, PROCESS_LOCAL, 6037 bytes)

17/05/17 19:18:35 INFO storage.BlockManagerMasterEndpoint: Registering block manager hserver2:32930 with 413.9 MB RAM, BlockManagerId(1, hserver2, 32930, None)

17/05/17 19:18:40 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hserver2:32930 (size: 1167.0 B, free: 413.9 MB)

17/05/17 19:18:43 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, hserver2, executor 1, partition 1, PROCESS_LOCAL, 6037 bytes)

17/05/17 19:18:43 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 9093 ms on hserver2 (executor 1) (1/2)

17/05/17 19:18:43 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 367 ms on hserver2 (executor 1) (2/2)

17/05/17 19:18:43 INFO cluster.YarnScheduler: Removed TaskSet 0.0, whose tasks have all completed, from pool

17/05/17 19:18:43 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 32.711 s

17/05/17 19:18:43 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 33.620405 s

Pi is roughly 3.139235696178481

17/05/17 19:18:43 INFO server.ServerConnector: Stopped Spark@21d5c1a0{HTTP/1.1}{0.0.0.0:4040}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@f415a95{/stages/stage/kill,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@114a85c2{/jobs/job/kill,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@12cd9150{/api,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@47289387{/,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1950e8a6{/static,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@35d6ca49{/executors/threadDump/json,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@56e07a08{/executors/threadDump,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5990e6c5{/executors/json,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4a9cc6cb{/executors,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@142eef62{/environment/json,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@517bd097{/environment,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@50687efb{/storage/rdd/json,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@66971f6b{/storage/rdd,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@3bffddff{/storage/json,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1601e47{/storage,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@66ea1466{/stages/pool/json,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5471388b{/stages/pool,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@299266e2{/stages/stage/json,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@49f5c307{/stages/stage,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@21526f6c{/stages/json,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4c36250e{/stages,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5dcbb60{/jobs/job/json,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@7ef2d7a6{/jobs/job,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@cdc3aae{/jobs/json,null,UNAVAILABLE,@Spark}

17/05/17 19:18:43 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@a486d78{/jobs,null,UNAVAILABLE,@Spark}

17/05/17 19:18:44 INFO ui.SparkUI: Stopped Spark web UI at http://192.168.27.143:4040

17/05/17 19:18:44 INFO cluster.YarnClientSchedulerBackend: Interrupting monitor thread

17/05/17 19:18:44 INFO cluster.YarnClientSchedulerBackend: Shutting down all executors

17/05/17 19:18:44 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Asking each executor to shut down

17/05/17 19:18:44 INFO cluster.SchedulerExtensionServices: Stopping SchedulerExtensionServices

(serviceOption=None,

services=List(),

started=false)

17/05/17 19:18:44 INFO cluster.YarnClientSchedulerBackend: Stopped

17/05/17 19:18:44 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

17/05/17 19:18:44 INFO memory.MemoryStore: MemoryStore cleared

17/05/17 19:18:44 INFO storage.BlockManager: BlockManager stopped

17/05/17 19:18:44 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

17/05/17 19:18:44 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

17/05/17 19:18:44 INFO spark.SparkContext: Successfully stopped SparkContext

17/05/17 19:18:44 INFO util.ShutdownHookManager: Shutdown hook called

17/05/17 19:18:44 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-73613bca-fc94-455a-a152-d9b38f3940fd

[root@hserver1 spark-2.1.1-bin-hadoop2.7]#

3.1.2 Common errors and fixes

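In yarn-client mode the job needs access to both the Spark cluster and the Hadoop cluster, so a misconfiguration easily causes problems. Common errors are listed below:

3.1.2.1 Yarn application has already ended!

3.1.2.1.1 Main error message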

Exception in thread "main" org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:85)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:62)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:156)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:509)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2320)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:868)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:860)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:860)

at org.apache.spark.examples.SparkPi$.main(SparkPi.scala:31)

at org.apache.spark.examples.SparkPi.main(SparkPi.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:743)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:187)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:212)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:126)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

17/05/17 15:16:15 INFO util.ShutdownHookManager: Shutdown hook called

17/05/17 15:16:15 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-3cc28864-370d-4a14-a892-f31a67bd2023

The complete output is as follows:

[root@hserver1 sbin]# cd /opt/spark/spark-2.1.1-bin-hadoop2.7

[root@hserver1 spark-2.1.1-bin-hadoop2.7]# ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn-client examples/jars/spark-examples_2.11-2.1.1.jar

Warning: Master yarn-client is deprecated since 2.0. Please use master "yarn" with specified deploy mode instead.

17/05/17 20:40:03 INFO spark.SparkContext: Running Spark version 2.1.1

17/05/17 20:40:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/05/17 20:40:04 INFO spark.SecurityManager: Changing view acls to: root

17/05/17 20:40:04 INFO spark.SecurityManager: Changing modify acls to: root

17/05/17 20:40:04 INFO spark.SecurityManager: Changing view acls groups to:

17/05/17 20:40:04 INFO spark.SecurityManager: Changing modify acls groups to:

17/05/17 20:40:04 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

17/05/17 20:40:05 INFO util.Utils: Successfully started service 'sparkDriver' on port 38765.

17/05/17 20:40:05 INFO spark.SparkEnv: Registering MapOutputTracker

17/05/17 20:40:05 INFO spark.SparkEnv: Registering BlockManagerMaster

17/05/17 20:40:05 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

17/05/17 20:40:05 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

17/05/17 20:40:05 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-59574c4e-a88b-4bbb-8e81-27c68d536de4

17/05/17 20:40:05 INFO memory.MemoryStore: MemoryStore started with capacity 413.9 MB

17/05/17 20:40:06 INFO spark.SparkEnv: Registering OutputCommitCoordinator

17/05/17 20:40:06 INFO util.log: Logging initialized @4932ms

17/05/17 20:40:06 INFO server.Server: jetty-9.2.z-SNAPSHOT

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@a486d78{/jobs,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@cdc3aae{/jobs/json,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7ef2d7a6{/jobs/job,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5dcbb60{/jobs/job/json,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4c36250e{/stages,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@21526f6c{/stages/json,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@49f5c307{/stages/stage,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@299266e2{/stages/stage/json,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5471388b{/stages/pool,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@66ea1466{/stages/pool/json,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1601e47{/storage,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3bffddff{/storage/json,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@66971f6b{/storage/rdd,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@50687efb{/storage/rdd/json,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@517bd097{/environment,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@142eef62{/environment/json,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4a9cc6cb{/executors,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5990e6c5{/executors/json,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@56e07a08{/executors/threadDump,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@35d6ca49{/executors/threadDump/json,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1950e8a6{/static,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@47289387{/,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@12cd9150{/api,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@114a85c2{/jobs/job/kill,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@f415a95{/stages/stage/kill,null,AVAILABLE,@Spark}

17/05/17 20:40:06 INFO server.ServerConnector: StartedSpark@21d5c1a0{HTTP/1.1}{0.0.0.0:4040}

17/05/17 20:40:06 INFO server.Server: Started @5350ms

17/05/17 20:40:06 INFO util.Utils: Successfully started service'SparkUI' on port 4040.

17/05/17 20:40:06 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.27.143:4040

17/05/17 20:40:06 INFO spark.SparkContext: Added JAR file:/opt/spark/spark-2.1.1-bin-hadoop2.7/examples/jars/spark-examples_2.11-2.1.1.jar at spark://192.168.27.143:38765/jars/spark-examples_2.11-2.1.1.jar with timestamp 1495024806742

17/05/17 20:40:08 INFO client.RMProxy: Connecting to ResourceManager at hserver1/192.168.27.143:8032

17/05/17 20:40:09 INFO yarn.Client: Requesting a new application from cluster with 2 NodeManagers

17/05/17 20:40:09 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (2048 MB per container)

17/05/17 20:40:09 INFO yarn.Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

17/05/17 20:40:09 INFO yarn.Client: Setting up container launch context for our AM

17/05/17 20:40:09 INFO yarn.Client: Setting up the launch environment for our AM container

17/05/17 20:40:09 INFO yarn.Client: Preparing resources for our AM container

17/05/17 20:40:13 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

17/05/17 20:40:18 INFO yarn.Client: Uploading resource file:/tmp/spark-5e00aae5-dc98-48fd-83b4-978714e002d4/__spark_libs__2259912550909667048.zip -> hdfs://hserver1:9000/user/root/.sparkStaging/application_1495024538278_0001/__spark_libs__2259912550909667048.zip

17/05/17 20:40:27 INFO yarn.Client: Uploading resource file:/tmp/spark-5e00aae5-dc98-48fd-83b4-978714e002d4/__spark_conf__5098178835931124874.zip -> hdfs://hserver1:9000/user/root/.sparkStaging/application_1495024538278_0001/__spark_conf__.zip

17/05/17 20:40:27 INFO spark.SecurityManager: Changing view acls to: root

17/05/17 20:40:27 INFO spark.SecurityManager: Changing modify acls to: root

17/05/17 20:40:27 INFO spark.SecurityManager: Changing view acls groups to:

17/05/17 20:40:27 INFO spark.SecurityManager: Changing modify acls groups to:

17/05/17 20:40:27 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

17/05/17 20:40:27 INFO yarn.Client: Submitting application application_1495024538278_0001 to ResourceManager

17/05/17 20:40:28 INFO impl.YarnClientImpl: Submitted application application_1495024538278_0001

17/05/17 20:40:28 INFO cluster.SchedulerExtensionServices: Starting Yarn extension services with app application_1495024538278_0001 and attemptId None

17/05/17 20:40:29 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:29 INFO yarn.Client:

client token: N/A

diagnostics: [Wed May 17 20:40:28 +0800 2017] Scheduler has assigned a container for AM, waiting for AM container to be launched

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1495024828093

final status: UNDEFINED

tracking URL: http://hserver1:8088/proxy/application_1495024538278_0001/

user: root

17/05/17 20:40:30 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:31 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:32 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:33 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:34 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:35 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:36 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:37 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:38 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:39 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:40 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:41 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:42 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:43 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:44 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:45 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:46 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:47 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:48 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:49 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:50 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:51 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:52 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:53 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:54 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:55 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:56 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:57 INFO yarn.Client: Application report for application_1495024538278_0001 (state: ACCEPTED)

17/05/17 20:40:58 INFO yarn.Client: Application report for application_1495024538278_0001 (state: FAILED)

17/05/17 20:40:58 INFO yarn.Client:

client token: N/A

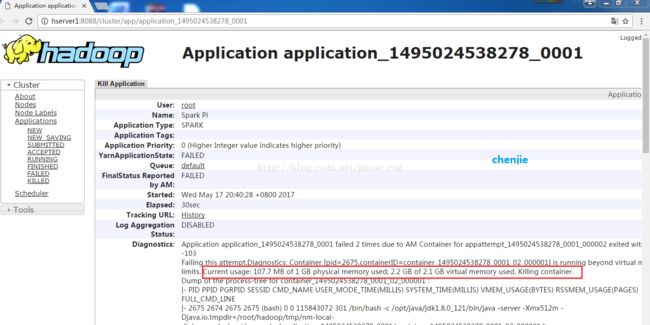

diagnostics: Application application_1495024538278_0001 failed 2 times due to AM Container for appattempt_1495024538278_0001_000002 exited with exitCode: -103

Failing this attempt. Diagnostics: Container [pid=2675,containerID=container_1495024538278_0001_02_000001] is running beyond virtual memory limits. Current usage: 107.7 MB of 1 GB physical memory used; 2.2 GB of 2.1 GB virtual memory used. Killing container.

Dump of the process-tree for container_1495024538278_0001_02_000001:

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 2675 2674 2675 2675 (bash) 0 0 115843072 301 /bin/bash -c /opt/java/jdk1.8.0_121/bin/java -server -Xmx512m -Djava.io.tmpdir=/root/hadoop/tmp/nm-local-dir/usercache/root/appcache/application_1495024538278_0001/container_1495024538278_0001_02_000001/tmp -Dspark.yarn.app.container.log.dir=/opt/hadoop/hadoop-2.8.0/logs/userlogs/application_1495024538278_0001/container_1495024538278_0001_02_000001 org.apache.spark.deploy.yarn.ExecutorLauncher --arg '192.168.27.143:38765' --properties-file /root/hadoop/tmp/nm-local-dir/usercache/root/appcache/application_1495024538278_0001/container_1495024538278_0001_02_000001/__spark_conf__/__spark_conf__.properties 1> /opt/hadoop/hadoop-2.8.0/logs/userlogs/application_1495024538278_0001/container_1495024538278_0001_02_000001/stdout 2> /opt/hadoop/hadoop-2.8.0/logs/userlogs/application_1495024538278_0001/container_1495024538278_0001_02_000001/stderr

|- 2680 2675 2675 2675 (java) 514 18 2283139072 27263 /opt/java/jdk1.8.0_121/bin/java -server -Xmx512m -Djava.io.tmpdir=/root/hadoop/tmp/nm-local-dir/usercache/root/appcache/application_1495024538278_0001/container_1495024538278_0001_02_000001/tmp -Dspark.yarn.app.container.log.dir=/opt/hadoop/hadoop-2.8.0/logs/userlogs/application_1495024538278_0001/container_1495024538278_0001_02_000001 org.apache.spark.deploy.yarn.ExecutorLauncher --arg 192.168.27.143:38765 --properties-file /root/hadoop/tmp/nm-local-dir/usercache/root/appcache/application_1495024538278_0001/container_1495024538278_0001_02_000001/__spark_conf__/__spark_conf__.properties

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

For more detailed output, check the application tracking page: http://hserver1:8088/cluster/app/application_1495024538278_0001 Then click on links to logs of each attempt.

. Failing the application.

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1495024828093

final status: FAILED

tracking URL: http://hserver1:8088/cluster/app/application_1495024538278_0001

17/05/17 20:40:58 INFO yarn.Client: Deleted staging directory hdfs://hserver1:9000/user/root/.sparkStaging/application_1495024538278_0001

17/05/17 20:40:58 ERROR spark.SparkContext: Error initializing SparkContext.

org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:85)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:62)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:156)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:509)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2320)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:868)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:860)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:860)

at org.apache.spark.examples.SparkPi$.main(SparkPi.scala:31)

at org.apache.spark.examples.SparkPi.main(SparkPi.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:743)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:187)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:212)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:126)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

17/05/17 20:40:58 INFO server.ServerConnector: Stopped Spark@21d5c1a0{HTTP/1.1}{0.0.0.0:4040}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@f415a95{/stages/stage/kill,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@114a85c2{/jobs/job/kill,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@12cd9150{/api,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@47289387{/,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1950e8a6{/static,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@35d6ca49{/executors/threadDump/json,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@56e07a08{/executors/threadDump,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5990e6c5{/executors/json,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4a9cc6cb{/executors,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@142eef62{/environment/json,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@517bd097{/environment,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@50687efb{/storage/rdd/json,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@66971f6b{/storage/rdd,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@3bffddff{/storage/json,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1601e47{/storage,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@66ea1466{/stages/pool/json,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5471388b{/stages/pool,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@299266e2{/stages/stage/json,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@49f5c307{/stages/stage,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@21526f6c{/stages/json,null,UNAVAILABLE,@Spark}

17/05/17 20:40:58 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4c36250e{/stages,null,UNAVAILABLE,@Spark}