Matlab R2017b 自动驾驶工具箱学习笔记(3)_Tutorials_Forward Collision Warning Using Sensor Fusion

Forward Collision Warning Using Sensor Fusion

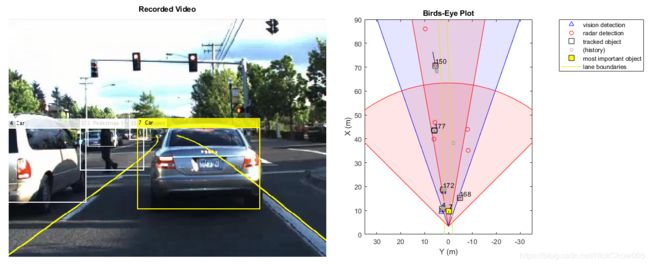

本例程展示基于融合视觉和雷达传感器的物体检测的FCW实现方式。

概述

FCW是驾驶辅助和自动驾驶系统的重要功能,他在即将发生碰撞之前给驾驶员提供精确、及时和可靠的报警信息。为了实现此功能,汽车装配有前视视觉和雷达传感器。为了提高精确报警的可靠性和最大化的降低错误报警的可能性,需要对传感器信息进行融合。

本例程中,测试车辆装配有不同类型的传感器,各传感器的输出均会被记录。本例程中使用的传感器有:

1. 视觉传感器, 提供所观测物体的列表、分类以及车道线信息,物体列表每秒更新10次,车道线每秒更新20次。

2. 中距和长距毫米波雷达,提供未分类的物体列表。物体列表每秒更新20次。

3. IMU,提供车速和转向信息,每秒更新20次。

4. 摄像机,录制车辆前方场景的视频。备注:算法不使用视频信息,只为显示追踪结果以作算法验证。

FCW功能实现由以下步骤组成:

1. 传感器信息采集;

2. 融合传感器信息,生成跟踪目标列表,i.e. 车辆前方物体的估计位置和速度;

3. 基于跟踪目标和FCW策略的报警。FCW策略基于Euro NCAP AEB测试流程,并同时参考车辆前方物体的相对距离和相对速度;

更多详细关于多目标跟踪的信息,请参考Multiple Object tracking

本例中的显示信息均基于monoCamera和birdsEyePlot。为简洁起见,创建和更新显示信息被移植入帮助函数中。更多关于显示信息的使用方法,请参看Annotate Video using Dectections in vehicle coordinates和visualize sensor coverage, detection and tracks.

本例是一个脚本,这里显示的主体和文后以local functions形式出现的帮助函数。

% Set up the display

[videoReader, videoDisplayHandle, bepPlotters, sensor] = helperCreateFCWDemoDisplay('01_city_c2s_fcw_10s.mp4', 'SensorConfigurationData.mat');

% Read the recorded detections file

[visionObjects, radarObjects, inertialMeasurementUnit, laneReports, ...

timeStep, numSteps] = readSensorRecordingsFile('01_city_c2s_fcw_10s_sensor.mat');

% An initial ego lane is calculated. If the recorded lane information is

% invalid, define the lane boundaries as straight lines half a lane

% distance on each side of the car

laneWidth = 3.6; % meters

egoLane = struct('left', [0 0 laneWidth/2], 'right', [0 0 -laneWidth/2]);

% Prepare some time variables

time = 0; % Time since the beginning of the recording

currentStep = 0; % Current timestep

snapTime = 9.3; % The time to capture a snapshot of the display

% Initialize the tracker

[tracker, positionSelector, velocitySelector] = setupTracker();

while currentStep < numSteps && ishghandle(videoDisplayHandle)

% Update scenario counters

currentStep = currentStep + 1;

time = time + timeStep;

% Process the sensor detections as objectDetection inputs to the tracker

[detections, laneBoundaries, egoLane] = processDetections(...

visionObjects(currentStep), radarObjects(currentStep), ...

inertialMeasurementUnit(currentStep), laneReports(currentStep), ...

egoLane, time);

% Using the list of objectDetections, return the tracks, updated to time

confirmedTracks = updateTracks(tracker, detections, time);

% Find the most important object and calculate the forward collision

% warning

mostImportantObject = findMostImportantObject(confirmedTracks, egoLane, positionSelector, velocitySelector);

% Update video and birds-eye plot displays

frame = readFrame(videoReader); % Read video frame

helperUpdateFCWDemoDisplay(frame, videoDisplayHandle, bepPlotters, ...

laneBoundaries, sensor, confirmedTracks, mostImportantObject, positionSelector, ...

velocitySelector, visionObjects(currentStep), radarObjects(currentStep));

% Capture a snapshot

if time >= snapTime && time < snapTime + timeStep

snapnow;

end

end创建多目标跟踪器

多目标跟踪器跟踪视觉和雷达传感器返回的物体列表中物体,通过融合两种传感器的信息,误报的概率将大大被降低。

setupTracker函数返回多目标跟踪器。创建多目标跟踪器时应考虑以下信息:

- FilterInitializationFcn:likely运动和测量模型。这种情况下,物体定义为匀加速运动。尽管可以通过配置线性卡尔曼滤波实现此模型;initConstantAccelerationFilter却配置了扩展卡尔曼滤波。参考“卡尔曼滤波定义”部分;

- Assignmentthreshold:检测距离多远时会放弃追踪。默认值是30,如果有检测到应该被追踪的物体却没有被追踪,增大该值。如果被追踪到的物体太远,减小该值。本例中该值为35.

- NumCoastingUpdates:追踪请求被清除之前的滑移次数。术语滑移是指在没有检测到目标(预测)的情形下更新跟踪器。默认值为5。本例中,跟踪器每秒被调用20次并且有两个传感器,所以不需修改默认值。

- ConfirmationParameters:确认追踪参数。新的追踪器初始化后检测均未分配。一些检测也许是误报,所以所有追踪目标均为“Tentative”。为确认追踪目标,目标必须至少在N次追踪器更新中被检测到M次。M,N的选择依赖于目标的可见性,本例中默认设置为3次更新中检测到2次。

setupTracker输出为:

-

tracker - 针对本例配置的多目标跟踪器

-

positionSelector- 指定某一状态向量元素为位置信息的矩阵: position = positionSelector * State -

velocitySelector - 指定某一状态向量为速度信息的矩阵: velocity = velocitySelector * State

function [tracker, positionSelector, velocitySelector] = setupTracker()

tracker = multiObjectTracker(...

'FilterInitializationFcn', @initConstantAccelerationFilter, ...

'AssignmentThreshold', 35, 'ConfirmationParameters', [2 3], ...

'NumCoastingUpdates', 5);

% The State vector is:

% In constant velocity: State = [x;vx;y;vy]

% In constant acceleration: State = [x;vx;ax;y;vy;ay]

% Define which part of the State is the position. For example:

% In constant velocity: [x;y] = [1 0 0 0; 0 0 1 0] * State

% In constant acceleration: [x;y] = [1 0 0 0 0 0; 0 0 0 1 0 0] * State

positionSelector = [1 0 0 0 0 0; 0 0 0 1 0 0];

% Define which part of the State is the velocity. For example:

% In constant velocity: [x;y] = [0 1 0 0; 0 0 0 1] * State

% In constant acceleration: [x;y] = [0 1 0 0 0 0; 0 0 0 0 1 0] * State

velocitySelector = [0 1 0 0 0 0; 0 0 0 0 1 0];

endDefine a Kalman Filter

定义卡尔曼滤波

上文定义的multipleObjectTracker使用此处定义的滤波器初始化函数创建卡尔曼滤波器(线性,扩展或无损)。而后,滤波器将被用于车辆周围的物体追踪。

function filter = initConstantAccelerationFilter(detection)

% This function shows how to configure a constant acceleration filter. The

% input is an objectDetection and the output is a tracking filter.

% For clarity, this function shows how to configure a trackingKF,

% trackingEKF, or trackingUKF for constant acceleration.

%

% Steps for creating a filter:

% 1. Define the motion model and state

% 2. Define the process noise

% 3. Define the measurement model

% 4. Initialize the state vector based on the measurement

% 5. Initialize the state covariance based on the measurement noise

% 6. Create the correct filter

% Step 1: Define the motion model and state

% This example uses a constant acceleration model, so:

STF = @constacc; % State-transition function, for EKF and UKF

STFJ = @constaccjac; % State-transition function Jacobian, only for EKF

% The motion model implies that the state is [x;vx;ax;y;vy;ay]

% You can also use constvel and constveljac to set up a constant

% velocity model, constturn and constturnjac to set up a constant turn

% rate model, or write your own models.

% Step 2: Define the process noise

dt = 0.05; % Known timestep size

sigma = 1; % Magnitude of the unknown acceleration change rate

% The process noise along one dimension

Q1d = [dt^4/4, dt^3/2, dt^2/2; dt^3/2, dt^2, dt; dt^2/2, dt, 1] * sigma^2;

Q = blkdiag(Q1d, Q1d); % 2-D process noise

% Step 3: Define the measurement model

MF = @fcwmeas; % Measurement function, for EKF and UKF

MJF = @fcwmeasjac; % Measurement Jacobian function, only for EKF

% Step 4: Initialize a state vector based on the measurement

% The sensors measure [x;vx;y;vy] and the constant acceleration model's

% state is [x;vx;ax;y;vy;ay], so the third and sixth elements of the

% state vector are initialized to zero.

state = [detection.Measurement(1); detection.Measurement(2); 0; detection.Measurement(3); detection.Measurement(4); 0];

% Step 5: Initialize the state covariance based on the measurement

% noise. The parts of the state that are not directly measured are

% assigned a large measurement noise value to account for that.

L = 100; % A large number relative to the measurement noise

stateCov = blkdiag(detection.MeasurementNoise(1:2,1:2), L, detection.MeasurementNoise(3:4,3:4), L);

% Step 6: Create the correct filter.

% Use 'KF' for trackingKF, 'EKF' for trackingEKF, or 'UKF' for trackingUKF

FilterType = 'EKF';

% Creating the filter:

switch FilterType

case 'EKF'

filter = trackingEKF(STF, MF, state,...

'StateCovariance', stateCov, ...

'MeasurementNoise', detection.MeasurementNoise(1:4,1:4), ...

'StateTransitionJacobianFcn', STFJ, ...

'MeasurementJacobianFcn', MJF, ...

'ProcessNoise', Q ...

);

case 'UKF'

filter = trackingUKF(STF, MF, state, ...

'StateCovariance', stateCov, ...

'MeasurementNoise', detection.MeasurementNoise(1:4,1:4), ...

'Alpha', 1e-1, ...

'ProcessNoise', Q ...

);

case 'KF' % The ConstantAcceleration model is linear and KF can be used

% Define the measurement model: measurement = H * state

% In this case:

% measurement = [x;vx;y;vy] = H * [x;vx;ax;y;vy;ay]

% So, H = [1 0 0 0 0 0; 0 1 0 0 0 0; 0 0 0 1 0 0; 0 0 0 0 1 0]

%

% Note that ProcessNoise is automatically calculated by the

% ConstantAcceleration motion model

H = [1 0 0 0 0 0; 0 1 0 0 0 0; 0 0 0 1 0 0; 0 0 0 0 1 0];

filter = trackingKF('MotionModel', '2D Constant Acceleration', ...

'MeasurementModel', H, 'State', state, ...

'MeasurementNoise', detection.MeasurementNoise(1:4,1:4), ...

'StateCovariance', stateCov);

end

end处理并格式化检测

记录的信息在输入追踪器之前需要处理和格式化,步骤如下:

- 清理雷达检测到的杂波信息。雷达会检测到很多禁止的物体:包括护栏,隔离带和交通标志等,若这类检测结果输入到追踪器中,就会误追踪到道路边缘的固定物体,因此这类检测需要移除。车辆前方的静止或者车辆附近移动的物体会被雷达检测到;

- 格式化检测结果并输入追踪器,ie,objectDetection数组。请参看文末的processVideo和ProcessRadar帮助函数。

function [detections,laneBoundaries, egoLane] = processDetections...

(visionFrame, radarFrame, IMUFrame, laneFrame, egoLane, time)

% Inputs:

% visionFrame - objects reported by the vision sensor for this time frame

% radarFrame - objects reported by the radar sensor for this time frame

% IMUFrame - inertial measurement unit data for this time frame

% laneFrame - lane reports for this time frame

% egoLane - the estimated ego lane

% time - the time corresponding to the time frame

% Remove clutter radar objects

[laneBoundaries, egoLane] = processLanes(laneFrame, egoLane);

realRadarObjects = findNonClutterRadarObjects(radarFrame.object,...

radarFrame.numObjects, IMUFrame.velocity, laneBoundaries);

% Return an empty list if no objects are reported

% Counting the total number of object

detections = {};

if (visionFrame.numObjects + numel(realRadarObjects)) == 0

return;

end

% Process the remaining radar objects

detections = processRadar(detections, realRadarObjects, time);

% Process video objects

detections = processVideo(detections, visionFrame, time);

end跟踪器更新

To update the tracker, call the updateTracks method with the following inputs:

调用updateTracks函数及以下参数更新追踪器:

-

跟踪器 - 先前配置的多目标跟踪器。参看“多目标跟踪器创建”部分;

-

检测 - processDetections生成的objectDetection物体列表;

-

时间 - 当前场景时间;

跟踪器输出包含跟踪目标信息的结构体

寻找MIO并实现FCW

MIO定义为本车道线距本车最近的跟踪对象,也即是具有最小正x值的对象。为了减少误报,MIO只参考确认的跟踪目标。

当MIO确定,本车和MIO的相对速度需立即计算。FCW取决于相对距离和相对速度,三种FCW情形如下:

- 预警(黄色):MIO接近本车,但是距离大于FCW距离;FCW距离根据Euro NCAP AEB规范计算。请注意,MIO和本车的相对速度不同,FCW距离也会不同,接近速度越大FCW距离越大;

- 安全(绿色):本车道线无车(无MIO),MIO远离本车,或者相对距离恒定;

- 警报(红色):MIO靠近车辆且相对距离小于FCW距离;

Euro NCAP AEB测试规范定义的距离计算方法:

式中:

d(FCW)为FCW距离;

v(rel)为两车相对速递;

a(max)为最大减速度,定义为重力加速度的40%;

function mostImportantObject = findMostImportantObject(confirmedTracks,egoLane,positionSelector,velocitySelector)

% Initialize outputs and parameters

MIO = []; % By default, there is no MIO

trackID = []; % By default, there is no trackID associated with an MIO

FCW = 3; % By default, if there is no MIO, then FCW is 'safe'

threatColor = 'green'; % By default, the threat color is green

maxX = 1000; % Far enough forward so that no track is expected to exceed this distance

gAccel = 9.8; % Constant gravity acceleration, in m/s^2

maxDeceleration = 0.4 * gAccel; % Euro NCAP AEB definition

delayTime = 1.2; % Delay time for a driver before starting to break, in seconds

positions = getTrackPositions(confirmedTracks, positionSelector);

velocities = getTrackVelocities(confirmedTracks, velocitySelector);

for i = 1:numel(confirmedTracks)

x = positions(i,1);

y = positions(i,2);

relSpeed = velocities(i,1); % The relative speed between the cars, along the lane

if x < maxX && x > 0 % No point checking otherwise

yleftLane = polyval(egoLane.left, x);

yrightLane = polyval(egoLane.right, x);

if (yrightLane <= y) && (y <= yleftLane)

maxX = x;

trackID = i;

MIO = confirmedTracks(i).TrackID;

if relSpeed < 0 % Relative speed indicates object is getting closer

% Calculate expected braking distance according to

% Euro NCAP AEB Test Protocol

d = abs(relSpeed) * delayTime + relSpeed^2 / 2 / maxDeceleration;

if x <= d % 'warn'

FCW = 1;

threatColor = 'red';

else % 'caution'

FCW = 2;

threatColor = 'yellow';

end

end

end

end

end

mostImportantObject = struct('ObjectID', MIO, 'TrackIndex', trackID, 'Warning', FCW, 'ThreatColor', threatColor);

end总结

本例展示了装配有视觉,雷达和IMU传感器的车辆的FCW系统的创建过程。objectDetection生成传感器报告传入multipleObjectTracker跟踪器,跟踪器融合各类信息并跟踪车辆前方的目标。

尝试使用不同的跟踪器参数,观察不同参数对跟踪质量的影响。尝试更改跟踪滤波器为trackingKF或者trackingUKF,或者更换运动模型,eg. 匀速或者连续转向。最后,我们也可以定义自己的运动模型。

辅助函数

readSensorRecordingsFile读取文件记录的传感器数据

function [visionObjects, radarObjects, inertialMeasurementUnit, laneReports, ...

timeStep, numSteps] = readSensorRecordingsFile(sensorRecordingFileName)

% Read Sensor Recordings

% The |ReadDetectionsFile| function reads the recorded sensor data file.

% The recorded data is a single structure that is divided into the

% following substructures:

%

% # |inertialMeasurementUnit|, a struct array with fields: timeStamp,

% velocity, and yawRate. Each element of the array corresponds to a

% different timestep.

% # |laneReports|, a struct array with fields: left and right. Each element

% of the array corresponds to a different timestep.

% Both left and right are structures with fields: isValid, confidence,

% boundaryType, offset, headingAngle, and curvature.

% # |radarObjects|, a struct array with fields: timeStamp (see below),

% numObjects (integer) and object (struct). Each element of the array

% corresponds to a different timestep.

% |object| is a struct array, where each element is a separate object,

% with the fields: id, status, position(x;y;z), velocity(vx,vy,vz),

% amplitude, and rangeMode.

% Note: z is always constant and vz=0.

% # |visionObjects|, a struct array with fields: timeStamp (see below),

% numObjects (integer) and object (struct). Each element of the array

% corresponds to a different timestep.

% |object| is a struct array, where each element is a separate object,

% with the fields: id, classification, position (x;y;z),

% velocity(vx;vy;vz), size(dx;dy;dz). Note: z=vy=vz=dx=dz=0

%

% The timeStamp for recorded vision and radar objects is a uint64 variable

% holding microseconds since the Unix epoch. Timestamps are recorded about

% 50 milliseconds apart. There is a complete synchronization between the

% recordings of vision and radar detections, therefore the timestamps are

% not used in further calculations.

A = load(sensorRecordingFileName);

visionObjects = A.vision;

radarObjects = A.radar;

laneReports = A.lane;

inertialMeasurementUnit = A.inertialMeasurementUnit;

timeStep = 0.05; % Data is provided every 50 milliseconds

numSteps = numel(visionObjects); % Number of recorded timesteps

endprocessLanes将传感器检测到的车道线转换为parabolicLaneBoundary车道线并保持连续的本车道线估计

function [laneBoundaries, egoLane] = processLanes(laneReports, egoLane)

% Lane boundaries are updated based on the laneReports from the recordings.

% Since some laneReports contain invalid (isValid = false) reports or

% impossible parameter values (-1e9), these lane reports are ignored and

% the previous lane boundary is used.

leftLane = laneReports.left;

rightLane = laneReports.right;

% Check the validity of the reported left lane

cond = (leftLane.isValid && leftLane.confidence) && ...

~(leftLane.headingAngle == -1e9 || leftLane.curvature == -1e9);

if cond

egoLane.left = cast([leftLane.curvature, leftLane.headingAngle, leftLane.offset], 'double');

end

% Update the left lane boundary parameters or use the previous ones

leftParams = egoLane.left;

leftBoundaries = parabolicLaneBoundary(leftParams);

leftBoundaries.Strength = 1;

% Check the validity of the reported right lane

cond = (rightLane.isValid && rightLane.confidence) && ...

~(rightLane.headingAngle == -1e9 || rightLane.curvature == -1e9);

if cond

egoLane.right = cast([rightLane.curvature, rightLane.headingAngle, rightLane.offset], 'double');

end

% Update the right lane boundary parameters or use the previous ones

rightParams = egoLane.right;

rightBoundaries = parabolicLaneBoundary(rightParams);

rightBoundaries.Strength = 1;

laneBoundaries = [leftBoundaries, rightBoundaries];

endfindNonClutterRadarObjects移除雷达检测中的被确认为杂波的信息;

function realRadarObjects = findNonClutterRadarObjects(radarObject, numRadarObjects, egoSpeed, laneBoundaries)

% The radar objects include many objects that belong to the clutter.

% Clutter is defined as a stationary object that is not in front of the

% car. The following types of objects pass as nonclutter:

%

% # Any object in front of the car

% # Any moving object in the area of interest around the car, including

% objects that move at a lateral speed around the car

% Allocate memory

normVs = zeros(numRadarObjects, 1);

inLane = zeros(numRadarObjects, 1);

inZone = zeros(numRadarObjects, 1);

% Parameters

LaneWidth = 3.6; % What is considered in front of the car

ZoneWidth = 1.7*LaneWidth; % A wider area of interest

minV = 1; % Any object that moves slower than minV is considered stationary

for j = 1:numRadarObjects

[vx, vy] = calculateGroundSpeed(radarObject(j).velocity(1),radarObject(j).velocity(2),egoSpeed);

normVs(j) = norm([vx,vy]);

laneBoundariesAtObject = computeBoundaryModel(laneBoundaries, radarObject(j).position(1));

laneCenter = mean(laneBoundariesAtObject);

inLane(j) = (abs(radarObject(j).position(2) - laneCenter) <= LaneWidth/2);

inZone(j) = (abs(radarObject(j).position(2) - laneCenter) <= max(abs(vy)*2, ZoneWidth));

end

realRadarObjectsIdx = union(...

intersect(find(normVs > minV), find(inZone == 1)), ...

find(inLane == 1));

realRadarObjects = radarObject(realRadarObjectsIdx);

end

calculateGroundSpeed根据相对速度和本车速度计算雷达检测到的物体的真实速度;

function [Vx,Vy] = calculateGroundSpeed(Vxi,Vyi,egoSpeed)

% Inputs

% (Vxi,Vyi) : relative object speed

% egoSpeed : ego vehicle speed

% Outputs

% [Vx,Vy] : ground object speed

Vx = Vxi + egoSpeed; % Calculate longitudinal ground speed

theta = atan2(Vyi,Vxi); % Calculate heading angle

Vy = Vx * tan(theta); % Calculate lateral ground speed

end

processVideo转换视觉检测结果为objectDetection物体列表。

function postProcessedDetections = processVideo(postProcessedDetections, visionFrame, t)

% Process the video objects into objectDetection objects

numRadarObjects = numel(postProcessedDetections);

numVisionObjects = visionFrame.numObjects;

if numVisionObjects

classToUse = class(visionFrame.object(1).position);

visionMeasCov = cast(diag([2,2,2,100]), classToUse);

% Process Vision Objects:

for i=1:numVisionObjects

object = visionFrame.object(i);

postProcessedDetections{numRadarObjects+i} = objectDetection(t,...

[object.position(1); object.velocity(1); object.position(2); 0], ...

'SensorIndex', 1, 'MeasurementNoise', visionMeasCov, ...

'MeasurementParameters', {1}, ...

'ObjectClassID', object.classification, ...

'ObjectAttributes', {object.id, object.size});

end

end

end

processRadar转换雷达检测结果为objectDetection物体列表。

function postProcessedDetections = processRadar(postProcessedDetections, realRadarObjects, t)

% Process the radar objects into objectDetection objects

numRadarObjects = numel(realRadarObjects);

if numRadarObjects

classToUse = class(realRadarObjects(1).position);

radarMeasCov = cast(diag([2,2,2,100]), classToUse);

% Process Radar Objects:

for i=1:numRadarObjects

object = realRadarObjects(i);

postProcessedDetections{i} = objectDetection(t, ...

[object.position(1); object.velocity(1); object.position(2); object.velocity(2)], ...

'SensorIndex', 2, 'MeasurementNoise', radarMeasCov, ...

'MeasurementParameters', {2}, ...

'ObjectAttributes', {object.id, object.status, object.amplitude, object.rangeMode});

end

end

end

fcwmeas为FCW例程中的测量函数。

function measurement = fcwmeas(state, sensorID)

% The example measurements depend on the sensor type, which is reported by

% the MeasurementParameters property of the objectDetection. The following

% two sensorID values are used:

% sensorID=1: video objects, the measurement is [x;vx;y].

% sensorID=2: radar objects, the measurement is [x;vx;y;vy].

% The state is:

% Constant velocity state = [x;vx;y;vy]

% Constant turn state = [x;vx;y;vy;omega]

% Constant acceleration state = [x;vx;ax;y;vy;ay]

if numel(state) < 6 % Constant turn or constant velocity

switch sensorID

case 1 % video

measurement = [state(1:3); 0];

case 2 % radar

measurement = state(1:4);

end

else % Constant acceleration

switch sensorID

case 1 % video

measurement = [state(1:2); state(4); 0];

case 2 % radar

measurement = [state(1:2); state(4:5)];

end

end

end

fcwmeasjac为FCW例程中雅各布行列式测量函数。

function jacobian = fcwmeasjac(state, sensorID)

% The example measurements depend on the sensor type, which is reported by

% the MeasurementParameters property of the objectDetection. We choose

% sensorID=1 for video objects and sensorID=2 for radar objects. The

% following two sensorID values are used:

% sensorID=1: video objects, the measurement is [x;vx;y].

% sensorID=2: radar objects, the measurement is [x;vx;y;vy].

% The state is:

% Constant velocity state = [x;vx;y;vy]

% Constant turn state = [x;vx;y;vy;omega]

% Constant acceleration state = [x;vx;ax;y;vy;ay]

numStates = numel(state);

jacobian = zeros(4, numStates);

if numel(state) < 6 % Constant turn or constant velocity

switch sensorID

case 1 % video

jacobian(1,1) = 1;

jacobian(2,2) = 1;

jacobian(3,3) = 1;

case 2 % radar

jacobian(1,1) = 1;

jacobian(2,2) = 1;

jacobian(3,3) = 1;

jacobian(4,4) = 1;

end

else % Constant acceleration

switch sensorID

case 1 % video

jacobian(1,1) = 1;

jacobian(2,2) = 1;

jacobian(3,4) = 1;

case 2 % radar

jacobian(1,1) = 1;

jacobian(2,2) = 1;

jacobian(3,4) = 1;

jacobian(4,5) = 1;

end

end

end