CSPNet: A New Backbone that can Enhance Learning Capability of CNN

CSPNet: A New Backbone that can Enhance Learning Capability of CNN

CSPNet:可以增强 CNN 学习能力的新型 backbone

Chien-Yao Wang, Hong-Yuan Mark Liao, I-Hau Yeh, Yueh-Hua Wu, Ping-Yang Chen, Jun-Wei Hsieh

https://github.com/WongKinYiu/CrossStagePartialNetworks

Institute of Information Science, Academia Sinica:中央研究院资讯科学研究所

Academia Sinica:中央研究院,国立中央研究院

National Chiao Tung University,NCTU:台湾交通大学,国立交通大学,交大

College of Artificial Intelligence and Green Energy:人工智能与绿色能源学院

Department of Computer Science:计算机科学系

academia [ˌækəˈdiːmiə] :n. 学术界,学术生涯

Taiwan:n. 台湾

ELAN Microelectronics Corporation,ELAN Microelectronics Corp.:义隆电子股份有限公司

backbone ['bæk.bəʊn]:n. 骨干,支柱,脊柱,骨气

partial [ˈpɑːʃl]:adj. 局部的,偏爱的,不公平的

Computer Science,CS:计算机科学

Computer Vision,CV:计算机视觉

interpretation [ɪnˌtɜːprəˈteɪʃn]:n. 解释,翻译,演出

intuition [ˌɪntjuˈɪʃn]:n. 直觉,直觉力,直觉的知识

moderation [ˌmɒdəˈreɪʃn]:n. 适度,节制,温和,缓和

incremental [,ɪnkrɪ'mentəl]:adj. (定额) 增长的,逐渐的,逐步的,递增的

corresponding author:n. 通讯作者,联系人

arXiv (archive - the X represents the Greek letter chi [χ]) is a repository of electronic preprints approved for posting after moderation, but not full peer review.

November 28, 2019

ABSTRACT

Neural networks have enabled state-of-the-art approaches to achieve incredible results on computer vision tasks such as object detection. However, such success greatly relies on costly computation resources, which hinders people with cheap devices from appreciating the advanced technology. In this paper, we propose Cross Stage Partial Network (CSPNet) to mitigate the problem that previous works require heavy inference computations from the network architecture perspective. We attribute the problem to the duplicate gradient information within network optimization. The proposed networks respect the variability of the gradients by integrating feature maps from the beginning and the end of a network stage, which, in our experiments, reduces computations by 20% with equivalent or even superior accuracy on the ImageNet dataset, and significantly outperforms state-of-the-art approaches in terms of AP 50 \text{AP}_{50} AP50 on the MS COCO object detection dataset. The CSPNet is easy to implement and general enough to cope with architectures based on ResNet, ResNeXt, and DenseNet. Source code is at https://github.com/WongKinYiu/CrossStagePartialNetworks.

神经网络使先进的方法能够在诸如目标检测之类的计算机视觉任务上实现令人难以置信的结果。但是,这种成功极大地依赖于昂贵的计算资源,这阻碍了具有廉价设备的人们使用这种先进技术。在本文中,我们提出了 Cross Stage Partial Network (CSPNet),以缓解先前的工作从网络体系结构角度出发需要大量推理计算的问题。我们将问题归因于网络优化中的重复梯度信息。提出的网络通过集成网络阶段开始和结束时的特征图来遵守梯度的可变性,在我们的实验中,在 ImageNet 数据集上具有同等甚至更高的准确性的情况下,将计算量减少了 20%,并且明显优于 MS COCO 目标检测数据集中的 AP 50 \text{AP}_{50} AP50 度量的最先进方法。CSPNet 易于实施,并且通用性足以应付基于 ResNet、ResNeXt 和 DenseNet 的体系结构。源代码位于 https://github.com/WongKinYiu/CrossStagePartialNetworks。

incredible [ɪnˈkredəbl]:adj. 不能相信的,难以置信的,极好的,极大的

hinder [ˈhɪndə(r)]:vi. 成为阻碍 vt. 阻碍,打扰 adj. 后面的

appreciate [əˈpriːʃieɪt]:vt. 欣赏,感激,领会,鉴别 vi. 增值,涨价

mitigate [ˈmɪtɪɡeɪt]:vt. 使缓和,使减轻 vi. 减轻,缓和下来

1 Introduction

Neural networks have been shown to be especially powerful when it gets deeper [7, 39, 11] and wider [40]. However, extending the architecture of neural networks usually brings up a lot more computations, which makes computationally heavy tasks such as object detection unaffordable for most people. Light-weight computing has gradually received stronger attention since real-world applications usually require short inference time on small devices, which poses a serious challenge for computer vision algorithms. Although some approaches were designed exclusively for mobile CPU [9, 31, 8, 33, 43, 24], the depth-wise separable convolution techniques they adopted are not compatible with industrial IC design such as Application-Specific Integrated Circuit (ASIC) for edge-computing systems. In this work, we investigate the computational burden in state-of-the-art approaches such as ResNet, ResNeXt, and DenseNet. We further develop computationally efficient components that enable the mentioned networks to be deployed on both CPUs and mobile GPUs without sacrificing the performance.

当神经网络变得更深 [7, 39, 11] 和更宽 [40] 时,神经网络特别强大。但是,扩展神经网络的体系结构通常会带来更多的计算,这使大多数人难以承受诸如目标检测之类的计算量繁重的任务。轻量级计算已逐渐受到越来越多的关注,因为现实世界中的应用程序通常需要在小型设备上缩短推理时间,这对计算机视觉算法提出了严峻的挑战。尽管某些方法是专门为移动 CPU 设计的 [9, 31, 8, 33, 43, 24],但它们采用的深度可分离卷积技术与工业 IC 设计不兼容,例如用于边缘计算系统 Application-Specific Integrated Circuit (ASIC)。在这项工作中,我们研究了诸如 ResNet、ResNeXt 和 DenseNet 等最新方法的计算负担。我们进一步开发了计算有效的组件,这些组件使上述网络可以部署在 CPU 和移动 GPU 上,而不会牺牲性能。

unaffordable [ˌʌnəˈfɔːdəbl]:adj. (普通人) 付不起的,高价的

gradually [ˈɡrædʒuəli]:adv. 逐步地,渐渐地

sacrificial [ˌsækrɪˈfɪʃl]:adj. 牺牲的,献祭的

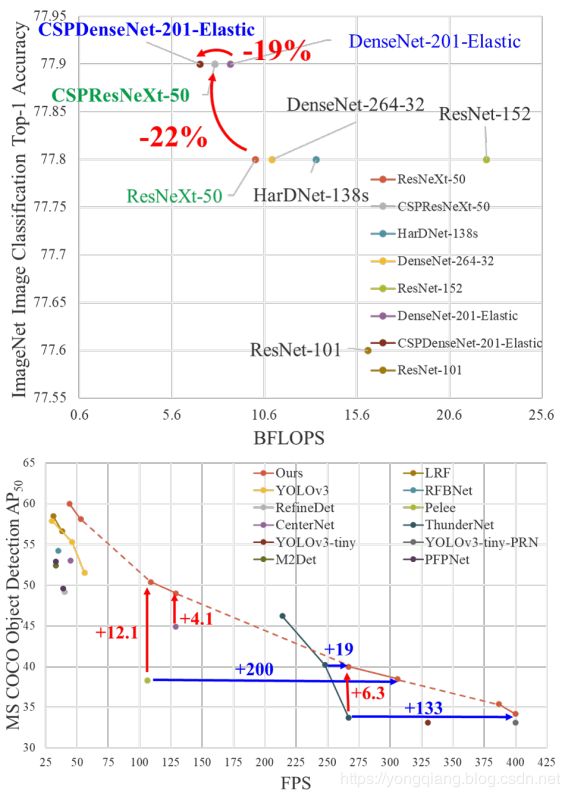

In this study, we introduce Cross Stage Partial Network (CSPNet). The main purpose of designing CSPNet is to enable this architecture to achieve a richer gradient combination while reducing the amount of computation. This aim is achieved by partitioning feature map of the base layer into two parts and then merging them through a proposed cross-stage hierarchy. Our main concept is to make the gradient flow propagate through different network paths by splitting the gradient flow. In this way, we have confirmed that the propagated gradient information can have a large correlation difference by switching concatenation and transition steps. In addition, CSPNet can greatly reduce the amount of computation, and improve inference speed as well as accuracy, as illustrated in Fig 1. The proposed CSPNet-based object detector deals with the following three problems:

在本研究中,我们介绍 Cross Stage Partial Network (CSPNet)。设计 CSPNet 的主要目的是使该体系结构能够实现更丰富的梯度组合,同时减少计算量。通过将基础层的特征图划分为两个部分,然后通过提出的跨阶段层次结构将它们合并,可以实现此目标。我们的主要概念是通过分割梯度流,使梯度流通过不同的网络路径传播。这样,我们已经确认,通过切换 concatenation and transition 步骤,传播的梯度信息可以具有较大的相关性差异。此外,CSPNet 可以大大减少计算量,并提高推理速度和准确性,如图 1 所示。提出的基于 CSPNet 的目标检测器解决了以下三个问题:

hierarchy [ˈhaɪərɑːki]:n. 层级,等级制度

correlation [ˌkɒrəˈleɪʃn]:n. 相关,关联,相互关系

transition [trænˈzɪʃn; trænˈsɪʃn]:n. 过渡,转变,转换,变调

concatenation [kən.kætə'neɪʃ(ə)n]:n. 一系列相关联的事物 (或事件)

transition [trænˈzɪʃn; trænˈsɪʃn]:n. 过渡,转变,转换,变调

strengthen [ˈstreŋθn]:vt. 加强,巩固 vi. 变强,变坚挺

Figure 1: Proposed CSPNet can be applied on ResNet [7], ResNeXt [39], DenseNet [11], etc. It not only reduce computation cost and memory usage of these networks, but also benefit on inference speed and accuracy.

1) Strengthening learning ability of a CNN The accuracy of existing CNN is greatly degraded after lightweightening, so we hope to strengthen CNN’s learning ability, so that it can maintain sufficient accuracy while being lightweightening. The proposed CSPNet can be easily applied to ResNet, ResNeXt, and DenseNet. After applying CSPNet on the above mentioned networks, the computation effort can be reduced from 10% to 20%, but it outperforms ResNet [7], ResNeXt [39], DenseNet [11], HarDNet [1], Elastic [36], and Res2Net [5], in terms of accuracy, in conducting image classification task on ImageNet [2].

1) Strengthening learning ability of a CNN 轻量化后,现有 CNN 的准确性会大大降低,因此我们希望增强 CNN 的学习能力,以便在轻量化时能够保持足够的准确性。提出的 CSPNet 可以轻松地应用于 ResNet、ResNeXt 和 DenseNet。在上述网络上使用 CSPNet 后,计算工作量可以减少从 10% 到 20%,但在准确性方面,在 ImageNet [2] 上执行图像分类任务,其性能优于 ResNet [7], ResNeXt [39], DenseNet [11], HarDNet [1], Elastic [36], and Res2Net [5]。

2) Removing computational bottlenecks Too high a computational bottleneck will result in more cycles to complete the inference process, or some arithmetic units will often idle. Therefore, we hope we can evenly distribute the amount of computation at each layer in CNN so that we can effectively upgrade the utilization rate of each computation unit and thus reduce unnecessary energy consumption. It is noted that the proposed CSPNet makes the computational bottlenecks of PeleeNet [37] cut into half. Moreover, in the MS COCO [18] dataset-based object detection experiments, our proposed model can effectively reduce 80% computational bottleneck when test on YOLOv3-based models.

2) Removing computational bottlenecks 计算瓶颈太大会导致更多的周期来完成推理过程,或者某些算术单元通常会闲置。因此,我们希望能够在 CNN 的每一层平均分配计算量,从而可以有效地提高每个计算单元的利用率,从而减少不必要的能耗。值得注意的是,提出的 CSPNet 使 PeleeNet [37] 的计算瓶颈减少了一半。此外,在基于 MS COCO [18] 数据集的目标检测实验中,当在基于 YOLOv3 的模型上进行测试时,我们提出的模型可以有效地减少 80% 的计算瓶颈。

3) Reducing memory costs The wafer fabrication cost of Dynamic Random-Access Memory (DRAM) is very expensive, and it also takes up a lot of space. If one can effectively reduce the memory cost, he/she will greatly reduce the cost of ASIC. In addition, a small area wafer can be used in a variety of edge computing devices. In reducing the use of memory usage, we adopt cross-channel pooling [6] to compress the feature maps during the feature pyramid generating process. In this way, the proposed CSPNet with the proposed object detector can cut down 75% memory usage on PeleeNet when generating feature pyramids.

3) Reducing memory costs Dynamic Random-Access Memory (DRAM) 的晶圆制造成本非常昂贵,并且还占用大量空间。如果能够有效降低存储器成本,那么他/她将大大降低 ASIC 的成本。另外,小面积晶片可用于各种边缘计算设备中。为了减少内存使用量,我们采用跨通道池化 [6] 在特征金字塔生成过程中压缩特征图。这样,在生成特征金字塔时,the proposed CSPNet with the proposed object detector 可以减少 PeleeNet 上 75% 的内存使用。

bottleneck [ˈbɒtlnek]:n. 瓶颈,障碍物

arithmetic [əˈrɪθmətɪk]:n. 算术,算法

fabrication [ˌfæbrɪˈkeɪʃn]:n. 制造,建造,装配,伪造物

wafer [ˈweɪfə(r)]:n. 圆片,晶片,薄片,干胶片,薄饼,圣饼 vt. 用干胶片封

Since CSPNet is able to promote the learning capability of a CNN, we thus use smaller models to achieve better accuracy. Our proposed model can achieve 50% COCO AP 50 \text{AP}_{50} AP50 at 109 fps on GTX 1080ti. Since CSPNet can effectively cut down a significant amount of memory traffic, our proposed method can achieve 40% COCO AP 50 \text{AP}_{50} AP50 at 52 fps on Intel Core i9-9900K. In addition, since CSPNet can significantly lower down the computational bottleneck and Exact Fusion Model (EFM) can effectively cut down the required memory bandwidth, our proposed method can achieve 42% COCO AP 50 \text{AP}_{50} AP50 at 49 fps on Nvidia Jetson TX2.

由于 CSPNet 能够提升 CNN 的学习能力,因此我们使用较小的模型来实现更高的准确性。我们提出的模型可以在 GTX 1080ti 上以 109 fps 的速度获得 50% 的 COCO AP 50 \text{AP}_{50} AP50。由于 CSPNet 可以有效减少大量内存流量,因此我们提出的方法可以在 Intel Core i9-9900K 上以 52 fps 的速度实现 40% 的 COCO AP 50 \text{AP}_{50} AP50。此外,由于 CSPNet 可以显著降低计算瓶颈,而 Exact Fusion Model (EFM) 可以有效减少所需的内存带宽,因此我们提出的方法可以在 Nvidia Jetson TX2 上以 49 fps 的速率实现 42% 的 COCO AP 50 \text{AP}_{50} AP50。

promote [prə'məʊt]:v. 促进,推动,提升,推销

traffic ['træfɪk]:n. 路上行驶的车辆,交通,(沿固定路线的) 航行,行驶,飞行,运输,人流,货流,信息流量,通信 (量)

2 Related work

CNN architectures design. In ResNeXt [39], Xie et al. first demonstrate that cardinality can be more effective than the dimensions of width and depth. DenseNet [11] can significantly reduce the number of parameters and computations due to the strategy of adopting a large number of reuse features. And it concatenates the output features of all preceding layers as the next input, which can be considered as the way to maximize cardinality. SparseNet [46] adjusts dense connection to exponentially spaced connection can effectively improve parameter utilization and thus result in better outcomes. Wang et al. further explain why high cardinality and sparse connection can improve the learning ability of the network by the concept of gradient combination and developed the partial ResNet (PRN) [35]. For improving the inference speed of CNN, Ma et al. [24] introduce four guidelines to be followed and design ShuffleNet-v2. Chao et al. [1] proposed a low memory traffic CNN called Harmonic DenseNet (HarDNet) and a metric Convolutional Input/Output (CIO) which is an approximation of DRAM traffic proportional to the real DRAM traffic measurement.

CNN architectures design. 在 ResNeXt [39] 中,Xie et al. 首先证明基数比宽度和深度的尺寸更有效。由于采用了大量重用功能,DenseNet [11] 可以显著减少参数和计算的数量。并且它将所有先前层的输出特征串联为下一个输入,这可以被视为最大化基数的方法。SparseNet [46] 将密集连接调整为指数间隔连接可以有效地提高参数利用率,从而获得更好的结果。Wang et al. 通过梯度组合的概念进一步解释了为什么高基数和稀疏连接可以提高网络的学习能力,并开发了 partial ResNet (PRN) [35]。为了提高 CNN 的推理速度,Ma et al. [24] 介绍了要遵循的四个准则并设计 ShuffleNet-v2。Chao et al. [1] 提出了一种低内存流量 CNN,称为 Harmonic DenseNet (HarDNet) 和度量 Convolutional Input/Output (CIO),它是与实际 DRAM 流量测量值成比例的 DRAM 流量近似值。

cardinality [kɑːdɪ'nælɪtɪ]:n. 基数

exponentially [ˌekspəˈnenʃəli]:adv. 以指数方式

Real-time object detector. The most famous two real-time object detectors are YOLOv3 [29] and SSD [21]. Based on SSD, LRF [38] and RFBNet [19] can achieve state-of-the-art real-time object detection performance on GPU. Recently, anchor-free based object detector [3, 45, 13, 14, 42] has become main-stream object detection system. Two object detector of this sort are CenterNet [45] and CornerNet-Lite [14], and they both perform very well in terms of efficiency and efficacy. For real-time object detection on CPU or mobile GPU, SSD-based Pelee [37], YOLOv3-based PRN [35], and Light-Head RCNN [17]-based ThunderNet [25] all receive excellent performance on object detection.

Real-time object detector. 最著名的两个实时物体检测器是 YOLOv3 [29] 和 SSD [21]。基于 SSD 的 LRF [38] 和 RFBNet [19] 可以在 GPU 上实现最新的实时目标检测性能。近来,基于无锚的物体检测器 [3, 45, 13, 14, 42] 已经成为主流的物体检测系统。这样的两个物体检测器是 CenterNet [45] 和 CornerNet-Lite [14],它们在效率和功效方面都表现出色。对于在 CPU 或移动 GPU 上进行实时目标检测,SSD-based Pelee [37], YOLOv3-based PRN [35], and Light-Head RCNN [17]-based ThunderNet [25] 都在目标检测方面获得了出色的性能。

twitter ['twɪtə(r)]:n. 兴奋,(鸟的) 唧啾声,紧张,激动 v. 叽喳,唧唧喳喳地说话,运用推特社交网络发送信息

generative adversarial network,GAN:生成式对抗网络

whole [həʊl]:adj. 完整的,纯粹的 n. 整体,全部

kinda ['kaɪndə]:adv. 有点,有几分

intro [ˈɪntrəʊ]:n. 前奏,前言,导言,介绍,简介

contemplate [ˈkɒntəmpleɪt]:vt. 沉思,注视,思忖,预期 vi. 冥思苦想,深思熟虑

signpost [ˈsaɪnpəʊst]:n. 路标,指示牌

separate ['sepəreɪt]:v. 分离,区分,隔开,区别 adj. 单独的,独立的,分开的,不同的 n. (杂志论文的) 抽印本,单行本,可以不配套单独穿的妇女服装

Figure 2: Illustrations of (a) DenseNet and (b) our proposed Cross Stage Partial DenseNet (CSPDenseNet). CSPNet separates feature map of the base layer into two part, one part will go through a dense block and a transition layer; the other one part is then combined with transmitted feature map to the next stage.

Figure 2: (a) DenseNet 和 (b) 我们提出的 Cross Stage Partial DenseNet (CSPDenseNet) 的插图。CSPNet 将基础层的特征图分为两部分,一部分将通过一个密集块和一个 transition layer,然后将另一部分与传输的特征图组合到下一个阶段。

3 Method

3.1 Cross Stage Partial Network

DenseNet. Figure 2 (a) shows the detailed structure of one-stage of the DenseNet proposed by Huang et al. [11]. Each stage of a DenseNet contains a dense block and a transition layer, and each dense block is composed of k k k dense layers. The output of the i t h i^{th} ith dense layer will be concatenated with the input of the i t h i^{th} ith dense layer, and the concatenated outcome will become the input of the ( i + 1 ) t h (i + 1)^{th} (i+1)th dense layer. The equations showing the above-mentioned mechanism can be expressed as:

DenseNet. Figure 2 (a) 显示了 Huang et al. [11] 提出的 DenseNet 的 one-stage 详细结构。DenseNet 的每个阶段都包含一个密集块和一个 transition layer,每个密集块由 k k k 个密集层组成。 i t h i^{th} ith 密集层的输出将与 i t h i^{th} ith 密集层的输入串联,并且串联的结果将成为 ( i + 1 ) t h (i + 1)^{th} (i+1)th 密集层的输入。表示上述机制的等式可以表示为:

x 1 = w 1 ∗ x 0 x 2 = w 2 ∗ [ x 0 , x 1 ] ⋮ x k = w k ∗ [ x 0 , x 1 , . . . , x k − 1 ] (1) \begin{aligned} x_{1} &= w_{1} * x_{0} \\ x_{2} &= w_{2} * [x_{0}, x_{1}] \\ \vdots \\ x_{k} &= w_{k} * [x_{0}, x_{1}, ..., x_{k-1}] \\ \tag{1} \end{aligned} x1x2⋮xk=w1∗x0=w2∗[x0,x1]=wk∗[x0,x1,...,xk−1](1)

where ∗ represents the convolution operator, and [ x 0 , x 1 , . . . ] [x_{0}, x_{1}, ...] [x0,x1,...] means to concatenate x 0 , x 1 , . . . x_{0}, x_{1}, ... x0,x1,..., and w i w_i wi and x i x_i xi are the weights and output of the i t h i^{th} ith dense layer, respectively.

If one makes use of a backpropagation algorithm to update weights, the equations of weight updating can be written as:

如果使用反向传播算法更新权重,则权重更新方程可写为:

w 1 , = f ( w 1 , g 0 ) w 2 , = f ( w 2 , g 0 , g 1 ) w 3 , = f ( w 3 , g 0 , g 1 , g 2 ) ⋮ w k , = f ( w k , g 0 , g 1 , g 2 , . . . , g k − 1 ) (2) \begin{aligned} w_{1}^{,} &= f(w_{1}, \mathcal{g}_{0}) \\ w_{2}^{,} &= f(w_{2}, \mathcal{g}_{0}, \mathcal{g}_{1}) \\ w_{3}^{,} &= f(w_{3}, \mathcal{g}_{0}, \mathcal{g}_{1}, \mathcal{g}_{2}) \\ \vdots \\ w_{k}^{,} &= f(w_{k}, \mathcal{g}_{0}, \mathcal{g}_{1}, \mathcal{g}_{2}, ..., \mathcal{g}_{k-1}) \\ \tag{2} \end{aligned} w1,w2,w3,⋮wk,=f(w1,g0)=f(w2,g0,g1)=f(w3,g0,g1,g2)=f(wk,g0,g1,g2,...,gk−1)(2)

where f f f is the function of weight updating, and g i \mathcal{g}_{i} gi represents the gradient propagated to the i t h i^{th} ith dense layer. We can find that large amount of gradient information are reused for updating weights of different dense layers. This will result in different dense layers repeatedly learn copied gradient information.

其中 f f f 是权重更新的函数,而 g i \mathcal{g}_{i} gi 表示传播到 i t h i^{th} ith 密集层的梯度。我们发现大量的梯度信息被重用于更新不同密度层的权重。这将导致不同的密集层重复学习复制的梯度信息。

Cross Stage Partial DenseNet. The architecture of one-stage of the proposed CSPDenseNet is shown in Figure 2 (b). A stage of CSPDenseNet is composed of a partial dense block and a partial transition layer. In a partial dense block, the feature maps of the base layer in a stage are split into two parts through channel x 0 = [ x 0 , , x 0 , , ] x_{0} = [x_{0}^{,}, x_{0}^{,,}] x0=[x0,,x0,,]. Between x 0 , x_{0}^{,} x0, and x 0 , , x_{0}^{,,} x0,,, the former is directly linked to the end of the stage, and the latter will go through a dense block. All steps involved in a partial transition layer are as follows: First, the output of dense layers, [ x 0 , , , x 1 , . . . , x k ] [x_{0}^{,,}, x_{1}, ..., x_{k}] [x0,,,x1,...,xk], will undergo a transition layer. Second, the output of this transition layer, x T x_{T} xT, will be concatenated with x 0 , x_{0}^{,} x0, and undergo another transition layer, and then generate output x U x_{U} xU. The equations of feed-forward pass and weight updating of CSPDenseNet are shown in Equations 3 and 4, respectively.

Cross Stage Partial DenseNet. 提出的 CSPDenseNet 的 one-stage 结构如图 2 (b) 所示。A stage of CSPDenseNet is composed of a partial dense block and a partial transition layer. In a partial dense block, the feature maps of the base layer in a stage are split into two parts through channel x 0 = [ x 0 , , x 0 , , ] x_{0} = [x_{0}^{,}, x_{0}^{,,}] x0=[x0,,x0,,]. 在 x 0 , x_{0}^{,} x0, 和 x 0 , , x_{0}^{,,} x0,, 之间,前者直接链接到阶段的末尾,而后者将经过一个密集的块。a partial transition layer 涉及的所有步骤如下:First, the output of dense layers, [ x 0 , , , x 1 , . . . , x k ] [ x_{0}^{,,}, x_{1}, ..., x_{k}] [x0,,,x1,...,xk], will undergo a transition layer. Second, the output of this transition layer, x T x_{T} xT, will be concatenated with x 0 , x_{0}^{,} x0, and undergo another transition layer, and then generate output x U x_{U} xU. CSPDenseNet 的前馈传递和权重更新的方程式分别显示在方程式 3 和 4 中。

x k = w k ∗ [ x 0 , , , x 1 , . . . , x k − 1 ] x T = w T ∗ [ x 0 , , , x 1 , . . . , x k ] x U = w U ∗ [ x 0 , , x T ] (3) \begin{aligned} x_{k} &= w_{k} * [x_{0}^{,,}, x_{1}, ..., x_{k-1}] \\ x_{T} &= w_{T} * [x_{0}^{,,}, x_{1}, ..., x_{k}] \\ x_{U} &= w_{U} * [x_{0}^{,}, x_{T}] \\ \tag{3} \end{aligned} xkxTxU=wk∗[x0,,,x1,...,xk−1]=wT∗[x0,,,x1,...,xk]=wU∗[x0,,xT](3)

w k , = f ( w k , g 0 , , , g 1 , g 2 , . . . , g k − 1 ) w T , = f ( w T , g 0 , , , g 1 , g 2 , . . . , g k ) w U , = f ( w U , g 0 , , g T ) (4) \begin{aligned} w_{k}^{,} &= f(w_{k}, \mathcal{g}_{0}^{,,}, \mathcal{g}_{1}, \mathcal{g}_{2}, ..., \mathcal{g}_{k-1}) \\ w_{T}^{,} &= f(w_{T}, \mathcal{g}_{0}^{,,}, \mathcal{g}_{1}, \mathcal{g}_{2}, ..., \mathcal{g}_{k}) \\ w_{U}^{,} &= f(w_{U}, \mathcal{g}_{0}^{,}, \mathcal{g}_{T}) \\ \tag{4} \end{aligned} wk,wT,wU,=f(wk,g0,,,g1,g2,...,gk−1)=f(wT,g0,,,g1,g2,...,gk)=f(wU,g0,,gT)(4)

We can see that the gradients coming from the dense layers are separately integrated. On the other hand, the feature map x 0 , x_{0}^{,} x0, that did not go through the dense layers is also separately integrated. As to the gradient information for updating weights, both sides do not contain duplicate gradient information that belongs to other sides.

We can see that the gradients coming from the dense layers are separately integrated. On the other hand, the feature map x 0 , x_{0}^{,} x0, that did not go through the dense layers is also separately integrated. 关于用于更新权重的梯度信息,双方都不包含属于另一方的重复的梯度信息。

partial [ˈpɑːʃl]:adj. 局部的,偏爱的,不公平的

Overall speaking, the proposed CSPDenseNet preserves the advantages of DenseNet’s feature reuse characteristics, but at the same time prevents an excessively amount of duplicate gradient information by truncating the gradient flow. This idea is realized by designing a hierarchical feature fusion strategy and used in a partial transition layer.

总体而言,提出的 CSPDenseNet 保留了 DenseNet 的特征重用特性的优点,但同时通过截断梯度流来防止过多的梯度信息重复。通过设计分层特征融合策略来实现此思想,并将其用于 a partial transition layer。

excessively [ɪkˈsesɪvli]:adv. 过分地,极度

Partial Dense Block. The purpose of designing partial dense blocks is to 1.) increase gradient path: Through the split and merge strategy, the number of gradient paths can be doubled. Because of the cross-stage strategy, one can alleviate the disadvantages caused by using explicit feature map copy for concatenation; 2.) balance computation of each layer: usually, the channel number in the base layer of a DenseNet is much larger than the growth rate. Since the base layer channels involved in the dense layer operation in a partial dense block account for only half of the original number, it can effectively solve nearly half of the computational bottleneck; and 3.) reduce memory traffic: Assume the base feature map size of a dense block in a DenseNet is w × h × c w \times h \times c w×h×c, the growth rate is d d d, and there are in total m m m dense layers. Then, the CIO of that dense block is ( c × m ) + ( ( m 2 + m ) × d ) / 2 (c \times m) + ((m^{2} + m) \times d)/2 (c×m)+((m2+m)×d)/2, and the CIO of partial dense block is ( ( c × m ) + ( m 2 + m ) × d ) / 2 ((c \times m) + (m^{2} + m) \times d)/2 ((c×m)+(m2+m)×d)/2. While m m m and d d d are usually far smaller than c c c, a partial dense block is able to save at most half of the memory traffic of a network.

Partial Dense Block. 设计 partial dense blocks 的目的是为了 1.) increase gradient path (增加梯度路径): 通过拆分和合并策略,梯度路径的数量可以增加一倍。由于采用了跨阶段策略,因此可以减轻因使用显式特征图副本进行级联而造成的不利影响。2.) balance computation of each layer (平衡每层的计算量): 通常,DenseNet 的基础层中的通道数远大于增长率。Since the base layer channels involved in the dense layer operation in a partial dense block account for only half of the original number,因此它可以有效解决近一半的计算瓶颈。3.) reduce memory traffic (减少内存流量): 假设 DenseNet 中密集块的基本特征图大小为 w × h × c w \times h \times c w×h×c,增长率为 d d d,总共有 m m m dense layers。那么,那个密集块的 CIO 为 ( c × m ) + ( ( m 2 + m ) × d ) / 2 (c \times m) + ((m^{2} + m) \times d)/2 (c×m)+((m2+m)×d)/2,而 partial dense block 的 CIO 为 ( ( c × m ) + ( m 2 + m ) × d ) / 2 ((c \times m) + (m^{2} + m) \times d)/2 ((c×m)+(m2+m)×d)/2。尽管 m m m 和 d d d 通常远小于 c c c,但 partial dense block 最多可以节省网络一半的内存流量。

explicit [ɪkˈsplɪsɪt]:adj. 明确的,清楚的,直率的,详述的

Figure 3: Different kind of feature fusion strategies. (a) single path DenseNet, (b) proposed CSPDenseNet: transition -> concatenation -> transition, (c) concatenation -> transition, and (d) transition -> concatenation.

Figure 4: Effect of truncating gradient flow for maximizing difference of gradient combination.

截断梯度流对最大化梯度组合差异的影响。

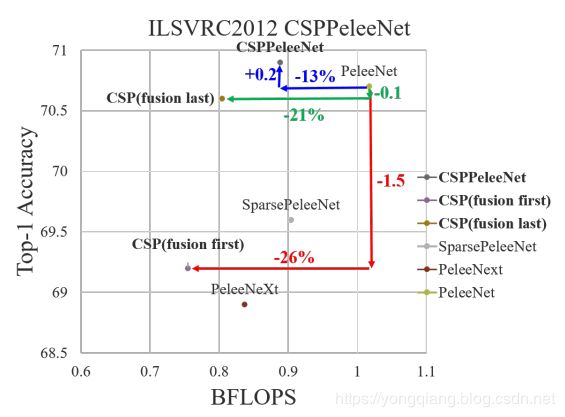

Figure 5: Applying CSPNet to ResNe(X)t.

Partial Transition Layer. The purpose of designing partial transition layers is to maximize the difference of gradient combination. The partial transition layer is a hierarchical feature fusion mechanism, which uses the strategy of truncating the gradient flow to prevent distinct layers from learning duplicate gradient information. Here we design two variations of CSPDenseNet to show how this sort of gradient flow truncating affects the learning ability of a network. 3 (c) and 3 (d) show two different fusion strategies. CSP (fusion first) means to concatenate the feature maps generated by two parts, and then do transition operation. If this strategy is adopted, a large amount of gradient information will be reused. As to the CSP (fusion last) strategy, the output from the dense block will go through the transition layer and then do concatenation with the feature map coming from part 1. If one goes with the CSP (fusion last) strategy, the gradient information will not be reused since the gradient flow is truncated. If we use the four architectures shown in 3 to perform image classification, the corresponding results are shown in Figure 4. It can be seen that if one adopts the CSP (fusion last) strategy to perform image classification, the computation cost is significantly dropped, but the top-1 accuracy only drop 0.1%. On the other hand, the CSP (fusion first) strategy does help the significant drop in computation cost, but the top-1 accuracy significantly drops 1.5%. By using the split and merge strategy across stages, we are able to effectively reduce the possibility of duplication during the information integration process. From the results shown in Figure 4, it is obvious that if one can effectively reduce the repeated gradient information, the learning ability of a network will be greatly improved.

Partial Transition Layer. 设计 partial transition layer 的目的是使梯度组合的差异最大化。partial transition layer 是分层特征融合机制,它使用截断梯度流的策略来防止不同的层学习重复的梯度信息。在这里,我们设计了 CSPDenseNet 的两个变体,以显示这种梯度流截断如何影响网络的学习能力。3 (c) and 3 (d) 示出了两种不同的融合策略。CSP (fusion first) 是指将两部分生成的特征图连接起来,然后进行 transition operation。如果采用这种策略,大量的梯度信息将被重用。对于 CSP (fusion last) 策略,密集块的输出将通过 transition layer,然后与来自 part 1 的特征图进行连接。如果使用 CSP (fusion last) 策略,则梯度信息由于梯度流被截断,因此将不会重用。如果我们使用图 3 中所示的四种架构进行图像分类,则相应的结果如图 4 所示。可以看出,如果采用 CSP (fusion last) 策略进行图像分类,则计算成本将大大降低,但 top-1 的准确性仅下降 0.1%。另一方面,CSP (fusion first) 策略确实有助于显着降低计算成本,但是 top-1 的准确性显着降低了 1.5%。通过跨阶段使用拆分和合并策略,我们能够有效地减少信息集成过程中重复的可能性。从图 4 所示的结果来看,很明显,如果可以有效地减少重复梯度信息,则网络的学习能力将大大提高。

Apply CSPNet to Other Architectures. CSPNet can be also easily applied to ResNet and ResNeXt, the architectures are shown in Figure 5. Since only half of the feature channels are going through Res(X)Blocks, there is no need to introduce the bottleneck layer anymore. This makes the theoretical lower bound of the Memory Access Cost (MAC) when the FLoating-point OPerations (FLOPs) is fixed.

Apply CSPNet to Other Architectures. CSPNet 也可以轻松地应用于 ResNet and ResNeXt,其体系结构如图 5 所示。由于只有一半的功能通道通过 Res(X)Blocks,因此不再需要引入瓶颈层。当固定 FLoating-point OPerations (FLOPs) 时,这使理论上的 Memory Access Cost (MAC) 下限成为可能。

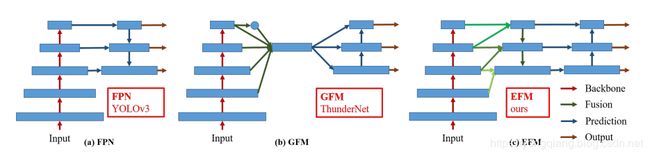

3.2 Exact Fusion Model

Looking Exactly to predict perfectly. We propose EFM that captures an appropriate Field of View (FoV) for each anchor, which enhances the accuracy of the one-stage object detector. For segmentation tasks, since pixel-level labels usually do not contain global information, it is usually more preferable to consider larger patches for better information retrieval [22]. However, for tasks like image classification and object detection, some critical information can be obscure when observed from image-level and bounding box-level labels. Li et al. [15] found that CNN can be often distracted when it learns from image-level labels and concluded that it is one of the main reasons that two-stage object detectors outperform one-stage object detectors.

Looking Exactly to predict perfectly (准确地寻找以进行完美预测). 我们建议使用 EFM 为每个锚点捕获合适的视场 (FoV),从而提高 one-stage 目标检测器的准确性。对于分割任务,由于像素级标签通常不包含全局信息,因此通常更可取的是考虑使用更大的块以获得更好的信息检索 [22]。但是,对于诸如图像分类和目标检测之类的任务,从图像级别和边框级别的标签中观察时,某些关键信息可能会模糊不清。Li et al. [15] 发现,当从图像级标签中学习时,CNN 经常会分散注意力,并得出结论,这是两级目标检测器优于一级目标检测器的主要原因之一。

distract [dɪˈstrækt]:vt. 转移,分心

Aggregate Feature Pyramid. The proposed EFM is able to better aggregate the initial feature pyramid. The EFM is based on YOLOv3 [29], which assigns exactly one bounding-box prior to each ground truth object. Each ground truth bounding box corresponds to one anchor box that surpasses the threshold IoU. If the size of an anchor box is equivalent to the FoV of the grid cell, then for the grid cells of the s t h s^{th} sth scale, the corresponding bounding box will be lower bounded by the ( s − 1 ) t h {(s - 1)}^{th} (s−1)th scale and upper bounded by the ( s + 1 ) t h {(s + 1)}^{th} (s+1)th scale. Therefore, the EFM assembles features from the three scales.

Aggregate Feature Pyramid. 提出的 EFM 能够更好地聚合初始特征金字塔。The EFM is based on YOLOv3 [29], which assigns exactly one bounding-box prior to each ground truth object. Each ground truth bounding box corresponds to one anchor box that surpasses the threshold IoU. If the size of an anchor box is equivalent to the FoV of the grid cell, then for the grid cells of the s t h s^{th} sth scale, the corresponding bounding box will be lower bounded by the ( s − 1 ) t h {(s - 1)}^{th} (s−1)th scale and upper bounded by the ( s + 1 ) t h {(s + 1)}^{th} (s+1)th scale. 因此,EFM 会从三个比例尺中组合特征。

Balance Computation. Since the concatenated feature maps from the feature pyramid are enormous, it introduces a great amount of memory and computation cost. To alleviate the problem, we incorporate the Maxout technique to compress the feature maps.

Balance Computation. 由于来自特征金字塔的串联特征图非常大,因此会引入大量的内存和计算成本。为了缓解该问题,我们采用了 Maxout 技术来压缩特征图。

enormous [ɪˈnɔːməs]:adj. 庞大的,巨大的,凶暴的,极恶的

multilabel classification:多标签分类

cross entropy:交叉熵

prediction [prɪˈdɪkʃn]:n. 预报,预言

independent [ˌɪndɪˈpendənt]:adj. 独立的,单独的,无党派的,不受约束的 n. 独立自主者,无党派者

deliberately [dɪˈlɪbərətli]:adv. 故意地,谨慎地,慎重地

segmentation [ˌseɡmenˈteɪʃn]:n. 分割,割断,细胞分裂

justification [ˌdʒʌstɪfɪˈkeɪʃn]:n. 理由,辩护,认为有理,认为正当,释罪

Figure 6: Different feature pyramid fusion strategies. (a) Feature Pyramid Network (FPN): fuse features from current scale and previous scale. (b) Global Fusion Model (GFM): fuse features of all scales. (c) Exact Fusion Model (EFM): fuse features depand on anchor size.

4 Experiments

We will use ImageNet’s image classification dataset [2] used in ILSVRC 2012 to validate our proposed CSPNet. Besides, we also use the MS COCO object detection dataset [18] to verify the proposed EFM. Details of the proposed architectures will be elaborated in the appendix.

提议的体系结构的细节将在附录中详细说明。

4.1 Implementation Details

ImageNet. In ImageNet image classification experiments, all hyper-parameters such as training steps, learning rate schedule, optimizer, data augmentation, etc., we all follow the settings defined in Redmon et al. [29]. For ResNet-based models and ResNeXt-based models, we set 8000,000 training steps. As to DenseNet-based models, we set 1,600,000 training steps. We set the initial learning rate 0.1 and adopt the polynomial decay learning rate scheduling strategy. The momentum and weight decay are respectively set as 0.9 and 0.005. All architectures use a single GPU to train universally in the batch size of 128. Finally, we use the validation set of ILSVRC 2012 to validate our method.

universally [ˌjuːnɪˈvɜːsəli]:adv. 普遍地,人人,到处

momentum [məˈmentəm]:n. 势头,动量,动力,冲力

polynomial [.pɒli'nəʊmiəl]:adj. 多项式的,多词学名的 n. 多项式,多词学名

MS COCO. In MS COCO object detection experiments, all hyper-parameters also follow the settings defined in Redmon et al. [29]. Altogether we did 500,000 training steps. We adopt the step decay learning rate scheduling strategy and multiply with a factor 0.1 at the 400,000 steps and the 450,000 steps, respectively. The momentum and weight decay are respectively set as 0.9 and 0.0005. All architectures use a single GPU to execute multi-scale training in the batch size of 64. Finally, the COCO test-dev set is adopted to verify our method.

4.2 Ablation Experiments

Ablation study of CSPNet on ImageNet. In the ablation experiments conducted on the CSPNet, we adopt PeleeNet [37] as the baseline, and the ImageNet is used to verify the performance of the CSPNet. We use different partial ratios γ \gamma γ and the different feature fusion strategies for ablation study. Table 1 shows the results of ablation study on CSPNet. In Table 1, SPeleeNet and PeleeNeXt are, respectively, the architectures that introduce sparse connection and group convolution to PeleeNet. As to CSP (fusion first) and CSP (fusion last), they are the two strategies proposed to validate the benefits of a partial transition.

Ablation study of CSPNet on ImageNet. 在 CSPNet 上进行的消融实验中,我们采用 PeleeNet [37] 作为基准,并使用 ImageNet 来验证 CSPNet 的性能。我们对消融研究使用不同的 partial ratios γ \gamma γ 和不同的特征融合策略。Table 1 显示了在 CSPNet 上进行消融研究的结果。In Table 1,SPeleeNet and PeleeNeXt 分别是将稀疏连接和组卷积引入 PeleeNet 的体系结构。对于 CSP (fusion first) and CSP (fusion last),它们是验证 partial transition 的好处的两种策略。

From the experimental results, if one only uses the CSP (fusion first) strategy on the cross-stage partial dense block, the performance can be slightly better than SPeleeNet and PeleeNeXt. However, the partial transition layer designed to reduce the learning of redundant information can achieve very good performance. For example, when the computation is cut down by 21%, the accuracy only degrades by 0.1%. One thing to be noted is that when γ = 0.25 \gamma = 0.25 γ=0.25, the computation is cut down by 11%, but the accuracy is increased by 0.1%. Compared to the baseline PeleeNet, the proposed CSPPeleeNet achieves the best performance, it can cut down 13% computation, but at the same time upgrade the accuracy by 0.2%. If we adjust the partial ratio to γ = 0.25 \gamma = 0.25 γ=0.25, we are able to upgrade the accuracy by 0.8% and at the same time cut down 3% computation.

从实验结果来看,如果仅在跨阶段 partial dense block 上使用 CSP (fusion first) 策略,则性能可能会比 SPeleeNet and PeleeNeXt 稍好。但是,设计用于减少冗余信息学习的 partial transition layer 可以实现非常好的性能。例如,当计算减少 21% 时,精度只会降低 0.1%。要注意的一件事是,当 γ = 0.25 \gamma = 0.25 γ=0.25 时,计算量减少了 11%,但准确性提高了 0.1%。与 baseline PeleeNet 相比,proposed CSPPeleeNet 达到了最佳性能,可以减少 13% 的计算,但同时将精度提高了 0.2%。如果我们将部分比率调整为 γ = 0.25 \gamma = 0.25 γ=0.25,则可以将精度提高 0.8%,同时减少 3% 的计算量。

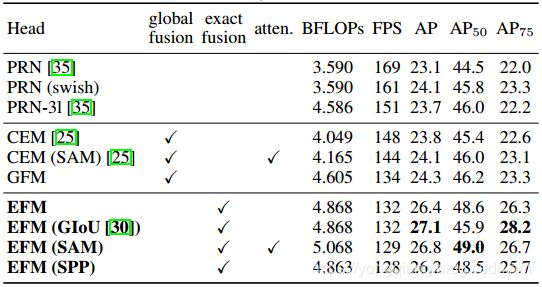

Ablation study of EFM on MS COCO. Next, we shall conduct an ablation study of EFM based on the MS COCO dataset. In this series of experiments, we compare three different feature fusion strategies shown in Figure 6. We choose two state-of-the-art lightweight models, PRN [35] and ThunderNet [25], to make comparison. PRN is the feature pyramid architecture used for comparison, and the ThunderNet with Context Enhancement Module (CEM) and Spatial Attention Module (SAM) are the global fusion architecture used for comparison. We design a Global Fusion Model (GFM) to compare with the proposed EFM. Moreover, GIoU [30], SPP, and SAM are also applied to EFM to conduct an ablation study. All experiment results listed in Table 2 adopt CSPPeleeNet as the backbone.

Ablation study of EFM on MS COCO. 接下来,我们将基于 MS COCO 数据集进行 EFM 的消融研究。在这一系列实验中,我们比较了图 6 中所示的三种不同的特征融合策略。我们选择了两个最新的轻量级模型 PRN [35] and ThunderNet [25] 进行比较。PRN 是用于比较的特征金字塔体系结构,而带有 Context Enhancement Module (CEM) and Spatial Attention Module (SAM) 的 ThunderNet 是用于比较的全局融合体系结构。我们设计了一个 Global Fusion Model (GFM) 与提出的 EFM 进行比较。此外,GIoU [30], SPP, and SAM 也被应用于 EFM 进行消融研究。表 2 中列出的所有实验结果均以 CSPPeleeNet 为骨干。

As reflected in the experiment results, the proposed EFM is 2 fps slower than GFM, but its AP and AP 50 \text{AP}_{50} AP50 are significantly upgraded by 2.1% and 2.4%, respectively. Although the introduction of GIoU can upgrade AP by 0.7%, the AP 50 \text{AP}_{50} AP50 is, however, significantly degraded by 2.7%. However, for edge computing, what really matters is the number and locations of the objects rather than their coordinates. Therefore, we will not use GIoU training in the subsequent models. The attention mechanism used by SAM can get a better frame rate and AP compared with SPP’s increase of FoV mechanism, so we use EFM (SAM) as the final architecture. In addition, although the CSPPeleeNet with swish activation can improve AP by 1%, its operation requires a lookup table on the hardware design to accelerate, we finally also abandoned the swish activation function.

从实验结果可以看出,提出的 EFM 比 GFM 慢 2 fps,但其 AP 和 AP 50 \text{AP}_{50} AP50 分别显著提高了 2.1% 和 2.4%。尽管引入 GIoU 可以使 AP 升级 0.7%,但是 AP 50 \text{AP}_{50} AP50 却大大降低了 2.7%。但是,对于边缘计算,真正重要的是目标的数量和位置,而不是其坐标。因此,我们将不在后续模型中使用 GIoU 训练。与 SPP 增加 FoV 机制相比,SAM 使用的注意力机制可以获得更好的帧速率和 AP,因此我们将 EFM (SAM) 作为最终架构。此外,尽管具有 swish activation 功能的 CSPPeleeNet 可以将 AP 提高 1%,但其运行需要硬件设计上的查找表来加速,我们最终也放弃了 swish activation 功能。

Table 1: Ablation study of CSPNet on ImageNet.

Table 2: Ablation study of EFM on MS COCO.

4.3 ImageNet Image Classification

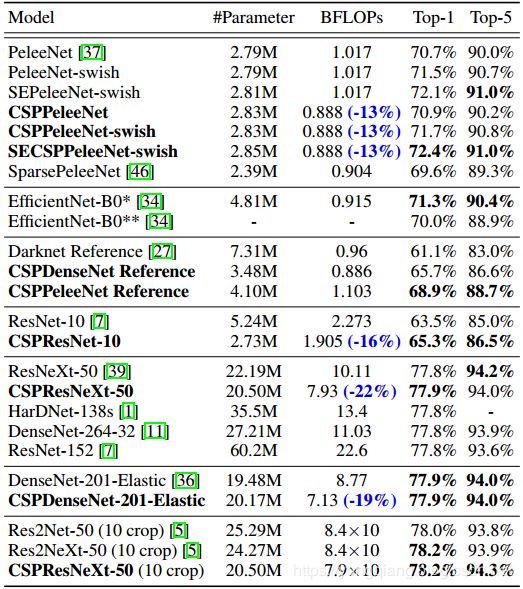

We apply the proposed CSPNet to ResNet-10 [7], ResNeXt-50 [39], PeleeNet [37], and DenseNet-201-Elastic [36] and compare with state-of-the-art methods. The experimental results are shown in Table 3.

elastic [ɪˈlæstɪk]:adj. 有弹性的,灵活的,易伸缩的 n. 松紧带,橡皮圈

It is confirmed by experimental results that no matter it is ResNet-based models, ResNeXt-based models, or DenseNet-based models, when the concept of CSPNet is introduced, the computational load is reduced at least by 10% and the accuracy is either remain unchanged or upgraded. Introducing the concept of CSPNet is especially useful for the improvement of lightweight models. For example, compared to ResNet-10, CSPResNet-10 can improve accuracy by 1.8%. As to PeleeNet and DenseNet-201-Elastic, CSPPeleeNet and CSPDenseNet-201-Elastic can respectively cut down 13% and 19% computation, and either upgrade a little bit or maintain the accuracy. As to the case of ResNeXt-50, CSPResNeXt-50 can cut down 22% computation and upgrade top-1 accuracy to 77.9%.

实验结果证实,无论是基于 ResNet 的模型,基于 ResNeXt 的模型,还是基于 DenseNet 的模型,引入 CSPNet 的概念后,计算量至少减少了 10%,并且精度保持不变或提高。引入 CSPNet 的概念对于改进轻量级模型特别有用。例如,与 ResNet-10 相比,CSPResNet-10 可以将准确性提高 1.8%。对于 PeleeNet 和 DenseNet-201-Elastic,CSPPeleeNet 和 CSPDenseNet-201-Elastic 可以分别减少 13% 和 19% 的计算量,或者稍加升级或保持准确性。对于 ResNeXt-50,CSPResNeXt-50 可以减少 22% 的计算量,并将 top-1 精度提高到 77.9%。

If compared with the state-of-the-art lightweight model - EfficientNet-B0, although it can achieve 76.8% accuracy when the batch size is 2048, when the experiment environment is the same as ours, that is, only one GPU is used, EfficientNetB0 can only reach 70.0% accuracy. In fact, the swish activation function and SE block used by EfficientNet-B0 are not efficient on the mobile GPU. A similar analysis has been conducted during the development of EfficientNet-EdgeTPU.

与先进的轻量级模型 EfficientNet-B0 相比,尽管在批量大小为 2048 时可以达到 76.8% 的精度,但是在与我们相同的实验环境下,即仅使用一个 GPU,EfficientNetB0 只能达到 70.0% 的准确性。实际上,EfficientNet-B0 使用的 swish activation function and SE block 在移动 GPU 上效率不高。在 EfficientNet-EdgeTPU 的开发过程中进行了类似的分析。

Here, for demonstrating the learning ability of CSPNet, we introduce swish and SE into CSPPeleeNet and then make a comparison with EfficientNet-B0*. In this experiment, SECSPPeleeNet-swish cut down computation by 3% and upgrade 1.1% top-1 accuracy.

在这里,为了证明 CSPNet 的学习能力,我们将 swish and SE 引入 CSPPeleeNet,然后与 EfficientNet-B0* 进行比较。在此实验中,SECSPPeleeNet-swish 将计算量减少了 3%,并将 top-1 准确性提高了 1.1%。

Proposed CSPResNeXt-50 is compared with ResNeXt-50 [39], ResNet-152 [7], DenseNet-264 [11], and HarDNet-138s [1], regardless of parameter quantity, amount of computation, and top-1 accuracy, CSPResNeXt-50 all achieve the best result. As to the 10-crop test, CSPResNeXt-50 also outperforms Res2Net-50 [5] and Res2NeXt-50 [5].

提出的 CSPResNeXt-50 与 ResNeXt-50 [39], ResNet-152 [7], DenseNet-264 [11], and HarDNet-138s [1] 进行比较,无论参数数量,计算量和 top-1 精度如何,CSPResNeXt-50 均达到最佳效果。至于 10-crop 的测试,CSPResNeXt-50 也优于 Res2Net-50 [5] 和 Res2NeXt-50 [5]。

Table 3: Compare with state-of-the-art methods on ImageNet.

swish [swɪʃ]:adj. 华贵入时的,豪华的 n. 快速的空中移动 (或挥动、摆动等),呼呼声 v. 唰地 (或嗖地、呼地等) 挥动,(使) 快速空中移动

squeeze [skwiːz]:v. 挤压,挤出,捏,(使) 挤入 n. 挤压,塞,捏,榨出的液体

excitation [ˌeksaɪ'teɪʃən]:n. 激发,激发,激励,激感 (现象)

1 EfficientNet* is implemented by Darknet framework.

2 EfficientNet** is trained by official code with batch size 256.

3 Swish activation function is presented by [4, 26].

4 Squeeze-and-excitation (SE) network is presented by [10].

4.4 MS COCO Object Detection

In the task of object detection, we aim at three targeted scenarios: (1) real-time on GPU: we adopt CSPResNeXt50 with PANet (SPP) [20]; (2) real-time on mobile GPU: we adopt CSPPeleeNet, CSPPeleeNet Reference, and CSPDenseNet Reference with the proposed EFM (SAM); and (3) real-time on CPU: we adopt CSPPeleeNet Reference and CSPDenseNet Reference with PRN [35]. The comparisons between the above models and the state-of-the-art methods are listed in Table 4. As to the analysis on the inference speed of CPU and mobile GPU will be detailed in the next subsection.

在目标检测任务中,我们针对三个目标场景:(1) 在 GPU 上实时:我们将 CSPResNeXt50 与 PANet (SPP) 结合使用 [20];(2) 在移动 GPU 上实时:我们将 CSPPeleeNet, CSPPeleeNet Reference, and CSPDenseNet Reference with the proposed EFM (SAM) 结合使用;(3) 在 CPU 上实时:我们采用 CSPPeleeNet Reference and CSPDenseNet Reference with PRN [35]。表 4 中列出了上述模型与最新方法之间的比较。关于 CPU 和移动 GPU 推理速度的分析将在下一部分中详细介绍。

scenario [səˈnɑːriəʊ]:n. 方案,情节,剧本,设想

If compared to object detectors running at 30~100 fps, CSPResNeXt50 with PANet (SPP) achieves the best performance in AP, AP 50 \text{AP}_{50} AP50 and AP 75 \text{AP}_{75} AP75. They receive, respectively, 38.4%, 60.6%, and 41.6% detection rates. If compared to state-ofthe-art LRF [38] under the input image size 512$\times$512, CSPResNeXt50 with PANet (SPP) outperforms ResNet101 with LRF by 0.7% AP, 1.5% AP 50 \text{AP}_{50} AP50 and 1.1% AP 75 \text{AP}_{75} AP75. If compared to object detectors running at 100~200 fps, CSPPeleeNet with EFM (SAM) boosts 12.1% AP 50 \text{AP}_{50} AP50 at the same speed as Pelee [37] and increases 4.1% [37] at the same speed as CenterNet [45].

与以 30~100 fps 运行的物体检测器相比,CSPResNeXt50 with PANet (SPP) 在 AP, AP 50 \text{AP}_{50} AP50 and AP 75 \text{AP}_{75} AP75 中达到最佳性能。他们分别获得 38.4%, 60.6%, and 41.6% 的检出率。如果与最新的 LRF [38] 在输入图像大小 512$\times$512 下进行比较,则 CSPResNeXt50 with PANet (SPP) 相比于带有 LRF 的 ResNet101 分别高 0.7% AP, 1.5% AP 50 \text{AP}_{50} AP50 and 1.1% AP 75 \text{AP}_{75} AP75。与运行在 100~200 fps 的物体检测器相比,CSPPeleeNet with EFM 以与 Pelee [37] 相同的速度提高 AP 50 \text{AP}_{50} AP50 12.1%,以与 CenterNet [45] 相同的速度提高 4.1% [37]。

If compared to very fast object detectors such as ThunderNet [25], YOLOv3-tiny [29], and YOLOv3-tiny-PRN [35], the proposed CSPDenseNetb Reference with PRN is the fastest. It can reach 400 fps frame rate, i.e., 133 fps faster than ThunderNet with SNet49. Besides, it gets 0.5% higher on AP 50 \text{AP}_{50} AP50. If compared to ThunderNet146, CSPPeleeNet Reference with PRN (3l) increases the frame rate by 19 fps while maintaining the same level of AP 50 \text{AP}_{50} AP50.

如果与 ThunderNet [25], YOLOv3-tiny [29], and YOLOv3-tiny-PRN [35] 等非常快速的物体检测器进行比较,则the proposed CSPDenseNetb Reference with PRN 是最快的。它可以达到 400 fps 帧速率,即比使用 SNet49 的 ThunderNet 快 133 fps。此外,它的 AP 50 \text{AP}_{50} AP50 值提高了 0.5%。如果与 ThunderNet146 进行比较,CSPPeleeNet Reference with PRN (3l) 将帧速率提高了 19 fps,同时保持了 AP 50 \text{AP}_{50} AP50 的相同水平。

Table 4: Compare with state-of-the-art methods on MSCOCO Object Detection.

1 The table is separated into four parts, <100 fps, 100∼200 fps, 200∼300 fps, and >300 fps.

2 We mainly focus on FPS and AP 50 \text{AP}_{50} AP50 since almost all applications need fast inference to locate and count objects.

3 Inference speed are tested on GTX 1080ti with batch size equals to 1 if possible, and our models are tested using Darknet [28].

4 All results are obtained by COCO test-dev set except for TTFNet [23] models which are verified on minval5k set.

4.5 Analysis

Computational Bottleneck. Figure 7 shows the BLOPS of each layer of PeleeNet-YOLO, PeleeNet-PRN and proposed CSPPeleeNet-EFM. From Figure 7, it is obvious that the computational bottleneck of PeleeNet-YOLO occurs when the head integrates the feature pyramid. The computational bottleneck of PeleeNet-PRN occurs on the transition layers of the PeleeNet backbone. As to the proposed CSPPeleeNet-EFM, it can balance the overall computational bottleneck, which reduces the PeleeNet backbone 44% computational bottleneck and reduces PeleeNet-YOLO 80% computational bottleneck. Therefore, we can say that the proposed CSPNet can provide hardware with a higher utilization rate.

Computational Bottleneck. Figure 7 显示了 PeleeNet-YOLO, PeleeNet-PRN and proposed CSPPeleeNet-EFM 每一层的 BLOPS。从图 7 中可以明显看出,当头部集成了特征金字塔时,PeleeNet-YOLO 就会出现计算瓶颈。PeleeNet-PRN 的计算瓶颈出现在 PeleeNet 主干的过渡层上。对于提出的 CSPPeleeNet-EFM,它可以平衡总体计算瓶颈,从而减少了 PeleeNet 主干网 44% 的计算瓶颈,减少了 PeleeNet-YOLO 80% 的计算瓶颈。因此,可以说,提出的 CSPNet 可以为硬件提供更高的利用率。

bottleneck [ˈbɒtlnek]:n. 瓶颈,障碍物

vanilla [və'nɪlə]:adj. 有香子兰香味的,香草味的,普通的,寻常的 n. 香草醛

Memory Traffic. Figure 8 shows the size of each layer of ResNeXt50 and the proposed CSPResNeXt50. The CIO of the proposed CSPResNeXt (32.6M) is lower than that of the original ResNeXt50 (34.4M). In addition, our CSPResNeXt50 removes the bottleneck layers in the ResXBlock and maintains the same numbers of the input channel and the output channel, which is shown in Ma et al. [24] that this will have the lowest MAC and the most efficient computation when FLOPs are fixed. The low CIO and FLOPs enable our CSPResNeXt50 to outperform the vanilla ResNeXt50 by 22% in terms of computations.

Memory Traffic. 图 8 显示了 ResNeXt50 and the proposed CSPResNeXt50 每一层的大小。the proposed CSPResNeXt (32.6M) 的 CIO 低于 the original ResNeXt50 (34.4M)。另外,我们的 CSPResNeXt50 消除了 ResXBlock 中的瓶颈层,并保持了相同数量的输入通道和输出通道,这在 Ma et al. [24] 的文章中已显示,当固定 FLOPs 时,它将具有最低的 MAC 和最有效的计算。低的 CIO 和 FLOPs 使我们的 CSPResNeXt50 在计算方面比普通的 ResNeXt50 高出 22%。

Figure 7: Computational bottleneck of PeleeNet-YOLO, PeleeNet-PRN and CSPPeleeNet-EFM.

Figure 8: Input size and output size of ResNeXt and proposed CSPResNeXt.

Inference Rate. We further evaluate whether the proposed methods are able to be deployed on real-time detectors with mobile GPU or CPU. Our experiments are based on NVIDIA Jetson TX2 and Intel Core i9-9900K, and the inference rate on CPU is evaluated with the OpenCV DNN module. We do not adopt model compression or quantization for fair comparisons. The results are shown in Table 5.

Inference Rate. 我们进一步评估了所提出的方法是否能够部署在带有移动 GPU 或 CPU 的实时检测器上。我们的实验基于 NVIDIA Jetson TX2 和 Intel Core i9-9900K,并且使用 OpenCV DNN 模块评估了 CPU 的推理率。我们不采用模型压缩或量化进行公平比较。结果示于 Table 5。

quantization [,kwɑntɪ'zeʃən]:n. 量子化,分层,数字化

fair [feə(r)]:adj. 公平的,美丽的,白皙的,晴朗的 adv. 公平地,直接地,清楚地 vi. 转晴 n. 展览会,市集,美人

If we compare the inference speed executed on CPU, CSPDenseNetb Ref.-PRN receives higher AP 50 \text{AP}_{50} AP50 than SNet49-TunderNet, YOLOv3-tiny, and YOLOv3-tiny-PRN, and it also outperforms the above three models by 55 fps, 48 fps, and 31 fps, respectively, in terms of frame rate. On the other hand, CSPPeleeNet Ref.-PRN (3l) reaches the same accuracy level as SNet146-ThunderNet but significantly upgrades the frame rate by 20 fps on CPU.

如果我们比较在 CPU 上执行的推理速度,CSPDenseNetb Ref.-PRN 的 AP 50 \text{AP}_{50} AP50 高于 SNet49-TunderNet, YOLOv3-tiny, and YOLOv3-tiny-PRN,并且它的性能 (帧速率方面) 也比上述三个模型分别高 55 fps, 48 fps, and 31 fps。另一方面,CSPPeleeNet Ref.-PRN (3l) 达到与 SNet146-ThunderNet 相同的准确度,但 CPU 的帧速率显着提高了 20 fps。

If we compare the inference speed executed on mobile GPU, our proposed EFM will be a good model to use. Since our proposed EFM can greatly reduce the memory requirement when generating feature pyramids, it is definitely beneficial to function under the memory bandwidth restricted mobile environment. For example, CSPPeleeNet Ref.-EFM (SAM) can have a higher frame rate than YOLOv3-tiny, and its AP 50 \text{AP}_{50} AP50 is 11.5% higher than YOLOv3-tiny, which is significantly upgraded. For the same CSPPeleeNet Ref. backbone, although EFM (SAM) is 62 fps slower than PRN (3l) on GTX 1080ti, it reaches 41 fps on Jetson TX2, 3 fps faster than PRN (3l), and at AP50 4.6% growth.

如果我们比较在移动 GPU 上执行的推理速度,那么我们提出的 EFM 将是一个很好的模型。由于我们提出的 EFM 可以大大减少生成特征金字塔时的内存需求,因此在内存带宽受限的移动环境下运行绝对有利。例如,CSPPeleeNet Ref.-EFM (SAM) 的帧速率可以比 YOLOv3-tiny 高,并且其 AP 50 \text{AP}_{50} AP50 比 YOLOv3-tiny 高 11.5%。对于相同的 CSPPeleeNet Ref. 骨干网,尽管 EFM (SAM) 在 GTX 1080ti 上比 PRN (3l) 慢 62 fps,但在 Jetson TX2 上达到 41 fps,比 PRN (3l) 快 3 fps,并且 AP50 增长 4.6%。

Table 5: Inference rate on mobile GPU (mGPU) and CPU real-time object detectors (in fps).

5 Conclusion

We have proposed the CSPNet that enables state-of-the-art methods such as ResNet, ResNeXt, and DenseNet to be light-weighted for mobile GPUs or CPUs. One of the main contributions is that we have recognized the redundant gradient information problem that results in inefficient optimization and costly inference computations. We have proposed to utilize the cross-stage feature fusion strategy and the truncating gradient flow to enhance the variability of the learned features within different layers. In addition, we have proposed the EFM that incorporates the Maxout operation to compress the features maps generated from the feature pyramid, which largely reduces the required memory bandwidth and thus the inference is efficient enough to be compatible with edge computing devices. Experimentally, we have shown that the proposed CSPNet with the EFM significantly outperforms competitors in terms of accuracy and inference rate on mobile GPU and CPU for real-time object detection tasks.

我们已经提出了 CSPNet,它可以使诸如 ResNet, ResNeXt, and DenseNet 之类的最新方法轻量化以用于移动 GPU 或 CPU。主要贡献之一是,我们已经认识到冗余梯度信息问题,该问题导致效率低下的优化和昂贵的推理计算。我们提出了利用跨阶段特征融合策略和截断梯度流来增强不同层中学习特征的可变性。此外,我们提出了结合了 Maxout 操作的 EFM,以压缩从特征金字塔生成的特征图,从而大大减少了所需的内存带宽,因此推理效率很高,足以与边缘计算设备兼容。通过实验,我们已经证明,提出的带有 EFM 的 CSPNet 在用于实时对象检测任务的移动 GPU 和 CPU 的准确性和推断率方面明显优于竞争对手。

References

[1] HarDNet: A Low Memory Traffic Network

[14] CornerNet-Lite: Efficient Keypoint Based Object Detection

[24] ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design

[35] Enriching Variety of Layer-wise Learning Information by Gradient Combination

[37] Pelee: A Real-Time Object Detection System on Mobile Devices

[39] Aggregated Residual Transformations for Deep Neural Networks

WORDBOOK

mean Average Precision,mAP:平均精度均值

floating point operations per second,FLOPS

frame rate or frames per second,FPS:每秒帧数

hertz,Hz:赫兹 (频率单位)

billion,Bn

operations,Ops

configuration,cfg

AP small,AP_S

AP medium,AP_M

AP large,AP_L

Feature Pyramid Network,FPN

KEY POINTS

https://pjreddie.com/publications/

https://github.com/AlexeyAB/darknet

https://pjreddie.com/darknet/

OpenCV DNN module