通过python处理解析accesslog日志文件,kettle抽取数据并做PV/UV的统计实现

处理流程

- 记录accesslog日志

- 切割整理accesslog日志

- 入库数据

- 数据抽取、分割

- 制作报表

适用场景

- accesslog日志记录了比较全面的访问日志。针对访问日志进行不同接口/页面/用户/终端的PV/UV统计,或者数据报表统计。

- 业务log日志中记录了比较全面的业务节点数据,针对这些节点数据做统计处理。

ps: 当然日志也可以用==ELK==这套框架进行分析,但是对于数据的整合重组可能需要额外处理。详见《利用logstash6.4.2监控access访问日志并切割,使用geoip插件分析ip地址在kibana控制台形成用户热点地图》

基本准备

- tomcat服务器(将access.log日志按需进行配置,也可以其他服务器只要能记录访问日志即可)

- python(解析日志,一般linux/mac自带。搭建成本低)

- 数据库(可以是RDBMS也可以是NoSql,主要用来存储数据)

- kettle(ETL工具,可做数据抽取、转移、加载从而建立数据仓库。比较方便,也可通过其他方式进行抽取)

- cboard(图形报表展示工具,可配置数据源进行数据抽象并报表展示)

配置、代码说明

- tomcat日志配置,打开 /opt/tomcat/apache-tomcat-7.0.85/conf/server.xml文件最下方有如下配置

tomcat日志默认每天记录一份日志。pattern代表了访问日志所记录的信息,除了基本的参数配置外,还可以根据自身程序的请求参数做额外处理,如上的 %{auth}i是从请求头中获取用户等信息。参考官方说明如下:(上半部分是固定配置,下半部分是自定义配置)

Values for the pattern attribute are made up of literal text strings, combined with pattern identifiers prefixed by the "%" character to cause replacement by the corresponding variable value from the current request and response. The following pattern codes are supported:

%a - Remote IP address

%A - Local IP address

%b - Bytes sent, excluding HTTP headers, or '-' if zero

%B - Bytes sent, excluding HTTP headers

%h - Remote host name (or IP address if resolveHosts is false)

%H - Request protocol

%l - Remote logical username from identd (always returns '-')

%m - Request method (GET, POST, etc.)

%p - Local port on which this request was received

%q - Query string (prepended with a '?' if it exists)

%r - First line of the request (method and request URI)

%s - HTTP status code of the response

%S - User session ID

%t - Date and time, in Common Log Format

%u - Remote user that was authenticated (if any), else '-'

%U - Requested URL path

%v - Local server name

%D - Time taken to process the request, in millis

%T - Time taken to process the request, in seconds

%I - Current request thread name (can compare later with stacktraces)

There is also support to write information from the cookie, incoming header, the Session or something else in the ServletRequest. It is modeled after the Apache HTTP Server log configuration syntax:

%{xxx}i for incoming headers

%{xxx}o for outgoing response headers

%{xxx}c for a specific cookie

%{xxx}r xxx is an attribute in the ServletRequest

%{xxx}s xxx is an attribute in the HttpSessi

- 建立数据库表,记录匹配规则以及存储解析的日志,建表如下:

# 用来存储log解析规则,按照不同请求路径划分

CREATE TABLE "T_ACCESSLOG_URL"

("ID" VARCHAR2(32),

"URL_PATTERN" VARCHAR2(2000), //请求路径唯一标记

"URL_DEMO" VARCHAR2(500),

"URL_TYPE" VARCHAR2(4),

"GMT_CREATE" VARCHAR2(32),

"MATCH_TYPE" VARCHAR2(4),

"URL_FROM" VARCHAR2(32),

"FIELD_PATTERN" VARCHAR2(2000), //log解析所需字段表达式

"CHANNEL" VARCHAR2(100),

"BLACKLIST" VARCHAR2(500), //黑白名单

"USERAGENT_PATTERN" VARCHAR2(2000), //代理过滤

"RETRY" VARCHAR2(4),

"REMARK" VARCHAR2(512)

)

# 用来存储解析后的数据

CREATE TABLE "T_ACCESSLOG_APP"

("ID" NUMBER,

"IP" VARCHAR2(100), //ip

"LOGTIME" VARCHAR2(100), //记录时间

"REQUESTED" VARCHAR2(500), //请求地址

"STATUS" VARCHAR2(40), //状态

"BANDWIDTH" VARCHAR2(40), //手机屏幕宽度

"REFERRER" VARCHAR2(1024), //

"USERAGENT" VARCHAR2(2000), //请求头

"FROMIP" VARCHAR2(100), //从哪里来

"PROJECTID" VARCHAR2(200), //业务标记

"FILENAME" VARCHAR2(50), //文件名称

"CHANNEL" VARCHAR2(50), //渠道(h5,ios,android,wap,web)

"CHANNELNO" VARCHAR2(50), //

"CREATE_TIME" VARCHAR2(50), //创建时间

"USERID" VARCHAR2(64), //用户id

"LOGTIME_FT" VARCHAR2(32) NOT NULL ENABLE,

"URL_TYPE" VARCHAR2(4),

"TAB" VARCHAR2(32),

"APP" VARCHAR2(32),

"APP_VERSION" VARCHAR2(32), //app版本号

"DEVICE_ID" VARCHAR2(128),

"CHANNEL_SOURCE" VARCHAR2(64), //其他

"CHANNEL_FROM" VARCHAR2(64)

)

表1的规则数据插入如下:

insert into t_accesslog_url

(URL_PARTTERN,URL_DEMO,URL_TYPE,GMT_CREATE,MATCH_TYPE,URL_FROM,FIELD_PATTERN,CHANNEL,BALCKLIST,USERAGENT_PATTERN,RETRY,REMARK)

values('^/bbs/home/top',null,4444,

sysdate,2,'api','[ {"pattern": "(/id/[A-Za-z0-9_]{0,32})", "split": "/id/", "field_name": "PROJECTID"}, {"pattern": "(/u/[A-Za-z0-9_]{0,32})",

"split": "/u/", "field_name": "USERID"}, {"pattern": "(/tab/[A-Za-z0-9_]{0,32})", "split": "/tab/", "field_name": "TAB"}, {"pattern": "(/app/[A-Za-z0-9_]{0,32})", "split": "/app/", "field_name": "APP","replace":{"ios":"app-iphone","android":"app-android","detail3":"","detail":""}}, {"pattern": "(/version/[A-Za-z0-9_.]{0,32})", "split": "/version/", "field_name": "APP_VERSION"}, {"pattern": "(/deviceid/[A-Za-z0-9_.]{0,32})", "split": "/deviceid/", "field_name": "DEVICE_ID"} ] ',null,null,null,0,'社区个人主页')

# URL_PARTTERN 代表每次log记录的请求项目地址,排除个性化url参数

# FIELD_PATTERN 代表解析log中的哪些数据,及正则匹配规则(这里和python的解析方式要对应)

- python 脚本制作定时任务,处理昨天的log汇总日志

# -*- coding:utf-8 -*-

__author__ = "**"

__email__ = "**"

__license__ = "None"

# import MySQLdb

import re

from urlparse import urlparse

import time

import datetime

import cx_Oracle

import os

import json

import sys

from urllib import unquote

from operator import itemgetter

reload(sys)

sys.setdefaultencoding('utf-8')

db = cx_Oracle.connect('xx', 'xx', 'xxx')

now = time.strftime('%Y-%m-%d', time.localtime(time.time()))

d = datetime.datetime.now()

def day_get(d):

oneday = datetime.timedelta(days=1)

day = d - oneday

return day.strftime("%Y-%m-%d")

def _format_time_log(dateString, fromexp, toexp):

dString = dateString[0:dateString.find(' ')]

dString = dString.replace(':', ' ', 1)

dt = datetime.datetime.strptime(dString, fromexp)

dt_rst = dt.strftime(toexp)

return dt_rst

logtime = day_get(d)

filename = "access_log." + logtime

print filename

def process_log(log):

requests = get_requests(log)

files = get_files(requests)

totals = file_occur(files)

return totals

# 日志格式如下

# xxx.xx.xx.xxx|

# xxx.xx.xx.xxx, xxx.xx.xx.xxx|

# 5|

# 2017-10-19 00:00:00|

# GET|

# 200|

# 764|

# /bbs/home/top?id=387017AB87BCC11FE050190AFD012B2B&client=web|

# Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.104 Safari/537.36 Core/1.53.3485.400 QQBrowser/9.6.12190.400

def get_requests(f):

log_line = f.read()

pat = (r''

'(.*)\|'

'(.*)\|'

'(\d+)\|' # status

'(.*)\|'

'(.*)\|'

'(\d+)\|' # status

'(\d+)\|' # status

'(.*?)\|' # reffer

'(.*)\|' # user agent

'(.*)' # userid token

)

requests = find(pat, log_line)

return requests

def find(pat, text):

match = re.findall(pat, text)

if match:

return match

return False

def findone(pat, text):

match = re.find(pat, text)

if match:

return match

return False

def get_urls():

cursor = db.cursor()

sql = "select * from t_accesslog_url where url_from in ('api') "

cursor.execute(sql);

result = cursor.fetchall()

cursor.close()

return result

def insert(table_name, insert_dict, p_db, p_cur):

param = '';

value = '';

if(isinstance(insert_dict, dict)):

for key in insert_dict.keys():

if insert_dict[key] is None :

continue

param = param + key + ","

value = value + "'" + insert_dict[key] + "',"

param = param[:-1]

value = value[:-1]

sql = "insert into %s (%s) values(%s)" % (table_name, param, value)

try:

p_cur.execute(sql)

p_db.commit()

p_cur.close()

except Exception as err:

print(sql)

print(err)

def importdata(req, requested, fromip, referrer, useragent, url, url_type, match_type, url_from, field_pattern, channel, useragent_pattern, blacklist):

if blacklist is not None:

blacklist = json.loads(blacklist)

for black in blacklist:

rs = find(black['pattern'], requested)

if rs != False:

# print requested

return

cur = db.cursor()

params = requested.split('/')

logtime_ft = req[2]

table_name = 'T_ACCESSLOG_APP';

dict = {}

fromip = ''

innerip = ''

if req[1] == '-':

fromip = req[0]

else:

fromip_list = req[1].split(',')

fromip = fromip_list[0]

if len(fromip_list) == 1:

innerip = req[0]

elif len(fromip_list) == 2:

innerip = fromip_list[1]

dict['IP'] = innerip

dict['LOGTIME'] = req[3]

dict['REQUESTED'] = req[7]

dict['STATUS'] = req[5]

dict['BANDWIDTH'] = req[6]

dict['USERAGENT'] = req[8]

dict['FILENAME'] = filename

dict['CREATE_TIME'] = now

dict['LOGTIME_FT'] = req[3]

dict['URL_TYPE'] = url_type

dict['FROMIP'] = fromip

dict['USERID'] = req[9].split(' ')[0]

if field_pattern != None:

fields = field_pattern

fields = json.loads(fields)

for field in fields:

rs = find(field['pattern'], requested)

if rs != False:

if field.has_key('alias') and field['alias'] is not None and field['alias'] != '':

dict[field['field_name'].encode("utf-8")] = field['alias'].encode("utf-8")

else:

match_val = rs[len(rs) - 1]

if len(match_val.split(field['split'])) > 1:

field_val = match_val.split(field['split'].encode("utf-8"))[1]

if field_val == 'app-share-1':

field_val = 'app-share'

if field.has_key('replace') and field['replace'] is not None and field['replace'] != '':

replaces = field['replace']

for k in replaces.keys():

field_val = field_val.replace(k, replaces[k])

dict[field['field_name'].encode("utf-8")] = field_val.encode("utf-8")

else:

field_val = match_val.split(field['split_null'].encode("utf-8"))[1]

if field.has_key('replace') and field['replace'] is not None and field['replace'] != '':

replaces = field['replace']

for k in replaces.keys():

field_val = field_val.replace(k, replaces[k])

dict[field['field_name'].encode("utf-8")] = field_val.encode("utf-8")

if useragent_pattern != None:

agents = useragent_pattern

agents = json.loads(agents)

for agent in agents:

rs = find(agent['pattern'], useragent)

if rs != False:

if agent.has_key('alias') and agent['alias'] is not None and agent['alias'] != '':

dict[agent['field_name'].encode("utf-8")] = agent['alias'].encode("utf-8")

insert(table_name, dict, db, cur);

def get_files(requests):

requested_files = []

i = 0

urls = get_urls()

for req in requests:

try:

requested = req[7];

fromip = req[0];

referrer = ''

useragent = req[8]

if("Baiduspider" in req[8]) or ("Baidu-YunGuanCe-SLABot" in req[8]) or ("Googlebot" in req[8]) or ("YisouSpider" in req[8]) or ("GrapeshotCrawler" in req[8]

):

# print req[8]

continue

for row in urls:

url = row[1]

url_type = row[3]

match_type = row[5]

url_from = row[6]

field_pattern = row[7]

channel = row[8]

blacklist = row[9]

useragent_pattern = row[10]

if match_type == '1':

if requested == url:

importdata(req, requested, fromip, referrer, useragent, url, url_type, match_type, url_from, field_pattern, channel, useragent_pattern, blacklist)

else:

res = find(url, requested)

if res != False:

importdata(req, requested, fromip, referrer, useragent, url, url_type, match_type, url_from, field_pattern, channel, useragent_pattern, blacklist)

except Exception as err:

print(err)

continue

# db.close()

return requested_files

def file_occur(files):

# file occurrences in requested files

d = {}

for file in files:

d[file] = d.get(file, 0) + 1

return d

if __name__ == '__main__':

print 'AAA'

log_file = open(filename, 'r')

urls_with_counts = process_log(log_file)

-

kettle的配置。先配置数据源连接到解析好的库表,然后制定规则抽取数据统计到新库新表中

-

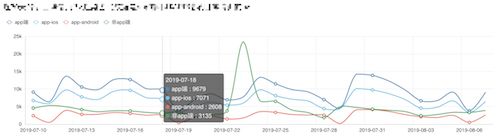

利用cboard工具,配置数据源,制定报表。

最终得到以下报表

功能总结

这套流程从规模上、所需插件等要比ELK整套环境多一些。

选择elk或是这个更多的是需要看业务场景。

ELK切割好日志后,也可以在不拆分索引文件的基础上进行多种高质量的查询,前提是索引规划的好(4核8G的阿里云服务器,单节点运行elasticsearch且2000w左右的数据量,运行良好)。如果对于日志数据需要进行较详细的区分、筛选、过滤,用本文这一种方式也许更佳。