Hive 3.1.2 distributed installation (on Hadoop 3.1.3 + Spark)

Node information

dockerapache-01

dockerapache-02 #master

dockerapache-03

1. First, install MySQL

https://downloads.mysql.com/archives/community/

Hive uses a MySQL database to store its metadata. The Derby database bundled with Hive cannot serve multiple users: embedded Derby allows only one session at a time.

An installation tutorial is available at:

https://blog.csdn.net/gulugulu_gulu/article/details/105727581
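The tutorial covers installing MySQL itself. The metastore also needs a database and an account that Hive can log in with. The sketch below assumes MySQL 5.7.6 or later (for CREATE USER IF NOT EXISTS) and the hive/hive credentials used in hive-site.xml in step 5. The function name hive_metastore_sql is hypothetical. It only prints the statements, so they can be reviewed before they are run.

```shell
# Hypothetical helper: print the SQL that creates the metastore database and
# a MySQL account that may connect from any host ('%').
# Arguments: user, password, database name (defaults to hive).
# Note: passwords containing a single quote are not escaped.
hive_metastore_sql() {
  local user="$1" password="$2" db="${3:-hive}"
  cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
CREATE USER IF NOT EXISTS '${user}'@'%' IDENTIFIED BY '${password}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%';
FLUSH PRIVILEGES;
EOF
}
```

For example, run hive_metastore_sql hive hive | mysql -uroot -p on the MySQL host (DockerApache-01). The '%' host pattern lets the metastore on dockerapache-02 connect. A narrower pattern also works, as long as it matches that node.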

2. Download the Hive package

https://mirror.bit.edu.cn/apache/hive/hive-3.1.2/

Perform the following steps on the master node (dockerapache-02).

3. Configure the Hive environment variables

vim /etc/profile.d/hive.sh

export HIVE_HOME=/usr/local/apache-hive-3.1.2-bin

export HIVE_CONF_DIR=${HIVE_HOME}/conf

export PATH=$PATH:$JAVA_HOME/bin:$HIVE_HOME/bin:$HIVE_HOME/sbin

Then run source /etc/profile to load them.
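After sourcing, a quick check confirms that the variables took effect and that the hive launcher resolves from PATH. This is a minimal sketch, and check_hive_path is a hypothetical name.

```shell
# Hypothetical helper: verify that HIVE_HOME points to an existing directory
# and that the hive launcher can be found on PATH.
check_hive_path() {
  if [ ! -d "${HIVE_HOME:-}" ]; then
    echo "HIVE_HOME is not set or does not exist"
    return 1
  fi
  if ! command -v hive >/dev/null 2>&1; then
    echo "hive is not on PATH"
    return 1
  fi
  echo "hive found at $(command -v hive)"
}
```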

4. Configure hive-env.sh (in $HIVE_HOME/conf)

cp hive-env.sh.template hive-env.sh

HADOOP_HOME=/usr/local/hadoop-3.1.3

JAVA_HOME=/usr/local/jdk1.8.0_161

HBASE_HOME=/usr/local/hbase-2.2.4

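SPARK_HOME=/SPARK/HOME

(/SPARK/HOME is a placeholder. Replace it with the Spark installation directory, which is /usr/local/spark-3.0.0-preview2-bin-hadoop3.2 in the Spark section below.)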
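SPARK_HOME above is a placeholder, so it helps to check that every path in hive-env.sh exists before going further. The sketch below sources the file in a subshell so the current shell is not changed. check_hive_env is a hypothetical name.

```shell
# Hypothetical helper: source a hive-env.sh (absolute path in $1) and report
# any of HADOOP_HOME, JAVA_HOME, HBASE_HOME, SPARK_HOME that is unset or does
# not point to an existing directory. Returns non-zero if a problem is found.
check_hive_env() {
  (
    # Source in a subshell so the variables do not leak into the caller.
    . "$1" || exit 2
    status=0
    for var in HADOOP_HOME JAVA_HOME HBASE_HOME SPARK_HOME; do
      # Indirect lookup of the variable named by $var (portable to sh).
      eval "dir=\${$var:-}"
      if [ -z "$dir" ]; then
        echo "$var is not set"
        status=1
      elif [ ! -d "$dir" ]; then
        echo "$var=$dir is not a directory"
        status=1
      fi
    done
    exit "$status"
  )
}
```

Run it as check_hive_env "$HIVE_HOME/conf/hive-env.sh". If the placeholder is left unchanged, the function reports SPARK_HOME=/SPARK/HOME.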

5. Create hive-site.xml in the conf directory

<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://DockerApache-01:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive</value>
  </property>
</configuration>

6. Copy the MySQL JDBC driver into hive/lib

cp mysql-connector-java-8.0.17.jar /usr/local/apache-hive-3.1.2-bin/lib/

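7. Initialize the metastore schema (only once), then start the metastore service from $HIVE_HOME/bin. The service runs in the foreground and keeps the terminal busy.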

schematool -initSchema -dbType mysql

./hive --service metastore
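schematool -initSchema should run only once, and the metastore command above occupies the terminal. The two steps can be wrapped as in the sketch below. It assumes $HIVE_HOME/bin is on PATH (step 3). It also relies on schematool -info exiting with a non-zero status while no schema exists yet. The function name and the default log path /tmp/hive-metastore.log are hypothetical.

```shell
# Hypothetical helper: initialize the metastore schema only if it is not
# there yet, then run the metastore in the background, logging to a file.
# Assumes schematool and hive are on PATH (see step 3).
init_and_start_metastore() {
  local log="${1:-/tmp/hive-metastore.log}"
  # schematool -info exits non-zero when it cannot read a schema version,
  # which is the case before the first -initSchema.
  if ! schematool -dbType mysql -info >/dev/null 2>&1; then
    schematool -initSchema -dbType mysql || return 1
  fi
  # nohup keeps the metastore running after the terminal is closed.
  nohup hive --service metastore >"$log" 2>&1 &
  echo "metastore started with pid $!, log: $log"
}
```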

8. Start and use Hive

hive

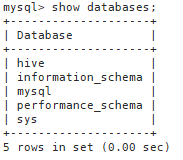

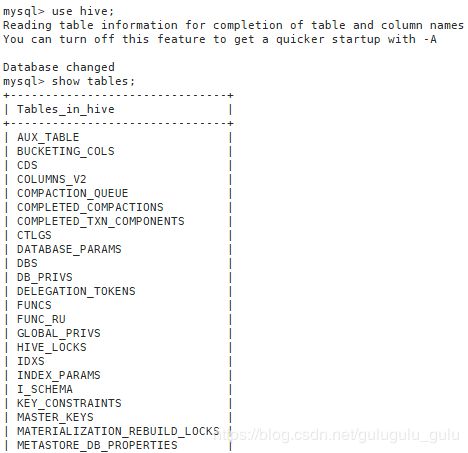

9. What happened in MySQL?

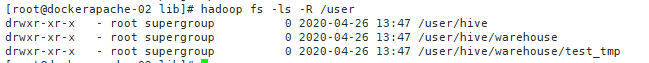

10. HDFS directories

There is no need to create any directories on HDFS manually.

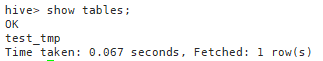

Create a table in Hive:

create table test_tmp(
  id int,
  name string,
  age int,
  tel string)
ROW FORMAT DELIMITED
FIELDS TERMINATED BY ','
STORED AS TEXTFILE;
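Loading a few rows shows the table and its HDFS directory fill up. The sketch below writes a small comma-separated file that matches the four columns and the FIELDS TERMINATED BY ',' clause. make_sample_csv, the file path and the generated values are hypothetical.

```shell
# Hypothetical helper: write N sample rows (default 3) for test_tmp
# (id int, name string, age int, tel string) to the file $1.
make_sample_csv() {
  local out="$1" rows="${2:-3}" i=1
  : > "$out"
  while [ "$i" -le "$rows" ]; do
    # id, name, age, tel
    printf '%d,user%d,%d,1380000%04d\n' "$i" "$i" $((20 + i)) "$i" >> "$out"
    i=$((i + 1))
  done
}
```

After make_sample_csv /tmp/test_tmp.csv 3, the rows can be loaded from the same node with hive -e "LOAD DATA LOCAL INPATH '/tmp/test_tmp.csv' INTO TABLE test_tmp; SELECT * FROM test_tmp;".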

List the tables:

hive> show tables;

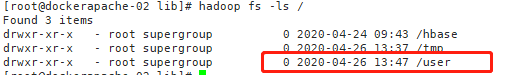

Checking HDFS again shows that a directory for the table has been created automatically (for the default database).

Next, set up the other nodes:

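1. Copy the Hive installation from the master to the other nodes. Run these commands from /usr/local, because `pwd` expands to the local working directory.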

scp -r /usr/local/apache-hive-3.1.2-bin/ DockerApache-01:`pwd`

scp -r /usr/local/apache-hive-3.1.2-bin/ DockerApache-03:`pwd`
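As an alternative sketch, the destination can be written out so the copy works from any directory, looping over the worker hosts. sync_hive_to and its DRY_RUN switch are hypothetical.

```shell
# Hypothetical helper: copy the Hive installation to /usr/local on each host
# given as an argument. With DRY_RUN=1 the scp commands are only printed.
sync_hive_to() {
  local src=/usr/local/apache-hive-3.1.2-bin host
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo scp -r "$src" "$host:/usr/local/"
    else
      scp -r "$src" "$host:/usr/local/" || return 1
    fi
  done
}
```

Usage: sync_hive_to DockerApache-01 DockerApache-03. The /etc/profile.d/hive.sh file from step 3 lives outside this directory, so it also has to be created on each node if hive should be on PATH there.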

2. On each worker node, add the metastore address to hive-site.xml (inside its configuration element):

<property>
  <name>hive.metastore.uris</name>
  <value>thrift://dockerapache-02:9083</value>
</property>

3. Run ./hive directly (from $HIVE_HOME/bin).

If something goes wrong, try restarting the metastore on the master node:

./hive --service metastore &

Configuring Spark

1. Copy these three jars from spark/jars to $HIVE_HOME/lib:

scala-library-2.12.10.jar

spark-core_2.12-3.0.0-preview2.jar

spark-network-common_2.12-3.0.0-preview2.jar

cp spark-network-common_2.12-3.0.0-preview2.jar /usr/local/apache-hive-3.1.2-bin/lib/

cp spark-core_2.12-3.0.0-preview2.jar scala-library-2.12.10.jar /usr/local/apache-hive-3.1.2-bin/lib/

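2. Create a spark directory on HDFS and upload all jars from /usr/local/spark-3.0.0-preview2-bin-hadoop3.2/jars to it. Run the put command from inside that jars directory, because * expands to the files in the current directory.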

hadoop fs -mkdir /user/spark

hadoop fs -put * /user/spark
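The sketch below passes explicit paths, so the upload does not depend on the current directory. upload_spark_jars and the HADOOP_CMD override are hypothetical. HADOOP_CMD exists only to allow a dry run with echo.

```shell
# Hypothetical helper: upload every jar in a Spark jars directory ($1) to an
# HDFS directory ($2). HADOOP_CMD defaults to the hadoop launcher.
upload_spark_jars() {
  local jars_dir="${1:-/usr/local/spark-3.0.0-preview2-bin-hadoop3.2/jars}"
  local hdfs_dir="${2:-/user/spark}"
  local hadoop_cmd="${HADOOP_CMD:-hadoop}"
  # -p: no error if the directory already exists
  $hadoop_cmd fs -mkdir -p "$hdfs_dir" || return 1
  # -f: overwrite jars that are already there (e.g. on a re-run)
  $hadoop_cmd fs -put -f "$jars_dir"/*.jar "$hdfs_dir"/
}
```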

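3. Add the following properties to hive-site.xml. Two notes:

- yarn.resourcemanager.scheduler.class is read by the YARN ResourceManager, so it only takes effect in yarn-site.xml.
- Hive runs queries on Spark only when hive.execution.engine is set to spark, either in hive-site.xml or per session with set hive.execution.engine=spark;.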

<property>
  <name>yarn.resourcemanager.scheduler.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
</property>
<property>
  <name>spark.yarn.jars</name>
  <value>hdfs://dockerapache-02:9000/user/spark/*</value>
</property>
<property>
  <name>spark.home</name>
  <value>/usr/local/spark-3.0.0-preview2-bin-hadoop3.2</value>
</property>
<property>
  <name>spark.master</name>
  <value>yarn</value>
</property>
<property>
  <name>spark.executor.memory</name>
  <value>8g</value>
</property>
<property>
  <name>spark.executor.cores</name>
  <value>2</value>
</property>
<property>
  <name>spark.executor.instances</name>
  <value>32</value>
</property>
<property>
  <name>spark.driver.memory</name>
  <value>8g</value>
</property>
<property>
  <name>spark.driver.cores</name>
  <value>2</value>
</property>
<property>
  <name>spark.serializer</name>
  <value>org.apache.spark.serializer.KryoSerializer</value>
</property>
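The executor settings above request a lot of memory from YARN. The sketch below gives a rough estimate under two assumptions:

- Spark's default executor memory overhead is max(384 MiB, 10% of spark.executor.memory).
- YARN rounds each container up to a multiple of 1024 MiB. This is a common default, but the actual step depends on the scheduler configuration.

The driver container is not included. executor_yarn_mb is a hypothetical name.

```shell
# Rough estimate of the total YARN memory (MiB) requested for executors.
# Arguments: executor memory in MiB, number of executors, container step
# in MiB (assumed 1024). Overhead: max(384, 10% of memory, rounded down).
executor_yarn_mb() {
  local mem_mb="$1" instances="$2" step_mb="${3:-1024}" overhead container
  overhead=$((mem_mb / 10))
  if [ "$overhead" -lt 384 ]; then
    overhead=384
  fi
  # Round executor memory + overhead up to the next multiple of step_mb.
  container=$(( (mem_mb + overhead + step_mb - 1) / step_mb * step_mb ))
  echo $((container * instances))
}
```

With spark.executor.memory=8g (8192 MiB) and 32 instances, executor_yarn_mb 8192 32 prints 294912 (288 GiB). Each container needs 8192 + 819 = 9011 MiB, which rounds up to 9216 MiB. If the NodeManagers offer less than that in total, not all 32 executors can run at once. In that case, lower spark.executor.instances or spark.executor.memory.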

Errors and solutions

Exception in thread "main" java.lang.NoSuchMethodError: com.google.common.base.Preconditions.checkArgument(ZLjava/lang/String;Ljava/lang/Object;)V

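This is likely caused by a mismatch between the guava.jar version bundled with Hive and the one shipped with Hadoop. To check:

Look up the guava.jar version in share/hadoop/common/lib under the Hadoop installation directory.

Look up the guava.jar version in lib under the Hive installation directory. If the two differ, delete the older one and copy the newer one in its place.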
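The check above can be scripted as in this sketch. It compares only the major version parsed from file names such as guava-27.0-jre.jar. The function names are hypothetical.

```shell
# Hypothetical helper: print the first guava-*.jar in directory $1.
first_guava_jar() {
  local f
  for f in "$1"/guava-*.jar; do
    if [ -e "$f" ]; then
      echo "$f"
      return 0
    fi
  done
  return 1
}

# Hypothetical helper: compare the Guava jars in Hadoop's common lib ($1) and
# Hive's lib ($2) by major version; delete the older jar and copy the newer
# one into the directory the older one came from.
sync_guava() {
  local hadoop_jar hive_jar hadoop_major hive_major old new dest
  hadoop_jar="$(first_guava_jar "$1")" || { echo "no guava jar in $1" >&2; return 1; }
  hive_jar="$(first_guava_jar "$2")" || { echo "no guava jar in $2" >&2; return 1; }
  # guava-27.0-jre.jar -> 27, guava-19.0.jar -> 19
  hadoop_major="$(basename "$hadoop_jar" | sed -E 's/^guava-([0-9]+).*/\1/')"
  hive_major="$(basename "$hive_jar" | sed -E 's/^guava-([0-9]+).*/\1/')"
  if [ "$hadoop_major" -gt "$hive_major" ]; then
    old="$hive_jar" new="$hadoop_jar" dest="$2"
  elif [ "$hive_major" -gt "$hadoop_major" ]; then
    old="$hadoop_jar" new="$hive_jar" dest="$1"
  else
    echo "same Guava major version ($hive_major), nothing to do"
    return 0
  fi
  rm -f "$old" && cp "$new" "$dest"/ || return 1
  echo "replaced $(basename "$old") with $(basename "$new")"
}
```

Usage: sync_guava /usr/local/hadoop-3.1.3/share/hadoop/common/lib /usr/local/apache-hive-3.1.2-bin/lib.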

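Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

This is only a warning. Connector/J 8.x renamed the driver class, so setting javax.jdo.option.ConnectionDriverName to com.mysql.cj.jdbc.Driver in hive-site.xml removes it.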

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

Underlying cause: java.sql.SQLNonTransientConnectionException : Public Key Retrieval is not allowed

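Check the JDBC URL in hive-site.xml for a garbled '&' (for example &; instead of &amp;). Inside XML, the separator between URL parameters must be written as &amp;, so correct any such garbage.

If the URL is correct and the error remains, add the Connector/J URL parameter allowPublicKeyRetrieval=true (written &amp;allowPublicKeyRetrieval=true in the XML). It lets the client request the server's public key.

Reference: http://www.mamicode.com/info-detail-2854343.html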
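A bare '&' can be found quickly: list the lines of hive-site.xml that contain an '&' which does not start an XML entity such as &amp;. find_bare_ampersands is a hypothetical helper built on sed and grep.

```shell
# Hypothetical helper: print "line:text" for each line of $1 that still
# contains '&' after valid entities (&amp; &lt; &gt; &quot; &apos; &#NN; &#xNN;)
# are removed. Exit status is 0 if such a line exists, 1 if the file is clean.
find_bare_ampersands() {
  sed -E 's/&(amp|lt|gt|quot|apos|#[0-9]+|#x[0-9a-fA-F]+);//g' "$1" | grep -n '&'
}
```

find_bare_ampersands "$HIVE_CONF_DIR/hive-site.xml" prints nothing and returns non-zero when every & is escaped.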

Underlying cause: java.sql.SQLException : Access denied for user 'hive'@'dockerapache-02' (using password: YES)

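This is a user permission problem. MySQL rejected user 'hive' connecting from host dockerapache-02 with the configured password. The account must exist for that host (or for '%') with a matching password.

Make sure the username and password in hive-site.xml match the ones set in step 6 of the installation tutorial (https://blog.csdn.net/gulugulu_gulu/article/details/105727581), for example: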

<property>
  <name>javax.jdo.option.ConnectionUserName</name>
  <value>root</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>qwe123</value>
</property>
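To compare the credentials in hive-site.xml with the MySQL account, read the configured values back from the file. The sketch below uses awk. It assumes each <name> is followed, within the same property, by a <value> on one line, as in the snippets above. hive_site_prop is a hypothetical name.

```shell
# Hypothetical helper: print the <value> of the property named $2 in the
# Hadoop-style XML file $1. Assumes <value>...</value> sits on one line at
# or after the matching <name> line, as in the snippets in this article.
hive_site_prop() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      # 7 = length of the opening tag, 15 = length of both tags together
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$1"
}
```

To repeat the login the metastore attempts, run the following on dockerapache-02 and enter the configured password:

mysql -h DockerApache-01 -u "$(hive_site_prop "$HIVE_CONF_DIR/hive-site.xml" javax.jdo.option.ConnectionUserName)" -p

An Access denied error there points at the MySQL account rather than at Hive.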